Time flies, for better or for worse. The last time I bored you with ramblings on this blog was more than 2 years ago already, prepping for Tracker 3.0. Since I’m sure you don’t need a general catch-up on these last 2 years, let’s stay on that same subject.

Nowadays, we are very close to GNOME 43 and an accompanying 3.4.0 release of the Tracker SPARQL library and data miners; that is 4 minor releases later! What happened since then? Right after that previous blog post, the 3.0 release did roll in, the uncertainty behind all the major structural changes vanished, and the transition could largely be called a success: the overhauled internals for complete SPARQL support stood their ground without large regressions; the promises of portals and data isolation were delivered and to this day remain unchallenged (except for sparse requests to let more pieces of metadata through); the increased genericity and versatility kept fostering further improvements.

Overall, there have been no major regrets, and we are now sitting comfortably in 3.x. And that was all good, since we could spend all that time keeping up with the improvements instead of fixing fallout. Let’s revisit what happened since.

Tracker (SPARQL library)

Testing

Ever since 3.0, test coverage has been growing fairly steadily. A very good thing about SPARQL and the Tracker API is that they are very “circular” altogether: every data format used in information exchange must be both parsed and produced by the SPARQL implementation, every external request it makes is also an external request it should be able to serve, and so on.

This makes it fairly easy to reach all corners in testing coverage, despite the involved complexity. It has also been a rule for some time that SPARQL language compliance fixes come with tests. The accumulated result over the years is a fairly large collection of tests for the internal machinery; there are over 330 subtests already for the SPARQL language alone.

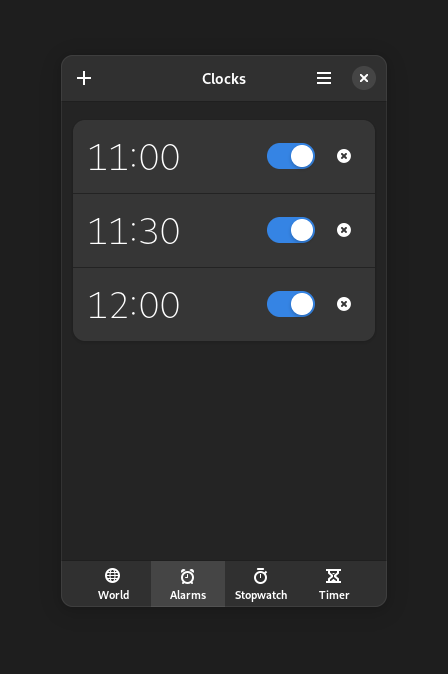

At the time of writing, Tracker stands at 76.4% coverage. We are getting very good at catching deviations from how the SPARQL library should behave, and decently good at catching how it should not misbehave. Simply following W3C standards and recommendations pays off here too, since it settles the direction and resolves most matters about what the right behavior is.

Developer Experience

3.0 marked the point where being able to create private Tracker databases with custom data models transitioned from an easter egg to a first-class feature. This also means that developers can now write an ontology (or data model, or schema, pick a name) that suits their data like a glove, instead of using the default Nepomuk one, which is well-trodden and literally written by academics, but will be overkill for the needs of individual applications.

And of course there is room for failure in writing those ontologies. Last year, GSoC student Abanoub Ghadban worked hard on “breaking it”, polishing the experience and trying to produce helpful warnings, so developer mistakes are easily visible and solvable. The CLI tools provided facilitate these checks, e.g. creating a temporary endpoint that loads the ontology being edited, and running queries against it.

The documentation front also got steady improvements: the API itself is 100% documented and there is now a fully fleshed out SPARQL tutorial. Also, drawing inspiration from SQLite, there are now miscellaneous docs on some implementation details like limits, a discussion of the security considerations of the implemented specs, and extensions and interpretations of the SPARQL spec. The examples have also been modernized, and are now additionally written in Python and JavaScript.

A great blunder in how Tracker tended to be used in applications was having SPARQL mixed in with code, or worse, built through string manipulation. The latter got better API-wise in the past with compiled statements, but the mix of code and database logic was still prevalent. Since 3.3, there is support for loading and creating compiled statements from query files located in GResources. This neatly addresses the separation of queries and code, while keeping the queries inseparable from the produced binary and preserving the benefits of compiled statements (compile once, run many times).

Performance

One of the benefits of the API provided by Tracker is that it gives a great amount of leeway for internal refactors without altering the surface. We are largely just backwards-compatibility constrained by the underlying database format. This has allowed further optimizations to happen under the hood since 3.0, for both database updates and queries. Databases now also take less space, especially in the presence of many blank nodes.

The greatest performance boost for data producers, though, can be obtained through the TrackerBatch API (since 3.1). Prior to it, a TrackerResource would normally be used to build RDF data, which was then used to produce a SPARQL update, and that SPARQL update was parsed to generate and apply the RDF changes. This new API can efficiently traverse a series of TrackerResources (which already describe RDF) and turn them into database modifications, skipping the SPARQL middle man altogether.

In the git tree, there is now a small utility to benchmark certain uses of the Tracker API. Let’s see how the output looks on a modern Intel i7 for an in-memory database:

Batch size: 5000, Individual test duration: 30 sec

Opening in-memory database…

| Test | Elements | Elems/sec | Min | Max | Avg |
| --- | --- | --- | --- | --- | --- |
| Resource batch update (sync) | 6169883.801 | 205662.793 | 4.292 usec | 5.615 usec | 4.862 usec |
| SPARQL batch update (sync) | 2430664.747 | 81022.158 | 11.889 usec | 14.255 usec | 12.342 usec |
| Resource modification (sync) | 4440988.603 | 148032.953 | 6.588 usec | 8.438 usec | 6.755 usec |
| Resource insert+delete (sync) | 3033137.552 | 101104.585 | 9.689 usec | 12.669 usec | 9.891 usec |
| Prepared statement query (sync) | 8566182.714 | 285539.424 | 3.000 usec | 745.000 usec | 3.502 usec |
| SPARQL query (sync) | 1329076.956 | 44302.565 | 21.000 usec | 189.000 usec | 22.572 usec |

After the usual disclaimer that this benchmark utility greatly depends on CPU and disk characteristics, and that your mileage may vary, there are a few things to highlight here:

- Using modern APIs always pays off. Inserting data directly from TrackerResource (Resource batch update) is 2.5x faster than inserting data through SPARQL updates (SPARQL batch update), and querying through prepared statements (Prepared statement query) is ~6.5x faster than repeating SPARQL queries (SPARQL query).

- Even though the tests are run synchronously on the main loop, queries can be greatly parallelized, so the actual throughput on a modern machine will be much higher in reality. Updates are single-threaded though.

- Used the right way, Tracker code is never in the hot paths; the credit there goes to SQLite and to the production of the data itself. You can expect results in the same ballpark as using SQLite directly, given similar volumes and layout of data.
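For those who like to double-check numbers, here is a small Python sketch that re-derives the ratios quoted in the list above from the table. It only reuses figures printed by the benchmark; the variable names are mine, and the assumption that Avg is roughly the inverse of Elems/sec is something I am reading off the table, not something the utility documents.

```python
# Figures copied from the benchmark output above; nothing here is measured anew.
DURATION_SEC = 30

# test name: (Elements, Elems/sec, Avg in usec)
results = {
    "Resource batch update": (6169883.801, 205662.793, 4.862),
    "SPARQL batch update": (2430664.747, 81022.158, 12.342),
    "Prepared statement query": (8566182.714, 285539.424, 3.502),
    "SPARQL query": (1329076.956, 44302.565, 22.572),
}

for name, (elements, per_sec, avg_usec) in results.items():
    # Elements divided by the test duration reproduces the Elems/sec column.
    assert abs(elements / DURATION_SEC - per_sec) < 0.01, name
    # Avg is roughly the inverse of Elems/sec (1 second = 1e6 usec).
    assert abs(per_sec * avg_usec / 1e6 - 1) < 0.001, name

batch_speedup = results["Resource batch update"][1] / results["SPARQL batch update"][1]
query_speedup = results["Prepared statement query"][1] / results["SPARQL query"][1]
print(f"TrackerResource vs SPARQL updates: {batch_speedup:.2f}x")
print(f"Prepared statements vs SPARQL queries: {query_speedup:.2f}x")
```

The two printed ratios line up with the 2.5x and ~6.5x mentioned in the first bullet point.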

But this snapshot does not fully highlight the improvements made. For a reference baseline, a backported version of this benchmark run on the same computer against Tracker 3.0.x gives:

Batch size: 5000, Individual test duration: 30 sec

Opening in-memory database…

| Test | Elements | Elems/sec | Min | Max | Avg |
| --- | --- | --- | --- | --- | --- |
| SPARQL batch update (sync) | 1387346.192 | 46244.873 | 17.035 usec | 24.290 usec | 21.624 usec |
| Resource modification (sync) | 259923.682 | 8664.123 | 49.863 usec | 122.236 usec | 115.418 usec |
| Resource insert+delete (sync) | 707638.539 | 23587.951 | 41.593 usec | 73.702 usec | 42.395 usec |
| Prepared statement query (sync) | 7729898.742 | 257663.291 | 3.000 usec | 527.000 usec | 3.881 usec |
| SPARQL query (sync) | 888896.319 | 29629.877 | 31.000 usec | 180.000 usec | 33.750 usec |

Looking past the lack of TrackerBatch there, it’s still easy to see there have been massive improvements pretty much across the board. As we already encourage, using the latest Tracker gives you the best Tracker.
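To put a number on "massive", a quick Python sketch can divide the Elems/sec columns of the two runs above, test by test. The dictionary names are just labels I made up for the two runs; the figures are copied verbatim from the benchmark output.

```python
# Elems/sec figures copied from the two benchmark runs above.
baseline_3_0 = {
    "SPARQL batch update": 46244.873,
    "Resource modification": 8664.123,
    "Resource insert+delete": 23587.951,
    "Prepared statement query": 257663.291,
    "SPARQL query": 29629.877,
}
current_tree = {
    "SPARQL batch update": 81022.158,
    "Resource modification": 148032.953,
    "Resource insert+delete": 101104.585,
    "Prepared statement query": 285539.424,
    "SPARQL query": 44302.565,
}

# Throughput ratio per test: values above 1 mean the current tree is faster.
speedups = {name: current_tree[name] / old for name, old in baseline_3_0.items()}

# Print the biggest winners first.
for name, factor in sorted(speedups.items(), key=lambda item: item[1], reverse=True):
    print(f"{name:26s} {factor:5.2f}x")
```

Every test comes out ahead of 3.0.x, with resource modification being the clear winner at roughly 17 times the throughput.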

Data serialization

SPARQL was very much thought out with the task of storing RDF data, querying into RDF data, and moving RDF data around. From the tiniest resource/value existence check, to full content dumps, everything is one query away.

What we were lacking was a consistent way to convert all that data into something that could easily be piped through, saved, processed, etc. To make this task easy, there is now an API to serialize and deserialize data between a Tracker database and the popular RDF file formats. This is performed efficiently, with flat RAM usage on both ends; it is even possible to pipe these APIs into each other, with the full RDF data never existing anywhere at once during the process.
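To illustrate what "never existing anywhere at once" means in practice, here is a minimal Python sketch of a streaming round trip. This is not the Tracker API: it uses only the standard library, the `serialize` and `deserialize` names are hypothetical, and it handles just plain string literals in N-Triples (a line-based subset of Turtle that I picked for simplicity).

```python
import re
from typing import Iterable, Iterator, Tuple

# (subject IRI, predicate IRI, plain string literal)
Triple = Tuple[str, str, str]

_ESCAPES = {"\\": "\\\\", '"': '\\"', "\n": "\\n", "\r": "\\r"}
_UNESCAPES = {"\\": "\\", '"': '"', "n": "\n", "r": "\r"}
_LINE = re.compile(r'<([^>]*)> <([^>]*)> "((?:[^"\\]|\\.)*)" \.')


def serialize(triples: Iterable[Triple]) -> Iterator[str]:
    """Lazily turn triples into N-Triples lines, one at a time."""
    for subject, predicate, literal in triples:
        escaped = "".join(_ESCAPES.get(ch, ch) for ch in literal)
        yield f'<{subject}> <{predicate}> "{escaped}" .\n'


def deserialize(lines: Iterable[str]) -> Iterator[Triple]:
    """Lazily parse N-Triples lines back into triples."""
    for line in lines:
        match = _LINE.fullmatch(line.strip())
        if match is None:
            raise ValueError(f"not a supported N-Triples line: {line!r}")
        subject, predicate, escaped = match.groups()
        literal = re.sub(r"\\(.)", lambda m: _UNESCAPES[m.group(1)], escaped)
        yield subject, predicate, literal


source = [
    ("http://example.org/song/1", "http://purl.org/dc/terms/title", 'The "Best" Song'),
    ("http://example.org/song/2", "http://purl.org/dc/terms/title", "Two\nlines"),
]

# Both ends are generators, so only one triple is in flight at any time.
received = list(deserialize(serialize(iter(source))))
print(received == source)
```

Since both functions are generators, chaining them keeps memory usage flat no matter how many triples flow through, which is the same property the paragraph above describes for the real thing.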

And since this serialization to RDF formats is a built-in feature of HTTP endpoints, it has allowed us to level up our support for these. A Tracker HTTP endpoint is now entirely compliant and indistinguishable from those of the larger players.

The most immediately useful application of this serialization support is in the CLI tools: the import and export commands now use this API and can deal with these formats. But what is this for? Is this driven by a level of completionism that borders on sickness? Well, yes, but there are of course plans around these features; more on that later.

Tracker Miners

Performance

You might think the SPARQL library improvements above would be the largest improvement the filesystem miner could get, and you would be wrong.

Part of the raison d’être of a filesystem indexer is to stay up-to-date with filesystem changes. In the GNOME world, this catching up is usually done through a GFileMonitor, which provides a GLib-friendly way to do the dirty job of setting up and tracking an inotify handle to follow changes on an individual directory for you. What is wrong with that? Nothing, unless you do that at a large scale like indexers do. Each of those GFileMonitors is backed by a pollable FD and a GSource wrapping it, and iterating a GMainContext that has thousands of GSources attached to it massively, thoroughly sucks.

Is this a case of Tracker abusing a perfectly fine GLib API? Or on the contrary is this a case of bad GLib API design? I will let you debate on that, as I am unclear myself.

The first solution to alleviate that (since 3.1) was delegating file monitoring to a separate thread, so the GMainContext that is expensive to iterate only affects file monitors, as opposed to everything that goes on. Later on, FANotify finally gained the missing features that made it suitable for indexers (not requiring CAP_SYS_ADMIN was one of them) and Tracker Miners got an implementation for it (since 3.3). Most notably here, with this kernel API it is only necessary to poll a single FD to receive events for all FANotify marks set on the filesystem.
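The difference in shape between the two approaches can be sketched in a few lines of Python. This is only an analogy under my own assumptions: plain pipes stand in for the kernel notification FDs, `selectors` stands in for the main loop, both function names are made up, and it assumes a Unix-like system.

```python
import os
import selectors
import struct


def watch_with_one_fd_per_directory(n_dirs: int, changed_dir: int):
    """One pollable FD per directory, akin to one file monitor each."""
    selector = selectors.DefaultSelector()
    pipes = []
    for dir_id in range(n_dirs):
        read_fd, write_fd = os.pipe()
        selector.register(read_fd, selectors.EVENT_READ, data=dir_id)
        pipes.append((read_fd, write_fd))
    os.write(pipes[changed_dir][1], b"x")  # simulate a change in one directory
    polled = len(selector.get_map())  # how many FDs the loop has to watch
    events = []
    for key, _mask in selector.select(timeout=1):
        os.read(key.fd, 1)
        events.append(key.data)
    selector.close()
    for read_fd, write_fd in pipes:
        os.close(read_fd)
        os.close(write_fd)
    return polled, events


def watch_with_single_fd(n_dirs: int, changed_dir: int):
    """A single pollable FD whose events say which directory changed."""
    assert 0 <= changed_dir < n_dirs
    selector = selectors.DefaultSelector()
    read_fd, write_fd = os.pipe()
    selector.register(read_fd, selectors.EVENT_READ)
    os.write(write_fd, struct.pack("I", changed_dir))  # the event carries the id
    polled = len(selector.get_map())
    events = []
    for key, _mask in selector.select(timeout=1):
        (dir_id,) = struct.unpack("I", os.read(key.fd, 4))
        events.append(dir_id)
    selector.close()
    os.close(read_fd)
    os.close(write_fd)
    return polled, events


print(watch_with_one_fd_per_directory(200, 7))
print(watch_with_single_fd(200, 7))
```

Both variants report the same change, but the first one makes the loop carry one source per directory while the second only ever carries one.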

In what sounds like a case of miscommunication between kernel developers working on independent new features that didn’t mix well, it’s unfortunately not possible nowadays to combine the bleeding edge in file monitors (FANotify) with the bleeding edge in filesystems (btrfs). For these (and other) cases, Tracker Miners will still fall back on plain GLib/inotify. Hopefully the situation will be resolved at some point.

In 3.1, the filesystem indexer also gained flow control mechanisms that allow its RAM usage to stay mostly flat, independently of the filesystem size and layout. At the peak of its activity, tracker-miner-fs-3 uses 30-40MB here (per gnome-system-monitor), and idles at 5MB. Needless to say, it is also many times faster than its past 3.0 self.
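I will not go into the actual mechanisms here, but the general idea of flow control can be sketched with a bounded queue in Python. This is a generic backpressure example under my own assumptions, not how tracker-miner-fs-3 is implemented; the `index` function and its `max_pending` parameter are hypothetical.

```python
import queue
import threading
from typing import Iterable, Tuple


def index(paths: Iterable[str], max_pending: int = 64) -> Tuple[int, int]:
    """Process paths through a bounded queue; return (count, peak queue size)."""
    pending: "queue.Queue" = queue.Queue(maxsize=max_pending)

    def crawler() -> None:
        for path in paths:
            pending.put(path)  # blocks while the queue is full
        pending.put(None)  # sentinel: nothing left to crawl

    thread = threading.Thread(target=crawler)
    thread.start()

    processed = 0
    peak = 0
    while True:
        peak = max(peak, pending.qsize())
        item = pending.get()
        if item is None:
            break
        processed += 1  # stand-in for the real per-file work
    thread.join()
    return processed, peak


# A generator as input, so the full list of paths never exists in memory.
count, peak = index((f"/home/user/file-{i}" for i in range(10000)), max_pending=64)
print(count, peak <= 64)
```

The producer simply stalls whenever the consumer falls behind, so the amount of queued work, and with it memory usage, is capped regardless of how large the input is.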

But all this was about tracker-miner-fs-3, the daemon in charge of monitoring filesystem changes and mirroring file/folder structure into the database. What about tracker-extract-3, the daemon in charge of nitty-gritty file metadata extraction? When this step needs to happen (say, for newly indexed or modified files), it is by all accounts expensive, and by the sheer magic of everything else shrinking, it has only gotten comparatively worse. There is a reason we avoid this happening frequently at all costs.

But what is slow there? Roughly speaking, it should be just a loop going through files, getting the metadata and inserting it, and that should be fucking fast as per the benchmarks above, right? Right, the problem is in the “getting the metadata” step. This will wildly fluctuate depending on the files scattered in the filesystem, their mimetypes, and the libraries used to extract their metadata. The plain text extractor or the in-tree MP3 extractor are capable of opening, extracting metadata from, and closing multiple thousands of files per second. All the external libraries used for metadata extraction (yes, all of them) are slower, ranging from several times slower up to 4 orders of magnitude slower, also depending on the input files (I curated some infernal PDFs). The worst offenders are Poppler, GStreamer and libtiff.

As is evident here (you don’t need to take my word for it: add TRACKER_DEBUG=statistics to /etc/environment and reset/restart the miner), most libraries dealing with files and formats optimize for library resources being long-lived across an application’s lifetime, while optimizing the creation and disposal of these library resources is often overlooked. The metadata extraction daemon faces that hard fact file after file, so its slowness is just a reflection of the slowness of these libraries in setting themselves up. If, after all of this, someone thinks the filesystem indexer is slow, that is where the money is.

Extending and maintaining metadata

Although the focus has been mainly on making things work reliably, rather than going crazy with extending the metadata stored (yet), a point worth noting here is last year’s GSoC work from student Nishit Patel, who worked on indexing creation time (in the filesystems that support/enable it) and making it searchable all across the stack.

We also got support for a number of game file formats (mainly, retro ones), which GNOME Games (now Highscore) readily made use of. LOL, jk.

Handling and following failures

Whenever a file is broken or corrupted, or a 3rd party library crashes or produces a syscall that is caught by seccomp, the tracker-extract-3 daemon would quit (with varying degrees of gracefulness) and be taught on the next restart to avoid the file that triggered this situation. This is not precisely new behavior; what is new, though, is that these failures are now recorded and can be easily inspected over the CLI with tracker3 status. Most bugs we receive about broken extraction are reactive (e.g. “why does Music not show this file?”); this allows for a more proactive approach to fixing metadata extraction bugs, if users happen to look there and cooperate.

There is also a slight possibility that extraction bugs are due to Tracker itself, but these are largely a thing of the past.

Coming up next…

I very much cheered when I learnt of the “Local first” initiative. In fact, I so much anticipated it that I literally anticipated it. Development of the serialization APIs started sometime last year, with a plan to provide facilities to transparently and neatly synchronize RDF data across instances on multiple machines owned by the same user.

Who wants that? Certainly not the filesystem indexer. However, there’s indeed a desire to avoid reliance on third party services for user sensitive data like their own health information, chat logs, or bookmarked sites. With some truckloads of optimism, I would hope that this becomes a cornerstone of that goal, for applications under the GNOME umbrella that need to deal with a non-trivial amount of data.

How would that work? What do we need to get there? We need a query language that supports it (check, duh), a data model that can handle the different patterns that might emerge in synchronizing data (check), a way to make these machines talk to each other (check), and a way to diff missing data (check). All the pieces are really set, so what is missing is putting them together, of course drawing inspiration from Christian Hergert’s Bonsai to make machines discover each other.

And of course there is still very much a desire to keep the heart of content applications compelling and relevant. There are still opportunities to further extend and link the metadata stored by the filesystem indexer, perhaps with the help of the actual semantic web that lives out there. We already have a number of universal identifiers available (musicbrainz tags, IMDB IDs, game rom IDs) to interrelate and cross-reference data.

Now that the codebase features are settled and working well, we can start thinking about fancy new features. Stay tuned for the next installment of this series in 2024, when I talk about Tracker 3.8.0, or perhaps Tracker 3.5.20. If you made it this far, you have my appreciation. Until next time!
