$ time cmakebuild.sh --target deqp-vk
[6/6] Linking CXX executable external/vulkancts/modules/vulkan/deqp-vk

real 0m25.137s
user 0m22.280s
sys 0m3.440s

After getting thoroughly nerd-sniped a few weeks back, we now have FreeBSD support through qemu in the freedesktop.org ci-templates. This is possible through the qemu image generation we have had for quite a while now. So let's see how we can easily add a FreeBSD VM (or other distributions) to our GitLab CI pipeline:

.freebsd:
  variables:
    FDO_DISTRIBUTION_VERSION: '13.0'
    FDO_DISTRIBUTION_TAG: 'freebsd.0'  # some value for humans to read

build-image:
  extends:
    - .freebsd
    - .fdo.qemu-build@freebsd
  variables:
    FDO_DISTRIBUTION_PACKAGES: "curl wget"

Now, so far this may all seem quite familiar. And indeed, this is almost exactly the same process as for normal containers (see Part 1); the only difference is the .fdo.qemu-build base template. Using this template means we build an image babushka: our desired BSD image is actually a QEMU RAW image sitting inside another generic container image. That latter image only exists to start the QEMU image and set up the environment if need be, so you don't need to care what distribution it runs (Fedora for now).

Because of the nesting, we need to handle this accordingly in the script: section of the actual test job: we need to start the image and make sure our jobs are actually built within. The templates set up an ssh alias "vm" for this and the vmctl script helps to do things on the VM:

test-build:
  extends:
    - .freebsd
    - .fdo.distribution-image@freebsd
  script:
    # start our QEMU image
    - /app/vmctl start

    # copy our current working directory to the VM
    # (this is a yaml multiline command to work around the colon)
    - |
      scp -r $PWD vm:

    # Run the build commands on the VM and if they succeed, create a .success file
    - /app/vmctl exec "cd $CI_PROJECT_NAME; meson builddir; ninja -C builddir" && touch .success || true

    # Copy results back to our run container so we can include them in artifacts:
    - |
      scp -r vm:$CI_PROJECT_NAME/builddir .

    # kill the VM
    - /app/vmctl stop

    # Now that we have cleaned up: if our build job before
    # failed, exit with an error
    - test -e .success || exit 1

Now, there's a bit to unpack but with the comments above it should be fairly obvious what is happening. We start the VM, copy our working directory over and then run a command on the VM before cleaning up. The reason we use touch .success is simple: it allows us to copy things out and clean up before actually failing the job.

Obviously, if you want to build any other distribution you just swap the freebsd out for fedora or whatever - the process is the same. libinput has been using fedora qemu images for ages now.

Thanks to the work done by José Expósito, libinput 1.19 will ship with a new type of gesture: Hold Gestures. So far libinput supported swipe (moving multiple fingers in the same direction) and pinch (moving fingers towards each other or away from each other). These gestures are well-known, commonly used, and familiar to most users. For example, GNOME 40 recently increased its use of touchpad gestures to switch between workspaces, etc. Swipe and pinch gestures require movement, so it was not possible (for callers) to detect fingers on the touchpad that don't move.

This gap is now filled by Hold gestures. These are triggered when a user puts fingers down on the touchpad, without moving the fingers. This allows for some new interactions and we had two specific ones in mind: hold-to-click, a common interaction on older touchscreen interfaces where holding a finger in place eventually triggers the context menu. On a touchpad, a three-finger hold could zoom in, or do dictionary lookups, or kill a kitten. Whatever matches your user interface most, I guess.

The second interaction was the ability to stop kinetic scrolling. libinput does not actually provide kinetic scrolling, it merely provides the information needed in the client to do it there: specifically, it tells the caller when a finger was lifted off a touchpad at the end of a scroll movement. It's up to the caller (usually: the toolkit) to implement the kinetic scrolling effects. One missing piece was that while libinput provided information about lifting the fingers, it didn't provide information about putting fingers down again later - a common way to stop scrolling on other systems.

Hold gestures are intended to address this: a hold gesture triggered after a flick with two fingers can now be used by callers (read: toolkits) to stop scrolling.

Now, one important thing about hold gestures is that they will generate a lot of false positives, so be careful how you implement them. The vast majority of interactions with the touchpad will trigger some movement - once that movement hits a certain threshold the hold gesture will be cancelled and libinput sends out the movement events. Those events may be tiny (depending on touchpad sensitivity) so getting the balance right for the aforementioned hold-to-click gesture is up to the caller.
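To make the caller side a bit more concrete, here is a minimal Python sketch of how a toolkit might react to hold gestures. It is not libinput's API: the class, the callback names and the 0.5 second threshold are all made up for illustration, and a real hold-to-click would fire from a timer while the fingers are still down rather than when the hold ends.

```python
class ScrollView:
    """Toy toolkit widget reacting to hold gestures (all names are hypothetical)."""

    HOLD_TO_CLICK_SECONDS = 0.5  # assumed threshold, tune it for your UI

    def __init__(self):
        self.kinetic_scrolling = False
        self.hold_started_at = None
        self.context_menus_opened = 0

    def on_scroll_fingers_lifted(self):
        # libinput only reports the lift; running the kinetic effect is our job.
        self.kinetic_scrolling = True

    def on_hold_begin(self, time):
        # Fingers are back on the touchpad: stop any kinetic scrolling.
        self.kinetic_scrolling = False
        self.hold_started_at = time

    def on_hold_end(self, time, cancelled):
        started, self.hold_started_at = self.hold_started_at, None
        if cancelled or started is None:
            return  # movement took over, so this hold was a false positive
        if time - started >= self.HOLD_TO_CLICK_SECONDS:
            self.context_menus_opened += 1


view = ScrollView()
view.on_scroll_fingers_lifted()
view.on_hold_begin(1.0)                 # stops the kinetic scroll
view.on_hold_end(1.1, cancelled=True)   # finger moved: nothing else happens
view.on_hold_begin(2.0)
view.on_hold_end(2.8, cancelled=False)  # long, uncancelled hold: context menu
```

The important bit is that a cancelled hold must never trigger the click, otherwise every small pointer motion starts with a spurious action.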

As usual, the required bits to get hold gestures into the wayland protocol are either in the works, mid-flight or merge-ready so expect this to hit the various repositories over the medium-term future.

I have not talked about raytracing in RADV for a while, but after some procrastination while being focused on other things I recently got back to it and achieved my next milestone.

In particular I have been hacking away at CTS and got to a point where CTS on dEQP-VK.ray_tracing.* runs to completion without crashes or hangs. Furthermore, I got the pass rate to 90% of non-skipped tests. So we’re finally getting somewhere close to usable.

As a further demonstration that it is usable, my fixes for CTS also fixed the corruption issues in Quake 2 RTX (Github version), delivering this image:

Of course not everything is perfect yet. Besides the CTS pass rate not being 100%, it has roughly half the Windows performance at 4k right now, and we still have some feature gaps to close to make it really usable for most games.

TL;DR Because I haven’t optimized it yet and have taken every shortcut imaginable.

Raytracing with Vulkan works with two steps:

With RDNA2 AMD started accelerating this by adding an instruction that allowed doing intersection tests between a ray and a single BVH node, where the BVH node can either be

Of course this isn’t quite enough to deal with all geometry types in Vulkan so we also add two more:

With a search tree like a BVH it is very possible to make trees that are pretty useless. As an example consider a binary search tree that is very unbalanced. We can have similarly bad things with a BVH, including making it unbalanced or having overlapping bounding volumes.

And my implementation is the simplest thing possible: the input geometry becomes the leaves in exactly the same order and then internal nodes are created just as you’d draw them. That is probably decently fast in building the BVH but surely results in a terrible BVH to actually use.

After we built a BVH we can start tracing some rays. In rough pseudocode the current implementation is

stack = empty
insert root node into stack
while stack is not empty:
    node = pop a node from the stack
    if we left the bottom level BVH:
        reset ray origin/direction to initial origin/direction
    result = amd_intersect(ray, node)
    switch node type:
        triangle:
            if result is a hit:
                load some node data
                process hit
        box node:
            for each box hit:
                push child node on stack
        custom node 1 (instance):
            load node data
            push the root node of the bottom BVH on the stack
            apply transformation matrix to ray origin/direction
        custom node 2 (AABB geometry):
            load node data
            process hit
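As a CPU-side illustration only (this is not the RADV shader code), the naive build and the stack-based traversal can be sketched in Python. The sketch assumes a single BVH level, axis-aligned boxes as the only leaf type, and a plain slab test standing in for the hardware intersection instruction; Node, build_naive, ray_hits_box and traverse are hypothetical names.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Node:
    lo: tuple                                      # AABB minimum corner
    hi: tuple                                      # AABB maximum corner
    children: list = field(default_factory=list)   # empty for leaves
    prim: int = -1                                 # input index for leaves


def build_naive(boxes, width=4):
    """Leaves keep the input order; parents group `width` neighbours."""
    level = [Node(lo, hi, prim=i) for i, (lo, hi) in enumerate(boxes)]
    while len(level) > 1:
        parents = []
        for i in range(0, len(level), width):
            group = level[i:i + width]
            lo = tuple(min(n.lo[a] for n in group) for a in range(3))
            hi = tuple(max(n.hi[a] for n in group) for a in range(3))
            parents.append(Node(lo, hi, children=group))
        level = parents
    return level[0]


def ray_hits_box(origin, direction, lo, hi):
    """Slab test; a stand-in for the intersection instruction."""
    t_near, t_far = 0.0, math.inf
    for a in range(3):
        if direction[a] == 0.0:
            if not lo[a] <= origin[a] <= hi[a]:
                return False
            continue
        t0 = (lo[a] - origin[a]) / direction[a]
        t1 = (hi[a] - origin[a]) / direction[a]
        if t0 > t1:
            t0, t1 = t1, t0
        t_near, t_far = max(t_near, t0), min(t_far, t1)
    return t_near <= t_far


def traverse(root, origin, direction):
    """Returns the hit leaf indices and the largest stack size seen."""
    hits, stack, max_stack = [], [root], 1
    while stack:
        node = stack.pop()
        if not ray_hits_box(origin, direction, node.lo, node.hi):
            continue
        if node.children:
            stack.extend(node.children)            # push every child
            max_stack = max(max_stack, len(stack))
        else:
            hits.append(node.prim)                 # "process hit"
    return sorted(hits), max_stack


# 16 unit cubes in a row along the x axis
boxes = [((2.0 * i, 0.0, 0.0), (2.0 * i + 1.0, 1.0, 1.0)) for i in range(16)]
root = build_naive(boxes)
print(traverse(root, (-1.0, 0.5, 0.5), (1.0, 0.0, 0.0)))
print(traverse(root, (4.5, -1.0, 0.5), (0.0, 1.0, 0.0)))
```

With input that happens to be spatially sorted, as here, the naive build is not even that bad. Shuffle the boxes and every parent box covers nearly everything, which is exactly the "terrible BVH" case.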

We already knew there were inherently going to be some difficulties:

Furthermore this also clearly shows some difficulties with how we approached the intersection instruction. Some advantages of the intersection instruction are that it avoids divergence in computing collisions if we have different node types in a subgroup and that it is cheaper when there are only a few lanes active. (A single CU can process one ray/node intersection per cycle, modulo memory latency, while it can process an ALU instruction on 64 lanes per cycle.)

However even if it avoids the divergence in the collision computation we still introduce a ton of divergence in the processing of the results of the intersection. So we are still doing pretty badly here.

Another thing to be noted is our traversal stack size. According to the Vulkan specification a bottom level acceleration structure should support 2^24 - 1 triangles and a top level acceleration structure should support 2^24 - 1 bottom level structures. Combined with a tree with 4 children in each internal node we can end up with a tree depth of about 24 levels.

In each internal node iteration of our loop we pop one element and push up to 4 elements, so at the deepest level of traversal we could end up with a 72 entry stack. Assuming these are 32-bit node identifiers, that ends up with 288 bytes of stack per lane, or ~18 KiB per 64 lane workgroup (the minimum which could possibly keep a CU busy with an ALU only workload). Given that we have 64 KiB of LDS (yes I am using LDS since there is no divergent dynamic register addressing) per CU, that leaves only 3 workgroups per CU, leaving very few options for parallelism between different hardware execution units (e.g. the ALU and the texture unit that executes the ray intersections) or for latency hiding of memory operations.
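The arithmetic above is easy to re-check in a few lines of Python. The variable names are mine; the inputs (4-wide nodes, 32-bit entries, 64 lanes, 64 KiB of LDS) are the ones stated in the text.

```python
def tree_levels(leaves, width):
    """Smallest number of levels for which width**levels >= leaves."""
    levels, capacity = 0, 1
    while capacity < leaves:
        capacity *= width
        levels += 1
    return levels


MAX_ENTRIES = 2**24 - 1   # triangles per bottom level, instances per top level
WIDTH = 4                 # children per internal node

depth = 2 * tree_levels(MAX_ENTRIES, WIDTH)    # top level + bottom level
stack_entries = depth * (WIDTH - 1)            # pop 1, push 4: net +3 per level
bytes_per_lane = stack_entries * 4             # 32-bit node identifiers
bytes_per_workgroup = bytes_per_lane * 64      # 64 lanes
workgroups_per_cu = (64 * 1024) // bytes_per_workgroup

print(depth, stack_entries, bytes_per_lane, bytes_per_workgroup, workgroups_per_cu)
# prints: 24 72 288 18432 3
```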

So ideally we get this stack size down significantly.

The first step is to get CTS passing and to get an initial merge request into upstream Mesa. As a follow-on to that I’d like to get a minimal prototype going for some DXR 1.0 games with vkd3d-proton just to make sure we have the right feature coverage.

After that we’ll have to do all the traversal optimizations. I’ll probably implement a bunch of instrumentation so I actually have a clue on what to optimize. This is where having some runnable games really helps get the right idea about performance bottlenecks.

Finally, with some luck better shaders to build a BVH will materialize as well.

If you want to write an X application, you need to use some library that speaks the X11 protocol. For a long time this meant libX11, often called xlib, which - like most things about X - is a fantastic bit of engineering that is very much a product of its time with some confusing baroque bits. Overall it does a very nice job of hiding the icky details of the protocol from the application developer.

One of the details it hides has to do with how resource IDs are allocated in X. A resource ID (an XID, in the jargon) is a 29-bit integer that names a resource - window, colormap, what have you. Those 29 bits are split up netmask/hostmask style, where the top 8 or so uniquely identify the client, and the rest identify the resource belonging to that client. When you create a window in X, what you really tell the server is "I want a window that's initially this size, this background color (etc.) and from now on when I say (my client id + 17) I mean that window." This is great for performance because it means resource allocation is assumed to succeed and you don't have to wait for a reply from the server.
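As a rough sketch of that split, assuming exactly 8 client bits (the text only says "8 or so", and the real split is whatever the server hands out at connection setup), the client id and the per-client index combine like this. The helper names are hypothetical.

```python
XID_BITS = 29
CLIENT_BITS = 8                           # assumption: "8 or so" taken literally
RESOURCE_BITS = XID_BITS - CLIENT_BITS    # 21 bits left for the client's resources
RESOURCE_MASK = (1 << RESOURCE_BITS) - 1


def make_xid(client, index):
    """Combine a client id and a per-client resource index into an XID."""
    if not 0 <= client < (1 << CLIENT_BITS):
        raise ValueError("client id out of range")
    if not 0 <= index <= RESOURCE_MASK:
        raise ValueError("resource index out of range")
    return (client << RESOURCE_BITS) | index


def split_xid(xid):
    """Inverse of make_xid: returns (client, index)."""
    return xid >> RESOURCE_BITS, xid & RESOURCE_MASK


xid = make_xid(3, 17)    # "my client id + 17"
print(hex(xid), split_xid(xid))
```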

Key to all this is that in xlib the XID is the return value from the call that issues the resource creation request. Internally the request gets queued into the protocol's write buffer, but the client can march ahead and issue the next few commands as if creation had succeeded - because it probably did, and if it didn't you're probably going to crash anyway.

So to allocate XIDs the client just marches forward through its XID range. What happens when you hit the end of the range? Before X11R4, you'd crash, because xlib doesn't keep track of which XIDs it's allocated, just the lowest one it hasn't allocated yet. Starting in R4 the server added an extension called XC-MISC that lets the client ask the server for a list of unused XIDs, so when xlib hits the end of the range it can request a new range from the server.

But. UI programming tends to want threads, and xlib is perhaps not the most thread-friendly. So XCB was invented, which sacrifices some of xlib's ease of use for a more direct binding to the protocol and (in theory) an explicitly thread-safe design. We then modified xlib and XCB to coexist in the same process, using the same I/O buffers, reply and event management, etc.

This literal reflection of the protocol into the API has consequences. In XCB, unlike xlib, XID generation is an explicit step. The client first calls into XCB to allocate the XID, and then passes that XID to the creation request in order to give the resource a name.

Which... sorta ruins that whole thread-safety thing.

Let's say you call xcb_generate_id in thread A and the XID it returns is the last one in your range. Then thread B schedules in and tries to allocate another XID. You'll ask the server for a new range, but since thread A hasn't called its resource creation request yet, from the server's perspective that "allocated" XID looks like it's still free! So now, whichever thread issues their resource creation request second will get BadIDChoice thrown at them if the other thread's resource hasn't been destroyed in the interim.

A library that was supposed to be about thread safety baked a thread safety hazard into the API. Good work, team.

How do you fix this without changing the API? Maybe you could keep a bitmap on the client side that tracks XID allocation, that's only like 256KB worst case, you can grow it dynamically and most clients don't create more than a few dozen resources anyway. Make xcb_generate_id consult that bitmap for the first unallocated ID, and mark it used when it returns. Then track every resource destruction request and zero it back out of the bitmap. You'd only need XC-MISC if some other client destroyed one of your resources and you were completely out of XIDs otherwise.
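Here is what that bitmap could look like, as a Python sketch rather than actual XCB code. It assumes 21 resource bits per client (which is where the 256KB worst case comes from: 2^21 bits is 262144 bytes); XidAllocator and its methods are hypothetical names.

```python
import threading


class XidAllocator:
    """Client-side XID bitmap; grows one byte (8 ids) at a time."""

    def __init__(self, base, max_ids=1 << 21):
        self.base = base              # client part of the XID, low bits zero
        self.max_ids = max_ids
        self.bitmap = bytearray()
        self.lock = threading.Lock()

    def _first_free(self):
        for byte_index, byte in enumerate(self.bitmap):
            if byte != 0xFF:
                bit = next(b for b in range(8) if not byte & (1 << b))
                return byte_index * 8 + bit
        return len(self.bitmap) * 8   # everything so far is in use

    def generate_id(self):
        # Find and mark under one lock, so two threads never get the same id.
        with self.lock:
            index = self._first_free()
            if index >= self.max_ids:
                raise RuntimeError("out of XIDs, time to ask XC-MISC")
            if index // 8 >= len(self.bitmap):
                self.bitmap.append(0)
            self.bitmap[index // 8] |= 1 << (index % 8)
            return self.base | index

    def release(self, xid):
        # Would be called for every resource destruction request.
        with self.lock:
            index = xid - self.base
            self.bitmap[index // 8] &= ~(1 << (index % 8)) & 0xFF


alloc = XidAllocator(base=3 << 21)
a, b, c = alloc.generate_id(), alloc.generate_id(), alloc.generate_id()
alloc.release(b)
print(alloc.generate_id() == b)   # the freed id is handed out again
```

The allocator itself is the easy part. The hard part, as described next, is getting every destruction request to actually call release().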

And you can implement this, except. One, XCB has zero idea what a resource destruction request is, that's simply not in the protocol description. Not a big deal, you can fix that, there's only like forty destructors you'd need to annotate. But then two, that would only catch resource destruction calls that flow through XCB's protocol binding API, which xlib does not, xlib instead pushes raw data through xcb_writev. So now you need to modify every client library (libXext, libGL, ...) to inform XCB about resource destruction.

Which is doable. Tedious. But doable.

I think.

I feel a little weird writing about this because: surely I can't be the first person to notice this.

Debugging programs using printf statements is not a technique that everybody appreciates. However, it can be quite useful and sometimes necessary depending on the situation. My past work on air traffic control software involved using several forms of printf debugging many times. The distributed and time-sensitive nature of the system being studied made it inconvenient or simply impossible to reproduce some issues and situations if one of the processes was stalled while it was being debugged.

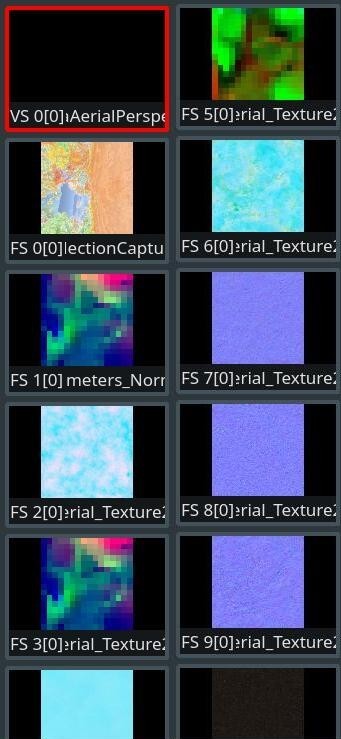

In the context of Vulkan and graphics in general, printf debugging can be useful to see what shader programs are doing, but some people may not be aware it’s possible to “print” values from shaders. In Vulkan, shader programs are normally created in a high level language like GLSL or HLSL and then compiled to SPIR-V, which is then passed down to the driver and compiled to the GPU’s native instruction set. That final binary, many times outside the control of user applications, runs in a quite closed and highly parallel environment without many options to observe what’s happening and without text input and output facilities. Fortunately, tools like glslang can generate some debug information when compiling shaders to SPIR-V and other tools like Nsight can use that information to let you debug shaders being run.

Still, being able to print the values of different expressions inside a shader can be an easy way to debug issues. With the arrival of Ray Tracing, this is even more useful than before. In ray tracing pipelines, the shaders being executed and resources being used are chosen based on the scene geometry, the origin and the direction of the ray being traced. printf debugging can let you see where you are and what you’re using. So how do you print values from shaders?

Vulkan’s debug printf is implemented as part of the Validation Layers and the general procedure is well documented. If you were to implement this kind of mechanism yourself, you’d likely use a storage buffer to save the different values you want to print while shader invocations are running and, later, you’d go over the contents of that buffer and print the associated message with each value or values. And that is, essentially, what debug printf does but in a very convenient and automated way so that you don’t have to deal with the gory details and corner cases.
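To illustrate the do-it-yourself variant, here is a toy Python model of the idea: "shader" invocations append records to a buffer of 32-bit words, and the host later walks the buffer and formats each record. The record layout, the format table and the function names are all invented for this sketch and are not how the validation layers actually lay out their buffer.

```python
import re
import struct

# Format strings live on the host; the "shader" only stores an index.
FORMATS = ["ray %u hit instance %u", "t = %f"]


def debug_printf(buffer, fmt_id, *args):
    """Shader side: append [word count, format id, args...] as 32-bit words."""
    words = [fmt_id]
    for arg in args:
        if isinstance(arg, float):
            # Store the raw IEEE 754 bits of a 32-bit float.
            words.append(struct.unpack("<I", struct.pack("<f", arg))[0])
        else:
            words.append(arg & 0xFFFFFFFF)
    buffer.append(len(words))
    buffer.extend(words)


def decode(buffer):
    """Host side: walk the records and build the messages."""
    messages, pos = [], 0
    while pos < len(buffer):
        size = buffer[pos]
        fmt = FORMATS[buffer[pos + 1]]
        raw = buffer[pos + 2:pos + 1 + size]
        values = []
        for spec, word in zip(re.findall(r"%([uf])", fmt), raw):
            if spec == "f":
                values.append(struct.unpack("<f", struct.pack("<I", word))[0])
            else:
                values.append(word)
        messages.append(fmt % tuple(values))
        pos += 1 + size
    return messages


storage_buffer = []
debug_printf(storage_buffer, 0, 7, 3)
debug_printf(storage_buffer, 1, 0.5)
print(decode(storage_buffer))
```

Even this toy version hints at the gory details: real invocations run in parallel, so appending needs atomics, and the buffer can overflow.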

In a GLSL shader, simply:

1. Enable the GL_EXT_debug_printf extension.
2. Sprinkle your code with debugPrintfEXT() calls.
3. Use the Vulkan Configurator that’s part of the SDK or manually edit vk_layer_settings.txt for your app enabling VK_VALIDATION_FEATURE_ENABLE_DEBUG_PRINTF_EXT.
4. Normally, disable other validation features so as not to get too much output.
5. Take a look at the debug report or debug utils info messages containing printf results, or set printf_to_stdout to true so printf messages are sent to stdout directly.

You can find an example shader in the validation layers test code. The debug printf feature has helped me a lot in the past, so I wanted to make sure it’s widely known and used.

Due to the observer effect, you may end up in situations where your code works correctly when enabling debug printf but incorrectly without it. This may be due to multiple reasons but one of the main ones I’ve encountered is improper synchronization. When debug printf is used, the layers use additional synchronization primitives to sync the contents of auxiliary buffers, which can mask synchronization bugs present in the app.

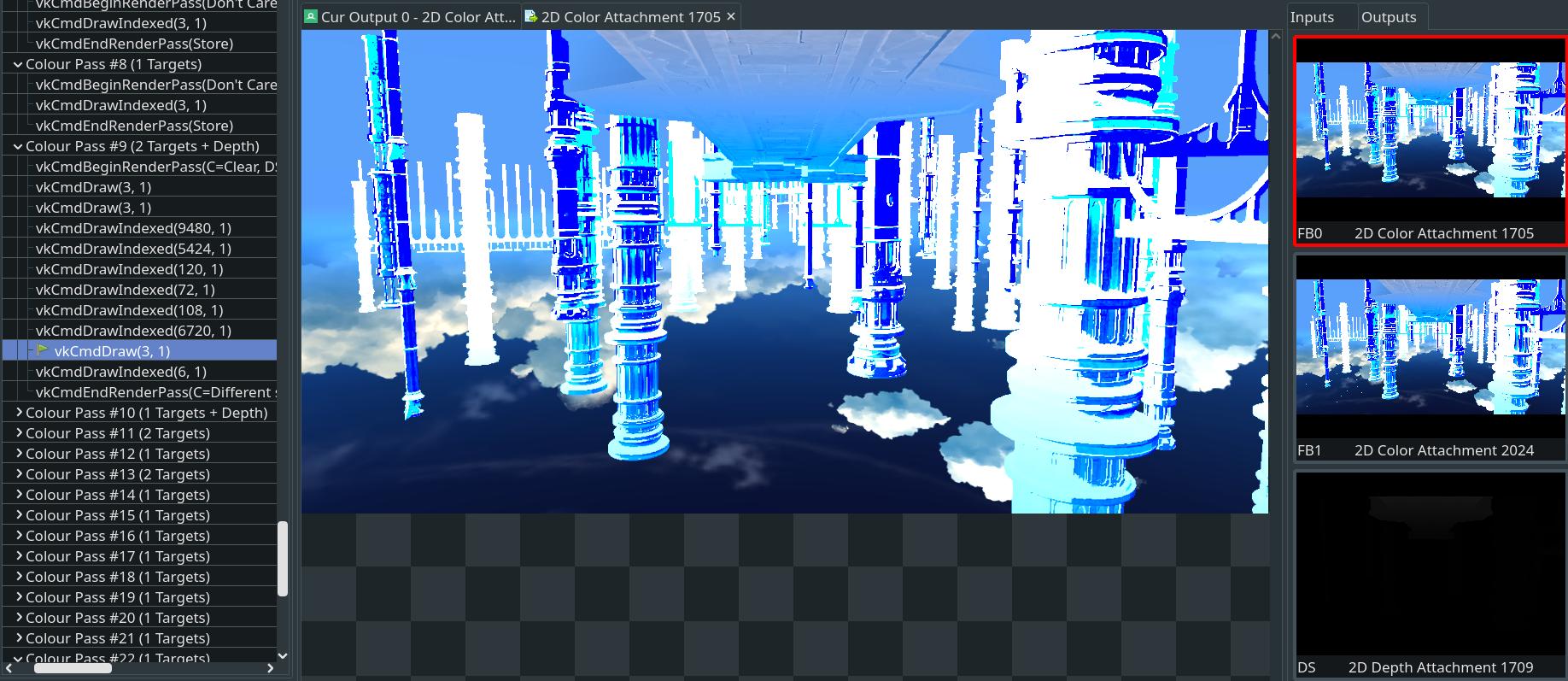

Finally, RenderDoc 1.14, released at the end of May, also supports Vulkan’s shader printf statements and will let you take a look at the print statements produced during a draw call. Furthermore, the print statements don’t have to be present in the original shader. You can also use the shader edit system to insert them on the fly and use them to debug the results of a particular shader invocation. Isn’t that awesome? Great work by Baldur Karlsson as always.

PS: As a happy coincidence, just yesterday LunarG published a white paper on Vulkan’s debug printf with additional information on this excellent feature. Be sure to check it out!

In order to expose OpenGL 4.6 the last missing feature in llvmpipe is anisotropic texture filtering. Adding support for this also allows lavapipe to expose the Vulkan samplerAnisotropy feature.

I started writing anisotropic support more than 6 months ago. At the time we were trying to deprecate the classic swrast driver, and someone pointed out it had support for anisotropic filtering. This support had also been ported to the softpipe driver, but never to llvmpipe.

I had also considered porting SwiftShader's anisotropic support, but since I was told the softpipe code was functional and had users I based my llvmpipe port on that.

Porting the code to llvmpipe means rewriting it to generate LLVM IR using the llvmpipe vector processing code. This is a lot messier than just writing linear processing code, and when I thought I had it working it passed GL CTS, but failed the VK CTS. The results also looked worse to my eye than I'd have thought was acceptable, and softpipe seemed to be as bad.

Once I swung back around to this I decided to port the VK CTS test to GL and run it on the softpipe and llvmpipe code. Initially llvmpipe had some more bugs to solve, especially where the mipmap levels were being chosen, but once I'd finished aligning softpipe and llvmpipe I started digging into why the softpipe code wasn't as nice as I expected.

The softpipe code was based on an implementation of an Elliptical Weighted Average Filter (EWA). The paper "Creating Raster Omnimax Images from Multiple Perspective Views Using the Elliptical Weighted Average Filter" described this. I sat down with the paper and softpipe code and eventually found the one line where they diverged.[1] This turned out to be a bug introduced in a refactoring 5 years ago, and nobody had noticed or tracked it down.

I then ported the same fix to my llvmpipe code, and VK CTS passes. I also optimized the llvmpipe code a bit to avoid doing pointless sampling and cleaned things up. This code landed in [2] today.

For GL4.6 there are still some fixes in other areas.

[1] https://gitlab.freedesktop.org/mesa/mesa/-/merge_requests/11917

[2] https://gitlab.freedesktop.org/mesa/mesa/-/merge_requests/8804

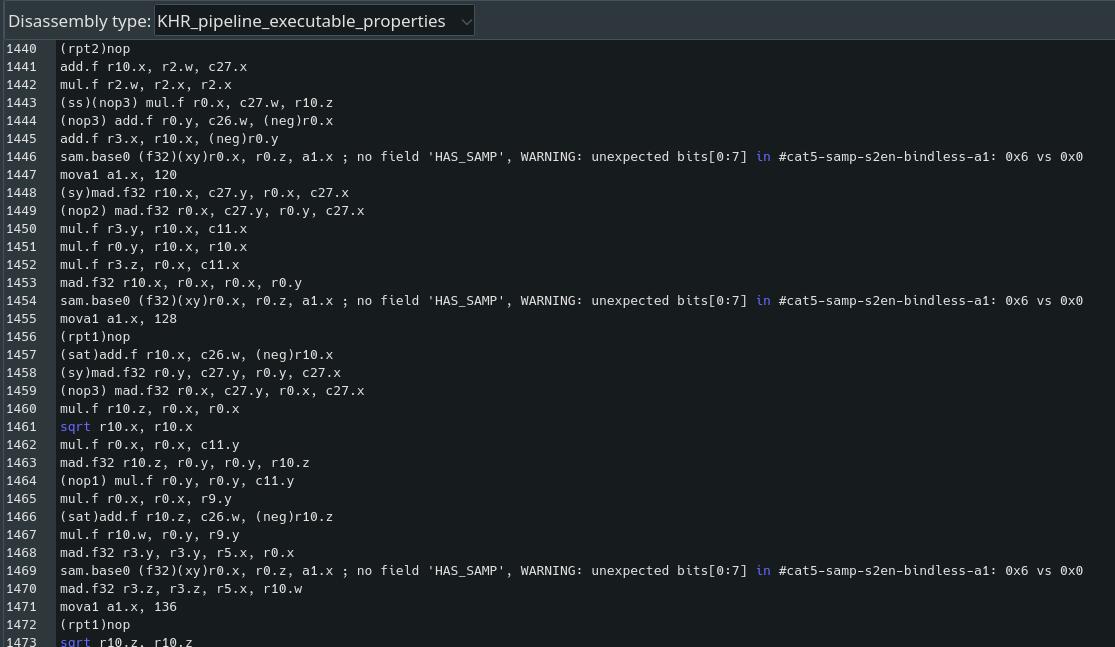

After a month of reverse-engineering, we’re excited to release documentation on the Valhall instruction set, available as a PDF. The findings are summarized in an XML architecture description for machine consumption. In tandem with the documentation, we’ve developed a Valhall assembler and disassembler as a reverse-engineering aid.

Valhall is the fourth Arm® Mali™ architecture and the fifth Mali instruction set. It is implemented in the Arm® Mali™-G78, the most recently released Mali hardware, and Valhall will continue to be implemented in Mali products yet to come.

Each architecture represents a paradigm shift from the last. Midgard generalizes the Utgard pixel processor to support compute shaders by unifying the shader stages, adding general purpose memory access, and supporting integers of various bit sizes. Bifrost scalarizes Midgard, transitioning away from the fixed 4-channel vector (vec4) architecture of Utgard and Midgard to instead rely on warp-based execution for parallelism, better using the hardware on modern workloads. Valhall linearizes Bifrost, removing the Very Long Instruction Word mechanisms of its predecessors. Valhall replaces the compiler’s static scheduling with hardware dynamic scheduling, trading additional control hardware for higher average performance. That means padding with “no operation” instructions is no longer required, which may decrease code size, promising better instruction cache use.

All information in this post and the linked PDF and XML is published in good faith and for general information purposes only. We do not make any warranties about the completeness, reliability and accuracy of this information. Any action you take upon the information you find here is strictly at your own risk. We will not be liable for any losses and/or damages in connection with the use of this information.

While we strive to make the information as accurate as possible, we make no claims, promises, or guarantees about its accuracy, completeness, or adequacy. We expressly disclaim liability for content, errors and omissions in this information.

Let’s dig in.

In June, Collabora procured an International edition of the Samsung Galaxy S21 phone, powered by a system-on-chip with Mali G78. Although Arm announced Valhall with the Mali G77 in May 2019, roll out has been slow due to the COVID-19 pandemic. At the time of writing, there are not yet Linux friendly devices with a Valhall chip, forcing use of a locked down Android device. There’s a silver lining: we have a head start on the reverse-engineering, so by the time hacker-friendly devices arrive with Valhall GPUs, we can have open source drivers ready.

Android complicates reverse-engineering (though not as much as macOS). On Linux, we can compile a library on the device to intercept data sent to the GPU. On Android, we must cross-compile from a desktop with the Android Native Development Kit, ironically software that doesn’t run on Arm processors. Further, where on Linux we can track the standard system calls, Android device drivers replace the standard open() system call with a complicated Android-only “binder” interface. Adapting the library to support binder would be gnarly, but do we have to? We could sprinkle in one little hack anywhere we see a file descriptor without the file name.

#include <stdbool.h>
#include <stdio.h>
#include <string.h>
#include <unistd.h>

#define MALI0 "/dev/mali0"

/* Returns true if the file descriptor refers to the Mali device node */
bool is_mali(int fd)
{
    char in[128] = { 0 }, out[128] = { 0 };

    snprintf(in, sizeof(in), "/proc/self/fd/%d", fd);
    ssize_t count = readlink(in, out, sizeof(out) - 1);

    return count == (ssize_t) strlen(MALI0) && strncmp(out, MALI0, count) == 0;
}

Now we can hook the Mali ioctl() calls without tracing binder and easily dump graphics memory.

We’re interested in the new instruction set, so we’re looking for the compiled shader binaries in memory. There’s a chicken-and-egg problem: we need to find the shaders to reverse-engineer them, but we need to reverse-engineer the shaders to know what to look for. Fortunately, there’s an escape hatch. The proprietary Mali drivers allow an OpenGL application to query the compiled binary with the ARM_mali_program_binary extension, returning a file in the Mali Binary Shader format. That format was reverse-engineered years ago by Connor Abbott for earlier Mali architectures, and the basic structure is unchanged in Valhall. Our task is simple: compile a test shader, dump both GPU memory and the Mali Binary Shader, and find the common section. Searching for the common bytes produces an address in executable graphics memory, in this case 0x7f0002de00. Searching for that address in turn finds the “shader program descriptor” which references it.

18 00 00 80 00 10 00 00 00 DE 02 00 7F 00 00 00

Another search shows this descriptor’s address in the payload of an index-driven vertex shading job for graphics or a compute job for OpenCL. Those jobs contain the Job Manager header introduced a decade ago for Midgard, so we understand them well: they form a linked list of jobs, and only the first job is passed to the kernel. The kernel interface has a “job chain” parameter on the submit system call taking a GPU address. We understand the kernel interface well as it is open source due to kernel licensing requirements.
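The pointer chasing above boils down to searching a memory dump for the little-endian encoding of an address. A minimal Python sketch, assuming 64-bit little-endian GPU pointers (which matches the descriptor bytes quoted above) and using a hypothetical find_pointer helper:

```python
import struct


def find_pointer(memory, address):
    """Return every offset in `memory` holding `address` as a 64-bit LE value."""
    needle = struct.pack("<Q", address)
    offsets, start = [], 0
    while (pos := memory.find(needle, start)) != -1:
        offsets.append(pos)
        start = pos + 1
    return offsets


# The shader program descriptor bytes quoted in the text
descriptor = bytes.fromhex("18 00 00 80 00 10 00 00 00 DE 02 00 7F 00 00 00")

print(find_pointer(descriptor, 0x7F0002DE00))   # prints: [8]
```

The shader address sits in the second 8 bytes of the descriptor. Repeating the same search with the descriptor's own address as the needle finds the job that references it.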

With each layer identified, we teach the wrapper library to chase the pointers and dump every shader executed, enabling us to reverse-engineer the new instruction set and develop a disassembler.

Reverse-engineering in the dark is possible, but it’s easier to have some light. While waiting for the Valhall phone to arrive, I read everything Arm made public about the instruction set, particularly this article from Anandtech. Without lifting a finger, that article tells us Valhall is…

It also says that Valhall has a 16KB instruction cache, holding 2048 instructions. Since Valhall has a regular encoding, we divide 16384 bytes by 2048 instructions to find a Valhall instruction is 8 bytes. Our first attempt at a “disassembler” can print hex dumps of every 8 bytes on a line; our calculation ensures that is the correct segmentation.
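That first "disassembler" really is just a few lines. A Python sketch, with the instruction size derived from the two cache figures above; the function name and the sample bytes are placeholders, not real Valhall code:

```python
ICACHE_BYTES = 16 * 1024
ICACHE_INSTRUCTIONS = 2048
INSTRUCTION_BYTES = ICACHE_BYTES // ICACHE_INSTRUCTIONS   # 8


def hexdump_instructions(code):
    """One line of hex per 8-byte instruction."""
    lines = []
    for offset in range(0, len(code), INSTRUCTION_BYTES):
        chunk = code[offset:offset + INSTRUCTION_BYTES]
        lines.append(" ".join(f"{byte:02x}" for byte in chunk))
    return lines


# Placeholder bytes standing in for a dumped shader binary
for line in hexdump_instructions(bytes(range(16))):
    print(line)
```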

From here on, reverse-engineering is iterative. We have a baseline level of knowledge, and we want to grow that knowledge. To do so, we input test programs into the proprietary driver to observe the output, then perturb the input program to see how the output changes.

As we discover new facts about the architecture, we update our disassembler, demonstrating new knowledge and separating the known from the unknown. Ideally, we encode these facts in a machine-readable file forming a single reference for the architecture. From this file, we can generate a disassembler, an assembler, an instruction encoder, and documentation. For Valhall, I use an XML file, resembling Bifrost’s equivalent XML.

Filling out this file is usually straightforward though tedious. Modern APIs are large, so there is a great deal of effort required to map the API requirements to the hardware features.

However, some hardware features do not map to any API. Here are subtler tales from reversing Valhall.

Arithmetic is faster than memory access, so modern processors execute arithmetic in parallel with pending memory accesses. Modern GPU architectures require the compiler to manage this mechanism by analyzing the program and instructing the hardware to wait for the results before they’re needed.

For this purpose, Bifrost uses an explicit scoreboarding system. Bifrost groups up to 16 instructions together in a clause, and each clause has a fixed header. The compiler assigns a “dependency slot” between 0 and 7 to each clause, specified in the header. Each clause can wait on any set of slots, specified with another 8 bits in the clause header. Specifying dependencies per-clause is a compromise between precision and code size.

We expect Valhall to feature a similar scheme, but Valhall doesn’t have clauses or clause headers, so where does it specify this info?

Studying compiled shaders, we see the last byte of every instruction is usually zero. But when the result of a memory access is first read, the previous instruction has a bit set in the last byte. Which bit is set depends on the number of memory accesses in flight, so it seems the last byte encodes a dependency wait. The memory access instructions themselves are often zero in their last bytes, so it doesn’t look like the last byte is used to encode the dependency slot – but executing many memory access instructions at once and comparing the bits, we see a single 2-bit field stands out as differing. The dependency slot is specified inside the instruction, not in the metadata.
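The "compare the bits" step can be sketched in Python: given several encodings of the same instruction that should differ only in one property, find the bit positions that are not constant. The sample words below are synthetic, and the field position (bits 30 and 31) is made up for the example; it is not the real location of Valhall's slot field.

```python
def varying_bits(words, width=64):
    """Bit positions that are not identical across all instruction words."""
    always_set = words[0]
    ever_set = words[0]
    for word in words[1:]:
        always_set &= word
        ever_set |= word
    varying = always_set ^ ever_set
    return [bit for bit in range(width) if (varying >> bit) & 1]


# Synthetic example: four otherwise identical instructions whose only
# difference is a 2-bit field at a hypothetical position.
BASE = 0x0000000012345678
SLOT_SHIFT = 30
samples = [BASE | (slot << SLOT_SHIFT) for slot in range(4)]

print(varying_bits(samples))   # prints: [30, 31]
```

A contiguous run of varying bits whose width matches the number of values you could provoke is a good candidate for a field.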

What makes this design practical? Two factors.

One, only the waits need to be specified in general. Arithmetic instructions don’t need a dependency slot, since they complete immediately. The longest message-passing instruction is shorter than the longest arithmetic instruction, so there is space in the instruction itself to specify the slot only when needed.

Two, the performance gain from adding extra slots levels off quickly. Valhall cuts back on Bifrost’s 8 slots (6 general purpose). Instead it has 4 or 5 slots, with only 3 general purpose, saving 4 bits for every instruction.

This story exemplifies a general pattern: Valhall is a flattening of Bifrost. Alternatively, Bifrost is “Valhall with clauses”, although that description is an anachronism. Why does Bifrost have clauses, and why does Valhall remove them? The pattern in this story of dependency waits generalizes to answer the question: grouping many instructions into Bifrost clauses allows the hardware to amortize operations like dependency waits and reduce the hardware gate count of the shader core. However, clauses add substantial encoding overhead, compiler complexity, and imprecision. Bifrost optimizes for die space; Valhall optimizes for performance.

Hardware features that are unused by the proprietary driver are a perennial challenge for reverse-engineering. However, we have a complete Bifrost reference at our disposal, and Valhall instructions are usually equivalent to Bifrost. Special instructions and modes from Bifrost cast a shadow on Valhall, showing where there are gaps in our knowledge. Sometimes these gaps are impractical to close, short of brute-forcing the encoding space. Other times we can transfer knowledge and make good guesses.

Consider the Cross Lane PERmute instruction, CLPER, which takes a register and the index of another lane in the warp, and returns the value of the register in the specified lane. CLPER is a “subgroup operation”, required for Vulkan and used to implement screen-space derivatives in fragment shaders. On Bifrost, the CLPER instruction is defined as:

<ins name="+CLPER.i32" mask="0xfc000" exact="0x7c000">

<src start="0" mask="0x7"/>

<src start="3"/>

<mod name="lane_op" start="6" size="2">

<opt>none</opt>

<opt>xor</opt>

<opt>accumulate</opt>

<opt>shift</opt>

</mod>

<mod name="subgroup" start="8" size="2">

<opt>subgroup2</opt>

<opt>subgroup4</opt>

<opt>subgroup8</opt>

</mod>

<mod name="inactive_result" start="10" size="4">

<opt>zero</opt>

<opt>umax</opt>

....

<opt>v2infn</opt>

<opt>v2inf</opt>

</mod>

</ins>

We expect a similar definition for Valhall. One modification is needed: Valhall warps contain 16 threads, so there should be a subgroup16 option after subgroup8, with the natural binary encoding 11. Looking at a binary Valhall CLPER instruction, we see a 11 pair corresponding to the subgroup field. Similarly experimenting with different subgroup operations in OpenCL lets us figure out the lane_op field. We end up with an instruction definition like:

<ins name="CLPER.u32" title="Cross-lane permute" dests="1" opcode="0xA0" opcode2="0xF">

<src/>

<src widen="true"/>

<subgroup/>

<lane_op/>

</ins>

Notice we do not specify the encoding in the Valhall XML, since Valhall encoding is regular. Also notice we lack the inactive_result modifier. On Bifrost, inactive_result specifies the value returned if the program attempts to access an inactive lane. We may guess Valhall has the same mechanism, but that modifier is not directly controllable by current APIs. How do we proceed?

If we can run code on the device, we can experiment with the instruction. Inactive lanes may be caused by divergent control flow, where one lane in the warp branches but another lane does not, forcing the hardware to execute only part of the warp. After reverse-engineering Valhall’s branch instructions, we can construct a situation where a single lane is active and the rest are inactive. Then we insert a CLPER instruction with extra bits set, store the result to main memory, and print the result. This assembly program does the trick:

# Elect a single lane

BRANCHZ.reconverge.id lane_id, offset:3

# Try to read a value from an inactive thread

CLPER.u32 r0, r0, 0x01000000.b3, inactive_result:VALUE

# Store the value

STORE.i32.slot0.reconverge @r0, u0, offset:0

# End shader

NOP.return

With the assembler we’re writing, we can assemble this compute kernel. How do we run it on the device without knowing the GPU data structures required to dispatch compute shaders? We make use of another classic reverse-engineering technique: instead of writing the initialization code ourselves, piggyback off the proprietary driver. Our wrapper library allows us to access graphics memory before the driver submits work to the hardware. We use this to read the memory, but we may also modify it. We already identified the shader program descriptor, so we can inject our own shaders. From here, we can jury-rig a script to execute arbitrary shader binaries on the device in the context of an OpenCL application running under the proprietary driver.

Putting it together, we find the inactive_result bits in the CLPER encoding and write one more script to dump all values.

for ((i = 0 ; i < 16 ; i++)); do

sed -e "s/VALUE/$i/" shader.asm | python3 asm.py shader.bin

adb push shader.bin /data/local/tmp/

adb shell 'REPLACE=/data/local/tmp/shader.bin '\

'LD_PRELOAD=/data/local/tmp/panwrap.so '\

'/data/local/tmp/test-opencl'

done

The script’s output contains sixteen possibilities – and they line up perfectly with Bifrost’s sixteen options. Success.
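To make the Bifrost field layout quoted earlier concrete, here is a minimal Python sketch that pulls the modifier fields out of an instruction word. The start bits, sizes, opcode mask and option names are copied from the Bifrost XML above; the 3-bit width of each source field is an assumption inferred from where the next field starts, and `decode_clper` is a hypothetical helper, not part of any real tool. The elided inactive_result option names are left as raw integers rather than guessed.

```python
# Field layout copied from the Bifrost +CLPER.i32 XML: (start bit, size in bits).
FIELDS = {
    "src0": (0, 3),              # assumption: 3 bits, since the next field starts at bit 3
    "src1": (3, 3),              # assumption: 3 bits, since lane_op starts at bit 6
    "lane_op": (6, 2),
    "subgroup": (8, 2),
    "inactive_result": (10, 4),  # option names are elided in the XML, so keep the raw value
}
LANE_OPS = ["none", "xor", "accumulate", "shift"]
SUBGROUPS = ["subgroup2", "subgroup4", "subgroup8"]  # index 3 is not listed for Bifrost

OPCODE_MASK = 0xFC000
OPCODE_EXACT = 0x7C000


def decode_clper(word):
    """Split a Bifrost +CLPER.i32 word into its fields (illustrative only)."""
    if (word & OPCODE_MASK) != OPCODE_EXACT:
        raise ValueError("not a +CLPER.i32 encoding")
    raw = {
        name: (word >> start) & ((1 << size) - 1)
        for name, (start, size) in FIELDS.items()
    }
    subgroup = raw["subgroup"]
    return {
        "src0": raw["src0"],
        "src1": raw["src1"],
        "lane_op": LANE_OPS[raw["lane_op"]],
        "subgroup": SUBGROUPS[subgroup] if subgroup < len(SUBGROUPS) else f"unlisted({subgroup})",
        "inactive_result": raw["inactive_result"],
    }


if __name__ == "__main__":
    # Build a word by hand: lane_op=xor, subgroup=subgroup8, inactive_result=5.
    word = OPCODE_EXACT | 3 | (4 << 3) | (1 << 6) | (2 << 8) | (5 << 10)
    print(decode_clper(word))
```

The 4-bit inactive_result field is why the sweep script loops over exactly sixteen values. On Valhall the text expects the otherwise unlisted subgroup value 3 (binary 11) to mean subgroup16, but the Valhall bit positions are not given here, so the sketch sticks to the Bifrost layout.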

There’s more to learn about Valhall, but we’ve reverse-engineered enough to develop a Valhall compiler. As Valhall is a simplification of Bifrost, and we’ve already developed a free and open source compiler for Bifrost, this task is within reach. Indeed, adapting the Bifrost compiler to Valhall will require refactoring but little new development.

Mali G78 does bring changes beyond the instruction set. The data structures are changed to reduce Vulkan driver overhead. For example, the monolithic “Renderer State Descriptor” on Bifrost is split into a “Shader Program Descriptor” and a “Depth Stencil Descriptor”, so changes to the depth/stencil state no longer require the driver to re-emit shader state. True, the changes require more reverse-engineering. Fortunately, many data structures are adapted from Bifrost requiring few changes to the Mesa driver.

Overall, supporting Valhall in Mesa is within reach. If you’re designing a Linux-friendly device with Valhall and looking for open source drivers, please reach out!

Originally posted on Collabora’s blog

Some days ago my Igalia colleague Adrián Pérez pointed us to mold, a new drop-in replacement for existing Unix linkers created by the original author of LLVM lld. While mold is pretty new and does not aim to be 100% compatible with GNU ld, GNU gold or LLVM lld (at least as of the time I’m writing this), I noticed the benchmark table in its README file also painted a pretty picture about the performance of lld, if inferior to that of mold.

In my job at Igalia I work most of the time on VK-GL-CTS, Vulkan and OpenGL’s Conformance Test Suite, which contains thousands of tests for OpenGL and Vulkan. These tests are provided by different executable files and the Vulkan tests on which I’m focused are contained in a binary called deqp-vk. When built with debug information, deqp-vk can be quite large. A recent build, for example, takes up 369 MB on my drive. But the worst part is that linking the binary typically takes around 25 seconds on my work laptop.

$ time cmakebuild.sh --target deqp-vk

[6/6] Linking CXX executable external/vulkancts/modules/vulkan/deqp-vk

real 0m25.137s

user 0m22.280s

sys 0m3.440s

I had never paid much attention to the linker before, always relying on the default choice in Fedora or any other distribution. However, I decided to install lld, which has an official package, and gave it a try. You Will Not Believe What Happened Next.

$ time cmakebuild.sh --target deqp-vk

[6/6] Linking CXX executable external/vulkancts/modules/vulkan/deqp-vk

real 0m2.622s

user 0m5.456s

sys 0m1.764s

lld is capable of correctly linking deqp-vk in 1/10th of the time the default linker (GNU ld) takes to do the same job. If you want to try lld yourself you have several options. Ideally, you’d be able to run update-alternatives --set ld /usr/bin/lld as root but that option is notably not available in Fedora. There was a proposal to make that work but it never materialized, so it cannot be made the default system-wide linker.

However, depending on the build system used by a particular project, there should be a way to make it use lld instead of /usr/bin/ld. For example, VK-GL-CTS uses CMake, which invokes the compiler to link executable files, instead of calling the linker directly, which would be unusual. Both GCC and Clang can be passed -fuse-ld=lld as a command line option to use lld instead of the default linker. That flag should be added to CMake’s CMAKE_EXE_LINKER_FLAGS variable, either by reconfiguring an existing project with, for example, ccmake, or by adding the flag to the LDFLAGS environment variable before running CMake on a build directory for the first time.
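As a rough illustration of the CMake route, the sketch below assembles a configure command that passes -fuse-ld=lld through CMAKE_EXE_LINKER_FLAGS. The helper name `cmake_configure_command` and the directory names are hypothetical; the only pieces taken from the text are the variable name and the flag. It uses CMake's `-S`/`-B` options, which assumes a reasonably recent CMake.

```python
import shlex


def cmake_configure_command(source_dir, build_dir, linker="lld"):
    """Return the argv for configuring a fresh build directory with a custom linker."""
    return [
        "cmake",
        "-S", source_dir,   # source tree (hypothetical path)
        "-B", build_dir,    # build directory, configured for the first time
        # The compiler driver (GCC or Clang) receives this flag when linking executables.
        f"-DCMAKE_EXE_LINKER_FLAGS=-fuse-ld={linker}",
    ]


if __name__ == "__main__":
    # Print the command as a copy-pasteable shell line instead of running it.
    print(shlex.join(cmake_configure_command("VK-GL-CTS", "build")))
```

Printing rather than executing keeps the sketch safe to run anywhere; passing the list to `subprocess.run` would be the obvious next step on a machine that has CMake and lld installed.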

I’m looking forward to using the mold linker and its multithreading capabilities in the future. In the meantime, I’m very happy to have checked out lld. It’s not often that a simple tooling change like this one gives me such a clear advantage.

That’s right.

Zink(-wip) now fully supports GL_KHR_blend_equation_advanced, which means ES 3.2 is a go (once my local CI clears me to push today’s snapshot).

And all it took was one brief exchange with a top Mesa reviewer who is incidentally rumored to be undergoing training deep in the mountains to become an expert BBQ master on the extremely professional #zink channel on OFTC:

That's the thing. You can totally do it in Zink.

My mind was blown.

Why hadn’t I thought of that sooner?

I could just…do it? Just like that? And then it’d be done?

Truly the experts are on a different level from us mortals.

So now it’s done, and that means zink is finished. I don’t expect there will be any more work to do now that the final boss has been defeated. Don’t even bother trying to file bug reports.

You may not like it, but this is what peak Friday looks like.

This is going to be less of a technical post and more of a have you thought about post from me personally (usual disclaimer: this post represents only my views). With that said, I think this is more important than the average post here, meaning that expectations should be set somewhere between I need to stop everything else I’m doing until I finish reading and this is the most important event in my life.

Let’s talk about open source. No, Open Source. The idea of it.

Those of you who are veterans are rolling your eyes. Another post about the glory of Open Source.

The thing about Open Source is that it’s sort of whatever you make of it. At its core, it’s about getting people together to solve a problem—community building. Whether that community is large or small, the goal is the same: write some quality software.

To that end, you’ve got your usual corporate powerpoint slide of community roles:

In Mesa, the maintainer and developer roles are mostly the same among core contributors: these are the people who write the code that gets posted about on all the news sites.

The reviewer is a bit more mysterious though. Who are reviewers, and what separates them from the others?

Reviewers are the grease that makes the project work. There’s really no other way of saying it.

Outside of a few components of Mesa that are effectively the wild west, without any form of oversight or approval needed for changes to be landed, every driver and utility in the tree requires that changes undergo review before they land. This means that each and every patch which affects code or build has to have a person stop everything else they’re doing and physically scroll through each patch, line-by-line, then add a Reviewed-by or Acked-by tag.

If you’re unclear as to the meanings of these tags, consider it like you’re going skydiving with someone you’ve never met before who has been in charge of preparing your parachute:

It’s then up to the developer to decide whether to merge the code based on the feedback given to them by the reviewer.

This, of course, assumes they get feedback at all.

Too often on news sites (and in certain corporate metrics) you’ll see something like “Patches McCodesAlot, working for GreatCodingCompany, authored the most code changes for this release cycle (9001 patches), which is over 100x more than the next highest contributor.”

The manager at a company sees this and thinks “I’ll send this up the chain. We should poach Patches so we can have greater control over this project which underpins our entire business strategy. Also it’ll make my powerpoint pie charts look rad.”

The casual reader sees this and says “Wow, Patches is awesome! Without Patches, I probably couldn’t even play Fororantwatch on my Linux gaming desktop!”

But how do the patches that Patches writes get merged into the release? Unless Patches works exclusively in one of the undermaintained areas of the project, in which case it’s unlikely that their work is being widely used, the odds are that someone’s pulling a huge lift on the review side to enable all of those patches landing into the repository.

This is the job of the reviewer.

As this Mesa release cycle starts to wind down, I hope that readers of this blog and news sites can take a moment to look past Patches McCodesAlot and see the people who make it possible for Patches to land so many damn patches.

At the time of this post, this is what the top 10 reviewers managed to accomplish over the past few months:

| Number of Reviews | Reviewer Name | Corporate Affiliation |
|---|---|---|
| 91 | Erik Faye-Lund | Collabora |
| 94 | Samuel Pitoiset | Valve |
| 99 | Alejandro Piñeiro | Igalia |
| 115 | Kenneth Graunke | Intel |
| 116 | Bas Nieuwenhuizen | Blogger |
| 121 | Lionel Landwerlin | Intel |
| 128 | Adam Jackson | Red Hat |
| 140 | Marek Olšák | AMD |
| 176 | Jason Ekstrand | Intel |
| 300 | Dave Airlie | Red Hat |

Summed up, that’s over 1300 patches reviewed! For perspective, that’s around 30% of all the patches in this release, and it’s about 70% of the total number of patches that zink has received in the course of its existence.

Looking at it another way though, this is over 1300 patches that other people wrote which were able to land because these people took the time to look over the proposed changes—to triple-check the parachutes, as it were.

So thanks, Mesa reviewers. The project wouldn’t exist without all of you (and your generous employers, who should be blasting these metrics in the press when they talk about being good Open Source citizens).

I’d be remiss if I didn’t also mention the people working on Mesa CI. There’s no patch counts or review counts or anything to recognize everyone hard at work here, but CI is what keeps the triangles blasting out of your GPUs looking how they should.

Thanks, CI team. You’re awesome.

According to a recent metric, the Mesa CI infrastructure only had a 0.6% accidental failure rate. That’s pretty good considering how many thousands of jobs run every day.

This year, I decided to participate as a speaker in the esLibre 2021 conference. esLibre is a Spanish free software conference that covers a lot of different topics related to open-source projects, from the technical point of view to their social impact.

This year the conference had talks about game development with Godot, KDE, LibreOffice, Free Software in Universities among many others. Check out the program.

This was my first time participating in this conference and I enjoyed it a lot. Huge applause to the organization team for the enormous work of organizing this edition, for helping out the speakers with different testing days and for their kindness in replying to any question from me and other attendees. They did a superb job!

My talk was an introduction to Mesa, where I covered things like where Mesa sits in the open-source graphics stack, a summary of what it does, the drivers implemented in Mesa, how our community is organized and how to contribute to it. If you know Spanish, you can check it out here (PDF). But in case you want an English version of it, this talk is very similar to the one I gave at Ubucon Europe 2018.

My esLibre talk was recorded as well! I’ll update this post with the link to the recording once it is publicly available.

Enjoy it!

Today is part 3 of my Outreachy Saga. It's been 5 weeks of my Outreachy internship, and not everything is sailing as smoothly as I would like! Why?? Because I had a little problem with my setup, and I was stuck for 2 days without working until I was able to get it right. As I said in my introduction post, one thing that I'm learning at my internship is "learning": not everything goes as I would like, and sometimes it is necessary to stop, breathe, and redo everything. And after redoing everything, it is so rewarding when things start to flow.

This week's post focuses on the Linux kernel community in which I'm interning and the project I'm working on. So, let's get started!

A little context: the core of an Operating System (OS) is the kernel, which is responsible for integrating the physical devices (hardware) of the computer with the programs (software). In a Linux OS this core is known as the Linux kernel; it has open source code and is freely available to the community. As I said in my introduction blog https://open-sourceress.com/outreachy-introduction/, the community is a set of people and companies that want to collaborate in the development of the system.

Thanks to these contributions the Linux kernel has grown a lot: with over 8 million lines of code and well over 1000 contributors to each release, it is one of the largest and most active free software projects in existence. The kernel codebase has been logically broken down into a set of subsystems: network, architecture-specific support (x86, ARM, MIPS, ...), memory management, video devices, real-time systems, among others. This makes it a little easier to manage the contributions made to the kernel, as most subsystems have a designated maintainer who handles verifying and accepting contributions before they are incorporated into the Linux kernel mainline.

About my project at Linux Kernel – “Improvements to DRI-devel (aka kernel GPU subsystem)“

In laptops, tablets, phones, and lots of other places, the GPU/display uses more silicon die space than everything else combined (humans are mostly visual people after all). dri-devel (and the wider set of projects under the X.org Foundation's umbrella) is the community that makes this all work and shine.

In my project, I would like to create new features and better understand how the DRM core works. To achieve this goal, I chose these tasks: clean up the debugfs support and remove custom dumb_map_offset implementations.

Anyone can contribute to the development of the kernel: just develop a patch, send it to the appropriate mailing list, wait for feedback from the community, fix whatever it takes, and that's it.

But yes, I know well that starting to contribute to the kernel is scary, especially for anyone who is a noob (beginner, newbie) in the Free Software development world and doesn't know where to start.

But there are several things and initiatives to help, for example:

A beginners guide to linux kernel development

Write and Submit your first Linux kernel Patch

Outreachy is a paid, remote internship program. Outreachy's goal is to support people from groups underrepresented in tech. We help newcomers to free software and open source make their first contributions. Outreachy provides internships to open source work. People apply from all around the world. Interns work remotely and are not required to move. Interns are paid a stipend of $5,500 USD for the three month internship. Interns have a $500 USD travel stipend to attend conferences or events. Interns work with experienced mentors from open source communities. Outreachy internship projects may include programming, user experience, documentation, illustration, graphical design, or data science. Interns often find employment after their internship with Outreachy sponsors or in jobs that use the skills they learned during their internship.

Google Summer of Code is a global program focused on bringing more student developers into open source software development. Students work with an open source organization on a 10 week programming project during their break from school.

In Brazil, I met 2 of these groups

In Campinas - LKCamp

In São Paulo - FLUSP

It's scary, I know, but as you can see there are several initiatives and resources to help you start contributing to the Linux kernel. So don't be afraid: try to contribute to the Linux kernel and ask the community for help. There will always be someone who can help you!

Ah!!! And I almost forgot: if you need help, you can send me a message. I'm also just starting out in this world of kernel contribution, but I'll do my best to help. Besides showing my Outreachy internship progress, my goal with the blog is also to create content that helps beginners with kernel contribution and development, both in English and in Portuguese (my native language).

Thank you for following me so far, please feel free to comment! And stay tuned to the next chapters of this Saga!!!

Take care and have a great day!

For today, at least.

Today, this blog is a Gallium blog. And it’s a momentous day indeed.

We all know what this is:

It’s a screenshot of Portal 2 with the Gallium HUD activated and VSync disabled.

But what driver is that underneath?

Well, for today’s blog it’s RadeonSI, the reference implementation of a Gallium driver.

And why is this, I can hear you all asking.

What if I told you that this screenshot with 10% higher FPS is also Portal 2 with VSync disabled on RadeonSI using one trick that graphics developers WON’T TELL YOU:

Interested?

We did it.

By assembling an elite team of individuals with a few minutes to spare here and there over the past week, including:

it is now (technically) possible to run DXVK-compatible Source Engine games through Gallium’s Nine state tracker, providing a native D3D9 runtime.

Is your Portal 2 in-game FPS sad and barely even 500 like this screenshot?

Why not jack it up to more than TWICE THAT NUMBER* with riced out, GPU-fan-shredding technology that Mesa Gallium drivers have been shipping for years?

This post does not represent any form of official statement or address from Valve and is only a small project that was started out of boredom while I waited for CTS runs to finish.

This post also does not make any claims or statements regarding performance on other drivers, or performance comparisons using alternative graphics emulation layers, though whew, it sure would be interesting to see what those kinds of numbers look like!

The Khronos Group today released a new version of the Vulkan specification that includes the VK_EXT_multi_draw extension. This new extension has been championed by Mike Blumenkrantz, contracted by Valve to work on Zink, an OpenGL implementation that’s part of Mesa and runs on top of Vulkan. Mike has been working very hard to make OpenGL-on-Vulkan performant and better, and came up with this extension to close an existing gap between the two APIs. As part of the ongoing collaboration between Igalia and Valve, I had the chance to participate in the release process by reviewing the specification text in depth, providing feedback and fixes, and writing a set of CTS tests to check conformance for drivers implementing the extension. As you can see in the contributors list, VK_EXT_multi_draw had input and feedback from other vendors as well. Special mention to Jason Ekstrand from Intel, who provided an initial review of the text, and Piers Daniell from NVIDIA, who was also involved since the early stages.

Thanks to VK_EXT_multi_draw, Vulkan will have equivalents to the glMultiDrawArrays and glMultiDrawElements functions from OpenGL. They’re called vkCmdDrawMultiEXT and vkCmdDrawMultiIndexedEXT. These two new functions allow recording a batch of draw commands in a command buffer using a single call, and they can be used in situations where an application would be recording a high number of draws without changing state. Although Vulkan already had mechanisms that allowed applications to record batches of draw commands in the form of indirect draws, these need the array of draw parameters to reside in a GPU-accessible buffer. VK_EXT_multi_draw, on the other hand, lets applications provide arrays of draw parameters using CPU memory.

vkCmdDrawMultiEXT is essentially equivalent to calling vkCmdDraw multiple times in a row, and vkCmdDrawMultiIndexedEXT does the same for vkCmdDrawIndexed. To improve application performance and reduce CPU overhead, Vulkan drivers are allowed and encouraged to omit checks for API function arguments provided by applications (these correctness checks are provided by the Vulkan Validation Layers mainly during application development), and thanks to mechanisms like primary and secondary command buffers, Vulkan makes it possible to prepare sequences of commands for the GPU to execute using multiple threads and CPU cores. In this situation, you may be wondering how much of an improvement the new functions provide apart from saving a few microseconds processing some function calls. In other words, what’s the practical difference between calling vkCmdDraw a thousand times and batching a thousand draws using vkCmdDrawMultiEXT?

The answer is that most of the overhead of recording a draw command doesn’t come from having to call a function, but from the checks the implementation has to run when recording the command. These checks may not be related to correctness, but to additional actions and options that may need to be taken depending on the state of the command buffer at the moment the draw command is recorded. For example, see the calls to radv_before_draw when RADV processes a draw command (note: RADV is Mesa’s super nice free software Vulkan driver for AMD cards). These checks only need to run once when using the new functions. In benchmark-like scenarios using real drivers, Mike has been able to verify that, while the overhead varies per driver and some of them are lightweight and have minimal overhead, some mainstream drivers can double their draw call processing rate when using VK_EXT_multi_draw.
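The effect described above can be pictured with a toy model in Python. This is not the Vulkan API or any driver's code: `ToyCommandBuffer`, `cmd_draw` and `cmd_draw_multi` are hypothetical stand-ins for vkCmdDraw and vkCmdDrawMultiEXT, and the counter merely represents per-recording state checks such as the ones RADV performs before a draw.

```python
class ToyCommandBuffer:
    """Counts how often the (pretend) pre-draw state checks run."""

    def __init__(self):
        self.state_checks = 0   # times the pre-draw checks were executed
        self.draws = 0          # draws actually recorded

    def _before_draw(self):
        # Stand-in for the driver work that depends on command buffer state.
        self.state_checks += 1

    def cmd_draw(self, first_vertex, vertex_count):
        # One call per draw: the checks run every single time.
        self._before_draw()
        self.draws += 1

    def cmd_draw_multi(self, draw_infos):
        # One call for the whole batch: the checks run once up front.
        self._before_draw()
        for _first_vertex, _vertex_count in draw_infos:
            self.draws += 1


if __name__ == "__main__":
    draw_infos = [(i * 3, 3) for i in range(1000)]  # a thousand small draws

    one_by_one = ToyCommandBuffer()
    for first, count in draw_infos:
        one_by_one.cmd_draw(first, count)

    batched = ToyCommandBuffer()
    batched.cmd_draw_multi(draw_infos)

    print(one_by_one.draws, one_by_one.state_checks)
    print(batched.draws, batched.state_checks)
```

Both command buffers record the same thousand draws; only the number of times the state checks run differs. How much that matters in practice depends on how expensive those checks are in a given driver, which is why the measured gains vary.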

Mike has work-in-progress implementations for Mesa’s ANV and RADV drivers (the Vulkan drivers for Intel and AMD GPUs, respectively) which pass conformance and will hopefully land soon in Mesa’s main branch, and more drivers are expected to ship support for the extension in the near future.

After months and months of the construction crews hammering away, VK_EXT_multi_draw has now been released for general use.

Will this suddenly make zink the fastest GPU driver in history?

Obviously.

Long-time readers will recall that I memed about this extension some time ago, and the numbers in a synthetic benchmark targeted at exactly this feature are phenomenal.

For more on the topic, we go to our Senior Multidraw Correspondent and my personal Khronos BFF, Ricardo Garcia, who has been following this story since the beginning.

In short, an issue was filed recently about getting the Nine state tracker working with zink.

Was it the first? No.

Was it the first one this year? Yes.

Thus began a minutes-long, helter-skelter sequence of events to get Nine up and running, spread out over the course of a day or two. In need of a skilled finagler knowledgeable in the mysterium of Gallium state trackers, I contacted the only developer I know with a rockstar name, Axel Davy. We set out at dawn, and I strapped on my parachute. It was almost immediately that I heard a familiar call: there’s a build issue.

Next stop was crashing in unimplemented interface methods with a stopover in flailing about wildly in TGSI what even is this before I arrivated at my target:

Ah, glorious triangles.

Hi all, hope you all are doing fine!

It's been 3 weeks since I started the Outreachy internship. I've done a lot, but at the same time it feels like I haven't done anything.

The first week was the week of setting up my machine, fighting with IRC to be able to send messages, and sending some information the Outreachy organizers needed. I also needed to configure my blog's RSS feed (yes, at a time when I was in doubt whether I wanted to work with backend or frontend, I decided to learn how to develop a blog). As I use Gatsby as the base of the blog, it was relatively easy to configure the RSS (Hooray!! One thing worked \o/).

To do my setup, my mentor Melissa gave me 2 tutorials as a base:

How to compile and install the Linux Kernel

I needed to redo them a few times to understand how they worked (writing a tutorial with my own steps, explaining where I had problems, is on my GIANT to-do list; one day it will happen...), because I was going to use a virtual machine to run the tests and see if I hadn't broken the kernel too much. After it was configured, I needed to test whether everything was right, and for that I used the tutorial

Ok, setup working and now?? I still needed to configure one thing: The VKMS (a software-only model of a KMS driver that is useful for testing and for running X (or similar) on headless machines) and the IGT (a test suite used specifically for debugging and development of the DRM drivers), for this I used the tutorial:

I was stuck for a few days in this task, the tests failed but why??? Configuration error? Tool installation error??

Nooo! It was my own mistake... I didn't read the tutorial properly, and I didn't see the message that said:

“The tests need to be run without a compositor, so you need to switch to text-only mode”

For that I only needed to do:

sudo systemctl isolate multi-user.target

Ready! Solved, tests working \o/ and now what?

Now my task for the next few days is to “create a debugfs file for vkms using drm_state_dump()”, but that's a subject for the next post.

Thank you for following me so far, please feel free to comment! And stay tuned to the next chapters of this Saga called Outreachy!!

Take care and have a great day!

A long, long time ago in a month far, far away I said I was going to blog about some improvements I’d been working on for zink. I blogged about some of them, but one was conspicuously absent from the original list:

There’s a lot that goes into this item. The post you’re reading now isn’t about to go so far as to claim that zink(-wip) is usable for gaming. No, that day is still far, far away. But this post is going to be the first step.

To begin with, a riddle: what change was made to zink between these two screenshots?

That’s right, I put the punchline in the title.

A suballocator.

A suballocator is a mechanism by which small blocks of memory can be suballocated out of a larger one. For example, if I want to allocate a 64byte chunk of memory, I could allocate it directly and get my block, or I could allocate a 4096byte chunk of memory and then take 64bytes out of it.
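As a minimal sketch of the idea, here is a toy bump-style suballocator in Python. It is not zink's implementation and not how aux/pb works: `BumpSuballocator` is a hypothetical name, there is no freeing, reuse or alignment handling, and "memory" is just a (block index, offset) pair.

```python
class BumpSuballocator:
    """Hands out small ranges from larger backing blocks (toy model, no freeing)."""

    def __init__(self, block_size=4096):
        self.block_size = block_size
        self.blocks = 0            # number of backing blocks allocated so far
        self.offset = block_size   # "full" marker, forces a fresh block on first use

    def alloc(self, size):
        """Return (block index, offset within block) for a new range of `size` bytes."""
        if size > self.block_size:
            raise ValueError("request larger than a backing block")
        if self.offset + size > self.block_size:
            # Current block cannot fit the request: grab a new backing block.
            self.blocks += 1
            self.offset = 0
        start = self.offset
        self.offset += size
        return (self.blocks - 1, start)


if __name__ == "__main__":
    sub = BumpSuballocator(block_size=4096)
    ranges = [sub.alloc(64) for _ in range(64)]
    print(ranges[0], ranges[-1], sub.blocks)   # 64 chunks of 64 bytes share one block
    print(sub.alloc(64), sub.blocks)           # the 65th chunk needs a second block
```

Sixty-four 64-byte requests fit into a single 4096-byte backing block, so only the first request pays for a real allocation. A production suballocator also has to track which ranges are busy and reclaim them, which is the part the rest of this post borrows from existing code.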

When performance is involved, it’s important to consider the time-cost of allocations, and so yes, it’s useful to have already allocated another 63 instances of 64bytes when I need a second one, but there’s another, deeper issue that’s also necessary to address, especially as it relates to gaming: 32bit environments.

In a 32bit process, the amount of address space available is limited to 4GB, regardless of how much actual memory is physically present, some of which is dedicated to system resources and unavailable for general use. Any time a buffer or image is mapped by the driver in a process, this uses up address space in order to create an addressable region of memory that can be read or written to. Once all the address space has been used up, no other resources can be mapped, and it becomes impossible to continue normal operations.

In short, the game crashes.

In Vulkan, and just generally in driver work, it’s important to keep allocation sizes aligned to the preference of the hardware for a given usage; this amounts to minMemoryMapAlignment, which is 4096bytes on many drivers. Similarly, vkGetBufferMemoryRequirements and vkGetImageMemoryRequirements return aligned memory sizes, so even if only 64bytes are needed, 4096bytes must still be allocated—4032 bytes unused. This ends up wasting tons of memory when an app is allocating lots of smaller regions, and it’s further wasting address space since Vulkan prohibits memory from being mapped multiple times, meaning that each 64byte buffer is wasting an additional 4032bytes of address space.
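The arithmetic behind this can be worked through in a few lines of Python. The 4096-byte granularity and 64-byte buffer size come from the text; treating the full 4 GiB as usable is a simplifying assumption (the real limit is lower, since part of the address space is reserved), so these figures are upper bounds rather than measurements.

```python
ADDRESS_SPACE = 4 * 1024**3   # 4 GiB: upper bound for a 32-bit process (assumption: all usable)
GRANULARITY = 4096            # allocation size actually consumed per small buffer
SMALL_BUFFER = 64             # bytes the application really wanted

# Bytes wasted every time a 64-byte buffer gets its own 4096-byte allocation.
wasted_per_buffer = GRANULARITY - SMALL_BUFFER

# How many 64-byte buffers fit before the address space is gone.
max_buffers_direct = ADDRESS_SPACE // GRANULARITY        # one allocation per buffer
max_buffers_suballocated = ADDRESS_SPACE // SMALL_BUFFER  # buffers packed back to back

print(wasted_per_buffer)
print(max_buffers_direct)
print(max_buffers_suballocated)
print(max_buffers_suballocated // max_buffers_direct)
```

Under these assumptions, giving every small buffer its own allocation caps a process at roughly a million of them, while packing them back to back raises that ceiling by a factor of 64.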

While 4k of memory may seem like a small amount, and why would anyone ever need more than 256kb memory anyway, these allocations all add up, fast enough that zink runs out of address space in a 32bit game like Tomb Raider within a couple minutes.

Playable?

Probably not.

If you’re working in Mesa, you basically have two options when you come across a new problem: delete some code or copy some code. It’s not often that I come across an issue which can’t be resolved by one of the two.

In this case, I had known for quite a while that the solution was going to be copying some code. Thus I entered the realm of Gallium’s awesome auxiliary/pipebuffer, a fearsome component that had only been leveraged by one driver.

Yup, it was time to throw more galaxybrain.jpg code into the blender and see what came out. Ultimately, I was able to repurpose a lot of the core calculation code for sizing allocations, which saved me from having to do any kind of thinking or maffs. This let me cut down my suballocator implementation to a little under 700 lines, leaving much, much, much more space for ~~bugs~~activities.

At a high level, here’s an overview of aux/pb:

pb_cache_init to set up a memory cache

pb_slabs_init

pb_slab_alloc to reuse/reclaim a slab allocation, otherwise manually allocate new memory

pb_reference_with_winsys

There’s more under the hood, but it mostly boils down to filling in the interface functions to manage detecting whether resources are busy or can be reclaimed for reuse. The actual caching/reclaiming/reusing are all handled by aux/pb, meaning I was free to go about breaking everything with all the leftover time that I had.

Cultured users of zink-wip can now enjoy massively improved performance (and have already been enjoying it for the past month) in many apps. The rest of you get to sit around and watch while I bang my head against CI while ajax showers me with memes.

There’s a lot that has happened in the world of Zink since my last update, so let’s see if I can bring you up to date on the most important stuff.

Gosh, when I last blogged about Zink, it hadn’t even landed upstream in Mesa yet! Well, by now it’s been upstream for quite a while, and most development has moved there.

At the time of writing, we have merged 606 merge-requests labeled “zink”. The current tip of mesa’s main branch is totaling 1717 commits touching the src/gallium/drivers/zink/ sub-folder, written by 42 different contributors. That’s pretty awesome in my eyes, Zink has truly become a community project!

Another noteworthy change is that Mike Blumenkrantz has come aboard the project, and has churned out an incredible amount of improvements to Zink! He got hired by Valve to work on Zink (among other things), and is now the most prolific contributor, with more than twice as many commits as I have written.

If you want a job in Open Source graphics, Zink has a proven track-record as a job-creator! :smile:

In addition to Mike, there’s some other awesome people who have been helping out lately.

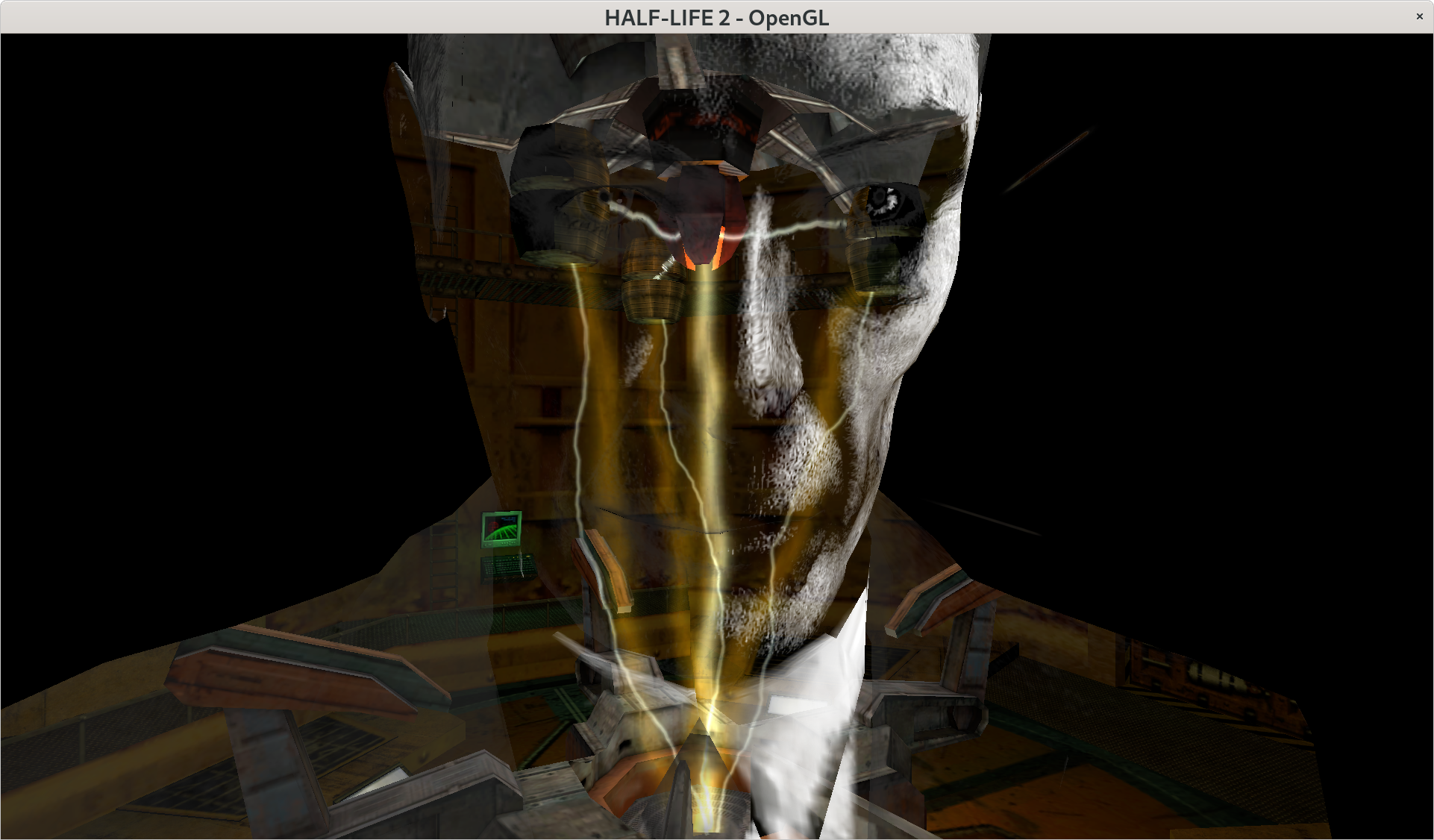

Half-Life 2 running with Zink.

Thanks to a lot of hard work by Mike, assisted by Dave Airlie and Adam Jackson, both of Red Hat, Zink is now able to expose the OpenGL 4.6 (Core Profile) feature set, given enough Vulkan features! :tada:

Please note that this doesn’t mean that Zink is a conformant implementation yet; there are some details left to be ironed out before we can claim that. In particular, we need to pass the conformance tests, and submit a conformance report to Khronos. We’re not there yet.

I’m also happy to see that Zink is currently at the top of MesaMatrix (together with LLVMpipe, i965 and RadeonSI), reporting a total of 160 OpenGL extensions at the time of writing!

In theory, that means you can run any OpenGL application you can think of on top of Zink. Mike is hard at work testing the entire Steam game library, and things are working pretty well.

Is this the end of the line for Zink? Are we done now? Not at all! :laughing:

We’re still stuck at OpenGL 3.0 for compatibility contexts, mainly due to lack of testing. There’s a lot of features that need to work together in relatively complicated ways for this to work for us.

Note that this only matters for applications that rely on legacy OpenGL features. Modern OpenGL programs get OpenGL 4.6 support, as mentioned previously.

I don’t think this is going to be a big deal to enable, but I haven’t spent time on it.

Similar to the OpenGL 4.6 support, we’re now able to expose the OpenGL ES 3.1 feature set. This is again thanks to a lot of hard work by Mike and the gang.

Why not OpenGL ES 3.2? This comes down to the GL_KHR_blend_equation_advanced feature. Mike blogged about the issue a while ago.

To prevent regressions, we’ve started testing Zink on the Mesa CI system for every change. This is made possible thanks to Lavapipe, a Vulkan software implementation in Mesa that reuses the rasterizer from LLVMpipe.

This means we can run tests on virtual cloud machines without having to depend on unreliable hardware. :robot:

At the time of writing, we’re only exposing OpenGL 4.1 on top of Lavapipe, due to some lacking features. But we have patches in the works to bring this up to OpenGL 4.5, and OpenGL 4.6 probably won’t be far off when that lands.

Basic support for Zink on Microsoft Windows has landed. This isn’t particularly useful at the moment, because we need better window-system integration to get anywhere near reasonable performance. But it’s there.

Thanks to work by Duncan Hopkins of The Foundry, there’s also some support for macOS. This uses MoltenVK as the Vulkan implementation, meaning that we also support the Vulkan Portability Extension to some degree.

This support isn’t quite as drop-in as on other platforms, because it’s completely lacking window-system integration. But it seems to work for the use-cases they have at The Foundry, so it’s worth mentioning as well.

Beyond this, Igalia has brought up Zink on the V3DV driver, and I’ve heard some whispers that there’s some people running Zink on top of Turnip, an open-source Vulkan driver for recent Qualcomm Adreno GPUs.

I’ve heard some people have some success getting things running on NVIDIA, but there’s a few obvious problems in the way there due to the lack of proper DRI support… Which brings us to:

Another awesome new development is that Adam is working on Penny. So, what’s Penny?

Penny is another way of bringing up Zink, on systems without DRI support.

It works as a dedicated GLX integration that uses the VK_KHR_swapchain extension to integrate properly with the native Vulkan driver’s window-system integration instead of Mesa baking its own.

This solves a lot of small, nasty issues in the DRI code-path. I’ll say the magic “implicit synchronization” word, and hope that scares away anyone wondering what it’s about.

A lot more has happened on the performance front as well, again all thanks to Mike. However, much of this is still out-of-tree, and waiting in Mike’s zink-wip branch.

So instead, I suggest you check out Mike’s blog for the latest performance information (and much more up-to-date info on Zink). There’s been a lot going on, and I’m sure there’s even more to come!

I think this should cover the most interesting bits of development.

On a personal note, I recently became a dad for the first time, and as a result I’ll be away for a while on paternity leave, starting early this fall. Luckily, Zink is in good hands with Mike and the rest of the upstream community taking care of things.

I would like to again plug Mike’s blog as a great source of Zink-related news, if you’re not already following it. He posts a lot more frequently than I do, and he’s also an epic meme master, so it’s all great fun!

(Added a section on load/store pairs on June 14th)

This question probably seems absurd. An unoptimized memcpy is a simple loop that copies bytes. How hard can that be? Well...

There's a fascinating thread on llvm-dev started by George Mitenkov proposing a new family of "byte" types. I found the proposal and discussion difficult to follow. In my humble opinion, this is because the proposal touches some rather subtle and underspecified aspects of LLVM IR semantics, and rather than address those fundamentals systematically, it jumps right into the minutiae of the instruction set. I look forward to seeing how the proposal evolves. In the meantime, this article is a byproduct of me attempting to digest the problem space.

Here is a fairly natural way to (attempt to) implement memcpy in LLVM IR:

    define void @memcpy(i8* %dst, i8* %src, i64 %n) {
    entry:
      %dst.end = getelementptr i8, i8* %dst, i64 %n
      %isempty = icmp eq i64 %n, 0
      br i1 %isempty, label %out, label %loop

    loop:
      %src.loop = phi i8* [ %src, %entry ], [ %src.next, %loop ]
      %dst.loop = phi i8* [ %dst, %entry ], [ %dst.next, %loop ]
      %ch = load i8, i8* %src.loop
      store i8 %ch, i8* %dst.loop
      %src.next = getelementptr i8, i8* %src.loop, i64 1
      %dst.next = getelementptr i8, i8* %dst.loop, i64 1
      %done = icmp eq i8* %dst.next, %dst.end
      br i1 %done, label %out, label %loop

    out:
      ret void
    }

Unfortunately, the copy that is written to the destination is not a perfect copy of the source.

Hold on, I hear you think, each byte of memory holds one of 256 possible bit patterns, and this bit pattern is perfectly copied by the `load`/`store` sequence! The catch is that in LLVM's model of execution, a byte of memory can in fact hold more than just one of those 256 values. For example, a byte of memory can be poison, which means that there are at least 257 possible values. Poison is forwarded perfectly by the code above, so that's fine. The trouble starts because of pointer provenance.

From a machine perspective, a pointer is just an integer that is interpreted as a memory address.

For the compiler, alias analysis -- that is, the ability to prove that different pointers point at different memory addresses -- is crucial for optimization. One basic tool in the alias analysis toolbox is to recognize that if pointers point into different "memory objects" -- different stack or heap allocations -- then they cannot alias.

Unfortunately, many pointers are obtained via getelementptr (GEP) using dynamic (non-constant) indices. These dynamic indices could be such that the resulting pointer points into a different memory object than the base pointer. This makes it nearly impossible to determine at compile time whether two pointers point into the same memory object or not.

Which is why there is a rule which says (among other things) that if a pointer P obtained via GEP ends up going out-of-bounds and pointing into a different memory object than the pointer on which the GEP was based, then dereferencing P is undefined behavior even though the pointer's memory address is valid from the machine perspective.

As a corollary, a situation is possible in which there are two pointers whose underlying memory address is identical but whose provenance is different. In that case, it's possible that one of them can be dereferenced while dereferencing the other is undefined behavior.

This only makes sense if, in the formal semantics of LLVM IR, pointer values carry more information than just an integer interpreted as a memory address. They also carry provenance information, which is essentially the set of memory objects that can be accessed via this pointer and any pointers derived from it.
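A toy model may help make this concrete. The Python sketch below is an illustration under simplifying assumptions, not LLVM's actual semantics: `Allocation`, `Pointer`, `gep` and `can_dereference` are hypothetical names, and the two objects are assumed to sit directly next to each other in memory.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Allocation:
    name: str
    base: int
    size: int


@dataclass(frozen=True)
class Pointer:
    address: int
    provenance: frozenset  # the memory objects this pointer may access


def gep(ptr: Pointer, offset: int) -> Pointer:
    """Pointer arithmetic changes the address but keeps the provenance."""
    return Pointer(ptr.address + offset, ptr.provenance)


def can_dereference(ptr: Pointer) -> bool:
    """Defined only if the address lies inside an object in the provenance."""
    return any(a.base <= ptr.address < a.base + a.size for a in ptr.provenance)


a = Allocation("a", base=0x1000, size=16)
b = Allocation("b", base=0x1010, size=16)  # assumed to sit right after a

p_b = Pointer(b.base, frozenset({b}))
p_oob = gep(Pointer(a.base, frozenset({a})), 16)  # walks out of a, into b

print(p_oob.address == p_b.address)                  # same machine address
print(can_dereference(p_b), can_dereference(p_oob))  # different verdicts
```

Both pointers hold the same address, yet only the one whose provenance includes b may be dereferenced in this model.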

What is the provenance of a pointer that results from a load instruction? In a clean operational semantics, the load must derive this provenance from the values stored in memory.

If bytes of memory can only hold one of 256 bit patterns (or poison), that doesn't give us much to work with. We could say that the provenance of the pointer is "empty", meaning the pointer cannot be used to access any memory objects -- but that's clearly useless. Or we could say that the provenance of the pointer is "all", meaning the pointer (or pointers derived from it) can be freely used to access all memory objects, assuming the underlying address is adjusted appropriately. That isn't much better.[0]

Instead, we must say that -- as far as LLVM IR semantics are concerned -- each byte of memory holds pointer provenance information in addition to its i8 content. The provenance information in memory is written by pointer store, and pointer load uses it to reconstruct the original provenance of the loaded pointer.

What happens to provenance information in non-pointer load/store? A load can simply ignore the additional information in memory. For store, I see 3 possible choices:

1. Leave the provenance information that already happens to be in memory unmodified.

2. Set the provenance to "empty".

3. Set the provenance to "all".

Looking back at our attempt to implement memcpy, there is no choice which results in a perfect copy. All of the choices lose provenance information.
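The loss can be reproduced in a small simulation. The sketch below is a toy model with assumptions of my own, not LLVM's semantics: each byte carries a value plus a provenance label, provenance is a single string rather than a set of objects, fresh memory starts out "empty", and mixed per-byte provenance collapses to "empty" on a pointer load. All names are hypothetical.

```python
ALL = "all"      # may access every memory object
EMPTY = "empty"  # may access no memory object


class Memory:
    def __init__(self, size, int_store_policy):
        self.value = [0] * size
        self.prov = [EMPTY] * size      # assumption: fresh memory is "empty"
        self.policy = int_store_policy  # "keep", "empty" or "all"

    def store_ptr(self, addr, ptr_address, ptr_prov):
        # A pointer store writes the address bytes and the provenance.
        for i, byte in enumerate(ptr_address.to_bytes(8, "little")):
            self.value[addr + i] = byte
            self.prov[addr + i] = ptr_prov

    def load_ptr(self, addr):
        # A pointer load rebuilds the provenance from the bytes it reads.
        address = int.from_bytes(bytes(self.value[addr:addr + 8]), "little")
        provs = set(self.prov[addr:addr + 8])
        prov = provs.pop() if len(provs) == 1 else EMPTY
        return address, prov

    def load_i8(self, addr):
        return self.value[addr]  # provenance in memory is ignored

    def store_i8(self, addr, byte):
        self.value[addr] = byte
        if self.policy == "empty":
            self.prov[addr] = EMPTY
        elif self.policy == "all":
            self.prov[addr] = ALL
        # "keep": leave whatever provenance was already there


def bytewise_memcpy(mem, dst, src, n):
    for i in range(n):
        mem.store_i8(dst + i, mem.load_i8(src + i))


def copy_pointer(policy):
    mem = Memory(16, policy)
    mem.store_ptr(0, 0x1010, "object b")
    bytewise_memcpy(mem, 8, 0, 8)
    return mem.load_ptr(8)


for policy in ("keep", "empty", "all"):
    print(policy, copy_pointer(policy))
```

Under every policy the address survives the copy, but the "object b" provenance never reaches the destination; only the "all" policy yields a pointer that can still be dereferenced.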

Without major changes to LLVM IR, only the last choice is potentially viable because it is the only choice that allows dereferencing pointers that are loaded from the memcpy destination.

Without major changes to LLVM IR, we can only implement a memcpy that loses provenance information during the copy.

So what? Alias analysis around memcpy and code like it ends up being conservative, but reasonable people can argue that this doesn't matter. The burden of evidence lies on whoever wants to make a large change here in order to improve alias analysis.

That said, we cannot just call it a day and go (or stay) home either, because there are related correctness issues in LLVM today, e.g. bug 37469 mentioned in the initial email of that llvm-dev thread.

Here's a simpler example of a correctness issue using our hand-coded memcpy:

    define i32 @sample(i32** %pp) {
      %tmp = alloca i32*
      %pp.8 = bitcast i32** %pp to i8*
      %tmp.8 = bitcast i32** %tmp to i8*
      call void @memcpy(i8* %tmp.8, i8* %pp.8, i64 8)
      %p = load i32*, i32** %tmp
      %x = load i32, i32* %p
      ret i32 %x
    }

A transform that should be possible is to eliminate the memcpy and temporary allocation:

    define i32 @sample(i32** %pp) {
      %p = load i32*, i32** %pp
      %x = load i32, i32* %p
      ret i32 %x
    }

This transform is incorrect because it introduces undefined behavior.

To see why, remember that this is the world where we agree that integer stores write an "all" provenance to memory, so %p in the original program has "all" provenance. In the transformed program, this may no longer be the case. If @sample is called with a pointer that was obtained through an out-of-bounds GEP whose resulting address just happens to fall into a different memory object, then the transformed program has undefined behavior where the original program didn't.

We could fix this correctness issue by introducing an unrestrict instruction which elevates a pointer's provenance to the "all" provenance:

    define i32 @sample(i32** %pp) {
      %p = load i32*, i32** %pp
      %q = unrestrict i32* %p
      %x = load i32, i32* %q
      ret i32 %x
    }

Here, %q has "all" provenance and therefore no undefined behavior is introduced.