Friday, 07 June 2019

I'm going to Akademy!

And you should too!

Akademy is free to attend, however you need to register to reserve your space, so head to https://akademy.kde.org/2019/register and press the buttons.

Akademy is a very important place to meet people, discuss future plans, learn about new stuff and make friends for life!

Note that this year the recommended accommodations are a bit on the expensive side, so you may want to hurry and apply for Travel support. The last round is open until July 1st.

Thursday, 06 June 2019

Akademy-es 2019 talks announced!

Akademy-es 2019 will be happening this June 28-30 in Vigo.

The talks were announced recently.

Check them out at https://www.kde-espana.org/akademy-es-2019/programa-akademy-es-2019. There are lots of interesting talks, so if you understand Spanish and are interested in KDE or Free Software in general, I'd really recommend attending!

Friday, 17 May 2019

[Some] KDE Applications 19.04.1 also available in flathub

Thanks to Nick Richards we've been able to convince flathub to temporarily accept our old appdata files as still valid. It's a stopgap workaround, but at least it gives us some breathing room. So the updates are coming in as we speak.

Partition like a pro with fdisk, sfdisk and cfdisk

Most Linux distributions ship the hard drive partition tool fdisk by default. Knowing how to use it is a good skill for every Linux system administrator since having to rescue a system that has disk issues is a very common task. If the admin is faced with a prompt in a rescue mode boot, often fdisk is the only partitioning tool available and must be used, since if the main root filesystem is broken, one cannot install and use any other partitioning tools.

When installing Debian-based systems (e.g. Ubuntu) with the text mode server installer, keep in mind that you can press Ctrl+Alt+F2 at any time during the installation to jump to a text console running a limited shell prompt (Busybox) and manipulate the system as you wish, for example by running fdisk. When done, press Ctrl+Alt+F1 to jump back to the installer screen.

In fact, fdisk does not come alone: it ships together with two sibling commands, sfdisk and cfdisk.

fdisk

Most Linux sysadmins have at some point used fdisk, the classic partitioning tool. There is also a tool with the same name in Windows, but it's not the same tool. Other Unix-like systems, including macOS, also ship a command named fdisk, although it is a different implementation from the Linux one.

To list the current partition layout one can simply run fdisk -l /dev/sda. Below is an example of the output. One can also run fdisk -l /dev/sd* to print the partition info of all sd devices in one go. The fdisk man page lists all the command line parameters available.

fdisk -l

If one runs fdisk with just a device name, e.g. fdisk /dev/sda, it will launch in interactive mode. Pressing m will show the help. To create a new GPT partition table (suitable for modern disks; this resets any existing partition table) and add a new Linux partition that uses all available disk space, one can simply enter the commands g, n and w in sequence, pressing Enter at every question to accept the default values.

cfdisk

The command cfdisk serves the same purpose as fdisk, with the difference that it provides a slightly fancier user interface based on ncurses, so there are menus one can browse with the arrow keys and the Tab key without having to remember the single-letter commands fdisk uses.

sfdisk

The third tool in the suite is sfdisk. This tool is designed to be scriptable, enabling administrators to automate partitioning operations.

The key to sfdisk operations is to first dump the current layout using the -d argument, for example sfdisk -d /dev/sda > partition-table. An example output would be:

$ cat partition-table
label: gpt
label-id: AF7B83C8-CE8D-463D-99BF-E654A68746DD
device: /dev/sda
unit: sectors
first-lba: 34
last-lba: 937703054

/dev/sda1 : start= 2048, size= 997376, type=C12A7328-F81F-11D2-BA4B-00A0C93EC93B, uuid=F35A875F-1A53-493E-85D4-870A7A749872
/dev/sda2 : start= 999424, size= 936701952, type=A19D880F-05FC-4D3B-A006-743F0F84911E, uuid=725EAB2A-F2E2-475E-81DC-9A5E18E29678

This text file describes the partition table type and the layout, and also includes the partition UUIDs. The file above can be considered a backup of the /dev/sda partition table. If something has gone wrong with the partition table and this file was saved at some earlier time, one can recover the partition table by running: sfdisk /dev/sda < partition-table.

Copying the partition table to multiple disks

One neat application of sfdisk is that it can be used to copy the partition layout to many devices. Say you have a big server computer with 16 hard disks. Once you have partitioned the first disk, you can dump its partition table with sfdisk -d and then edit the dump file (remember, it is just a plain-text file) to remove references to the device name and UUIDs, which are unique to a specific device and not something you want to clone to other disks. If the initial dump was the example above, the version with unique identifiers removed would look like this:

label: gpt
unit: sectors
first-lba: 34
last-lba: 937703054

start= 2048, size= 997376, type=C12A7328-F81F-11D2-BA4B-00A0C93EC93B
start= 999424, size= 936701952, type=A19D880F-05FC-4D3B-A006-743F0F84911E

This can be applied to another disk, for example /dev/sdb, simply by running sfdisk /dev/sdb < partition-table. Now all the admin needs to do is run this same command a few more times, changing only the one character of the device name on each invocation.

Listing device UUIDs with blkid

Keep in mind that Linux systems use the device UUIDs to identify partitions and file systems. Be wary not to accidentally give two disks the same UUIDs with sfdisk. Technically it is possible, and maybe useful in some situations where one wants to replace a hard drive and make the new drive 100% identical, but in a running system different disks should all have unique UUIDs.
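A quick way to spot accidentally cloned identifiers is to scan the blkid output for values that occur more than once. The sketch below is a hypothetical helper, not a blkid feature. It deliberately checks PARTUUID rather than UUID: in the blkid example below, /dev/sda2 and /dev/sdb2 legitimately report the same UUID because they are members of the same software RAID array, while their PARTUUIDs differ.

```python
import re
from collections import defaultdict

PARTUUID_RE = re.compile(r'PARTUUID="([^"]+)"')


def find_duplicate_partuuids(blkid_output: str) -> dict:
    """Return {partuuid: [devices]} for every PARTUUID seen on more than one device.

    Hypothetical helper; expects lines in blkid's default format:
    /dev/sda1: UUID="..." TYPE="..." PARTUUID="..."
    """
    devices_by_uuid = defaultdict(list)
    for line in blkid_output.splitlines():
        device, sep, attributes = line.partition(":")
        if not sep:
            continue  # not a "device: attributes" line
        match = PARTUUID_RE.search(attributes)
        if match:
            # blkid prints lowercase, sfdisk uppercase; compare case-insensitively.
            devices_by_uuid[match.group(1).lower()].append(device.strip())
    return {uuid: devs for uuid, devs in devices_by_uuid.items() if len(devs) > 1}
```

The input can come from subprocess.run(["blkid"], capture_output=True, text=True).stdout; blkid may need root privileges to report every device. An empty result means no PARTUUID is shared between devices.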

To list all UUIDs use blkid. Below is an example of the output:

$ blkid
/dev/sda1: UUID="F379-8147" TYPE="vfat" PARTUUID="f35a875f-1a53-493e-85d4-870a7a749872"
/dev/sda2: UUID="5f12f800-1d8d-6192-0881-966a70daa16f" UUID_SUB="2d667c5b-b9f3-6510-cf76-9231122533ce" LABEL="fi-e3:0" TYPE="linux_raid_member" PARTUUID="725eab2a-f2e2-475e-81dc-9a5e18e29678"
/dev/sdb2: UUID="5f12f800-1d8d-6192-0881-966a70daa16f" UUID_SUB="0221fce5-2762-4b06-2d72-4f4f43310ba0" LABEL="fi-e3:0" TYPE="linux_raid_member" PARTUUID="cd2a477f-0b99-4dfc-baa6-f8ebb302cbbb"
/dev/md0: UUID="dcSgSA-m8WA-IcEG-l29Q-W6ti-6tRO-v7MGr1" TYPE="LVM2_member"
/dev/mapper/ssd-ssd--swap: UUID="2f2a93bd-f532-4a6d-bfa4-fcb96fb71449" TYPE="swap"
/dev/mapper/ssd-ssd--root: UUID="660ce473-5ad7-4be9-a834-4f3d3dfc33c3" TYPE="ext4"

Extra tip: listing all disks with lsblk

While fdisk -l is nice for listing partition tables, admins often also want to know the partition sizes in a human-readable format and what the partitions are used for. For this purpose the command lsblk is handy. While the default output is often enough, supplying the extra arguments -o NAME,SIZE,FSTYPE,TYPE,MOUNTPOINT makes it even better. See below an example of the output:

$ lsblk -o NAME,SIZE,FSTYPE,TYPE,MOUNTPOINT
NAME                  SIZE FSTYPE            TYPE  MOUNTPOINT
sda                 447,1G                   disk
├─sda1                487M vfat              part  /boot/efi
└─sda2              446,7G linux_raid_member part
  └─md0             446,5G LVM2_member       raid1
    ├─ssd-ssd--swap   8,8G swap              lvm   [SWAP]
    └─ssd-ssd--root 437,7G ext4              lvm   /
sdb                 447,1G                   disk
├─sdb1                487M                   part
└─sdb2              446,7G linux_raid_member part
  └─md0             446,5G LVM2_member       raid1
    ├─ssd-ssd--swap   8,8G swap              lvm   [SWAP]
    └─ssd-ssd--root 437,7G ext4              lvm   /
sdc                 447,1G                   disk
├─sdc1                487M                   part
└─sdc2              446,7G                   part

A word of warning…

Remember that modifying the partition table is a destructive process. It is something the admin does while installing new systems or recovering broken ones. If done wrongly, all data might be lost!

Wednesday, 15 May 2019

No KDE Applications 19.04.1 available in flathub

The flatpak and flathub developers have changed what they consider a valid appdata file, so our appdata files that were valid last month are not valid anymore and thus we can't build the KDE Applications 19.04.1 packages.

Wednesday, 08 May 2019

Of elitists and laypeople

Spoilers Game of Thrones.

I have been watching Game of Thrones with great interest the past few weeks. It has very strongly highlighted a struggle that has been gripping my mind for a while now: That between elitists and laypeople. And I find myself in a strange in-between.

For those not in the know, the latest season of Game of Thrones is a bit controversial to say the least. If you skip past the internet vitriol, you’ll find a lot of people disliking the season for legitimate reasons: The battle tactics don’t make any sense, characters miraculously survive after the camera cuts away, time and distance stopped being an issue in a setting that used to take it slow, and there’s a weird, forced conflict that would go away entirely if these two characters that are already in love would simply marry. And the list goes on, I’m sure.

But on the other hand, there appears to be a large body of laypeople who watch and enjoy the series. Millions of people tune in every week to watch fictional people fight over a fictional throne, and they appear to be enjoying themselves. And me? Sure, I’m enjoying myself as well. I had muscle aches from the tension of watching The Long Night, and nothing gripped me more than the half-botched assassination attempt at the end of the episode.

So what gives? On the one hand enthusiasts are rightly criticising the writers for some very strange decisions, but on the other hand millions of people are enjoying the series all the same.

Of power users and newbies

I frankly don’t know the answer to the posed question, but I do know an analogy that prompted me to write this blog post. I am a humble contributor to the GNOME Project, chiefly as translator for Esperanto, but also minuscule bits and bobs here and there. GNOME faces a similar problem with detractors: They have their complaints about systemd, customisability, missing power user features, themes breaking, and so forth. And I’m sure they have some valid points, but GNOME remains the default desktop environment on many distributions, and many people use and love GNOME as I do.

These detractors often run some heavily customised Arch Linux system with some unintuitive-but-efficient window manager, and don’t have any editor other than Vim installed. Or in other words: They run a system that the vast majority of people could not and do not want to use.

And I understand these people, because in one aspect of my digital life, I have been one of them. For at least two years, I ran Spacemacs as my primary editor. For a while I even did my e-mail through that program, and I loved it. Kind of. Sure, everything was customisable, and the keyboard shortcuts were magically fast, but the mental overhead of using that program was slowly grinding me down. Some menial task that I do infrequently would turn out to involve a non-intuitive sequence of keys that you just simply need to know, and I would spend far too long figuring that out. Or I would accidentally open Vim inside of the Emacs terminal emulator, and :q would be sent to Emacs instead of the emulator. Sure, if you know enough Emacs wizardry, you could easily escape this situation, but that’s the point, isn’t it? The wizardry involved takes effort that I don’t always want to put in, even if I know that it pays off. Kind of.

These days I use VSCodium, a Free Software version of Visual Studio Code. I like it well enough for a multitude of reasons, but mainly because the mental overhead of using this editor is a lot lower. Even so, is Emacs a better editor? Probably. If I could be bothered to maintain my Emacs wizarding skills, I am fairly certain that it would be the perfect editor. But that’s a big if. So that’s why I settle for VSCodium. And the same line of reasoning can be extended to why I use and love GNOME.

Back to Westeros

Having made that analogy, can it be mapped onto the kerfuffle surrounding Game of Thrones? Is it a matter of a small group wanting an intricate, advanced plot and a larger group wanting a simple, rudimentary story, because they can’t or don’t want to deal with a complicated story?

It seems that way, but the damnedest thing is that I don’t know. I like the latest season of Game of Thrones for what it is: An archetypical fight of good versus evil. The living gathered together to fight an undead army, and the living won. Such a story is a lot easier to get into as a layperson, and there is nothing wrong with enjoying simple, archetypical stories.

But that’s not what Game of Thrones is. Game of Thrones is derived from an incredibly intricate series of books with so many details and plots, and the TV series stayed faithful to that for a long time. The latest season is a huge departure from its roots. It is, as far as I can tell, replacing vi with nano. There is nothing wrong with either, but there is a good reason why the two are separate.

Who are these laypeople, anyway?

This question is difficult to answer, because the layperson isn’t me. It can’t possibly be, because here I am writing about the subject. The layperson must be someone who isn’t particularly interested or informed. I imagine that they just turn on the telly and enjoy it for what it is. No deep thoughts, no deep investment.

But why don’t these laypeople care? Why should we care about laypeople? Must we really dumb everything down for the lowest common denominator? Why can’t they just get on my level? This is really frustrating!

Enter cars.

I have a driving license, but I don’t really care about cars. I know how to work the pedals and the steering wheel, and that’s pretty much it. I don’t know why I don’t care about cars. I just want to get from my home to my destination and back. If I can put in as little effort as possible to do that, I’m happy. I just don’t have the time or desire to learn all the intricacies of cars.

And knowing that, I suppose that I’m the layperson I was so frustrated over a moment ago. When I walk into the garage with a minor problem, I like to imagine that I’m the sort of person who shows up at tech support because I can’t log in: I accidentally pressed Caps Lock.

So the layperson is me. Sometimes.

Then who are the elitists?

Having said all of that, something throws a wrench in the works: Game of Thrones was also immensely popular when it had all the intricacies and inter-weaving plots of the first few seasons. That appears to indicate to me that laypeople aren’t allergic to the kind of story that the enthusiasts want. But they aren’t allergic to the story that is being told in season 8, either, unlike the elitists.

So why do the elitists care? Why can’t they just appreciate the same things that laypeople do? Why must it always be so complex? Why should the complaints of a few outweigh the enjoyment of many?

And this is where I get stuck. Because frankly, I don’t know. Shouldn’t everything be as accessible as possible? The more the merrier? Why should vi exist when nano suffices?

But you can take my vi key bindings from my cold, dead hands. And I love what Game of Thrones used to be, and am sad that it morphed into an archetypical story that used to be its antithesis. I want complex things, even though I switched from Spacemacs to VSCodium and use GNOME instead of i3. Not for the sake of difficulty, but because complexity gives me something that simplicity cannot.

So the elitist is me. Sometimes.

Fin

I’m still in limbo about this clash between elitists and laypeople. Maybe the clash is superficial and the two can exist side-by-side or separately. Maybe the writers of Game of Thrones just aren’t very good and accidentally made the story for laypeople instead of their target audience of elitists. Maybe it’s a sliding scale instead of a binary.

I don’t really know. I just wanted to get these thoughts out of my head and into a text box.

Sunday, 05 May 2019

External encrypted disk on LibreELEC

Last year, on the Raspberry Pi, I replaced ArchLinux ARM (with just Kodi installed) with LibreELEC.

Today I plugged in an external disk encrypted with dm-crypt, but to my great surprise LibreELEC doesn't support it.

Luckily the project is open source and sky42 already provides a LibreELEC version with dm-crypt built-in support.

Once I flashed sky42’s version, I set up an automated mount at startup via the autostart.sh script, and the corresponding unmount via shutdown.sh, this way:

# copy your keyfile into /storage via SSH
$ cat /storage/.config/autostart.sh
cryptsetup luksOpen /dev/sda1 disk1 --key-file /storage/keyfile
mount /dev/mapper/disk1 /media

$ cat /storage/.config/shutdown.sh
umount /media
cryptsetup luksClose disk1

Reboot it and voilà!

Automount

If you want to automatically mount the disk whenever you plug it in, create the following udev rule:

# Find out ID_VENDOR_ID and ID_MODEL_ID for your drive by using `udevadm info`
# Note: udev looks for RUN programs given without an absolute path in /usr/lib/udev,
# so you may need the full paths to cryptsetup and mount on your system
$ cat /storage/.config/udev.rules.d/99-automount.rules
ACTION=="add", SUBSYSTEMS=="usb", SUBSYSTEM=="block", ENV{ID_VENDOR_ID}=="0000", ENV{ID_MODEL_ID}=="9999", RUN+="cryptsetup luksOpen $env{DEVNAME} disk1 --key-file /storage/keyfile", RUN+="mount /dev/mapper/disk1 /media"

Saturday, 04 May 2019

Hardening OpenSSH Server

Start by reading:

man 5 sshd_config

CentOS 6.x

Ciphers aes128-ctr,aes192-ctr,aes256-ctr

KexAlgorithms diffie-hellman-group-exchange-sha256

MACs hmac-sha2-256,hmac-sha2-512

and change the below setting in /etc/sysconfig/sshd:

AUTOCREATE_SERVER_KEYS=RSAONLY

CentOS 7.x

Ciphers chacha20-poly1305@openssh.com,aes128-ctr,aes192-ctr,aes256-ctr,aes128-gcm@openssh.com,aes256-gcm@openssh.com

KexAlgorithms curve25519-sha256,curve25519-sha256@libssh.org,diffie-hellman-group-exchange-sha256,diffie-hellman-group16-sha512,diffie-hellman-group18-sha512,diffie-hellman-group14-sha256

MACs umac-64-etm@openssh.com,umac-128-etm@openssh.com,hmac-sha2-256-etm@openssh.com,hmac-sha2-512-etm@openssh.com,umac-64@openssh.com,umac-128@openssh.com,hmac-sha2-256,hmac-sha2-512

Ubuntu 18.04.2 LTS

Ciphers chacha20-poly1305@openssh.com,aes128-ctr,aes192-ctr,aes256-ctr,aes128-gcm@openssh.com,aes256-gcm@openssh.com

KexAlgorithms curve25519-sha256,curve25519-sha256@libssh.org,diffie-hellman-group-exchange-sha256,diffie-hellman-group16-sha512,diffie-hellman-group18-sha512,diffie-hellman-group14-sha256

MACs umac-64-etm@openssh.com,umac-128-etm@openssh.com,hmac-sha2-256-etm@openssh.com,hmac-sha2-512-etm@openssh.com,umac-64@openssh.com,umac-128@openssh.com,hmac-sha2-256,hmac-sha2-512

HostKeyAlgorithms ecdsa-sha2-nistp384-cert-v01@openssh.com,ecdsa-sha2-nistp521-cert-v01@openssh.com,ssh-ed25519-cert-v01@openssh.com,ssh-rsa-cert-v01@openssh.com,ecdsa-sha2-nistp384,ecdsa-sha2-nistp521,ssh-ed25519,rsa-sha2-512,rsa-sha2-256

Archlinux

Ciphers chacha20-poly1305@openssh.com,aes128-ctr,aes192-ctr,aes256-ctr,aes128-gcm@openssh.com,aes256-gcm@openssh.com

KexAlgorithms curve25519-sha256,curve25519-sha256@libssh.org,diffie-hellman-group16-sha512,diffie-hellman-group18-sha512,diffie-hellman-group14-sha256

MACs umac-128-etm@openssh.com,hmac-sha2-512-etm@openssh.com

HostKeyAlgorithms ssh-ed25519-cert-v01@openssh.com,rsa-sha2-512-cert-v01@openssh.com,rsa-sha2-256-cert-v01@openssh.com,ssh-rsa-cert-v01@openssh.com,ssh-ed25519,rsa-sha2-512,rsa-sha2-256,ssh-rsa
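After editing sshd_config, it is worth checking what sshd will actually offer. OpenSSH's test mode, sshd -T, prints the effective configuration as one lowercase "keyword value" pair per line. The following Python sketch, with hypothetical helper names, parses that text and lists any algorithm that is still enabled but not in the list you meant to allow.

```python
def parse_sshd_t(output: str) -> dict:
    """Parse `sshd -T` output ("keyword value" per line) into {keyword: [values]}."""
    settings = {}
    for line in output.splitlines():
        keyword, _, value = line.strip().partition(" ")
        if keyword:
            settings[keyword.lower()] = value.split(",") if value else []
    return settings


def unexpected_algorithms(effective: dict, wanted: dict) -> dict:
    """For each keyword in `wanted` (e.g. "Ciphers"), list the algorithms sshd
    would still offer that are not in the wanted list. Keywords are matched
    case-insensitively because sshd -T prints them in lowercase."""
    report = {}
    for keyword, allowed in wanted.items():
        extra = [alg for alg in effective.get(keyword.lower(), []) if alg not in allowed]
        if extra:
            report[keyword] = extra
    return report
```

For example, run as root, feed it subprocess.run(["sshd", "-T"], capture_output=True, text=True, check=True).stdout (sshd usually lives in /usr/sbin) together with a dictionary holding the Ciphers, KexAlgorithms and MACs lists above for your distribution. An empty report means nothing unexpected is enabled.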

Renew SSH Host Keys

rm -f /etc/ssh/ssh_host_* && service sshd restart

Generating ssh moduli file

For advanced users only:

ssh-keygen -G /tmp/moduli -b 4096

ssh-keygen -T /etc/ssh/moduli -f /tmp/moduli

Automated phone backup with Syncthing

How do you backup your phones? Do you?

Once a month, I usually copy all the photos and videos from my phone and my wife’s phone to my PC, and then I copy them to an external HDD attached to a Raspberry Pi.

However, it’s a tedious job, mainly because:

- I cannot really use the phones during this process;

- MTP works one time in three, so I often have to fall back to ADB;

- I have to unmount the SD cards to speed up the copy;

- after I copy the files, I have to rsync everything to the external HDD.

The Syncthing way

Syncthing describes itself as:

Syncthing replaces proprietary sync and cloud services with something open, trustworthy and decentralized.

I installed it on our Android phones and on the Raspberry Pi. On the Raspberry Pi I also enabled remote access.

I started the Syncthing application on the Android phones and chose the folders to back up (you can also select the whole internal memory). Then I shared them with the Raspberry Pi only, and I set the folder type to “Send Only” because I don’t want the Android phones to retrieve any file from the Raspberry Pi.

On the Raspberry Pi, I accepted the sharing request from the Android phones, but I also changed the folder type to “Receive Only” because I don’t want the Raspberry Pi to send any file to the Android phones.

All done? Not yet.

Syncthing’s main purpose is to sync, not to back up. This means that, by default, if I delete a photo from my phone, that photo is gone from the Raspberry Pi too, and this is neither what I need nor what I want.

However, Syncthing supports File Versioning, and better yet, it supports a “trash can”-like file versioning which moves your deleted files into a .stversions subfolder. If this still isn’t enough, you can also write your own file versioning script.
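To make the trash can behaviour concrete, here is a simplified Python model of what happens on the receiving side when a file is deleted on the phone. It is not Syncthing code and the function name is hypothetical; it only mirrors the idea as I understand it from the Syncthing documentation: instead of being deleted, the file is moved into .stversions with its relative path preserved, replacing an older trashed copy of the same name.

```python
import shutil
from pathlib import Path

VERSIONS_DIR = ".stversions"  # folder name used by Syncthing's versioning


def trash_instead_of_delete(folder: Path, rel_path: str) -> Path:
    """Simplified model of "trash can" versioning (not Syncthing's code).

    Instead of deleting folder/rel_path, move it to folder/.stversions/rel_path,
    keeping the directory structure. An older trashed file with the same
    name is replaced.
    """
    source = folder / rel_path
    target = folder / VERSIONS_DIR / rel_path
    target.parent.mkdir(parents=True, exist_ok=True)
    if target.exists():
        target.unlink()  # keep only the most recently trashed copy
    shutil.move(str(source), str(target))
    return target
```

The point of the design is that a delete on a “Send Only” phone never destroys the only remaining copy on the Raspberry Pi; it just moves it somewhere out of the way.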

All done? Yes! Whenever I connect to my home WiFi, my photos are backed up!

Friday, 03 May 2019

How I put order in my bookmarks and found a better way to organise them

I have gone through several stages of this and so far nothing has stuck as ideal, but I think I am inching towards it.

To start off, I have to confess that while I love the internet and the web, I loathe having everything in the browser. The browser becoming the OS is what seems to be happening, and I hate that thought. I like to keep things locally, having backups, and control over my documents and data. Although I changed my e-mail provider(s) several times, I still have all my e-mail locally stored from 2003 until today.

I also do not like reading longer texts on an LCD, so I usually put longer texts into either Wallabag or Mozilla’s Pocket to read them later on my eInk reader (Kobo Aura). BTW, Wallabag and Pocket both have their pros and cons themselves. Pocket is more popular and better integrated into a lot of things (e.g. Firefox, Kobo, etc.), while Wallabag is fully FOSS (even the server) and offers some extra features that are in Pocket either subject to subscription or completely missing.

Still, an enormous amount of information is (and should be!) on the web, so each of us needs to somehow keep track and make sense of it :)

So, with that intro out of the way, here is how I tackle(d) this mess.

Historic overview of methods I used so far

Hierarchy of folders

Like many of us, I guess, I started by putting bookmarks in the bookmark bar, but soon had to start organising them into folders … and subfolders … and subsubfolders … and subsubsubfolders … until the screen could no longer fit the whole tree when expanded.

Pro:

- can be neat and tidy

- easy to sync between devices through e.g. Firefox Sync

Con:

- can become a huge mess, once it grows into a behemoth

- takes several clicks to put a bookmark into the appropriate (sub)folder

Tags + search bar

Then I decided to keep it flat and use the Firefox search bar to find what I am looking for.

To achieve that, when I bookmarked something, I renamed it to something useful and added tags (e.g.: shop, tea; or python, sql, howto).

This worked kinda OK, but a big downside is that there is a huge amount of clutter which is not easy to navigate and edit once you want to organise all the already existing bookmarks. The bookmark panel is somewhat helpful, but not a lot.

Pro:

- easy to search

- easy to find a relevant bookmark when you are about to search for something through the combined URL/search bar

- easy to sync between devices through e.g. Firefox Sync

Con:

- your search query must match the name, tag(s), or URL of the bookmark

- hard to find or navigate other than by searching (by name, tag or URL)

OneTab

Several years after that, I learnt about OneTab from the onboarding website of a company I applied to (I did not get the job). Its main promise is to turn loads of open tabs into (simple) organised lists on a single page. And all that with a single click (well, two really).

This worked (and still works) wonders for decluttering my tab list, especially when combined with Tree Style Tab, which I very warmly recommend trying out. Even if it looks odd and unruly at first, it is very easy to get used to and helps organise tabs immensely. But back to OneTab…

The good side of OneTab is that it really helps keep your tab bar clean and therefore reduces your computer’s resource usage. It is also super for keeping track of tabs that you may (or maybe not) need to open again later, as you can (re)open a whole group of “tabs” with a single click.

As a practical example, let us say I am travelling to Barcelona in two months. So I book flights and the hotel, and in the process also check out some touristy and other helpful info. Because I will not be needing the touristy and travel stuff for quite some time before the trip, I do not need all the tabs open. But as it is a one-off trip, it is also silly to bookmark it all. So I send them all to OneTab and name the group e.g. “Barcelona trip 2019”. If I stumble upon any new stuff that is relevant, I simply send it to the same Named Group in OneTab. Once I need that info, I either open individual “tabs” or restore the whole group with one click and have it ready. An additional cool thing is that by default if you open a group or a single link “tab” from OneTab, it will remove it from the list. You can decide to keep the links in the list as well.

In practice, I still used tagged bookmarks for links that I wanted to store long-term, while depending on OneTab for short- to mid-term storage.

Pro:

- great for decluttering your tabs

- helps keep your browser’s resource usage low

- great for creating (temporary) lists of tabs that you do not need now, but will in the future

- can easily share a group of “tabs” with others via e-mail

Con:

- no tags, categories or other means of adding metadata; you can only name groups, and cannot even rename links

- no searching other than through the “webpage” list of “tabs”

- as the list of “tabs”/bookmarks grows, the harder it is to keep an overview

- cannot sync between devices

- (proprietary plug-in)

Worldbrain’s Memex

About two months ago, I stumbled upon Worldbrain’s Memex through a FOSDEM talk. It promises to fix bookmarking, searching, note-taking and web history for you … which is quite an impressive lot.

So far, I have to say, I am quite impressed. It is super easy to find stuff you visited, even if you forgot to bookmark it, as it indexes all the websites you visit (unless you tell Memex to ignore that page or domain).

For more order, you can assign tags to websites and/or store them into collections (i.e. groups or folders). What is more, you can do that even later, if you forgot about it the first time. If you want to especially emphasise a specific website, you can also star it.

An excellent feature missing in other bookmarking methods I have seen so far is that it lets you annotate websites – through highlights and comments and tags attached to those highlights. So, not only can you store comments and tags on the websites, but also on annotations within those websites.

One concern I have is that they might have bitten off more than they can chew, but since I started using it, I have seen so much progress that I am (cautiously) optimistic about it.

Pro:

- supports both tags and collections (i.e. groups)

- enables annotations/highlights and comments (as well as tags to both) to websites

- indexes websites, so when you search for something it goes through both the website’s text, as well as your notes to that website and, of course, tags

- starring websites you would like to find more easily

- you can also set specific websites or domain names to be ignored

- it offers quite an advanced search, including limiting by date ranges, stars, or domains

- when you search for something (e.g. using DuckDuckGo or Google) it shows suggested websites that you already visited before

- sharing of annotations and comments with others (as long as they also have Memex installed)

- for annotations it uses the W3C Open Annotation spec

- stores everything locally (with the exception of sharing annotations via a link, of course)

Con:

- it consumes more disk space due to running its own index

- needs an external app for backing up data

- so far no syncing of bookmarks between devices (but it is in the making)

- so far it does not sync annotations between different devices (but both mobile apps for iOS/Android, and Pocket integration are in the making)

Status quo and looking at the future

I currently still have a few dozen bookmarks that I need to tag in Memex and delete from my Firefox bookmarks. And a further several dozen in OneTab.

The most viewed websites, I have in the “Top Sites” in Firefox.

Most of the “tabs” in OneTab, I have already migrated to Memex and I am looking very much forward to trying to use it instead of OneTab. So far it seems a bit more work, as I need to 1) open all tabs into a tab tree (same as in OneTab), 2) open that tab tree in a separate window (extra step), and then 3) use the “Tag all tabs in window” or “Add all tabs in window” option from the extension button (similar as in OneTab), and finally 4) close the tabs by closing the window (extra step). What I usually do is to change a Tab Group from OneTab to a Collection in Memex and then take some extra time to add tags or notes, if appropriate.

So, I am quite confident Memex will be able to replace OneTab for me and most likely also (most) normal bookmarks. I may keep some bookmarks of things that I want to always keep track of, like my online bank’s URL, but I am not sure yet.

The annotations are a godsend as well; now that I am used to them, they will be very hard to give up.

Now, if I could only send stuff to my eInk reader (or phone), annotate it there and have those annotations auto-magically show up in the browser and therefore stored locally on my laptop … :D

Oh, oh, and if I could search through Memex from my KDE Plasma desktop and add/view annotations from other documents (e.g. ePub, ODF, PDF) and other applications (e.g. Okular, Calibre, LibreOffice). One may dream …

hook out → sipping Vin Santo and planning more order in bookmarks

P.S. This blog post was initially a comment to the topic “How do you organize your bookmarks?” in the ~tech group on Tildes where further discussion is happening as well.

Monday, 22 April 2019

[Some] KDE Applications 19.04 also available in flathub

The KDE Applications 19.04 release announcement (read it if you haven't, it's very complete) mentions some of the applications are available at the snap store, but forgets to mention flathub.

Just wanted to point out that some of the applications are also available there: https://flathub.org/apps/search/org.kde.

All the ones that are released along with KDE Applications 19.04 were updated on release day (except kubrick, which has a compilation issue and will be updated for 19.04.1, and kontact, which is a beast, and to be honest I didn't particularly feel like updating it).

If you feel like helping, there are more applications that need adding and more automation that needs to happen, so get in touch :)

Monday, 15 April 2019

Closer Look at the Double Ratchet

In the last blog post, I took a closer look at how the Extended Triple Diffie-Hellman Key Exchange (X3DH) is used in OMEMO and which role PreKeys play. This post is about the other big algorithm that makes up OMEMO: the Double Ratchet.

The Double Ratchet algorithm can be seen as the gearbox of the OMEMO machine. In order to understand the Double Ratchet, we will first have to understand what a ratchet is.

Before we start: This post makes no guarantees to be 100% correct. It is only meant to explain the inner workings of the Double Ratchet algorithm in a (hopefully) more or less understandable way. Many details are simplified or omitted. If you want to implement this algorithm, please read the Double Ratchet specification.

Image by Benedikt.Seidl [Public domain]

A ratchet is a tool used to drive nuts and bolts. What distinguishes a ratchet from an ordinary wrench is that the part gripping the head of the bolt can only turn in one direction; it cannot be turned in the opposite direction.

In OMEMO, ratchet functions are one-way functions that take input keys and derive new keys from them. Doing it in this direction is easy (like turning the ratchet tool in the right direction), but it is practically impossible to reverse the process and calculate the original key from the derived key (analogous to turning the ratchet in the opposite direction).

Symmetric Key Ratchet

One type of ratchet is the symmetric key ratchet (abbrev. sk ratchet). It takes a key and some input data and produces a new key, as well as some output data. The new key is derived from the old key by using a so-called Key Derivation Function (KDF). Repeating the process multiple times creates a Key Derivation Function Chain (KDF-Chain). The fact that it is impossible to reverse a key derivation is what gives the OMEMO protocol the property of Forward Secrecy.

The above image illustrates the process of using a KDF-Chain to generate output keys from input data. In every step, the KDF-Chain takes the input and the current KDF-Key to generate the output key. Then it derives a new KDF-Key from the old one, replacing it in the process.

To summarize once again: Every time the KDF-Chain is used to generate an output key from some input, its KDF-Key is replaced, so if the input is the same in two steps, the output will still be different due to the changed KDF-Key.
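To make the KDF-Chain a bit more tangible, here is a minimal Python sketch of a symmetric-key ratchet. It assumes HMAC-SHA256 as the Key Derivation Function and, following a suggestion in the Double Ratchet specification, the single-byte constants 0x01 (for the output/message key) and 0x02 (for the next KDF-Key) as input. The names `kdf_ck` and `KdfChain` are made up for this illustration and are not taken from any OMEMO library.

```python
import hashlib
import hmac
from typing import Tuple

# Hypothetical inputs: single-byte constants, as suggested by the Double Ratchet spec.
MESSAGE_KEY_INPUT = b"\x01"
CHAIN_KEY_INPUT = b"\x02"


def kdf_ck(chain_key: bytes) -> Tuple[bytes, bytes]:
    """One turn of the ratchet: returns (next_chain_key, message_key)."""
    message_key = hmac.new(chain_key, MESSAGE_KEY_INPUT, hashlib.sha256).digest()
    next_chain_key = hmac.new(chain_key, CHAIN_KEY_INPUT, hashlib.sha256).digest()
    return next_chain_key, message_key


class KdfChain:
    """A KDF-Chain whose KDF-Key is replaced every time an output key is produced."""

    def __init__(self, chain_key: bytes) -> None:
        self.chain_key = chain_key
        self.index = 0  # how many message keys have been generated so far

    def next_message_key(self) -> bytes:
        # The old KDF-Key is overwritten; being one-way, it cannot be recomputed.
        self.chain_key, message_key = kdf_ck(self.chain_key)
        self.index += 1
        return message_key


chain = KdfChain(b"\x00" * 32)  # placeholder starting key
first = chain.next_message_key()
second = chain.next_message_key()
# Same constant input in both steps, but different output keys.
print(first != second, chain.index)  # True 2
```

Two chains started from the same KDF-Key produce the same sequence of keys, which is exactly what lets a sender and a recipient stay in sync without ever transmitting the keys themselves.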

One issue with this ratchet is that it does not provide future secrecy. That means once an attacker gets access to one of the KDF-Keys of the chain, they can use that key to derive all following keys in the chain. They basically just have to turn the ratchet forwards.
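The missing future secrecy can be demonstrated with the same kind of hypothetical HMAC-SHA256 chain: whoever holds a KDF-Key can reproduce every key that follows it, but not the ones before it. The helper names and the placeholder starting key are assumptions for illustration only.

```python
import hashlib
import hmac


def kdf_ck(chain_key):
    """Hypothetical symmetric ratchet step: returns (next_chain_key, message_key)."""
    next_chain_key = hmac.new(chain_key, b"\x02", hashlib.sha256).digest()
    message_key = hmac.new(chain_key, b"\x01", hashlib.sha256).digest()
    return next_chain_key, message_key


def run_chain(chain_key, steps):
    """Turn the ratchet `steps` times; return the message keys and the final KDF-Key."""
    keys = []
    for _ in range(steps):
        chain_key, message_key = kdf_ck(chain_key)
        keys.append(message_key)
    return keys, chain_key


start = hashlib.sha256(b"placeholder initial KDF-Key").digest()
honest_keys, _ = run_chain(start, 5)

# The attacker steals the KDF-Key as it is after the second step...
_, stolen_key = run_chain(start, 2)
# ...and simply turns the ratchet forwards to get keys 3, 4 and 5.
attacker_keys, _ = run_chain(stolen_key, 3)
print(attacker_keys == honest_keys[2:])  # True
```

Keys 1 and 2 stay out of reach (forward secrecy), while keys 3 to 5 are lost (no future secrecy).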

Diffie-Hellman Ratchet

The second type of ratchet that we have to take a look at is the Diffie-Hellman Ratchet. This ratchet is basically a repeated Diffie-Hellman Key Exchange with changing key pairs. Every user has a separate DH ratcheting key pair, which is being replaced with new keys under certain conditions. Whenever one of the parties sends a message, they include the public part of their current DH ratcheting key pair in the message. Once the recipient receives the message, they extract that public key and do a handshake with it using their private ratcheting key. The resulting shared secret is used to reset their receiving chain (more on that later).

Once the recipient creates a response message, they create a new random ratchet key and do another handshake with their new private key and the sender's public key. The result is used to reset the sending chain (again, more on that later).

Image by OpenWhisperSystems (modified by author)

As a result, the DH ratchet is forwarded every time the direction of the message flow changes. The resulting keys are used to reset the sending-/receiving chains. This introduces future secrecy in the protocol.
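A rough sketch of the handshakes involved when the message flow changes direction. It uses a deliberately tiny and insecure toy Diffie-Hellman group (the Mersenne prime 2^127 - 1 with generator 3, chosen here only for illustration) instead of the Curve25519 keys that real OMEMO implementations use. Only the structure matters: the sender's handshake and the recipient's handshake produce the same secret, and the reply, made with a fresh key pair, produces another matching pair of secrets.

```python
import secrets

# Toy Diffie-Hellman group: hypothetical and NOT secure.
P = 2**127 - 1  # a Mersenne prime
G = 3


def generate_key_pair():
    """Return (private, public) for the toy group."""
    private = secrets.randbelow(P - 3) + 2  # private exponent in [2, P - 2]
    return private, pow(G, private, P)


def dh(private, other_public):
    """Diffie-Hellman handshake: combine own private key with the peer's public key."""
    return pow(other_public, private, P)


# Current ratchet key pairs of both users.
alice_priv, alice_pub = generate_key_pair()
bob_priv, bob_pub = generate_key_pair()

# Alice sends a message; her handshake feeds her sending chain.
alice_sending_secret = dh(alice_priv, bob_pub)
# Bob extracts alice_pub from the message; his handshake feeds his receiving chain.
bob_receiving_secret = dh(bob_priv, alice_pub)

# Bob replies: fresh random ratchet key pair, second handshake for his sending chain.
bob_priv, bob_pub = generate_key_pair()
bob_sending_secret = dh(bob_priv, alice_pub)
# Alice extracts the new bob_pub from the reply and resets her receiving chain.
alice_receiving_secret = dh(alice_priv, bob_pub)

print(alice_sending_secret == bob_receiving_secret)  # True
print(bob_sending_secret == alice_receiving_secret)  # True
```

When Alice replies in turn, she generates a fresh key pair of her own and the same steps repeat with the roles swapped.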

Chains

A session between two devices has three chains – a root chain, a sending chain and a receiving chain.

The root chain is a KDF chain which is initialized with the shared secret that was established using the X3DH handshake. Both devices involved in the session have the same root chain. Contrary to the sending and receiving chains, the root chain is only initialized once, at the beginning of the session; after that it is only advanced, never reset.

The sending chain of the session on device A equals the receiving chain on device B. On the other hand, the receiving chain on device A equals the sending chain on device B. The sending chain is used to generate message keys which are used to encrypt messages. The receiving chain on the other hand generates keys which can decrypt incoming messages.

Whenever the direction of the message flow changes, the sending and receiving chains are reset, meaning their keys are replaced with new keys generated by the root chain.
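The root chain can be sketched as a KDF that takes the current root key plus a Diffie-Hellman output and returns a new root key and a fresh chain key. The sketch below assumes HKDF-SHA256 (which the Double Ratchet specification recommends for this step), implemented by hand with `hmac`. The `INFO` label and the placeholder inputs are hypothetical.

```python
import hashlib
import hmac


def hkdf_sha256(salt, ikm, info, length):
    """HKDF (RFC 5869) with SHA-256: extract a PRK, then expand it."""
    prk = hmac.new(salt, ikm, hashlib.sha256).digest()
    okm, block, counter = b"", b"", 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


INFO = b"hypothetical-app-info"  # application-specific label (an assumption)


def kdf_rk(root_key, dh_output):
    """Advance the root chain: returns (new_root_key, new_chain_key)."""
    output = hkdf_sha256(root_key, dh_output, INFO, 64)
    return output[:32], output[32:]


# The root key starts as the X3DH secret S (placeholder bytes here).
root_key = hashlib.sha256(b"placeholder X3DH secret S").digest()
dh_output = hashlib.sha256(b"placeholder DH ratchet output").digest()

# Device A resets its sending chain, device B its receiving chain, from the
# same root key and DH output, so both get the same chain key.
root_a, sending_chain_a = kdf_rk(root_key, dh_output)
root_b, receiving_chain_b = kdf_rk(root_key, dh_output)
print(sending_chain_a == receiving_chain_b)  # True
```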

An Example

I think this rather complex protocol is best explained by an example message flow which demonstrates what actually happens during message sending / receiving etc.

In our example, Obi-Wan and Grievous have a conversation. Obi-Wan starts by establishing a session with Grievous and sends his initial message. Grievous responds by sending two messages back. Unfortunately the first of his replies goes missing.

Session Creation

In order to establish a session with Grievous, Obi-Wan first has to fetch one of Grievous’ key bundles. He uses it to establish a shared secret S between him and Grievous by executing an X3DH key exchange. More details on this can be found in my previous post. He also extracts Grievous’ signed PreKey, which serves as Grievous’ initial ratchet public key. S is used to initialize the root chain.

Obi-Wan now uses Grievous’ public ratchet key and does a handshake with his own private ratchet key to generate another shared secret, which is pumped into the root chain. The output is used to initialize the sending chain, and the KDF-Key of the root chain is replaced.

Now Obi-Wan has established a session with Grievous without even sending a message. Nice!

The initial root key comes from the result of the X3DH handshake.

Original image by OpenWhisperSystems (modified by author)

Initial Message

Now the session is established on Obi-Wan’s side and he can start composing a message. He decides to send a classy “Hello there!” as a greeting. He uses his sending chain to generate a message key, which is used to encrypt the message.

In our example only one message key is derived though.

Image by OpenWhisperSystems

Note: In the above image a constant is used as input for the KDF-Chain. This constant is defined by the protocol and isn’t important for understanding what’s going on.

Now Obi-Wan sends over the encrypted message along with his ratcheting public key and some information on which PreKey he used, the current sending key chain index (1), etc.

When Grievous receives Obi-Wan’s message, he completes his X3DH handshake with Obi-Wan in order to calculate the same exact shared secret S as Obi-Wan did earlier. He also uses S to initialize his root chain.

Now Grievous does a full ratchet step of the Diffie-Hellman Ratchet: He uses his private and Obi-Wan’s public ratchet key to do a handshake and initializes his receiving chain with the result. Note: The result of the handshake is the exact same value that Obi-Wan calculated earlier when he initialized his sending chain. Fantastic, isn’t it? Next he deletes his old ratchet key pair and generates a fresh one. Using the fresh private key, he does another handshake with Obi-Wan’s public key and uses the result to initialize his sending chain. This completes the full DH ratchet step.

Image by OpenWhisperSystems

Decrypting the Message

Now that Grievous has finalized his side of the session, he can go ahead and decrypt Obi-Wan’s message. Since the message contains the sending chain index 1, Grievous knows that he has to use the first message key generated from his receiving chain to decrypt the message. Because his receiving chain equals Obi-Wan’s sending chain, it will generate the exact same keys, so Grievous can use the first key to successfully decrypt Obi-Wan’s message.
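Putting the pieces together, the following sketch replays session creation on Obi-Wan’s side, Grievous’ full DH ratchet step and the selection of message key 1. It is a simplification under several assumptions: S is a placeholder rather than a real X3DH result, the toy DH group is insecure (real OMEMO uses Curve25519), the KDF choices (HKDF-SHA256 for the root chain, HMAC-SHA256 for the sending/receiving chains) follow the Double Ratchet specification’s recommendations, and the HKDF `INFO` label is made up. The actual encryption of “Hello there!” is left out; the point is that both ends derive the same message key.

```python
import hashlib
import hmac
import secrets

# Toy Diffie-Hellman group (hypothetical, NOT secure; OMEMO uses Curve25519).
P = 2**127 - 1
G = 3
INFO = b"hypothetical-omemo-sketch"  # assumed HKDF info label


def generate_key_pair():
    private = secrets.randbelow(P - 3) + 2
    return private, pow(G, private, P)


def dh(private, other_public):
    # The shared value, encoded as 16 bytes so it can be fed into HKDF.
    return pow(other_public, private, P).to_bytes(16, "big")


def kdf_rk(root_key, dh_output):
    """Root chain step, HKDF-SHA256 with 64 output bytes: (new_root_key, chain_key)."""
    prk = hmac.new(root_key, dh_output, hashlib.sha256).digest()
    t1 = hmac.new(prk, INFO + b"\x01", hashlib.sha256).digest()
    t2 = hmac.new(prk, t1 + INFO + b"\x02", hashlib.sha256).digest()
    return t1, t2


def kdf_ck(chain_key):
    """Symmetric ratchet step: (next_chain_key, message_key)."""
    return (hmac.new(chain_key, b"\x02", hashlib.sha256).digest(),
            hmac.new(chain_key, b"\x01", hashlib.sha256).digest())


# Both sides end up with the same X3DH secret S (a placeholder here).
S = hashlib.sha256(b"placeholder X3DH secret S").digest()
# Grievous' signed PreKey doubles as his first ratchet key pair.
grievous_priv, grievous_pub = generate_key_pair()

# Obi-Wan: session creation, before any message is sent.
obi_priv, obi_pub = generate_key_pair()
obi_root, obi_sending_ck = kdf_rk(S, dh(obi_priv, grievous_pub))
obi_sending_ck, obi_mk1 = kdf_ck(obi_sending_ck)  # key 1, for "Hello there!"

# Grievous: gets obi_pub from the first message and does a full DH ratchet step.
gr_root, gr_receiving_ck = kdf_rk(S, dh(grievous_priv, obi_pub))
grievous_priv, grievous_pub = generate_key_pair()  # old pair is discarded
gr_root, gr_sending_ck = kdf_rk(gr_root, dh(grievous_priv, obi_pub))

# Message index 1 -> first key of Grievous' receiving chain.
gr_receiving_ck, gr_mk1 = kdf_ck(gr_receiving_ck)
print(gr_mk1 == obi_mk1)  # True
```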

Sending a Reply

Grievous is surprised by the bold actions of Obi-Wan and promptly goes ahead and sends two replies.

He advances his freshly initialized sending chain to generate a fresh message key (with index 1). He uses the key to encrypt his first message “General Kenobi!” and sends it over to Obi-Wan. He includes his public ratchet key in the message.

Unfortunately though the message goes missing and is never received.

He then advances his sending chain a second time to generate another message key (index 2). Using that key he encrypts the message “You are a bold one.” and sends it to Obi-Wan. This message contains the same public ratchet key as the first one, but has the sending chain index 2. This time the message is received.

Receiving the Reply

Once Obi-Wan receives the second message, he does a full ratchet step in order to complete his session with Grievous. First he does a DH handshake between his private ratchet key and Grievous’ public ratchet key, which he got from the message. The result is used to set up his receiving chain. He then generates a new ratchet key pair and does a second handshake. The result is used to reset his sending chain.

Obi-Wan notices that the sending chain index of the received message is 2 instead of 1, so he knows that one message must have been missing or delayed. To deal with this problem, he advances his receiving chain twice (meaning he generates two message keys from the receiving chain) and caches the first key. If later the missing message arrives, the cached key can be used to successfully decrypt the message. For now only one message arrived though. Obi-Wan uses the generated message key to successfully decrypt the message.
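Obi-Wan’s handling of the missing message can be sketched as a receiving chain that caches the keys it skips over. The `ReceivingChain` class below is hypothetical. The `MAX_SKIP` limit mirrors the Double Ratchet specification’s idea of bounding how many keys a peer can make you cache, but the value 1000 is an arbitrary choice.

```python
import hashlib
import hmac

MAX_SKIP = 1000  # arbitrary cap on cached keys (an assumption)


def kdf_ck(chain_key):
    """Symmetric ratchet step (HMAC-SHA256): returns (next_chain_key, message_key)."""
    return (hmac.new(chain_key, b"\x02", hashlib.sha256).digest(),
            hmac.new(chain_key, b"\x01", hashlib.sha256).digest())


class ReceivingChain:
    """Hypothetical receiving chain that caches keys of missing messages."""

    def __init__(self, chain_key):
        self.chain_key = chain_key
        self.index = 0      # index of the last message key generated
        self.skipped = {}   # sending chain index -> cached message key

    def key_for(self, index):
        if index in self.skipped:          # a delayed message finally arrived
            return self.skipped.pop(index)
        if index <= self.index:
            raise ValueError("message key was already used")
        if index - self.index > MAX_SKIP:
            raise ValueError("too many skipped messages")
        while True:
            self.chain_key, message_key = kdf_ck(self.chain_key)
            self.index += 1
            if self.index == index:
                return message_key
            self.skipped[self.index] = message_key  # cache for later


# Grievous' sending chain and Obi-Wan's receiving chain share the same start key.
start = hashlib.sha256(b"placeholder chain key").digest()
ck, mk1 = kdf_ck(start)   # "General Kenobi!" (goes missing)
ck, mk2 = kdf_ck(ck)      # "You are a bold one." (arrives with index 2)

obi_wan = ReceivingChain(start)
print(obi_wan.key_for(2) == mk2, list(obi_wan.skipped))  # True [1]
print(obi_wan.key_for(1) == mk1)                         # True
```

Popping the cached key after use means each message key is used at most once, which keeps the cache from becoming a long-lived store of old keys.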

Conclusions

What have we learned from this example?

Firstly, we can see that the protocol guarantees forward secrecy. The KDF-Chains used in the three chains can only be advanced forwards, and it is impossible to turn them backwards to generate earlier keys. This means that if an attacker manages to get access to the state of the receiving chain, they cannot decrypt messages sent prior to the moment of attack.

But what about future messages? Since the Diffie-Hellman ratchet introduces new randomness in every step (new random keys are generated), an attacker is locked out after one step of the DH ratchet. Since the DH ratchet is used to reset the symmetric ratchets of the sending and receiving chain, the window of the compromise is limited by the next DH ratchet step (meaning once the other party replies, the attacker is locked out again).

On top of this, the double ratchet algorithm can deal with missing or out-of-order messages, as keys generated from the receiving chain can be cached for later use. If at some point Obi-Wan receives the missing message, he can simply use the cached key to decrypt its contents.

This self-healing property is what the Axolotl protocol (an earlier name of the Signal protocol, the basis of OMEMO) was named after.

Acknowledgements

Thanks to syndace and paul for their feedback and clarification on some points.

Saturday, 13 April 2019

Tenth Anniversary of AltOS

In the early days of the collaboration between Bdale Garbee and Keith Packard that later became Altus Metrum, the software for TeleMetrum was crafted as an application running on top of an existing open source RTOS. It didn't take long to discover that the RTOS was ill-suited to our needs, and Keith had to re-write various parts of it to make things fit in the memory available and work at all.

Eventually, Bdale idly asked Keith how much of the RTOS he'd have to rewrite before it would make sense to just start over from scratch. Keith took that question seriously, and after disappearing for a day or so, the first code for AltOS was committed to revision control on 12 April 2009.

Ten years later, AltOS runs on multiple processor architectures, and is at the heart of all Altus Metrum products.

Friday, 05 April 2019

Hard drive failure in my zpool 😞

I have a storage box in my house that stores important documents, backups, VM disk images, photos, a copy of the Tor Metrics archive and other odd things. I’ve put a lot of effort into making sure that it is both reliable and performant. When I was working on a modern CollecTor for Tor Metrics recently, I used this to be able to run the entire history of the Tor network through the prototype replacement to see if I could catch any bugs.

I have had my share of data loss events in my life, but since I’ve found ZFS I have hope that it is possible to avoid, or at least seriously minimise the risk of, any catastrophic data loss events ever happening to me again. ZFS has:

- cryptographic checksums to validate data integrity

- mirroring of disks

- a “scrub” function that ensures that the data on disk is actually still good even if you’ve not looked at it yourself in a while

ZFS on its own is not the entire solution though. I also mix-and-match hard drive models to ensure that a systematic fault in a particular model won’t wipe out all my mirrors at once, and I also have scheduled SMART self-tests to detect faults before any data loss has occurred.

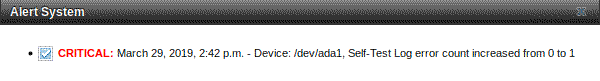

Unfortunately, one of my drives in my zpool has failed a SMART self-test.

This means I now have to treat that drive as “going to fail soon” which means that I don’t have redundancy in my zpool anymore, so I have to act. Fortunately, in September 2017 when my workstation died, I received some donations towards the hardware I use for my open source work and I did buy a spare HDD for this very situation!

At present my zpool setup looks like:

```
% zpool status flat
  pool: flat
 state: ONLINE
  scan: scrub repaired 0 in 0 days 07:05:28 with 0 errors on Fri Apr 5 07:05:36 2019
config:

        NAME                                            STATE     READ WRITE CKSUM
        flat                                            ONLINE       0     0     0
          mirror-0                                      ONLINE       0     0     0
            gptid/xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx  ONLINE       0     0     0
            gptid/xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx  ONLINE       0     0     0
          mirror-1                                      ONLINE       0     0     0
            gptid/xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx  ONLINE       0     0     0
            gptid/xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx  ONLINE       0     0     0
        cache
          gptid/xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx    ONLINE       0     0     0

errors: No known data errors
```

The drives in the two mirrors are 3TB drives; each mirror consists of one WD Red and one Toshiba NAS drive. In this case, it is one of the WD Red drives that has failed, and I’ll be replacing it with another WD Red. One important thing to note is that you have to replace the drive with one of equal or greater capacity. In this case it is the same model, so the capacity should be the same, but not all X TB drives are going to be exactly the same size.

You’ll notice here that it is saying No known data errors. This is because there haven’t been any issues with the data yet; it is just a SMART failure, and hopefully by replacing the disk any data errors can be avoided entirely.

My plan was to move to a new system soon, with 8 bays. In that system I’ll keep the stripe over 2 mirrors but one mirror will run over 3x 6TB drives with the other remaining on 2x 3TB drives. This incident leaves me with only 1 leftover 3TB drive though so maybe I’ll have to rethink this.

Free space remaining in my zpool
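The capacity arithmetic behind the 8-bay plan is simple: a mirror can only store as much as its smallest drive, and a stripe over several mirrors adds their capacities up. A small Python sketch, assuming whole-TB drive sizes and ignoring ZFS overhead as well as the difference between TB and TiB:

```python
def mirror_capacity(drive_sizes_tb):
    """A mirror can only hold as much as its smallest member."""
    return min(drive_sizes_tb)


def pool_capacity(mirrors):
    """A stripe over mirrors: the capacities of the individual mirrors add up."""
    return sum(mirror_capacity(m) for m in mirrors)


current = [[3, 3], [3, 3]]      # two mirrors of 3TB drives
planned = [[6, 6, 6], [3, 3]]   # 3x 6TB mirror + 2x 3TB mirror
print(pool_capacity(current), pool_capacity(planned))  # 6 9
```

The `min()` also hints at why a replacement drive must not be smaller than the one it replaces: a mirror member that is even slightly smaller would lower the usable size of the whole mirror.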

My current machine, an HP MicroServer, does not support hot-swapping the drives so I have to start by powering off the machine and replacing the drive.

```
% zpool status flat
  pool: flat
 state: DEGRADED
status: One or more devices could not be opened. Sufficient replicas exist for
        the pool to continue functioning in a degraded state.
action: Attach the missing device and online it using 'zpool online'.
   see: http://illumos.org/msg/ZFS-8000-2Q
  scan: scrub repaired 0 in 0 days 07:05:28 with 0 errors on Fri Apr 5 07:05:36 2019
config:

        NAME                                            STATE     READ WRITE CKSUM
        flat                                            DEGRADED     0     0     0
          mirror-0                                      ONLINE       0     0     0
            gptid/xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx  ONLINE       0     0     0
            gptid/xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx  ONLINE       0     0     0
          mirror-1                                      DEGRADED     0     0     0
            gptid/xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx  ONLINE       0     0     0
            xxxxxxxxxxxxxxxxxxxx                        UNAVAIL      0     0     0  was /dev/gptid/xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx
        cache
          gptid/xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx    ONLINE       0     0     0

errors: No known data errors
```

The disk that was part of the mirror is now unavailable, but the pool is still functioning as the other disk is still present. This means that there are still no data errors and everything is still running. The only downtime was due to the non-hot-swappableness of my SATA controller.

Through the web interface in FreeNAS, it is possible to now use the new disk to replace the old disk in the mirror: Storage -> View Volumes -> Volume Status (under the table, with the zpool highlighted) -> Replace (with the unavailable disk highlighted).

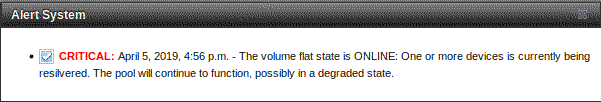

Running zpool status again:

```
% zpool status flat
  pool: flat
 state: ONLINE
status: One or more devices is currently being resilvered. The pool will
        continue to function, possibly in a degraded state.
action: Wait for the resilver to complete.
  scan: resilver in progress since Fri Apr 5 16:55:47 2019
        1.30T scanned at 576M/s, 967G issued at 1.12G/s, 4.33T total
        4.73G resilvered, 21.82% done, 0 days 00:51:29 to go
config:

        NAME                                            STATE     READ WRITE CKSUM
        flat                                            ONLINE       0     0     0
          mirror-0                                      ONLINE       0     0     0
            gptid/xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx  ONLINE       0     0     0
            gptid/xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx  ONLINE       0     0     0
          mirror-1                                      ONLINE       0     0     0
            gptid/xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx  ONLINE       0     0     0
            gptid/xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx  ONLINE       0     0     0  (resilvering)
        cache
          gptid/xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx    ONLINE       0     0     0

errors: No known data errors
```

And everything should be OK again soon, now with the dangerous disk removed and a hopefully more reliable disk installed.

A more optimistic message from FreeNAS

This has put a dent in my plans to upgrade my storage, so for now I’ve added the hard drives I’m looking for to my Amazon wishlist.

As for the drive that failed, I’ll be doing an ATA Secure Erase and then disposing of it. NIST SP 800-88 thinks that ATA Secure Erase is in the same category as degaussing a hard drive and that it is more effective than overwriting the disk with software. ATA Secure Erase is faster too because it’s the hard drive controller doing the work. I just have to hope that my firmware wasn’t replaced with firmware that only fakes the process (or I’ll just do an overwrite anyway to be sure). According to the same NIST document, “for ATA disk drives manufactured after 2001 (over 15 GB) clearing by overwriting the media once is adequate to protect the media from both keyboard and laboratory attack”.

This blog post is also a little experiment. I’ve used a Unicode emoji in the title, and I want to see how various feed aggregators and bots handle that. Sorry if I broke your aggregator or bot.

IETF 104 in Prague

Thanks to support from Article 19, I was able to attend IETF 104 in Prague, Czech Republic this week. Primarily this was to present my Internet Draft which takes safe measurement principles from Tor Metrics work and the Research Safety Board and applies them to Internet Measurement in general.

My IETF badge, complete with additional tag for my nick

I attended with a free one-day pass for the IETF and free hackathon registration, so more than just the draft presentation happened. During the hackathon I sat at the MAPRG table and worked on PATHspider with Mirja Kühlewind from ETH Zurich. We have the code running again with the latest libraries available in Debian testing and this may become the basis of a future Tor exit scanner (for generating exit lists, and possibly also some bad exit detection). We ran a quick measurement campaign that was reported in the hackathon presentations.

During the hackathon I also spoke to Watson Ladd from Cloudflare about his Roughtime draft which could be interesting for Tor for a number of reasons. One would be for verifying if a consensus is fresh, another would be for Tor Browser to detect if a TLS cert is valid, and another would be providing archive signatures for Tor Metrics. (We’ve started looking at archive signatures since our recent work on modernising CollecTor).

Monday was the first “real” day of the IETF, and it started off for me at the PEARG meeting. I presented my draft as the first presentation in that session. The feedback was all positive; it seems like having the document is both desirable and timely.

The next presentation was from Ryan Guest at Salesforce. He was talking about privacy considerations for application level logging. I think this would also be a useful draft that complements my draft on safe measurement, or maybe even becomes part of my draft. I need to follow up with him to see what he wants to do. A future IETF hackathon project might be comparing Tor’s safe logging with whatever guidelines we come up with, and also comparing our web server logs setup.

Nick Sullivan was up next with his presentation on Privacy Pass. It seems like a nice scheme, assuming someone can audit the anti-tagging properties of it. The most interesting thing I took away from it is that federation is being explored which would turn this into a system that isn’t just for Cloudflare.

Amelia Andersdotter and Christoffer Långström then presented on differential privacy. They have been exploring how it can be applied to binary values as opposed to continuous, and how it could be applied to Internet protocols like the QUIC spin bit.

The last research presentation was Martin Schanzenbach presenting on an identity provider based on the GNU Name System. This one was not so interesting for me, but maybe others are interested.

I attended the first part of the Stopping Malware and Researching Threats (SMART) session. There was an update from Symantec based on their ISTR report and I briefly saw the start of a presentation about “Malicious Uses of Evasive Communications and Threats to Privacy” but had to leave early to attend another meeting. I plan to go back and look through all of the slides from this session later.

The next IETF meeting is directly after the next Tor meeting (I had thought for some reason it directly clashed, but I guess I was wrong). I will plan to remotely participate in PEARG again there and move my draft forwards.

When the Duke walks, you don't notice it

This is my late submission for transgender day of visibility. It comes almost a week late, but I suppose I’ll use this proverb that is popular among the trans community to justify myself:

The best time to plant a tree was 20 years ago. The second best time is now.

Or: The best time to post about transgender day of visibility was 31st of March. The second best time is 5th of April.

I should preface this post by emphasising that all of its contents are exclusively my own experiences, and may not speak for anybody other than myself. It is written in the spirit of visibility, so that the public knows that transgender people exist, and that ultimately we are normal people.

A second justification for my lateness has been my hesitance to broadcast this to the internet. I like privacy, and I take a lot of steps to safeguard it (e.g., by using Free Software). But there is one step that I have made only halfway, and that is anonymity. I use a VPN to hide my IP address, and I take special care not to give internet giants all of my personal data: I am a nobody when I surf the web.

But when I interact with human beings on the internet, I try to be me. This is terrible privacy advice, because the internet never forgets when you make a mistake. But I find it important, because the internet is a very real place. More and more, the internet affects our collective lives. It allows us to do tangible things such as purchasing items, and it does intangible things such as morphing our perception and opinion of the world. Anonymity allows you to enter this space—this real space—as an ethereal ghost, existing perpetually out of sight, but able to interact just the same. It does not take a creative mind to imagine how this can be abused, and people do.

I could abuse such ghostly powers for good, but I am not comfortable with holding that power. So I wish to be myself in spite of knowing better. To exist online under this name, I must self-censor. I must not say things that I imagine will come back to harm my future self, and I must hide aspects about myself that I do not want everybody to know—say it once, and the whole world knows.

And for years, I haven’t said it: I am transgender. By itself this is unimportant (so what?), but the act of saying it is not. The act of saying it means that anybody, absolutely anybody until the end of the digital age, can discover this about me and hold it against me, and there is no shortage of people who would. And that is frankly quite scary.

But the act of saying it is also activism. By saying it, you assert your existence in the face of an ideology that wishes you didn’t. By saying it, you own the narrative of what it means to be trans, rather than ideologues who would paint you in a dehumanised light. By saying it, you make tangible and visible a human experience that many people do not understand. At the risk of sounding self-aggrandising, there is power in that.

The last point I find especially empowering. Until the exact moment I decided to transition, I simply did not know of the mere existence of trans people. I knew about drag queens flamboyantly dancing on boats in the canals of Amsterdam, but those people were otherworldly to me. They weren’t tangibly real, and they weren’t me. Had I known that transgender people were everyday women and men who care about the same things that I do, I would have spared myself a lot of mental anguish and made the leap a lot sooner.

Instead, something else prompted that realisation. I was reading a Christmas novel in Summer, as you do. The book was called “Let It Snow: Three Holiday Romances” authored by John Green, Lauren Myracle and Maureen Johnson. The book has three POVs in a town struck by a heavy snow storm, and there’s a lot of interplay between the POVs.

I was reading one of John Green’s chapters. His main character had long been great friends with a tomboyish girl (nicknamed “the Duke”) who struggled with her gender expression, and the two embark on a great journey through the snow storm to reach the waffle house. During this trek, there is a scene where he is walking a few paces behind her, and he is looking at her. And while looking, and through the shared experiences, a sudden thought strikes him: “Anyway, the Duke was walking, and there was a certain something to it, and I was kind of disgusted with myself for thinking about that certain something. […] When Brittany the cheerleader walks, you notice it. When the Duke walks, you don’t. Usually.”

These two had been friends for the longest time, and for the first time, he entertained the thought that it might be something more than that. And you read on, and on, and this wayward thought starts to become quite real and serious. And suddenly he becomes self-aware of the thought absorbing him:

“Once you think a thought, it is extremely difficult to unthink it. And I had thought the thought.”

It hit me like a brick. In that very same instant, I, too, thought the thought. What had been a feeling for so long, I finally thought out loud in my head, and it was impossible for me to unthink it. It had nothing to do with the novel, and I have no idea how I made that leap, but in that moment I realised for the first time, truly realised, that I did not want to be a boy: that I wanted to be a girl. And I was miserable for it, but eventually better off. A quick search later and I discovered that trans people exist, and that transition is an actual thing that normal people do.

So I did it. And it has been good. Whatever ailed me prior to transition is mostly gone, and I have become a functioning adult who does many non-transgender-related things such as translating GNOME into Esperanto and creating cat monsters for Dungeons & Dragons. But I never really included being transgender in any of my online activities, and I want to change that. I want to be more like the Duke. I want to walk like her, and while people may not always see that walk, I want to call attention to it every now and then. And maybe it will help someone be struck by the thought, whatever spark of madness it is that they need.

Happy transgender day of visibility.

Thursday, 04 April 2019

The Power of Workflow Scripts

Nextcloud has the ability to define conditions under which external scripts are executed. The app which makes this possible is called “Workflow Script”. I always knew that this powerful tool exists, yet I never really had a use case for it. This changed last week.

Task

I heavily rely on text files for note taking. I organize them in folders; for example, I have a “Projects” folder with sub-folders for each project I am currently working on.

Wednesday, 03 April 2019

Shaking Hands With OMEMO: X3DH Key Exchange

This is the first part of a small series about the cryptographic building blocks of OMEMO. This post is about the Extended Triple Diffie Hellman Key Exchange Algorithm (X3DH) which is used to establish a session between OMEMO devices.

Part 2: Closer Look at the Double Ratchet

In the past I have written some posts about OMEMO and its future and how it compares to the Olm encryption protocol used by matrix.org. However, some readers requested a closer, but still straightforward look at how OMEMO and the underlying algorithms work. To get started, we first have to take a look at its past.

OMEMO was implemented in the Android Jabber Client Conversations as part of a Google Summer of Code project by Andreas Straub in 2015. The basic idea was to utilize the encryption library used by Signal (formerly TextSecure) for message encryption. So basically OMEMO borrows almost all the cryptographic mechanisms, including the Double Ratchet and X3DH, from Signal’s encryption protocol, which is appropriately named Signal Protocol. So to begin with, let’s look at it first.

The Signal Protocol

The famous and ingenious protocol that drives the encryption behind Signal, OMEMO, matrix.org, WhatsApp and a lot more was created by Trevor Perrin and Moxie Marlinspike in 2013. Basically it consists of two parts that we need to further investigate:

- The Extended Triple-Diffie-Hellman Key Exchange (X3DH)

- The Double Ratchet Algorithm

One core principle of the protocol is to get rid of encryption keys as soon as possible. Almost every message is encrypted with another fresh key. This is a huge difference from other protocols like OpenPGP, where the user only has one key which can decrypt all messages ever sent to them. The latter can of course also be seen as an advantage OpenPGP has over OMEMO, but it all depends on the situation the user is in and what they have to protect against.

A major improvement that the Signal Protocol introduced compared to encryption protocols like OTRv3 (Off-The-Record Messaging) was the ability to start a conversation with a chat partner in an asynchronous fashion, meaning that the other end didn’t have to be online in order to agree on a shared key. This was not possible with OTRv3, since both parties had to actively send messages in order to establish a session. This was okay back in the days when people would start their computer with the intention to chat with other users that were online at the same time, but it’s no longer suitable today.

Note: OTRv4, which is currently being worked on, will no longer come with this handicap.

The X3DH Key Exchange

Let’s get to it already!

X3DH is a key agreement protocol, meaning it is used when two parties establish a session in order to agree on a shared secret. For a conversation to be confidential, we require that only the sender and the (intended) recipient of a message are able to decrypt it. This is possible when they share a common secret (e.g. a password or shared key). Exchanging this key with one another has long been a kind of chicken-and-egg problem: How do you get the key from one end to the other without an adversary being able to get a copy of it? Well, obviously by encrypting it, but how? How do you get that key to the other side? This problem was only solved in the 1970s.

The solution is a so-called Diffie-Hellman-Merkle Key Exchange. I don’t want to go into too much detail about this, as there are really great resources online about how it works, but the basic idea is that each party possesses an asymmetric key pair consisting of a public and a private key. The public key can be shared over insecure networks, while the private key must be kept secret. A Diffie-Hellman key exchange (DH) is the process of combining a public key A with a private key b in order to generate a shared secret. The essential trick is that you get exactly the same secret if you combine the private key a with the public key B. Wikipedia does a great job of explaining this using an analogy of mixing colors.
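To make the “same secret from either side” trick concrete, here is a minimal sketch of classic finite-field Diffie-Hellman using only Python’s built-in modular exponentiation. The modulus (the Mersenne prime 2^127 − 1) and the base 3 are arbitrary toy choices for illustration and are not secure; real protocols such as Signal use vetted groups like X25519.

```python
import secrets

# Toy public parameters (assumption, NOT secure): a 127-bit Mersenne prime
# modulus and a small base. Real deployments use vetted groups or X25519.
P = 2**127 - 1
G = 3


def generate_keypair():
    """Return (private, public); the public key is G^private mod P."""
    private = secrets.randbelow(P - 2) + 1  # 1 <= private <= P - 2
    return private, pow(G, private, P)


# Alice holds (a, A), Bob holds (b, B); only A and B cross the network.
a, A = generate_keypair()
b, B = generate_keypair()

# Alice combines her private a with Bob's public B, Bob does the reverse.
secret_alice = pow(B, a, P)  # (G^b)^a mod P
secret_bob = pow(A, b, P)    # (G^a)^b mod P

print("shared secret matches:", secret_alice == secret_bob)
```

Both results equal G^(a·b) mod P, which an eavesdropper who only sees A and B cannot feasibly compute when the group is chosen properly.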

Deniability and OTR

In normal day-to-day messaging you don’t always want to commit to what you said. Especially under oppressive regimes, it may be a good idea to be able to deny that you said or wrote something specific. This principle is called deniability.

Note: It is debatable whether cryptographic deniability has ever saved someone from going to jail, but that’s beyond the scope of this blog post.

At the same time, you want to be absolutely sure that you are really talking to your chat partner and not to a so-called man in the middle. These desires seem to conflict at first, but the OTR protocol featured both. The user has an IdentityKey, which is used to identify the user by means of a fingerprint. The (massively and horribly simplified) procedure for creating an OTR session is as follows: Alice generates a random session key pair and signs its public key with her IdentityKey. She then sends that public key over to Bob, who generates another random session key pair with which he executes his half of the DH handshake. He then sends the public part of that key (again, signed) back to Alice, who does another DH to acquire the same shared secret as Bob. As you can see, in order to establish a session, both parties had to be online. Note: The signing part has been oversimplified for the sake of readability.

From DH to X3DH

Perrin and Marlinspike improved upon this model by introducing the concept of PreKeys. Those basically are the first halves of a DH-handshake, which can – along with some other keys of the user – be uploaded to a server prior to the beginning of a conversation. This way another user can initiate a session by fetching one half-completed handshake and completing it.

Basically, the Signal protocol comprises the following set of keys per user:

| Key | Purpose |
|---|---|
| IdentityKey (IK) | Acts as the user’s identity by providing a stable fingerprint |
| Signed PreKey (SPK) | Acts as a PreKey, but carries an additional signature by IK |
| Set of PreKeys ({OPK}) | Unsigned PreKeys, each meant to be used only once |

If Alice wants to start chatting, she can fetch Bob’s IdentityKey, Signed PreKey and one of his PreKeys and use those to create a session. In order to preserve cryptographic properties, the handshake is modified as follows:

DH1 = DH(IK_A, SPK_B)

DH2 = DH(EK_A, IK_B)

DH3 = DH(EK_A, SPK_B)

DH4 = DH(EK_A, OPK_B)

S = KDF(DH1 || DH2 || DH3 || DH4)

EK_A denotes an ephemeral, random key pair that Alice generates on the fly. Alice can now derive an encryption key from S to encrypt her first message for Bob. She then sends that message (a so-called PreKeyMessage) over to Bob, along with some additional information like her IdentityKey IK_A, the public part of the ephemeral key EK_A and the ID of the PreKey OPK_B she used.

Once Bob logs in, he can use this information to do the same calculations (using his private keys and Alice’s public keys) to calculate S, from which he derives the encryption key. Now he can decrypt the message.
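The following is a minimal sketch of the four DH operations and the KDF step, showing that Alice and Bob arrive at the same S. It makes loud simplifying assumptions: a toy finite-field group stands in for X25519, the KDF is a plain HKDF-SHA256 (RFC 5869) with a zero salt and a made-up `info` label, and the signature check on SPK_B is omitted. It illustrates the structure of X3DH and is not a usable implementation.

```python
import hashlib
import hmac
import secrets

# Toy group (assumption, NOT secure); real X3DH uses X25519 or X448.
P = 2**127 - 1
G = 3


def keypair():
    private = secrets.randbelow(P - 2) + 1
    return private, pow(G, private, P)


def dh(private, public):
    """DH(private, public): both sides arrive at G^(x*y) mod P."""
    return pow(public, private, P).to_bytes(16, "big")


def kdf(ikm, info=b"X3DH-sketch", length=32):
    """HKDF-SHA256 (RFC 5869) with an all-zero salt; the info label is made up."""
    prk = hmac.new(b"\x00" * 32, ikm, hashlib.sha256).digest()
    okm, block, counter = b"", b"", 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


# Bob's bundle on the server: public halves of IK_B, SPK_B and one OPK_B.
ik_b, IK_B = keypair()
spk_b, SPK_B = keypair()
opk_b, OPK_B = keypair()

# Alice: long-term identity key plus a fresh ephemeral key EK_A.
ik_a, IK_A = keypair()
ek_a, EK_A = keypair()

# Alice's side: DH1..DH4 in the order given in the text, then the KDF.
S_alice = kdf(dh(ik_a, SPK_B) + dh(ek_a, IK_B) + dh(ek_a, SPK_B) + dh(ek_a, OPK_B))

# Bob's side: the same pairs, with private and public roles swapped.
S_bob = kdf(dh(spk_b, IK_A) + dh(ik_b, EK_A) + dh(spk_b, EK_A) + dh(opk_b, EK_A))

print("S matches:", S_alice == S_bob)
```

Bob only needs IK_A, EK_A and the PreKey ID from the PreKeyMessage to redo the computation, which is why Alice can send her first message while he is offline.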

In order to prevent session initiation from failing due to lost messages, all messages that Alice sends to Bob before receiving a first message back are PreKeyMessages, so that Bob can complete the session even if only one of Alice’s messages makes its way to him. The exact details of how OMEMO works after the X3DH key exchange will be discussed in part 2 of this series.
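The rule that Alice keeps sending PreKeyMessages until Bob replies can be sketched as a tiny state machine. All class and field names below are hypothetical and chosen for illustration; they do not come from any OMEMO or Signal library.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PreKeyHeader:
    """Hypothetical container for the X3DH data Bob needs to rebuild S."""
    identity_key: int   # public IK_A
    ephemeral_key: int  # public EK_A
    prekey_id: int      # ID of the OPK_B that Alice used


@dataclass
class OutgoingMessage:
    ciphertext: bytes
    header: Optional[PreKeyHeader]  # set only on PreKeyMessages

    @property
    def is_prekey_message(self) -> bool:
        return self.header is not None


class InitiatorSession:
    """Alice's side: keep attaching the X3DH header until Bob has replied."""

    def __init__(self, header: PreKeyHeader):
        self.header = header
        self.peer_has_replied = False

    def wrap(self, ciphertext: bytes) -> OutgoingMessage:
        # Every message stays a PreKeyMessage until Bob answers, so any single
        # one that arrives lets him complete the session.
        header = None if self.peer_has_replied else self.header
        return OutgoingMessage(ciphertext, header)

    def on_message_from_peer(self) -> None:
        # A reply shows that Bob has completed X3DH on his side.
        self.peer_has_replied = True


session = InitiatorSession(PreKeyHeader(identity_key=1, ephemeral_key=2, prekey_id=42))
first = session.wrap(b"hello")
second = session.wrap(b"are you there?")
session.on_message_from_peer()
third = session.wrap(b"great")
print(first.is_prekey_message, second.is_prekey_message, third.is_prekey_message)
```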

X3DH Key Exchange TL;DR

X3DH utilizes PreKeys to allow session creation with offline users by performing four DH operations between different pairs of keys.

A subtle but important implementation difference between OMEMO and Signal is that the Signal server is able to manage the PreKeys for the user. That way it can make sure that every PreKey is only used once. OMEMO, on the other hand, relies solely on the XMPP server’s PubSub component, which does not support such behavior. Instead, it hands out a bundle of around 100 PreKeys, from which the initiating client picks one. This seems like a lot, but in reality the chances of a PreKey collision are pretty high (see the birthday problem).
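The birthday-problem claim can be checked with a few lines of arithmetic. This sketch assumes that each initiating client picks one of the published PreKeys uniformly at random and that Bob has not yet replaced any used PreKeys; real clients may behave differently.

```python
def collision_probability(sessions: int, prekeys: int = 100) -> float:
    """Chance that at least two of `sessions` initiators pick the same PreKey,
    assuming uniform random choice among `prekeys` published keys and no
    replacement of used keys in the meantime."""
    p_unique = 1.0
    for i in range(sessions):
        # The i-th initiator must avoid the i PreKeys already taken.
        p_unique *= (prekeys - i) / prekeys
    return 1.0 - p_unique


for n in (5, 10, 12, 13, 20):
    print(n, round(collision_probability(n), 3))
```

Under these assumptions, about a dozen sessions started while Bob is offline are already enough for roughly even odds of a collision.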

OMEMO does come with some countermeasures for the problems and attacks that arise from this situation, but this makes the protocol a little less appealing than the original Signal protocol.

For example, clients should keep used PreKeys around until they have finished catching up on missed messages, so that they can still decrypt messages sent in other sessions that were established using the same PreKey.
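The “keep used PreKeys until catch-up is over” rule can be sketched as a store that only deletes used PreKeys once the client signals that it has processed its backlog. The class and its methods are hypothetical, not part of any OMEMO library.

```python
from typing import Dict, Set


class PreKeyStore:
    """Hypothetical PreKey store that defers deleting used PreKeys until the
    client has finished catching up on messages that arrived while offline."""

    def __init__(self, prekeys: Dict[int, int]):
        self._prekeys = dict(prekeys)  # PreKey ID -> private key
        self._used: Set[int] = set()
        self._catching_up = True

    def use(self, prekey_id: int) -> int:
        """Return the private PreKey for an incoming PreKeyMessage."""
        private_key = self._prekeys[prekey_id]  # KeyError once it is deleted
        self._used.add(prekey_id)
        if not self._catching_up:
            self._delete_used()
        return private_key

    def finish_catch_up(self) -> None:
        """Called once all missed messages have been processed."""
        self._catching_up = False
        self._delete_used()

    def has(self, prekey_id: int) -> bool:
        return prekey_id in self._prekeys

    def _delete_used(self) -> None:
        for prekey_id in self._used:
            self._prekeys.pop(prekey_id, None)
        self._used.clear()


store = PreKeyStore({1: 111, 2: 222})
store.use(1)  # first session built on PreKey 1
store.use(1)  # a second, colliding session can still be decrypted
store.finish_catch_up()
print(store.has(1), store.has(2))
```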

Monday, 25 March 2019

Another Step to a Google-free Life

I watch a lot of YouTube videos. So much, that it starts to annoy me, how much of my free time I’m wasting by watching (admittedly very interesting) clips of a broad range of content creators.

Logging out of my Google account helped a little to keep my addiction at bay, as it appears to prevent the YouTube algorithm, which normally greets me with a broad set of perfectly selected videos, from recognizing me. But then again, I use Google to log in to one service or another, so it became annoying to log in and back out again all the time. At one point I decided to delete my YouTube history, which resulted in very bad predictions of what videos I might like. This helped for a short time, but the algorithm quickly returned to its merciless precision after a few days.

Today I decided that it’s time to leave Google behind completely. My Google Mail account was used only for online shopping anyway, so I figured, why not use a more privacy-respecting service instead? Self-hosting was not an option for me, as I only have a residential IP address on my Raspberry Pi, and I also heard that hosting a mail server is a huge pain.

A New Mail Account

So I created an account at the Berlin-based service mailbox.org. They offer email plus some cloud stuff like an office suite, storage etc., although I don’t think I’ll use any of the additional services (oh, they offer an XMPP account as well :P). The service is not free as in free beer, as it costs 1€ per month, but that’s a fair price in my opinion. All in all, it appears to be a good replacement for all the Google stuff.

As a next step, I went through the long list of websites and shops I have accounts on, looking for those registered with my Google Mail address. On all of them, the email address had to be changed to the new account.

Mail Extensions

Bonus tip: Mailbox.org supports so-called Mail Extensions (or Plus Extensions; I’m not really sure what they are called). This means that you can create a folder in your inbox, let’s say “fsfe”. Now you can change the mail address of your FSFE account to “username+fsfe@mailbox.org”. Mails from the FSFE will still go to your “username@mailbox.org” account, but they are automatically sorted into the fsfe folder. This is useful not only for sorting mails by sender, but also for finding out which of the many services you use messed up and leaked your mail address to those nasty spammers, so you can avoid that service in the future.
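The sorting rule behind plus addressing is simple enough to sketch: everything between the `+` and the `@` is the tag. The helper names and the `"INBOX"` default below are hypothetical; this only mirrors the behavior described above and is not mailbox.org’s actual filter logic.

```python
from typing import Optional, Tuple


def split_plus_address(address: str) -> Tuple[str, Optional[str], str]:
    """Split 'user+tag@domain' into (user, tag, domain); tag is None if absent."""
    local, _, domain = address.rpartition("@")
    user, plus, tag = local.partition("+")
    return user, (tag if plus else None), domain


def folder_for(address: str, default: str = "INBOX") -> str:
    """Pick a folder named after the tag, falling back to the default folder."""
    _, tag, _ = split_plus_address(address)
    return tag or default


print(split_plus_address("username+fsfe@mailbox.org"))
print(folder_for("username+fsfe@mailbox.org"), folder_for("username@mailbox.org"))
```

Because the tag survives when a service passes your address on, a spam mail sent to `username+someshop@…` points straight at the shop that leaked it.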

This trick also works for Google Mail by the way.

Deleting (most of) the Google Services

The last step logically would be to finally delete my Google account. However, I’m not entirely sure if I really changed all the important services over to the new account, so I’ll keep it for a short period of time (a month or so) to see if any more important mails arrive.

I did discover, though, that under the section “Delete Services or Account” you can see a list of all the services connected to your Google account. It is possible to delete those services individually, so I went ahead and deleted most of them, except Google Mail.

Additional bonus tip: I use NewPipe on my phone, which is a free/libre replacement for the YouTube app. It has a neat feature that lets you import your subscriptions from your YouTube account. That way I can still follow some of the creators, but in a more manual way (as I have to open the app on my phone, which I don’t often do). In my eyes, this is a good compromise.

I’m looking forward to going fully Google-free soon. I de-googled my phone ages ago, but for some reason I still held on to my Google account. This will be sorted out soon, though!

De-Googling your Phone?

By the way, if you are looking to de-google your phone, Mike Kuketz has a great series of blog posts about that topic (in German though):

Happy Hacking!

Update (27.05.2019)

Friday, 22 March 2019

How to create good SSH keys

A couple of years back we wrote a guide on how to create good OpenPGP/GnuPG keys, and now it is time to write a guide on SSH keys for much the same reasons: SSH key algorithms have evolved in recent years, and the keys generated by the default OpenSSH settings a few years ago are no longer considered state of the art. This guide is intended both for those completely new to SSH and for those who have been using it for years and want to make sure they are following the latest best practices.

Use OpenSSH 7 or later

Related to SSH keys, there have been some relevant changes in versions 5.7, 6.5 and 7.0. The latest version is 7.9. You should be running at least 7.0. Current Debian stable (“Stretch”) shipped version 7.4 and, for example, Ubuntu 16.04 (“Xenial”) shipped 7.2, so nobody should be running anything older than these on their laptop.

Generate Ed25519 keys

With a recent version of OpenSSH, simply run ssh-keygen -t ed25519. This will create a private and public key pair at ~/.ssh/id_ed25519 (and ~/.ssh/id_ed25519.pub) using the Ed25519 algorithm, which is considered state of the art. Elliptic curve algorithms in general are sleek and efficient, and unlike the other well-known elliptic curve algorithm, ECDSA, Ed25519 does not depend on any suspicious NIST-defined constants. If you encounter a server that is very old and does not support Ed25519 keys, you might need a more traditional RSA key pair. A strong 4096-bit RSA key pair can be generated with ssh-keygen -t rsa -b 4096. Hopefully you won’t ever need to do that.

Running ssh-keygen will prompt for a passphrase. If your laptop is encrypted and well protected, you can omit the passphrase and gain some speed and convenience in your SSH commands.

Store the private key securely

Hopefully your laptop is well protected with full disk encryption etc., and you can trust that nobody other than yourself has access to your /home/<username>/.ssh directory. Hopefully you also have securely stored, encrypted backups of your laptop so that you can recover the .ssh directory if your laptop is lost or broken for any reason.

The SSH key files are stored as armored ASCII, which means that you could even print them on paper and store the printout in a real vault, just to be extra sure you never lose your private key (.ssh/id_ed25519).

The public key is indeed designed to be public

The public key .ssh/id_ed25519.pub, on the other hand, is meant to be public. Here is mine, for example:

~/.ssh$ cat id_ed25519.pub
ssh-ed25519 AAAAC3NzaC1lZDI1NTE5AAAAIMdBlrVoDupARk3pd1Q9sDImaGCxEalcFt7QTqBa36kH otto@XPS-13-9370

You can find a lot of public SSH keys, for example on GitHub, at the URL https://github.com/<username>.keys. You may also want to check out the excellent GitHub SSH usage docs.
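A public key line has three space-separated parts: the key type, a base64 blob and a free-form comment. The sketch below decodes the blob (a sequence of length-prefixed strings) and computes the SHA256 fingerprint in the format that `ssh-keygen -lf` prints. The function names are illustrative, not part of any library.

```python
import base64
import hashlib
import struct


def parse_openssh_pubkey(line: str):
    """Parse an OpenSSH public key line: '<type> <base64 blob> [comment]'."""
    key_type, b64_blob, *comment = line.split()
    blob = base64.b64decode(b64_blob)
    # The blob is a series of strings, each prefixed by a big-endian uint32 length.
    fields, offset = [], 0
    while offset < len(blob):
        (length,) = struct.unpack(">I", blob[offset:offset + 4])
        fields.append(blob[offset + 4:offset + 4 + length])
        offset += 4 + length
    if fields[0].decode() != key_type:
        raise ValueError("key type inside the blob does not match the prefix")
    return key_type, fields[1:], " ".join(comment)


def sha256_fingerprint(line: str) -> str:
    """SHA256 fingerprint: unpadded base64 of the SHA-256 hash of the blob."""
    blob = base64.b64decode(line.split()[1])
    digest = hashlib.sha256(blob).digest()
    return "SHA256:" + base64.b64encode(digest).decode().rstrip("=")


key = ("ssh-ed25519 AAAAC3NzaC1lZDI1NTE5AAAAIMdBlrVoDupARk3pd1Q9sDImaGCxEalcFt7QTqBa36kH "
       "otto@XPS-13-9370")
key_type, (public_bytes,), comment = parse_openssh_pubkey(key)
print(key_type, len(public_bytes), comment)
print(sha256_fingerprint(key))
```

For Ed25519, the blob holds nothing but the type name and the 32-byte public point, which is why publishing it is harmless.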

This public key is the one you will distribute to remote servers. Place it on the remote server in the ~/.ssh/authorized_keys file. When you connect, your laptop proves that it holds the matching private key by signing data from the connection, and the server verifies that signature with the public key, thus confirming that your connection is authorized.

On Linux machines, a shorthand to copy your SSH key to a remote server is to run ssh-copy-id remote.example.com. This will ask for a password the first time, but once the key is in place, your SSH key will be used instead and no password is asked for anymore.
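What ssh-copy-id achieves on the remote side can be approximated as “append the key to ~/.ssh/authorized_keys unless it is already there, and keep the file private”. The Python function below is a hypothetical sketch of that idea run against a local directory. It is not how ssh-copy-id is implemented, and it ignores lines that start with options such as `from="..."`.

```python
import os
import tempfile
from pathlib import Path


def install_public_key(pubkey_line: str, home: Path) -> bool:
    """Append a public key to home/.ssh/authorized_keys unless it is already there.

    Returns True if the key was added, False if it was already installed.
    """
    ssh_dir = home / ".ssh"
    ssh_dir.mkdir(mode=0o700, exist_ok=True)
    auth_keys = ssh_dir / "authorized_keys"
    key = pubkey_line.strip()
    current = auth_keys.read_text() if auth_keys.exists() else ""

    # Compare only "<type> <base64>", so a different comment is not a new key.
    wanted = key.split()[:2]
    if any(line.split()[:2] == wanted for line in current.splitlines()):
        return False

    # Avoid gluing the new key onto a last line that lacks a trailing newline.
    prefix = "" if current == "" or current.endswith("\n") else "\n"
    with auth_keys.open("a") as fh:
        fh.write(prefix + key + "\n")
    os.chmod(auth_keys, 0o600)  # owner-only, as sshd's StrictModes check expects
    return True


# Usage against a throwaway directory standing in for the remote $HOME.
demo_home = Path(tempfile.mkdtemp())
added = install_public_key("ssh-ed25519 AAAAexampleKey laptop", demo_home)
again = install_public_key("ssh-ed25519 AAAAexampleKey renamed", demo_home)
print(added, again)
```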

Best practices

Once you are familiar with SSH key usage basics, please adopt these policies:

- Disable password authentication altogether on the SSH servers you control. The fewer passwords there are, the fewer can leak or be forgotten. For passwords, less is indeed more. Only use keys for authentication on SSH connections.