Planet Python

Last update: December 25, 2022 07:41 AM UTC

December 24, 2022

Wyatt Baldwin

PDM vs Poetry

A few years back, I started using poetry to manage dependencies for all my projects. I ran into some minor issues early on but haven’t had any problems recently and prefer it to any of the other dependency management / packaging solutions I’ve used. Recently, I’ve started hearing about pdm and how it’s the bee’s knees. Earlier today, I did a search for “pdm vs poetry” and didn’t find much, so I thought I’d write something myself.

December 24, 2022 03:30 AM UTC

December 23, 2022

CodersLegacy

Setup Virtual Environment for Pyinstaller with Venv

In this Python tutorial, we will discuss how to optimize your Pyinstaller EXEs using Virtual Environments. We will be using the “venv” library to create the Virtual Environment for Pyinstaller. It is part of the standard library, so it already comes with standard Python installations (some Linux distributions package it separately).

We will walk you through the entire process, starting from “what” virtual environments are, “why” we need them and “how” to create one.

Understanding Pyinstaller

Let me start by telling you how Pyinstaller works. We all know that Pyinstaller creates a standalone EXE which bundles all the dependencies, allowing it to run on other computers with the same operating system, even if they do not have Python installed.

What a lot of people do not know, however, is “HOW” Pyinstaller does this.

Let me explain.

What Pyinstaller does is “freeze” your Python environment into what we call a “frozen” application. In non-technical terms, this means bundling everything from your Python environment that your program uses, like the Python interpreter, the libraries you are using, and other dependencies (e.g. DLLs or data files), into a single application.

Kind of like taking a “snapshot” of your program in its running state (with all the dependencies active) and saving it.

With this understanding, we can now safely explain the benefits of Virtual Environments.

What are Virtual Environments in Python?

Imagine for a moment that you have 100 libraries installed for Python on your device. You might think you do not have many, but the truth is that when you install a big library (e.g. Matplotlib), it downloads several other libraries along with it (as dependencies).

To check the currently installed libraries on our systems (excluding the ones included by default), run the following command.

pip list

It will give you something like the following output.

altgraph 0.17.3

astroid 2.12.9

async-generator 1.10

attrs 22.2.0

auto-py-to-exe 2.24.1

Automat 22.10.0

autopep8 1.7.0

Babel 2.10.3

beautifulsoup4 4.11.1

bottle 0.12.23

bottle-websocket 0.2.9

bs4 0.0.1

cad-to-shapely 0.3.1

cairocffi 1.3.0

CairoSVG 2.5.2

certifi 2022.12.7

cffi 1.15.1

chardet 4.0.0

colorama 0.4.5

constantly 15.1.0

cryptography 38.0.4

cssselect 1.2.0

cssselect2 0.6.0

cx-Freeze 6.13.1

cycler 0.11.0

Cython 0.29.32

defusedxml 0.7.1

dill 0.3.5.1

Eel 0.14.0

et-xmlfile 1.1.0

exceptiongroup 1.1.0

ezdxf 0.18

filelock 3.8.0

fonttools 4.34.4

future 0.18.2

geomdl 5.3.1

gevent 22.10.2

gevent-websocket 0.10.1

greenlet 2.0.1

h11 0.14.0

hyperlink 21.0.0

idna 2.10

incremental 22.10.0

isort 5.10.1

itemadapter 0.7.0

itemloaders 1.0.6

Jinja2 3.0.1

jmespath 1.0.1

kiwisolver 1.4.4

lazy-object-proxy 1.7.1

lief 0.12.3

lxml 4.9.1

MarkupSafe 2.1.1

matplotlib 3.5.3

mccabe 0.7.0

more-itertools 8.14.0

MouseInfo 0.1.3

mpmath 1.2.1

Nuitka 1.2.4

numexpr 2.8.3

numpy 1.23.1

openpyxl 3.0.10

ordered-set 4.1.0

outcome 1.2.0

packaging 21.3

pandas 1.4.3

pandastable 0.13.0

parsel 1.7.0

pefile 2022.5.30

Pillow 8.4.0

pip 22.2.1

platformdirs 2.5.2

Protego 0.2.1

pyasn1 0.4.8

pyasn1-modules 0.2.8

PyAutoGUI 0.9.53

pycodestyle 2.9.1

pycparser 2.21

PyDispatcher 2.0.6

pygal 3.0.0

pygame 2.1.2

pygame-menu 4.2.8

PyGetWindow 0.0.9

pyinstaller 5.6.2

pyinstaller-hooks-contrib 2022.13

pylint 2.15.2

PyMsgBox 1.0.9

PyMuPDF 1.20.2

pyOpenSSL 22.1.0

pyparsing 3.0.9

pyperclip 1.8.2

PyQt5 5.15.7

PyQt5-Qt5 5.15.2

PyQt5-sip 12.11.0

PyQt6 6.4.0

PyQt6-Qt6 6.4.1

PyQt6-sip 13.4.0

PyRect 0.2.0

PyScreeze 0.1.28

PySocks 1.7.1

pytest-check 1.0.6

python-dateutil 2.8.2

pytweening 1.0.4

pytz 2022.1

pywin32-ctypes 0.2.0

queuelib 1.6.2

requests 2.25.1

requests-file 1.5.1

rhino-shapley-interop 0.0.4

rhino3dm 7.15.0

scipy 1.9.0

Scrapy 2.7.1

sectionproperties 2.0.3

selenium 4.7.2

service-identity 21.1.0

setuptools 63.2.0

Shapely 1.8.2

six 1.16.0

sniffio 1.3.0

sortedcontainers 2.4.0

soupsieve 2.3.2.post1

sympy 1.10.1

tabulate 0.8.10

tinycss2 1.1.1

tkcalendar 1.6.1

tkdesigner 1.0.6

tkinter-tooltip 2.1.0

tldextract 3.4.0

toml 0.10.2

tomli 2.0.1

tomlkit 0.11.4

triangle 20220202

trio 0.22.0

trio-websocket 0.9.2

Twisted 22.10.0

twisted-iocpsupport 1.0.2

typing_extensions 4.3.0

urllib3 1.26.13

w3lib 2.1.1

webencodings 0.5.1

whichcraft 0.6.1

wrapt 1.14.1

wsproto 1.2.0

xlrd 2.0.1

zope.event 4.5.0

zope.interface 5.5.2

That was quite a long list, right? I don’t even recognize half of those libraries (they were installed as dependencies). And this is on a relatively new Python installation (3-4 months old). Running this on my old device might have given me double that amount.

Now you might have already put two-and-two together and realized the problem here.

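When we normally use Pyinstaller to bundle our applications, it follows the imports in our script, and big libraries such as Pandas or Matplotlib import many other packages, including optional ones that only get pulled in because they happen to be installed. As a result, the EXE can end up including a lot of libraries that our program never actually needs.

Now obviously this is a big problem, especially if you have several large libraries lying around which are not actually being used.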

The Solution?

Virtual Environments!

Now, one option would be to set up a new Python installation on your PC and only install the required packages (the ones you know are being used). But this is an extra hassle, and can cause issues with your current Python installation if you are not careful.

Instead, we use Virtual Environments, which basically create a “fresh copy” of your current Python version without any of the installed libraries. You can create as many virtual environments as you want!

We can then compile our Pyinstaller EXEs inside these virtual environments (just like we normally would). This time, the EXE will only include libraries from the small set installed in that environment.

It is actually recommended to have a virtual environment for each major project you have. This helps avoid library conflicts and keeps you in control of exactly which version of each library a project uses.

Version Control in Virtual Environments with Venv

For example, a common issue is when you install a new library “A” (unrelated to your application) that requires a dependency “B”, which is also required by library “C”.

Library “A” requires “B” to be at least version 1.3 (an arbitrary version number I picked), whereas library “C” only works with “B” up to version 1.2 (1.3 onwards is not supported).

Hence, we now have a conflict issue. There are many other scenarios like this under which problems can occur. This is just one of them.
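To make the conflict concrete, here is a toy model of the scenario above. The library names and version numbers are the hypothetical ones from the example, and versions are plain tuples rather than real package metadata. It shows that no single version of “B” satisfies both libraries, which is exactly the situation a separate environment per project avoids.

```python
# Hypothetical versions of dependency "B" that could be installed
CANDIDATE_VERSIONS_OF_B = [(1, 0), (1, 1), (1, 2), (1, 3), (1, 4)]

# Library "A" needs B >= 1.3, library "C" only works with B <= 1.2
constraints = {
    "A": lambda v: v >= (1, 3),
    "C": lambda v: v <= (1, 2),
}


def satisfying_versions(candidates, constraints):
    """Return the candidate versions that every constraint accepts."""
    return [v for v in candidates if all(check(v) for check in constraints.values())]


# One shared environment: nothing works for both libraries
print(satisfying_versions(CANDIDATE_VERSIONS_OF_B, constraints))  # []
# A separate environment for the project using "C" only has to satisfy "C"
print(satisfying_versions(CANDIDATE_VERSIONS_OF_B, {"C": constraints["C"]}))  # [(1, 0), (1, 1), (1, 2)]
```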

Virtual environments help isolate projects and dependencies into separate environments, minimizing the risk of conflict.

Creating a Pyinstaller Virtual Environment with Venv

Now for the actual implementation part of the tutorial. The first thing we will do is set up our virtual environment.

For users with Python added to PATH, run the following command.

python -m venv tutorial

In the above command, “tutorial” is the name of the virtual environment; you can name it whatever you like. Also pay attention to which folder you are running this command in, because the virtual environment will be created there.

For users who do not have Python added to PATH, you need to find the path to your Python installation. You can typically find it in a location like this:

C:\Users\CodersLegacy\AppData\Local\Programs\Python\Python310

To create a virtual environment, run the following command (swapping out “python” for the full path to your python.exe file):

C:\Users\CodersLegacy\AppData\Local\Programs\Python\Python310\python.exe -m venv tutorial
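The same environment can also be created from Python itself with the standard-library venv module. This is a minimal sketch: create_env is a hypothetical helper, and its with_pip=True default mirrors the command line, which installs pip into the new environment (venv.create on its own does not unless asked).

```python
import sys
import tempfile
import venv
from pathlib import Path


def create_env(env_dir, with_pip=True):
    """Create a virtual environment, similar to `python -m venv env_dir`.

    Returns the path of the environment's own Python interpreter.
    """
    venv.create(env_dir, with_pip=with_pip)
    # Windows keeps the interpreter in Scripts\, Linux and macOS use bin/
    if sys.platform == "win32":
        return Path(env_dir) / "Scripts" / "python.exe"
    return Path(env_dir) / "bin" / "python"


if __name__ == "__main__":
    # with_pip=False keeps this demo fast; use True for a real project
    with tempfile.TemporaryDirectory() as tmp:
        python_exe = create_env(Path(tmp) / "tutorial", with_pip=False)
        print(python_exe, "exists:", python_exe.exists())
```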

Activating the Virtual Environment

We aren’t done yet, though. The virtual environment needs to be activated first! If you completed the previous step, you should have a folder structure something like this.

-- virtual_envs_folder

    -- tutorial

virtual_envs_folder is simply the parent folder where we ran the previous commands to create the virtual environment.

We will now add a new Python file to the virtual_envs_folder (not the tutorial folder). So now our file structure is something like this.

-- virtual_envs_folder

    -- tutorial

    -- file.py

file.py is where all the code that we want to convert to a Pyinstaller EXE will go. Any supporting files, folders or libraries you have created can also be added here.

Now we need to run another command which will activate the virtual environment. The command can vary slightly depending on what terminal/console/OS you are using.

Command Prompt (Windows):

C:\Users\CodersLegacy\virtual_envs_folder> tutorial\Scripts\activate.bat

Windows PowerShell:

C:\Users\CodersLegacy\virtual_envs_folder> tutorial\Scripts\Activate.ps1

Linux/macOS:

$ source tutorial/bin/activate

Congratulations, your virtual environment is now activated and ready to use! Your command line will now point to the Python installation inside the virtual environment instead of the main Python installation. (This lasts until you run deactivate or close the command line/terminal.)

Your virtual environment folder (tutorial) should look something like this:

-- tutorial

    -- Include

    -- Lib

    -- Scripts

-- file.py

The two important folders here are “Lib” and “Scripts”. “Lib” is where all of our installed libraries will go, and “Scripts” is where our python.exe file is. (On Linux and macOS, these folders are called lib and bin.)

Your command prompt/terminal should also look something like this:

(tutorial) C:\Users\CodersLegacy\virtual_envs_folder>

Notice the “(tutorial)” that now appears at the start of the prompt. If it is there, your venv virtual environment is ready to use with Python and Pyinstaller.
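If you are ever unsure whether a script is really running inside the virtual environment, Python can tell you: venv sets sys.prefix to the environment folder, while sys.base_prefix keeps pointing at the original installation. A small sketch, where in_virtual_env is our own helper name:

```python
import sys


def in_virtual_env():
    """True when the running interpreter belongs to a venv-created environment."""
    # Inside a venv, sys.prefix is the environment folder and
    # sys.base_prefix is the Python installation it was created from
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("Inside a virtual environment:", in_virtual_env())
    print("Interpreter:", sys.executable)
```

Run with the activated environment's python, it should print True and an interpreter path inside the tutorial folder.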

Setting up our Application in the Virtual Environment

Now we will begin installing the libraries we need. Here is the sample code we will be using in our file.py file.

import tkinter as tk
from tkinter.filedialog import askopenfilename, asksaveasfile
import numpy as np
from matplotlib.figure import Figure
from matplotlib.backends.backend_tkagg import FigureCanvasTkAgg
from matplotlib.path import Path
from matplotlib.patches import PathPatch
from matplotlib.collections import PatchCollection
from pandastable import Table
import pandas as pd


class Window():
    def __init__(self, master):
        self.main = tk.Frame(master, background="white")

        # Two side-by-side frames: the table (rightframe) and the plot (leftframe)
        self.rightframe = tk.Frame(self.main, background="white")
        self.rightframe.pack(side=tk.LEFT)
        self.leftframe = tk.Frame(self.main, background="white")
        self.leftframe.pack(side=tk.LEFT)

        # Row of buttons above the table
        self.rightframeheader = tk.Frame(self.rightframe, background="white")
        self.button1 = tk.Button(self.rightframeheader, text='Import CSV', command=self.import_csv, width=10)
        self.button1.pack(pady=(0, 5), padx=(10, 0), side=tk.LEFT)
        self.button2 = tk.Button(self.rightframeheader, text='Clear', command=self.clear, width=10)
        self.button2.pack(padx=(10, 0), pady=(0, 5), side=tk.LEFT)
        self.button3 = tk.Button(self.rightframeheader, text='Generate Plot', command=self.generatePlot, width=10)
        self.button3.pack(pady=(0, 5), padx=(10, 0), side=tk.LEFT)
        self.rightframeheader.pack()

        # pandastable widget that displays the imported data
        self.tableframe = tk.Frame(self.rightframe, highlightbackground="blue", highlightthickness=5)
        self.table = Table(self.tableframe, dataframe=pd.DataFrame(), width=300, height=400)
        self.table.show()
        self.tableframe.pack()

        # Matplotlib figure embedded in the Tkinter window
        self.canvas = tk.Frame(self.leftframe)
        self.fig = Figure()
        self.ax = self.fig.add_subplot(111)
        self.graph = FigureCanvasTkAgg(self.fig, self.canvas)
        self.graph.draw()
        self.graph.get_tk_widget().pack()
        self.canvas.pack(padx=(20, 0))

        self.main.pack()

    def import_csv(self):
        types = [("CSV files", "*.csv"), ("Excel files", "*.xlsx"), ("Text files", "*.txt"), ("All files", "*.*")]
        csv_file_path = askopenfilename(initialdir=".", title="Open File", filetypes=types)
        if not csv_file_path:
            return  # the user closed the dialog without choosing a file

        try:
            tempdf = pd.read_csv(csv_file_path)
        except Exception:
            # Not readable as CSV/text, so try it as an Excel workbook
            tempdf = pd.read_excel(csv_file_path)

        self.table.model.df = tempdf
        # Lower-case the column names so generatePlot() can find "x" and "y"
        self.table.model.df.columns = self.table.model.df.columns.str.lower()
        self.table.redraw()
        self.generatePlot()

    def clear(self):
        self.table.model.df = pd.DataFrame()
        self.table.redraw()
        self.ax.clear()
        self.graph.draw_idle()  # redraw so the cleared axes actually show up

    def generatePlot(self):
        self.ax.clear()
        if not self.table.model.df.empty:
            df = self.table.model.df.copy()
            # Expects the data to contain "x" and "y" columns
            self.ax.plot(pd.to_numeric(df["x"]), pd.to_numeric(df["y"]), color='tab:blue', picker=True, pickradius=5)
        self.graph.draw_idle()


root = tk.Tk()
window = Window(root)
root.mainloop()

Now you have two options. You can either install each library individually using “pip”, or you can create a requirements.txt file. We will go with the latter option, because it’s the recommended approach for keeping the correct versions.

First create a new requirements.txt file in the parent folder of your virtual environment. File structure should look like this:

-- virtual_envs_folder

    -- tutorial

    -- file.py

    -- requirements.txt

Now we will open a new command prompt (not the one with our virtual environment activated), and use it to check the versions of each of our required libraries.

We can check the version of an installed library using pip show <library-name>. Running pip show matplotlib gives us the following output (trimmed to the relevant lines).

Name: matplotlib

Version: 3.5.3

We will now add this information to our requirements.txt file. For the sample code we provided above, our file will look like this:

matplotlib==3.5.3
sympy==1.10.1
pandastable==0.13.0
pandas==1.4.3
numpy==1.23.1
scipy==1.9.0
pyinstaller==5.6.2
auto-py-to-exe==2.24.1

Now we will run the following command to have them all installed in one go.

pip install -r requirements.txt

Using Pyinstaller in our Venv Virtual Environment

And now, we are FINALLY done with all the setup!

But don’t forget why we are doing all of this! As a test, try running pip list again, and see how many libraries get printed out this time. It should be a lot fewer than what we had before.
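If you prefer checking from Python, the standard-library importlib.metadata module can list the distributions installed for the current interpreter. In this sketch, installed_requirements is a hypothetical helper that prints them in the same name==version format used by requirements.txt, so running it with the virtual environment's python should give a far shorter list than running it with your main installation.

```python
from importlib.metadata import distributions


def installed_requirements():
    """Return sorted 'name==version' lines for every distribution visible to this interpreter."""
    lines = set()  # a set, in case the same distribution is found on two paths
    for dist in distributions():
        name = dist.metadata.get("Name")
        if name:  # skip broken installs whose metadata has no Name
            lines.add(f"{name}=={dist.version}")
    return sorted(lines, key=str.lower)


if __name__ == "__main__":
    pins = installed_requirements()
    print(len(pins), "packages installed")
    for line in pins:
        print(line)
```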

All we need to do now is run the pyinstaller command like we would do normally.

pyinstaller --noconsole --onefile file.py

This will generate our Pyinstaller EXE inside a dist folder in the directory where you ran the command (here, virtual_envs_folder). Now observe the size difference and let us know down in the comments section how much of an improvement you got!

There may also be a slight improvement in startup time, since there are fewer modules to load (and, with --onefile, less to unpack).

If you are looking to further reduce the size of your EXE, the UPX packer is a great way to bring it down substantially. Check out our tutorial on it!

This marks the end of the “Setup Virtual Environment for Pyinstaller with Venv” Tutorial. Any suggestions or contributions for CodersLegacy are more than welcome. Questions regarding the tutorial content can be asked in the comments section below.

The post Setup Virtual Environment for Pyinstaller with Venv appeared first on CodersLegacy.

December 23, 2022 08:13 PM UTC

Python for Beginners

Use the Pandas fillna Method to Fill NaN Values

Handling NaN values while analyzing data is an important task. The pandas module in python provides us with the fillna() method to fill NaN values. In this article, we will discuss how to use the pandas fillna method to fill NaN values in Python.

- The fillna() Method

- Use Pandas Fillna to Fill NaN Values in the Entire Dataframe

- Fill Different Values in Each Column in Pandas

- Only Fill the First N Null Values in Each Column

- Only Fill the First N Null Values in Each Row

- Pandas Fillna With the Last Valid Observation

- Pandas Fillna With the Next Valid Observation

- Pandas Fillna Inplace

- Conclusion

The fillna() Method

You can fill NaN values in a pandas dataframe using the fillna() method. It has the following syntax.

DataFrame.fillna(value=None, *, method=None, axis=None, inplace=False, limit=None, downcast=None)

Here,

- The value parameter takes the value that replaces the NaN values. You can also pass a Python dictionary or a series to the value parameter. Here, the dictionary should contain the column names of the dataframe as its keys and the value that needs to be filled in each column as the associated values. Similarly, the pandas series should contain the column names of the dataframe as its index and the replacement value for each column as the associated value.

- The method parameter is used to fill NaN values from neighboring values instead of a fixed value. You can assign the literal “ffill”, “pad”, “bfill”, or “backfill” to it: “ffill” and “pad” propagate the last valid observation forward, while “bfill” and “backfill” use the next valid observation. You cannot pass both value and method; pandas raises a ValueError if you do. (In pandas 2.1 and later, the method parameter is deprecated in favor of the ffill() and bfill() methods.)

- The axis parameter specifies the axis along which missing values are filled. With axis set to 0 or “index”, values are filled down each column; with axis set to 1 or “columns”, values are filled across each row.

- By default, the pandas fillna method doesn’t modify the original dataframe; it returns a new dataframe after execution. To modify the original dataframe on which the fillna() method is invoked, you can set the inplace parameter to True.

- If the method parameter is specified, the limit parameter specifies the maximum number of consecutive NaN values to forward/backward fill. In other words, if there is a gap with more than limit consecutive NaNs, it will only be partially filled. If the method parameter is not specified, the limit parameter is the maximum number of entries along the entire axis where NaNs will be filled. It must be greater than 0 if not None.

- The downcast parameter takes a dictionary mapping items to the data types they should be downcast to, if possible, or the string “infer” to let pandas pick a suitable equivalent type (e.g. float64 to int64).
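The two meanings of limit described above are easiest to see on a small Series. This sketch uses made-up data and Series.ffill(), which performs the same forward fill as method="ffill".

```python
import numpy as np
import pandas as pd

s = pd.Series([1.0, np.nan, np.nan, 4.0, np.nan])

# With a fill value, limit caps the total number of NaNs filled along the axis
print(s.fillna(0, limit=1).tolist())  # [1.0, 0.0, nan, 4.0, nan]

# With a forward fill, limit caps how many consecutive NaNs are filled in each gap
print(s.ffill(limit=1).tolist())      # [1.0, 1.0, nan, 4.0, 4.0]
```

The last NaN is filled by ffill() because it starts a new gap, while fillna(0, limit=1) has already used up its single fill on the first NaN.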

Use Pandas Fillna to Fill NaN Values in the Entire Dataframe

To fill NaN values in a pandas dataframe using the fillna method, you pass the replacement value of the NaN value to the fillna() method as shown in the following example.

import pandas as pd

import numpy as np

x=pd.read_csv("grade2.csv")

print("The original dataframe is:")

print(x)

x=x.fillna(0)

print("The modified dataframe is:")

print(x)

Output:

The original dataframe is:

Class Roll Name Marks Grade

0 2.0 27.0 Harsh 55.0 C

1 2.0 23.0 Clara 78.0 B

2 3.0 33.0 NaN NaN NaN

3 3.0 34.0 Amy 88.0 A

4 3.0 15.0 NaN 78.0 B

5 3.0 27.0 Aditya 55.0 C

6 NaN NaN NaN NaN NaN

7 3.0 23.0 Radheshyam 78.0 B

8 3.0 11.0 Bobby 50.0 NaN

9 NaN NaN NaN NaN NaN

10 3.0 15.0 Lokesh 88.0 A

The modified dataframe is:

Class Roll Name Marks Grade

0 2.0 27.0 Harsh 55.0 C

1 2.0 23.0 Clara 78.0 B

2 3.0 33.0 0 0.0 0

3 3.0 34.0 Amy 88.0 A

4 3.0 15.0 0 78.0 B

5 3.0 27.0 Aditya 55.0 C

6 0.0 0.0 0 0.0 0

7 3.0 23.0 Radheshyam 78.0 B

8 3.0 11.0 Bobby 50.0 0

9 0.0 0.0 0 0.0 0

10 3.0 15.0 Lokesh 88.0 A

In the above example, we have passed the value 0 to the fillna() method. Hence, all the NaN values in the input dataframe are replaced by 0.

This approach isn’t very practical, as different columns have different data types: here, the text columns Name and Grade also receive the number 0. So, we can choose to fill different values in different columns to replace the null values.

Fill Different Values in Each Column in Pandas

Instead of filling all the NaN values with the same value, you can also replace the NaN value in each column with a specific value. For this, we need to pass a dictionary containing column names as its keys and the values to be filled in the columns as the associated values to the fillna() method. You can observe this in the following example.

import pandas as pd

import numpy as np

x=pd.read_csv("grade2.csv")

print("The original dataframe is:")

print(x)

x=x.fillna({"Class":1,"Roll":100,"Name":"PFB","Marks":0,"Grade":"F"})

print("The modified dataframe is:")

print(x)

Output:

The original dataframe is:

Class Roll Name Marks Grade

0 2.0 27.0 Harsh 55.0 C

1 2.0 23.0 Clara 78.0 B

2 3.0 33.0 NaN NaN NaN

3 3.0 34.0 Amy 88.0 A

4 3.0 15.0 NaN 78.0 B

5 3.0 27.0 Aditya 55.0 C

6 NaN NaN NaN NaN NaN

7 3.0 23.0 Radheshyam 78.0 B

8 3.0 11.0 Bobby 50.0 NaN

9 NaN NaN NaN NaN NaN

10 3.0 15.0 Lokesh 88.0 A

The modified dataframe is:

Class Roll Name Marks Grade

0 2.0 27.0 Harsh 55.0 C

1 2.0 23.0 Clara 78.0 B

2 3.0 33.0 PFB 0.0 F

3 3.0 34.0 Amy 88.0 A

4 3.0 15.0 PFB 78.0 B

5 3.0 27.0 Aditya 55.0 C

6 1.0 100.0 PFB 0.0 F

7 3.0 23.0 Radheshyam 78.0 B

8 3.0 11.0 Bobby 50.0 F

9 1.0 100.0 PFB 0.0 F

10 3.0 15.0 Lokesh 88.0 A

In the above example, we have passed the dictionary {"Class" :1, "Roll": 100, "Name": "PFB", "Marks" : 0, "Grade": "F" } to the fillna() method as input. Due to this, the NaN values in the "Class" column are replaced by 1, the NaN values in the "Roll" column are replaced by 100, the NaN values in the "Name" column are replaced by "PFB", and so on. Thus, when we pass the column names of the dataframe as keys and Python literals as the associated values, the NaN values in each column are replaced according to the input dictionary.

Instead of giving all the column names as keys in the input dictionary, you can also choose to ignore some. In this case, the NaN values in the columns that are not present in the input dictionary are not considered for replacement. You can observe this in the following example.

import pandas as pd

import numpy as np

x=pd.read_csv("grade2.csv")

print("The original dataframe is:")

print(x)

x=x.fillna({"Class":1,"Roll":100,"Name":"PFB","Marks":0})

print("The modified dataframe is:")

print(x)

Output:

The original dataframe is:

Class Roll Name Marks Grade

0 2.0 27.0 Harsh 55.0 C

1 2.0 23.0 Clara 78.0 B

2 3.0 33.0 NaN NaN NaN

3 3.0 34.0 Amy 88.0 A

4 3.0 15.0 NaN 78.0 B

5 3.0 27.0 Aditya 55.0 C

6 NaN NaN NaN NaN NaN

7 3.0 23.0 Radheshyam 78.0 B

8 3.0 11.0 Bobby 50.0 NaN

9 NaN NaN NaN NaN NaN

10 3.0 15.0 Lokesh 88.0 A

The modified dataframe is:

Class Roll Name Marks Grade

0 2.0 27.0 Harsh 55.0 C

1 2.0 23.0 Clara 78.0 B

2 3.0 33.0 PFB 0.0 NaN

3 3.0 34.0 Amy 88.0 A

4 3.0 15.0 PFB 78.0 B

5 3.0 27.0 Aditya 55.0 C

6 1.0 100.0 PFB 0.0 NaN

7 3.0 23.0 Radheshyam 78.0 B

8 3.0 11.0 Bobby 50.0 NaN

9 1.0 100.0 PFB 0.0 NaN

10 3.0 15.0 Lokesh 88.0 A

In this example, we haven’t included the "Grade" column in the input dictionary passed to the fillna() method. Hence, the NaN values in the "Grade" column are not replaced by any other value.
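The value parameter also accepts a pandas series indexed by column name, and a common use of that is filling numeric columns with their own means. The small dataframe below is made-up stand-in data rather than grade2.csv. Because the series returned by mean(numeric_only=True) only contains numeric columns, the text column is left untouched, just like the "Grade" column above.

```python
import numpy as np
import pandas as pd

# Hypothetical stand-in data, not the article's grade2.csv
df = pd.DataFrame({
    "Name": ["Harsh", None, "Amy"],
    "Marks": [55.0, np.nan, 85.0],
    "Roll": [27.0, 23.0, np.nan],
})

# A Series indexed by column name: Marks -> 70.0, Roll -> 25.0
column_means = df.mean(numeric_only=True)

# Only columns present in the Series index are filled; "Name" keeps its missing value
filled = df.fillna(column_means)
print(filled)
```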

Only Fill the First N Null Values in Each Column

Instead of filling all NaN values in each column, you can also limit the number of NaN values to be filled in each column. For this, you can pass the maximum number of values to be filled as input argument to the limit parameter in the fillna() method as shown below.

import pandas as pd

import numpy as np

x=pd.read_csv("grade2.csv")

print("The original dataframe is:")

print(x)

x=x.fillna(0, limit=3)

print("The modified dataframe is:")

print(x)

Output:

The original dataframe is:

Class Roll Name Marks Grade

0 2.0 27.0 Harsh 55.0 C

1 2.0 23.0 Clara 78.0 B

2 3.0 33.0 NaN NaN NaN

3 3.0 34.0 Amy 88.0 A

4 3.0 15.0 NaN 78.0 B

5 3.0 27.0 Aditya 55.0 C

6 NaN NaN NaN NaN NaN

7 3.0 23.0 Radheshyam 78.0 B

8 3.0 11.0 Bobby 50.0 NaN

9 NaN NaN NaN NaN NaN

10 3.0 15.0 Lokesh 88.0 A

The modified dataframe is:

Class Roll Name Marks Grade

0 2.0 27.0 Harsh 55.0 C

1 2.0 23.0 Clara 78.0 B

2 3.0 33.0 0 0.0 0

3 3.0 34.0 Amy 88.0 A

4 3.0 15.0 0 78.0 B

5 3.0 27.0 Aditya 55.0 C

6 0.0 0.0 0 0.0 0

7 3.0 23.0 Radheshyam 78.0 B

8 3.0 11.0 Bobby 50.0 0

9 0.0 0.0 NaN 0.0 NaN

10 3.0 15.0 Lokesh 88.0 A

In the above example, we have set the limit parameter to 3. Due to this, only the first three NaN values from each column are replaced by 0.

Only Fill the First N Null Values in Each Row

To fill only the first N null values in each row of the dataframe, you can pass the maximum number of values to be filled as an input argument to the limit parameter in the fillna() method. Additionally, you need to specify that you want to fill along the rows by setting the axis parameter to 1. You can observe this in the following example.

import pandas as pd

import numpy as np

x=pd.read_csv("grade2.csv")

print("The original dataframe is:")

print(x)

x=x.fillna(0, limit=2,axis=1)

print("The modified dataframe is:")

print(x)

Output:

The original dataframe is:

Class Roll Name Marks Grade

0 2.0 27.0 Harsh 55.0 C

1 2.0 23.0 Clara 78.0 B

2 3.0 33.0 NaN NaN NaN

3 3.0 34.0 Amy 88.0 A

4 3.0 15.0 NaN 78.0 B

5 3.0 27.0 Aditya 55.0 C

6 NaN NaN NaN NaN NaN

7 3.0 23.0 Radheshyam 78.0 B

8 3.0 11.0 Bobby 50.0 NaN

9 NaN NaN NaN NaN NaN

10 3.0 15.0 Lokesh 88.0 A

The modified dataframe is:

Class Roll Name Marks Grade

0 2.0 27.0 Harsh 55.0 C

1 2.0 23.0 Clara 78.0 B

2 3.0 33.0 0.0 0.0 NaN

3 3.0 34.0 Amy 88.0 A

4 3.0 15.0 0 78.0 B

5 3.0 27.0 Aditya 55.0 C

6 0.0 0.0 NaN NaN NaN

7 3.0 23.0 Radheshyam 78.0 B

8 3.0 11.0 Bobby 50.0 0

9 0.0 0.0 NaN NaN NaN

10 3.0 15.0 Lokesh 88.0 A

In the above example, we have set the limit parameter to 2 and the axis parameter to 1. Hence, at most two NaN values in each row are replaced by 0 when the fillna() method is executed.

Pandas Fillna With the Last Valid Observation

Instead of specifying a new value, you can also fill NaN values using the existing values. For instance, you can fill the Null values using the last valid observation by setting the method parameter to “ffill” as shown below.

import pandas as pd

import numpy as np

x=pd.read_csv("grade2.csv")

print("The original dataframe is:")

print(x)

x=x.fillna(method="ffill")

print("The modified dataframe is:")

print(x)

Output:

The original dataframe is:

Class Roll Name Marks Grade

0 2.0 27.0 Harsh 55.0 C

1 2.0 23.0 Clara 78.0 B

2 3.0 33.0 NaN NaN NaN

3 3.0 34.0 Amy 88.0 A

4 3.0 15.0 NaN 78.0 B

5 3.0 27.0 Aditya 55.0 C

6 NaN NaN NaN NaN NaN

7 3.0 23.0 Radheshyam 78.0 B

8 3.0 11.0 Bobby 50.0 NaN

9 NaN NaN NaN NaN NaN

10 3.0 15.0 Lokesh 88.0 A

The modified dataframe is:

Class Roll Name Marks Grade

0 2.0 27.0 Harsh 55.0 C

1 2.0 23.0 Clara 78.0 B

2 3.0 33.0 Clara 78.0 B

3 3.0 34.0 Amy 88.0 A

4 3.0 15.0 Amy 78.0 B

5 3.0 27.0 Aditya 55.0 C

6 3.0 27.0 Aditya 55.0 C

7 3.0 23.0 Radheshyam 78.0 B

8 3.0 11.0 Bobby 50.0 B

9 3.0 11.0 Bobby 50.0 B

10 3.0 15.0 Lokesh 88.0 A

In this example, we have set the method parameter to "ffill". Hence, whenever a NaN value is encountered, the fillna() method fills that cell with the most recent non-null value above it in the same column.
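The same forward fill is also available as the dedicated ffill() method. One detail the example above doesn't show is what happens to a NaN in the very first row: there is no earlier observation to copy, so it stays NaN. A small sketch with made-up data:

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({"Grade": [np.nan, "B", np.nan, "A", np.nan]})

# ffill() performs the same operation as fillna(method="ffill")
result = df.ffill()

# The first row has nothing above it, so it remains missing
print(result["Grade"].tolist())  # [nan, 'B', 'B', 'A', 'A']
```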

Pandas Fillna With the Next Valid Observation

You can fill the Null values using the next valid observation by setting the method parameter to “bfill” as shown below.

import pandas as pd

import numpy as np

x=pd.read_csv("grade2.csv")

print("The original dataframe is:")

print(x)

x=x.fillna(method="bfill")

print("The modified dataframe is:")

print(x)

Output:

The original dataframe is:

Class Roll Name Marks Grade

0 2.0 27.0 Harsh 55.0 C

1 2.0 23.0 Clara 78.0 B

2 3.0 33.0 NaN NaN NaN

3 3.0 34.0 Amy 88.0 A

4 3.0 15.0 NaN 78.0 B

5 3.0 27.0 Aditya 55.0 C

6 NaN NaN NaN NaN NaN

7 3.0 23.0 Radheshyam 78.0 B

8 3.0 11.0 Bobby 50.0 NaN

9 NaN NaN NaN NaN NaN

10 3.0 15.0 Lokesh 88.0 A

The modified dataframe is:

Class Roll Name Marks Grade

0 2.0 27.0 Harsh 55.0 C

1 2.0 23.0 Clara 78.0 B

2 3.0 33.0 Amy 88.0 A

3 3.0 34.0 Amy 88.0 A

4 3.0 15.0 Aditya 78.0 B

5 3.0 27.0 Aditya 55.0 C

6 3.0 23.0 Radheshyam 78.0 B

7 3.0 23.0 Radheshyam 78.0 B

8 3.0 11.0 Bobby 50.0 A

9 3.0 15.0 Lokesh 88.0 A

10 3.0 15.0 Lokesh 88.0 A

In this example, we have set the method parameter to "bfill". Hence, whenever a NaN value is encountered, the fillna() method fills that cell with the next non-null value below it in the same column.
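Backward filling has the mirror-image limitation: a NaN in the last row has no later observation to copy. In the dataset above the last row happens to be complete, but if it were not, chaining bfill() with ffill() is one way to make sure every gap gets a value. A sketch on made-up data:

```python
import numpy as np
import pandas as pd

s = pd.Series([np.nan, 2.0, np.nan, 5.0, np.nan])

# bfill() copies the next valid value upward; the trailing NaN has none
print(s.bfill().tolist())          # [2.0, 2.0, 5.0, 5.0, nan]

# A forward fill afterwards handles whatever is left at the end
print(s.bfill().ffill().tolist())  # [2.0, 2.0, 5.0, 5.0, 5.0]
```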

Pandas Fillna Inplace

By default, the fillna() method returns a new dataframe after execution. To modify the existing dataframe instead of creating a new one, you can set the inplace parameter to True in the fillna() method as shown below.

import pandas as pd

import numpy as np

x=pd.read_csv("grade2.csv")

print("The original dataframe is:")

print(x)

x.fillna(method="bfill",inplace=True)

print("The modified dataframe is:")

print(x)

Output:

The original dataframe is:

Class Roll Name Marks Grade

0 2.0 27.0 Harsh 55.0 C

1 2.0 23.0 Clara 78.0 B

2 3.0 33.0 NaN NaN NaN

3 3.0 34.0 Amy 88.0 A

4 3.0 15.0 NaN 78.0 B

5 3.0 27.0 Aditya 55.0 C

6 NaN NaN NaN NaN NaN

7 3.0 23.0 Radheshyam 78.0 B

8 3.0 11.0 Bobby 50.0 NaN

9 NaN NaN NaN NaN NaN

10 3.0 15.0 Lokesh 88.0 A

The modified dataframe is:

Class Roll Name Marks Grade

0 2.0 27.0 Harsh 55.0 C

1 2.0 23.0 Clara 78.0 B

2 3.0 33.0 Amy 88.0 A

3 3.0 34.0 Amy 88.0 A

4 3.0 15.0 Aditya 78.0 B

5 3.0 27.0 Aditya 55.0 C

6 3.0 23.0 Radheshyam 78.0 B

7 3.0 23.0 Radheshyam 78.0 B

8 3.0 11.0 Bobby 50.0 A

9 3.0 15.0 Lokesh 88.0 A

10 3.0 15.0 Lokesh 88.0 A

In this example, we have set the inplace parameter to True in the fillna() method. Hence, the input dataframe is modified.
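Notice that the example above does not assign the result back to x. In the pandas versions current when this article was written (1.x, and still in 2.x), fillna(..., inplace=True) modifies the dataframe and returns None, so writing x = x.fillna(..., inplace=True) would replace your dataframe with None. A small sketch with made-up data:

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({"Marks": [55.0, np.nan, 88.0]})

# Modifies df directly; in pandas 1.x and 2.x the return value is None
result = df.fillna(0, inplace=True)
print(result)

# The original dataframe now holds the filled values
print(df["Marks"].tolist())  # [55.0, 0.0, 88.0]
```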

Conclusion

In this article, we have discussed how to use the pandas fillna method to fill NaN values in Python.

To learn more about Python programming, you can read this article on how to sort a pandas dataframe. You might also like this article on how to drop columns from a pandas dataframe.

I hope you enjoyed reading this article. Stay tuned for more informative articles.

Happy Learning!

The post Use the Pandas fillna Method to Fill NaN Values appeared first on PythonForBeginners.com.

December 23, 2022 02:00 PM UTC

Real Python

The Real Python Podcast – Episode #138: 2022 Real Python Tutorial & Video Course Wrap Up

It's been another year of changes at Real Python! The Real Python team has written, edited, curated, illustrated, and produced a mountain of Python material this year. We added some new members to the team, updated the site's features, and created new styles of tutorials and video courses.


December 23, 2022 12:00 PM UTC

December 22, 2022

Sumana Harihareswara - Cogito, Ergo Sumana

Speech-to-text with Whisper: How I Use It & Why


December 22, 2022 09:41 PM UTC

Peter Bengtsson

Pip-Outdated.py - a script to compare requirements.in with the output of pip list --outdated

Simply by posting this, there's a big chance you'll say "Hey! Didn't you know there's already a well-known script that does this? Better." Or you'll say "Hey! That'll save me hundreds of seconds per year!"

The problem

Suppose you have a requirements.in file that is used by pip-compile to generate the requirements.txt that you actually install in your Dockerfile or whatever server deployment. The requirements.in is meant to be the human-readable file and the requirements.txt is for the computers. You manually edit the version numbers in the requirements.in and then run pip-compile --generate-hashes requirements.in to generate a new requirements.txt. But the "first-class" packages in the requirements.in aren't the only packages that get installed. For example:

▶ cat requirements.in | rg '==' | wc -l

54

▶ cat requirements.txt | rg '==' | wc -l

102

In other words, in this particular example, there are 48 (102 - 54) "second-class" packages that get installed. There might actually be more stuff installed that you didn't describe. That's why pip list | wc -l can be even higher. For example, you might have locally and manually done pip install ipython for a nicer interactive prompt.

The solution

The command pip list --outdated lists every installed package that has a newer release available. That covers everything in requirements.txt (and anything else you've installed), not just the packages in requirements.in. To mitigate that, I wrote a quick Python CLI script that combines the output of pip list --outdated with the packages mentioned in requirements.in:

#!/usr/bin/env python

import subprocess
import sys


def main(*args):
    # Use ./requirements.in unless another path is given on the command line
    requirements_in = args[0] if args else "requirements.in"

    # Map each pinned "first-class" package (lowercased) to its pinned version
    required = {}
    with open(requirements_in) as f:
        for line in f:
            if "==" in line:
                package, version = line.strip().split("==", 1)
                # Drop extras, e.g. "celery[redis]" -> "celery"
                package = package.split("[")[0].strip().lower()
                required[package] = version

    res = subprocess.run(["pip", "list", "--outdated"], capture_output=True)
    if res.returncode:
        raise Exception(res.stderr)
    lines = res.stdout.decode("utf-8").splitlines()

    # Keep only the outdated packages that appear in requirements.in
    # (pip may print names with different capitalization, e.g. "Django")
    relevant = [
        line for line in lines if line.split() and line.split()[0].lower() in required
    ]
    longest_package_name = max([len(x.split()[0]) for x in relevant]) if relevant else 0

    for line in relevant:
        p, installed, possible, *_ = line.split()
        print(
            p.ljust(longest_package_name + 2),
            "INSTALLED:",
            installed.ljust(9),
            "POSSIBLE:",
            possible,
        )


if __name__ == "__main__":
    sys.exit(main(*sys.argv[1:]))
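If parsing pip's column output feels fragile, pip can also report outdated packages as JSON with `pip list --outdated --format=json`, where each entry carries `name`, `version`, and `latest_version` keys. Below is a minimal sketch of the same idea built on that, using only the standard library; the helper names are hypothetical and not from the original gist. Running pip as `sys.executable -m pip` makes sure the script queries the same interpreter (and virtualenv) it runs under.

```python
import json
import re
import subprocess
import sys


def normalize(name):
    # PEP 503 normalization: "Foo_Bar" and "foo-bar" compare equal
    return re.sub(r"[-_.]+", "-", name).lower()


def read_pins(text):
    """Map normalized package name -> pinned version for 'name==version' lines."""
    pins = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # ignore comments
        if "==" in line:
            name, version = line.split("==", 1)
            name = name.split("[", 1)[0].strip()  # drop extras
            pins[normalize(name)] = version.split(";", 1)[0].strip()  # drop markers
    return pins


def relevant_outdated(pins, outdated):
    """Keep only the pip-reported outdated packages that are pinned in requirements.in."""
    return [pkg for pkg in outdated if normalize(pkg["name"]) in pins]


def main(path="requirements.in"):
    with open(path) as f:
        pins = read_pins(f.read())
    res = subprocess.run(
        [sys.executable, "-m", "pip", "list", "--outdated", "--format=json"],
        capture_output=True,
        text=True,
        check=True,  # raises CalledProcessError if pip fails
    )
    rows = relevant_outdated(pins, json.loads(res.stdout))
    width = max((len(r["name"]) for r in rows), default=0)
    for r in rows:
        print(
            r["name"].ljust(width + 2),
            "INSTALLED:",
            r["version"].ljust(9),
            "POSSIBLE:",
            r["latest_version"],
        )
```

To run it as a command, add the usual `if __name__ == "__main__": main(*sys.argv[1:])` guard at the bottom.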

Installation

To install this, you can just download the script and run it in any directory that contains a requirements.in file.

Or you can install it like this:

curl -L https://gist.github.com/peterbe/099ad364657b70a04b1d65aa29087df7/raw/23fb1963b35a2559a8b24058a0a014893c4e7199/Pip-Outdated.py > ~/bin/Pip-Outdated.py
chmod +x ~/bin/Pip-Outdated.py
Pip-Outdated.py

December 22, 2022 01:14 PM UTC

Ned Batchelder

Secure maintainer workflow, continued

Picking up from Secure maintainer workflow, especially the comments there (thanks!), here are some more things I’m doing to keep my maintainer workflow safe.

1Password ssh: I’m using 1Password as my SSH agent. It works really well, and uses the Mac Touch ID for authorization. Now I have no private keys in my ~/.ssh directory. I’ve been very impressed with 1Password’s helpful and comprehensive approach to configuration and settings.

Improved environment variables: I’ve updated my opvars and unopvars shell functions that set environment variables from 1Password. Now I can name sets of credentials (defaulting to the current directory name), and apply multiple sets. Then unopvars knows all that have been set, and clears all of them.

Public/private GitHub hosts: There’s a problem with using a fingerprint-gated SSH agent: some common operations want an SSH key but aren’t actually security sensitive. When pulling from a public repo, you don’t want to be interrupted to touch the sensor. Reading public information doesn’t need authentication, and you don’t want to become desensitized to the importance of the sensor. Pulling changes from a git repo with a “git@” address always requires SSH, even if the repo is public. It shouldn’t require an alarming interruption.

Git lets you define “insteadOf” aliases so that you can pull using “https:” and push using “git@”. The syntax seems odd and backwards to me, partly because I can define pushInsteadOf, but there’s no pullInsteadOf:

[url "git@github.com:"]
    # Git remotes of "git@github.com" should really be pushed using ssh.
    pushInsteadOf = git@github.com:

[url "https://github.com/"]
    # Git remotes of "git@github.com" should be pulled over https.
    insteadOf = git@github.com:

This works great, except that private repos still need to be pulled using SSH. To deal with this, I have an admittedly baroque arrangement using a fake URL scheme “github_private:” like this:

[url "git@github.com:"]
    pushInsteadOf = git@github.com:
    # Private repos need ssh in both directions.
    insteadOf = github_private:

[url "https://github.com/"]
    insteadOf = git@github.com:

Now if I set the remote URL to “github_private:nedbat/secret.git”, then activity will use “git@github.com:nedbat/secret.git” instead, for both pushing and pulling. (BTW: if you start fiddling with this, “git remote -v” will show you the URLs after these remappings, and “git config --get-regex 'remote.*.url'” will show you the actual settings before remapping.)
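To reason about which URL git will actually use, it can help to model the rewriting rules. The sketch below is a simplified model of git's documented behavior, not git's own implementation: a URL is rewritten by the longest matching `insteadOf` prefix, and for pushes a matching `pushInsteadOf` takes precedence; otherwise the push goes to the (rewritten) fetch URL. The dictionaries encode the configuration above, and `rewrite` and `effective_urls` are hypothetical helper names.

```python
def rewrite(url, rules):
    """Apply the longest matching prefix rule; return None if nothing matches.

    `rules` maps a URL prefix (an insteadOf/pushInsteadOf value) to its
    replacement base (the name in the [url "..."] section header).
    """
    matches = [prefix for prefix in rules if url.startswith(prefix)]
    if not matches:
        return None
    prefix = max(matches, key=len)  # the longest matching prefix wins
    return rules[prefix] + url[len(prefix):]


# The config above, expressed as {prefix: base}
INSTEAD_OF = {
    "git@github.com:": "https://github.com/",
    "github_private:": "git@github.com:",
}
PUSH_INSTEAD_OF = {
    "git@github.com:": "git@github.com:",
}


def effective_urls(remote_url):
    """Return the (fetch_url, push_url) pair this model predicts."""
    fetch = rewrite(remote_url, INSTEAD_OF) or remote_url
    # A pushInsteadOf match wins for pushes; otherwise push to the fetch URL
    push = rewrite(remote_url, PUSH_INSTEAD_OF) or fetch
    return fetch, push


for url in ["git@github.com:nedbat/coveragepy.git", "github_private:nedbat/secret.git"]:
    print(url, "->", effective_urls(url))
```

Rewriting is applied once, which is why the “git@github.com:” URL produced from “github_private:” is not rewritten again to https.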

But how to set the remote to “github_private:nedbat/secret.git”? I can set it manually for specific repos with “git remote”, but I also clone entire organizations and don’t want to have to know which repos are private. I automate the remote-setting with an aliased git command I can run in a repo directory that sets the remote correctly if the repo is private:

[alias]
    # If this is a private repo, change the remote from "git@github.com:" to
    # "github_private:". You can remap "github_private:" to "git@" like this:
    #
    #   [url "git@github.com:"]
    #       insteadOf = github_private:
    #
    # This requires the gh command: https://cli.github.com/
    #
    fix-private-remotes = "!f() { \
        vis=$(gh api 'repos/{owner}/{repo}' --template '{{.visibility}}'); \
        if [[ $vis == private ]]; then \
            for rem in $(git remote); do \
                echo Updating remote $rem; \
                git config remote.$rem.url $(git config remote.$rem.url | \
                    sed -e 's/git@github.com:/github_private:/'); \
            done \
        fi; \
    }; f"

This uses GitHub’s gh command-line tool, which is quite powerful. I’m using it more and more.
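For comparison, here is a rough Python port of the `fix-private-remotes` alias, a sketch assuming `git` and an authenticated `gh` are on your PATH. It runs the same `gh api` command as the alias; the function names are made up for illustration. Unlike the `sed` expression, which would replace the pattern anywhere in the URL, `to_private` only rewrites a leading `git@github.com:`.

```python
import subprocess

PUBLIC_PREFIX = "git@github.com:"
PRIVATE_PREFIX = "github_private:"


def to_private(url):
    """Swap a leading 'git@github.com:' for the fake 'github_private:' scheme."""
    if url.startswith(PUBLIC_PREFIX):
        return PRIVATE_PREFIX + url[len(PUBLIC_PREFIX):]
    return url


def git(*args):
    """Run a git command and return its stripped stdout."""
    result = subprocess.run(["git", *args], capture_output=True, text=True, check=True)
    return result.stdout.strip()


def fix_private_remotes():
    # Same gh call as the alias: ask GitHub for the current repo's visibility
    vis = subprocess.run(
        ["gh", "api", "repos/{owner}/{repo}", "--template", "{{.visibility}}"],
        capture_output=True,
        text=True,
        check=True,
    ).stdout.strip()
    if vis != "private":
        return
    for remote in git("remote").split():
        print("Updating remote", remote)
        # "git config" returns the stored URL, before any insteadOf remapping
        url = git("config", f"remote.{remote}.url")
        git("config", f"remote.{remote}.url", to_private(url))
```

Calling `fix_private_remotes()` from inside a repository directory would perform the same update as `git fix-private-remotes`.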

This is getting kind of complex, and is still a work in progress, but it’s working. I’m always interested in ideas for improvements.

December 22, 2022 12:03 PM UTC

Python Software Foundation

More Python Everywhere, All at Once: Looking Forward to 2023

The PSF works hard throughout the year to put on PyCon US, support smaller Python events around the world through our Grants program and of course to provide the critical infrastructure and expertise that keep CPython and PyPI running smoothly for the 8 million (and growing!) worldwide base of Python users. We want to invest more deeply in education and outreach in 2023, and donations from individuals (like you) can make sure we have the resources to start new projects and sustain them alongside our critical community functions.

Supporting Membership is a particularly great way to contribute to the PSF. By becoming a Supporting Member, you join a core group of PSF stakeholders, and since Supporting Members are eligible to vote in our Board and bylaws elections, you gain a voice in the future of the PSF. And we have just introduced a new sliding scale rate for Supporting Members, so you can join at the standard rate of an annual $99 contribution, or for as little as $25 annually if that works better for you. We are about three quarters of the way to our goal of 100 new supporting members by the end of 2022 – Can you sign up today and help push us over the edge?

Thank you for reading and for being a part of the one-of-a-kind community that makes Python and the PSF so special.

With warmest wishes to you and yours for a happy and healthy new year,

Deb

December 22, 2022 11:08 AM UTC

PyCharm

The PyCharm 2022.3.1 Release Candidate is out!

This build contains important bug fixes for PyCharm 2022.3. Look through the list of improvements and update to the latest version for a better experience.

- Packaging: PyCharm no longer uses excessive disk space caching PyPI. [PY-57156]

- HTTP client: Setting a proxy no longer breaks package inspection. [PY-57612]

- Python console: Code that is run with the Emulate terminal in output console option enabled now has the correct indentation level. [PY-57706]

- Inspections: The Loose punctuation mark inspection now works correctly for reStructuredText fields in docstrings. [PY-53047]

- Inspections: Fixed a StackOverflowError (SOE) where processing generic types broke error highlighting in the editor. [PY-54336]

- Debugger: We fixed several issues for the debugger. [PY-57296], [PY-57055]

- Code insight: Code insight for IntEnum properties is now correct. [PY-55734]

- Code insight: Code insight has been improved for dataclass arguments when wildcard import is used. [PY-36158]

For the full list of improvements, please refer to the release notes. Share your feedback in the comments under this post or in our issue tracker.

December 22, 2022 08:55 AM UTC

Talk Python to Me

#395: Tools for README.md Creation and Maintenance

If you maintain projects on places like GitHub, you know that having a classy readme is important and that maintaining a change log can be helpful for you and consumers of the project. It can also be a pain. That's why I'm excited to welcome back Ned Batchelder to the show. He has a lot of tools to help here as well as some opinions we're looking forward to hearing. We cover his tools and a bunch of others he and I found along the way.

Links from the show

- Ned on Mastodon: @nedbat@hachyderm.io (https://hachyderm.io/@nedbat)
- Ned's website: https://nedbatchelder.com

- Readme as a Service: https://readme.so
- hatch-fancy-pypi-readme: https://github.com/hynek/hatch-fancy-pypi-readme
- Shields.io badges: https://shields.io
- All Contributors: https://allcontributors.org
- Keep a changelog: https://keepachangelog.com
- Scriv: Changelog management tool: https://github.com/nedbat/scriv
- changelog_manager: https://github.com/masukomi/changelog_manager
- executablebooks' github activity: https://github.com/executablebooks/github-activity
- dinghy: A GitHub activity digest tool: https://github.com/nedbat/dinghy
- cpython's blurb: https://github.com/python/core-workflow/tree/master/blurb
- release drafter: https://github.com/release-drafter/release-drafter
- Towncrier: https://github.com/twisted/towncrier
- mktestdocs testing code samples in readmes: https://github.com/koaning/mktestdocs
- shed: https://github.com/Zac-HD/shed
- blacken-docs: https://github.com/adamchainz/blacken-docs
- Cog: https://github.com/nedbat/cog
- Awesome tools for readme: https://github.com/HaiDang666/awesome-tool-for-readme-profile

- coverage.py: https://coverage.readthedocs.io
- Tailwind CSS "Landing page": https://tailwindcss.com
- Poetry "Landing page": https://python-poetry.org
- Textual: https://www.textualize.io
- Rich: https://github.com/Textualize/rich
- Join Mastodon Page: https://joinmastodon.org
- Watch this episode on YouTube: https://www.youtube.com/watch?v=K7jeBfiNqR4
- Episode transcripts: https://talkpython.fm/episodes/transcript/395/tools-for-readme.md-creation-and-maintenance

--- Stay in touch with us ---

- Subscribe to us on YouTube: https://talkpython.fm/youtube
- Follow Talk Python on Mastodon: talkpython (https://fosstodon.org/web/@talkpython)
- Follow Michael on Mastodon: mkennedy (https://fosstodon.org/web/@mkennedy)

Sponsors

- Local Maximum Podcast: https://talkpython.fm/max
- Sentry Error Monitoring, Code TALKPYTHON: https://talkpython.fm/sentry
- AssemblyAI: https://talkpython.fm/assemblyai
- Talk Python Training: https://talkpython.fm/training

December 22, 2022 08:00 AM UTC

December 21, 2022

Everyday Superpowers

TIL Debug any python file in VS Code

One of my frustrations with Visual Studio Code was having to create a `launch.json` file whenever I wanted to debug a one-off Python file.

Today, I learned that you can add a debugger configuration to your user settings, so you always have a debugging configuration available.

Create a debugging (or launch) configuration:

1. Select the "Run and Debug" tab and click "create a launch.json file".

2. Choose "Python File".

3. VS Code will generate a configuration for you. This is the one I used, where I changed `"justMyCode"` to `false` so I can step into any Python code.

{
    "name": "Python: Current File",
    "type": "python",
    "request": "launch",
    "program": "${file}",
    "console": "integratedTerminal",
    "justMyCode": false
}

4. Copy the config (including the curly braces).

5. Open your user configuration (`⌘`/`Ctrl` + `,`) and search for "launch".

6. Click "Edit in settings.json"

7. Add your configuration to the `"configurations"` list.

This is what mine looks like, for reference:

...
"launch": {
    "configurations": [
        {
            "name": "Python: Current File",
            "type": "python",
            "request": "launch",
            "program": "${file}",
            "console": "integratedTerminal",
            "justMyCode": false
        }
    ],
    "compounds": []
}

Read more...

December 21, 2022 09:37 PM UTC

What is Your Burnout Telling You?

I am glad that mental health is being discussed more often in the programming world. In particular, I would like to thank Kenneth Reitz for his transparency over the last few years and contributing his recent essay on developer burnout.

Having just recently experienced a period of burnout, I would like to share some ideas that might help you sidestep it in your own life.

And, before I go on, let me say loud and clear: there is absolutely no shame in experiencing anxiety, fear, anger, burnout, depression, or any other mental health condition, or in reaching out for help with it.

If you think you might be struggling with something, please reach out for help! You might have to reach out several times to multiple sources, but remember you are too valuable to waste time with this funk.

Read more...

December 21, 2022 04:37 PM UTC

A Sublime User in PyCharm Land

I have written a few articles about how Sublime Text has been a great environment for getting my work done, and there are more to come; I have been extremely happy with Sublime for years.

But listening to Michael Kennedy and some guests on his Talk Python to Me podcast gush about PyCharm's great features made me wonder what I was missing. I was lucky to receive a trial license for PyCharm almost a year ago, but I found it opaque and harsh compared to my beloved Sublime.

With my license ending soon and a couple of new Python projects at work, I thought I would invest some effort in getting used to PyCharm and see if I could get any benefit from it.

Read my thoughts on the first few months of using PyCharm.

Read more...

December 21, 2022 04:37 PM UTC

New python web app not working?

How many times have you had the thrill of releasing a new service or app to the world, only to have it come crashing down when you test the URL and find a server error page instead of your work? I'm collecting a few tips I use when I'm trying to figure out why a new Python service I have set up is not working.

Read more...

December 21, 2022 04:37 PM UTC

Using Sublime Text for python

Five or so years ago, I was frustrated by my coding environment. I was working on .NET websites and felt like I was fighting Microsoft's Visual Studio to get my work done. I started jumping between Visual Studio, Notepad++, Eclipse, and other programs, and with all that jumping around, I was not really happy with my experience in any of them.

I came across an article that encouraged me to invest in my coding tools; that is, to pick one program and dive deep into it, find out what it's good for, and try to enjoy it to its maximum before looking to other tools.

Given that inspiration, I turned to Sublime Text and the myriad how-to articles and videos for it, and within a month it was my favorite editor. I could list dozens of reasons why you should give it a try, but Daniel Bader has already done a great job of that.

I list several packages that I find crucial to my development experience.

Read more...

December 21, 2022 04:37 PM UTC

Real Python

Generate Images With DALL·E 2 and the OpenAI API

Describe any image, then let a computer create it for you. What sounded futuristic only a few years ago has become reality with advances in neural networks and latent diffusion models (LDM). DALL·E by OpenAI has made a splash through the amazing generative art and realistic images that people create with it.

OpenAI now allows access to DALL·E through their API, which means that you can incorporate its functionality into your Python applications.

In this tutorial, you’ll:

- Get started using the OpenAI Python library

- Explore API calls related to image generation

- Create images from text prompts

- Create variations of your generated image

- Convert Base64 JSON responses to PNG image files
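The last item in that list needs only the standard library. The following is a minimal sketch, not the tutorial's code: it assumes the API response has already been parsed into a dict shaped like `{"data": [{"b64_json": "..."}]}`, which is the shape I'd expect when requesting Base64 JSON output (check it against a real response). `save_b64_images` and its file-naming scheme are hypothetical.

```python
import base64
from pathlib import Path


def save_b64_images(response, stem, out_dir="."):
    """Decode each 'b64_json' entry of an image response into a PNG file.

    Assumes `response` looks like {"data": [{"b64_json": "..."}, ...]}.
    Returns the list of paths that were written.
    """
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    paths = []
    for index, image in enumerate(response["data"]):
        png_bytes = base64.b64decode(image["b64_json"])  # Base64 text -> raw PNG bytes
        path = out / f"{stem}-{index}.png"
        path.write_bytes(png_bytes)
        paths.append(path)
    return paths
```

If you saved a response to disk, `json.loads(Path("response.json").read_text())` (after `import json`) gives you the dict to pass in; the file name there is only an example.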

You’ll need some experience with Python, JSON, and file operations to breeze through this tutorial. You can also study up on these topics while you go along, as you’ll find relevant links throughout the text.

If you haven’t played with the web user interface (UI) of DALL·E before, then try it out before coming back to learn how to use it programmatically with Python.

Source Code: Click here to download the free source code that you’ll use to generate stunning images with DALL·E 2 and the OpenAI API.

Complete the Setup Requirements

If you’ve seen what DALL·E can do and you’re eager to make its functionality part of your Python applications, then you’re in the right spot! In this first section, you’ll quickly walk through what you need to do to get started using DALL·E’s image creation capabilities in your own code.

Install the OpenAI Python Library

Confirm that you’re running Python version 3.7.1 or higher, create and activate a virtual environment, and install the OpenAI Python library (for example, with python -m pip install openai).

The openai package gives you access to the full OpenAI API. In this tutorial, you’ll focus on the Image class, which you can use to interact with DALL·E to create and edit images from text prompts.

Get Your OpenAI API Key

You need an API key to make successful API calls. Sign up for the OpenAI API and create a new API key by clicking on the dropdown menu on your profile and selecting View API keys:

On this page, you can manage your API keys, which allow you to access the service that OpenAI offers through their API. You can create and delete secret keys.

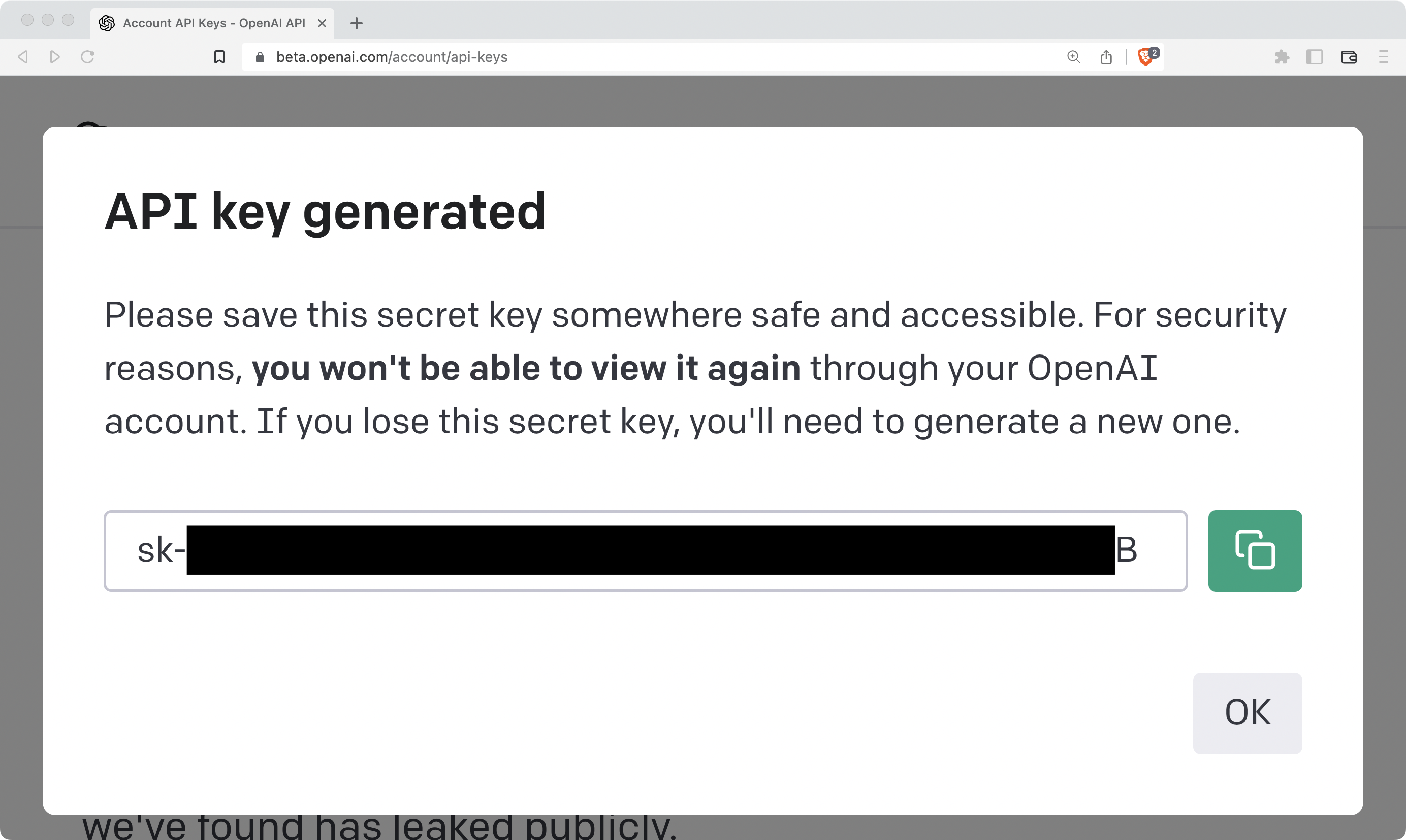

Click on Create new secret key to create a new API key, and copy the value shown in the pop-up window:

Always keep this key secret! Copy the value of this key so you can later use it in your project. You’ll only see the key value once.

Save Your API Key as an Environment Variable

A quick way to save your API key and make it available to your Python scripts is to save it as an environment variable. How you set one depends on your operating system.
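Once the variable is set, a script can read it from `os.environ`. A small sketch, assuming the variable is named `OPENAI_API_KEY` (the name the OpenAI Python library looks for by default); `get_api_key` is a hypothetical helper that fails with a clear message instead of a bare `KeyError` when the key is missing.

```python
import os


def get_api_key(var_name="OPENAI_API_KEY"):
    """Read the API key from the environment, failing clearly if it is unset."""
    key = os.environ.get(var_name)
    if not key:
        raise RuntimeError(f"Set the {var_name} environment variable before running this script.")
    return key
```

Keeping the key out of your source code this way also keeps it out of version control.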

Read the full article at https://realpython.com/generate-images-with-dalle-openai-api/ »


December 21, 2022 02:00 PM UTC

Python for Beginners

Check for Not Null Value in Pandas Python

In Python, we sometimes need to separate null values from values that are not null. In this article, we will discuss different ways to check for non-null values in pandas, with examples.

We can check for not null in pandas using the notna() function and the notnull() function. Let us discuss each function one by one.

- Check for Not Null in Pandas Using the notna() Method

- Check for Not NA in a Pandas Dataframe Using notna() Method

- Check for Not Null Values in a Column in Pandas Dataframe

- Check for Not NA in a Pandas Series Using notna() Method

- Check for Not Null in Pandas Using the notnull() Method

- Check for Not Null in a Pandas Dataframe Using the notnull() Method

- Conclusion

Check for Not Null in Pandas Using the notna() Method

As the name suggests, the notna() method works as a negation of the isna() method, which is used to check for NaN values in pandas. The notna() function has the following syntax.

pandas.notna(obj)

Here, obj can be a single Python object or a collection of objects such as a Python list or a NumPy array.

If we pass a single python object to the notna() method as an input argument, it returns False if the python object is None, pd.NA or np.NaN object. For python objects that are not null, the notna() function returns True. You can observe this in the following example.

import pandas as pd

import numpy as np

x=pd.NA

print("The value is:",x)

output=pd.notna(x)

print("Is the value not Null:",output)

Output:

The value is: <NA>

Is the value not Null: False

In the above example, we have passed the pandas.NA object to the notna() function. Hence, it returns False.
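Because notna() is the negation of isna(), the two should disagree on every element. A quick check with a made-up list that also includes pd.NaT (the pandas missing-value marker for datetimes), which notna() treats as null as well:

```python
import numpy as np
import pandas as pd

# Made-up values: four different missing-value markers mixed with ordinary values
values = [1, None, pd.NA, np.nan, pd.NaT, "text", 0]

not_null = pd.notna(values)  # a NumPy array of booleans
is_null = pd.isna(values)

# notna() is the element-wise negation of isna()
print((not_null == ~is_null).all())
print(not_null)
```

Note that 0 and an ordinary string count as not null; only the missing-value markers produce False.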

When we pass a list or NumPy array of elements to the notna() function, the check is applied to each element. After execution, it returns a NumPy array containing True and False values. The True values of the output array correspond to all the values that are not NA, NaN, or None at the same position in the input list or array. The False values in the output array correspond to all the NA, NaN, or None values at the same position in the input list or array. You can observe this in the following example.

import pandas as pd

import numpy as np

x=[1,2,pd.NA,4,5,None, 6,7,np.nan]

print("The values are:",x)

output=pd.notna(x)

print("Are the values not Null:",output)

Output:

The values are: [1, 2, <NA>, 4, 5, None, 6, 7, nan]

Are the values not Null: [ True True False True True False True True False]

In this example, we have passed a list containing 9 elements to the notna() function. After execution, the notna() function returns a NumPy array of 9 boolean values. Each element in the output array is associated with the element at the same index in the input list. At the indices where the input list does not contain null values, the output array contains True. Similarly, at indices where the input list contains null values, the output array contains False.

Check for Not NA in a Pandas Dataframe Using notna() Method

Along with the notna() function, pandas also provides the notna() method to check for non-null values in dataframes and series objects.

The notna() method, when invoked on a pandas dataframe, returns another dataframe containing True and False values. True values of the output dataframe correspond to all the values that are not NA, NaN, or None at the same position in the input dataframe. The False values in the output dataframe correspond to all the NA, NaN, or None values at the same position in the input dataframe. You can observe this in the following example.

import pandas as pd

import numpy as np

df=pd.read_csv("grade.csv")

print("The dataframe is:")

print(df)

output=df.notna()

print("Are the values not Null:")

print(output)

Output:

The dataframe is:

Class Roll Name Marks Grade

0 1 11 Aditya 85.0 A

1 1 12 Chris NaN A

2 1 14 Sam 75.0 B

3 1 15 Harry NaN NaN

4 2 22 Tom 73.0 B

5 2 15 Golu 79.0 B

6 2 27 Harsh 55.0 C

7 2 23 Clara NaN B

8 3 34 Amy 88.0 A

9 3 15 Prashant NaN B

10 3 27 Aditya 55.0 C

11 3 23 Radheshyam NaN NaN

Are the values not Null:

Class Roll Name Marks Grade

0 True True True True True

1 True True True False True

2 True True True True True

3 True True True False False

4 True True True True True

5 True True True True True

6 True True True True True

7 True True True False True

8 True True True True True

9 True True True False True

10 True True True True True

11 True True True False False

In the above example, we have invoked the notna() method on a dataframe containing NaN values along with other values. The notna() method returns a dataframe containing boolean values. Here, False values of the output dataframe correspond to all the values that are NA, NaN, or None at the same position in the input dataframe. The True values in the output dataframe correspond to all the non-null values at the same position in the input dataframe.
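In practice, the boolean column or dataframe returned by notna() is most often used as a mask to filter rows. A minimal sketch, with a few rows copied from the grade.csv output above into an inline DataFrame so it runs without the file:

```python
import numpy as np
import pandas as pd

# First four rows of the grade.csv output shown above
df = pd.DataFrame(
    {
        "Class": [1, 1, 1, 1],
        "Roll": [11, 12, 14, 15],
        "Name": ["Aditya", "Chris", "Sam", "Harry"],
        "Marks": [85.0, np.nan, 75.0, np.nan],
        "Grade": ["A", "A", "B", np.nan],
    }
)

# Rows whose Grade value is present
with_grade = df[df["Grade"].notna()]

# Rows with no missing value in any column
complete_rows = df[df.notna().all(axis=1)]

print(with_grade)
print(complete_rows)
```

Here df.dropna() returns the same rows as complete_rows, since by default it drops every row that contains a null value.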

Check for Not Null Values in a Column in Pandas Dataframe

Instead of the entire dataframe, you can also check for not null values in a column of a pandas dataframe. For this, you just need to invoke the notna() method on the particular column as shown below.

import pandas as pd

import numpy as np

df=pd.read_csv("grade.csv")

print("The dataframe column is:")

print(df["Marks"])

output=df["Marks"].notna()

print("Are the values not Null:")

print(output)

Output:

The dataframe column is:

0 85.0

1 NaN

2 75.0

3 NaN

4 73.0

5 79.0

6 55.0

7 NaN

8 88.0

9 NaN

10 55.0

11 NaN

Name: Marks, dtype: float64

Are the values not Null:

0 True

1 False

2 True

3 False

4 True

5 True

6 True

7 False

8 True

9 False

10 True

11 False

Name: Marks, dtype: bool

Check for Not NA in a Pandas Series Using notna() Method

Like a dataframe, we can also invoke the notna() method on a pandas Series object. In this case, the notna() method returns a Series containing True and False values. You can observe this in the following example.

import pandas as pd

import numpy as np

x=pd.Series([1,2,pd.NA,4,5,None, 6,7,np.nan])

print("The series is:")

print(x)

output=x.notna()

print("Are the values not Null:")

print(output)

Output:

The series is:

0 1

1 2

2 <NA>

3 4

4 5

5 None

6 6

7 7

8 NaN

dtype: object

Are the values not Null:

0 True

1 True

2 False

3 True

4 True

5 False

6 True

7 True

8 False

dtype: bool

In this example, we have invoked the notna() method on a pandas series. The notna() method returns a Series of boolean values after execution. Here, False values of the output series correspond to all the values that are NA, NaN, or None at the same position in the input series. The True values in the output series correspond to all the non-null values at the same position in the input series.
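Since True counts as 1 when summed, the boolean Series from notna() also gives a quick count of non-null entries, and it works as a mask to keep only those entries. A small sketch reusing the same series:

```python
import numpy as np
import pandas as pd

x = pd.Series([1, 2, pd.NA, 4, 5, None, 6, 7, np.nan])

mask = x.notna()
non_null_count = mask.sum()  # True counts as 1, False as 0
kept = x[mask]  # only the non-null entries; the original index labels are kept

print(non_null_count, x.count())
print(kept.tolist())
```

Series.count() reports the same number, because it also counts only non-null entries.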

Check for Not Null in Pandas Using the notnull() Method

The notnull() method is an alias of the notna() method. Hence, it works exactly the same as the notna() method.
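One way to convince yourself that the two names behave the same is to compare their outputs directly. A short sketch with made-up data, checking the functions and the Series and DataFrame methods:

```python
import numpy as np
import pandas as pd

s = pd.Series([1.5, np.nan, None, 3.0])
df = pd.DataFrame({"a": [1, None], "b": ["x", np.nan]})

# Function forms, Series methods, and DataFrame methods should all agree
same_function = pd.notna(s).equals(pd.notnull(s))
same_series_method = s.notna().equals(s.notnull())
same_frame_method = df.notna().equals(df.notnull())

print(same_function, same_series_method, same_frame_method)
print(s.notnull().tolist())
```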

When we pass a NaN value, pandas.NA value, pandas.NaT value, or None object to the notnull() function, it returns False.

import pandas as pd

import numpy as np

x=pd.NA

print("The value is:",x)

output=pd.notnull(x)

print("Is the value not Null:",output)

Output:

The value is: <NA>

Is the value not Null: False

In the above example, we have passed the pandas.NA value to the notnull() function. Hence, it returns False.

When we pass any other python object to the notnull() function, it returns True as shown below.

import pandas as pd

import numpy as np

x=1117

print("The value is:",x)

output=pd.notnull(x)

print("Is the value not Null:",output)

Output:

The value is: 1117

Is the value not Null: True

In this example, we passed the value 1117 to the notnull() function. Hence, it returns True, showing that the value is not a null value.

When we pass a list or numpy array to the notnull() function, it returns a numpy array containing True and False values. You can observe this in the following example.

import pandas as pd

import numpy as np

x=[1,2,pd.NA,4,5,None, 6,7,np.nan]

print("The values are:",x)

output=pd.notnull(x)

print("Are the values not Null:",output)

Output:

The values are: [1, 2, <NA>, 4, 5, None, 6, 7, nan]

Are the values not Null: [ True True False True True False True True False]

In this example, we have passed a list to the notnull() function. After execution, the notnull() function returns a NumPy array of boolean values. Each element in the output array is associated with the element at the same index in the input list. At the indices where the input list contains null values, the output array contains False. Similarly, at indices where the input list contains integers, the output array contains True.

Check for Not Null in a Pandas Dataframe Using the notnull() Method

You can also invoke the notnull() method on a pandas dataframe to check for non-null values, as shown below.

import pandas as pd

import numpy as np

df=pd.read_csv("grade.csv")

print("The dataframe is:")

print(df)

output=df.notnull()

print("Are the values not Null:")

print(output)

Output:

The dataframe is:

Class Roll Name Marks Grade

0 1 11 Aditya 85.0 A

1 1 12 Chris NaN A

2 1 14 Sam 75.0 B

3 1 15 Harry NaN NaN

4 2 22 Tom 73.0 B

5 2 15 Golu 79.0 B

6 2 27 Harsh 55.0 C

7 2 23 Clara NaN B

8 3 34 Amy 88.0 A

9 3 15 Prashant NaN B

10 3 27 Aditya 55.0 C

11 3 23 Radheshyam NaN NaN

Are the values not Null:

Class Roll Name Marks Grade

0 True True True True True

1 True True True False True

2 True True True True True

3 True True True False False

4 True True True True True

5 True True True True True

6 True True True True True

7 True True True False True

8 True True True True True

9 True True True False True

10 True True True True True

11 True True True False False

In the output, you can observe that the notnull() method behaves in exactly the same manner as the notna() method.

Instead of the entire dataframe, you can also use the notnull() method to check for non-null values in a column, as shown in the following example.

import pandas as pd

import numpy as np

df=pd.read_csv("grade.csv")

print("The dataframe column is:")

print(df["Marks"])

output=df["Marks"].notnull()

print("Are the values not Null:")

print(output)

Output:

The dataframe column is:

0 85.0

1 NaN

2 75.0

3 NaN

4 73.0

5 79.0

6 55.0

7 NaN

8 88.0

9 NaN

10 55.0

11 NaN

Name: Marks, dtype: float64

Are the values not Null:

0 True

1 False

2 True

3 False

4 True

5 True

6 True

7 False

8 True

9 False

10 True

11 False

Name: Marks, dtype: bool

In a similar manner, you can invoke the notnull() method on a pandas series as shown below.

import pandas as pd

import numpy as np

x=pd.Series([1,2,pd.NA,4,5,None, 6,7,np.nan])

print("The series is:")

print(x)

output=x.notnull()

print("Are the values not Null:")

print(output)

Output:

The series is:

0 1

1 2

2 <NA>

3 4

4 5

5 None

6 6

7 7

8 NaN

dtype: object

Are the values not Null:

0 True

1 True

2 False

3 True

4 True

5 False

6 True

7 True

8 False

dtype: bool

In the above example, we have invoked the notnull() method on a series. The notnull() method returns a Series of boolean values after execution. Here, the True values of the output series correspond to all the values that are not NA, NaN, or None at the same position in the input series. The False values in the output series correspond to all the NA, NaN, or None values at the same position in the input series.

Conclusion

In this article, we have discussed different ways to check for not null values in pandas. To learn more about Python programming, you can read this article on how to sort a pandas dataframe. You might also like this article on how to drop columns from a pandas dataframe.

I hope you enjoyed reading this article. Stay tuned for more informative articles.

Happy Learning!

The post Check for Not Null Value in Pandas Python appeared first on PythonForBeginners.com.

December 21, 2022 02:00 PM UTC

Will Kahn-Greene

Volunteer Responsibility Amnesty Day: December 2022

Today is Volunteer Responsibility Amnesty Day, where I spend some time taking stock of things and maybe moving some projects to the done pile.

In June, I ran a Volunteer Responsibility Amnesty Day [1] for Mozilla Data Org because the idea really struck a chord with me and we were about to embark on 2022h2 where one of the goals was to "land planes" and finish projects. I managed to pass off Dennis and end Puente. I also spent some time mulling over better models for maintaining a lot of libraries.

[1] I gave the post an exceedingly long slug. I wish I had thought about future me typing that repeatedly and made it shorter like I did this time around.

This time around, I'm just organizing myself.

Here's the list of things I'm maintaining in some way that aren't the big services that I work on:

- bleach
  - what is it: Bleach is an allowed-list-based HTML sanitizing Python library.
  - role: maintainer
  - keep doing: no
  - next step: more on this next year

- everett
  - what is it: Python configuration library.
  - role: maintainer
  - keep doing: yes
  - next step: keep on keepin on

- markus
  - what is it: Python metrics library.
  - role: maintainer
  - keep doing: yes
  - next step: keep on keepin on

- fillmore
  - what is it: Python library for scrubbing Sentry events.
  - role: maintainer
  - keep doing: yes
  - next step: keep on keepin on

- kent
  - what is it: Fake Sentry server for local development.
  - role: maintainer
  - keep doing: yes
  - next step: keep on keepin on, but would be happy to pass this off

- sphinx-js
  - what is it: Sphinx extension for documenting JavaScript and TypeScript.
  - role: co-maintainer
  - keep doing: yes
  - next step: keep on keepin on

- crashstats-tools
  - what is it: Command line utilities for interacting with Crash Stats.
  - role: maintainer
  - keep doing: yes
  - next step: keep on keepin on

- paul-mclendahand
  - what is it: Utility for combining GitHub pull requests.
  - role: maintainer
  - keep doing: yes
  - next step: keep on keepin on

- rob-bugson
  - what is it: Firefox addon for attaching GitHub pull requests to Bugzilla.
  - role: maintainer
  - keep doing: yes
  - next step: keep on keepin on

- fx-crash-sig
  - what is it: Python library for symbolicating stacks and generating crash signatures.
  - role: maintainer
  - keep doing: maybe
  - next step: keep on keepin on for now, but figure out a better long term plan

- siggen
  - what is it: Python library for generating crash signatures.
  - role: maintainer
  - keep doing: yes
  - next step: keep on keepin on

- mozilla-django-oidc
  - what is it: Django OpenID Connect library.
  - role: contributor (I maintain docker-test-mozilla-django-oidc)
  - keep doing: maybe
  - next step: think about dropping this at some point

That's too many things. I need to pare the list down. There are a few I could probably sunset, but not any time soon.

I'm also thinking about a maintenance model where I'm squishing it all into a burst of activity for all the libraries around some predictable event like Python major releases.

I tried that out this fall and did a release of everything except Bleach (more on that next year) and rob-bugson, which is a Firefox addon. I think I'll do that going forward. I need to document it somewhere to avoid the pestering of "Is this project active?" issues. I'll do that next year.

December 21, 2022 12:59 PM UTC

PyBites

Reflections on the Zen of Python

An initial version of this article first appeared as a Pybites email. If you like it, join our friends list to get our valuable Python, developer and mindset content first …

How following the Zen of Python will make your code better, a lot better.

This epic set of axioms (triggered by typing import this in the Python REPL) says a lot about code quality and good software design principles.
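If you want the text itself rather than the printout, the this module keeps the Zen as a ROT13-encoded string in its s attribute. The small sketch below relies on that CPython implementation detail (it is not a documented API) and decodes the string with the standard codecs module:

```python
import codecs
import this  # importing the module prints the Zen once, as a side effect

# this.s holds the Zen ROT13-encoded; "rot13" is a built-in str-to-str codec.
zen = codecs.decode(this.s, "rot13")

# Drop empty lines to get the title plus the individual aphorisms.
aphorisms = [line for line in zen.splitlines() if line.strip()]
print(aphorisms[0])
```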

Although each one is profound, let’s look at a few in particular:

Explicit is better than implicit.

This is an important one. If we don’t state things explicitly, we miss obvious facts when looking at the code later, which leads to mistakes.

This axiom is much about expressing intent and being informative for the next engineer, who will often be you!

You read software way more often than you write it. Plus the code you do write is for humans first and machines second.

Hence using meaningful variable / function / module names, and structuring your code properly, matters. As the Zen also says: Readability counts (and I think Python’s required indenting, like it or not, helps with this too).
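One concrete way Python lets you be explicit is keyword-only parameters: anything after a bare * in the signature must be passed by name, so a call like format_price(9.5, "USD", False) is rejected instead of leaving the reader to guess what False means. The format_price function and its tiny currency table below are hypothetical, just to illustrate the idea:

```python
def format_price(amount: float, currency: str, *, show_symbol: bool = True, decimals: int = 2) -> str:
    """Format a price. Parameters after the bare `*` must be passed by keyword."""
    symbols = {"USD": "$"}  # hypothetical, deliberately tiny symbol table
    number = f"{amount:.{decimals}f}"
    if show_symbol and currency in symbols:
        return f"{symbols[currency]}{number}"
    return f"{number} {currency}"


print(format_price(9.5, "USD"))                     # the intent is obvious at the call site
print(format_price(9.5, "USD", show_symbol=False))  # flags are named, not positional
```

Calling format_price(9.5, "USD", False) raises a TypeError, which turns an implicit guess into an explicit error.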

Simple is better than complex.

Software inherently grows complex over time. I think keeping things simple as a system grows is the greatest challenge we face as programmers.

Following this criterion alone is often the only way to keep a system maintainable!

Sometimes you cannot avoid a certain level of complexity though. That’s where great libraries design great abstractions. The underlying code is complicated, but it’s hidden behind a nice, usable interface (great examples are Typer, FastAPI, rich, requests, Django, etc.).

As the Zen says: Complex is better than complicated, and the isolation of code and behavior those interfaces create is a testament to another Zen axiom: Namespaces are one honking great idea.

Regarding namespacing, see also this discussion I recently opened about Python imports.

Flat is better than nested.

This is one of the things I often highlight in my code reviews. Deeply nested code (the horrendous “arrow shape”) is an indication of overly complex code and should be refactored.

Did you know that Flake8 is a wrapper around PyFlakes, pycodestyle (formerly called pep8) and Ned Batchelder’s McCabe script?

McCabe checks cyclomatic complexity, and anything that goes beyond 10 is considered too complex.

So the good news is that you can measure this using Flake8’s --max-complexity option.
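To get a feel for what that number means, here is a rough, hypothetical sketch that approximates cyclomatic complexity as 1 plus the number of branch points (if statements, conditional expressions, loops, except clauses) found with the standard ast module. It is not the mccabe algorithm, which builds a control-flow graph and may count some constructs differently; for real checks, run flake8 with --max-complexity.

```python
import ast

# Nodes that each add one extra path through the code (a simplified subset).
BRANCH_NODES = (ast.If, ast.IfExp, ast.For, ast.AsyncFor, ast.While, ast.ExceptHandler)


def approx_complexity(source: str) -> int:
    """Approximate cyclomatic complexity: 1 + number of branch points in `source`.

    Assumes `source` holds a single function; with several functions,
    their branch points are simply summed together.
    """
    tree = ast.parse(source)
    return 1 + sum(isinstance(node, BRANCH_NODES) for node in ast.walk(tree))


nested = (
    "def classify(x):\n"
    "    if x > 0:\n"
    "        if x > 10:\n"
    "            return 'big'\n"
    "        return 'small'\n"
    "    return 'non-positive'\n"
)
print(approx_complexity(nested))
```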

Often it’s just a matter of breaking code out into more helper functions / smaller pieces.

You can also make your code less nested by using more early returns and constructs like continue and break.
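As a sketch of that refactoring, here are two versions of the same hypothetical order-shipping check: one nested in the "arrow shape", one flattened with early returns (guard clauses). A third function uses continue to skip items instead of nesting the rest of the loop body. The function names and the dict-based order format are made up for illustration:

```python
def ship_order_nested(order):
    # The "arrow shape": every condition pushes the real work further right.
    if order is not None:
        if order.get("paid"):
            if order.get("items"):
                return f"shipping {len(order['items'])} item(s)"
            else:
                return "nothing to ship"
        else:
            return "awaiting payment"
    else:
        return "no order"


def ship_order_flat(order):
    # Guard clauses handle the edge cases first and return early...
    if order is None:
        return "no order"
    if not order.get("paid"):
        return "awaiting payment"
    if not order.get("items"):
        return "nothing to ship"
    # ...so the main path stays at a single indentation level.
    return f"shipping {len(order['items'])} item(s)"


def total_paid(orders):
    total = 0
    for order in orders:
        if not order.get("paid"):
            continue  # skip unpaid orders instead of nesting the loop body
        total += order.get("amount", 0)
    return total
```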

Similarly, the Zen’s Sparse is better than dense is all about keeping your code organized and using line breaks for more breathing space.

For example, in a function, do some input validation -> add two line breaks -> do the actual work -> add two line breaks -> handle the return.

Just by adding some more space / using blocks, you make your code much more peaceful and readable: an easy win.
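A minimal illustration of that layout, using a hypothetical average_grade function: validation, the actual work and the return each get their own block, separated by a blank line (PEP 8 likewise suggests using blank lines in functions, sparingly, to indicate logical sections).

```python
from typing import Sequence


def average_grade(grades: Sequence[float]) -> float:
    # Input validation
    if not grades:
        raise ValueError("grades must not be empty")

    # The actual work
    total = sum(grades)
    mean = total / len(grades)

    # Handle the return
    return round(mean, 2)


print(average_grade([85, 75, 73]))
```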

The same goes for clever one-liners: they are generally not readable. Do your fellow developers (and future you) a favor and break them out over multiple lines.
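For instance, the dense one-liner below (a made-up example) filters, lower-cases and sorts words in a single expression. The function after it does the same thing step by step and returns the same result:

```python
words = ["apple", "Banana", "cherry", "date", "Elderberry"]

# Dense: filtering, transforming and sorting crammed into one expression.
dense = sorted({w.lower(): len(w) for w in words if len(w) > 4}.items(), key=lambda kv: (-kv[1], kv[0]))


def by_length_then_name(item):
    word, length = item
    return (-length, word)  # longest first, ties broken alphabetically


def long_word_lengths(words, min_length=5):
    lengths = {}
    for word in words:
        if len(word) < min_length:
            continue
        lengths[word.lower()] = len(word)

    return sorted(lengths.items(), key=by_length_then_name)


readable = long_word_lengths(words)
print(readable)
```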

Special cases aren’t special enough to break the rules. Although practicality beats purity.

I like this axiom a lot because we all start software projects with a lot of good intentions, but as complexity grows and we adjust to (inevitable) changes in user requirements on the fly, we’ll have to make compromises.

It’s good to set the standards high, but shipping fast and being responsive to changing needs also requires you to be a Pragmatic Programmer (as per one of my favorite programming books).

Regarding software in the real world, I like what somebody said the other day after working with us:

Most valuable thing I learned from you and the program was not only iterating quickly but having it be in the hands of users.

PDM client on the importance of shipping code requiring a good dose of practicality.

If the implementation is hard to explain, it’s a bad idea + If the implementation is easy to explain, it may be a good idea.

Another important one. We often think we can implement something right the first time, but sometimes we don’t understand the problem well enough yet (or we try to be clever lol).

A good test is to see how easily you can explain the design to a colleague (or rubber duck lol). If you struggle, maybe you should not start coding yet, but spend some more time at the whiteboard and talking to your stakeholders.

What’s the real problem you’re trying to solve? How will it be future-proof? The latter usually becomes more apparent as more code gets written.

We talk about this in our live developer mindset trainings and on our podcast as well.

I hope this made you reflect on Python code and software development overall.

We should be grateful to Tim Peters for writing these axioms up, and to Python’s creator and core developers for so elegantly implementing them almost everywhere you look in the Standard Library and beyond.