| Ø |

XML |

20:01, Monday, 14 March 2022 |

21:01, Monday, 14 March 2022 |

| [[WM:TECHBLOG]] |

| All Things Linguistic |

| Andy Mabbett, aka pigsonthewing. |

| Anna writes |

| BaChOuNdA |

| Bawolff's rants |

| Blogs on Santhosh Thottingal |

| Bookcrafting Guru |

| brionv |

| Catching Flies |

| Clouds & Unicorns |

| Cogito, Ergo Sumana tag: Wikimedia |

| Cometstyles.com |

| Commonists |

| Content Translation Update |

| cookies & code |

| Damian's Dev Blog |

| Design at Wikipedia |

| dialogicality |

| Diff |

| Doing the needful |

| Durova |

| Ed's Blog |

| Einstein University |

| Endami |

| Fae |

| FOSS – Small Town Tech |

| Free Knowledge Advocacy Group EU |

| Gap Finding Project |

| Geni's Wikipedia Blog |

| http://ad.huikeshoven.org/feeds/posts/default/-/wiki |

| http://blog.maudite.cc/comments/feed |

| http://blog.pediapress.com/feeds/posts/default/-/wiki |

| http://blog.robinpepermans.be/feeds/posts/default/-/PlanetWM |

| http://bluerasberry.com/feed/ |

| http://brianna.modernthings.org/atom/?section=article |

| http://magnusmanske.de/wordpress/?feed=rss2 |

| http://moriel.smarterthanthat.com/tag/mediawiki/feed/ |

| http://terrychay.com/category/work/wikimedia/feed |

| http://wikipediaweekly.org/feed/podcast |

| http://www.greenman.co.za/blog/?tag=wikimedia&feed=rss2 |

| http://www.phoebeayers.info/phlog/?cat=10&feed=rss2 |

| https://blog.bluespice.com/tag/mediawiki/feed/ |

| https://blog.wikimedia.de/tag/Wikidata+English/feed/ |

| https://logic10.tumblr.com/ |

| https://lu.is/wikimedia/feed/ |

| https://mariapacana.tumblr.com/tagged/parsoid/rss |

| https://medium.com/feed/@nehajha |

| https://thoughtsfordeletion.blogspot.com/feeds/posts/default |

| https://wandacode.com/category/outreachy-internship/feed/ |

| https://wllm.com/tag/wikipedia/feed/ |

| https://www.residentmar.io/feed |

| https://www.wikiphotographer.net/category/wikimedia-commons/feed/ |

| in English – Wikimedia Suomi |

| International Wikitrekk |

| Laura Hale, Wikinews reporter |

| Leave it to the prose |

| Make love, not traffic. |

| Mark Rauterkus & Running Mates ponder current events |

| MediaWiki – Chris Koerner |

| MediaWiki – It rains like a saavi |

| MediaWiki – Ryan D Lane |

| MediaWiki and Wikimedia – etc. etc. |

| mediawiki Archives - addshore |

| MediaWiki Testing |

| Ministry of Wiki Affairs |

| Musings of Majorly |

| My Outreachy 2017 @ Wikimedia Foundation |

| NonNotableNatterings |

| Notes from the Bleeding Edge |

| Nothing three |

| Okinovo okýnko |

| Open Codex |

| Open Source Exile |

| Original Research |

| Pablo Garuda |

| Pau Giner |

| Personal – The Moon on a Stick |

| Planet Wikimedia – Entropy Wins |

| Planet Wikimedia – OpenMeetings.org | Announcements |

| planetwikimedia – copyrighteous |

| Political Bias on Wikipedia |

| Professional Wiki Blog |

| project-green-smw |

| Ramblings by Paolo on Web2.0, Wikipedia, Social Networking, Trust, Reputation, … |

| Rock drum |

| Sam Wilson's Website :: Wikimedia |

| Score all the things |

| Semantic MediaWiki – news |

| Sentiments of a Dissident |

| Stories by Megha Sharma on Medium |

| Sue Gardner's Blog |

| Tech News weekly bulletin feed |

| Technical & On-topic – Mike Baynton’s Mediawiki Dev Blog |

| The Academic Wikipedian |

| The Ash Tree |

| The Lego Mirror - MediaWiki |

| The life of James R. |

| The Speed of Thought |

| The Wikipedian |

| TheDJ writes |

| This Month in GLAM |

| Timo Tijhof |

| Ting's Wikimedia Blog |

| Tyler Cipriani: blog |

| Vinitha's blog |

| weekly – semanario – hebdo – 週刊 – týdeník – Wochennotiz – 주간 – tygodnik |

| What is going on in Europe? |

| wiki – David Gerard |

| wiki – Gabriel Pollard |

| wiki – Our new mind |

| wiki – stu.blog |

| wiki – The life on Wikipedia – A Wikignome's perspecive |

| wiki – Wiki Strategies |

| wiki – Ziko's Blog |

| Wiki Education |

| Wiki Loves Monuments |

| Wiki Northeast |

| Wiki Playtime - Medium |

| wiki-en – [[content|comment]] |

| Wikibooks News |

| wikimedia – andré klapper's blog. |

| wikimedia – apergos' open musings |

| wikimedia – Bitterscotch |

| Wikimedia – DcK Area |

| Wikimedia – Guillaume Paumier |

| wikimedia – Harsh Kothari |

| wikimedia – millosh’s blog |

| Wikimedia – Open and Free Source! |

| wikimedia – Open World |

| wikimedia – Thomas Dalton |

| Wikimedia – Tim Starling's blog |

| Wikimedia – Witty's Blog |

| Wikimedia (en) – Random ruminations of a ruthless 'riter |

| wikimedia Archives - Kevin Payravi's Blog |

| Wikimedia Archives - TheresNoTime |

| Wikimedia DC Blog |

| Wikimedia Foundation |

| Wikimedia on Taavi Väänänen |

| Wikimedia Security Team |

| Wikimedia | ഗ്രന്ഥപ്പുര |

| Wikinews Reports |

| Wikipedia & Linterweb |

| Wikipedia – Aharoni in Unicode |

| wikipedia – Andrew Gray |

| Wikipedia – Andy Mabbett, aka pigsonthewing. |

| Wikipedia – Blossoming Soul |

| Wikipedia – Bold household |

| wikipedia – Going GNU |

| Wikipedia – mlog |

| Wikipedia – ragesoss |

| wikipedia – The Longest Now |

| Wikipedia - nointrigue.com |

| Wikipedia Notes from User:Wwwwolf |

| Wikipedian in Residence for Gender Equity at West Virginia University |

| WikiProject Oregon |

| Wikisorcery |

| Wikistaycation |

| wikitech – domas mituzas |

| wmf – Entries in Life |

| WMUK |

| Words and what not |

| Writing Within the Rules |

| XD @ WP |

| {{Hatnote}} |

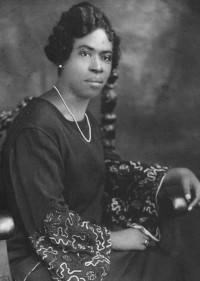

In four Wiki Scholars courses, museum professionals who work at one of the Smithsonian’s nearly 200 Affiliates collaborated with each other and with Wiki Education’s team to create and expand biographies of notable women on Wikipedia. Over six weeks, they learned to use Wikimedia projects as tools in their work to preserve knowledge and share it with the public. All told, we trained 74 museum professionals, representing 53 different Smithsonian Affiliate museums, to edit Wikipedia, and together they improved more than 160 articles. By embedding Wikipedia know-how within these institutions, the Smithsonian has developed a network of new Wikipedians who can continue this important work, both through their own editing and by organizing local projects.
