Tobi Lütke, who famously started Shopify when he realized that the software he built to run his snowboard shop was a much bigger opportunity than the shop itself, was reminiscing on Twitter about how cheap it used to be to run digital advertising:

fun story: I had no one bidding against me on major ski resorts names with my snowboard ads in 2004. Turns out minimum bid for google ad is 0.20c. I bet if you would roll your face on a keyboard as keyword there would be 3 others bidding against you today.

— tobi lutke (@tobi) February 17, 2022

This isn’t just a fun story: it’s a critical insight into the conditions that enabled Shopify to become the company that it is today; understanding how those conditions have changed gives insight into what Shopify needs to become.

Shopify’s Evolution

Back in 2004 a lot of the pieces that were necessary to run an e-commerce site existed, albeit in rudimentary and hard-to-use forms. One could, with a bit of trouble, open a merchant account and accept credit cards; 3PL warehouses could hold inventory; UPS and FedEx could deliver your goods. And, of course, you could run really cheap ads on Google. What was missing was software to tie all of those pieces together, which is exactly what Lütke built for Snowdevil, his snowboard shop, and in 2006 opened up to other merchants; the software was called Shopify:

This idea of Shopify as the hub for an e-commerce shop is one that has persisted to this day, but over the ensuing years Shopify has added on platform components as well; a platform looks like this (from The Bill Gates Line):

The first platform was the Shopify App Store, launched in 2009, where developers could access the Shopify API and create new plugins to deliver specific functionality that merchants might need. For example, if you want to offer a product on a subscription basis you might install Recharge Subscriptions; if you want help managing your shipments you might install ShipStation. Shopify itself delivers additional functionality through the Shopify App Store, like its Facebook Channel plugin, which lets you sync your products to Facebook to easily manage your advertising.

A year later Shopify launched the Shopify Theme Store, where merchants could buy a professional theme to make their site their own; now the hub looked like this:

At the same time Shopify also vertically integrated to incorporate features it once left to partners; the most important of these integrations was Shopify Payments, which launched in 2013 and was rebranded as Shop Pay in 2020. Yes, you could still use a clunky merchant account, but it was far easier to simply use the built-in Shop Pay functionality. Shop Pay was also critical in that it was the first part of the Shopify stack to build a consumer brand: users presented with a myriad of payment options know that if they click the purple Shop Pay button all of their payment and delivery information will be pre-populated, making it possible to buy with just one additional tap.

Even with this toehold in the consumer space, though, Shopify has remained a company that is focused first-and-foremost on its merchants and its mission to “help people achieve independence by making it easier to start, run, and grow a business.” That independence doesn’t just mean one-person entrepreneurs either: good-size brands like Gymshark, Rebecca Minkoff, KKW Beauty, Kylie Cosmetics, and FIGS leverage Shopify to build brands that are independent of Amazon in particular.

Apple and Facebook

In 2020’s Apple and Facebook I explained the symbiotic relationship between the two companies when it came to the App Store:

Facebook’s early stumbles on mobile are well-documented: the company bet on web-based apps that just didn’t work very well; the company completely rewrote its iOS app even as it was going public, which meant it had a stagnating app at the exact time mobile was exploding, threatening the desktop advertising product and platform that were the basis of the company’s S-1.

The re-write turned out to be not just a company-saving move — the native mobile app had the exact same user-facing features as the web-centric one, with the rather important detail that it actually worked — but in fact an industry-transformational one: one of the first new products enabled by the company’s new app was app install ads. From TechCrunch in 2012:

Facebook is making a big bet on the app economy, and wants to be the top source of discovery outside of the app stores. The mobile app install ads let developers buy tiles that promote their apps in the Facebook mobile news feed. When tapped, these instantly open the Apple App Store or Google Play market where users can download apps.

The ads are working already. One client TinyCo saw a 50% higher click through rate and higher conversion rates compared to other install channels. Facebook’s ads also brought in more engaged users. Ads tech startup Nanigans clients attained 8-10X more reach than traditional mobile ad buys when it purchased Facebook mobile app install ads. AdParlor racked up a consistent 1-2% click through rate.

Facebook’s App Install product quickly became the most important channel for acquiring users, particularly for games that monetized with Apple’s in-app purchase API: the combination of Facebook data with developers’ sophisticated understanding of expected value per app install led to an explosion in App Store revenue.

It’s worth underlining this point: the App Store would not be nearly the juggernaut it is today, nor would Apple’s “Services Narrative” be so compelling, were it not for the work that Facebook put in to build out the best customer acquisition engine in the industry (much to the company’s financial benefit, to be clear); Apple and Facebook’s relationship looked like this:

Facebook was by far the best and most efficient way to acquire new users, while Apple was able to sit back and harvest 30% of the revenue earned from those new users. Yes, some number of users came in via the App Store, but the primary discovery mechanism in the App Store is search, which relies on a user knowing what they want; Facebook showed users apps they never knew existed.

Facebook and Shopify

Facebook plays a similar role for e-commerce, particularly the independent sellers that exist on Shopify:

What makes Facebook’s approach so effective is that its advertising is a platform in its own right. Just as every app on a smartphone or every piece of software on a PC shares the same resources and API, every advertiser on Facebook, from app maker to e-commerce seller and everyone in-between, uses the same set of APIs that Facebook provides. What makes this so effective, though, is that the shared resources are not computing power but data, especially conversion data; I explained how this worked in 2020’s Privacy Labels and Lookalike Audiences, but briefly:

- Facebook shows a user an ad, and records the unique identifier provided by their phone (IDFA, Identifier for Advertisers, on iOS; GAID, Google Advertising Identifier, on Android).

- A user downloads an app, or makes an e-commerce purchase; Facebook’s SDK, which is embedded in the app or e-commerce site, again records the IDFA or notes the referral code that led the user to the site, and charges the advertiser for a successful conversion.

- The details around this conversion, whether it be which creative was used in the ad, what was purchased, how much was spent, etc., are added to the profile of the user who saw the ad.

- Advertisers take out new ads on Facebook asking the company to find users who are similar to users who have purchased from them before (Facebook knows this from past purchases seen by its SDK, or because an advertiser uploads a list of past customers).

- Facebook repeats this process, further refining its understanding of customers, apps, and e-commerce offerings in the process, including the esoteric ways (only discoverable by machine learning) in which they relate to each other.
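The loop described in these steps can be sketched in a few lines of Python. This is a toy model under loud assumptions: `AdPlatform`, its method names, the device and ad identifiers, and the "same purchase category" similarity rule are all hypothetical stand-ins, not Facebook's actual SDK or targeting logic. It shows only how pooled conversion data from one advertiser can surface prospects for another.

```python
from collections import defaultdict


class AdPlatform:
    """Toy model of a shared conversion-data loop (hypothetical, not a real API)."""

    def __init__(self):
        # (device_id, ad_id) -> advertiser that paid for the impression
        self.impressions = {}
        # device_id -> list of conversion records attached to that profile
        self.profiles = defaultdict(list)

    def show_ad(self, device_id, ad_id, advertiser):
        # Step 1: record the device identifier alongside the ad that was shown.
        self.impressions[(device_id, ad_id)] = advertiser

    def record_conversion(self, device_id, ad_id, category, amount):
        # Steps 2-3: the embedded SDK reports a conversion; if it matches a
        # recorded impression, attach the details to the user's profile.
        advertiser = self.impressions.get((device_id, ad_id))
        if advertiser is None:
            return False  # nothing to attribute the conversion to
        self.profiles[device_id].append(
            {"advertiser": advertiser, "category": category, "amount": amount}
        )
        return True

    def lookalike_audience(self, advertiser):
        # Step 4: find users who resemble this advertiser's past buyers.
        # Assumption: "resemble" means "bought in the same category from anyone".
        seed = {
            device
            for device, records in self.profiles.items()
            if any(r["advertiser"] == advertiser for r in records)
        }
        seed_categories = {
            r["category"] for device in seed for r in self.profiles[device]
        }
        return {
            device
            for device, records in self.profiles.items()
            if device not in seed
            and any(r["category"] in seed_categories for r in records)
        }


platform = AdPlatform()
platform.show_ad("device-a", "ad-1", "snowboard-shop")
platform.record_conversion("device-a", "ad-1", "winter-sports", 300.0)
platform.show_ad("device-b", "ad-2", "goggle-shop")
platform.record_conversion("device-b", "ad-2", "winter-sports", 80.0)

# The snowboard shop never saw device-b, but the pooled data surfaces it.
audience = platform.lookalike_audience("snowboard-shop")
```

Everything in the sketch hinges on the device identifier being available at both the impression and the conversion, since that is what joins the two records.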

The critical thing to understand about this process is that no one app or e-commerce seller stands alone; everyone has collectively deputized Facebook to hold all of the pertinent user data and to figure out how all of the pieces fit together in a way that lets each individual app maker or e-commerce retailer acquire new customers for a price less than what that customer is worth to them in lifetime value.

This, by extension, means that Shopify doesn’t stand alone either: the company is even more dependent on Facebook to drive e-commerce than Apple ever was to drive app installs.[1] That’s why it’s not a surprise that Facebook’s recent plunge in value was preceded (and then followed) by Shopify’s own:

Part of Shopify’s decline is likely related to the fact that it is another pandemic stock: the sort of growth the company saw while customers were stuck at home with nothing to do other than shop online couldn’t go on forever. Moreover, the company announced big increases in spending (more on this in a moment). However, the major headwind the company shares with Facebook is Apple.

ATT’s Impact

I have been writing regularly about Apple’s App Tracking Transparency (ATT) initiative since it was announced two years ago, so I won’t belabor the point; the key thing to understand is that ATT broke the Facebook advertising collective. On the app install side this was done by technical means: Apple made the IDFA an opt-in behind a scary warning about tracking, which most users declined.

The e-commerce side is more interesting: while Apple can’t technically limit what Facebook collects via its Pixel on a retailer’s website, ATT bans said broad collection all the same. To that end Facebook originally announced plans to not show the ATT prompt and abandon the IDFA; a few months later the company did an about-face announcing that it would indeed show the ATT prompt, and also limit what it collected in its in-app browser via the Facebook Pixel.

It’s unclear what happened to change Facebook’s mind; had they continued on their original path then their app advertising business would have suffered from a loss of data, but the e-commerce advertising would have been relatively unaffected (the loss of IDFA-related app install data would have decreased the amount of data available for that machine learning-driven targeting). What seems likely — and to be clear, this is pure speculation — is that Apple threatened to kick Facebook and its apps out of the App Store if it didn’t abide by ATT’s policies, even the parts that were technically unenforceable.

Regardless, the net impact is that it was suddenly impossible for Facebook to tie together all of the various pieces of that virtuous cycle I described above deterministically. Ads were hard to tie to conversions, conversions were hard to tie to users, which meant that users and advertisers were hard to tie to each other, resulting in less relevant ads for the former that cost more money for the latter.[2] The monetary impact is massive: Facebook forecast a $10 billion hit to 2022 revenue, and as noted, its market value has been cut by a third.
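To make the "cost more money" point concrete, here is a minimal back-of-the-envelope sketch. The function name and every number in it are illustrative assumptions, not figures from Facebook or any advertiser. It holds the price per thousand impressions (CPM) fixed and shows only how a lower conversion rate, caused by worse targeting, raises the spend needed per conversion.

```python
def cost_per_conversion(cpm_dollars, conversion_rate):
    """Spend needed per conversion, given a CPM and a per-impression conversion rate."""
    if conversion_rate <= 0:
        raise ValueError("conversion_rate must be positive")
    impressions_needed = 1 / conversion_rate  # average impressions per conversion
    return impressions_needed * cpm_dollars / 1000


# Hypothetical figures: a $10 CPM, with targeting that converts
# 0.2% of impressions before ATT and 0.1% after.
before = cost_per_conversion(cpm_dollars=10, conversion_rate=0.002)
after = cost_per_conversion(cpm_dollars=10, conversion_rate=0.001)
```

Under these assumed numbers the cost per conversion doubles, from roughly $5 to roughly $10. Because advertisers now need more impressions for the same outcome, demand for inventory rises and CPMs would likely rise too, so a fixed-CPM calculation understates the effect.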

Note, however, that ATT didn’t hurt all advertisers: companies like Google and Amazon are doing great; I explained why in Digital Advertising in 2022:

Amazon’s advertising business has three big advantages relative to Facebook’s.

- Search advertising is the best and most profitable form of advertising. This goes back to the point I made above: the more certain you are that you are showing advertising to a receptive customer, the more advertisers are willing to bid for that ad slot, and text in a search box will always be more accurate than the best targeting.

- Amazon faces no data restrictions. That noted, Amazon also has data on its users, and it is free to collect as much of it as it likes, and leverage it however it wishes when it comes to selling ads. This is because all of Amazon’s data collection, ad targeting, and conversion happen on the same platform — Amazon.com, or the Amazon app. ATT only restricts third party data sharing, which means it doesn’t affect Amazon at all.

- Amazon benefits from ATT spillover. That is not to say that ATT didn’t have an effect on Amazon: I noted above that Snap’s business did better than expected in part because its business wasn’t dominated by direct advertising to the extent that Facebook’s was, and that more advertising money flowed into other types of advertising. This almost certainly made a difference for Amazon as well: one of the most affected areas of Facebook advertising was e-commerce; if you are an e-commerce seller whose Shopify store powered-by Facebook ads was suddenly under-performing thanks to ATT, then the natural response is to shift products and advertising spend to Amazon.

All of these advantages will persist: search advertising will always be effective, and Amazon can always leverage data, and while some degree of ATT-related pullback was likely due to both uncertainty and the fact that Facebook hasn’t built back its advertising stack for a post-ATT world, the fact that said future stack will never be quite as good as the old one means that there is more e-commerce share to be grabbed than there might have been otherwise.

This last point is not set in stone: Shopify is already making major investments to compete with Amazon; it has the opportunity to do even more.

The Shopify Fulfillment Network

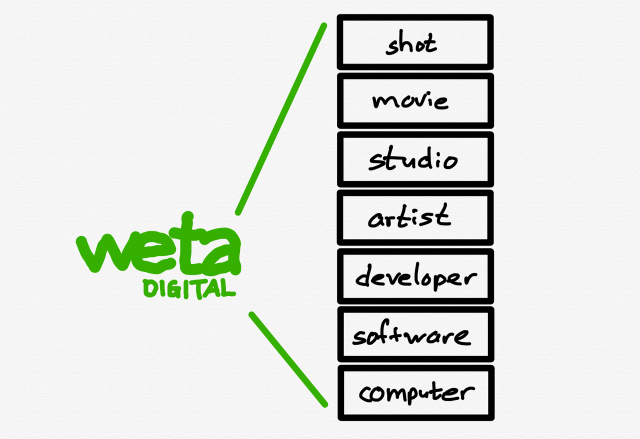

In 2019 I wrote about Shopify and its orthogonal competition with Amazon in Shopify and the Power of Platforms:

The difference is that Shopify is a platform: instead of interfacing with customers directly, 820,000 3rd-party merchants sit on top of Shopify and are responsible for acquiring all of those customers on their own.

[…]

This is how Shopify can both in the long run be the biggest competitor to Amazon even as it is a company that Amazon can’t compete with: Amazon is pursuing customers and bringing suppliers and merchants onto its platform on its own terms; Shopify is giving merchants an opportunity to differentiate themselves while bearing no risk if they fail.

The context of that Article was Shopify’s announcement of yet another platform initiative: the Shopify Fulfillment Network.

From the company’s blog:

Customers want their online purchases fast, with free shipping. It’s now expected, thanks to the recent standard set by the largest companies in the world. Working with third-party logistics companies can be tedious. And finding a partner that won’t obscure your customer data or hide your brand with packaging is a challenge.

This is why we’re building Shopify Fulfillment Network—a geographically dispersed network of fulfillment centers with smart inventory-allocation technology. We use machine learning to predict the best places to store and ship your products, so they can get to your customers as fast as possible.

We’ve negotiated low rates with a growing network of warehouse and logistic providers, and then passed on those savings to you. We support multiple channels, custom packaging and branding, and returns and exchanges. And it’s all managed in Shopify.

The first paragraph explains why the Shopify Fulfillment Network was a necessary step for Shopify: Amazon may commoditize suppliers, hiding their brand from website to box, but if its offering is truly superior, suppliers don’t have much choice. That was increasingly the case with regards to fulfillment, particularly for the small-scale sellers that are important to Shopify not necessarily for short-term revenue generation but for long-run upside. Amazon was simply easier for merchants and more reliable for customers.

Notice, though, that Shopify is not doing everything on their own: there is an entire world of third-party logistics companies (known as “3PLs”) that offer warehousing and shipping services. What Shopify is doing is what platforms do best: act as an interface between two modularized pieces of a value chain.

On one side are all of Shopify’s hundreds of thousands of merchants: interfacing with all of them on an individual basis is not scalable for those 3PL companies; now, though, they only need to interface with Shopify. The same benefit applies in the opposite direction: merchants don’t have the means to negotiate with multiple 3PLs such that their inventory is optimally placed to offer fast and inexpensive delivery to customers; worse, the small-scale sellers I discussed above often can’t even get an audience with these logistics companies. Now, though, Shopify customers need only interface with Shopify.

Over the intervening three years, though, Shopify has moved away from this vision: Shopify Fulfillment Network (SFN) is not going to be a platform like the Shopify App Store but rather an integrated part of Shopify’s core offering like Shop Pay. President Harley Finkelstein explained on the company’s recent earnings call:

We are consolidating our network to larger facilities. We’ll operate more of them ourselves, and we’ll unify the warehouse management software that we’ve been building and testing over the past 18 months. We expect that these changes will enable us to deliver packages in 2 days or less to more than 90% of the U.S. population, while minimizing the inventory investment for SFN merchants. While Amy will go into more detail as to what our evolved vision looks like from a financial perspective, I can tell you, from a merchant’s perspective, Shopify Fulfillment can be life-changing for their businesses. We hear from merchants that fulfillment is only something you think about when it isn’t working well, and they are thrilled that they now never have to think about it. The stockouts and pre-orders that took the shine off strong demand for the new releases, largely became, I think, in the past, with Shopify Fulfillment. And just recently, I heard from a merchant who tells me that he sleeps even better because Shopify Fulfillment just works. Comments like these fuel our ambition, and we’ll continue to explore opportunities to give merchants more visibility and control over their most important assets.

Building and managing warehouses itself is a major commitment: Shopify is going to spend a billion dollars in capital expenditures in 2023 and 2024 building out the Shopify Fulfillment Network, and it seems safe to assume that that spending will only increase over time. I think, though, that this makes sense: Shopify learned from Shop Pay that it can decrease complexity for merchants and deliver a better experience for customers by doing essential functionality itself, and those same needs exist in logistics as well, particularly given Amazon’s massive investment in its own integrated operations.

Keep in mind, though, logistics isn’t the only advantage that Amazon has.

Shopify Advertising Services

Remember the fundamental challenge that ATT presents to Facebook: the company can no longer pool the conversion and targeting data of all of its advertisers such that the sum of effectiveness vastly exceeds what any one of those advertisers could accomplish on their own. The response of the gaming market has been a wave of consolidation to better pool and leverage data. Amazon, as noted, is well ahead of the curve here: because the company’s third-party merchant ecosystem lives within the Amazon.com website and app, Amazon has full knowledge of conversions and the ability to target consumers without any interference from Apple.

Shopify is halfway there: a massive number of e-commerce retailers are on Shopify, but today Shopify mostly treats them all as individual entities, having left the pooling of data for advertising to Facebook. Now that Facebook is handicapped by Apple, Shopify should step up to provide substitute functionality and build its own advertising network.

This advertising network, though, would look a bit different than what you might expect. First, Shopify doesn’t have any major customer-facing properties to display ads; it could potentially build some cross-shop advertising, but that doesn’t seem ideal for either merchants or customers. The reality is that Shopify merchants still need to find customers on other sites like social networks; the challenge is doing so without knowing who is actually seeing the ads.

Here Shopify’s ability to act on behalf of the entire Shopify network provides an opening: instead of being an advertising seller at scale, like Facebook, Shopify the company would become an advertising buyer at scale. Armed with its perfect knowledge of conversions it could run probabilistically-targeted campaigns that are much more precise than anyone else’s, using every possible parameter available to advertisers on Facebook or anywhere else, and over time build sophisticated cohorts that map to certain types of products and purchase patterns. No single Shopify merchant could do this on their own with a similar level of sophistication, which Facebook indirectly admitted on its recent earnings call; COO Sheryl Sandberg said:

On measurement, there were 2 key areas within measurement, which were impacted as a result of Apple’s iOS changes. And I talked about this on the call last quarter as you referenced. The first is the underreporting gap. And what’s happening here is that advertisers worry they’re not getting the ROI they’re actually getting. On this part, we’ve made real progress on that underreporting gap since last quarter, and we believe we’ll continue to make more progress in the years ahead. I do want to caution that it’s easier to address this with large campaigns and harder with small campaigns, which means that part will take longer, and it also means that Apple’s changes continue to hurt small businesses more.

Sandberg’s comment was primarily about the sheer amount of data produced by larger campaigns, but the same principle applies to the sheer number of campaigns as well: any one advertiser is, thanks to ATT, limited in the data points they can get from Facebook, making it more difficult to run multiple campaigns to better understand what works and what doesn’t. However, Shopify could in theory run campaigns for each of its individual merchants and collate the data on the back-end; this is the inverse of Amazon’s advantage of being one website, because in this case Shopify benefits from having a hand in such a huge number of them.
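A rough statistical sketch shows why pooling matters. It treats every impression as an independent trial with the same underlying conversion rate, which is a strong simplifying assumption; the merchant count and campaign sizes are hypothetical, and nothing here reflects how Shopify or Facebook actually measure campaigns.

```python
import math


def conversion_rate_std_error(conversions, impressions):
    """Standard error of an observed conversion rate (binomial approximation)."""
    rate = conversions / impressions
    return math.sqrt(rate * (1 - rate) / impressions)


# Hypothetical: one merchant sees 20 conversions from 2,000 impressions.
single = conversion_rate_std_error(conversions=20, impressions=2_000)

# Hypothetical: 500 comparable merchants, with their data collated on the back-end.
pooled = conversion_rate_std_error(conversions=20 * 500, impressions=2_000 * 500)

# How much tighter the pooled estimate is than a single merchant's.
improvement = single / pooled
```

With the same observed rate, the uncertainty shrinks with the square root of the sample size, so pooling 500 merchants' worth of data gives an estimate about 22 times tighter than any one of them could get alone. Real merchants differ from one another, so the true gain would be smaller than this idealized figure.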

I suspect the response of many close Shopify watchers is that such an initiative is not in the company’s DNA; that, though, is why the evolution of the Shopify Fulfillment Network is so notable: building and operating warehouses wasn’t really in the company’s DNA either, but it is the right thing to do if the company is going to continue to power The Anti-Amazon Alliance. The same principle applies to this theoretical ad network, particularly given that it is Amazon who is a big winner from Apple’s changes.

What is interesting is that it appears that Shopify is already flirting with this idea:[3] last summer the company quietly introduced the concept of Shopify Audiences during an invite-only presentation; Tanay Jaipuria fleshed out the concept on Substack:

Shopify Audiences is a data exchange network, which uses aggregated conversion data (i.e., data around which people bought a merchant’s product) across all opted-in merchants on Shopify to generate a custom audience for a given merchant’s product. This audience is essentially a set of people Shopify believes are likely to be interested in your product given the data around all transactions that have taken place across all opted-in merchants on Shopify.

Merchants can then use these audiences when advertising on FB, Snap, Twitter, and other ad platforms either as custom audiences or lookalike audiences which should result in higher-performing ads and lower cost per conversion to acquire customers/sales.

This is a big step, and is very valuable for Facebook advertising in particular; however, it doesn’t address the Apple issue, because ATT bans custom and lookalike audiences from external data sources. That means that Data Exchange can’t be used in any campaign targeting iOS users. That is why Shopify Audiences is only the first step: to make this work Shopify Audiences needs to become Shopify Advertising Services, where Shopify doesn’t just collect the targets but buys the ads to target them as well, in a way no one else in the world can.

The Conservation of Attractive Profits

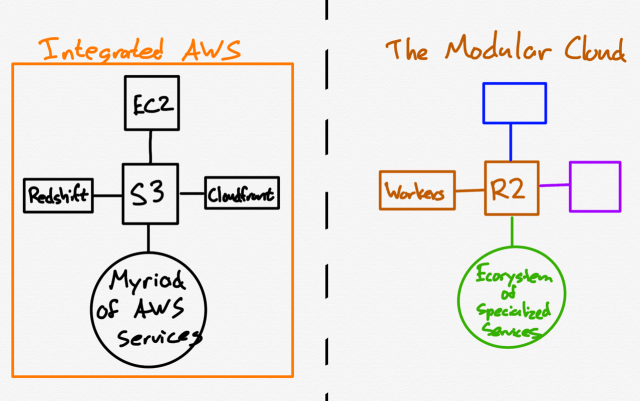

Shopify Advertising Services, Shopify Fulfillment Network, and Shop Pay would, without question, result in a very different looking company than the one I sketched out at the beginning of this Article:

This sort of monolith, though, makes sense not only because of the specifics of what is happening in the market, but from a theoretical perspective as well. I wrote another article about Shopify a year ago called Market-Making on the Internet, highlighting how major consumer-facing platforms were increasingly incorporating Shopify into their sites and apps:

What makes the Shopify platform so fascinating is that over time more and more of the e-commerce it enables happens somewhere other than a Shopify website. Shopify, for example, can help you sell on Amazon, and in what will be an increasingly important channel, Facebook Shops. In the latter case Facebook and Shopify are partnering to create a fully-integrated market: Facebook’s userbase and advertising tools on one side, and Shopify’s e-commerce management and seller base on the other. The broader takeaway, though, is that Shopify’s real value proposition is working across markets, not creating an exclusive one.

Facebook’s motivations are clear: conversions in Facebook Shops can be tracked in a way conversions on websites no longer can be, which will result in more effective advertising; it is to Shopify’s credit that they are seen as such an important and credible partner that Facebook is going as far as incorporating Shop Pay as well. Even so, this is very much an example of Facebook integrating and Shopify, as it must, modularizing to accommodate them. That is a recipe for long run commoditization.

That is why it is a good thing that Shopify is integrating elsewhere in its business: profits in a value chain follow integration, and the more that Shopify is intertwined with the biggest players the more it needs to find other ways to differentiate. Shop Pay is already a massive win, and fulfillment has the chance to be another one; advertising shouldn’t be far behind.

I wrote a follow-up to this Article in this Daily Update.

[1] While you can make purchases from brands in the Shop Pay app, it’s an insignificant channel that isn’t at all comparable to Apple’s own direct route to customers via the App Store. ↩

[2] Facebook’s advertising is sold on an auction basis, and advertisers often bid against desired outcomes, like conversions; the more difficult it is to target users the more users there are who need to be shown an ad, which increases demand for inventory, increasing prices. ↩

[3] Thanks to Eric Seufert for tipping me off to this. ↩