$ time cmakebuild.sh --target deqp-vk
[6/6] Linking CXX executable external/vulkancts/modules/vulkan/deqp-vk

real 0m25.137s
user 0m22.280s
sys 0m3.440s

Debugging shaders in Vulkan using printf

Debugging programs using printf statements is not a technique that everybody appreciates. However, it can be quite useful and sometimes necessary depending on the situation. My past work on air traffic control software involved using several forms of printf debugging many times. The distributed and time-sensitive nature of the system being studied made it inconvenient or simply impossible to reproduce some issues and situations if one of the processes was stalled while it was being debugged.

In the context of Vulkan and graphics in general, printf debugging can be useful to see what shader programs are doing, but some people may not be aware it’s possible to “print” values from shaders. In Vulkan, shader programs are normally created in a high level language like GLSL or HLSL and then compiled to SPIR-V, which is then passed down to the driver and compiled to the GPU’s native instruction set. That final binary, many times outside the control of user applications, runs in a quite closed and highly parallel environment without many options to observe what’s happening and without text input and output facilities. Fortunately, tools like glslang can generate some debug information when compiling shaders to SPIR-V and other tools like Nsight can use that information to let you debug shaders being run.

Still, being able to print the values of different expressions inside a shader can be an easy way to debug issues. With the arrival of Ray Tracing, this is even more useful than before. In ray tracing pipelines, the shaders being executed and resources being used are chosen based on the scene geometry, the origin and the direction of the ray being traced. printf debugging can let you see where you are and what you’re using. So how do you print values from shaders?

Vulkan’s debug printf is implemented as part of the Validation Layers and the general procedure is well documented. If you were to implement this kind of mechanism yourself, you’d likely use a storage buffer to save the different values you want to print while shader invocations are running and, later, you’d go over the contents of that buffer and print the associated message with each value or values. And that is, essentially, what debug printf does but in a very convenient and automated way so that you don’t have to deal with the gory details and corner cases.
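The do-it-yourself mechanism described above can be sketched on the CPU. This is only a toy model under stated assumptions: the `PrintfBuffer` class, the `FORMATS` table and the record layout (length, format id, values) are made up for illustration and are not the layout the Validation Layers actually use. On a real GPU the writes would also need atomic operations to reserve space in the buffer.

```python
from dataclasses import dataclass, field

# Hypothetical format-string table, indexed by a made-up format id.
FORMATS = {0: "invocation %d: x = %f", 1: "hit instance %d, geometry %d"}


@dataclass
class PrintfBuffer:
    """Toy stand-in for the storage buffer that shader invocations write to."""
    capacity: int
    words: list = field(default_factory=list)
    dropped: int = 0

    def write(self, format_id, *values):
        # One record is: total record length, format id, then the values.
        record = [len(values) + 2, format_id, *values]
        if len(self.words) + len(record) > self.capacity:
            self.dropped += 1  # buffer full: this message is lost
            return
        self.words.extend(record)


def decode(buffer):
    """Host side: walk the buffer after execution and format each record."""
    messages, pos = [], 0
    while pos < len(buffer.words):
        length, format_id = buffer.words[pos], buffer.words[pos + 1]
        values = tuple(buffer.words[pos + 2 : pos + length])
        messages.append(FORMATS[format_id] % values)
        pos += length
    return messages
```

The fixed capacity is one of the corner cases mentioned above: once the buffer fills up, further messages have to be dropped, which is why the toy model counts them.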

In a GLSL shader, simply:

- Enable the GL_EXT_debug_printf extension.

- Sprinkle your code with debugPrintfEXT() calls.

- Use the Vulkan Configurator that’s part of the SDK or manually edit vk_layer_settings.txt for your app enabling VK_VALIDATION_FEATURE_ENABLE_DEBUG_PRINTF_EXT.

- Normally, disable other validation features so as not to get too much output.

- Take a look at the debug report or debug utils info messages containing printf results, or set printf_to_stdout to true so printf messages are sent to stdout directly.

You can find an example shader in the validation layers test code. The debug printf feature has helped me a lot in the past, so I wanted to make sure it’s widely known and used.

Due to the observer effect, you may end up in situations where your code works correctly when enabling debug printf but incorrectly without it. This may be due to multiple reasons but one of the main ones I’ve encountered is improper synchronization. When debug printf is used, the layers use additional synchronization primitives to sync the contents of auxiliary buffers, which can mask synchronization bugs present in the app.

Finally, RenderDoc 1.14, released at the end of May, also supports Vulkan’s shader printf statements and will let you take a look at the print statements produced during a draw call. Furthermore, the print statements don’t have to be present in the original shader. You can also use the shader edit system to insert them on the fly and use them to debug the results of a particular shader invocation. Isn’t that awesome? Great work by Baldur Karlsson as always.

PS: As a happy coincidence, just yesterday LunarG published a white paper on Vulkan’s debug printf with additional information on this excellent feature. Be sure to check it out!

Linking deqp-vk much faster thanks to lld

Some days ago my Igalia colleague Adrián Pérez pointed us to mold, a new drop-in replacement for existing Unix linkers created by the original author of LLVM lld. While mold is pretty new and does not aim to be 100% compatible with GNU ld, GNU gold or LLVM lld (at least as of the time I’m writing this), I noticed the benchmark table in its README file also painted a pretty picture about the performance of lld, if inferior to that of mold.

In my job at Igalia I work most of the time on VK-GL-CTS, Vulkan and OpenGL’s Conformance Test Suite, which contains thousands of tests for OpenGL and Vulkan. These tests are provided by different executable files and the Vulkan tests on which I’m focused are contained in a binary called deqp-vk. When built with debug information, deqp-vk can be quite large. A recent build, for example, takes up 369 MB on my drive. But the worst part is that linking the binary typically takes around 25 seconds on my work laptop.

I had never paid much attention to the linker before, always relying on the default choice in Fedora or any other distribution. However, I decided to install lld, which has an official package, and gave it a try. You Will Not Believe What Happened Next.

$ time cmakebuild.sh --target deqp-vk
[6/6] Linking CXX executable external/vulkancts/modules/vulkan/deqp-vk

real 0m2.622s
user 0m5.456s
sys 0m1.764s

lld is capable of correctly linking deqp-vk in 1/10th of the time the default linker (GNU ld) takes to do the same job. If you want to try lld yourself you have several options. Ideally, you’d be able to run update-alternatives --set ld /usr/bin/lld as root but that option is notably not available in Fedora. There was a proposal to make that work but it never materialized, so it cannot be made the default system-wide linker.

However, depending on the build system used by a particular project, there should be a way to make it use lld instead of /usr/bin/ld. For example, VK-GL-CTS uses CMake, which invokes the compiler to link executable files, instead of calling the linker directly, which would be unusual. Both GCC and Clang can be passed -fuse-ld=lld as a command line option to use lld instead of the default linker. That flag should be added to CMake’s CMAKE_EXE_LINKER_FLAGS variable, either by reconfiguring an existing project with, for example, ccmake, or by adding the flag to the LDFLAGS environment variable before running CMake on a build directory for the first time.
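As a rough sketch of those two options, the helpers below only build the command line and environment; they don't run anything. The function names and the directory arguments are hypothetical, and `-S`/`-B` assume a reasonably recent CMake.

```python
import os


def cmake_configure_command(source_dir, build_dir, linker="lld"):
    """Return the argv for a CMake configure step that links executables with `linker`."""
    return [
        "cmake",
        "-S", source_dir,
        "-B", build_dir,
        f"-DCMAKE_EXE_LINKER_FLAGS=-fuse-ld={linker}",
    ]


def environment_with_ldflags(linker="lld", base_env=None):
    """Alternative: pass the flag through LDFLAGS for a fresh build directory."""
    env = dict(os.environ if base_env is None else base_env)
    flag = f"-fuse-ld={linker}"
    # Append to any existing LDFLAGS instead of overwriting them.
    env["LDFLAGS"] = f"{env['LDFLAGS']} {flag}" if env.get("LDFLAGS") else flag
    return env
```

Either result can be handed to `subprocess.run`, for example `subprocess.run(cmake_configure_command("src", "build"), check=True)`.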

I’m looking forward to using the mold linker and its multithreading capabilities in the future. In the meantime, I’m very happy to have checked out lld. It’s not often that a simple tooling change like this one gives me such a clear advantage.

Letting other local users access your PipeWire PulseAudio instance

Some time ago I blogged about how to let other users connect to your PulseAudio instance. This can be quite useful to, for example, run your usual web browser as a separate user while allowing it to properly play online videos. With the arrival of Fedora 34, PulseAudio has been replaced by PipeWire but, luckily, PipeWire aims to be a drop-in replacement for PulseAudio. The question is: can it also listen on a TCP port like the PulseAudio daemon does and can we make other users connect to it? And the answer is yes.

The first step is configuring PipeWire to launch the PulseAudio module using a TCP port in addition to the normal Unix socket. For that, you’ll likely find a sample PipeWire configuration file in /usr/share/pipewire/pipewire-pulse.conf that you can copy to the $HOME/.config/pipewire directory for your normal user. Once copied, edit that file and find the PulseAudio compatibility module configuration section. In it, add the TCP port to the list of server addresses. The section text could look like this:

{ name = libpipewire-module-protocol-pulse
    args = {
        # the addresses this server listens on
        server.address = [
            "unix:native"
            "tcp:127.0.0.1:4713"
        ]
        #pulse.min.req = 256/48000 # 5ms
        #pulse.default.req = 960/48000 # 20 milliseconds
        #pulse.min.frag = 256/48000 # 5ms
        #pulse.default.frag = 96000/48000 # 2 seconds
        #pulse.default.tlength = 96000/48000 # 2 seconds
        #pulse.min.quantum = 256/48000 # 5ms
    }
}

The line you have to add is the one containing tcp:127.0.0.1:4713. Port 4713 is the one normally used by PulseAudio.

Finally, when launching an application as another user you need to tell it to connect to that PulseAudio server. The easiest way is using an environment variable called PULSE_SERVER.

PULSE_SERVER=tcp:127.0.0.1:4713
export PULSE_SERVER
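If you launch the application from a script, a small sketch like the following can check that the TCP port is accepting connections and prepare the environment. The function names are hypothetical, the parser only handles the simple `tcp:HOST:PORT` form used here, and a successful connection only shows that something is listening on the port, not that it speaks the PulseAudio protocol.

```python
import os
import socket


def parse_pulse_tcp_address(address):
    """Split a 'tcp:HOST:PORT' server string into (host, port)."""
    scheme, host, port = address.split(":")
    if scheme != "tcp":
        raise ValueError(f"not a TCP server address: {address!r}")
    return host, int(port)


def pulse_server_reachable(address, timeout=1.0):
    """Return True if something accepts TCP connections at the given address."""
    host, port = parse_pulse_tcp_address(address)
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def environment_for_client(address="tcp:127.0.0.1:4713", base_env=None):
    """Copy an environment and point PulseAudio clients at the TCP server."""
    env = dict(os.environ if base_env is None else base_env)
    env["PULSE_SERVER"] = address
    return env
```

The returned dictionary can be passed as the `env` argument of `subprocess.run` when starting the application.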

VK_EXT_multi_draw released for Vulkan

The Khronos Group released today a new version of the Vulkan specification that includes the VK_EXT_multi_draw extension. This new extension has been championed by Mike Blumenkrantz, contracted by Valve to work on Zink, an OpenGL implementation that’s part of Mesa and runs on top of Vulkan. Mike has been working very hard to make OpenGL-on-Vulkan performant and better, and came up with this extension to close an existing gap between the two APIs. As part of the ongoing collaboration between Igalia and Valve, I had the chance to participate in the release process by reviewing the specification text in depth, providing feedback and fixes, and writing a set of CTS tests to check conformance for drivers implementing the extension. As you can see in the contributors list, VK_EXT_multi_draw had input and feedback from other vendors too. Special mention to Jason Ekstrand from Intel, who provided an initial review of the text, and Piers Daniell from NVIDIA, who was also involved since the early stages.

Thanks to VK_EXT_multi_draw, Vulkan will have equivalents to the glMultiDrawArrays and glMultiDrawElements functions from OpenGL. They’re called vkCmdDrawMultiEXT and vkCmdDrawMultiIndexedEXT. These two new functions allow recording a batch of draw commands in a command buffer using a single call, and they can be used in situations where an application would be recording a high number of draws without changing state. Although Vulkan already had mechanisms that allowed applications to record batches of draw commands in the form of indirect draws, these need the array of draw parameters to reside in a GPU-accessible buffer. VK_EXT_multi_draw, on the other hand, lets applications provide arrays of draw parameters using CPU memory.

vkCmdDrawMultiEXT is essentially equivalent to calling vkCmdDraw multiple times in a row, and vkCmdDrawMultiIndexedEXT does the same for vkCmdDrawIndexed. To improve application performance and reduce CPU overhead, Vulkan drivers are allowed and encouraged to omit checks for API function arguments provided by applications (these correctness checks are provided by the Vulkan Validation Layers mainly during application development), and thanks to mechanisms like primary and secondary command buffers, Vulkan makes it possible to prepare sequences of commands for the GPU to execute using multiple threads and CPU cores. In this situation, you may be wondering how much of an improvement the new functions provide apart from saving a few microseconds processing some function calls. In other words, what’s the practical difference between calling vkCmdDraw a thousand times and batching a thousand draws using vkCmdDrawMultiEXT?

The answer is that most of the overhead of recording a draw command doesn’t come from having to call a function, but from the checks the implementation has to run when recording the command. These checks may not be related to correctness, but to additional actions and options that may need to be taken depending on the state of the command buffer at the moment the draw command is recorded. For example, see the calls to radv_before_draw when RADV processes a draw command (note: RADV is Mesa’s super nice free software Vulkan driver for AMD cards). These checks only need to run once when using the new functions. In benchmark-like scenarios using real drivers, Mike has been able to verify that, while the overhead varies per driver and some of them are lightweight and have minimal overhead, some mainstream drivers can double their draw call processing rate when using VK_EXT_multi_draw.
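To make the difference concrete, here is a toy model of command recording. It is not how RADV or any other driver is structured: `ToyCommandBuffer` and its `_before_draw` counter are invented for illustration. The argument order of `draw` follows vkCmdDraw, and each entry in `vertex_info` mimics a VkMultiDrawInfoEXT with its firstVertex and vertexCount members.

```python
class ToyCommandBuffer:
    """Toy model of command recording; not how any real driver is structured."""

    def __init__(self):
        self.state_checks = 0
        self.commands = []

    def _before_draw(self):
        # Stand-in for per-command work such as flushing dirty state.
        self.state_checks += 1

    def draw(self, vertex_count, instance_count, first_vertex, first_instance):
        # Same argument order as vkCmdDraw, minus the command buffer handle.
        self._before_draw()
        self.commands.append((vertex_count, instance_count, first_vertex, first_instance))

    def draw_multi(self, vertex_info, instance_count, first_instance):
        # vertex_info is a list of (first_vertex, vertex_count) pairs.
        self._before_draw()  # paid once for the whole batch
        for first_vertex, vertex_count in vertex_info:
            self.commands.append((vertex_count, instance_count, first_vertex, first_instance))
```

Recording a thousand draws one by one and recording them as a single batch produce the same command list in this model, but the first approach runs the state check a thousand times and the second runs it once.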

Mike has work-in-progress implementations for Mesa’s ANV and RADV drivers (the Vulkan drivers for Intel and AMD GPUs, respectively) which pass conformance and will hopefully land soon in Mesa’s main branch, and more drivers are expected to ship support for the extension in the near future.

Vulkan Ray Tracing Resources and Overview

As you may know, I’ve been working on VK-GL-CTS for some time now. VK-GL-CTS is the Conformance Test Suite for Vulkan and OpenGL, a large collection of tests used to verify that implementations of the Vulkan and OpenGL APIs work as intended by the specification. My work has been mainly focused on the Vulkan side of things as part of Igalia's ongoing collaboration with Valve.

Last year, Khronos released the official specification of the Vulkan ray tracing extensions and I had the chance to participate in the final stages of the process by improving test coverage and fixing bugs in existing CTS tests, which is work that continues to this day mixed with other types of tasks in my backlog.

As part of this effort I learned many bits of the new Vulkan Ray Tracing API and even provided some very minor feedback about the spec, which resulted in me being listed as a contributor to the VK_KHR_acceleration_structure extension.

Now that the waters are a bit more calm, I wanted to give you a list of resources and a small overview of the main concepts behind the Vulkan version of ray tracing.

General Overview

There are a few basic resources that can help you get acquainted with the new APIs.

- The official Khronos blog published an overview of the ray tracing extensions that explains some of the basic concepts like acceleration structures, ray tracing pipelines (and what their different shader stages do) and ray queries.

- Intel’s Jason Ekstrand gave an excellent talk about ray tracing in Vulkan at XDC 2020. I highly recommend watching it if you’re interested.

- For those wanting to get their hands on some code, the Khronos official Vulkan Samples repository includes a basic ray tracing sample.

- The official Vulkan specification text (warning: very large HTML document), while intimidating, is actually a good source to learn many new parts of the API. If you’re already familiar with Vulkan, the different sections about ray tracing and ray tracing pipelines are worth reading.

Acceleration Structures

The basic idea of ray tracing, as a tool, is that you must be able to choose an arbitrary point in space as the ray origin and a direction vector, and ask your implementation if that ray intersects anything along the way given a minimum and maximum distance.

In a modern computer or console game the number of triangles present in a scene is huge, so you can imagine detecting intersections between them and your ray can be very expensive. The implementation typically needs to organize the scene geometry in a hierarchical tree-like structure that can be traversed more efficiently by discarding large amounts of geometry with some simple tests. That’s what an Acceleration Structure is.
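One common "simple test" of this kind is checking the ray against an axis-aligned bounding box that encloses a whole group of triangles. The sketch below assumes that choice purely for illustration; real implementations are free to use whatever structure and tests suit their hardware. The function name and argument layout are made up.

```python
def ray_hits_box(origin, direction, t_min, t_max, box_min, box_max):
    """Slab test: does the ray segment [t_min, t_max] overlap the axis-aligned box?"""
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            # Ray is parallel to this pair of planes: it must start between them.
            if o < lo or o > hi:
                return False
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        # Shrink the valid interval to the part that lies inside this slab.
        t_min, t_max = max(t_min, t0), min(t_max, t1)
        if t_min > t_max:
            return False
    return True
```

If the box is missed, every triangle inside it can be skipped without testing any of them individually, and that is where the savings come from.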

Fortunately, you don’t have to organize the scene geometry yourself. Implementations are free to choose the best and most suitable acceleration structure format according to the underlying hardware. They will build this acceleration structure for you and give you an opaque handle to it that you can use in your app with the rest of the API. You’re only required to provide the long list of geometries making up your scene.

You may be thinking, and you’d be right, that building the acceleration structure must be a complex and costly process itself, and it is. For this reason, you must try to avoid rebuilding them completely all the time, in every frame of the app. This is why acceleration structures are divided into two types: bottom level and top level.

Bottom level acceleration structures (BLAS) contain lists of geometries and typically represent whole models in your scene: a building, a tree, an object, etc.

Top level acceleration structures (TLAS) contain lists of “pointers” to bottom level acceleration structures, together with a transformation matrix for each pointer.

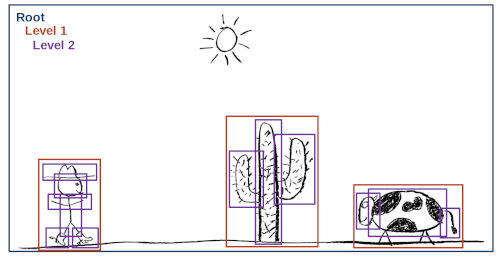

In the diagram above, taken from Jason Ekstrand’s XDC 2020 talk[1], you can see the blue square representing the TLAS, the red squares representing BLAS and the purple squares representing geometries.

The whole idea behind this is that you may be able to build the bottom level acceleration structure for each model only once as long as the model itself does not change, and you will include this model in your scene one or more times. Each time, it will have an associated transformation matrix that will allow you to translate, rotate or scale the model without rebuilding it. So, in each frame, you may only have to rebuild the top level acceleration structure while keeping the bottom level ones intact. Other tricks you can use include rebuilding the top level acceleration structure at a reduced frame rate compared to the app, or using a simplified version of the world geometry when tracing rays instead of the more detailed model used when rendering the scene normally.
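The split can be illustrated with a small CPU-side model. Everything here is a simplification: the `Blas` and `Instance` classes, the `builds` counter and the two build functions are hypothetical, the "acceleration structure" is reduced to a bounding box, and the per-instance transform is a plain translation instead of a full transformation matrix.

```python
from dataclasses import dataclass


@dataclass
class Blas:
    """Bottom level: geometry bounds for one model, in the model's own coordinates."""
    name: str
    box_min: tuple
    box_max: tuple


@dataclass
class Instance:
    """One TLAS entry: a reference to a BLAS plus a transform (translation only here)."""
    blas: Blas
    translation: tuple


builds = {"blas": 0, "tlas": 0}


def build_blas(name, vertices):
    builds["blas"] += 1  # the expensive step, done once per model
    xs, ys, zs = zip(*vertices)
    return Blas(name, (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs)))


def build_tlas(instances):
    builds["tlas"] += 1  # cheaper: only touches per-instance data
    boxes = []
    for inst in instances:
        lo = tuple(a + t for a, t in zip(inst.blas.box_min, inst.translation))
        hi = tuple(a + t for a, t in zip(inst.blas.box_max, inst.translation))
        boxes.append((inst.blas.name, lo, hi))
    return boxes
```

Placing the same model twice and moving one copy every frame only calls `build_tlas` again; the `Blas` object is shared and never rebuilt.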

Acceleration structures, ray origins and direction vectors typically use world-space coordinates.

Ray Queries

In its most basic form, you can access the ray tracing facilities of the implementation by using ray queries. Before ray tracing, Vulkan already had graphics and compute pipelines. One of the main components of those pipelines are shader programs: application-provided instructions that run on the GPU telling it what to do and, in a graphics pipeline, how to process geometry data (vertex shaders) and calculate the color of each pixel that ends up on the screen (fragment shaders).

When ray queries are supported, you can trace rays from those “classic” shader programs for any purpose. For example, to implement lighting effects in a fragment shader.

Ray Tracing Pipelines

The full power of ray tracing in Vulkan comes in the form of a completely new type of pipeline, the ray tracing pipeline, that complements the existing compute and graphics pipelines.

Most Vulkan ray tracing tutorials, including the Khronos blog post I mentioned before, explain the basics of these pipelines, including the new shader stages (ray generation, intersection, any hit, closest hit, etc) and how they work together. They cover acceleration structure traversal for each ray and how that triggers execution of a particular shader program provided by your app. The image below, taken from the official Vulkan specification[2], contains the typical representation of this traversal process.

The main difference between the traditional graphics pipelines and ray tracing pipelines is the following one. If you’re familiar with the classic graphics pipelines, you know the app decides and has full control over what is being drawn at any moment. Your command stream usually looks like this.

- Begin render pass (I’ll be using this depth buffer to discard overlapping geometry on the screen and the resulting pixels need to be written to this image)

- Bind descriptor sets (I’ll be using these textures and data buffers)

- Bind pipeline (This is what the whole process looks like, including the crucial part of shader programs: let me tell you what to do with each vertex and how to calculate the color of each resulting pixel)

- Draw this

- Draw that

- Bind pipeline (I’ll be using different shader programs for the next draws, thank you)

- Draw some more

- Draw even more

- Bind descriptor sets (The textures and other data will be different from now on)

- Bind pipeline (The shaders will be different too)

- Additional draws

- Final draws (Almost there, buddy)

- End render pass (I’m done)

Each draw command in the command stream instructs the GPU to draw an object and, because the app is recording that command, the app knows what that object is and the appropriate resources that need to be used to draw that object, including textures, data buffers and shader programs. Before recording the draw command, the app can prepare everything in advance and tell the implementation which shaders and resources will be used with the draw command.

In a ray tracing pipeline, the scene geometry is organized in an acceleration structure. When tracing a ray, you don’t know, in advance, which geometry it’s going to intersect. Each geometry may need a particular set of resources and even the shader programs may need to change with each geometry or geometry type.

Shader Binding Table

For this reason, ray tracing APIs need you to create a Shader Binding Table or SBT for short. SBTs represent (potentially) large arrays of shaders organized in shader groups, where each shader group has a handle that sits in a particular position in the array. The implementation will access this table, for example, when the ray hits a particular piece of geometry. The index it will use to access this table or array will depend on several parameters. Some of them come from the ray tracing command call in a ray generation shader, and others come from the index of the geometry and instance data in the acceleration structure.

There’s a formula to calculate that index and, while it’s not very complex, it will determine the way you must organize your shader binding table so it matches your acceleration structure, which can be a bit of a headache if you’re new to the process.
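For hit shaders, the computation described in the Vulkan specification can be written down as follows. The function and parameter names are mine: `sbt_record_offset` and `sbt_record_stride` stand for the values passed to the trace call in the shader, `instance_sbt_offset` for the per-instance offset stored in the top level acceleration structure, and `geometry_index` for the position of the geometry inside its bottom level acceleration structure.

```python
def hit_group_index(instance_sbt_offset, geometry_index, sbt_record_stride, sbt_record_offset):
    """Index of the hit group record used for a given hit."""
    return instance_sbt_offset + geometry_index * sbt_record_stride + sbt_record_offset


def hit_group_address(table_address, table_stride, instance_sbt_offset,
                      geometry_index, sbt_record_stride, sbt_record_offset):
    """Address of that record inside the hit shader binding table."""
    index = hit_group_index(instance_sbt_offset, geometry_index,
                            sbt_record_stride, sbt_record_offset)
    return table_address + table_stride * index
```

As a worked example, assume two ray types (primary rays use offset 0 and shadow rays use offset 1, so the stride is 2) and two instances with two geometries each. The first instance occupies records 0 to 3, so the second one gets an instance offset of 4, and a shadow ray hitting its second geometry uses record 4 + 1 × 2 + 1 = 7.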

I highly recommend taking a look at Will Usher’s Shader Binding Table Tutorial, which includes an interactive SBT builder tool that will let you get an idea of how things work and fit together.

The Shader Binding Table is complemented in Vulkan by a Shader Record Buffer. The concept is that entries in the Shader Binding Table don’t have a fixed size that merely corresponds to the size of a shader group handle identifying what to run when the ray hits that particular piece of geometry. Instead, each table entry can be a bit larger and you can put arbitrary data after each handle. That data block is called the Shader Record Buffer, and can be accessed from shader programs when they run. It may be used, for example, to store indices to resources and other data needed to draw that particular piece of geometry, so the shaders themselves don’t have to be completely unique per geometry and can be reused more easily.
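A minimal sketch of that layout, packing each entry as a handle followed by its data and padding all entries to a common stride. The handle size and alignment below are assumed values; in a real application they come from the device's ray tracing pipeline properties, and the handles themselves are obtained from the implementation rather than invented. `pack_sbt` and `align_up` are hypothetical helper names.

```python
import struct

HANDLE_SIZE = 32       # assumed shader group handle size, in bytes
HANDLE_ALIGNMENT = 32  # assumed shader group handle alignment, in bytes


def align_up(value, alignment):
    return (value + alignment - 1) // alignment * alignment


def pack_sbt(records):
    """records: list of (handle_bytes, data_bytes). Returns (table_bytes, stride)."""
    largest = max(HANDLE_SIZE + len(data) for _, data in records)
    stride = align_up(largest, HANDLE_ALIGNMENT)
    table = bytearray()
    for handle, data in records:
        assert len(handle) == HANDLE_SIZE
        entry = handle + data
        table += entry + bytes(stride - len(entry))  # zero padding up to the stride
    return bytes(table), stride


# Usage: one record carries two hypothetical resource indices, the other carries nothing.
record_data = struct.pack("<II", 3, 7)
table, stride = pack_sbt([(bytes(HANDLE_SIZE), record_data), (bytes(HANDLE_SIZE), b"")])
```

Because every entry shares the same stride, the largest data block determines the size of all entries, which is worth keeping in mind when deciding what to store there.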

Conclusion

As you can see, ray tracing can be more complex than usual but it’s a very powerful tool. I hope the basic explanations and resources I linked above help you get to know it better. Happy hacking!