You may have just now noticed that the GNOME developers documentation website has changed after 15 years. You may also have noticed that it contains drastically less content than it used to. Before you pick up torches and pitchforks, let me give you a short tl;dr of the changes:

- Yes, this is entirely intentional

- Yes, I know that stuff has been moved

- Yes, I know that old URLs don’t work

- Yes, some redirections will be put in place

- No, we can’t go back

So let’s briefly recap the state of the developers documentation website in 2021, for those who weren’t in attendance at my GUADEC 2021 presentation:

- library-web is a Python application, which started as a Summer of Code project in 2006, whose job was to take Autotools release tarballs, explode them, fiddle with their contents, and then publish files on the gnome.org infrastructure.

- library-web relies heavily on Autotools and gtk-doc.

- library-web does a lot of pre-processing of the documentation to rewrite links and CSS in the HTML files it receives.

- library-web is very much a locally sourced, organic, artisanal pile of hacks that revolves heavily around the GNOME infrastructure of around 2007-2009.

- library-web is incredibly hard to test locally, even when running inside a container, and the logging is virtually non-existent.

- library-web is still running on Python 2.

- library-web is entirely unmaintained.

That should cover the infrastructure side of things. Now let’s look at the content.

The developers documentation is divided into four sections:

- a platform overview

- the Human Interface guidelines

- guides and tutorials

- API references

The platform overview is slightly out of date; the design team has been reviewing the HIG and using a new documentation format; the guides and tutorials still cover GTK1 and GTK2 content, how to port GNOME 2 applications to GNOME 3, or how to write a Metacity theme.

This leaves us with the API references, which are a grab bag of miscellaneous things, listed by version number. Outside of the C API documentation, the only other references hosted on developer.gnome.org are for the C++ bindings, which, incidentally, use Doxygen; when they aren’t broken by library-web messing about with the HTML, they have their own franken-style mash-up of gtkmm.org and developer.gnome.org.

Why didn’t I know about this?

If you’re asking this question, allow me to be blunt for a second: the reason you never noticed that the developers documentation website was broken is that you never actually used it for its intended purpose. Most likely, you either just looked in a couple of well-known places and never ventured outside of those, and/or you are a maintainer and never really cared how things worked (or didn’t work) after you uploaded a release tarball somewhere. Like all infrastructure, it was somebody else’s problem.

I completely understand that we’re all volunteers, and that things that work can be ignored because everyone has more important things to think about.

Sadly, things change: we don’t use Autotools (that much) any more, which means release archives no longer contain the generated documentation. As a result, library-web cannot pick up new documentation unless somebody modifies its configuration to look for a separate documentation tarball, which the maintainer has to generate manually and upload to a magic location on the gnome.org file server; this is what has happened for GTK4 and GLib for the past two years.

Projects change the way they lay out the documentation, or gtk-doc changes something, and that causes library-web to stop extracting the right files; you can look at the ATK reference for the past year and a half for an example.

Projects bump up their API, and now the cross-referencing gets broken, like the GTK3 pages linking to GDK2 types.

Finally, projects decide to change how their documentation is generated, which means that library-web has no idea how to extract the HTML files, or how to fiddle with them.

If you’re still using Autotools and gtk-doc, haven’t done an API bump in 15 years, and all you care about is copying a release archive to the gnome.org infrastructure, I’m sure all of this will come as a surprise, and I’m sorry you’re just now being confronted with a completely broken infrastructure. Sadly, the infrastructure was broken for everybody else long before this point.

What did you do?

I tried to make library-web deal with the changes in our infrastructure. I personally built and uploaded multiple versions of the documentation for GLib (three different archives for each release) for a year and a half; I configured library-web to add more “extra tarball” locations for various projects; I tried making library-web understand the new layout of various projects; I even tried making library-web publish the gi-docgen references used by GTK, Pango, and other projects.

Sadly, every change broke something else, and I’m not just talking about the horrors of the code base. As library-web is responsible for determining the structure of the documentation, any change to how the documentation is handled leads to broken URLs, broken links, or broken redirections.

The entire house of cards needed to go.

Which brings us to the plan.

What are you going to do?

Well, the first step has been taken: the new developer.gnome.org website does not use library-web. The content has been refreshed, and more content is on the way.

Again, this leaves the API references. For those, two things need to happen, and both are planned for GNOME 41:

- all the libraries that are part of the GNOME SDK runtime, built by gnome-build-meta, must also build their documentation, which will be published as part of the org.gnome.Sdk.Docs extension; the contents of the extension will also be published online.

- every library that is hosted on gnome.org infrastructure should publish its documentation through its CI pipeline; for that, I’m working on a CI template file and image that should take care of the easy projects, and will act as a model for projects that are more complicated.

I’m happy to help maintainers deal with that, and I’m also happy to open merge requests on various projects.

In the meantime, the old documentation is still available as a static snapshot, and the sysadmins are going to set up some redirections to bridge us from the old platform to the new one; hopefully, we’ll soon be able to redirect to each project’s GitLab pages.

Can we go back, please?

Sadly, since nobody has ever bothered picking up the developers documentation when it was still possible to incrementally fix it, going back to a broken infrastructure isn’t going to help anybody.

We also cannot keep the old developer.gnome.org and add a new one, of course; we’d end up with two websites: an old one that is broken, unmaintained, and linked all over the place, and a new one that nobody knows exists.

The only way is forward, for better or worse.

What about Devhelp?

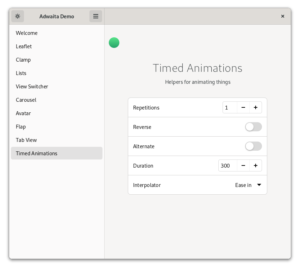

Some of you may have noticed that I picked up the maintenance of Devhelp, and landed a few fixes to ensure that it can read the GTK4 documentation. Besides a visual refresh of the UI, I am also working on making it load the contents of the org.gnome.Sdk.Docs runtime extension, which means it’ll be able to load all the core API references. Ideally, we’re also going to see a port to GTK4 and libadwaita, as soon as WebKitGTK for GTK4 is more widely available.
