
Just a brief post to record some notable papers in my fields of interest that appeared on the arXiv recently.

- “A sharp square function estimate for the cone in $\mathbb{R}^3$”, by Larry Guth, Hong Wang, and Ruixiang Zhang. This paper establishes an optimal (up to epsilon losses) square function estimate for the three-dimensional light cone that was essentially conjectured by Mockenhaupt, Seeger, and Sogge. The estimate has a number of other consequences, including Sogge’s local smoothing conjecture for the wave equation in two spatial dimensions, which in turn implies the (already known) Bochner-Riesz, restriction, and Kakeya conjectures in two dimensions. Interestingly, modern techniques such as polynomial partitioning and decoupling estimates are not used in this argument; instead, the authors mostly rely on an induction on scales argument and Kakeya type estimates. Many previous authors (including myself) were able to get weaker estimates of this type by an induction on scales method, but there were always significant inefficiencies in doing so; in particular, knowing the sharp square function estimate at smaller scales did not imply the sharp square function estimate at the given larger scale. The authors here get around this issue by finding an even stronger estimate that implies the square function estimate, but behaves significantly better with respect to induction on scales.

- “On the Chowla and twin primes conjectures over $\mathbb{F}_q[T]$”, by Will Sawin and Mark Shusterman. This paper resolves a number of well known open conjectures in analytic number theory, such as the Chowla conjecture and the twin prime conjecture (in the strong form conjectured by Hardy and Littlewood), in the case of function fields over a finite field whose order is a prime power $q = p^j$ which is fixed (in contrast to a number of existing results in the “large $q$” limit) but has a large exponent $j$. The techniques here are orthogonal to those used in recent progress towards the Chowla conjecture over the integers (e.g., in this previous paper of mine); the starting point is an algebraic observation that in certain function fields, the Möbius function behaves like a quadratic Dirichlet character along certain arithmetic progressions. In principle, this reduces problems such as Chowla’s conjecture to problems about estimating sums of Dirichlet characters, for which more is known; but the task is still far from trivial.

- “Bounds for sets with no polynomial progressions”, by Sarah Peluse. This paper can be viewed as part of a larger project to obtain quantitative density Ramsey theorems of Szemerédi type. For instance, Gowers famously established a relatively good quantitative bound for Szemerédi’s theorem that all dense subsets of integers contain arbitrarily long arithmetic progressions $a, a+r, \dots, a+(k-1)r$. The corresponding question for polynomial progressions $a+P_1(r), \dots, a+P_k(r)$ is considered more difficult for a number of reasons. One of them is that dilation invariance is lost; a dilation of an arithmetic progression is again an arithmetic progression, but a dilation of a polynomial progression will in general not be a polynomial progression with the same polynomials $P_1, \dots, P_k$. Another issue is that the ranges of the two parameters $a, r$ are now at different scales. Peluse gets around these difficulties in the case when all the polynomials $P_1, \dots, P_k$ have distinct degrees, which is in some sense the opposite case to that considered by Gowers (in particular, thanks to a degree lowering argument that is available in this case, she avoids the need for quantitative inverse theorems for high order Gowers norms; such theorems were recently obtained in this integer setting by Manners, but with bounds that are probably not strong enough for the bounds in Peluse’s results). To resolve the first difficulty one has to make all the estimates rather uniform in the coefficients of the polynomials $P_j$, so that one can still run a density increment argument efficiently. To resolve the second difficulty one needs to find a quantitative concatenation theorem for Gowers uniformity norms. Many of these ideas were developed in previous papers of Peluse and Peluse-Prendiville in simpler settings.

- “On blow up for the energy super critical defocusing non linear Schrödinger equations”, by Frank Merle, Pierre Raphael, Igor Rodnianski, and Jeremie Szeftel. This paper (when combined with two companion papers) resolves a long-standing problem as to whether finite time blowup occurs for the defocusing supercritical nonlinear Schrödinger equation (at least in certain dimensions and nonlinearities). I had a previous paper establishing a result like this if one “cheated” by replacing the nonlinear Schrödinger equation by a system of such equations, but remarkably they are able to tackle the original equation itself without any such cheating. Given the very analogous situation with Navier-Stokes, where again one can create finite time blowup by “cheating” and modifying the equation, it does raise hope that finite time blowup for the incompressible Navier-Stokes and Euler equations can be established… In fact the connection may not just be at the level of analogy; a surprising key ingredient in the proofs here is the observation that a certain blowup ansatz for the nonlinear Schrödinger equation is governed by solutions to the (compressible) Euler equation, and finite time blowup examples for the latter can be used to construct finite time blowup examples for the former.

Let $u: \mathbb{R}^3 \to \mathbb{R}^3$ be a divergence-free vector field, thus $\nabla \cdot u = 0$, which we interpret as a velocity field. In this post we will proceed formally, largely ignoring the analytic issues of whether the fields in question have sufficient regularity and decay to justify the calculations. The vorticity field $\omega: \mathbb{R}^3 \to \mathbb{R}^3$ is then defined as the curl of the velocity:

$$\omega = \nabla \times u.$$

(From a differential geometry viewpoint, it would be more accurate (especially in other dimensions than three) to define the vorticity as the exterior derivative $\omega = d(g \cdot u)$ of the musical isomorphism $g \cdot u$ of the Euclidean metric $g$ applied to the velocity field $u$; see these previous lecture notes. However, we will not need this geometric formalism in this post.)

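Assuming suitable regularity and decay hypotheses of the velocity field $u$, it is possible to recover the velocity from the vorticity as follows. From the general vector identity $\nabla \times \nabla \times X = \nabla(\nabla \cdot X) - \Delta X$ applied to the velocity field $u$, we see that

$$\nabla \times \omega = -\Delta u$$

and thus (by the commutativity of all the differential operators involved)

$$u = -\nabla \times \Delta^{-1} \omega.$$

Using the Newton potential formula

$$-\Delta^{-1} \omega(x) = \frac{1}{4\pi} \int_{\mathbb{R}^3} \frac{\omega(y)}{|x-y|}\, dy$$

and formally differentiating under the integral sign, we obtain the Biot-Savart law

$$u(x) = \frac{1}{4\pi} \int_{\mathbb{R}^3} \frac{\omega(y) \times (x-y)}{|x-y|^3}\, dy. \quad (1)$$

This law is of fundamental importance in the study of incompressible fluid equations, such as the Euler equations

$$\partial_t u + (u \cdot \nabla) u = -\nabla p; \quad \nabla \cdot u = 0$$

since on applying the curl operator one obtains the vorticity equation

$$\partial_t \omega + (u \cdot \nabla) \omega = (\omega \cdot \nabla) u \quad (2)$$

and then by substituting (1) one gets an autonomous equation for the vorticity field $\omega$. Unfortunately, this equation is non-local, due to the integration present in (1).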
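As a sanity check on the first step of this derivation, the relation $\nabla \times \omega = -\Delta u$ for a divergence-free field $u$ with vorticity $\omega = \nabla \times u$ can be tested numerically. The sketch below is purely illustrative: the test field `u` (an ABC-type flow), the step size `H`, and the sample point are arbitrary choices not taken from the text, and central finite differences stand in for exact derivatives.

```python
import math

H = 1e-3  # finite-difference step; an arbitrary illustrative choice


def u(x):
    """Hypothetical divergence-free test field (an ABC-type flow)."""
    x1, x2, x3 = x
    return (-math.cos(x3), -math.cos(x1), -math.cos(x2))


def partial(f, x, i, j):
    """Central difference approximation to d f_j / d x_i at the point x."""
    xp, xm = list(x), list(x)
    xp[i] += H
    xm[i] -= H
    return (f(xp)[j] - f(xm)[j]) / (2 * H)


def divergence(f, x):
    return sum(partial(f, x, i, i) for i in range(3))


def curl(f):
    """Return the vector field curl(f) as a new function of x."""
    def curl_f(x):
        return (partial(f, x, 1, 2) - partial(f, x, 2, 1),
                partial(f, x, 2, 0) - partial(f, x, 0, 2),
                partial(f, x, 0, 1) - partial(f, x, 1, 0))
    return curl_f


def laplacian(f, x):
    """Componentwise Laplacian of f at x via second central differences."""
    fx = f(x)
    out = []
    for j in range(3):
        total = 0.0
        for i in range(3):
            xp, xm = list(x), list(x)
            xp[i] += H
            xm[i] -= H
            total += (f(xp)[j] - 2 * fx[j] + f(xm)[j]) / H ** 2
        out.append(total)
    return tuple(out)


omega = curl(u)                    # vorticity of the test field
point = (0.3, -1.1, 0.7)
curl_omega = curl(omega)(point)    # curl of the vorticity
minus_lap_u = tuple(-c for c in laplacian(u, point))
max_gap = max(abs(a - b) for a, b in zip(curl_omega, minus_lap_u))
print(abs(divergence(u, point)), max_gap)  # both should be tiny
```

The two printed numbers measure the failure of $\nabla \cdot u = 0$ and of $\nabla \times \omega = -\Delta u$ at the sample point; both should be at the level of the discretisation error.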

In a recent work, it was observed by Elgindi that in a certain regime, the Biot-Savart law can be approximated by a more “low rank” law, which makes the non-local effects significantly simpler in nature. This simplification was carried out in spherical coordinates, and hinged on a study of the invertibility properties of a certain second order linear differential operator in the latitude variable; however, in this post I would like to observe that the approximation can also be seen directly in Cartesian coordinates from the classical Biot-Savart law (1). As a consequence one can also begin Elgindi’s analysis in constructing somewhat regular solutions to the Euler equations that exhibit self-similar blowup in finite time, though I have not attempted to execute the entirety of the analysis in this setting.

Elgindi’s approximation applies under the following hypotheses:

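- (i) (Axial symmetry without swirl) The velocity field $u$ is assumed to take the form

$$u(x_1,x_2,x_3) = \left( u_r(r,x_3) \frac{x_1}{r},\ u_r(r,x_3) \frac{x_2}{r},\ u_3(r,x_3) \right) \quad (3)$$

for some functions $u_r, u_3$ of the cylindrical radial variable $r := \sqrt{x_1^2+x_2^2}$ and the vertical coordinate $x_3$. As a consequence, the vorticity field $\omega$ takes the form

$$\omega(x_1,x_2,x_3) = \left( \omega_{r3}(r,x_3) \frac{x_2}{r},\ -\omega_{r3}(r,x_3) \frac{x_1}{r},\ 0 \right) \quad (4)$$

where $\omega_{r3}$ is the field

$$\omega_{r3} = \partial_r u_3 - \partial_3 u_r.$$

- (ii) (Odd symmetry) We assume that $u_3(r,-x_3) = -u_3(r,x_3)$ and $u_r(r,-x_3) = u_r(r,x_3)$, so that $\omega_{r3}(r,-x_3) = -\omega_{r3}(r,x_3)$.

A model example of a divergence-free vector field obeying these properties (but without good decay at infinity) is the linear vector field

$$X(x) = (x_1, x_2, -2x_3) \quad (5)$$

which is of the form (3) with $u_r(r,x_3) = r$ and $u_3(r,x_3) = -2x_3$. The associated vorticity $\omega$ vanishes.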

We can now give an illustration of Elgindi’s approximation:

Proposition 1 (Elgindi’s approximation) Under the above hypotheses (and assuming suitable regularity and decay), we have the pointwise bounds

for any

, where

is the vector field (5), and

is the scalar function

Thus under the hypotheses (i), (ii), and assuming that is slowly varying, we expect

to behave like the linear vector field

modulated by a radial scalar function. In applications one needs to control the error in various function spaces instead of pointwise, and with

similarly controlled in other function space norms than the

norm, but this proposition already gives a flavour of the approximation. If one uses spherical coordinates

then we have (using the spherical change of variables formula and the odd nature of

)

where

is the operator introduced in Elgindi’s paper.

Proof: By a limiting argument we may assume that is non-zero, and we may normalise

. From the triangle inequality we have

and hence by (1)

In the regime we may perform the Taylor expansion

Since

we see from the triangle inequality that the error term contributes to

. We thus have

where is the constant term

and are the linear term

By the hypotheses (i), (ii), we have the symmetries

The even symmetry (8) ensures that the integrand in is odd, so

vanishes. The symmetry (6) or (7) similarly ensures that

, so

vanishes. Since

, we conclude that

Using (4), the right-hand side is

where . Because of the odd nature of

, only those terms with one factor of

give a non-vanishing contribution to the integral. Using the rotation symmetry

we also see that any term with a factor of

also vanishes. We can thus simplify the above expression as

Using the rotation symmetry again, we see that the term

in the first component can be replaced by

or by

, and similarly for the

term in the second component. Thus the above expression is

giving the claim.

Example 2 Consider the divergence-free vector field

, where the vector potential

takes the form

for some bump function

supported in

. We can then calculate

and

In particular the hypotheses (i), (ii) are satisfied with

One can then calculate

If we take the specific choice

where

is a fixed bump function supported in some interval

and

is a small parameter (so that

is spread out over the range

), then we see that

(with implied constants allowed to depend on

),

and

which is completely consistent with Proposition 1.

One can use this approximation to extract a plausible ansatz for a self-similar blowup to the Euler equations. We let be a small parameter and let

be a time-dependent vorticity field obeying (i), (ii) of the form

where and

is a smooth field to be chosen later. Admittedly the signum function

is not smooth at

, but let us ignore this issue for now (to rigorously make an ansatz one will have to smooth out this function a little bit; Elgindi uses the choice

, where

). With this ansatz one may compute

By Proposition 1, we thus expect to have the approximation

We insert this into the vorticity equation (2). The transport term will be expected to be negligible because

, and hence

, is slowly varying (the discontinuity of

will not be encountered because the vector field

is parallel to this singularity). The modulating function

is similarly slowly varying, so derivatives falling on this function should be lower order. Neglecting such terms, we arrive at the approximation

and so in the limit we expect to obtain a simple model equation for the evolution of the vorticity envelope

:

If we write for the logarithmic primitive of

, then we have

and hence

which integrates to the Riccati equation

which can be explicitly solved as

where is any function of

that one pleases. (In Elgindi’s work a time dilation is used to remove the unsightly factor of

appearing here in the denominator.) If for instance we set

, we obtain the self-similar solution

and then on applying

Thus, we expect to be able to construct a self-similar blowup to the Euler equations with a vorticity field approximately behaving like

and velocity field behaving like

In particular, would be expected to be of regularity

(and smooth away from the origin), and blows up in (say)

norm at time

, and one has the self-similarity

and

A self-similar solution of this approximate shape is in fact constructed rigorously in Elgindi’s paper (using spherical coordinates instead of the Cartesian approach adopted here), using a nonlinear stability analysis of the above ansatz. It seems plausible that one could also carry out this stability analysis using this Cartesian coordinate approach, although I have not tried to do this in detail.

I’ve just uploaded to the arXiv my paper “Quantitative bounds for critically bounded solutions to the Navier-Stokes equations”, submitted to the proceedings of the Linde Hall Inaugural Math Symposium. (I unfortunately had to cancel my physical attendance at this symposium for personal reasons, but was still able to contribute to the proceedings.) In recent years I have been interested in working towards establishing the existence of classical solutions for the Navier-Stokes equations

$$\partial_t u + (u \cdot \nabla) u = \Delta u - \nabla p; \quad \nabla \cdot u = 0$$

that blow up in finite time, but this time for a change I took a look at the other side of the theory, namely the conditional regularity results for this equation. There are several such results that assert that if a certain norm of the solution stays bounded (or grows at a controlled rate), then the solution stays regular; taken in the contrapositive, they assert that if a solution blows up at a certain finite time $T_*$, then certain norms of the solution must also go to infinity. Here are some examples (not an exhaustive list) of such blowup criteria:

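- (Leray blowup criterion, 1934) If $u$ blows up at a finite time $T_*$, and $0 \leq t < T_*$, then $\|u(t)\|_{L^\infty_x(\mathbb{R}^3)} \geq \frac{c}{\sqrt{T_*-t}}$ for an absolute constant $c > 0$.

- (Prodi–Serrin–Ladyzhenskaya blowup criterion, 1959-1967) If $u$ blows up at a finite time $T_*$, and $3 < p \leq \infty$, then $\|u\|_{L^q_t L^p_x([0,T_*) \times \mathbb{R}^3)} = +\infty$, where $\frac{1}{q} := \frac{1}{2} - \frac{3}{2p}$.

- (Beale-Kato-Majda blowup criterion, 1984) If $u$ blows up at a finite time $T_*$, then $\|\omega\|_{L^1_t L^\infty_x([0,T_*) \times \mathbb{R}^3)} = +\infty$, where $\omega := \nabla \times u$ is the vorticity.

- (Kato blowup criterion, 1984) If $u$ blows up at a finite time $T_*$, then $\liminf_{t \to T_*} \|u(t)\|_{L^3_x(\mathbb{R}^3)} \geq c$ for some absolute constant $c > 0$.

- (Escauriaza-Seregin-Sverak blowup criterion, 2003) If $u$ blows up at a finite time $T_*$, then $\limsup_{t \to T_*} \|u(t)\|_{L^3_x(\mathbb{R}^3)} = +\infty$.

- (Seregin blowup criterion, 2012) If $u$ blows up at a finite time $T_*$, then $\lim_{t \to T_*} \|u(t)\|_{L^3_x(\mathbb{R}^3)} = +\infty$.

- (Phuc blowup criterion, 2015) If $u$ blows up at a finite time $T_*$, then $\limsup_{t \to T_*} \|u(t)\|_{L^{3,q}_x(\mathbb{R}^3)} = +\infty$ for any $q < \infty$.

- (Gallagher-Koch-Planchon blowup criterion, 2016) If $u$ blows up at a finite time $T_*$, then $\limsup_{t \to T_*} \|u(t)\|_{\dot B^{-1+\frac{3}{p}}_{p,q}(\mathbb{R}^3)} = +\infty$ for any $3 < p, q < \infty$.

- (Albritton blowup criterion, 2016) If $u$ blows up at a finite time $T_*$, then $\lim_{t \to T_*} \|u(t)\|_{\dot B^{-1+\frac{3}{p}}_{p,q}(\mathbb{R}^3)} = +\infty$ for any $3 < p, q < \infty$.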

My current paper is most closely related to the Escauriaza-Seregin-Sverak blowup criterion, which was the first to show a critical (i.e., scale-invariant, or dimensionless) spatial norm, namely $L^3_x(\mathbb{R}^3)$, had to become large. This result now has many proofs; for instance, many of the subsequent blowup criterion results imply the Escauriaza-Seregin-Sverak one as a special case, and there are also additional proofs by Gallagher-Koch-Planchon (building on ideas of Kenig-Koch), and by Dong-Du. However, all of these proofs rely on some form of a compactness argument: given a finite time blowup, one extracts some suitable family of rescaled solutions that converges in some weak sense to a limiting solution that has some additional good properties (such as almost periodicity modulo symmetries), which one can then rule out using additional qualitative tools, such as unique continuation and backwards uniqueness theorems for parabolic heat equations. In particular, all known proofs use some version of the backwards uniqueness theorem of Escauriaza, Seregin, and Sverak. Because of this reliance on compactness, the existing proofs of the Escauriaza-Seregin-Sverak blowup criterion are qualitative, in that they do not provide any quantitative information on how fast the $L^3_x(\mathbb{R}^3)$ norm will go to infinity (along a subsequence of times).
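To make the word “critical” concrete: Navier-Stokes is invariant under the rescaling $u(t,x) \mapsto \lambda u(\lambda^2 t, \lambda x)$, and at a fixed time this changes the $L^p_x(\mathbb{R}^3)$ norm by a factor of $\lambda^{1-3/p}$, which is trivial exactly when $p=3$. The sketch below checks this numerically on a radial Gaussian profile, which is a hypothetical scalar stand-in for the size of the velocity field and not an actual solution; the function name and quadrature parameters are likewise illustrative.

```python
import math


def lp_norm_rescaled(p, lam, n=20000):
    """L^p(R^3) norm of the profile x -> lam * exp(-|lam x|^2),
    computed with a radial trapezoid rule."""
    radius = 10.0 / lam          # the integrand is negligible beyond this radius
    dr = radius / n
    total = 0.0
    for k in range(n + 1):
        r = k * dr
        weight = 0.5 if k in (0, n) else 1.0
        value = lam * math.exp(-(lam * r) ** 2)
        total += weight * 4 * math.pi * r * r * value ** p
    return (total * dr) ** (1 / p)


for p in (2, 3, 6):
    ratio = lp_norm_rescaled(p, 4.0) / lp_norm_rescaled(p, 1.0)
    print(p, ratio, 4.0 ** (1 - 3 / p))  # the last two columns should agree
```

For $p<3$ the norm shrinks as one zooms in ($\lambda \to \infty$), for $p>3$ it grows, and only $p=3$ is dimensionless.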

On the other hand, it is a general principle that qualitative arguments established using compactness methods ought to have quantitative analogues that replace the use of compactness by more complicated substitutes that give effective bounds; see for instance these previous blog posts for more discussion. I therefore was interested in trying to obtain a quantitative version of this blowup criterion that gave reasonably good effective bounds (in particular, my objective was to avoid truly enormous bounds such as tower-exponential or Ackermann function bounds, which often arise if one “naively” tries to make a compactness argument effective). In particular, I obtained the following triple-exponential quantitative regularity bounds:

Theorem 1 If

is a classical solution to Navier-Stokes on

with

and

for

and

.

As a corollary, one can now improve the Escauriaza-Seregin-Sverak blowup criterion to

for some absolute constant , which to my knowledge is the first (very slightly) supercritical blowup criterion for Navier-Stokes in the literature.

The proof uses many of the same quantitative inputs as previous arguments, most notably the Carleman inequalities used to establish unique continuation and backwards uniqueness theorems for backwards heat equations, but also some additional techniques that make the quantitative bounds more efficient. The proof focuses initially on points of concentration of the solution, which we define as points where there is a frequency

for which one has the bound

for a large absolute constant , where

is a Littlewood-Paley projection to frequencies

. (This can be compared with the upper bound of

for the quantity on the left-hand side that follows from (1).) The factor of

normalises the left-hand side of (2) to be dimensionless (i.e., critical). The main task is to show that the dimensionless quantity

cannot get too large; in particular, we end up establishing a bound of the form

from which the above theorem ends up following from a routine adaptation of the local well-posedness and regularity theory for Navier-Stokes.

The strategy is to show that any concentration such as (2) when is large must force a significant component of the

norm of

to also show up at many other locations than

, which eventually contradicts (1) if one can produce enough such regions of non-trivial

norm. (This can be viewed as a quantitative variant of the “rigidity” theorems in some of the previous proofs of the Escauriaza-Seregin-Sverak theorem that rule out solutions that exhibit too much “compactness” or “almost periodicity” in the

topology.) The chain of causality that leads from a concentration (2) at

to significant

norm at other regions of the time slice

is somewhat involved (though simpler than the much more convoluted schemes I initially envisaged for this argument):

- Firstly, by using Duhamel’s formula, one can show that a concentration (2) can only occur (with

large) if there was also a preceding concentration

at some slightly previous point

in spacetime, with

also close to

(more precisely, we have

,

, and

). This can be viewed as a sort of contrapositive of a “local regularity theorem”, such as the ones established by Caffarelli, Kohn, and Nirenberg. A key point here is that the lower bound

in the conclusion (3) is precisely the same as the lower bound in (2), so that this backwards propagation of concentration can be iterated.

- Iterating the previous step, one can find a sequence of concentration points

with the

propagating backwards in time; by using estimates ultimately resulting from the dissipative term in the energy identity, one can extract such a sequence in which the

increase geometrically with time, the

are comparable (up to polynomial factors in

) to the natural frequency scale

, and one has

. Using the “epochs of regularity” theory that ultimately dates back to Leray, and tweaking the

slightly, one can also place the times

in intervals

(of length comparable to a small multiple of

) in which the solution is quite regular (in particular,

enjoy good

bounds on

).

- The concentration (4) can be used to establish a lower bound for the

norm of the vorticity

near

. As is well known, the vorticity obeys the vorticity equation

In the epoch of regularity

, the coefficients

of this equation obey good

bounds, allowing the machinery of Carleman estimates to come into play. Using a Carleman estimate that is used to establish unique continuation results for backwards heat equations, one can propagate this lower bound to also give lower

bounds on the vorticity (and its first derivative) in annuli of the form

for various radii

, although the lower bounds decay at a gaussian rate with

.

- Meanwhile, using an energy pigeonholing argument of Bourgain (which, in this Navier-Stokes context, is actually an enstrophy pigeonholing argument), one can locate some annuli

where (a slightly normalised form of) the enstrophy is small at time

; using a version of the localised enstrophy estimates from a previous paper of mine, one can then propagate this sort of control forward in time, obtaining an “annulus of regularity” of the form

in which one has good estimates; in particular, one has

type bounds on

in this cylindrical annulus.

- By intersecting the previous epoch of regularity

with the above annulus of regularity, we have some lower bounds on the

norm of the vorticity (and its first derivative) in the annulus of regularity. Using a Carleman estimate first introduced by Escauriaza, Seregin, and Sverak, as well as a second application of the Carleman estimate used previously, one can then propagate this lower bound back up to time

, establishing a lower bound for the vorticity on the spatial annulus

. By some basic Littlewood-Paley theory one can parlay this lower bound to a lower bound on the

norm of the velocity

; crucially, this lower bound is uniform in

.

- If

is very large (triple exponential in

!), one can then find enough scales

with disjoint

annuli that the total lower bound on the

norm of

provided by the above arguments is inconsistent with (1), thus establishing the claim.

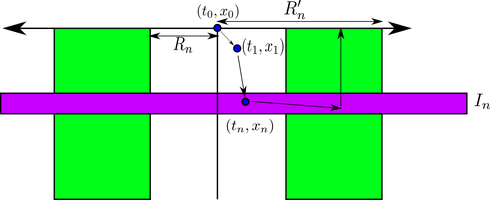

The chain of causality is summarised in the following image:

It seems natural to conjecture that similar triply logarithmic improvements can be made to several of the other blowup criteria listed above, but I have not attempted to pursue this question. It seems difficult to improve the triple logarithmic factor using only the techniques here; the Bourgain pigeonholing argument inevitably costs one exponential, the Carleman inequalities cost a second, and the stacking of scales at the end to contradict the upper bound costs the third.

Let be some domain (such as the real numbers). For any natural number

, let

denote the space of symmetric real-valued functions

on

variables

, thus

for any permutation . For instance, for any natural numbers

, the elementary symmetric polynomials

will be an element of . With the pointwise product operation,

becomes a commutative real algebra. We include the case

, in which case

consists solely of the real constants.

Given two natural numbers , one can “lift” a symmetric function

of

variables to a symmetric function

of

variables by the formula

where ranges over all injections from

to

(the latter formula making it clearer that

is symmetric). Thus for instance

and

Also we have

With these conventions, we see that vanishes for

, and is equal to

if

. We also have the transitivity

if .
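The lifting operation can be sketched in a few lines of code. The version below assumes the “sum over all $m$-element subsets of the $n$ variables” description of the lift; the names `lift` and `product_of_args` are illustrative. It also checks a transitivity relation with the explicit factor $\binom{p-m}{n-m}$, which is obtained here by a direct count (each $m$-element subset of $p$ variables sits inside exactly $\binom{p-m}{n-m}$ subsets of size $n$) and is offered as an assumption about the precise form of the identity.

```python
from itertools import combinations
from math import comb, prod


def lift(F, m, n):
    """Lift a symmetric m-variable function F to n variables by summing
    F over all m-element subsets of the n arguments."""
    def lifted(*xs):
        assert len(xs) == n
        return sum(F(*(xs[i] for i in idx))
                   for idx in combinations(range(n), m))
    return lifted


def product_of_args(*xs):
    """x_1 * ... * x_m (the empty product is 1)."""
    return prod(xs)


xs = (2, 3, 5, 7)

# Lifting x_1 * x_2 to four variables gives the elementary symmetric polynomial e_2.
e2 = lift(product_of_args, 2, 4)
print(e2(*xs))                                   # 101

# The lift vanishes when there are fewer variables than F needs.
print(lift(product_of_args, 3, 2)(2, 3))         # 0

# Transitivity, here with m = 1, n = 2, p = 4.
identity = lambda x: x
twice_lifted = lift(lift(identity, 1, 2), 2, 4)(*xs)
print(twice_lifted, comb(4 - 1, 2 - 1) * lift(identity, 1, 4)(*xs))  # 51 51
```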

The lifting map is a linear map from

to

, but it is not a ring homomorphism. For instance, when

, one has

In general, one has the identity

for all natural numbers and

,

, where

range over all injections

,

with

. Combinatorially, the identity (2) follows from the fact that given any injections

and

with total image

of cardinality

, one has

, and furthermore there exist precisely

triples

of injections

,

,

such that

and

.
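The combinatorial description above can be tested by brute force. In the sketch below (all function names are illustrative), the product of a lifted $k$-variable function and a lifted $l$-variable function is rebuilt from its $m$-variable components, where the $m$-variable component sums over pairs of injections whose images together cover $\{1,\dots,m\}$ and is normalised by $\frac{1}{k!\,l!}$. That normalisation is my reading of the counting argument, stated here as an assumption.

```python
from fractions import Fraction
from itertools import combinations, permutations
from math import factorial


def lift(F, m, n):
    """Sum F over all m-element subsets of the n arguments."""
    def lifted(*xs):
        return sum(F(*(xs[i] for i in idx))
                   for idx in combinations(range(n), m))
    return lifted


def product_component(F, k, G, l, m):
    """m-variable piece of the product: sum over injections pi: [k] -> [m],
    rho: [l] -> [m] whose images cover [m], divided by k! * l!."""
    def term(*xs):
        total = 0
        for pi in permutations(range(m), k):
            for rho in permutations(range(m), l):
                if set(pi) | set(rho) == set(range(m)):
                    total += F(*(xs[i] for i in pi)) * G(*(xs[j] for j in rho))
        return Fraction(total, factorial(k) * factorial(l))
    return term


def product_via_law(F, k, G, l, n):
    """Rebuild lift(F) * lift(G) on n variables from its components."""
    def result(*xs):
        return sum(lift(product_component(F, k, G, l, m), m, n)(*xs)
                   for m in range(max(k, l), k + l + 1))
    return result


F = lambda x, y: x * y + x + y     # symmetric, two variables
G = lambda x: x * x                # one variable
xs = (1, 2, 3, 4)

direct = lift(F, 2, 4)(*xs) * lift(G, 1, 4)(*xs)
rebuilt = product_via_law(F, 2, G, 1, 4)(*xs)
print(direct, rebuilt)             # 1950 1950
```

The same check with $k=l=1$ reproduces the expansion of $(\sum_i F(x_i))(\sum_j G(x_j))$ into a diagonal part and an off-diagonal part, which is the simplest case in which the lift fails to be multiplicative.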

Example 1 When

, one has

which is just a restatement of the identity

Note that the coefficients appearing in (2) do not depend on the final number of variables . We may therefore abstract the role of

from the law (2) by introducing the real algebra

of formal sums

where for each ,

is an element of

(with only finitely many of the

being non-zero), and with the formal symbol

being formally linear, thus

and

for and scalars

, and with multiplication given by the analogue

of (2). Thus for instance, in this algebra we have

and

Informally, is an abstraction (or “inverse limit”) of the concept of a symmetric function of an unspecified number of variables, which are formed by summing terms that each involve only a bounded number of these variables at a time. One can check (somewhat tediously) that

is indeed a commutative real algebra, with a unit

. (I do not know if this algebra has previously been studied in the literature; it is somewhat analogous to the abstract algebra of finite linear combinations of Schur polynomials, with multiplication given by a Littlewood-Richardson rule.)

For natural numbers , there is an obvious specialisation map

from

to

, defined by the formula

Thus, for instance, maps

to

and

to

. From (2) and (3) we see that this map

is an algebra homomorphism, even though the maps

and

are not homomorphisms. By inspecting the

component of

we see that the homomorphism

is in fact surjective.

Now suppose that we have a measure on the space

, which then induces a product measure

on every product space

. To avoid degeneracies we will assume that the integral

is strictly positive. Assuming suitable measurability and integrability hypotheses, a function

can then be integrated against this product measure to produce a number

In the event that arises as a lift

of another function

, then from Fubini’s theorem we obtain the formula

is an element of the formal algebra , then

Note that by hypothesis, only finitely many terms on the right-hand side are non-zero.
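For natural number exponents, the Fubini computation behind these formulas can be confirmed directly on a finite measure space. The sketch below assumes the formula in the form “integral of the lift from $m$ to $n$ variables equals $\binom{n}{m}$ times the total mass to the power $n-m$ times the integral of the original function”; the three-point measure and the test function are arbitrary illustrative choices.

```python
from itertools import combinations, product
from math import comb, isclose, prod

points = [0.5, 1.0, 2.0]
weights = [0.2, 0.7, 0.4]          # a finite measure on three points
mass = sum(weights)                # total mass, here 1.3


def integrate(H, n):
    """Integral of an n-variable function against the n-fold product measure."""
    total = 0.0
    for idx in product(range(len(points)), repeat=n):
        total += H(*(points[i] for i in idx)) * prod(weights[i] for i in idx)
    return total


def lift(F, m, n):
    """Sum F over all m-element subsets of the n arguments."""
    def lifted(*xs):
        return sum(F(*(xs[i] for i in idx))
                   for idx in combinations(range(n), m))
    return lifted


F = lambda x, y: x * y + 1.0       # symmetric, m = 2

for n in (1, 2, 3, 4):
    lhs = integrate(lift(F, 2, n), n)
    rhs = comb(n, 2) * mass ** (n - 2) * integrate(F, 2)
    print(n, lhs, rhs)             # the two columns should agree
```

Only the factor `comb(n, 2) * mass ** (n - 2)` depends on `n`, and it is a polynomial in `n` times a power of the mass, which is what makes a non-integer number of variables conceivable.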

Now for a key observation: whereas the left-hand side of (6) only makes sense when is a natural number, the right-hand side is meaningful when

takes a fractional value (or even when it takes negative or complex values!), interpreting the binomial coefficient

as a polynomial

in

. As such, this suggests a way to introduce a “virtual” concept of a symmetric function on a fractional power space

for such values of

, and even to integrate such functions against product measures

, even if the fractional power

does not exist in the usual set-theoretic sense (and

similarly does not exist in the usual measure-theoretic sense). More precisely, for arbitrary real or complex

, we now define

to be the space of abstract objects

with and

(and

now interpreted as formal symbols, with the structure of a commutative real algebra inherited from

, thus

In particular, the multiplication law (2) continues to hold for such values of , thanks to (3). Given any measure

on

, we formally define a measure

on

with regards to which we can integrate elements

of

by the formula (6) (providing one has sufficient measurability and integrability to make sense of this formula), thus providing a sort of “fractional dimensional integral” for symmetric functions. Thus, for instance, with this formalism the identities (4), (5) now hold for fractional values of

, even though the formal space

no longer makes sense as a set, and the formal measure

no longer makes sense as a measure. (The formalism here is somewhat reminiscent of the technique of dimensional regularisation employed in the physical literature in order to assign values to otherwise divergent integrals. See also this post for an unrelated abstraction of the integration concept involving integration over supercommutative variables (and in particular over fermionic variables).)

Example 2 Suppose

is a probability measure on

, and

is a random variable; on any power

, we let

be the usual independent copies of

on

, thus

for

. Then for any real or complex

, the formal integral

can be evaluated by first using the identity

(cf. (1)) and then using (6) and the probability measure hypothesis

to conclude that

For

a natural number, this identity has the probabilistic interpretation

whenever

are jointly independent copies of

, which reflects the well known fact that the sum

has expectation

and variance

. One can thus view (7) as an abstract generalisation of (8) to the case when

is fractional, negative, or even complex, despite the fact that there is no sensible way in this case to talk about

independent copies

of

in the standard framework of probability theory.
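A small numerical sketch may help here. It assumes that the formal second moment takes the form $\alpha\, \mathbf{E} X^2 + \alpha(\alpha-1) (\mathbf{E} X)^2$, obtained from the decomposition $(\sum_i X_i)^2 = \sum_i X_i^2 + 2 \sum_{i<j} X_i X_j$ with the binomial coefficients read as polynomials in $\alpha$. The Bernoulli variable and the function names are illustrative choices.

```python
from itertools import product
from math import isclose


def gen_binom(alpha, m):
    """Binomial coefficient 'alpha choose m', read as a polynomial in alpha."""
    out = 1.0
    for j in range(m):
        out *= (alpha - j) / (j + 1)
    return out


def second_moment_of_sum(alpha, mean, second):
    """Formal integral of (X_1 + ... + X_alpha)^2 for a probability measure:
    one-variable part plus two-variable part."""
    return gen_binom(alpha, 1) * second + 2 * gen_binom(alpha, 2) * mean ** 2


def brute_force_second_moment(n, p):
    """E (X_1 + ... + X_n)^2 for n independent Bernoulli(p) variables."""
    total = 0.0
    for outcome in product((0, 1), repeat=n):
        prob = 1.0
        for bit in outcome:
            prob *= p if bit else 1 - p
        total += prob * sum(outcome) ** 2
    return total


p = 0.3                            # Bernoulli(p): mean p, second moment p
print(second_moment_of_sum(3, p, p), brute_force_second_moment(3, p))
print(second_moment_of_sum(0.5, p, p))   # "half a copy" of X
```

Rewriting the formula as $\alpha \mathbf{Var}(X) + \alpha^2 (\mathbf{E} X)^2$ shows that it is non-negative for every $\alpha \geq 0$.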

In this particular case, the quantity (7) is non-negative for every nonnegative

, which looks plausible given the form of the left-hand side. Unfortunately, this sort of non-negativity does not always hold; for instance, if

has mean zero, one can check that

and the right-hand side can become negative for

. This is a shame, because otherwise one could hope to start endowing

with some sort of commutative von Neumann algebra type structure (or the abstract probability structure discussed in this previous post) and then interpret it as a genuine measure space rather than as a virtual one. (This failure of positivity is related to the fact that the characteristic function of a random variable, when raised to the

power, need not be a characteristic function of any random variable once

is no longer a natural number: “fractional convolution” does not preserve positivity!) However, one vestige of positivity remains: if

is non-negative, then so is

One can wonder what the point is to all of this abstract formalism and how it relates to the rest of mathematics. For me, this formalism originated implicitly in an old paper I wrote with Jon Bennett and Tony Carbery on the multilinear restriction and Kakeya conjectures, though we did not have a good language for working with it at the time, instead working first with the case of natural number exponents and appealing to a general extrapolation theorem to then obtain various identities in the fractional

case. The connection between these fractional dimensional integrals and more traditional integrals ultimately arises from the simple identity

(where the right-hand side should be viewed as the fractional dimensional integral of the unit against

). As such, one can manipulate

powers of ordinary integrals using the machinery of fractional dimensional integrals. A key lemma in this regard is

Lemma 3 (Differentiation formula) Suppose that a positive measure

on

depends on some parameter

and varies by the formula

for some function

. Let

be any real or complex number. Then, assuming sufficient smoothness and integrability of all quantities involved, we have

for all

that are independent of

. If we allow

to now depend on

also, then we have the more general total derivative formula

again assuming sufficient amounts of smoothness and regularity.

Proof: We just prove (10), as (11) then follows by the same argument used to prove the usual product rule. By linearity it suffices to verify this identity in the case for some symmetric function

for a natural number

. By (6), the left-hand side of (10) is then

Differentiating under the integral sign using (9) we have

and similarly

where are the standard

copies of

on

:

By the product rule, we can thus expand (12) as

where we have suppressed the dependence on for brevity. Since

, we can write this expression using (6) as

where is the symmetric function

But from (2) one has

and the claim follows.

Remark 4 It is also instructive to prove this lemma in the special case when

is a natural number, in which case the fractional dimensional integral

can be interpreted as a classical integral. In this case, the identity (10) is immediate from applying the product rule to (9) to conclude that

One could in fact derive (10) for arbitrary real or complex

from the case when

is a natural number by an extrapolation argument; see the appendix of my paper with Bennett and Carbery for details.

Let us give a simple PDE application of this lemma as illustration:

Proposition 5 (Heat flow monotonicity) Let

be a solution to the heat equation

with initial data

a rapidly decreasing finite non-negative Radon measure, or more explicitly

for all

. Then for any

, the quantity

is monotone non-decreasing in

for

, constant for

, and monotone non-increasing for

.

Proof: By a limiting argument we may assume that is absolutely continuous, with Radon-Nikodym derivative a test function; this is more than enough regularity to justify the arguments below.

For any , let

denote the Radon measure

Then the quantity can be written as a fractional dimensional integral

Observe that

and thus by Lemma 3 and the product rule

where we use for the variable of integration in the factor space

of

.

To simplify this expression we will take advantage of integration by parts in the variable. Specifically, in any direction

, we have

and hence by Lemma 3

Multiplying by and integrating by parts, we see that

where we use the Einstein summation convention in . Similarly, if

is any reasonable function depending only on

, we have

and hence on integration by parts

We conclude that

and thus by (13)

The choice of that then achieves the most cancellation turns out to be

(this cancels the terms that are linear or quadratic in the

), so that

. Repeating the calculations establishing (7), one has

and

where is the random variable drawn from

with the normalised probability measure

. Since

, one thus has

This expression is clearly non-negative for , equal to zero for

, and non-positive for

, giving the claim. (One could simplify

here as

if desired, though it is not strictly necessary to do so for the proof.)
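As a numerical sanity check of the monotonicity, here is a minimal sketch in one spatial dimension. It assumes the monotone quantity has the form Q_p(t) = t^{(p-1)/2} ∫ u(t,x)^p dx, which is the normalisation that keeps the heat kernel itself constant in t. The initial data (two point masses of mass 1/2 at ±1), the function names `heat_solution` and `Q`, and the grid parameters are all illustrative choices, not taken from the text.

```python
import numpy as np

def heat_solution(t, x, centers, masses):
    """Solution of u_t = u_xx on the line at time t > 0, for initial data
    sum_j masses[j] * delta(x - centers[j]) (a sum of heat kernels)."""
    u = np.zeros_like(x)
    for c, m in zip(centers, masses):
        u += m * np.exp(-(x - c) ** 2 / (4.0 * t)) / np.sqrt(4.0 * np.pi * t)
    return u

def Q(p, t, centers=(-1.0, 1.0), masses=(0.5, 0.5), half_width=60.0, n=200001):
    """Assumed form of the monotone quantity in d = 1:
    t^{(p-1)/2} times the integral of u(t, x)^p, via a Riemann sum."""
    x = np.linspace(-half_width, half_width, n)
    dx = x[1] - x[0]
    u = heat_solution(t, x, centers, masses)
    return t ** ((p - 1.0) / 2.0) * np.sum(u ** p) * dx

times = np.geomspace(0.1, 10.0, 12)
for p in (2.0, 1.0, 0.5):
    values = np.array([Q(p, t) for t in times])
    # Inspect the trend in t: increasing for p > 1, flat for p = 1,
    # decreasing for 0 < p < 1, if the proposition is being modelled correctly.
    print(p, np.round(values, 6))
```

For p = 2 and this particular data the quantity can also be computed by hand as (1 + exp(-1/(2t))) / (2 sqrt(8π)), which is visibly increasing in t and gives an independent check on the quadrature.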

Remark 6 As with Remark 4, one can also establish the identity (14) first for natural numbers

by direct computation avoiding the theory of fractional dimensional integrals, and then extrapolate to the case of more general values of

. This particular identity is also simple enough that it can be directly established by integration by parts without much difficulty, even for fractional values of

.

A more complicated version of this argument establishes the non-endpoint multilinear Kakeya inequality (without any logarithmic loss in a scale parameter ); this was established in my previous paper with Jon Bennett and Tony Carbery, but using the “natural number

first” approach rather than using the current formalism of fractional dimensional integration. However, the arguments can be translated into this formalism without much difficulty; we do so below the fold. (To simplify the exposition slightly we will not address issues of establishing enough regularity and integrability to justify all the manipulations, though in practice this can be done by standard limiting arguments.)

I was recently asked to contribute a short comment to Nature Reviews Physics, as part of a series of articles on fluid dynamics on the occasion of the 200th anniversary (this August) of the birthday of George Stokes. My contribution is now online as “Searching for singularities in the Navier–Stokes equations“, where I discuss the global regularity problem for Navier-Stokes and my thoughts on how one could try to construct a solution that blows up in finite time via an approximately discretely self-similar “fluid computer”. (The rest of the series does not currently seem to be available online, but I expect they will become so shortly.)

I was pleased to learn this week that the 2019 Abel Prize was awarded to Karen Uhlenbeck. Uhlenbeck laid much of the foundations of modern geometric PDE. One of the few papers I have in this area is in fact a joint paper with Gang Tian extending a famous singularity removal theorem of Uhlenbeck for four-dimensional Yang-Mills connections to higher dimensions. In both these papers, it is crucial to be able to construct “Coulomb gauges” for various connections, and there is a clever trick of Uhlenbeck for doing so, introduced in another important paper of hers, which is absolutely critical in my own paper with Tian. Nowadays it would be considered a standard technique, but it was definitely not so at the time that Uhlenbeck introduced it.

Suppose one has a smooth connection on a (closed) unit ball

in

for some

, taking values in some Lie algebra

associated to a compact Lie group

. This connection then has a curvature

, defined in coordinates by the usual formula

It is natural to place the curvature in a scale-invariant space such as , and then the natural space for the connection would be the Sobolev space

. It is easy to see from (1) and Sobolev embedding that if

is bounded in

, then

will be bounded in

. One can then ask the converse question: if

is bounded in

, is

bounded in

? This can be viewed as asking whether the curvature equation (1) enjoys “elliptic regularity”.

There is a basic obstruction provided by gauge invariance. For any smooth gauge taking values in the Lie group, one can gauge transform

to

and then a brief calculation shows that the curvature is conjugated to

This gauge symmetry does not affect the norm of the curvature tensor

, but can make the connection

extremely large in

, since there is no control on how wildly

can oscillate in space.

However, one can hope to overcome this problem by gauge fixing: perhaps if is bounded in

, then one can make

bounded in

after applying a gauge transformation. The basic and useful result of Uhlenbeck is that this can be done if the

norm of

is sufficiently small (and then the conclusion is that

is small in

). (For large connections there is a serious issue related to the Gribov ambiguity.) In my (much) later paper with Tian, we adapted this argument, replacing Lebesgue spaces by Morrey space counterparts. (This result was also independently obtained at about the same time by Meyer and Rivière.)

To make the problem elliptic, one can try to impose the Coulomb gauge condition

(also known as the Lorenz gauge or Hodge gauge in various papers), together with a natural boundary condition on that will not be discussed further here. This turns (1), (2) into a divergence-curl system that is elliptic at the linear level at least. Indeed if one takes the divergence of (1) using (2) one sees that

and if one could somehow ignore the nonlinear term then we would get the required regularity on

by standard elliptic regularity estimates.
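To make the divergence computation concrete, here is a sketch under one common sign convention for the curvature. The convention is an assumption, since the literature differs in signs and normalisations. Here A_\alpha denotes the components of the connection and F_{\alpha\beta} those of the curvature.

```latex
\begin{align*}
F_{\alpha\beta} &= \partial_\alpha A_\beta - \partial_\beta A_\alpha + [A_\alpha, A_\beta], \\
\partial^\alpha A_\alpha &= 0 \qquad \text{(Coulomb gauge)}, \\
\partial^\alpha F_{\alpha\beta}
  &= \Delta A_\beta - \partial_\beta \left( \partial^\alpha A_\alpha \right) + \partial^\alpha [A_\alpha, A_\beta]
   = \Delta A_\beta + \partial^\alpha [A_\alpha, A_\beta].
\end{align*}
```

In this form the linear part is the Laplacian of the connection, which is what elliptic regularity can invert, while the commutator term is quadratic in the connection and plays the role of the nonlinear term.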

The problem is then how to handle the nonlinear term. If we already knew that was small in the right norm

then one can use Sobolev embedding, Hölder’s inequality, and elliptic regularity to show that the second term in (3) is small compared to the first term, and so one could then hope to eliminate it by perturbative analysis. However, proving that

is small in this norm is exactly what we are trying to prove! So this approach seems circular.

Uhlenbeck’s clever way out of this circularity is a textbook example of what is now known as a “continuity” argument. Instead of trying to work just with the original connection , one works with the rescaled connections

for

, with associated rescaled curvatures

. If the original curvature

is small in

norm (e.g. bounded by some small

), then so are all the rescaled curvatures

. We want to obtain a Coulomb gauge at time

; this is difficult to do directly, but it is trivial to obtain a Coulomb gauge at time

, because the connection vanishes at this time. On the other hand, once one has successfully obtained a Coulomb gauge at some time

with

small in the natural norm

(say bounded by

for some constant

which is large in absolute terms, but not so large compared with say

), the perturbative argument mentioned earlier (combined with the qualitative hypothesis that

is smooth) actually works to show that a Coulomb gauge can also be constructed and be small for all sufficiently close nearby times

to

; furthermore, the perturbative analysis actually shows that the nearby gauges enjoy a slightly better bound on the

norm, say

rather than

. As a consequence of this, the set of times

for which one has a good Coulomb gauge obeying the claimed estimates is both open and closed in

, and also contains

. Since the unit interval

is connected, it must then also contain

. This concludes the proof.

One of the lessons I drew from this example is to not be deterred (especially in PDE) by an argument seeming to be circular; if the argument is still sufficiently “nontrivial” in nature, it can often be modified into a usefully non-circular argument that achieves what one wants (possibly under an additional qualitative hypothesis, such as a continuity or smoothness hypothesis).

I have just uploaded to the arXiv my paper “On the universality of the incompressible Euler equation on compact manifolds, II. Non-rigidity of Euler flows“, submitted to Pure and Applied Functional Analysis. This paper continues my attempts to establish “universality” properties of the Euler equations on Riemannian manifolds , as I conjecture that the freedom to set the metric

ought to allow one to “program” such Euler flows to exhibit a wide range of behaviour, and in particular to achieve finite time blowup (if the dimension is sufficiently large, at least).

In coordinates, the Euler equations read

where is the pressure field and

is the velocity field, and

denotes the Levi-Civita connection with the usual Penrose abstract index notation conventions; we restrict attention here to the case where

are smooth and

is compact, smooth, orientable, connected, and without boundary. Let’s call

an Euler flow on

(for the time interval

) if it solves the above system of equations for some pressure

, and an incompressible flow if it just obeys the divergence-free relation

. Thus every Euler flow is an incompressible flow, but the converse is certainly not true; for instance the various conservation laws of the Euler equation, such as conservation of energy, will already block most incompressible flows from being an Euler flow, or even being approximated in a reasonably strong topology by such Euler flows.

However, one can ask if an incompressible flow can be extended to an Euler flow by adding some additional dimensions to . In my paper, I formalise this by considering warped products

of

which (as a smooth manifold) are products

of

with a torus, with a metric

given by

for , where

are the coordinates of the torus

, and

are smooth positive coefficients for

; in order to preserve the incompressibility condition, we also require the volume preservation property

though in practice we can quickly dispose of this condition by adding one further “dummy” dimension to the torus . We say that an incompressible flow

is extendible to an Euler flow if there exists a warped product

extending

, and an Euler flow

on

of the form

for some “swirl” fields . The situation here is motivated by the familiar situation of studying axisymmetric Euler flows

on

, which in cylindrical coordinates take the form

The base component

of this flow is then a flow on the two-dimensional plane which is not quite incompressible (due to the failure of the volume preservation condition (2) in this case) but still satisfies a system of equations (coupled with a passive scalar field

that is basically the square of the swirl

) that is reminiscent of the Boussinesq equations.

On a fixed -dimensional manifold

, let

denote the space of incompressible flows

, equipped with the smooth topology (in spacetime), and let

denote the space of such flows that are extendible to Euler flows. Our main theorem is

Theorem 1

- (i) (Generic inextendibility) Assume

. Then

is of the first category in

(the countable union of nowhere dense sets in

).

- (ii) (Non-rigidity) Assume

(with an arbitrary metric

). Then

is somewhere dense in

(that is, the closure of

has non-empty interior).

More informally, starting with an incompressible flow, one usually cannot extend it to an Euler flow just by extending the manifold, warping the metric, and adding swirl coefficients, even if one is allowed to select the dimension of the extension, as well as the metric and coefficients, arbitrarily. However, many such flows can be perturbed to be extendible in such a manner (though different perturbations will require different extensions, in particular the dimension of the extension will not be fixed). Among other things, this means that conservation laws such as energy (or momentum, helicity, or circulation) no longer present an obstruction when one is allowed to perform an extension (basically this is because the swirl components of the extension can exchange energy (or momentum, etc.) with the base components in a basically arbitrary fashion).

These results fall short of my hopes to use the ability to extend the manifold to create universal behaviour in Euler flows, because of the fact that each flow requires a different extension in order to achieve the desired dynamics. Still it does seem to provide a little bit of support to the idea that high-dimensional Euler flows are quite “flexible” in their behaviour, though not completely so due to the generic inextendibility phenomenon. This flexibility reminds me a little bit of the flexibility of weak solutions to equations such as the Euler equations provided by the “h-principle” of Gromov and its variants (as discussed in these recent notes), although in this case the flexibility comes from adding additional dimensions, rather than by repeatedly adding high-frequency corrections to the solution.

The proof of part (i) of the theorem basically proceeds by a dimension counting argument (similar to that in the proof of Proposition 9 of these recent lecture notes of mine). Heuristically, the point is that an arbitrary incompressible flow is essentially determined by

independent functions of space and time, whereas the warping factors

are functions of space only, the pressure field is one function of space and time, and the swirl fields

are technically functions of both space and time, but have the same number of degrees of freedom as a function just of space, because they solve an evolution equation. When

, this means that there are fewer unknown functions of space and time than prescribed functions of space and time, which is the source of the generic inextendibility. This simple argument breaks down when

, but we do not know whether the claim is actually false in this case.

The proof of part (ii) proceeds by direct calculation of the effect of the warping factors and swirl velocities, which effectively create a forcing term (of Boussinesq type) in the first equation of (1) that is a combination of functions of the Eulerian spatial coordinates (coming from the warping factors) and the Lagrangian spatial coordinates

(which arise from the swirl velocities, which are passively transported by the flow). In a non-empty open subset of

, the combination of these coordinates becomes a non-degenerate set of coordinates for spacetime, and one can then use the Stone-Weierstrass theorem to conclude. The requirement that

be topologically a torus is a technical hypothesis in order to avoid topological obstructions such as the hairy ball theorem, but it may be that the hypothesis can be dropped (and it may in fact be true, in the

case at least, that

is dense in all of

, not just in a non-empty open subset).

These lecture notes are a continuation of the 254A lecture notes from the previous quarter.

We consider the Euler equations for incompressible fluid flow on a Euclidean space ; we will label

as the “Eulerian space”

(or “Euclidean space”, or “physical space”) to distinguish it from the “Lagrangian space”

(or “labels space”) that we will introduce shortly (but the reader is free to also ignore the

or

subscripts if he or she wishes). Elements of Eulerian space

will be referred to by symbols such as

, we use

to denote Lebesgue measure on

and we will use

for the

coordinates of

, and use indices such as

to index these coordinates (with the usual summation conventions), for instance

denotes partial differentiation along the

coordinate. (We use superscripts for coordinates

instead of subscripts

to be compatible with some differential geometry notation that we will use shortly; in particular, when using the summation notation, we will now be matching subscripts with superscripts for the pair of indices being summed.)

In Eulerian coordinates, the Euler equations read

where is the velocity field and

is the pressure field. These are functions of time

and on the spatial location variable

. We will refer to the coordinates

as Eulerian coordinates. However, if one reviews the physical derivation of the Euler equations from 254A Notes 0, before one takes the continuum limit, the fundamental unknowns were not the velocity field

or the pressure field

, but rather the trajectories

, which can be thought of as a single function

from the coordinates

(where

is a time and

is an element of the label set

) to

. The relationship between the trajectories

and the velocity field was given by the informal relationship

We will refer to the coordinates as (discrete) Lagrangian coordinates for describing the fluid.
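For concreteness, the incompressible Euler equations in Eulerian coordinates can be written in their standard form as follows, using the index conventions described above, together with the informal relation between discrete trajectories and the velocity field. The label a, the notation x^{(a)}(t) for particle trajectories, and the use of \delta^{ij} for the inverse Euclidean metric are assumptions made for this sketch.

```latex
\begin{align*}
\partial_t u^i + u^j \partial_j u^i &= - \delta^{ij} \partial_j p, \\
\partial_i u^i &= 0, \\
\frac{d}{dt} x^{(a)}(t) &\approx u\big( t, x^{(a)}(t) \big).
\end{align*}
```

The first two lines are the momentum equation and the divergence-free condition. The third says that each particle moves with the fluid velocity at its current location, which is the relation that the trajectory map turns into an exact ODE in the continuum setting.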

In view of this, it is natural to ask whether there is an alternate way to formulate the continuum limit of incompressible inviscid fluids, by using a continuous version of the Lagrangian coordinates, rather than Eulerian coordinates. This is indeed the case. Suppose for instance one has a smooth solution

to the Euler equations on a spacetime slab

in Eulerian coordinates; assume furthermore that the velocity field

is uniformly bounded. We introduce another copy

of

, which we call Lagrangian space or labels space; we use symbols such as

to refer to elements of this space,

to denote Lebesgue measure on

, and

to refer to the

coordinates of

. We use indices such as

to index these coordinates, thus for instance

denotes partial differentiation along the

coordinate. We will use summation conventions for both the Eulerian coordinates

and the Lagrangian coordinates

, with an index being summed if it appears as both a subscript and a superscript in the same term. While

and

are of course isomorphic, we will try to refrain from identifying them, except perhaps at the initial time

in order to fix the initialisation of Lagrangian coordinates.

Given a smooth and bounded velocity field , define a trajectory map for this velocity to be any smooth map

that obeys the ODE

in view of (2), this describes the trajectory (in ) of a particle labeled by an element

of

. From the Picard existence theorem and the hypothesis that

is smooth and bounded, such a map exists and is unique as long as one specifies the initial location

assigned to each label

. Traditionally, one chooses the initial condition

for , so that we label each particle by its initial location at time

; we are also free to specify other initial conditions for the trajectory map if we please. Indeed, we have the freedom to “permute” the labels

by an arbitrary diffeomorphism: if

is a trajectory map, and

is any diffeomorphism (a smooth map whose inverse exists and is also smooth), then the map

is also a trajectory map, albeit one with different initial conditions

.

Despite the popularity of the initial condition (4), we will try to keep conceptually separate the Eulerian space from the Lagrangian space

, as they play different physical roles in the interpretation of the fluid; for instance, while the Euclidean metric

is an important feature of Eulerian space

, it is not a geometrically natural structure to use in Lagrangian space

. We have the following more general version of Exercise 8 from 254A Notes 2:

Exercise 1 Let

be smooth and bounded.

- If

is a smooth map, show that there exists a unique smooth trajectory map

with initial condition

for all

.

- Show that if

is a diffeomorphism and

, then the map

is also a diffeomorphism.

Remark 2 The first of the Euler equations (1) can now be written in the form

which can be viewed as a continuous limit of Newton’s second law

.

Call a diffeomorphism (oriented) volume preserving if one has the equation

for all , where the total differential

is the

matrix with entries

for

and

, where

are the components of

. (If one wishes, one can also view

as a linear transformation from the tangent space

of Lagrangian space at

to the tangent space

of Eulerian space at

.) Equivalently,

is orientation preserving and one has a Jacobian-free change of variables formula

for all , which is in turn equivalent to

having the same Lebesgue measure as

for any measurable set

.

The divergence-free condition then can be nicely expressed in terms of volume-preserving properties of the trajectory maps

, in a manner which confirms the interpretation of this condition as an incompressibility condition on the fluid:

Lemma 3 Let

be smooth and bounded, let

be a volume-preserving diffeomorphism, and let

be the trajectory map. Then the following are equivalent:

on

.

is volume-preserving for all

.

Proof: Since is orientation-preserving, we see from continuity that

is also orientation-preserving. If

is also volume-preserving, then for any

we have the conservation law

for all . Differentiating in time using the chain rule and (3) we conclude that

for all , and hence by change of variables

which by integration by parts gives

for all and

, so

is divergence-free.

To prove the converse implication, it is convenient to introduce the labels map , defined by setting

to be the inverse of the diffeomorphism

, thus

for all . By the implicit function theorem,

is smooth, and by differentiating the above equation in time using (3) we see that

where is the usual material derivative

acting on functions on . If

is divergence-free, we have from integration by parts that

for any test function . In particular, for any

, we can calculate

and hence

for any . Since

is volume-preserving, so is

, thus

Thus is volume-preserving, and hence

is also.
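The equivalence in the lemma can be illustrated numerically. The sketch below integrates the trajectory ODE ∂_t X = u(X) together with its linearisation ∂_t DX = (∇u)(X) DX, starting from the identity labelling X(0,a) = a, and reports the Jacobian determinant. The two velocity fields (a divergence-free cellular flow and a compressible shear), the names `incompressible`, `compressible` and `flow_with_jacobian`, and the choice of a fixed-step RK4 integrator are all illustrative assumptions.

```python
import numpy as np

def incompressible(x):
    """u = (sin x1 cos x2, -cos x1 sin x2): smooth, bounded, divergence-free."""
    s1, c1, s2, c2 = np.sin(x[0]), np.cos(x[0]), np.sin(x[1]), np.cos(x[1])
    u = np.array([s1 * c2, -c1 * s2])
    grad = np.array([[c1 * c2, -s1 * s2],
                     [s1 * s2, -c1 * c2]])   # grad[i, j] = d u^i / d x^j
    return u, grad

def compressible(x):
    """u = (sin x1, 0): smooth and bounded, with divergence cos x1."""
    u = np.array([np.sin(x[0]), 0.0])
    grad = np.array([[np.cos(x[0]), 0.0],
                     [0.0, 0.0]])
    return u, grad

def flow_with_jacobian(field, a, T=1.0, steps=1000):
    """RK4 integration of dX/dt = u(X), dJ/dt = (grad u)(X) J with
    X(0) = a and J(0) = I, so that J(T) approximates DX(T, a)."""
    def rhs(X, J):
        u, grad = field(X)
        return u, grad @ J

    X = np.array(a, dtype=float)
    J = np.eye(2)
    h = T / steps
    for _ in range(steps):
        k1x, k1j = rhs(X, J)
        k2x, k2j = rhs(X + 0.5 * h * k1x, J + 0.5 * h * k1j)
        k3x, k3j = rhs(X + 0.5 * h * k2x, J + 0.5 * h * k2j)
        k4x, k4j = rhs(X + h * k3x, J + h * k3j)
        X = X + (h / 6.0) * (k1x + 2.0 * k2x + 2.0 * k3x + k4x)
        J = J + (h / 6.0) * (k1j + 2.0 * k2j + 2.0 * k3j + k4j)
    return X, J

for name, field in (("incompressible", incompressible), ("compressible", compressible)):
    X, J = flow_with_jacobian(field, (0.3, 0.7))
    print(name, "det DX(T, a) =", np.linalg.det(J))
```

For the divergence-free field the determinant should stay at 1 up to integration error, while for the compressible field it drifts away from 1. In the compressible example the first coordinate decouples into a scalar autonomous ODE, so the determinant can be cross-checked against sin(X1(T)) / sin(a1).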

Exercise 4 Let

be a continuously differentiable map from the time interval

to the general linear group

of invertible

matrices. Establish Jacobi’s formula

and use this and (6) to give an alternate proof of Lemma 3 that does not involve any integration in space.
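For reference, here is Jacobi's formula in its standard form, writing A(t) for the matrix-valued map (the symbol is chosen here for illustration). It is followed by a sketch of how it would apply to the differential DX of a trajectory map X for a velocity field u, assuming the trajectory ODE \partial_t X(t,a) = u(t, X(t,a)).

```latex
\begin{align*}
\frac{d}{dt} \det A(t) &= \det A(t) \; \mathrm{tr}\!\left( A(t)^{-1} \frac{d}{dt} A(t) \right), \\
\partial_t \, DX(t,a) &= (\nabla u)\big(t, X(t,a)\big) \, DX(t,a), \\
\partial_t \det DX(t,a) &= (\nabla \cdot u)\big(t, X(t,a)\big) \, \det DX(t,a).
\end{align*}
```

The second line comes from differentiating the trajectory ODE in the label variable, and the third from inserting it into Jacobi's formula and using the cyclic property of the trace. The determinant is therefore constant in time for every label exactly when the divergence of u vanishes along all trajectories.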

Remark 5 One can view the use of Lagrangian coordinates as an extension of the method of characteristics. Indeed, from the chain rule we see that for any smooth function

of Eulerian spacetime, one has

and hence any transport equation that in Eulerian coordinates takes the form

for smooth functions

of Eulerian spacetime is equivalent to the ODE

where

are the smooth functions of Lagrangian spacetime defined by

In this set of notes we recall some basic differential geometry notation, particularly with regards to pullbacks and Lie derivatives of differential forms and other tensor fields on manifolds such as and

, and explore how the Euler equations look in this notation. Our discussion will be entirely formal in nature; we will assume that all functions have enough smoothness and decay at infinity to justify the relevant calculations. (It is possible to work rigorously in Lagrangian coordinates – see for instance the work of Ebin and Marsden – but we will not do so here.) As a general rule, Lagrangian coordinates tend to be somewhat less convenient to use than Eulerian coordinates for establishing the basic analytic properties of the Euler equations, such as local existence, uniqueness, and continuous dependence on the data; however, they are quite good at clarifying the more algebraic properties of these equations, such as conservation laws and the variational nature of the equations. It may well be that in the future we will be able to use the Lagrangian formalism more effectively on the analytic side of the subject also.

Remark 6 One can also write the Navier-Stokes equations in Lagrangian coordinates, but the equations are not expressed in a favourable form in these coordinates, as the Laplacian

appearing in the viscosity term becomes replaced with a time-varying Laplace-Beltrami operator. As such, we will not discuss the Lagrangian coordinate formulation of Navier-Stokes here.

Note: this post is not required reading for this course, or for the sequel course in the winter quarter.

In Notes 2, we reviewed the classical construction of Leray of global weak solutions to the Navier-Stokes equations. We did not quite follow Leray’s original proof, in that the notes relied more heavily on the machinery of Littlewood-Paley projections, which have become increasingly common tools in modern PDE. On the other hand, we did use the same “exploiting compactness to pass to weakly convergent subsequence” strategy that is the standard one in the PDE literature used to construct weak solutions.

As I discussed in a previous post, the manipulation of sequences and their limits is analogous to a “cheap” version of nonstandard analysis in which one uses the Fréchet filter rather than an ultrafilter to construct the nonstandard universe. (The manipulation of generalised functions of Colombeau-type can also be comfortably interpreted within this sort of cheap nonstandard analysis.) Augmenting the manipulation of sequences with the right to pass to subsequences whenever convenient is then analogous to a sort of “lazy” nonstandard analysis, in which the implied ultrafilter is never actually constructed as a “completed object”, but is instead lazily evaluated, in the sense that whenever membership of a given subsequence of the natural numbers in the ultrafilter needs to be determined, one either passes to that subsequence (thus placing it in the ultrafilter) or the complement of the sequence (placing it out of the ultrafilter). This process can be viewed as the initial portion of the transfinite induction that one usually uses to construct ultrafilters (as discussed using a voting metaphor in this post), except that there is generally no need in any given application to perform the induction for any uncountable ordinal (or indeed for most of the countable ordinals also).

On the other hand, it is also possible to work directly in the orthodox framework of nonstandard analysis when constructing weak solutions. This leads to an approach to the subject which is largely equivalent to the usual subsequence-based approach, though there are some minor technical differences (for instance, the subsequence approach occasionally requires one to work with separable function spaces, whereas in the ultrafilter approach the reliance on separability is largely eliminated, particularly if one imposes a strong notion of saturation on the nonstandard universe). The subject acquires a more “algebraic” flavour, as the quintessential analysis operation of taking a limit is replaced with the “standard part” operation, which is an algebra homomorphism. The notion of a sequence is replaced by the distinction between standard and nonstandard objects, and the need to pass to subsequences disappears entirely. Also, the distinction between “bounded sequences” and “convergent sequences” is largely eradicated, particularly when the space that the sequences ranged in enjoys some compactness properties on bounded sets. Also, in this framework, the notorious non-uniqueness features of weak solutions can be “blamed” on the non-uniqueness of the nonstandard extension of the standard universe (as well as on the multiple possible ways to construct nonstandard mollifications of the original standard PDE). However, many of these changes are largely cosmetic; switching from a subsequence-based theory to a nonstandard analysis-based theory does not seem to bring one significantly closer for instance to the global regularity problem for Navier-Stokes, but it could have been an alternate path for the historical development and presentation of the subject.

In any case, I would like to present below the fold this nonstandard analysis perspective, quickly translating the relevant components of real analysis, functional analysis, and distributional theory that we need to this perspective, and then use it to re-prove Leray’s theorem on existence of global weak solutions to Navier-Stokes.
