It has been a while since the last AppStream-related post (or any post for that matter) on this blog, but of course development didn’t stand still all this time. Quite the opposite – it was just me writing less about it, which actually is a problem as some of the new features are much less visible. People don’t seem to re-read the specification constantly for some reason. As a consequence, we have pretty good adoption of features I blogged about (like fonts support), but much of the new stuff is still not widely used. Also, I had to make a promise to several people to blog about the new changes more often, and I am definitely planning to do so. So, expect posts about AppStream stuff a bit more often now.

What actually was AppStream again? The AppStream Freedesktop Specification describes two XML metadata formats to describe software components: One for software developers to describe their software, and one for distributors and software repositories to describe (possibly curated) collections of software. The format written by upstream projects is called Metainfo and encompasses any data installed in /usr/share/metainfo/, while the distribution format is just called Collection Metadata. A reference implementation of the format and related features written in C/GLib exists as well as Qt bindings for it, so the data can be easily accessed by projects which need it.

The software metadata contains a unique ID for the respective software so it can be identified across software repositories. For example, the VLC Mediaplayer is known by the ID org.videolan.vlc in every software repository, no matter whether it’s the package archives of Debian, Fedora, Ubuntu or a Flatpak repository. The metadata also contains translatable names, summaries, descriptions, release information etc. as well as a type for the software. In general, any information about a software component that is in some form relevant to displaying it in software centers is or can be present in AppStream. The newest revisions of the specification also provide a lot of technical data for systems to make the right choices on behalf of the user, e.g. Fwupd uses AppStream data to describe compatible devices for a certain firmware, or the mediatype information in AppStream metadata can be used to more easily install applications for an unknown filetype. What AppStream does not contain is data the software bundling systems are responsible for. So mechanistic data on how to build a software component or how exactly to install it is out of scope.

So, now let’s finally get to the new AppStream features since last time I talked about it – which was almost two years ago, so quite a lot of stuff has accumulated!

Specification Changes/Additions

Web Application component type

(Since v0.11.7) A new component type web-application has been introduced to describe web applications. A web application can for example be GMail, YouTube, Twitter, etc. launched by the browser in a special mode with less chrome. Fundamentally though it is a simple web link. Therefore, web apps need a launchable tag of type url to specify a URL used to launch them. Refer to the specification for details. Here is a (shortened) example metainfo file for the Riot Matrix client web app:

<component type="web-application">
  <id>im.riot.webapp</id>
  <metadata_license>FSFAP</metadata_license>
  <project_license>Apache-2.0</project_license>
  <name>Riot.im</name>
  <summary>A glossy Matrix collaboration client for the web</summary>
  <description>
    <p>Communicate with your team[...]</p>
  </description>
  <icon type="stock">im.riot.webapp</icon>
  <categories>
    <category>Network</category>
    <category>Chat</category>
    <category>VideoConference</category>
  </categories>
  <url type="homepage">https://riot.im/</url>
  <launchable type="url">https://riot.im/app</launchable>
</component>

Repository component type

(Since v0.12.1) The repository component type describes a repository of downloadable content (usually other software) to be added to the system. Once a component of this type is installed, the user has access to the new content. In case the repository contains proprietary software, this component type pairs well with the agreements section.

This component type can be used to provide easy installation of e.g. trusted Debian or Fedora repositories, but also can be used for other downloadable content. Refer to the specification entry for more information.

Operating System component type

(Since v0.12.5) It makes sense for the operating system itself to be represented in the AppStream metadata catalog. Information about it can be used by software centers to display information about the current OS release and also to notify about possible system upgrades. It also serves as a component to which the software center can attribute package updates that do not have AppStream metadata. The operating-system component type was designed for this and you can find more information about it in the specification documentation.

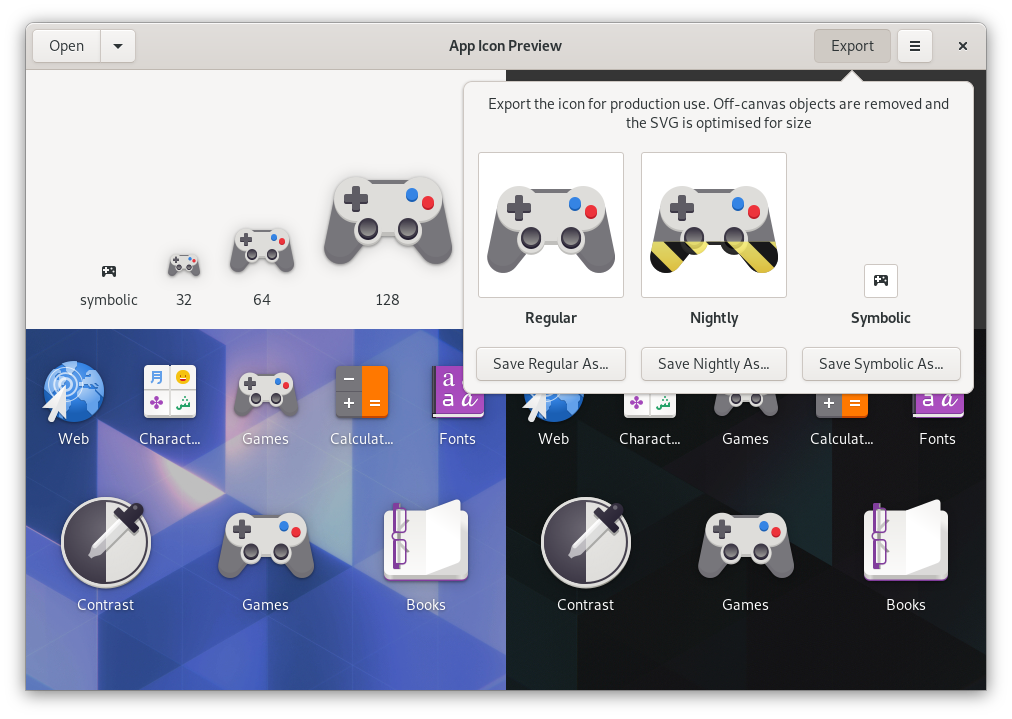

Icon Theme component type

(Since v0.12.8) While styles, themes, desktop widgets etc. are already covered in AppStream via the addon component type as they are specific to the toolkit and desktop environment, there is one exception: Icon themes are described by a Freedesktop specification and (usually) work independently of the desktop environment. Because of that and at the request of desktop environment developers, a new icon-theme component type was introduced to describe icon themes specifically. From the data I see in the wild and in Debian specifically, this component type appears to be very underutilized. So if you are an icon theme developer, consider adding a metainfo file to make the theme show up in software centers! You can find a full description of this component type in the specification.
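To give an idea of how little is needed, a metainfo file for an icon theme could look roughly like the sketch below. The ID, name, summary and license are made up for illustration, and only generic tags that every component uses are shown; the specification remains the authoritative reference for what an icon-theme component should contain.

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!-- Hypothetical icon theme; every value here is a placeholder -->
<component type="icon-theme">
  <id>org.example.MyIconTheme</id>
  <metadata_license>FSFAP</metadata_license>
  <project_license>GPL-3.0-or-later</project_license>
  <name>My Icon Theme</name>
  <summary>A clean and colorful icon theme</summary>
</component>
```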

Runtime component type

(Since v0.12.10) A runtime is mainly known in the context of Flatpak bundles, but it actually is a more universal concept. A runtime describes a defined collection of software components used to run other applications. To represent runtimes in the software catalog, the new runtime component type was introduced in the specification, but it has been used by Flatpak for a while already as a nonstandard extension.

Release types

(Since v0.12.0) Not all software releases are created equal. Some may be for general use, others may be development releases on the way to becoming an actual final release. In order to reflect that, AppStream introduced a type property to the release tag in a releases block, which can be set to either stable or development. Software centers can then decide to hide or show development releases.
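As a small sketch of what this looks like in a Metainfo file, the following releases block marks one release as a development release and one as stable. The version numbers and dates are invented for the example.

```xml
<releases>
  <!-- Hypothetical versions and dates -->
  <release version="2.0~beta1" date="2020-01-10" type="development"/>
  <release version="1.9" date="2019-11-02" type="stable"/>
</releases>
```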

End-of-life date for releases

(Since v0.12.5) Some software releases have an end-of-life date from which onward they will no longer be supported by the developers. This is especially true for Linux distributions which are described in an operating-system component. To define an end-of-life date, a release in AppStream can now have a date_eol property using the same syntax as a date property but defining the date when the release will no longer be supported (refer to the releases tag definition).
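Put together with the operating-system component type, this could look like the following sketch. The distribution, its ID and all dates are hypothetical, and the component is reduced to the tags needed to show the date_eol property.

```xml
<!-- Hypothetical distribution; ID, versions and dates are placeholders -->
<component type="operating-system">
  <id>org.example.exampleos</id>
  <metadata_license>FSFAP</metadata_license>
  <name>Example OS</name>
  <summary>A hypothetical Linux distribution</summary>
  <releases>
    <!-- Current release, still supported -->
    <release version="11" date="2021-08-01" date_eol="2024-08-01"/>
    <!-- Older release with an earlier end-of-life date -->
    <release version="10" date="2019-07-01" date_eol="2022-07-01"/>
  </releases>
</component>
```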

Details URL for releases

(Since v0.12.5) The release descriptions are short, text-only summaries of a release, usually only consisting of a few bullet points. They are intended to give users a quick overview of a new release that can be displayed directly in the software updater. But sometimes you want more than that. Maybe you are an application like Blender or Krita and have prepared an extensive website with an in-depth overview, images and videos describing the new release. For these cases, AppStream now permits a url tag in a release tag pointing to a website that contains more information about a particular release.
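A minimal sketch of a release with such a link might look like this. The version, date and URL are placeholders, and the specification may define additional attributes for the url tag that are not shown here.

```xml
<release version="1.2" date="2020-01-15">
  <description>
    <p>Short summary of the most important changes.</p>
  </description>
  <!-- Hypothetical page with the extended release announcement -->
  <url>https://example.com/releases/1.2.html</url>
</release>
```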

Release artifacts

(Since v0.12.6) AppStream limited release descriptions to their version numbers and release notes for a while, without linking the actual released artifacts. This was intentional, as any information how to get or install software should come from the bundling/packaging system that Collection Metadata was generated for.

But the AppStream metadata has outgrown this more narrowly defined purpose and has since been used for a lot more things, like generating HTML download pages for software, making it the canonical source for all the software metadata in some projects. Richard Hughes’ awesome Fwupd project also needed to link to firmware binaries from an AppStream metadata file, as the LVFS/Fwupd use AppStream metadata exclusively to provide metadata for firmware. Therefore, the specification was extended with an artifacts tag for releases, to link to the actual release binaries and tarballs. This replaced the previous makeshift “release location” tag.

Release artifacts always have to link to releases directly, so the releases can be acquired by machines immediately and without human intervention. An artifact can have a type of source or binary, indicating whether a source tarball or binary artifact is linked. Each binary artifact can also have an associated platform triplet for Linux systems, an identifier for firmware, or any other identifier for a platform. Furthermore, we permit sha256 and blake2 checksums for the release artifacts, as well as specifying sizes. Take a look at the example below, or read the specification for details.

<releases>
  <release version="1.2" date="2014-04-12" urgency="high">
    [...]
    <artifacts>
      <artifact type="binary" platform="x86_64-linux-gnu">
        <location>https://example.com/mytarball.bin.tar.xz</location>
        <checksum type="blake2">852ed4aff45e1a9437fe4774b8997e4edfd31b7db2e79b8866832c4ba0ac1ebb7ca96cd7f95da92d8299da8b2b96ba480f661c614efd1069cf13a35191a8ebf1</checksum>
        <size type="download">12345678</size>
        <size type="installed">42424242</size>
      </artifact>
      <artifact type="source">
        <location>https://example.com/mytarball.tar.xz</location>
        [...]
      </artifact>
    </artifacts>
  </release>
</releases>

Issue listings for releases

(Since v0.12.9) Software releases often fix issues, sometimes security relevant ones that have a CVE ID. AppStream provides a machine-readable way to figure out which components on your system are currently vulnerable to which CVE registered issues. Additionally, a release tag can also just contain references to any normal resolved bugs, via bugtracker URLs. Refer to the specification for details. Example for the issues tag in AppStream Metainfo files:

<issues>
  <issue url="https://example.com/bugzilla/12345">bz#12345</issue>
  <issue type="cve">CVE-2019-123456</issue>
</issues>

Requires and Recommends relations

(Since v0.12.0) Sometimes software has certain requirements that are only satisfied by some systems, and sometimes it might recommend specific things on the system it will run on in order to run at full performance.

I was against adding relations to AppStream for quite a while, as doing so would add a more “functional” dimension to it, impacting how and when software is installed, as opposed to being only descriptive and not essential to be read in order to install software correctly. However, AppStream has pretty much outgrown its initial narrow scope and adding relation information to Metainfo files was a natural step to take. For Fwupd it was an essential step, as Fwupd firmware might have certain hard requirements on the system in order to be installed properly. And AppStream requirements and recommendations go way beyond what regular package dependencies could do in Linux distributions so far.

Requirements and recommendations can be on other software components via their id, on a modalias, specific kernel version, existing firmware version or for making system memory recommendations. See the specification for details on how to use this. Example:

<requires>
  <id version="1.0" compare="ge">org.example.MySoftware</id>
  <kernel version="5.6" compare="ge">Linux</kernel>
</requires>
<recommends>
  <memory>2048</memory> <!-- recommend at least 2GiB of memory -->
</recommends>

This means that AppStream currently supports provides, suggests, recommends and requires relations to refer to other software components or system specifications.

Agreements

(Since v0.12.1) The new agreement section in AppStream Metainfo files was added to make it easier for software to be compliant with the EU GDPR. It has since been expanded to be used for EULAs as well, which was a request coming (to no surprise) from people having to deal with corporate and proprietary software components. An agreement consists of individual sections with headers and descriptive texts and should – depending on the type – be shown to the user upon installation or first use of a software component. It can also be very useful in case the software component is a firmware or driver (which often is proprietary – and companies really love their legal documents and EULAs).
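The structure of sections with headers and texts could be sketched as follows. The tag and attribute names (agreement, version_id, agreement_section) are written down as I understand them from the specification and should be double-checked there; the texts are invented.

```xml
<!-- Sketch only: verify tag and attribute names against the specification -->
<agreement type="eula" version_id="1.0">
  <agreement_section type="introduction">
    <name>Introduction</name>
    <description>
      <p>These terms apply to the hypothetical Example firmware.</p>
    </description>
  </agreement_section>
  <agreement_section>
    <name>Limitations</name>
    <description>
      <p>You may not redistribute this firmware without permission.</p>
    </description>
  </agreement_section>
</agreement>
```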

Contact URL type

(Since v0.12.4) The contact URL type can be used to simply set a link back to the developer of the software component. This may be a URL to a contact form, their website or even a mailto: link. See the specification for all URL types AppStream supports.
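In a Metainfo file this sits next to the other url tags, for example like this (both addresses are placeholders):

```xml
<url type="homepage">https://example.org/</url>
<!-- Could also be a mailto: link -->
<url type="contact">https://example.org/contact</url>
```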

Videos as software screenshots

(Since v0.12.8) This one was quite long in the making – the feature request for videos as screenshots had been filed in early 2018. I was a bit wary about adding video, as that lets you run into a codec and container hell as well as requiring software centers to support video and potentially requiring the appstream-generator to get into video transcoding, which I really wanted to avoid. Alternatively, we would have had to make AppStream add support for multiple likely proprietary video hosting platforms, which certainly would have been a bad idea on every level. Additionally, I didn’t want to have people add really long introductory videos to their applications.

Ultimately, the problem was solved by simplification and reduction: People can add a video as “screenshot” to their software components, as long as it isn’t the first screenshot in the list. We only permit the vp9 and av1 codecs and the webm and matroska container formats. Developers should expect the audio of their videos to be muted, but if audio is present, the opus codec must be used. Videos will be size-limited, for example Debian imposes a 14MiB limit on video filesize. The appstream-generator will check for all of these requirements and reject a video in case it doesn’t pass one of the checks. This should make implementing videos in software centers easy, and also provide the safety guarantees and flexibility we want.

So far we have not seen many videos used for application screenshots. As always, check the specification for details on videos in AppStream. Example use in a screenshots tag:

<screenshots>
  <screenshot type="default">
    <image type="source" width="1600" height="900">https://example.com/foobar/screenshot-1.png</image>
  </screenshot>
  <screenshot>
    <video codec="av1" width="1600" height="900">https://example.com/foobar/screencast.mkv</video>
  </screenshot>
</screenshots>

Emphasis and code markup in descriptions

(Since v0.12.8) It has long been requested to have a little bit more expressive markup in descriptions in AppStream, at least more than just lists and paragraphs. That has not happened for a while, as it would be a breaking change to all existing AppStream parsers. Additionally, I didn’t want to let AppStream descriptions become long, general-purpose “how to use this software” documents. They are intended to give a quick overview of the software, and not comprehensive information. Ultimately, however, we decided to add support for at least two more elements to format text: inline code elements as well as em emphases. There may be more to come, but that’s it for now. This change was made about half a year ago, and people are currently advised to use the new styling tags sparingly, as otherwise their software descriptions may look odd when parsed with older AppStream implementation versions.
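A description that uses both elements sparingly might look like the sketch below. The application and its command-line option are made up.

```xml
<description>
  <p>
    Foobar converts images in bulk. It is <em>not</em> an image editor.
    Run <code>foobar --help</code> to list all available options.
  </p>
</description>
```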

Remove-component merge mode

(Since v0.12.4) This addition is specified for the Collection Metadata only, as it affects curation. Since AppStream metadata is in one big pool for Linux distributions, and distributions like Debian freeze their repositories, it sometimes is required to merge metadata from different sources on the client system instead of generating it in the right format on the server. This can also be used for curation by vendors of software centers. In order to edit preexisting metadata, special merge components are created. These can permit appending data, replacing data etc. in existing components in the metadata pool. The one thing that was missing was a mode that permitted the complete removal of a component. This was added via a special remove-component merge mode. This mode can be used to pull metadata from a software center’s catalog immediately even if the original metadata was frozen in place in a package repository. This can be very useful in case an inappropriate software component is found in the repository of a Linux distribution post-release. Refer to the specification for details.
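As a rough sketch, a merge component that removes an unwanted entry from the pool might look like this in Collection Metadata. The component ID is hypothetical, and I am assuming here that the mode is selected via a merge attribute on the component tag; please check the specification for the exact syntax.

```xml
<!-- Sketch only: the "merge" attribute name is an assumption to verify -->
<component type="desktop-application" merge="remove-component">
  <id>org.example.UnwantedApp</id>
</component>
```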

Custom metadata

(Since v0.12.1) The AppStream specification is extensive, but it cannot fit every single special usecase. Sometimes requests come up that can’t be generalized easily, and occasionally it is useful to prototype a feature first to see if it is actually used before adding it to the specification properly. For that purpose, the custom tag exists. The tag defines a simple key-value structure that people can use to inject arbitrary metadata into an AppStream metainfo file. The libappstream library will read this tag by default, providing easy access to the underlying data. Thereby, the data can easily be used by custom applications designed to parse it. It is important to note that the appstream-generator tool will by default strip the custom data from files unless it has been whitelisted explicitly. That way, the creator of a metadata collection for a (package) repository has some control over what data ends up in the resulting Collection Metadata file. See the specification for more details on this tag.
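The key-value structure could be used like in this sketch. The keys and values are invented, and the value child tag with its key attribute is how I understand the specification; prefixing keys with a vendor name is simply a convention to avoid clashes.

```xml
<custom>
  <!-- Hypothetical keys, namespaced with a made-up vendor prefix -->
  <value key="ExampleCorp::app_color">#FF0000</value>
  <value key="ExampleCorp::support_tier">gold</value>
</custom>
```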

Miscellaneous additions

(Since v0.12.9) In addition to JPEG and PNG, WebP images are now permitted for screenshots in Metainfo files. These images will – like every image – be converted to PNG images by the tool generating Collection Metadata for a repository though.

(Since v0.12.10) The specification now contains a new name_variant_suffix tag, which is a translatable string that software lists may append to the name of a component in case there are multiple components with the same name. This is intended to be primarily used for firmware in Fwupd, where firmware may have the same name but actually be slightly different (e.g. region-specific). In these cases, the additional name suffix is shown to make it easier to distinguish the different components in case multiple are present.
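For a region-specific firmware, the relevant part of a Metainfo file might look like this sketch (the name and suffix are placeholders):

```xml
<name>Example Device Firmware</name>
<!-- Shown next to the name when several components share it -->
<name_variant_suffix>Europe</name_variant_suffix>
```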

(Since v0.12.10) AppStream has a URI format to install applications directly from webpages via the appstream: scheme. This URI scheme now permits alternative IDs for the same component, in case it switched its ID in the past. Take a look at the specification for details about the URI format.

(Since v0.12.10) AppStream now supports version 1.1 of the Open Age Rating Service (OARS), so applications (especially games) can voluntarily age-rate themselves. AppStream does not replace parental guidance here, and all data is purely informational.
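An age rating is embedded via a content_rating block. The sketch below assumes a hypothetical game with mild cartoon violence and no profanity; the attribute IDs come from OARS, and a real file would usually be produced with the OARS generator rather than written by hand.

```xml
<content_rating type="oars-1.1">
  <content_attribute id="violence-cartoon">mild</content_attribute>
  <content_attribute id="language-profanity">none</content_attribute>
</content_rating>
```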

Library & Implementation Changes

Of course, besides changes to the specification, the reference implementation also received a lot of improvements. There are too many to list them all, but a few are noteworthy to mention here.

No more automatic desktop-entry file loading

(Since v0.12.3) By default, libappstream was loading information from local .desktop files into the metadata pool of installed applications. This was done to ensure installed apps were represented in software centers to allow them to be uninstalled. This generated much more pain than it was useful for though, with metadata appearing two to three times in software centers because people didn’t set the X-AppStream-Ignore=true tag in their desktop-entry files. Also, the generated data was pretty bad. So, newer versions of AppStream will only load data of installed software that doesn’t have an equivalent in the repository metadata if it ships a metainfo file. One more good reason to ship a metainfo file!

Software centers can override this default behavior change by setting the AS_POOL_FLAG_READ_DESKTOP_FILES flag for AsPool instances (which many already did anyway).

LMDB caches and other caching improvements

(Since v0.12.7) One of the biggest pain points in adding new AppStream features was always adjusting the (de)serialization of the new markup: AppStream exists as a YAML version for Debian-based distributions for Collection Metadata, an XML version based on the Metainfo format as default, and a GVariant binary serialization for on-disk caching. The latter was used to drastically reduce memory consumption and increase speed of software centers: Instead of loading all languages, only the one we currently needed was loaded. The expensive icon-finding logic, building of the token cache for searches and other operations were performed and the result was saved as a binary cache on-disk, so it was instantly ready when the software center was loaded next.

Adjusting three serialization formats was pretty laborious and a very boring task. And at one point I benchmarked the (de)serialization performance of the different formats and found out that the XML reading/writing was actually massively outperforming that of the GVariant cache. Since the XML parser received much more attention, that was only natural (but there were also other issues with GVariant deserializing large dictionary structures).

Ultimately, I removed the GVariant serialization and replaced it with a memory-mapped XML-based cache that reuses 99.9% of the existing XML serialization code. The cache uses LMDB, a small embeddable key-value store. This makes maintaining AppStream much easier, and we are using the same well-tested codepaths for caching now that we also use for normal XML reading/writing. With this change, AppStream also uses even less memory, as we only keep the software components in memory that the software center currently displays. Everything that isn’t directly needed also isn’t in memory. But if we do need the data, it can be pulled from the memory-mapped store very quickly.

While refactoring the caching code, I also decided to give people using libappstream in their own projects a lot more control over the caching behavior. Previously, libappstream was magically handling the cache behind the back of the application that was using it, guessing which behavior was best for the given usecase. But actually, the application using libappstream knows best how caching should be handled, especially when it creates more than one AsPool instance to hold and search metadata. Therefore, libappstream will still pick the best defaults it can, but give the application that uses it all control it needs, down to where to place a cache file, to permit more efficient and more explicit management of caches.

Validator improvements

(Since v0.12.8) The AppStream metadata validator, invoked by running appstreamcli validate <file>, is the tool that each Metainfo file should run through to ensure it conforms to the AppStream specification and to get some useful hints for improving the metadata quality. It knows four issue severities:

- Pedantic issues are hidden by default (show them with the --pedantic flag) and affect upcoming features or really “nice to have” things that are completely nonessential.

- Info issues are not directly a problem, but are hints to improve the metadata and get better overall data. Things the specification recommends but doesn’t mandate also fall into this category.

- Warnings will result in degraded metadata but don’t make the file invalid in its entirety. Yet, they are severe enough that we fail the validation. An example is a screenshot vanishing from its URL: Most of the data is still valid, but the result may not look as intended. Invalid email addresses, invalid tag properties etc. fall into that category as well: They will all reduce the amount of metadata systems have available. So the metadata should definitely be warning-free in order to be valid.

- Errors are outright violations of the specification that will likely result in the data being ignored in its entirety or large chunks of it being invalid. Malformed XML or invalid SPDX license expressions would fall into that group.

Previously, the validator would always show very long explanations for all the issues it found, giving detailed information on an issue. While this was nice if there were few issues, it produced very noisy output and made it harder to quickly spot the actual error. So, the whole validator output was changed to be based on issue tags, a concept that is also known from other lint tools such as Debian’s Lintian: Each issue has its own tag string, identifying it. By default, we only show the tag string, line of the issue, severity and component name it affects as well as a short repeat of an invalid value (in case that’s applicable to the issue). If people do want to know detailed information, they can get it by passing --explain to the validation command. This solution has many advantages:

- It makes the output concise and easy to read by humans and is mostly already self-explanatory

- Machines can parse the tags easily and identify which issue was emitted, which is very helpful for AppStream’s own testsuite but also for any tool wanting to parse the output

- We can now have translators translate the explanatory texts

Initially, I didn’t want to have the validator return translated output, as that may be less helpful and harder to search the web for. But now, with the untranslated issue tags and much longer and better explanatory texts, it makes sense to trust the translators to translate the technical explanations well.

Of course, this change broke any tool that was parsing the old output. I had an old request by people to have appstreamcli return machine-readable validator output, so they could integrate it better with preexisting CI pipelines and issue reporting software. Therefore, the tool can now return structured, machine-readable output in the YAML format if you pass --format=yaml to it. That output is guaranteed to be stable and can be parsed by any CI machinery that a project already has running. If needed, other output formats could be added in future, but for now YAML is the only one and people generally seem to be happy with it.

Create desktop-entry files from Metainfo

(Since v0.12.9) As you may have noticed, an AppStream Metainfo file contains some information that a desktop-entry file also contains. Yet, the two file formats serve very different purposes: A desktop file is basically launch instructions for an application, with some information about how it is displayed. A Metainfo file is mostly display information with few to no launch instructions. Admittedly though, there is quite a bit of overlap which may make it useful for some projects to simply generate a desktop-entry file from a Metainfo file. This may not work for all projects, most notably ones where multiple desktop-entry files exist for just one AppStream component. But for the simplest and most common of cases, a direct mapping between Metainfo and desktop-entry file, this option is viable.

The appstreamcli tool permits this now, using the appstreamcli make-desktop-file subcommand. It just needs a Metainfo file as first parameter, and a desktop-entry output file as second parameter. If the desktop-entry file already exists, it will be extended with the new data from the Metainfo file. For the Exec field in a desktop-entry file, appstreamcli will read the first binary entry in a provides tag, or use an explicitly provided line passed via the --exec parameter.

Please take a look at the appstreamcli(1) manual page for more information on how to use this useful feature.

Convert NEWS files to Metainfo and vice versa

(Since v0.12.9) Writing the XML for release entries in Metainfo files can sometimes be a bit tedious. To make this easier and to integrate better with existing workflows, two new subcommands for appstreamcli are now available: news-to-metainfo and metainfo-to-news. They permit converting a NEWS textfile to Metainfo XML and vice versa, and can be integrated with an application’s build process. Take a look at AppStream itself on how it uses that feature.

In addition to generating the NEWS output or reading it, there is also a second YAML-based option available. Since YAML is a structured format, more of the features of AppStream release metadata are available in the format, such as marking development releases as such. You can use the --format flag to switch the output (or input) format to YAML.

Please take a look at the appstreamcli(1) manual page for a bit more information on how to use this feature in your project.

Support for recent SPDX syntax

(Since v0.12.10) This has been a pain point for quite a while: SPDX is a project supported by the Linux Foundation to (mainly) provide a unified syntax to identify licenses for Open Source projects. They did change the license syntax twice in incompatible ways though, and AppStream already implemented a previous version, so we could not simply jump to the latest version without supporting the old one.

With the latest release of AppStream though, the software should transparently convert between the different version identifiers and also support the most recent SPDX license expressions, including the WITH operator for license exceptions. Please report any issues if you see them!

Future Plans?

First of all, congratulations for reading this far into the blog post! I hope you liked the new features! In case you skipped here, welcome to one of the most interesting sections of this blog post!

So, what is next for AppStream? The 1.0 release, of course! The project is certainly mature enough to warrant that, and originally I wanted to get the 1.0 release out of the door this February, but it doesn’t look like that date is still realistic. But what does “1.0” actually mean for AppStream? Well, here is a list of the intended changes:

- Removal of almost all deprecated parts of the specification. Some things will remain supported forever though: For example the desktop component type is technically deprecated in favor of desktop-application but is so widely used that we will support it forever. Things like the old application node will certainly go though, and so will the /usr/share/appdata path as metainfo location, the appcategory node that nobody uses anymore and all other legacy cruft. I will be mindful about this though: If a feature still has a lot of users, it will stay supported, potentially forever. I am closely monitoring what is used mainly via the information available via the Debian archive. As a general rule of thumb though: A file for which appstreamcli validate passes today is guaranteed to work and be fine with AppStream 1.0 as well.

- Removal of all deprecated API in libappstream. If your application still uses API that is flagged as deprecated, consider migrating to the supported functions and you should be good to go! There are a few bigger refactorings planned for some of the API around releases and data serialization, but in general I don’t expect this to be hard to port.

- The 1.0 specification will be covered by an extended stability promise. When a feature is deprecated, there will be no risk that it is removed or becomes unsupported (so the removal of deprecated stuff in the specification should only happen once). What is in the 1.0 specification will quite likely be supported forever.

So, what is holding up the 1.0 release besides the API cleanup work? Well, there are a few more points I want to resolve before 1.0 goes out:

- Resolve hosting release information at a remote location, not in the Metainfo file (#240): This will be a disruptive change that will need API adjustments in libappstream for sure, and certainly will – if it happens – need the 1.0 release. Fetching release data from remote locations as opposed to having it installed with software makes a lot of sense, and I either want to have this implemented and specified properly for the 1.0 release, or have it explicitly dismissed.

- Mobile friendliness / controls metadata (#192 & #55): We need some way to identify applications as “works well on mobile”. I also work for a company called Purism which happens to make a Linux-based smartphone, so this is obviously important for us. But it also is very relevant for users and other Linux mobile projects. The main issue here is to define what “mobile” actually means and what information makes sense to have in the Metainfo file to be future-proof. At the moment, I think we should definitely have data on supported input controls for a GUI application (touch vs mouse), but for this the discussion is still not done.

- Resolving addon component type complexity (lots of issue reports): At the moment, an addon component can be created to extend an existing application by $whatever thing. This can be a plugin, a theme, a wallpaper, extra content, etc. This is all running in the addon supergroup of components. This makes it difficult for applications and software centers to occasionally group addons into useful groups – a plugin is functionally very different from a theme. Therefore I intend to possibly allow components to name “addon classes” they support and that addons can sort themselves into, allowing easy grouping and sorting of addons. This would of course add extra complexity. So this feature will either go into the 1.0 release, or be rejected.

- Zero pending feature requests for the specification: Any remaining open feature request for the specification itself in AppStream’s issue tracker should either be accepted & implemented, or explicitly deferred or rejected.

I am not sure yet when the todo list will be completed, but I am certain that the 1.0 release of AppStream will happen this year, most likely before summer. Any input, especially from users of the format, is highly appreciated.

Thanks a lot to everyone who contributed or is contributing to the AppStream implementation or specification, you are great! Also, thanks to you, the reader, for using AppStream in your project. I definitely will give a bit more frequent and certainly shorter updates on the project’s progress from now on. Enjoy your rich software metadata, firmware updates and screenshot videos meanwhile!

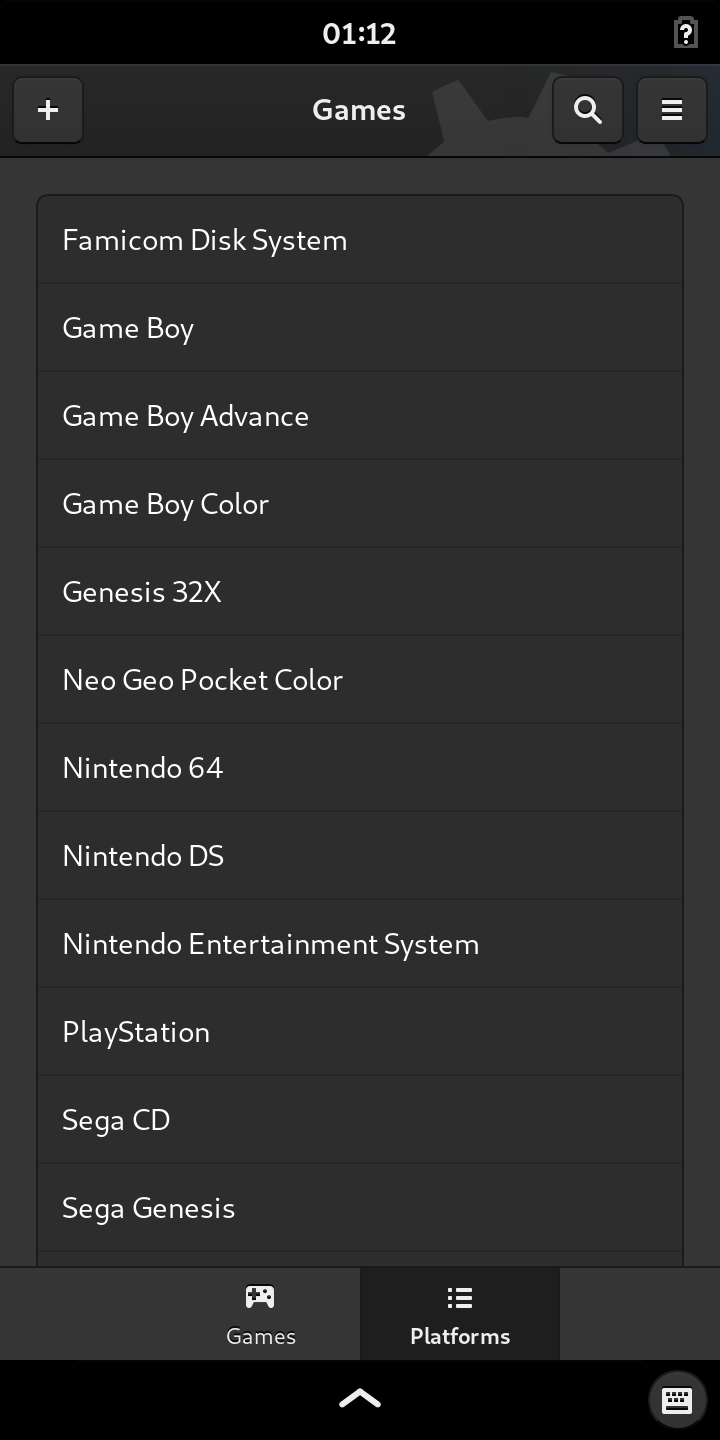

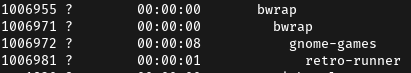

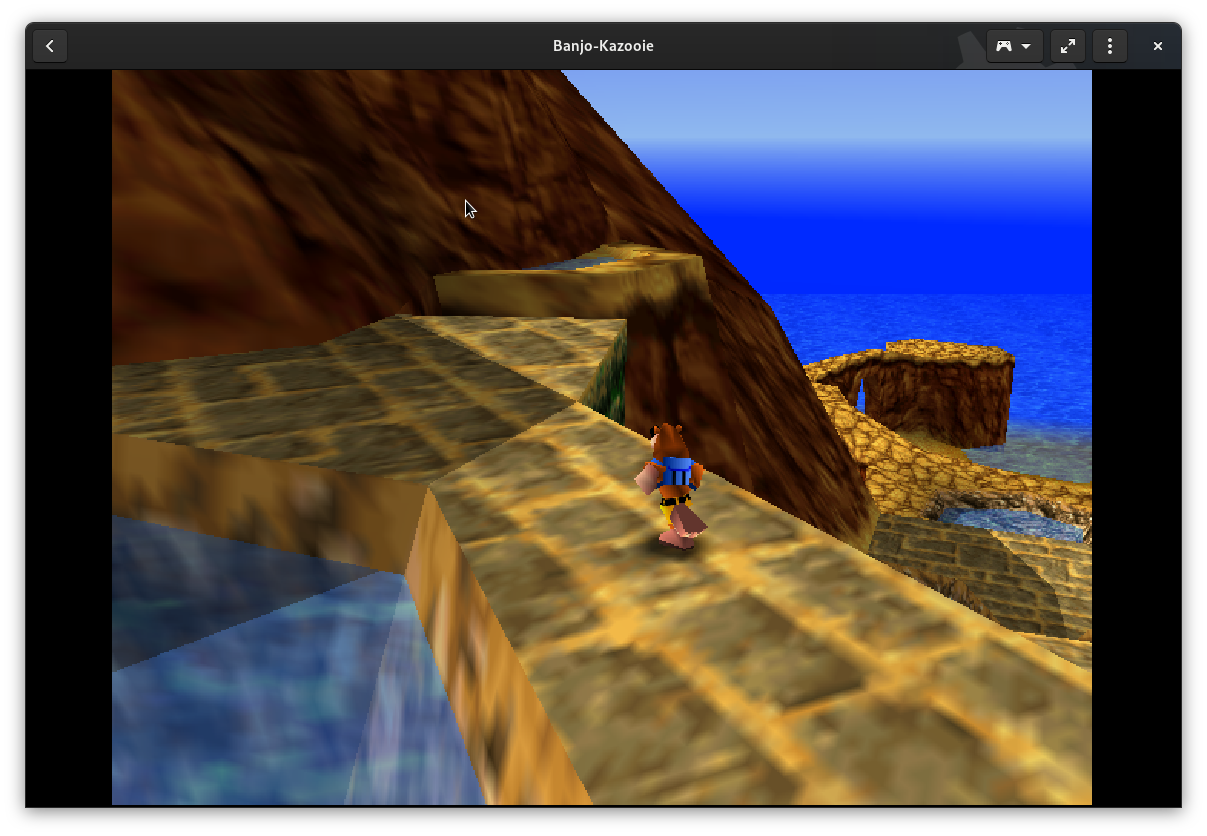
