Cloud TPU

Train and run machine learning models faster than ever before.

Accelerated machine learning

Machine learning (ML) has enabled breakthroughs across a variety of business and research problems, from strengthening network security to improving the accuracy of medical diagnoses. Because training and running deep learning models can be computationally demanding, we built the Tensor Processing Unit (TPU), an ASIC designed from the ground up for machine learning that powers several of our major products, including Translate, Photos, Search, Assistant, and Gmail. Cloud TPU empowers businesses everywhere to access this accelerator technology to speed up their machine learning workloads on Google Cloud.

Built for AI on Google Cloud

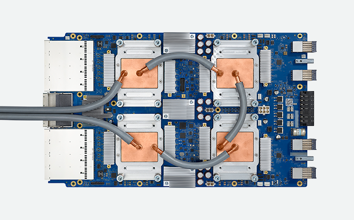

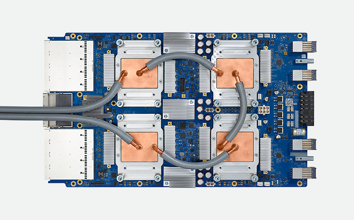

Architected to run cutting-edge machine learning models with AI services on Google Cloud, Cloud TPU delivers the computational power to transform your business or create the next research breakthrough. With a custom high-speed network that allows TPUs to work together on ML workloads, Cloud TPU can provide up to 11.5 petaflops of performance in a single pod.

Iterate faster on your ML solutions

Training a machine learning model is analogous to compiling code: models must be retrained repeatedly as apps are built, deployed, and refined, so training needs to be fast and cost-effective. Cloud TPU offers the performance and pricing that ML teams need to iterate faster on their solutions.

Proven, state-of-the-art models

Use Google-qualified reference models optimized for performance, accuracy, and quality to build solutions for many real world use cases. Just bring your data, download a reference model, and train.

Cloud TPU offering

Cloud TPU v2

180 teraflops

64 GB High Bandwidth Memory (HBM)

Cloud TPU v3 Beta

420 teraflops

128 GB HBM

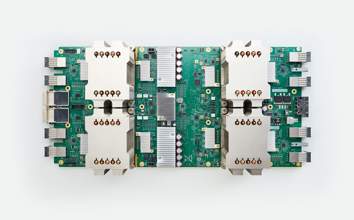

Cloud TPU v2 Pod Alpha

11.5 petaflops

4 TB HBM

2-D toroidal mesh network
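To put the pod figures in context, the short sketch below divides the quoted pod numbers by the quoted single-device numbers, and illustrates what "toroidal" means for a 2-D mesh: every node has four neighbors because the edges wrap around. The function names, the 1 TB = 1024 GB convention, and the 4 x 4 grid in the usage note are assumptions made for illustration; this page does not state how many devices make up a pod or how the mesh is laid out.

```python
# Peak figures quoted on this page for Cloud TPU v2 and the v2 Pod.
V2_DEVICE_TFLOPS = 180
V2_DEVICE_HBM_GB = 64
V2_POD_PFLOPS = 11.5
V2_POD_HBM_TB = 4


def implied_devices_by_compute():
    """Pod peak compute divided by single-device peak compute."""
    return V2_POD_PFLOPS * 1000 / V2_DEVICE_TFLOPS


def implied_devices_by_memory(gb_per_tb=1024):
    """Pod HBM divided by single-device HBM (assumes 1 TB = 1024 GB)."""
    return V2_POD_HBM_TB * gb_per_tb / V2_DEVICE_HBM_GB


def torus_neighbors(row, col, rows, cols):
    """Return the four neighbors of (row, col) in a rows x cols 2-D torus.

    The modulo makes the grid wrap: the top row links to the bottom row,
    and the left column links to the right column.
    """
    return [
        ((row - 1) % rows, col),  # up
        ((row + 1) % rows, col),  # down
        (row, (col - 1) % cols),  # left
        (row, (col + 1) % cols),  # right
    ]
```

Both ratios come out at about 64, so the quoted pod numbers are consistent with roughly 64 v2 devices working together. In a hypothetical 4 x 4 torus, `torus_neighbors(0, 0, 4, 4)` gives `[(3, 0), (1, 0), (0, 3), (0, 1)]`, so even a corner node has four direct links.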

Cloud TPU features

- Proven Reference Models

  - Use proven Google-qualified reference models, optimized for performance, accuracy, and quality for many real-world use cases of object detection, language modeling, sentiment analysis, translation, image classification, and more.

- Integrated

  - At their core, Cloud TPUs and Google Cloud's Data and Analytics services are fully integrated with other GCP offerings, providing unified access across the entire service line. Run machine learning workloads on Cloud TPUs and benefit from Google Cloud Platform’s industry-leading storage, networking, and data analytics technologies.

- Connect Cloud TPUs to Custom Machine Types

  - You can connect to Cloud TPUs from custom VM types, which can help you optimally balance processor speeds, memory, and high-performance storage resources for your individual workloads.

- Preemptible Cloud TPU

  - Save money by using preemptible Cloud TPUs for fault-tolerant machine learning workloads, such as long training runs with checkpointing or batch prediction on large datasets. Preemptible Cloud TPUs are 70% cheaper than on-demand instances, making everything from your first experiments to large-scale hyperparameter searches more affordable than ever before.
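As a rough illustration of why checkpointing makes a long training run tolerant of preemption, here is a minimal sketch in plain Python. It is not Cloud TPU or TensorFlow code: the helper names, the JSON checkpoint format, the `stop_after` parameter (which simulates a preemption), and the stand-in "training step" are all assumptions made for the example.

```python
import json
import os
import tempfile


def save_checkpoint(path, state):
    """Write the state to a temp file, then rename it into place.

    The rename is atomic, so a preemption in the middle of a write
    cannot leave a half-written checkpoint behind.
    """
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
    os.replace(tmp_path, path)


def load_checkpoint(path):
    """Return the saved state, or a fresh state if no checkpoint exists."""
    if not os.path.exists(path):
        return {"step": 0, "weight": 0.0}
    with open(path) as f:
        return json.load(f)


def train(path, total_steps, checkpoint_every=100, stop_after=None):
    """Run (or resume) training until total_steps is reached.

    stop_after is a hypothetical knob that ends the run early without
    saving, mimicking a preemption.
    """
    state = load_checkpoint(path)
    steps_this_run = 0
    while state["step"] < total_steps:
        if stop_after is not None and steps_this_run >= stop_after:
            return state  # simulated preemption: unsaved progress is lost
        state["weight"] += 0.5  # stand-in for a real training step
        state["step"] += 1
        steps_this_run += 1
        if state["step"] % checkpoint_every == 0:
            save_checkpoint(path, state)
    save_checkpoint(path, state)
    return state
```

If a run is interrupted at step 250 with a checkpoint every 100 steps, the next call to `train` with the same path resumes from step 200, so at most `checkpoint_every` steps of work are repeated. Choosing the checkpoint interval is a trade-off between that repeated work and the time spent writing checkpoints.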

“Cloud TPU Pods have transformed our approach to visual shopping by delivering a 10X speedup over our previous infrastructure. We used to spend months training a single image recognition model, whereas now we can train much more accurate models in a few days on Cloud TPU Pods. We’ve also been able to take advantage of the additional memory the TPU pods have, allowing us to process many more images at a time. This rapid turnaround time enables us to iterate faster and deliver improved experiences for both eBay customers and sellers.”

— Larry Colagiovanni, VP of New Product Development, eBay