Hey, did you notice, on the brand-spanking-new Yahoo homepage, right there on the side of the page, it’s photos from your Flickr contacts (or maybe your groups)! No? Go check it out, I’ll wait.

Ok, great, you’re back! What you should have seen, assuming you have Flickr contacts (or are a member of some groups), is photos from your most recently active contact (or group!). Something like… this:

(thanks schill!)

What you see above is the 10 most recent photos from my contact who most recently uploaded any photos. The homepage retrieves this data by making a call to a specially tailored method from the Flickr API.

The Latency Problem

In order to ensure performance for Yahoo.com, this API method had very tight SLAs; we can’t slow down the page for the millions of homepage visitors to pull in Flickr content, after all. While the method can return different data sets depending on the most recent activity from your Flickr contacts and groups, for the sake of this post we’re going to focus exclusively on the contacts case. The first step to returning data is to get your most recently active contact, and to do that we need a list of all of your contacts sorted by how recently they’ve uploaded a photo. In an ideal world, the SQL query would be something along the lines of:

SELECT contact_id FROM Contacts WHERE user_id = ? ORDER BY date_upload DESC LIMIT 1;

Easy, right? Sadly, no. Due to the way our contact relationships are stored, the query we need to run is more complex than the above. Still, for the vast majority of Flickr users, the *actual* SQL query performed just fine, usually in less than 1ms. However, Flickr users come in all shapes and sizes. Some users have a few contacts, some have hundreds, and some… have tens of thousands. Unfortunately, the runtime for the query scaled proportionally to the number of contacts the user has. I won’t delve into the specifics of the query or how we store contact relationships; suffice it to say we investigated possible changes to both the query and the indexes in MySQL, and neither was sufficient to give us the performance we needed across all possible use cases. With that in mind, we had to look elsewhere for optimizations.

The First Attempt

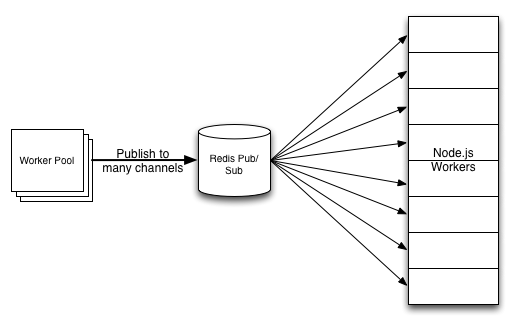

With a pure MySQL solution off the table, our first thoughts for optimization avenues turned to the obvious: denormalization. If we stored the 10 most recent photos from your most recently active contact ahead of time, then getting that list when the homepage was rendered would be trivial regardless of how many contacts you have. At Flickr we’re big fans of Redis, so our thoughts immediately turned to using it as a store for the denormalized contact data.

In order to denormalize the data, we need to constantly process six different user actions:

- Add a contact

- Remove a contact

- Change a contact relationship

- User uploads a photo

- User deletes a photo

- User changes a photo’s privacy

Each one of these actions can impact what photos you should see on the Yahoo homepage, and therefore must update the denormalized data appropriately. Because the photo-related actions can potentially impact thousands of users, if not tens or hundreds of thousands (everyone who calls the photo owner a contact would need to have their denormalized data updated on upload), they must be processed by our offline task system to ensure site performance is not adversely impacted. Unfortunately, this is where we ran into a snag. The nature of Flickr’s offline task system is such that the order of processing is not guaranteed. In most cases this is not a problem. However, when it comes to maintaining an accurate list of denormalized data, not being able to predict the outcome of running multiple tasks is problematic.

Out of Order

Imagine the following scenario: you add a contact then quickly remove them. This results in two tasks being added, one to add and one to remove. If they’re processed in the order in which the actions were taken, everything is fine. However, if they’re processed out of order then when everything is said and done the denormalized data no longer accurately reflects your actual contact relationships. Maybe the next action that updates your data will correct this problem, or maybe it will introduce another problem. Over time, it’s likely that a not-insignificant number of users will have denormalized data that is out of sync with reality.
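To make the failure mode concrete, here is a minimal Python sketch. It is not Flickr's task system; `apply_tasks` and the tuple format for tasks are made up for illustration. It applies the same two tasks in both orders and shows that only the in-order run ends up matching reality.

```python
def apply_tasks(tasks):
    """Apply add/remove tasks, in the order given, to a denormalized contact set."""
    contacts = set()
    for action, contact_id in tasks:
        if action == "add":
            contacts.add(contact_id)
        elif action == "remove":
            # Removing a contact that is not present is a no-op.
            contacts.discard(contact_id)
    return contacts


# The user adds contact 98765, then quickly removes them.
issued = [("add", 98765), ("remove", 98765)]

in_order = apply_tasks(issued)                        # matches reality: no contact
out_of_order = apply_tasks(list(reversed(issued)))    # stale: contact is still present
```

In the out-of-order run the remove is a no-op and the add then sticks, so the denormalized data claims a relationship that no longer exists.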

Ok, let’s try this another way

Looking back at our original problem, the bottleneck was not in generating the list of photos for your most recently active contact; it was in finding who your most recently active contact was (especially if you have thousands or tens of thousands of contacts). What if, instead of fully denormalizing, we just maintain a list of your recently active contacts? That would allow us to optimize the slow query, much like a native MySQL index would; instead of needing to look through a list of 20,000 contacts to see which one has uploaded a photo recently, we only need to look at your most recent 5 or 10 (regardless of your total contacts count)!

In the end, this is exactly what we did. We still process offline tasks to keep track of your contacts’ activity, but now the set of actions we need to track is smaller:

- Add a new contact

- Remove a contact

- User uploads a photo

Each task maintains a per-user sorted set where the set member is the contact id, and the score is the timestamp of when that contact last uploaded a photo. So, for example, if a user (user id 12345) adds a new contact (user id 98765) we simply do:

ZADD user_12345 1363665432 98765

Removing a contact is the opposite, using ZREM as the yin to ZADD’s yang:

ZREM user_12345 98765

When a user uploads a new photo it’s once again a ZADD (though it’s a ZADD against the set for every user that calls the uploading user a contact). If the uploader is already in a given user’s set, this will simply update the score; if not, the uploader will be added to the set.
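The upload fan-out can be sketched as follows. This is an illustration only: the `zsets` dict is an in-memory stand-in for Redis, and `zadd`, `record_upload`, the follower list and the second timestamp are all hypothetical names and values, not part of our codebase.

```python
from collections import defaultdict

# In-memory stand-in for Redis sorted sets: key -> {member: score}.
zsets = defaultdict(dict)


def zadd(key, score, member):
    """Mimic ZADD: add the member, or overwrite its score if it already exists."""
    zsets[key][member] = score


def record_upload(uploader_id, uploaded_at, follower_ids):
    """Fan an upload out to the set of every user who calls the uploader a contact."""
    for follower_id in follower_ids:
        zadd(f"user_{follower_id}", uploaded_at, uploader_id)


# User 12345 adds contact 98765, whose last upload was at 1363665432.
zadd("user_12345", 1363665432, 98765)

# Later, 98765 uploads a photo; users 12345 and 55555 both call 98765 a contact.
record_upload(98765, 1363669999, [12345, 55555])
```

For user 12345 the existing member's score is updated in place, while for user 55555 the uploader is added as a new member, which is the same add-or-update behavior ZADD gives us.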

As things stand right now, the set will grow without bound, which is obviously not much help if our goal is to limit the number of contacts we need to check against when querying the DB. The solution to this is to cap the set. After each ZADD we check to see if the size of the set has exceeded a threshold (generally we store an additional 20% on top of the data we absolutely need), and if so, we remove all of the extra records using ZREMRANGEBYRANK:

ZREMRANGEBYRANK user_12345 0 ($collection_size - $max_size) - 1

Where $collection_size is the current number of members in the set and $max_size is the maximum number of members we want to store. Note that we’re removing from the head of the set. Redis keeps sorted sets in ascending order by score, so the least recently active contacts are at the beginning of the set.
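The rank arithmetic is easy to get off by one, so here is a small Python sketch of the capping step. It models the sorted set as a plain dict of member to score; `zremrangebyrank` and `cap_set` are illustrative helpers that only mimic the Redis command for non-negative ranks, and the contact ids and scores are made up.

```python
def zremrangebyrank(zset, start, stop):
    """Mimic ZREMRANGEBYRANK for non-negative ranks (stop is inclusive).

    Ranks are assigned in ascending score order, so rank 0 is the
    least recently active contact.
    """
    ordered = sorted(zset, key=lambda member: (zset[member], member))
    doomed = ordered[start:stop + 1]
    for member in doomed:
        del zset[member]
    return len(doomed)


def cap_set(zset, max_size):
    """Trim the set down to max_size members, dropping the lowest scores."""
    collection_size = len(zset)
    if collection_size <= max_size:
        return 0
    return zremrangebyrank(zset, 0, (collection_size - max_size) - 1)


# Five contacts, scored by last upload time; we only want to keep three.
recent = {101: 100, 102: 200, 103: 300, 104: 400, 105: 500}
removed = cap_set(recent, 3)
```

With five members and a cap of three, the range is ranks 0 through 1, so the two oldest contacts (101 and 102) are removed and the three most recent remain.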

Akin to how we must cap the set to keep it below a maximum size, we also have a threshold on the other end of the spectrum to keep the set above a minimum size. If a user happens to remove their 10 most recently active contacts then there would be no data in their set, and the Redis index would be of little value. With that in mind, any time the user removes a contact, we check to see if the size of the set has dropped under the minimum threshold, and if so we repopulate the data based on their remaining contacts. This is slightly more complex than a simple Redis command, so we’ll use actual PHP to explain:

    //
    // $key is the redis ZSET key, e.g. user_12345
    //
    $count = redis_zset_zcard($key);

    if ($count < $MIN_SET_SIZE) {
        if ($count > 0) {
            $current_contacts = redis_zset_zrange($key, 0, -1);
        } else {
            $current_contacts = array();
        }

        //
        // In this call, the second parameter is the number of contacts
        // to return and the third parameter is a list of users to
        // exclude from the response. Since they’re already in the
        // set, there’s no need to add them again
        //
        $contacts = contacts_most_recently_uploaded_list($user, $MAX_SET_SIZE - $count,
                                                         $current_contacts);

        foreach ($contacts as $contact) {
            redis_zset_zadd($key, $contact['last_upload'], $contact['id']);
        }
    }

Remember, this is all being done outside of the context of a page request, so there’s no harm in spending a little bit of extra time to ensure when the API is called we have a reliable index that we can use to optimize the DB query.

The final action we need to take is to query the set for the list of contacts so we can do said DB query optimization. This is done with a standard ZREVRANGE:

ZREVRANGE user_12345 0 10

Just as we cap the set by removing members from the beginning because those are the least recently active, we use ZREVRANGE to read members from the end of the set when we want the most recently active. Both indexes are inclusive, so the command above returns up to 11 contacts.

You’re probably wondering, what about the other three events that generated tasks for the fully denormalized solution? How are we able to get by without them? Well, because we’ll just be using the list of contacts to optimize a live DB query, we can take some liberties with data purity in Redis. Because we store multiple recently active contacts, it doesn’t matter if, for example, the most recent contact in your Redis set has deleted his most recent photos or made them all private. When we query the DB, we further restrict the list based on your current relationship with a contact and photo-visibility, so any issues with Redis being out of sync sort themselves out automatically.

Take the above scenario where a user adds and quickly removes a contact. If the remove is processed first and the contact remains in the user’s Redis set, when we go to query the live contacts DB, we’ll get no results for that relationship and move on to the next contact in the set—crisis averted. There is a slight problem with the reverse scenario wherein a user removes a contact and then adds them back. If the tasks are processed out of order, there’s a chance that the add may not end up being reflected in the Redis set. This is the only chance for corruption under this system (as opposed to the fully denormalized solution where many tasks could interact and corrupt one another), and it’s likely to be fixed the next time any of the other actions occur. Furthermore, the only downside of this corruption is a user seeing photos from their second most recently active contact; this is a condition we’re willing to live with given the overall gains provided by the solution as a whole.
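The self-correcting read path can be sketched like this. It is a simplified model, not our production query: the sorted set is a plain dict of member to score, the two Python sets stand in for what the live DB knows about current relationships and photo visibility, and `zrevrange` and `pick_active_contact` are hypothetical helpers.

```python
def zrevrange(zset, start, stop):
    """Mimic ZREVRANGE for non-negative indexes: descending score, stop inclusive."""
    ordered = sorted(zset, key=lambda member: (zset[member], member), reverse=True)
    return ordered[start:stop + 1]


def pick_active_contact(zset, live_contacts, visible_photo_owners, limit=10):
    """Return the most recently active candidate that the live data still allows."""
    for contact_id in zrevrange(zset, 0, limit - 1):
        # The candidate list is only a hint; the live data has the final say.
        if contact_id in live_contacts and contact_id in visible_photo_owners:
            return contact_id
    return None


# Contact 111 was removed, but an out-of-order task left them in the set.
recent = {111: 300, 222: 200, 333: 100}
live_contacts = {222, 333}
visible_photo_owners = {222, 333}

chosen = pick_active_contact(recent, live_contacts, visible_photo_owners)
```

The stale entry for 111 sorts first but fails the live relationship check, so the lookup falls through to 222, the next most recently active contact.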

Not All Wine and Roses

The dataset involved in this solution is one of the largest we’ve pushed at Redis so far, and it’s not without its pitfalls. Namely, as the size of the dataset increases, the amount of time spent doing RDB saves also increases. This can introduce latency into Redis commands while the save is in progress. From Redis Persistence:

RDB needs to fork() often in order to persist on disk using a child process. Fork() can be time consuming if the dataset is big, and may result in Redis to stop serving clients for some millisecond or even for one second if the dataset is very big and the CPU performance not great. AOF also needs to fork() but you can tune how often you want to rewrite your logs without any trade-off on durability.

This is a key factor to be aware of when optimizing Redis performance. The overall speed of the system can be adversely impacted by RDB saves, so taking steps to minimize the time spent saving is critical. We’ve solved this problem by isolating these writes to their own Redis instance, thereby limiting the size of the dataset to only keys related to contacts activity. In the long term, as activity increases, it’s likely that we’ll need to further reduce the number of writes per instance by sharding this contact activity across a number of Redis instances. In some cases, multiple small Redis instances running on a single host can be preferable to one large Redis instance. As mentioned in the Redis Persistence guide, RDB saves can suffer with a slow CPU; if upgrading hardware (mostly faster CPUs to improve fork() performance) is possible, that’s certainly another option to investigate.