Saturday, 25 April 2020

Monday, 20 April 2020

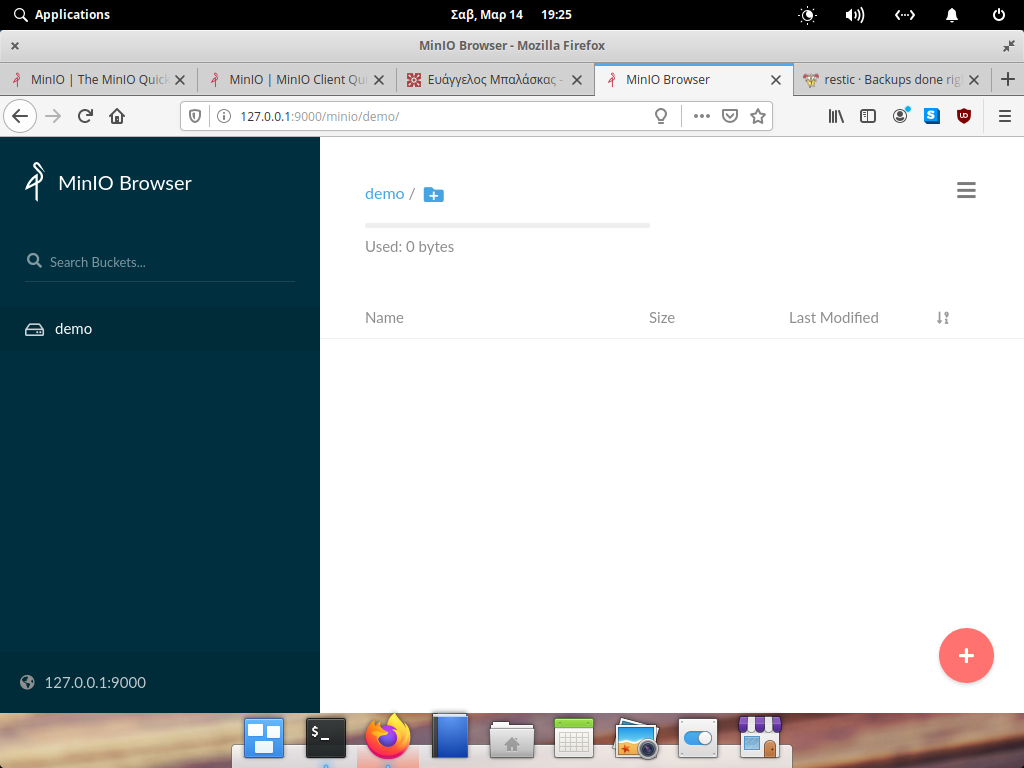

👾 Game-streaming without the "cloud" 🌩️

With increasing bandwidths, live-streaming of video games is becoming more and more popular – and might further accelerate the demise of the desktop computer. Most options are “cloud-gaming” services based on subscriptions where you don’t own the games and are likely to be tracked and monetised for your data. In this blog post I present the solution I built at home to replace my “living room computer”.

TL;DR

I describe how to stream a native Windows game (Divinity Original Sin 2 – installed DRM-free from GOG) via Steam Remote Play from my Desktop (running Devuan GNU/Linux) in 4K resolution and maximum quality directly to my TV (running Android). On the TV I then play the game with my flatmate using two controllers: one Xbox Controller connected via USB, one Steam-Controller connected via Bluetooth. The process of getting there is not trivial or flawless, but gaming itself works perfectly without artefacts or lag.

(The computer in the above photo is not used any longer.)

Motivation

The big “cloud” streaming services for audio and video are incredibly convenient. In my opinion they address the following problems very well:

- They make content available on hardware that wouldn’t have the capability to store the content.

- This includes all devices of the user, not just a single (desktop/laptop) computer.

- They provide access to a lot of content at a fixed monthly price (especially useful for people who haven’t had the possibility to build up their “library”).

On the other hand, they usually force you to use software that is non-free and/or not trustworthy (if they support your OS at all). You risk losing access to all content when the subscription is terminated. And – often overlooked – they monitor you very closely: what you watch/listen to, when and where you do it, when you press pause and for how long etc. I suspect that at some point this data will be more valuable than the income generated from subscription fees, but this is not important here.

It was only a matter of time before video gaming would also become part of the streaming business, and there are now multiple contenders: Google Stadia and PlayStation Now are typical “streaming services” like Netflix is for video; the games are included in the monthly fee. They have all the benefits and problems discussed above. Other services like GeForce Now and Blade Shadow provide computation and streaming infrastructure but leave it to you to provide the games (manually or via gaming platforms). This has slightly different implications, but I don’t want to discuss these further, because I haven’t used any of them and likely won’t in the future.

In any case, I am of course not a big fan of being tracked, and anything I can set up with my own infrastructure at home, I try to do! [I actually don’t spend that much time gaming nowadays, but setting this up was still fun.] In the past I had an extra (older) computer beside the TV that I used for casual gaming. It has become too old (and noisy) to play current games, so instead I want to stream games from my own desktop computer in a different room.

Disclaimer: this setup “only” provides the second point above, i.e. convergence of different devices. It also includes various non-free software components, so it does not solve all of the problems mentioned above either.

Setting up the host

The “host” is the computer that renders the game, in my case the desktop computer. Before attempting to stream anything, we need to make sure that everything works on the host, i.e. we need to be able to play the game, use the controllers etc.

Hardware

| CPU | RAM | GPU |
|---|---|---|
| AMD Ryzen 6c/12t @ 3.6 GHz | 32 GB | GeForce RTX 2060 Super |

Since the host needs to render the game, it needs decent hardware. Note that it needs to be able to encode the video-stream at the same time as rendering the game which means the requirements are even higher than usual. But of course all of this also depends on the exact games that you are playing and which resolution you want to stream. My specs are shown above.

The game

I chose Divinity Original Sin 2 for this article because that’s what I am playing right now and because I wanted to demonstrate that this even works with games that are not native to Linux (although I usually don’t buy games that don’t have native versions). If you buy it, I recommend getting it on GOG, because games are DRM-free there (they work without internet connection and stay working even if GOG goes bankrupt). The important thing here is that the game does not need to be a Steam game even though we will use Steam Remote Play for streaming. Buying it on Steam will make the process a little easier though.

Install the game using Wine (packaged as wine in the Devuan/Debian repositories). I assume that you have installed it to /games/DOS2 and that you have set up a Wine drive “G:” pointing to /games. If not, adjust the paths accordingly.

Steam

If you haven’t done so already, install Steam. It is available in the Debian/Devuan non-free repositories as steam, but on first start it installs and self-updates itself in a hidden subfolder of your home directory.

You need a (free) Steam account and you need to be logged in for everything to work. I really dislike this, and it means that Steam quite likely does gather data about you. I suspect that using non-Steam games makes it more difficult to track you, but I have not done any research on this. See the end of this post for possible alternatives.

The first important thing to know about Steam on Linux is that it ships many system libraries, but not everything it needs. It gives you no diagnostics about missing components, nor are UI elements disabled when the respective feature is unavailable. This includes support for hardware video encoding and for playing Windows games.

Hardware video encoding is a feature you really want, because it reduces latency and the load on your CPU. For reasons I don’t understand, Steam uses neither libva (the standard interface for video acceleration on free operating systems) nor VDPAU (NVIDIA-specific but also free/open). Instead it uses the proprietary NVENC interface.

On Debian/Devuan this has been patched out of all the libraries and applications, so you need to make sure that you get your libraries and applications like ffmpeg from the Debian Multimedia project, which has working versions. I am not entirely sure which set of libraries/applications is required; for me it was sufficient to add the Debian Multimedia repository, install libnvidia-encode1 and do an apt upgrade. Note that only installing libnvidia-encode1 from the official repository was not sufficient.

See the troubleshooting section on how to diagnose problems with hardware video encoding.

To play Windows games with Steam, you can use Proton, Steam’s built-in Windows compatibility layer (here’s a current article on it). It’s a fork of Wine with additional patches (most of which are later upstreamed to official Wine). Unfortunately it is not installed after a fresh install of Steam on Linux, and I found no explicit way of installing it (although the interface suggests it’s there!). To get it, select “Enable Steam Play for all titles” in the “Advanced” “Steam Play Settings” section of Steam’s settings. This activates Proton for Windows games that are not officially supported. Then install the free Windows game 1982 from inside Steam. This automatically installs Proton, which is then listed as an installed application and updated automatically in the future. You can try the game to make sure Proton works as expected. Alternatively, buy the actual game on Steam and skip the next paragraph.

Now go to “Games” → “Add a Non-Steam Game to my Library…”, then go to “BROWSE”, show all extensions and find the executable file of the game.

In our case this is /games/DOS2/DefEd/bin/EoCApp.exe or G:\DOS2\DefEd\bin\EoCApp.exe (yes, it’s not in the top-level directory of the game).

If your path contains spaces, it will break (without diagnostics).

To fix this, simply edit the shortcut created for the game again and make sure the path is right and “set launch options” is empty (part of your path may have ended up there).
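The two path forms above are related mechanically: the Wine drive letter is replaced by the directory it points to. Wine itself ships a winepath tool for this (winepath -u converts a Windows path to a Unix one). The following Python sketch only illustrates the idea, assuming the G: → /games mapping used in this post, and also flags spaces in the path since those broke the shortcut here. The function names are made up for illustration.

```python
from pathlib import PurePosixPath, PureWindowsPath

# Assumed mapping from this post: Wine drive G: points to /games.
# In a real Wine prefix this is a symlink in ~/.wine/dosdevices/.
DRIVE_MAP = {"G:": "/games"}


def windows_to_unix(win_path: str, drive_map: dict = DRIVE_MAP) -> str:
    """Translate a Windows path on a mapped Wine drive to the Unix path."""
    p = PureWindowsPath(win_path)
    drive = p.drive.upper()
    if drive not in drive_map:
        raise ValueError(f"no Unix directory known for drive {drive!r}")
    # p.parts[0] is the drive anchor (e.g. 'G:\\'); the rest are path components.
    return str(PurePosixPath(drive_map[drive], *p.parts[1:]))


def check_launch_path(path: str) -> list:
    """Return warnings for paths that the non-Steam shortcut may mangle."""
    warnings = []
    if " " in path:
        warnings.append("path contains spaces: re-check target and launch options")
    return warnings


if __name__ == "__main__":
    exe = r"G:\DOS2\DefEd\bin\EoCApp.exe"
    unix_path = windows_to_unix(exe)
    print(unix_path)  # /games/DOS2/DefEd/bin/EoCApp.exe
    print(check_launch_path(unix_path) or "no obvious problems")
```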

In this dialog you can also explicitly state that you wish to use Proton to run the game (confusingly it will show multiple versions even if none of those are installed).

You can also give the game a nicer name or icon if desired.

Test the game

You are now ready to test the game. Simply click on the respective button. Now is also a good time for testing the controller(s), because if they don’t work now, they likely won’t later on. There are many tutorials on using the (Steam) controller on Linux and there are also some notes in the troubleshooting section.

This is also a good point in time to update the firmware of the Steam Controller to the newest version; we will need that later on.

Setting up the client

The client is my TV, because it runs Android TV and there is a SteamLink application for Android. If your TV has a different OS, you could probably use a small set-top box built around a cheap embedded board, with Android (or Linux) as the client OS. I suppose all of this would be possible on an Android tablet as well. I recommend connecting the TV to the host via a wired network, but WiFi works at lower resolutions, too.

Controllers and Android

The Xbox controller is just plugged into the USB-port of the TV and required no further configuration. The Steam controller was a little more tricky, and I had no luck getting it to work via USB. However, it comes with Bluetooth support (only after upgrading the firmware!).

Establishing the initial Bluetooth connection between the TV and the controller was surprisingly difficult. Start the Steam-controller with Steam+Y pressed or alternatively with Steam+B pressed and only do so after initiating device search on the TV. If it does not work immediately, keep trying! After a few attempts, the TV should state that it found the device; it calls it SteamController but recognises it as a Keyboard+Mouse. That’s ok.

After the connection has been established once, the controller will auto-connect when turned off and on again (do not press Y or B during start!). The controller’s mouse feature is surprisingly useful in the Android TV when you use Android apps that are not optimised for the TV.

SteamLink / Steam Remote Play

You need to install the SteamLink application from Google Play or through another channel like the Aurora Store (my Android TV is not associated with a Google account, so I cannot use Google Play). After opening the app, Android asks whether it should allow the app to access the Xbox controller, which you have to agree to every time (the “do this in the future” checkbox has no effect). Confusingly, the TV then notifies you that the controller has been disconnected; I think this just means that the app now controls it. The app takes control of the Steam controller without asking and switches it from Keyboard+Mouse mode into Controller mode, so you can use the joystick to navigate the buttons in the app.

It should automatically detect Steam running on the host and offer to make a connection. Press A on the controller or select “Start Playing”. The connection has to be verified once on the host for obvious reasons, but if everything works well you should now see the “Steam Big Picture Mode” interface on your TV. Your desktop has switched to this at the same time (in general, the TV will now mirror your desktop). Maybe first try a native Steam game like the aforementioned “1982” to see if everything works.

Next, try to start the game we set up above via its regular entry in your Steam library. Note that upon starting, the screen will initially flash black and return to Steam; the game is starting in the background. Do not start it again, it just needs a second!

Everything should work now! If you hear audio but your screen stays black and shows a “red antenna symbol”, see the troubleshooting section below.

You can press the Steam-Button to return to Steam (although this sometimes breaks for non-native Steam games). You can also long-press the “back/select”-button on the controller to get a SteamLink specific menu provided by the Client. It can be used to launch an on-screen keyboard and force-quit the connection to the host and return to the SteamLink interface.

Video resolutions and codecs

If everything has worked so far, you are likely playing in 1080p. To change this to 4K resolution, go to the SteamLink app’s settings (cogwheel symbol), then to “streaming settings” and then to “advanced”. You can increase the resolution limit there and also enable “HEVC Video”, which improves video quality or reduces the bitrate. If your desktop does not support streaming HEVC, SteamLink will establish no connection at all. See the troubleshooting section below if you get a black screen and the “red antenna symbol”.

Alternatives

The only viable alternative to Steam’s Remote Play for streaming your own games from your own hardware is NVIDIA GameStream – not to be confused with NVIDIA GeForce Now, the “cloud gaming” service. The advantages of GameStream over Steam Remote Play seem to be the following:

- The protocol is documented and there are good Free and Open Source client implementations.

- It claims better performance by tying closer into the host’s drivers.

- No online connection or sign-up required, unlike with Steam.

Also NVIDIA is a hardware company and even if the software is proprietary, it might be less likely to spy on you. The disadvantages are:

- The host software is only available for Windows.

- Only works with NVIDIA GPUs on the host machine, not AMD.

I could not try this, because I don’t have a Windows host and I wanted to stream from GNU/Linux.

Post scriptum

As you have seen, the process still has some rough edges, but I am honestly quite surprised that I managed to set this up and that it works with really good quality in the end. Although I don’t like Steam because of its DRM, I have to admit that it’s impressive how much work they put into improving the driver situation on GNU/Linux and supporting setups like the one discussed here. Consider that I didn’t even buy a game from Steam!

I would still love to see a host implementation of NVIDIA GameStream that runs on GNU/Linux. Even better would of course be a fully Free and Open Source solution.

On a sidenote: the setup I showed here can be used to stream any kind of application, even just the regular desktop if you want to (check out the advanced client options!).

Hopefully Steam Remote Play (and NVIDIA GameStream) can delay the full transition to “cloud gaming” a little.

Troubleshooting

To check whether hardware video encoding is actually used, look at the streaming log on the host: ~/.steam/debian-installation/logs/streaming_log.txt

You should have something like:

"CaptureDescriptionID" "Desktop OpenGL NV12 + NVENC HEVC"

The NVENC part is important. If you instead have the following:

"CaptureDescriptionID" "Desktop OpenGL NV12 + libx264 main (4 threads)"

It means you have software encoding.
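Checking the log by hand gets tedious after a few attempts. A minimal Python sketch that extracts the capture descriptions and reports whether the most recent one used NVENC might look like this; it assumes the log lines look exactly like the examples above and that the last match belongs to the latest session.

```python
import re
from pathlib import Path

# Log location as given in this post (Debian/Devuan Steam package).
LOG = Path.home() / ".steam/debian-installation/logs/streaming_log.txt"

# Matches lines like: "CaptureDescriptionID" "Desktop OpenGL NV12 + NVENC HEVC"
CAPTURE_RE = re.compile(r'"CaptureDescriptionID"\s+"([^"]*)"')


def capture_descriptions(text: str) -> list:
    """Return all capture descriptions in the log text, oldest first."""
    return CAPTURE_RE.findall(text)


def uses_nvenc(description: str) -> bool:
    """Hardware encoding mentions NVENC; software encoding shows e.g. libx264."""
    return "NVENC" in description


def main() -> None:
    if not LOG.exists():
        print(f"log not found: {LOG}")
        return
    found = capture_descriptions(LOG.read_text(errors="replace"))
    if not found:
        print("no CaptureDescriptionID entries yet; start a streaming session first")
        return
    latest = found[-1]  # assumed to belong to the most recent session
    kind = "hardware (NVENC)" if uses_nvenc(latest) else "software"
    print(f"{kind} encoding: {latest}")


if __name__ == "__main__":
    main()
```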

Play around with ffmpeg to verify that NVENC works on your system. The following two commands should work:

% ffmpeg -h encoder=h264_nvenc

% ffmpeg -h encoder=hevc_nvenc

The second one is for the HEVC codec. If you are told that the selected encoder is not available, something is broken. Check to see if you correctly upgraded to the libraries from the Debian multimedia project.
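The same check can be scripted. This Python sketch runs ffmpeg -encoders and looks for the two NVENC encoders in the listing. It only shows that your ffmpeg build includes them, not that the GPU and driver actually work, and the parsing assumes the usual layout of that listing (a flags column followed by the encoder name).

```python
import shutil
import subprocess

WANTED = ("h264_nvenc", "hevc_nvenc")


def parse_encoder_names(listing: str) -> set:
    """Extract encoder names from the output of `ffmpeg -encoders`.

    Entries look like ' V....D h264_nvenc   NVIDIA NVENC H.264 encoder ...':
    a six-character flags column followed by the encoder name. The legend
    at the top (' V..... = Video') is skipped by the '=' check.
    """
    names = set()
    for line in listing.splitlines():
        fields = line.split()
        if (len(fields) >= 2 and len(fields[0]) == 6
                and fields[0][0] in "VAS" and fields[1] != "="):
            names.add(fields[1])
    return names


def main() -> None:
    if shutil.which("ffmpeg") is None:
        print("ffmpeg not found in PATH")
        return
    result = subprocess.run(["ffmpeg", "-hide_banner", "-encoders"],
                            capture_output=True, text=True)
    available = parse_encoder_names(result.stdout)
    for name in WANTED:
        print(f"{name}: {'available' if name in available else 'MISSING'}")


if __name__ == "__main__":
    main()
```

To exercise the hardware itself, a short test encode such as ffmpeg -f lavfi -i testsrc=duration=1 -c:v hevc_nvenc -f null - should finish without errors.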

audio dip video plugdev games scanner netdev input

When you start Steam but leave Big Picture mode (Alt+Tab or minimise), Steam usually switches the controller configuration back to “Desktop mode”, which means Keyboard+Mouse emulation. If a game is started then, it will not detect any controller. The same thing seems to happen for certain non-Steam games started through Steam. A workaround is to go into the Steam controller settings and select the “Gamepad” configuration as the default for “Desktop mode” as well.

A black screen with the “red antenna symbol” (while audio still plays) happens when there is a resolution mismatch somewhere. Note that resolution can be set in multiple places and layers, and that Steam doesn’t always manage to keep these in sync: on the host (operating system, Steam, in-game) and on the client (operating system, SteamLink). Additionally, Steam apparently can stream at a lower resolution than is set on either host or client.

I initially had this problem when streaming from 4k-host onto a SteamLink app that was configured to only accept 1080p. Changing the host resolution manually to 1080p before starting Steam solved this problem.

Now that everything (host, in-game, SteamLink on the client) is configured to 4K, I still get this problem, because Steam apparently still attempts to reduce the transmission resolution when starting the game. For obscure reasons, the following workaround is possible: after starting the game, long-press “back/select” on the controller to get to the SteamLink menu and select “Stop Game” there. This fixes the black screen, and the regular game screen appears at 4K resolution. I suspect that “Stop Game” terminates Steam’s wrapper around the game’s process, which messes up the resolution. The devil knows why this does not terminate the game itself.
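Since the culprit is usually one layer that disagrees with the others, it can help to write the layers down as a checklist. Below is a hypothetical Python helper for that: the resolutions are typed in by hand from each settings dialog, and it lists every layer that differs from the host desktop. The example values are illustrative, not a recommendation.

```python
from typing import NamedTuple


class Resolution(NamedTuple):
    width: int
    height: int

    def __str__(self) -> str:
        return f"{self.width}x{self.height}"


def find_mismatches(layers: dict) -> list:
    """Report every layer whose resolution differs from the first one listed.

    The first entry is treated as the reference (here: the host desktop).
    """
    items = list(layers.items())
    ref_name, ref_res = items[0]
    return [
        f"{name}: {res} (differs from {ref_name}: {ref_res})"
        for name, res in items[1:]
        if res != ref_res
    ]


def main() -> None:
    uhd, fhd = Resolution(3840, 2160), Resolution(1920, 1080)
    # Example values typed in by hand from each settings dialog.
    layers = {
        "host OS": uhd,
        "Steam (host)": uhd,
        "in-game": uhd,
        "client OS (TV)": uhd,
        "SteamLink resolution limit": fhd,
    }
    for line in find_mismatches(layers) or ["all layers agree"]:
        print(line)


if __name__ == "__main__":
    main()
```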

When switching in and out of SteamLink via Android (e.g. with the TV remote), SteamLink sometimes doesn’t recover. Probably something goes wrong with putting the app into standby, but it’s not something you would typically do anyway. Just quit the app properly.

In any case, only a full reboot of the TV seems to fix this and make SteamLink usable again.

Sunday, 19 April 2020

Terminator 1.92 released

Do you still remember the project Terminator? People around the world are still using this tool as their terminal emulator of choice for Linux- and Unix-based systems (including Mac OS).

Unfortunately, development stagnated somewhat after 2017, and over the last three years a lot of work piled up. Terminator is written in Python, so it had to be migrated to Python 3, for example, as distributions started to think about dropping support for Python 2. Until now, packagers of several distributions have been maintaining their own patches to make Terminator work with Python 3.

Two weeks ago, things changed. A project on GitHub has been created, the source code has been migrated from Bazaar to Git, and even some package maintainers from Arch Linux and Fedora contributed and worked hard towards what happened this weekend: a Terminator 1.92 release is available, and you can find Terminator at its new home here: https://github.com/gnome-terminator/terminator.

The update for Terminator 1.92 includes a lot of interesting changes you surely were waiting for, including the support for Python 3 and a bunch of bug fixes, for example you can now open links with just Ctrl+Click on the link. You can find a detailed change log right here: https://github.com/gnome-terminator/terminator/releases.

Of course, Fedora is one of the first distributions to update Terminator to 1.92, and if you're using Fedora or a RHEL8-based system, you can update to it via:

dnf -y --enablerepo=updates-testing upgrade terminator

(With EPEL8, the testing repository is called epel-testing instead.)

As usual you can leave your feedback and some Karma for the update:

- Fedora 30: https://bodhi.fedoraproject.org/updates/FEDORA-2020-ac15a5672d

- Fedora 31: https://bodhi.fedoraproject.org/updates/FEDORA-2020-2bfbbeec99

- Fedora 32: https://bodhi.fedoraproject.org/updates/FEDORA-2020-51d508ac25

- EPEL8: https://bodhi.fedoraproject.org/updates/FEDORA-EPEL-2020-6850774286

Friday, 17 April 2020

Should KDE fork CHMLib?

CHMLib is a library to handle CHM files.

It is used by Okular and other applications to show those files.

It hasn't had a release in 11 years.

It is packaged by all major distributions.

A few weeks ago I got annoyed because we need to carry a patch for it in the Okular Flathub package, since the code is not great and defines its own int types.

I tried contacting the upstream author, but unsurprisingly, after 11 years he doesn't seem to care much, and I got no answer.

Then I looked and saw that various distributions carry different, random sets of patches. Not great.

So I said, OK, maybe we should just fork it, and emailed the 14 people listed at Repology as package maintainers for CHMLib, asking how they would react to KDE forking CHMLib in an effort to do some basic maintenance, i.e. get it to compile without the need for patches, make sure all the patches the different distributions carry are in the same place, etc.

1 packager answered saying "great"

1 packager answered "nah we want to follow upstream" (... which part of upstream is dead did they not understand?)

1 person answered saying "i haven't been packaging for $DISTRO for ages" (makes sense given how old the package is)

1 person answered saying "not a maintainer anymore but i think you should not break API/ABI of CHMLib" (which makes sense, never said that was planned)

And that's it, so only 4 out of 14 answers and only one of them encouraging.

So I'm asking *YOU*: should we push for a fork, or should I stop this craziness and do something more productive?

Wednesday, 08 April 2020

Geany and Geany-Plugins for EPEL8

If you're a lucky user of a Red Hat Enterprise Linux based system, you're probably already aware of Extra Packages for Enterprise Linux (EPEL) from the Fedora Project. In case you've missed the lightweight IDE Geany and its plugins there, this is probably good news for you: Geany is coming to EPEL8 soon!

The update will hit the testing repositories in the next days and needs your karma here:

https://bodhi.fedoraproject.org/updates/FEDORA-EPEL-2020-5392d2e45e

Tuesday, 31 March 2020

System Hackers meeting - Lyon edition

For the 4th time, and less than 5 months after the last meeting, the FSFE System Hackers met in person to coordinate their activities, work on complex issues, and exchange know-how. This time, we chose yet another town familiar to one of our team members as venue – Lyon in France. What follows is a report of this gathering that happened shortly before #stayhome became the order of the day.

For those who do not know this less visible but important team: The System Hackers are responsible for the maintenance and development of a large number of services. From the fsfe.org website’s deployment to the mail servers and blogs, from Git to internal services like DNS and monitoring, all these services, virtual machines and physical servers are handled by this friendly group that is always looking forward to welcoming new members.

Interestingly, we have gathered in the same constellation as in the hackathon before, so Albert, Florian, Francesco, Thomas, Vincent and me tackled large and small challenges in the FSFE’s systems. But we have also used the time to exchange knowledge about complex tasks and some interconnected systems. The official part was conducted in the fascinating Astech Fablab, but word has it that Ninkasi, an excellent pub in Lyon, was the actual epicentre of this year’s meeting.

Sharing is caring

Saturday morning after reviewing open tasks and setting our priorities, we started to share more knowledge about our services to reduce bottlenecks. For this, I drew a few diagrams to explain how we deploy our Docker containers, how our community database interacts with the mail and lists server, and how DNS works at the FSFE.

To also help the non-present system hackers and “future generations”, I’ve added this information to a public wiki page. This could also be the starting point to transfer more internal knowledge to public pages to make maintenance and onboarding easier.

Todo? Done!

Afterwards, we focused on closing tasks that have been open for a longer time:

- The DNS has been a big issue for a long time. Over the past months we’ve migrated the source for our nameserver entries from SVN to Git, rewrote our deployment scripts, and eventually upgraded the two very sensitive systems to Debian 10. During the meeting, we came closer to perfection: all Bind configuration cleaned from old entries, uniformly formatted, and now featuring SPF, DMARC and CAA records.

- For a better security monitoring of the 100+ mailing lists the FSFE hosts, we’ve finalised the weekly automatic checks for sane and safe settings, and a tool that helps to easily update the internal documentation.

- Speaking of monitoring: we lacked proper monitoring of our 20+ hosts for availability, disk usage, TLS certificates, service status and more. After trying for a long time to get Prometheus and Grafana to do what we need, we made a 180° turn: now there is an Icinga2 installation, deployed with Ansible, that already monitors a few hosts and their services. In the following weeks we will add more hosts and services to the watched targets.

- We plan to migrate our user-unfriendly way to share files between groups to Nextcloud, including using some more of the software’s capabilities. During the weekend, we’ve tested the instance thoroughly, and created some more LDAP groups that are automatically transposed to groups in Nextcloud. In the same run, Albert shared some more knowledge about LDAP with Vincent and me, so we get rid of more bottlenecks.
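For readers unfamiliar with the record types in the DNS item: SPF, DMARC and CAA are all plain DNS records. The following Python sketch prints what such entries can look like in BIND zone-file syntax. The domain example.org, the policies and the CA are placeholders and do not reflect the FSFE's actual configuration.

```python
# Placeholder values for illustration; not the FSFE's real records.
DOMAIN = "example.org"


def spf_record(domain: str, mechanisms: str = "mx") -> str:
    # SPF: hosts allowed to send mail for the domain; "-all" fails everything else.
    return f'{domain}. IN TXT "v=spf1 {mechanisms} -all"'


def dmarc_record(domain: str, policy: str = "quarantine", report_to: str = "") -> str:
    # DMARC lives at _dmarc.<domain>; rua= requests aggregate reports by mail.
    tags = ["v=DMARC1", f"p={policy}"]
    if report_to:
        tags.append(f"rua=mailto:{report_to}")
    value = "; ".join(tags)
    return f'_dmarc.{domain}. IN TXT "{value}"'


def caa_record(domain: str, ca: str = "letsencrypt.org") -> str:
    # CAA: flag 0, tag "issue"; with only this record, other CAs must not issue.
    return f'{domain}. IN CAA 0 issue "{ca}"'


if __name__ == "__main__":
    for record in (
        spf_record(DOMAIN),
        dmarc_record(DOMAIN, report_to=f"postmaster@{DOMAIN}"),
        caa_record(DOMAIN),
    ):
        print(record)
```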

Then, it was time to deal with other urgent issues:

- Some of us worked on making our systems more resilient against DDoS attacks. Over the Christmas season, we became a target of an attack. The idea is to come up with solutions that are easy to deploy on all our web services while keeping complexity low. We’ve tested some approaches and will further work on coming up with solutions.

- Regarding webservers, we’ve updated the TLS configurations on various services to the recommended settings, and also improved some other settings while touching the configuration files.

- We intend to make it easier for people to encrypt their emails with GnuPG. That is why we experimented with WKD/WKS and will work on setting up this service. As it requires some interconnection with other services, this will unfortunately take us some more time.

- On the maintenance side of things, we have upgraded all servers except one to the latest Debian version, and also updated many of our Docker images and containers to make use of the latest security and stability improvements.

- The FSFE hosts a few third party services, and unfortunately they have been running on unmaintained systems. That is why we set up a brand new host for our sister organisation in Latin America so they can eventually migrate, and moved the fossmarks.org website to our automatic CI/CD setup via Drone/Docker.
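WKD itself is simple to reason about: a mail client looks up a public key over HTTPS at a well-known URL derived from the e-mail address, using the SHA-1 hash of the lowercased local part encoded as z-base-32. The Python sketch below computes both the “advanced” and the “direct” lookup URLs as described in the WKD Internet-Draft; it is not part of the FSFE setup, and the address is an example.

```python
import hashlib
from urllib.parse import quote

# z-base-32 alphabet (a human-oriented base32 variant used by WKD).
ZBASE32 = "ybndrfg8ejkmcpqxot1uwisza345h769"


def zbase32(data: bytes) -> str:
    """Encode bytes with z-base-32, most significant bits first."""
    bits = len(data) * 8
    pad = (-bits) % 5  # pad on the right to a multiple of 5 bits
    n = int.from_bytes(data, "big") << pad
    total = bits + pad
    return "".join(
        ZBASE32[(n >> (total - 5 * (i + 1))) & 0x1F] for i in range(total // 5)
    )


def wkd_urls(email: str) -> tuple:
    """Return (advanced, direct) WKD lookup URLs for an e-mail address."""
    local, domain = email.rsplit("@", 1)
    domain = domain.lower()
    # The hash uses the lowercased local part; ?l= keeps the original spelling.
    digest = hashlib.sha1(local.lower().encode("utf-8")).digest()
    h = zbase32(digest)  # 160 bits -> 32 characters
    q = quote(local, safe="")
    advanced = f"https://openpgpkey.{domain}/.well-known/openpgpkey/{domain}/hu/{h}?l={q}"
    direct = f"https://{domain}/.well-known/openpgpkey/hu/{h}?l={q}"
    return advanced, direct


if __name__ == "__main__":
    for url in wkd_urls("Joe.Doe@Example.ORG"):
        print(url)
```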

The next steps and developments

As you can see, we completed and started to tackle a lot of issues again, so it won’t become boring in our team any time soon. However, although we should know better, we intend to “change a running system”!

While the in-person meetings have been highly important and also fun, we are in a state where knowledge and mutual trust are further distributed between the members, the tasks separated more clearly and the systems mostly well documented. So part of our feedback session was the question whether these meetings in the 6-12 month rhythm are still necessary.

Yes, they are, but not more often than once a year. Instead, we would like to try virtual meetings and sprints. Before a sprint session, we would discuss all tasks (basically go through our internal Kanban board), plan the challenges, ask for input if necessary, and resolve blockers as early as possible. Then, we would be prepared for a sprint day or afternoon during which everyone can work on their tasks while being able to directly contact other members. All that should happen over a video conference to have a more personal atmosphere.

For the analogue meetings, it was requested to also plan tasks and priorities beforehand together, and focus on tasks that require more people from the group. Also, we want to have more trainings and system introductions like we’ve just had to reduce dependencies on single persons.

All in all, this gathering has been another successful meeting and lays a cornerstone for exciting new improvements to both the systems and the team. Thanks to everyone who participated, and a big applause to Vincent who organised the venue and the social activities!

Sunday, 29 March 2020

RSIBreak 0.12.12 released!

All of you who use a computer for long periods of time should use it!

https://userbase.kde.org/RSIBreak

Changes from 0.12.11:

* Don't reset pause counter on very short inputs that can just be accidental.

* Improve high dpi support

* Translation improvements

* Compile with Qt 5.15 beta

* Minor code cleanup

http://download.kde.org/stable/rsibreak/0.12/rsibreak-0.12.12.tar.xz

Friday, 27 March 2020

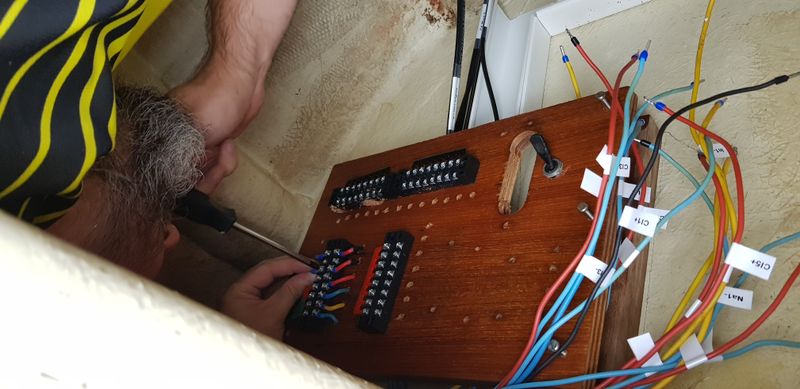

Cruising sailboat electronics setup with Signal K

I haven’t mentioned this on the blog earlier, but in the end of 2018 we bought a small cruising sailboat. After some looking, we went with a Van de Stadt designed Oceaan 25, a Dutch pocket cruiser from the early 1980s. S/Y Curiosity is an affordable and comfortable boat for cruising with 2-4 people, but also needed major maintenance work.

The refit has so far included osmosis repair, some fixes to the standing rigging, engine maintenance, and many structural improvements. But this post will focus on the electronics and navigation aspects of the project.

12V power

When we got it, the boat’s electrics setup was quite barebones. There was a small lead-acid battery, charged only when running the outboard. Light control was pretty much all-or-nothing: either we were running both the interior and navigation lights, or neither. Everything was wired with 80s-spec components, using energy-inefficient lightbulbs.

Looking at the state of the setup, it was also unclear when the electrics had last been used for anything other than starting the engine.

Before going further with the electronics setup, all of this would have to be rebuilt. We made a plan, and scheduled two weekends in summer 2019 for rewiring and upgrading the electricity setup of the boat.

The first step was to test all existing wiring with a multimeter, and to label and document all of it. Surprisingly, there were only a couple of bad connections from the main distribution panel to consumers, so for the most part we decided to reuse that wiring, but with a modern terminal block setup.

For labelling we mostly used a Dymo label printer, with the labels covered by transparent heat shrink.

We replaced the old main control panel with a modern one with the capability to power different parts of the boat separately, and added some 12V and USB sockets next to it.

All internal lighting was replaced with energy-efficient LEDs, and we added the option of using red lights all through the cabin for preserving night vision. A car charger was added to the system for easier battery charging while in harbour.

Next steps for power

With this, we had a workable lighting and power setup for overnight sailing. But the next obvious step is to increase the range of our boat.

For that, we’re adding a solar panel. We already have most parts for the setup, but are still waiting for the customized NOA mounting hardware to arrive. And of course the current COVID-19 curfews need to lift before we can install it.

Until we have actual data from our Victron MPPT charge controller, I’ve run some simulations using NASA’s insolation data for Berlin on how much the panel ought to increase our cruising range.
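The basic arithmetic behind such a simulation is straightforward: daily insolation in kWh/m² equals the number of peak sun hours, which multiplied by the panel's rated power and a loss factor gives the daily yield. The Python sketch below uses made-up numbers (panel size, loss factor, battery capacity, consumption); it is not the simulation mentioned above.

```python
def daily_yield_wh(panel_w: float, sun_hours: float, loss_factor: float = 0.75) -> float:
    """Estimated daily energy from a panel.

    sun_hours: peak sun hours, numerically equal to insolation in kWh/m²/day.
    loss_factor: lumped losses (angle, shading, controller, battery); an assumption.
    """
    return panel_w * sun_hours * loss_factor


def days_of_autonomy(battery_wh: float, usable_fraction: float,
                     load_wh_per_day: float, solar_wh_per_day: float = 0.0) -> float:
    """Days until the usable battery energy is used up (inf if solar covers the load)."""
    deficit = load_wh_per_day - solar_wh_per_day
    if deficit <= 0:
        return float("inf")
    return battery_wh * usable_fraction / deficit


if __name__ == "__main__":
    # All numbers are hypothetical examples, not measurements from our boat.
    battery_wh = 100 * 12  # 100 Ah at 12 V
    usable = 0.5           # common rule of thumb for lead-acid batteries
    load = 400.0           # Wh per day
    solar = daily_yield_wh(100, 4.0)  # 100 W panel, 4 peak sun hours
    print(f"without solar: {days_of_autonomy(battery_wh, usable, load):.1f} days")
    print(f"with solar:    {days_of_autonomy(battery_wh, usable, load, solar):.1f} days")
```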

Navigation system

The basis for boat navigation is still the combination of a clock, a compass, and a paper chart (as well as a sextant on the open ocean). However, most modern cruising boats use some electronic tools to aid the process of running the boat. These typically come in the form of a chartplotter and a set of sensors for things like GPS position, speed, and water depth.

Commercial marine navigation equipment is a bit like computer networking in the 90s - everything is expensive, and you pretty much have to buy the whole kit from a single vendor to make it work. Standards like NMEA 0183 exist, but “embrace and extend” is typical vendor behaviour.

Signal K

Being open source hackerspace people, that was obviously not the way we wanted to do things. Instead of getting locked into an expensive proprietary single-vendor marine instrumentation setup, we decided to roll our own using off-the-shelf IoT components. To serve as the heart of the system, we picked Signal K.

Signal K is first of all a specification on how marine instruments can exchange data. It also has an open source implementation in Node.js. This allows piping in data from all of the relevant marine data buses, as well as setting up custom data providers. Signal K then harmonizes the data, and makes it available both via modern web APIs, and in traditional NMEA formats. This enables instruments like chartplotters also to utilize the Signal K enriched data.
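To make the idea of harmonised data a bit more concrete, here is a sketch of a delta update, the JSON message shape Signal K uses to stream changes, built in Python. The paths and the source label are illustrative assumptions, and the exact field layout should be checked against the Signal K specification before relying on it. One detail worth noting is that Signal K values are in SI units, so temperatures are sent in Kelvin and pressure in pascals.

```python
import json
from datetime import datetime, timezone


def make_delta(values: dict[str, float], source_label: str) -> dict:
    """Build a Signal K-style delta update for the own vessel.

    `values` maps dotted Signal K paths to values in SI units.
    `source_label` identifies the data provider (a made-up name here).
    """
    timestamp = datetime.now(timezone.utc).isoformat(timespec="milliseconds")
    timestamp = timestamp.replace("+00:00", "Z")
    return {
        "context": "vessels.self",  # shorthand for "our own boat" (assumed)
        "updates": [
            {
                "source": {"label": source_label},
                "timestamp": timestamp,
                "values": [{"path": path, "value": value} for path, value in values.items()],
            }
        ],
    }


# A cabin reading converted to SI units: 21.5 °C -> K, 1013.2 hPa -> Pa.
delta = make_delta(
    {
        "environment.inside.temperature": 21.5 + 273.15,
        "environment.outside.pressure": 1013.2 * 100,
    },
    source_label="cabin-sensor-example",
)
print(json.dumps(delta, indent=2))
```

Keeping every provider's output in this one shape is what lets the server re-publish the same data over web APIs and as NMEA.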

We’re running Signal K on a Raspberry Pi 3B+ powered by the boat battery. With a GPS dongle, this was already enough to give some basic navigation capabilities like charts and anchor watch. We also added a WiFi hotspot with an LTE uplink to the boat.

To make the system robust, installation is automated via Ansible, which also makes it easy to reproduce. Our boat GitHub repo also has the needed functionality to run a clone of our boat’s setup on our laptops via Docker, which is great when developing new features.

Signal K has a very active developer community, which has been great for figuring out how to extend the capabilities of our system.

Chartplotter

We’re using regular tablets for navigation. The main chartplotter is a cheap old waterproof Samsung Galaxy Tab Active 8.0 tablet that can show both the Freeboard web-based chartplotter with OpenSeaMap charts, and run the Navionics Boating app to display commercial charts. Navionics is also able to receive some Signal K data over the boat WiFi to show things like AIS targets, and to utilize the boat GPS.

As a backup we have our personal smartphones and tablets.

Inside the cabin we also have an e-ink screen showing the primary statistics relevant to the current boat state.
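The actual dashboard script is linked in the source code list at the end of this post. As a rough, hypothetical sketch of the data-fetching side only: a Signal K server exposes the current state over a REST API under /signalk/v1/api/, so a value can be read by turning its dotted path into a URL. The base URL (port 3000 is the server's usual default) and the chosen path are assumptions, as is the shape of the response; the reading is converted from Kelvin for display.

```python
import json
from urllib.request import urlopen

BASE_URL = "http://localhost:3000"  # hypothetical Signal K server address


def api_url(base_url: str, path: str) -> str:
    """Map a dotted Signal K path to its REST URL for the own vessel."""
    return f"{base_url.rstrip('/')}/signalk/v1/api/vessels/self/{path.replace('.', '/')}"


def read_value(base_url: str, path: str, timeout: float = 5.0):
    """Fetch a leaf value; assumes the server wraps it in an object with a "value" key."""
    with urlopen(api_url(base_url, path), timeout=timeout) as response:
        return json.load(response)["value"]


def kelvin_to_celsius_text(kelvin: float) -> str:
    """Format an SI temperature for a human-readable dashboard."""
    return f"{kelvin - 273.15:.1f} °C"
```

With a reachable server, `kelvin_to_celsius_text(read_value(BASE_URL, "environment.inside.temperature"))` would produce a display string such as "21.5 °C"; rendering it on the e-ink panel is a separate step not shown here.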

Environmental sensing

Monitoring air pressure changes is important for dealing with the weather. For this, we added a cheap barometer-temperature-humidity sensor module wired to the Raspberry Pi, driven with the Signal K BME280 plugin. With this we were able to get all of this information from our cabin into Signal K.
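A barometer becomes most useful when you look at how fast the pressure changes, commonly over the last three hours. The sketch below computes that tendency from logged readings; the three-hour window and the warning thresholds are rule-of-thumb assumptions for illustration, not values from the BME280 plugin or from any official forecasting guidance.

```python
from __future__ import annotations

from bisect import bisect_left

THREE_HOURS_S = 3 * 3600


def pressure_tendency_hpa(samples: list[tuple[float, float]],
                          window_s: float = THREE_HOURS_S) -> float | None:
    """Pressure change in hPa over the last `window_s` seconds.

    `samples` is a time-sorted list of (unix_time_s, pressure_pa) pairs.
    Returns None while the history is shorter than the window.
    """
    if not samples:
        return None
    t_now, p_now = samples[-1]
    start = t_now - window_s
    if samples[0][0] > start:
        return None
    times = [t for t, _ in samples]
    # Oldest sample that still falls inside the window.
    _, p_then = samples[bisect_left(times, start)]
    return (p_now - p_then) / 100.0  # Pa -> hPa


def tendency_warning(tendency_hpa: float | None) -> str:
    """Illustrative thresholds only; tune them to your own sailing area."""
    if tendency_hpa is None:
        return "not enough data yet"
    if tendency_hpa <= -6.0:
        return "rapid fall: expect strong wind"
    if tendency_hpa <= -3.0:
        return "falling: keep an eye on the weather"
    return "steady or rising"


# Readings every 30 minutes, falling by 1 hPa (100 Pa) each time.
samples = [(t, 101500 - 100 * k) for k, t in enumerate(range(0, THREE_HOURS_S + 1, 1800))]
print(pressure_tendency_hpa(samples), tendency_warning(pressure_tendency_hpa(samples)))
```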

However, there was more environmental information we wanted to get. For instance, the outdoor temperature, the humidity in our foul weather gear locker, and the temperature of our icebox. For these we found the Ruuvi tags produced by a Finnish startup. These are small weatherproofed Bluetooth environmental sensors that can run for years with a coin cell battery.

With Ruuvi tags and the Signal K Ruuvi tag plugin we were able to bring a rich set of environmental data from all around the boat into our dashboards.

Anchor watch

Like every cruising boat, we spend quite a lot of nights at anchor. One important safety measure with a shorthanded crew is to run an automated anchor watch. This monitors the boat’s distance to the anchor, and raises an alarm if we start dragging.

For this one, we’re using the Signal K anchor alarm plugin. We added a Bluetooth speaker to get these alarms in an audible way.
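At its core, such an anchor watch compares the distance between the dropped anchor and the current GPS fix with an alarm radius. The sketch below shows that check with the haversine great-circle formula (a spherical-Earth approximation); it is not the anchor alarm plugin's code, and the coordinates and radius are made-up example values. In practice the radius needs to cover the rode length plus GPS error.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two positions given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def is_dragging(anchor: tuple[float, float], boat: tuple[float, float], radius_m: float) -> bool:
    """True if the boat has moved outside the alarm circle around the anchor."""
    return haversine_m(*anchor, *boat) > radius_m


anchor = (52.5000, 13.4000)  # example anchor position
boat = (52.5010, 13.4000)    # example GPS fix, roughly 111 m north of it
print(round(haversine_m(*anchor, *boat)), is_dragging(anchor, boat, radius_m=50))
```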

To make starting and stopping the anchor watch easier, I utilized a simple Bluetooth remote camera shutter button together with some scripts. This way the person dropping the anchor can also start the anchor watch immediately from the bow.

AIS

The Automatic Identification System (AIS) is a radio protocol used by most bigger vessels to tell others about their course and position. It can be used for collision avoidance. Having an active transponder on a small boat like Curiosity is a bit expensive, but we decided we’d at least want to see commercial traffic in our chartplotter in order to navigate safely.

For this we bought an RTL-SDR USB stick that can tune into the AIS frequency, and with the rtl_ais software, receive and forward all AIS data into Signal K.
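rtl_ais passes the decoded AIS messages on as NMEA 0183 !AIVDM sentences. Whatever consumes them can discard radio garbage by verifying each sentence's checksum: the XOR of all characters between the leading ! (or $) and the *, written as two hexadecimal digits. A minimal validator as a sketch; it checks framing and checksum only and does not decode the AIS payload itself.

```python
from functools import reduce


def nmea_checksum(body: str) -> str:
    """XOR of all characters between '!'/'$' and '*', as two uppercase hex digits."""
    value = reduce(lambda acc, ch: acc ^ ord(ch), body, 0)
    return f"{value:02X}"


def nmea_sentence_ok(sentence: str) -> bool:
    """Check the framing and checksum of one NMEA 0183 sentence, e.g. '!AIVDM,...*hh'."""
    sentence = sentence.strip()
    if len(sentence) < 4 or sentence[0] not in "!$" or "*" not in sentence:
        return False
    body, _, given = sentence[1:].rpartition("*")
    return len(given) == 2 and nmea_checksum(body) == given.upper()


# Build a sentence with a correct checksum, then corrupt one character.
body = "AIVDM,1,1,,B,177KQJ5000G?tO`K>RA1wUbN0TKH,0"
good = f"!{body}*{nmea_checksum(body)}"
bad = good.replace("177KQJ", "177KQK")
print(nmea_sentence_ok(good), nmea_sentence_ok(bad))
```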

This setup is still quite new, so we haven’t been able to test it live yet. But it should allow us to see all nearby bigger ships in our chartplotter in realtime, assuming that we have a good-enough antenna.

Putting it all together

All together this is quite a lot of hardware. To house all of it, we built a custom backing plate with 3D-printed brackets to hold the various components. We call the whole setup the Voronoi-1 onboard computer. It should be easy to duplicate on any small sailing vessel.

The total cost so far for the full boat navigation setup has been around 600€, which is less than a commercial chartplotter alone would cost. The system we have is also easy both to extend and to fix, even on the go. And we get a set of capabilities that would normally require a whole suite of proprietary parts to put together.

Next steps for navigation setup

We of course have plenty of ideas on what to do next to improve the navigation setup. Here are some projects we’ll likely tackle over the coming year:

- Adding a timeseries database and some data visualization

- A 9-degrees-of-freedom sensor to track the compass course as well as boat heel

- Instrumenting our outboard motor to get RPMs into Signal K and track the engine running time

- Wind sensor, either open source or commercial

If you have ideas for suitable components or projects, please get in touch!

Source code

- https://github.com/meri-imperiumi/curiosity contains the Ansible Signal K setup for Curiosity, as well as the CNC and 3D printing designs we’re using on the boat

- https://github.com/meri-imperiumi/dashboard is the Python script we’re using to drive the e-ink dashboard in our cabin

- https://github.com/meri-imperiumi/signalk-autostate is a Signal K plugin for determining the boat state from sensor data

- https://github.com/meri-imperiumi/signalk-bluetooth-anchor-button contains the udev scripts for setting anchor watch from the Bluetooth remote camera shutter button

- https://github.com/meri-imperiumi/signalk-aws-iot is a Signal K plugin for transmitting our boat state to Amazon Web Services

Huge thanks to both the Signal K and Hackerfleet communities and the Curiosity crew for making all this happen.

Now we just wait for the curfews to lift so that we can get back to sailing!

Thursday, 26 March 2020

Jitsi and the power of shortcuts

Over the last few weeks I have been on more video calls than in the past, and often the software used for them was Jitsi Meet. That also meant it made sense for me to look into how I and others can use this software more efficiently, which for me means looking into the available shortcuts.

If you are using Jitsi Meet, e.g. on one of its public instances, you can press ? to see the list of shortcuts:

F - Show or hide video thumbnails

M - Mute or unmute your microphone

V - Start or stop your camera

A - Manage call quality

C - Open or close the chat

D - Switch between camera and screen sharing

R - Raise or lower your hand

S - View or exit full screen

W - Toggle tile view

? - Show or hide keyboard shortcuts

SPACE - Push to talk

T - Show speaker stats

0 - Focus on your video

1-9 - Focus on another person's video

What I use most of the time is M to quickly switch between being muted and unmuted. Sometimes I combine it with push to talk: I first mute myself, then press SPACE while quickly saying something in a larger group, and as soon as I stop pressing it, I am muted again.

Another one I often use is V to turn my webcam off and on, in combination with A to quickly reduce the video quality (unfortunately I have not found a way to make lower video quality the default).

And finally, especially when I am moderating meetings, I encourage people to use R to indicate that they want to say something. This way I do not have to ask several times in a meeting if someone wants to add a point, or if there is another question. (This is also a feature for which I am missing quick access in the Jitsi Meet mobile application.)

In general I encourage you to check what shortcuts are available in software you use often, as in my experience you will benefit greatly from that knowledge over time.

Monday, 23 March 2020

How and why to properly write copyright statements in your code

This blog post was not easy to write as it started as a very simple thing intended for developers, but later, when I was digging around, it turned out that there is no good single resource online on copyright statements. So I decided to take a stab at writing one.

I tried to strike a good balance between 1) keeping it short and to the point for developers who just want to know what to do, and 2) going into enough depth for FOSS compliance officers and legal geeks who want to understand not just best practices, but also the reasons behind them.

If you are extremely short on time, the TL;DR should give you the bare minimum instructions, but if you have just 2 minutes I would advise you to read the actual how-to further below.

Of course, if you have about 18 minutes of time, the best way is always to start reading at the beginning and finish at the end.

Where else to find this article

A copy of this blog post is also available on the Liferay Blog.

Haksung Jang (장학성) was awesome enough to publish a Korean translation.

TL;DR

Use the following format:

SPDX-FileCopyrightText: © {$year_of_file_creation} {$name_of_copyright_holder} <{$contact}>

SPDX-License-Identifier: {$SPDX_license_name}

… put that in every source code file and go check out (and follow) REUSE.software best practices.

E.g. for a file that I created today and released under the BSD-3-Clause license, I would put the following as a comment at the top of the source code file:

SPDX-FileCopyrightText: © 2020 Matija Šuklje <matija@suklje.name>

SPDX-License-Identifier: BSD-3-Clause
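If you need to add these two lines to many files, a tiny helper can render them in each language's comment syntax. This is only a sketch: the extension-to-comment mapping below is a hypothetical and deliberately incomplete example, and the REUSE project's own `reuse` command-line tool is the more complete option for real projects.

```python
from pathlib import Path

# Hypothetical, deliberately incomplete mapping: file extension -> line comment prefix.
COMMENT_PREFIX = {
    ".py": "#", ".sh": "#", ".rb": "#",
    ".c": "//", ".go": "//", ".rs": "//", ".java": "//", ".js": "//", ".ts": "//",
}


def spdx_header(path: str, year: int, holder: str, contact: str, license_id: str) -> str:
    """Render the two SPDX tags as comment lines suited to the file's language."""
    prefix = COMMENT_PREFIX.get(Path(path).suffix)
    if prefix is None:
        raise ValueError(f"no comment syntax known for {path!r}")
    tags = [
        f"SPDX-FileCopyrightText: © {year} {holder} <{contact}>",
        f"SPDX-License-Identifier: {license_id}",
    ]
    return "".join(f"{prefix} {tag}\n" for tag in tags)
```

For example, `spdx_header("hello.py", 2020, "Matija Šuklje", "matija@suklje.name", "BSD-3-Clause")` returns the two tag lines from the example above, each prefixed with `# `. The year passed in should stay the year of file creation.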

Introduction and copyright basics

Copyright is automatic (since the Berne Convention) and any work of authorship is automatically protected by it – essentially giving the copyright holder[1] exclusive power over their work. In order for your downstream to have the rights to use any of your work – be that code, text, images or other media – you need to give them a license to it.

So in order for you to copy, implement, modify etc. the code of others, you need to be given the necessary rights (i.e. a license[2]) or make use of a statutory limitation or exception[3]. And if that license has some obligations attached, you need to meet them as well.

In any case, you have to meet the basic requirements of copyright law as well. At the very least you need to have the following two in place:

- attribution – list the copyright holders and/or authors (especially in jurisdictions which recognise moral rights);

- license(s) – since a license is the only thing that gives anybody other than the copyright holder themself the right to use the code, you are very well advised to have a notice of the license and its full text present – this goes both for your outbound licenses and for the inbound licenses you received from others by using 3rd party works, such as copied code or libraries.

Inbound vs. outbound licenses

The license you give to your downstream is called an outbound license, because it handles the rights in the code that flow out of you. In turn that same license in the same work would then be perceived by your downstream as their inbound license, as it handles the rights in the code that flows into them.

In short, licenses describing rights flowing in are called inbound licenses, and the licenses describing rights flowing out are called outbound licenses.

The good news is that attribution is the author’s right, not an obligation. And you are obliged to keep the attribution notices only insofar as the author(s) made use of that right. This means that if the author has not listed themselves, you do not have to hunt them down yourself.

Why have the copyright statement?

Which brings us to the question of whether you need to write your own copyright statement[4].

First, some very brief history …

The urge to absolutely have to write copyright statements stems from inertia in the USA, which only joined the Berne Convention in 1989, well after computer programs were a thing. Until then, US copyright law still required an explicit copyright statement in order for a work to be protected.

Copyright statements are useful

The copyright statement is not required by law, but in practice it is very useful as proof (at best) or, more likely, as an indicator of the copyright situation of that work. This can be very useful for compliance reasons, traceability of the code etc.

Attribution is practically unavoidable, because a) most licenses explicitly call for it, and if that fails b) copyright laws of most jurisdictions require it anyway.

And if that is not enough, c) sometimes you will want to reach the original author(s) of the code for legal or technical reasons.

So storing both the name and contact information makes sense for when things go wrong. Finding the original upstream of a runaway file you found in your codebase – if there are no names or links in it – is a huge pain and often involves (still) expensive specialised software. I would suspect the onus on a FOSS project to be much lower than on a corporation in this case, but it is still better to put in a little effort upfront than to have to do some serious archæology later.

How to write a good copyright statement and license notice

Finally we come to the main part of this article!

A good copyright statement should consist of the following information:

- start with the © sign;

- the year of the first publication – a good choice is the year in which you created the file, which you then leave unchanged;

- the name of the copyright holder – typically the author, but it can also be your employer or, if there is a CLA in place, another legal entity or person;

- a valid contact to the copyright owner.

As an example, this is what I would put on something I wrote today:

© 2020 Matija Šuklje <matija@suklje.name>

While you are at it, it would make a lot of sense to also notify everyone which license you are releasing your code under as well. Using an SPDX ID is a great way to unambiguously state the license of your code. (See note mentioned below for an example of how things can go wrong otherwise.)

And once you have come this far, it is just a small step to following the REUSE.software best practices, which use SPDX tags for your copyright statement (marked with SPDX-FileCopyrightText) and license notice (marked with SPDX-License-Identifier and followed by an SPDX ID).

Here is an example of a copyright statement and license notice that together check all the above boxes and comply with both the SPDX and the REUSE.software specifications:

SPDX-FileCopyrightText: © 2020 Matija Šuklje <matija@suklje.name>

SPDX-License-Identifier: BSD-3-Clause

Now make sure you have these in comments of all your source code files.
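To verify that no file was missed, a rough check like the sketch below can help. It only scans the first lines of each file (the 20-line limit and the `**/*.py` pattern are arbitrary assumptions); `reuse lint` from the REUSE project performs a much more thorough check against the full specification.

```python
from __future__ import annotations

import re
from pathlib import Path

TAG_RE = re.compile(r"SPDX-(FileCopyrightText|License-Identifier):\s*(\S.*)")
REQUIRED = ("FileCopyrightText", "License-Identifier")


def spdx_tags(text: str, max_lines: int = 20) -> dict[str, list[str]]:
    """Collect SPDX tag values found in the first `max_lines` lines of a file."""
    tags: dict[str, list[str]] = {}
    for line in text.splitlines()[:max_lines]:
        match = TAG_RE.search(line)
        if match:
            # Drop trailing block-comment closers such as */ or -->.
            value = re.sub(r"\s*(\*/|-->)\s*$", "", match.group(2)).strip()
            tags.setdefault(match.group(1), []).append(value)
    return tags


def files_missing_tags(root: str, pattern: str = "**/*.py") -> list[tuple[str, list[str]]]:
    """Return (path, missing tag names) for every matching file that lacks a tag."""
    problems = []
    for path in sorted(Path(root).glob(pattern)):
        if not path.is_file():
            continue
        tags = spdx_tags(path.read_text(encoding="utf-8", errors="replace"))
        missing = [name for name in REQUIRED if name not in tags]
        if missing:
            problems.append((str(path), missing))
    return problems
```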

Q&A

Over the years, I have heard many questions on this topic – both from developers and lawyers.

I will try to address them below in no particular order.

If you have a question that is not addressed here, do let me know and I will try to include it in an update.

Why keep the year?

Some might argue that for the sake of simplicity it would be much easier to maintain copyright statements if we just skip the years. In fact, that is a policy at Microsoft/GitHub at the time of this writing.

While I agree that not updating the year simplifies things enormously, I do think that keeping a date helps preserve at least a vague timeline in the codebase. As the question is when the work was first expressed in a medium, the earliest date provable is the time when that file was first created.

In addition, having an easy way to find the earliest date of a piece of code might also prove useful in figuring out when an invention was first expressed to the general public, which could become useful for patent defense.

This is also why, at Liferay for example, our new policy is to write the year of the file’s creation, and then not change the year any more.

Innocent infringement excursion for legal geeks

17 U.S. Code § 401.(d) states that if a work carries a copyright notice in the form that the law prescribes, the defendant in a copyright infringement case cannot rely on the innocent infringement defense, except if they had reason to believe their use was covered by fair use. And even then, the innocent infringer would have to be e.g. a non-profit broadcaster or archive to still be eligible for such a defence.

So, if you are concerned about copyright violations (at least in the USA), you may actually want to make sure your copyright statements include both the copyright sign and the year of publication.

See also the note in “Why the © sign?” for what a copyright notice following the US Copyright Act looks like.

Why not bump the year on change?

I am sure you have seen something like this before:

Copyright (C) 1992, 1995, 2000, 2001, 2003 CompanyX Inc.

The presumption behind this is that whenever you add a new year in the copyright statement, the copyright term would start anew, and therefore prolong the time that file would be protected by copyright.

Adding a new year on every change – or, even worse, simply every 1st January – is still far too widespread a practice today. Unfortunately, doing this is useless at best, and misleading at worst. For the origin of this myth, see the short history above.

A big problem with this approach is that not every contribution is original or substantial enough to be copyrightable – even the popular 5 (or 10, or X) SLOC rule of thumb[5] is, legally speaking, very debatable.

So, in order to keep your copyright statement true, you would need to make a judgement call every time on whether the change was substantial and original enough to be granted copyright protection by the law, and therefore whether the year should be bumped. That is a demanding test to apply every time you change a file.

On the other hand, copyright lasts at least 50 (and usually 70) years[6] after the death of the author or, if the copyright holder is a legal entity (e.g. CompanyX Inc.), after publication. So the risk of your own copyright expiring under your feet is very, very low.
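To put the arithmetic into code: the Berne Convention (Art. 7(5)) counts these terms from 1 January of the year after the author's death (or after the relevant event), so a work enters the public domain on 1 January of the year after the term runs out. A small sketch; the default of 70 years is only the common case, and which event and term apply depends on the jurisdiction and on who holds the copyright.

```python
def public_domain_year(event_year: int, term_years: int = 70) -> int:
    """Year on whose 1 January a work enters the public domain.

    `event_year` is the author's year of death or, where the law counts
    from publication, the year of publication. The term is deemed to start
    on 1 January of the following year, so protection ends on
    31 December of event_year + term_years.
    """
    if term_years < 0:
        raise ValueError("term_years must be non-negative")
    return event_year + term_years + 1


# Life + 70 (most countries), the Berne minimum of life + 50 and the
# life + 100 term of Mexico, for an author who died in 1950.
for term in (70, 50, 100):
    print(term, public_domain_year(1950, term))
```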

Worst case thought experiment

Let us imagine the worst possible scenario now:

1) you never bump the year in a file’s copyright statement, and 2) 50+ years after its initial release, someone copies your code as if it were in the public domain. You take issue with that and go to court, and 3) the court (very unlikely) takes only the copyright statements in that file into account as proof and, based on that, 4) rules that the code in that file has fallen into the public domain and that the FOSS license therefore no longer applies to it.

The end result would simply be that (in one jurisdiction) that file would fall into the public domain and be up for grabs by anyone for anything, no copyright, no copyleft, 50+ years from the file’s creation (instead of e.g. 5, maybe 20 years later).

But, honestly, how likely is it that 50 years from now the same (unaltered) code would still be (commercially) interesting?

… and if it turns out you do need to bump the year eventually, you still have, at worst, 50 years to sort it out – so, ample opportunity to mitigate the risk.

In addition to that, as typically a single source code file is just one of the many cogs in a bigger piece of software, what you are more concerned with is the software product/project as a whole. As the software grows, you will keep adding new files, and those will obviously have newer years in them. So the codebase as a whole work will already include copyright statements with newer years in it anyway.

Keep the Git/VCS history clean

Also, bumping the year in all the files every year reduces the usefulness of the Git/VCS history, makes the log unnecessarily long(er), and makes the repository consume more space.

It makes all the files seem equally old (in years), which makes it hard to identify stale code if you are looking for it.

Another issue might be that your year-bumping script is too trigger-happy and also bumps the years in files that do not even belong to you, furthering misinformation both in your VCS and in the files’ copyright notices.

Why not use a year range?

Similar to the previous question, the year span (e.g. 1990-2013) is basically just a lazy version of bumping the year. So all of the above-mentioned applies.

A special case is when people use a range like {$year}-present. This has almost all of the above-mentioned issues[7], plus it adds another dimension of confusion, because what constitutes the “present” is an open – and potentially philosophical – question. Does it mean:

- the time when the file was last modified?

- the time it was released as a package?

- the time you downloaded it (maybe for the first time)?

- the time you ran it the last time?

- or perhaps even the ever-elusive “right now”?

As you can see, this does not help much at all. Quite the opposite!
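When cleaning up an existing codebase, statements with year lists or ranges can be reduced to a single year mechanically. A rough sketch, assuming the earliest year listed is the year of first publication: the regular expression only handles the simple “Copyright (C) years holder” shapes discussed here, it also drops a trailing “all rights reserved”, and anything it does not recognise is returned as None for a human to review.

```python
from __future__ import annotations

import re

# "Copyright", "Copyright (C)", "Copyright ©", "(C)", "©" or "Copr."
MARKER = r"(?:Copyright\s*(?:\(C\)|©)?|\(C\)|©|Copr\.)"
# One year, optionally followed by more years or ranges, e.g. "1992, 1995" or "1990-2013".
YEARS = r"(\d{4}(?:\s*[-–,]\s*(?:\d{4}|present))*)"
STATEMENT_RE = re.compile(rf"{MARKER}\s*{YEARS},?\s+(.+)", re.IGNORECASE)
RESERVED_RE = re.compile(r",?\s*all rights reserved\.?\s*$", re.IGNORECASE)


def first_year_statement(line: str) -> str | None:
    """Rewrite a legacy copyright line as '© {earliest year} {holder}', or None."""
    match = STATEMENT_RE.search(line)
    if not match:
        return None
    first_year = min(int(year) for year in re.findall(r"\d{4}", match.group(1)))
    holder = RESERVED_RE.sub("", match.group(2)).strip()
    return f"© {first_year} {holder}"
```

Applied to the CompanyX example above, it yields “© 1992 CompanyX Inc.”. Adding the contact and the SPDX tags is left to a human, since they cannot be derived from the old line.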

But doesn’t Git/Mercurial keep a better track?

Not reliably.

Git (and other VCSs) are good at storing metadata, but you should be careful about relying on it.

Git does have an Author field, which is separate from the Committer field. But even if we were to assume – and that is a big assumption[8] – that Git’s Author was the actual author of the code committed, they may not be the copyright holder.

Furthermore, git blame and git diff currently work line by line and attribute each line to its last change, making Git suboptimal for finding out who actually wrote what.

Token-based blame information

For a more fine-grained tool to see who to blame for which piece of code, check out cregit.

And ultimately – and most importantly – as soon as the file(s) leave the repository, the metadata is lost. Whether it is released as a tarball, the repository is forked and/or rebased, or a single file is simply copied into a new codebase, the trace is lost.

All of these issues are addressed by simply including the copyright statement and license information in every file. REUSE.software best practices handle this very well.

Why the © sign?

Some might argue that the English word “Copyright” is so common nowadays that everyone understands it, but if you actually read the copyright laws out there, you will find that using © (i.e. the copyright sign) is the only way to write a copyright statement that is common in copyright laws around the world[9].

Using the © sign makes sense, as it is the common global denominator.

Comparison between US and Slovenian copyright statements

As an EU example, the Slovenian ZASP §175.(1) simply states that holders of exclusive author’s rights may mark their works with a (c)/© sign in front of their name or firm and year of first publication, which can be simply put as:

© {$year_of_first_publication} {$name_of_author_or_other_copyright_holder}

On the other side of the pond, in the USA, 17 U.S. Code § 401.(b) uses more words to give a more varied approach and, relevant for this question, §401(b)(1) prescribes the use of

the symbol © (the letter C in a circle), or the word “Copyright”, or the abbreviation “Copr.”;

You can read the rest yourself, but it can be summarised as:

(©|Copyright|Copr.) {$year_of_first_publication} {$name_or_abbreviation_of_copyright_holder}

See also the note in “Why keep the year?” for why this can matter before US courts.

While the © sign is a pet peeve of mine, from the practical point of view, this is the least important point here. As we established in the introduction, copyright is automatic, so the actual risk of not following the law by its letter is pretty low if you write e.g. “Copyright” instead.

Why leave a contact? Even when there is more than one author?

A contact is in no way required by copyright law, but for practical reasons it can be extremely useful.

It can happen that you need to reach the author and/or copyright holder of the code for legal or technical questions. Perhaps you need to ask how the code works, or have a fix you want to send their way. Perhaps you found a licensing issue and want to help them fix it (or ask for a separate license). In all of these cases, having a contact helps a lot.

As pretty much all of the internet still hinges on e-mail[10], the copyright holder’s e-mail address should be the first option. But anything really goes, as long as that contact is easily accessible and actually in use long-term.

Avoiding orphan works

For the legal geeks out there, a contact to the copyright holder mitigates the issue of orphan works.

There will be cases where the authorship is very dispersed or lies with a legal entity instead. In those cases, it might make more sense to provide a URL to either the project’s or the legal entity’s homepage and provide useful information there. If a project lists copyright holders in a file such as AUTHORS or CONTRIBUTORS.markdown, a permalink to that file (on the master branch) of the publicly available repository could also be a good URL option.

How to handle multitudes of authors?

Here are two examples of what you can write in case the project (e.g. Project X) has many authors and does not have a CAA or exclusive CLA in place to aggregate the copyright in a single entity:

© 2010 The Project X Authors <{$url}>

© 1998 Contributors to the Project X <{$url}>

What about public domain?

Public domain is tricky.

In general, the public domain consists of works whose copyright term has expired[11].

While in some jurisdictions (e.g. USA, UK) you can actually waive your copyright and dedicate your work to the public domain, in most jurisdictions (e.g. most EU member states) that is not possible.

This means that, depending on the applicable jurisdiction, even though an author wrote that they dedicate their work to the public domain, this may not meet the legal standard for it to actually happen – they retain the copyright in their own work.

Unsurprisingly, FOSS compliance officers and other people/projects who take copyright and licensing seriously are typically very wary of statements like “this is public domain”.

This can be mitigated in two ways:

- instead of some generic wording, when you want to dedicate something to public domain use a tried and tested public copyright waiver / public domain dedication with a very permissive license, such as CC0-1.0; and

- include your name and contact, if you are the author, in the SPDX-FileCopyrightText: field – 1) because in case of doubt that will associate you with your dedication to the public domain, and 2) in case anything is unclear, people have a way to contact you.

This makes sense to do even for files that you deem are not copyrightable, such as config files – if you mark them as above, everyone will know that you will not exercise your author’s rights (if they existed) in those files.

It may seem a bit of a hassle for something you just released for the public to use however they see fit, without people having to ask you for permission. I get that, I truly do! But do consider that if you already put so much effort into making this wonderful stuff and donating it to humanity at large, it would be a huge pity if, because of (silly) legal details, people in the end would not (be able to) use it at all.

What about minified JS?

Modern code minifiers/uglifiers tend to have an optional flag to preserve copyright and licensing info, even when they rip out all the other comments.

The copyright does not simply go away if you minify/uglify the code, so do make sure that you use a minifier that preserves both the copyright statement as well as the license (at least its SPDX Identifier) – or better yet, the whole REUSE-compliant header.

Transformations of code

Translations between different languages, compilations and other transformations are all exclusive rights of the copyright owner. So you need a valid license even for compiling and minifying.

What is wrong with “All rights reserved”?

Often you will see “all rights reserved” in copyright statements even in a FOSS project.

The cause of this, I suspect, again lies in copycat behaviour, where people tend to simply copy what they so often found on a (music) CD or in a book. Again, copyright law does not ask for this, even if you want to follow the fullest formal copyright statement rules.

But what it does bring, is confusion.

The statement “all rights reserved” obviously contradicts the FOSS license the same file is released under. The latter gives everyone the rights to use, study, share and improve the code, while the former states that the author reserves all of these rights to themself.

So, as those three words cause a contradiction, and do not bring anything useful to the table in the first place, you should not write them in vain.

Practical example

Imagine[12] a FOSS project that has a copy of the MIT license stored in its LICENSE file and (only) the following comment at the top of all its source code files:

# This file is Copyright (C) 1997 Master Hacker, all rights reserved.

Now imagine that someone simply copies one file from that repository/archive into their own work, which is under the AGPL-3.0-or-later license, and this is also what it says in the LICENSE file in the root of its own repository. And you, in turn, are using this second person’s codebase.

According to the information you have at hand:

- the copyright in the copied file is held by Master Hacker;

- apparently, Mr Hacker reserves all the rights they have under copyright law;

- if you felt like taking a risk, you could assume that the copied file is under the AGPL-3.0-or-later license – which is false, and could lead to copyright violation[13];

- if you wanted to play it safe, you could assume that you have no valid license to this file, so you decide to remove it and work around it – again false and much more work, but safe;

- you could wait until 2067 and hope it actually falls into the public domain by then – but who has time for that.

This example highlights how problematic the wording “all rights reserved” can be, even if there is a license text somewhere in the codebase.

This can be avoided by using a sane copyright statement (as described in this blog post) and including an unambiguous license ID. REUSE.software ties both of these together in an easy to follow specification.

hook out → hat tip to the TODO Group for giving me the push to finally finish this article and Carmen Bianca Bakker for some very welcome feedback

[2] A license is by definition “[t]he permission granted by competent authority to exercise a certain privilege that, without such authorization, would constitute an illegal act, a trespass or a tort.”

[3] Limitations and exceptions (or fair use/dealings) in copyright are extremely limited when it comes to software compared to more traditional media. Do not rely on them.

[4] In the USA, the copyright statement is often called a copyright notice. The two terms are used interchangeably.

[5] E.g. the 5 SLOC rule of thumb means that any contribution that is 5 lines or shorter, is (likely) too short to be deemed copyrightable, and therefore can be treated as un-copyrightable or as in public domain; and on the flip-side anything longer than 5 lines of code needs to be treated as copyrightable. This rule can pop up when a project has a relatively strict contribution agreement (a CLA or even CAA), but wants some leeway to accept short fix patches from drive-by contributors. The obvious problem with this is that on one hand someone can be very original even in 5 lines (think haiku), while one can also have pages and pages of absolute fluff or just plain raw factual numbers.

[6] This varies from jurisdiction to jurisdiction. The Berne Convention stipulates at least 50 years after the death of the author as the baseline. There are very few non-signatory states that have shorter terms, but the majority of countries have life + 70 years. The current longest copyright term is life + 100 years, in Mexico.

-

The only improvement is that it avoids messing up the Git/VCS history. ↩

-

Of course, I did not go through all of the copyright laws out there, but I checked a handful of them in different languages I understand, and this is the pattern I identified. If anyone has a more thorough analysis at hand, please reach out and I will happily include it. ↩

-

Just think about it: pretty much every time you create a new account somewhere online, you are asked for your e-mail address, and in general people rarely change their e-mail address. ↩

-

As stated before, in most jurisdictions that is 70 years after the death of the author. ↩

-

I suspect many readers can not only imagine one, but have seen many such projects before ;). ↩

-

Granted, MIT code embedded into AGPL-3.0-or-later code is less risky than vice versa. But simply imagine what it would be like the other way around … or with an even odder combination of licenses. ↩

Saturday, 21 March 2020

Using LibreDNS with dnscrypt-proxy

Using DNS over HTTPS (DoH) is fairly easy with the latest version of Firefox. Using LibreDNS takes just a few settings in your browser, see here. The LibreDNS site also has instructions for DNS over TLS (DoT).

In this blog post, I am going to show how to use dnscrypt-proxy as a local DNS proxy resolver that talks DoH to the LibreDNS noAds (tracking) endpoint. With this setup, your entire operating system uses this endpoint for all of its DNS lookups.

Disclaimer: This blog post is about dnscrypt-proxy version 2.

dnscrypt-proxy

dnscrypt-proxy 2 - A flexible DNS proxy, with support for modern encrypted DNS protocols such as DNSCrypt v2, DNS-over-HTTPS and Anonymized DNSCrypt.

Installation

sudo pacman -S dnscrypt-proxy

Verify Package

$ pacman -Qi dnscrypt-proxy

Name : dnscrypt-proxy

Version : 2.0.39-3

Description : DNS proxy, supporting encrypted DNS protocols such as DNSCrypt v2 and DNS-over-HTTPS

Architecture : x86_64

URL : https://dnscrypt.info

Licenses : custom:ISC

Groups : None

Provides : None

Depends On : glibc

Optional Deps : python-urllib3: for generate-domains-blacklist [installed]

Required By : None

Optional For : None

Conflicts With : None

Replaces : None

Installed Size : 12.13 MiB

Packager : David Runge <dvzrv@archlinux.org>

Build Date : Sat 07 Mar 2020 08:10:14 PM EET

Install Date : Fri 20 Mar 2020 10:46:56 PM EET

Install Reason : Explicitly installed

Install Script : Yes

Validated By : Signature

Disable systemd-resolved

if necessary
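One way to tell whether this is necessary is to check which process already holds port 53. The sketch below parses `ss -lntup` output in the format shown later in this post, with the local address in the fifth column and the process in a `users:(("name",...))` field. `port53_owners` is a hypothetical helper name and is not part of any package.

```sh
# Hypothetical helper: read `ss -lntup` output on stdin and print the
# distinct names of processes that hold a socket on port 53 (DNS).
port53_owners() {
    # Keep rows whose local address (column 5) ends in ":53", then extract
    # the first quoted process name from users:(("name",pid=...,fd=...)).
    awk '$5 ~ /:53$/ && match($0, /users:\(\("[^"]+"/) {
        print substr($0, RSTART + 9, RLENGTH - 10)
    }' | sort -u
}
```

Usage: `sudo ss -lntup '( sport = :domain )' | port53_owners`. If systemd-resolved shows up, continue with the steps below. Once dnscrypt-proxy is running, it should be the only name printed.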

$ ps -e fuwww | grep re[s]olv

systemd+ 525 0.0 0.1 30944 21804 ? Ss 10:00 0:01 /usr/lib/systemd/systemd-resolved

$ sudo systemctl stop systemd-resolved.service

$ sudo systemctl disable systemd-resolved.service

Removed /etc/systemd/system/multi-user.target.wants/systemd-resolved.service.

Removed /etc/systemd/system/dbus-org.freedesktop.resolve1.service.

Configuration

Now it is time to configure dnscrypt-proxy to use LibreDNS.
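If you prefer not to edit the configuration file interactively, the `server_names` line can also be set with `sed`. The following is a minimal sketch. It assumes GNU sed, which provides `-i` and the `0,/regexp/` address form. `set_server_names` is a hypothetical helper that changes only the first `server_names` line it finds, whether or not that line is commented out.

```sh
# Hypothetical helper (not part of dnscrypt-proxy): set the first
# server_names line of a dnscrypt-proxy TOML file, commented out or not.
# Assumes GNU sed for -i and the 0,/regexp/ address form.
set_server_names() {
    local config=$1   # e.g. /etc/dnscrypt-proxy/dnscrypt-proxy.toml
    local name=$2     # e.g. libredns-noads
    # Keep a copy of the original configuration next to it.
    cp -- "$config" "$config.bak" || return 1
    # Rewrite only the first matching line, so no duplicate TOML key appears.
    sed -i -E "0,/^#? *server_names *=/s/^#? *server_names *=.*/server_names = ['$name']/" "$config"
    # Succeed only if the expected line is now present.
    grep -qF "server_names = ['$name']" "$config"
}
```

From a root shell, `set_server_names /etc/dnscrypt-proxy/dnscrypt-proxy.toml libredns-noads` should leave the same line in the file as the manual edit.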

sudo vim /etc/dnscrypt-proxy/dnscrypt-proxy.toml

At the top of the file there is a server_names setting; set it to the LibreDNS noAds endpoint:

server_names = ['libredns-noads']

Resolv Conf

We can now change our resolv.conf to use our local IP address.
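Writing the file with `printf` avoids depending on how a particular shell's `echo` handles `\n`. It is also worth checking whether /etc/resolv.conf is a symlink. On systems set up for systemd-resolved, it often points at a file under /run/systemd/resolve/. Writing through that link would modify the runtime file, which may not survive a reboot. The following is a minimal sketch under those assumptions. `write_resolv_conf` is a hypothetical helper name.

```sh
# Hypothetical helper: point a resolv.conf-style file at the local
# dnscrypt-proxy listener on 127.0.0.1.
write_resolv_conf() {
    local target=${1:-/etc/resolv.conf}
    # If the target is a symlink (e.g. to a systemd-resolved stub file),
    # replace it with a regular file instead of writing through the link.
    if [ -L "$target" ]; then
        rm -f -- "$target"
    fi
    # printf interprets \n portably, unlike `echo -e` in some shells.
    printf 'nameserver 127.0.0.1\noptions edns0 single-request-reopen\n' > "$target"
}
```

Run it from a root shell (for example after `sudo -i`) as `write_resolv_conf` to update /etc/resolv.conf, or pass another path to try it out first.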

echo -e "nameserver 127.0.0.1\noptions edns0 single-request-reopen" | sudo tee /etc/resolv.conf

$ cat /etc/resolv.conf

nameserver 127.0.0.1

options edns0 single-request-reopen

Systemd

Start and enable the dnscrypt-proxy service:

sudo systemctl start dnscrypt-proxy.service

sudo systemctl enable dnscrypt-proxy.service

$ sudo ss -lntup '( sport = :domain )'

Netid State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

udp UNCONN 0 0 127.0.0.1:53 0.0.0.0:* users:(("dnscrypt-proxy",pid=55795,fd=6))

tcp LISTEN 0 4096 127.0.0.1:53 0.0.0.0:* users:(("dnscrypt-proxy",pid=55795,fd=7))

Verify

$ dnscrypt-proxy -config /etc/dnscrypt-proxy/dnscrypt-proxy.toml -list

libredns-noads

$ dnscrypt-proxy -config /etc/dnscrypt-proxy/dnscrypt-proxy.toml -resolve balaskas.gr

Resolving [balaskas.gr]

Domain exists: yes, 2 name servers found

Canonical name: balaskas.gr.

IP addresses: 158.255.214.14, 2a03:f80:49:158:255:214:14:80

TXT records: v=spf1 ip4:158.255.214.14/31 ip6:2a03:f80:49:158:255:214:14:0/112 -all

Resolver IP: 116.202.176.26 (libredns.gr.)

Dig

Asking our local DNS (proxy):

dig @localhost balaskas.gr

; <<>> DiG 9.16.1 <<>> @localhost balaskas.gr

; (2 servers found)

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 2449

;; flags: qr rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 512

;; QUESTION SECTION:

;balaskas.gr. IN A

;; ANSWER SECTION:

balaskas.gr. 7167 IN A 158.255.214.14

;; Query time: 0 msec

;; SERVER: 127.0.0.1#53(127.0.0.1)

;; WHEN: Sat Mar 21 19:48:53 EET 2020

;; MSG SIZE rcvd: 56

That’s it!

Your system is now using the LibreDNS DoH noAds endpoint.

Manual Steps

If your operating system does not yet ship dnscrypt-proxy version 2, then:

Latest version

You can always download the latest version from GitHub:

To list the files in the release archive:

curl -sLo - $(curl -sL https://api.github.com/repos/DNSCrypt/dnscrypt-proxy/releases/latest | jq -r '.assets[].browser_download_url | select( contains("linux_x86_64"))') | tar tzf -

linux-x86_64/

linux-x86_64/dnscrypt-proxy

linux-x86_64/LICENSE

linux-x86_64/example-cloaking-rules.txt

linux-x86_64/example-dnscrypt-proxy.toml

linux-x86_64/example-blacklist.txt

linux-x86_64/example-whitelist.txt

linux-x86_64/localhost.pem

linux-x86_64/example-ip-blacklist.txt

linux-x86_64/example-forwarding-rules.txt

To extract the files:

$ curl -sLo - $(curl -sL https://api.github.com/repos/DNSCrypt/dnscrypt-proxy/releases/latest | jq -r '.assets[].browser_download_url | select( contains("linux_x86_64"))') | tar xzf -

$ ls -l linux-x86_64/

total 9932

-rwxr-xr-x 1 ebal ebal 10117120 Μαρ 21 13:56 dnscrypt-proxy

-rw-r--r-- 1 ebal ebal 897 Μαρ 21 13:50 example-blacklist.txt

-rw-r--r-- 1 ebal ebal 1277 Μαρ 21 13:50 example-cloaking-rules.txt

-rw-r--r-- 1 ebal ebal 20965 Μαρ 21 13:50 example-dnscrypt-proxy.toml

-rw-r--r-- 1 ebal ebal 970 Μαρ 21 13:50 example-forwarding-rules.txt

-rw-r--r-- 1 ebal ebal 439 Μαρ 21 13:50 example-ip-blacklist.txt

-rw-r--r-- 1 ebal ebal 743 Μαρ 21 13:50 example-whitelist.txt

-rw-r--r-- 1 ebal ebal 823 Μαρ 21 13:50 LICENSE

-rw-r--r-- 1 ebal ebal 2807 Μαρ 21 13:50 localhost.pem

$ cd linux-x86_64/

Prepare the configuration

$ cp example-dnscrypt-proxy.toml dnscrypt-proxy.toml

$

$ vim dnscrypt-proxy.toml

At the top of the file there is a server_names setting:

server_names = ['libredns-noads']

$ ./dnscrypt-proxy -config dnscrypt-proxy.toml --list

[2020-03-21 19:27:20] [NOTICE] dnscrypt-proxy 2.0.40

[2020-03-21 19:27:20] [NOTICE] Network connectivity detected

[2020-03-21 19:27:22] [NOTICE] Source [public-resolvers] loaded

[2020-03-21 19:27:23] [NOTICE] Source [relays] loaded

libredns-noads

Run as root

$ sudo ./dnscrypt-proxy -config ./dnscrypt-proxy.toml

[sudo] password for ebal: *******

[2020-03-21 20:11:04] [NOTICE] dnscrypt-proxy 2.0.40

[2020-03-21 20:11:04] [NOTICE] Network connectivity detected

[2020-03-21 20:11:04] [NOTICE] Source [public-resolvers] loaded

[2020-03-21 20:11:04] [NOTICE] Source [relays] loaded

[2020-03-21 20:11:04] [NOTICE] Firefox workaround initialized

[2020-03-21 20:11:04] [NOTICE] Now listening to 127.0.0.1:53 [UDP]

[2020-03-21 20:11:04] [NOTICE] Now listening to 127.0.0.1:53 [TCP]

[2020-03-21 20:11:04] [NOTICE] [libredns-noads] OK (DoH) - rtt: 65ms

[2020-03-21 20:11:04] [NOTICE] Server with the lowest initial latency: libredns-noads (rtt: 65ms)

[2020-03-21 20:11:04] [NOTICE] dnscrypt-proxy is ready - live servers: 1

Check DNS

Interestingly enough, the first query takes 295 msec and the second one zero, presumably because the answer is then served from dnscrypt-proxy’s cache!
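To compare the two timings without scanning the full `dig` output, you can extract the `;; Query time:` line with a small helper. `dig_query_time` is a hypothetical name. The sketch assumes dig prints that line in the format shown in the output below.

```sh
# Hypothetical helper: read dig output on stdin and print the number from
# the ";; Query time: N msec" line (N is in milliseconds).
dig_query_time() {
    awk '/^;; Query time:/ { print $4 }'
}
```

For example, `for i in 1 2; do dig libredns.gr | dig_query_time; done` prints the two timings one per line. If the answer is cached locally, the second value should be much lower.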

$ dig libredns.gr

; <<>> DiG 9.11.3-1ubuntu1.11-Ubuntu <<>> libredns.gr

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 53609

;; flags: qr rd ra; QUERY: 1, ANSWER: 2, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 512

;; QUESTION SECTION:

;libredns.gr. IN A

;; ANSWER SECTION:

libredns.gr. 2399 IN A 116.202.176.26

;; Query time: 295 msec

;; SERVER: 127.0.0.1#53(127.0.0.1)

;; WHEN: Sat Mar 21 20:12:52 EET 2020

;; MSG SIZE rcvd: 72

$ dig libredns.gr

; <<>> DiG 9.11.3-1ubuntu1.11-Ubuntu <<>> libredns.gr

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 31159

;; flags: qr rd ra; QUERY: 1, ANSWER: 2, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 512

;; QUESTION SECTION:

;libredns.gr. IN A

;; ANSWER SECTION:

libredns.gr. 2395 IN A 116.202.176.26

;; Query time: 0 msec

;; SERVER: 127.0.0.1#53(127.0.0.1)

;; WHEN: Sat Mar 21 20:12:56 EET 2020

;; MSG SIZE rcvd: 72

That’s it

Thursday, 19 March 2020

Tools I use daily the Win10 edition

Almost three (3) years ago I wrote an article about the Tools I use daily. But for the last 18 months (or so) I have been partially using Windows 10 due to my new job role, so I would like to write an updated version of that article.

I’ll try to use the same structure as the previous article for comparison. Keep in mind this is a nine-to-five (work-related) setup. So here it goes.

NOTICE: the beer is just for decor ;)

Operating System

I use Win10 as my primary operating system on my work laptop. There are a couple of impediments that do not work on a Linux distribution, but I am not going to bother you with them (it’s Webex and some internal Internet Explorer-only sites).

We used to use Webex as our primary communication tool. We share our screens and keep our video cameras on, so that everybody can see each other. Working with remote teams, it’s kind of nice to see the faces of your coworkers. A lot of meetings are integrated with the company’s Outlook. I use OWA (webmail) as an alternative, but in practice it is still difficult to use both of them with a Linux desktop.

We successfully switched to Slack for text communication, video calls and screen sharing. This choice gave us a boost in productivity, as we now use Slack calls daily to align with each other. Webex is still in the mix, though. The company now uses a newer Webex version with better browser support, so that is a plus. It’s not always easy to get everybody a Webex license, but as long as we are using Slack it is okay. The only problem with Slack on Linux is that, when working with multiple monitors, you cannot choose which monitor to share.

I have considered using a VM (virtual machine), but a Win10 VM needs more than 4 GB of RAM and a couple of CPUs just to boot up. That would mean reducing the resources available on my work laptop for half the day, every day. So for the time being I am staying with Win10 as the primary operating system. I still have to use the Windows VM for some other internal work, but only for a limited time.

Desktop

Default Win10 desktop

I use these open source tools daily:

- AutoHotkey for keyboard shortcuts (I like switching languages by pressing Caps Lock)

- Ditto as clipboard manager

- Greenshot as screenshot tool

and from time to time, I also use:

- X-Mouse Controls (window focus with mouse)

- Always on Top to Keep Any Window Visible Always

- Plumb a Tiling Window Manager

Except Plumb, everything else is open source!

So I am trying to have the same desktop experience as on my Linux desktop; for example, my language switch is Caps Lock (via AutoHotkey), so I don’t even think about it.

Disk / Filesystem

Default Win10 filesystem with BitLocker. Every hardware change locks the entire system. In the past this happened twice after a Windows device firmware upgrade. Twice!

Dropbox as cloud sync software, with an EncFSMP partition, and Syncthing for securely syncing personal files.

(same setup as on Linux, except that BitLocker is LUKS there)

OWA for calendar purposes and … still Thunderbird as my primary mail reader.

Thunderbird 68.6.0 AddOns:

- TbSync

- Provider for CalDAV & CardDAV

- ExQuilla for Exchange

- CompactHeader

- Toggle Headers

- ConfigDate

- Duplicate Contacts Manager

- Remove Duplicates

- Lightning

- Expression Search / GMailUI

- Mail Redirect

- Markdown Here

- Open With

(same setup as on Linux)

Shell