Quantopian Overview

Quantopian provides you with everything you need to write a high-quality algorithmic trading strategy. Here, you can do your research using a variety of data sources, test your strategy over historical data, and then test it going forward with live data. Top-performing algorithms will be offered investment allocations, and those algorithm authors will share in the profits.

Important Concepts

The Quantopian platform consists of several linked components. The Quantopian Research platform is an IPython notebook environment that is used for research and data analysis during algorithm creation. It is also used for analyzing the past performance of algorithms. The IDE (interactive development environment) is used for writing algorithms and kicking off backtests using historical data. Algorithms can also be simulated using live data, also known as paper trading. All of these tools use the data provided by Quantopian: US equity price and volumes (minute frequency), US futures price and volumes (minute frequency), US equity corporate fundamentals, and dozens of other integrated data sources.

This section covers each of these components and concepts in greater detail.

Data Sources

We have minute-bar historical data for US equities and US futures since 2002 up to the most recently completed trading day (data uploaded nightly).

A minute bar is a summary of an asset's trading activity for a one-minute period, and gives you the opening price, closing price, high price, low price, and trading volume during that minute. All of our datasets are point-in-time, which is important for backtest accuracy. Since our event-based system sends trading events to you serially, your algorithm receives accurate historical data without any bias towards the present.
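As a rough illustration of what a minute bar summarizes, the sketch below collapses a list of trades into one bar. The `MinuteBar` class and `build_minute_bar` helper are hypothetical names made up for this example; they are not part of the Quantopian API, and the trade values are invented.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class MinuteBar:
    open: float
    high: float
    low: float
    close: float
    volume: int


def build_minute_bar(trades: List[Tuple[float, int]]) -> MinuteBar:
    """Summarize (price, size) trades, given in time order, for one minute."""
    if not trades:
        raise ValueError("no trades in this minute")
    prices = [price for price, _ in trades]
    return MinuteBar(
        open=prices[0],      # first trade of the minute
        high=max(prices),
        low=min(prices),
        close=prices[-1],    # last trade of the minute
        volume=sum(size for _, size in trades),
    )


# Invented trades for a single minute: (price, shares)
trades = [(10.00, 100), (10.05, 200), (9.98, 50), (10.02, 150)]
bar = build_minute_bar(trades)
print(bar)
```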

During algorithm simulation, Quantopian uses as-traded prices. That means that when your algorithm asks for a price of a specific asset, it gets the price of that asset at the time of the simulation. For futures, as-traded prices are derived from electronic trade data.

When your algorithm calls for historical equity price or volume data, it is adjusted for splits, mergers, and dividends as of the current simulation date. In other words, if your algorithm asks for a historical window of prices, and there is a split in the middle of that window, the first part of that window will be adjusted for the split. This adjustment is done so that your algorithm can do meaningful calculations using the values in the window.
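A minimal sketch of the idea, assuming a single 2-for-1 split and made-up prices. The `adjust_for_split` helper is hypothetical and only handles splits; the adjustment Quantopian applies also covers mergers and dividends.

```python
def adjust_for_split(prices, split_index, ratio):
    """Divide every price before split_index by ratio (2.0 for a 2-for-1 split)."""
    return [p / ratio if i < split_index else p for i, p in enumerate(prices)]


# Invented as-traded closes; the 2-for-1 split takes effect at index 2
as_traded = [100.0, 102.0, 51.5, 52.0]
adjusted = adjust_for_split(as_traded, split_index=2, ratio=2.0)
print(adjusted)
```

Computed on the as-traded values, the change from the first to the last price looks like a 48% loss. Computed on the adjusted window, it is a 4% gain, which is what a shareholder actually experienced.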

Quantopian also provides access to fundamental data, free of charge. The data, from Morningstar, consists of over 600 metrics measuring the financial performance of companies and is derived from their public filings. The most common use of this data is to filter down to a subset of securities for use in an algorithm. It is also common to use the fundamentals metrics within the trading logic of the algorithm.

In addition to pricing and fundamental data, we offer a host of partner datasets. Combining signals found in a wide variety of datasets is a powerful way to create a high-quality algorithm. The datasets available include market-wide indicators like sentiment analysis, as well as industry-specific indicators like clinical trial data.

Each dataset has a limited set of data available for free; full datasets are available for purchase, a la carte, for a monthly fee. The complete list of datasets is available here.

Our US equities database includes all stocks and ETFs that traded since 2002, even ones that are no longer traded. This is very important because it helps you avoid survivorship bias in your algorithm. Databases that omit securities that are no longer traded ignore bankruptcies and other important events, and lead to false optimism about an algorithm. For example, LEH (Lehman Brothers) is a security in which your algorithm can trade in 2008, even though the company no longer exists today; Lehman's bankruptcy was a major event that affected many algorithms at the time.

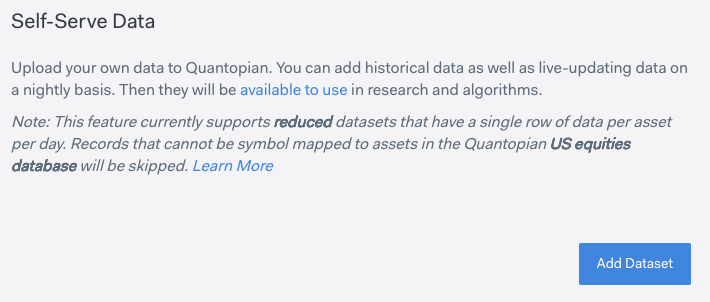

Self-Serve Data allows you to upload your own time-series csv data to Quantopian. In order to accurately represent your data in pipeline and avoid lookahead bias, your data will be collected, stored, and surfaced in a point-in-time nature similar to Quantopian Partner Data.

Our US futures database includes 72 futures. The list includes commodity, interest rate, equity, and currency futures. 24-hour data is available for these futures, 5 days a week (Sunday at 6pm to Friday at 6pm ET). Some of these futures have stopped trading since 2002, but are available in research and algorithms to help you avoid survivorship bias.

There are many other financial data sources we'd like to incorporate, such as options and non-US markets. What kind of data sources would you like us to have? Let us know.

Research Environment

The research environment is a customized IPython server. With this open-ended platform, you are free to explore new investing ideas. For most people, the hardest part of writing a good algorithm is finding the idea or pricing "edge." The research environment is the place to do that kind of research and analysis. In the research environment you have access to all of Quantopian's data: price, volume, corporate fundamentals, and partner datasets including sentiment data, earnings calendars, and more.

The research environment is also useful for analyzing the performance of backtests and live trading algorithms. You can load the result of a backtest or live algorithm into research, analyze results, and compare to other algorithms' performances.

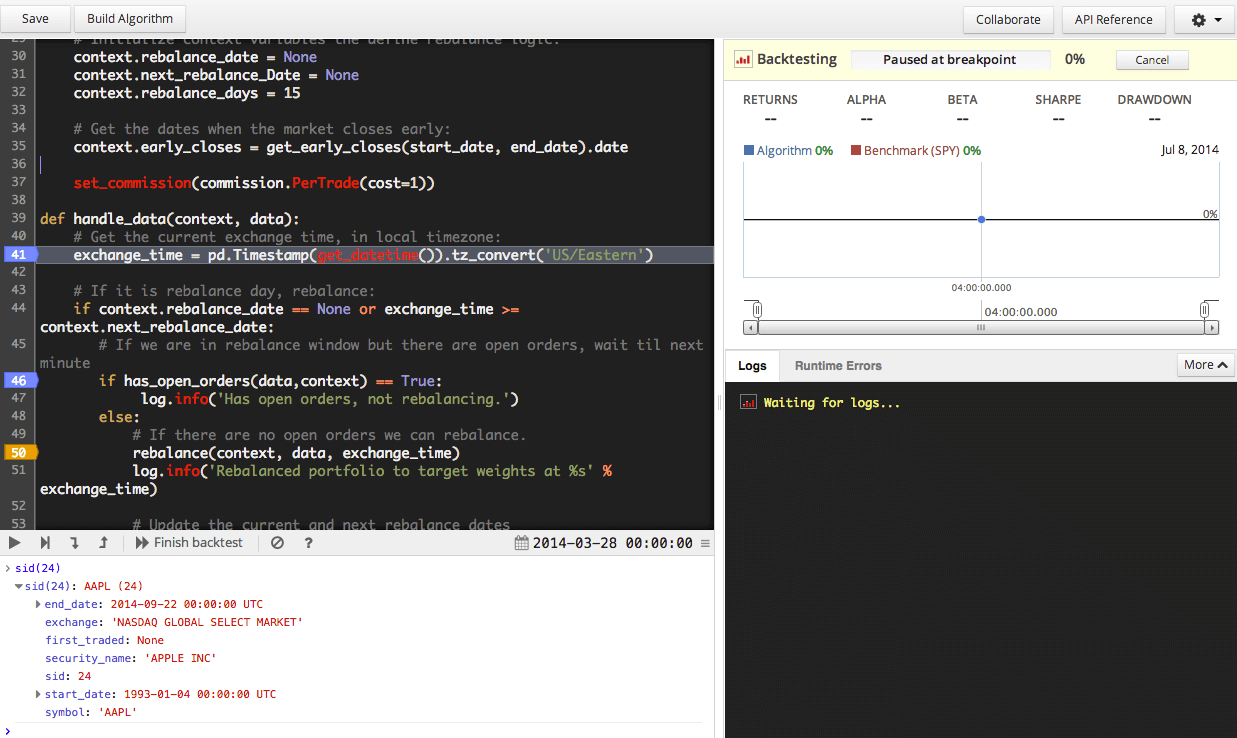

IDE and Backtesting

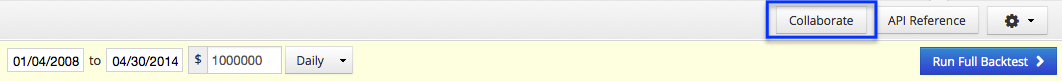

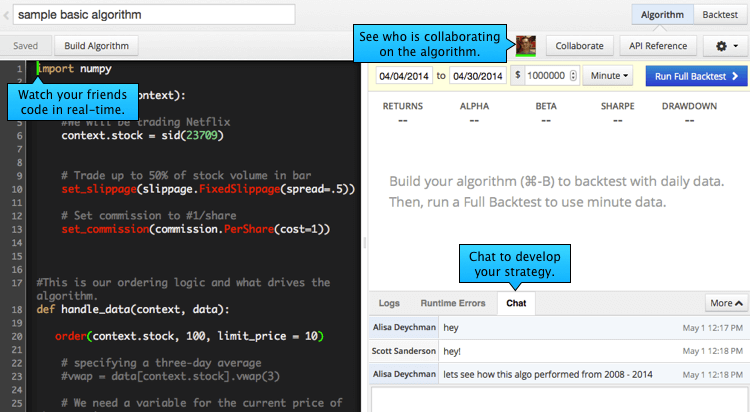

The IDE (interactive development environment) is where you write your algorithm. It's also where you kick off backtests. Pressing "Build" will check your code for syntax errors and run a backtest right there in the IDE. Pressing "Full Backtest" runs a backtest that is saved and accessible for future analysis.

The IDE is covered in more detail later in this help document.

Paper Trading

Paper trading is also sometimes known as walk-forward, or out-of-sample testing. In paper trading, your algorithm gets live market data (actually, 15-minute delayed data) and 'trades' against the live data with a simulated portfolio. This is a good test for any algorithm. If you inadvertently overfit during your algorithm development process, paper trading will often reveal the problem. You can simulate trades for free, with no risks, against the current market on Quantopian.

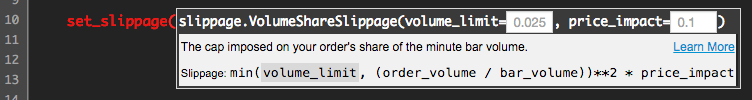

Quantopian paper trading uses the same order-fulfillment logic as a regular backtest, including the slippage model.

Before you can paper trade your algorithm, you must run a full backtest. Go to your algorithm and click the 'Full Backtest' button. Once that backtest is complete, you will see a 'Live Trade' button. When you start paper trading, you will be prompted to specify the amount of money used in the strategy. Note that this cash amount is not enforced - it is used solely to calculate your algorithm's returns.

Paper trading is currently only available for US equity trading.

Privacy and Security

We take privacy and security extremely seriously. All trading algorithms and backtest results you generate inside Quantopian are owned by you. You can, of course, choose to share your intellectual property with the community, or choose to grant Quantopian permission to see your code for troubleshooting. We will not access your algorithm without your permission except for unusual cases, such as protecting the platform's security.

Our platform is run on a cloud infrastructure.

Specifically, our security layers include:

- Never storing your password in plaintext in our database

- SSL encryption for the Quantopian application

- Secure websocket communication for the backtest data

- Encrypting all trading algorithms and other proprietary information before writing them to our database

We want to be very transparent about our security measures. Don't hesitate to email us with any questions or concerns.

Two-Factor Authentication

Two-factor authentication (2FA) is a security mechanism to protect your account. When 2FA is enabled, your account requires both a password and an authentication code you generate on your smartphone.

With 2FA enabled, the only way someone can sign into your account is if they know both your password and have access to your phone (or backup codes). We strongly urge you to turn on 2FA to protect the safety of your account.

How does it work?

Once you enable 2FA, you will still enter your password as you normally do when logging into Quantopian. Then you will be asked to enter an authentication code. This code can be either generated by the Google Authenticator app on your smartphone, or sent as a text message (SMS).

If you lose your phone, you will need to use a backup code to log into your account. It's very important that you get your backup code as soon as you enable 2FA. If you don't have your phone, and you don't have your backup code, you won't be able to log in. Make sure to save your backup code in a safe place!

You can set up 2FA via SMS or Google Authenticator, or both. These methods require a smartphone and you can switch between them at any time. To use the SMS option you need a US-based phone number, beginning with the country code +1.

To configure 2FA via SMS:

- Go to Account Settings and click 'Configure via SMS'.

- Enter your phone number. Note: Only US-based phone numbers are allowed for the SMS option. Standard messaging rates apply.

- You'll receive a 5- or 6-digit security token on your mobile. Enter this code in the pop-up screen to verify your device. Once verified, you'll need to provide the security token sent via SMS every time you log in to Quantopian.

- Download your backup login code in case you lose your mobile device. Without your phone and backup code, you will not be able to access your Quantopian account.

To configure 2FA via Google Authenticator:

- Go to Two-Factor Auth and click 'Configure Google Authenticator'.

- If you don’t have Google Authenticator, go to your App Store and download the free app. Once installed, open the app, click 'Add an Account', then 'Scan a barcode'.

- Hold the app up to your computer screen and scan the QR code. Enter the Quantopian security token from the Google Authenticator app.

- Copy your backup login code in case you lose your mobile device. Without your phone and backup code, you will not be able to access your Quantopian account.

Backup login code

If you lose your phone and can’t log in via SMS or Google Authenticator, your last resort is to log in using your backup login code. Without your phone and this code, you will not be able to log in to your Quantopian account.

To save your backup code:

- Go to https://www.quantopian.com/account?page=twofactor

- Click 'View backup login code'.

- Enter your security token received via SMS or on your Google Authenticator app.

- Copy your backup code to a safe location.

Developing in the IDE

Quantopian's Python IDE is where you develop your trading ideas. The standard features (tab completion, autosave, fullscreen, font size, color theme) help make your experience as smooth as possible.

Your work is automatically saved every 10 seconds, and you can click Save to manually save at any time. We'll also warn you if you navigate away from the IDE page while there's unsaved content. Tab completion is also available in the IDE, and can be enabled from the settings button on the top right.

Overview

We have a simple but powerful API for you to use:

initialize(context) is a required setup method for initializing state or other bookkeeping. This method is called only once at the beginning of your algorithm. context is an augmented Python dictionary used for maintaining state during your backtest or live trading session. context should be used instead of global variables in the algorithm. Properties can be accessed using dot notation (context.some_property).

handle_data(context, data) is an optional method that is called every minute. Your algorithm uses data to get individual prices and windows of prices for one or more assets. handle_data(context, data) should rarely be used; most algorithm logic should run in scheduled functions.

before_trading_start(context, data) is an optional method called once a day, before the market opens. Your algorithm can select securities to trade using Pipeline, or do other once-per-day calculations.

Your algorithm can have other Python methods. For example, you might have a scheduled function call a utility method to do some calculations.
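The call order described above can be pictured with a toy driver. In this sketch, `Context` and `run_simulation` are hypothetical stand-ins written for illustration; on Quantopian the platform supplies `context` and `data` and calls your functions for you, so only the three algorithm functions would appear in real code.

```python
class Context(dict):
    """Hypothetical stand-in for context: a dict that also allows dot access."""

    def __getattr__(self, name):
        try:
            return self[name]
        except KeyError:
            raise AttributeError(name)

    def __setattr__(self, name, value):
        self[name] = value


def initialize(context):
    # Called once: set up state on context instead of using globals
    context.days_seen = 0
    context.minutes_seen = 0


def before_trading_start(context, data):
    # Called once per day, before the market opens
    context.days_seen += 1


def handle_data(context, data):
    # Called once per minute
    context.minutes_seen += 1


def run_simulation(days, minutes_per_day):
    """Toy driver that mimics the call order; not how the platform is implemented."""
    context = Context()
    initialize(context)
    for _ in range(days):
        before_trading_start(context, data=None)
        for _ in range(minutes_per_day):
            handle_data(context, data=None)
    return context


result = run_simulation(days=2, minutes_per_day=390)
print(result.days_seen, result.minutes_seen)
```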

For more information, jump to the API Documentation section below. These core functions are also covered in Core Functions in the Getting Started Tutorial.

Manual Asset Lookup

Equities

If you want to manually select an equity, you can use the symbol function to look up a security by its ticker or company name. Using the symbol method brings up a search box that shows you the top results for your query.

To reference multiple securities in your algorithm, call the symbols function to create a list of securities. Note the pluralization! The function can accept a list of up to 500 securities and its parameters are not case sensitive.

Sometimes, tickers are reused over time as companies delist and new ones begin trading. For example, G used to refer to Gillette but now refers to Genpact. If a ticker was reused by multiple companies, use set_symbol_lookup_date('YYYY-MM-DD') to specify what date to use when resolving conflicts. This date needs to be set before any calls to symbol or symbols.
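The effect of a lookup date can be sketched with a small table of listing periods. Everything here is an assumption made for illustration: the `LISTINGS` table, its placeholder dates, and the `resolve` helper are not Quantopian data or API, and the real resolution logic may differ.

```python
from datetime import date

# Placeholder listing periods chosen for illustration only
LISTINGS = {
    "G": [
        ("Gillette", date(2002, 1, 1), date(2005, 12, 31)),
        ("Genpact", date(2007, 1, 1), date.max),
    ],
}


def resolve(ticker, lookup_date):
    """Return the company that used `ticker` on `lookup_date`."""
    for name, start, end in LISTINGS.get(ticker.upper(), []):
        if start <= lookup_date <= end:
            return name
    raise LookupError("no listing for %s on %s" % (ticker, lookup_date))


print(resolve("G", date(2004, 6, 1)))
print(resolve("g", date(2015, 1, 1)))
```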

Another option to manually enter securities is to use the sid function. All securities have a unique security id (SID) in our system. Since symbols may be reused among exchanges, this prevents any confusion and verifies you are calling the desired security. You can use our sid method to look up a security by its id, symbol, or name.

In other words, sid(24) is equivalent to symbol('AAPL') or Apple.

Quantopian's backtester makes a best effort to automatically adjust the backtest's start or end dates to accommodate the assets that are being used. For example, if you're trying to run a backtest with Tesla in 2004, the backtest will suggest you begin on June 28, 2010, the first day the security traded. This ability is significantly decreased when using symbol instead of sid.

The symbol and symbols methods accept only string literals as parameters. The sid method accepts only an integer literal as a parameter. A static analysis is run on the code to quickly retrieve data needed for the backtest.

Manually looking up equities is covered in Referencing Securities in the Getting Started Tutorial.

Futures

To manually select a future in your algorithm, you probably want to reference the corresponding continuous future. Continuous futures are objects that stitch together consecutive contracts of a particular underlying asset. The continuous_future function can be used to reference a continuous future. The continuous_future function has auto-complete similar to symbol.

Continuous futures maintain a reference to the active contract of an underlying asset, but cannot themselves be traded. To trade a particular future contract, you can get the current contract of a continuous future. This is covered in the Futures Tutorial.

Futures contracts can be looked up manually with future_symbol by passing a string for the particular contract (e.g. future_symbol('CLF16')). This is uncommon outside of research given the short lifetime of contracts.

Trading Calendars

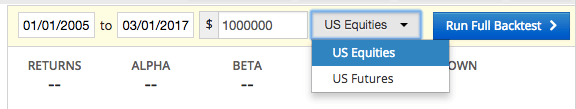

Before running an algorithm, you will need to select which calendar you want to trade on. Currently, there are two calendars: the US Equities Calendar and the US Futures Calendar.

The US Equity calendar runs weekdays from 9:30AM-4PM Eastern Time and respects the US stock market holiday schedule, including partial days. Futures trading is not supported on the US Equity calendar.

The US Futures calendar runs weekdays from 6:30AM-5PM Eastern Time and respects the futures exchange holidays observed by all US futures (US New Years Day, Good Friday, Christmas). Trading futures and equities are both supported on the US Futures calendar.

To choose a calendar, select it from the dropdown in the IDE before running a backtest.

Scheduling Functions

Your algorithm will run much faster if it doesn't have to do work every single minute. We provide the schedule_function method that lets your algorithm specify when methods are run by using date and/or time rules. All scheduling must be done from within the initialize method.

    def initialize(context):
        schedule_function(
            func=myfunc,
            date_rule=date_rules.every_day(),
            time_rule=time_rules.market_close(minutes=1),
            half_days=True
        )

For a condensed introduction to scheduling functions, check out the Scheduling Functions lesson in the Getting Started Tutorial.

Monthly modes (month_start and month_end) accept a days_offset parameter to offset the function execution by a specific number of trading days from the beginning and end of the month, respectively. In monthly mode, all day calculations are done using trading days for your selected trading calendar. If the offset exceeds the number of trading days in a month, the function isn't run during that month.

Weekly modes (week_start and week_end) also accept a days_offset parameter. If the function execution is scheduled for a market holiday and there is at least one more trading day in the week, the function will run on the next trading day. If there are no more trading days in the week, the function is not run during that week.
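The monthly offset rule can be sketched in a few lines. This is an illustration only: `sample_sessions`, `month_start_run_day`, and `month_end_run_day` are hypothetical helpers, and the calendar below simply treats July 2016 weekdays other than July 4 as trading days rather than using a real trading calendar.

```python
from datetime import date, timedelta


def sample_sessions():
    """Weekdays in July 2016, minus the July 4 holiday (a simplified calendar)."""
    holidays = {date(2016, 7, 4)}
    day = date(2016, 7, 1)
    sessions = []
    while day.month == 7:
        if day.weekday() < 5 and day not in holidays:
            sessions.append(day)
        day += timedelta(days=1)
    return sessions


def month_start_run_day(sessions, days_offset=0):
    """Trading day days_offset sessions after the first one, or None if too few."""
    if days_offset >= len(sessions):
        return None
    return sessions[days_offset]


def month_end_run_day(sessions, days_offset=0):
    """Trading day days_offset sessions before the last one, or None if too few."""
    if days_offset >= len(sessions):
        return None
    return sessions[-1 - days_offset]


sessions = sample_sessions()
print(month_start_run_day(sessions, days_offset=1))   # skips the weekend and holiday
print(month_end_run_day(sessions, days_offset=2))
print(month_start_run_day(sessions, days_offset=25))  # more than the month holds
```

Because offsets count trading days, an offset of 1 from the start of this month lands on July 5, not on July 2 or July 4.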

Specific times for relative rules such as 'market open' or 'market close' will depend on the calendar that is selected before running a backtest. If you would like to schedule a function according to the time rules of a different calendar, you can specify a calendar argument. To specify a calendar, you must import calendars from the quantopian.algorithm module.

If calendars.US_EQUITIES is used, market open is usually 9:30AM ET and market close is usually 4PM ET.

If calendars.US_FUTURES is used, market open is at 6:30AM ET and market close is at 5PM ET.

    from quantopian.algorithm import calendars

    def initialize(context):
        schedule_function(
            func=myfunc,
            date_rule=date_rules.every_day(),
            time_rule=time_rules.market_close(minutes=1),
            calendar=calendars.US_EQUITIES
        )

To schedule a function more frequently, you can use multiple schedule_function calls. For instance, you can schedule a function to run every 30 minutes, every three days, or at the beginning of the day and the middle of the day.

Scheduled functions are not asynchronous. If two scheduled functions are supposed to run at the same time, they will happen sequentially, in the order in which they were created.

Note: The handle_data function runs before any functions scheduled to run in the same minute. Also, scheduled functions have the same timeout restrictions as handle_data, i.e., the total amount of time taken up by handle_data and any scheduled functions for the same minute can't exceed 50 seconds.

Below are the options for date rules and time rules for a function. For more details, go to the API overview.

Date Rules

Every Day

Runs the function once per trading day. The example below calls myfunc every morning, 15 minutes after the market opens. The exact time will depend on the calendar you are using. For the US Equity calendar, the first minute runs from 9:30-9:31AM Eastern Time. On the US Futures calendar, the first minute usually runs from 6:30-6:31AM ET.

    def initialize(context):
        # Algorithm will call myfunc every day 15 minutes after the market opens
        schedule_function(
            myfunc,
            date_rules.every_day(),
            time_rules.market_open(minutes=15)
        )

    def myfunc(context, data):
        pass

Week Start

Runs the function once per calendar week. By default, the function runs on the first trading day of the week. You can add an optional offset from the start of the week to choose another day. The example below calls myfunc once per week, on the second day of the week, 3 hours and 10 minutes after the market opens.

    def initialize(context):
        # Algorithm will call myfunc once per week, on the second day of the week,
        # 3 hours and 10 minutes after the market opens
        schedule_function(
            myfunc,
            date_rules.week_start(days_offset=1),
            time_rules.market_open(hours=3, minutes=10)
        )

    def myfunc(context, data):
        pass

Week End

Runs the function once per calendar week. By default, the function runs on the last trading day of the week. You can add an optional offset, from the end of the week, to choose the trading day of the week. The example below calls myfunc once per week, on the second-to-last day of the week, 1 hour after the market opens.

    def initialize(context):
        # Algorithm will call myfunc once per week, on the second-to-last day of the week,
        # 1 hour after the market opens
        schedule_function(
            myfunc,
            date_rules.week_end(days_offset=1),
            time_rules.market_open(hours=1)
        )

    def myfunc(context, data):
        pass

Month Start

Runs the function once per calendar month. By default, the function runs on the first trading day of each month. You can add an optional offset from the start of the month, counted in trading days (not calendar days).

The example below calls myfunc once per month, on the second trading day of the month, 30 minutes after the market opens.

    def initialize(context):
        # Algorithm will call myfunc once per month, on the second trading day of the month,
        # 30 minutes after the market opens
        schedule_function(
            myfunc,
            date_rules.month_start(days_offset=1),
            time_rules.market_open(minutes=30)
        )

    def myfunc(context, data):
        pass

Month End

Runs the function once per calendar month. By default, the function runs on the last trading day of each month. You can add an optional offset from the end of the month, counted in trading days. The example below calls myfunc once per month, on the third-to-last trading day of the month, 15 minutes after the market opens.

    def initialize(context):
        # Algorithm will call myfunc once per month, on the third-to-last trading day of the month,
        # 15 minutes after the market opens
        schedule_function(
            myfunc,
            date_rules.month_end(days_offset=2),
            time_rules.market_open(minutes=15)
        )

    def myfunc(context, data):
        pass

Time Rules

Market Open

Runs the function at a specific time relative to the market open. The exact time will depend on the calendar specified in the calendar argument. Without a specified offset, the function is run one minute after the market opens.

The example below calls myfunc every day, 1 hour and 20 minutes after the market opens. The exact time will depend on the calendar you are using.

    def initialize(context):
        # Algorithm will call myfunc every day, 1 hour and 20 minutes after the market opens
        schedule_function(
            myfunc,
            date_rules.every_day(),
            time_rules.market_open(hours=1, minutes=20)
        )

    def myfunc(context, data):
        pass

Market Close

Runs the function at a specific time relative to the market close. Without a specified offset, the function is run one minute before the market close. The example below calls myfunc every day, 2 minutes before the market close. The exact time will depend on the calendar you are using.

    def initialize(context):
        # Algorithm will call myfunc every day, 2 minutes before the market closes
        schedule_function(
            myfunc,
            date_rules.every_day(),
            time_rules.market_close(minutes=2)
        )

    def myfunc(context, data):
        pass

Scheduling Multiple Functions

More complicated function scheduling can be done by combining schedule_function calls.

Using two calls to schedule_function, a portfolio can be rebalanced at the beginning and middle of each month:

    def initialize(context):
        # execute on the second trading day of the month
        schedule_function(
            myfunc,
            date_rules.month_start(days_offset=1)
        )

        # execute on the 10th trading day of the month
        schedule_function(
            myfunc,
            date_rules.month_start(days_offset=9)
        )

    def myfunc(context, data):
        pass

To call a function every 30 minutes, use a loop with schedule_function:

    def initialize(context):
        # A regular US equity session is 6 hours and 30 minutes long
        total_minutes = 6*60 + 30

        for i in range(1, total_minutes):
            # Schedule a run on every 30th minute after the open
            if i % 30 == 0:
                # The first run is 30 minutes after the open (10:00AM on the
                # US Equity calendar); later runs follow every 30 minutes
                schedule_function(
                    myfunc,
                    date_rules.every_day(),
                    time_rules.market_open(minutes=i),
                    half_days=True
                )

    def myfunc(context, data):
        pass

    def handle_data(context, data):
        pass
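To see which clock times the loop above registers, the offsets can be computed outside the platform. This sketch assumes a regular US equity session opening at 9:30AM and treats market_open(minutes=i) as "i minutes after the open"; `MARKET_OPEN` and `run_times` are names made up for the example.

```python
from datetime import datetime, timedelta

MARKET_OPEN = datetime(2016, 1, 4, 9, 30)   # an arbitrary regular session
TOTAL_MINUTES = 6 * 60 + 30

# Same filter as the scheduling loop: every 30th minute after the open
offsets = [i for i in range(1, TOTAL_MINUTES) if i % 30 == 0]
run_times = [
    (MARKET_OPEN + timedelta(minutes=i)).strftime("%H:%M") for i in offsets
]
print(run_times)
```

The loop makes one schedule_function call per offset, so this pattern registers twelve scheduled functions, from 10:00 through 15:30.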

The data object

The data object gives your algorithm a way to fetch all sorts of data. The data object serves many functions:

- Get open/high/low/close/volume (OHLCV) values for the current minute for any asset.

- Get historical windows of OHLCV values for any asset.

- Check if the price data of an asset is stale.

- Check if an asset can be traded in the current minute (based on volume).

- Get the current contract of a continuous future.

- Get the forward looking chain of contracts for a continuous future.

The data object knows your algorithm's current time and uses that time for all its internal calculations.

All the methods on data accept a single asset or a list of assets, and the price fetching methods also accept an OHLCV field or a list of OHLCV fields. The more that your algorithm can batch up queries by passing multiple assets or multiple fields, the faster we can get that data to your algorithm.

Learn about the various methods on the data object in the API documentation below. The data object is also covered in the Getting Started Tutorial and the Futures Tutorial.

Getting price data for securities

One of the most common actions your algorithm will do is fetch price and volume information for one or more securities. Quantopian lets you get this data for a specific minute, or for a window of minutes.

To get data for a specific minute, use data.current and pass it one or more securities and one or more fields. The data returned will be the as-traded values.

For examples of getting current data and a list of possible fields, see the Getting Started Tutorial (equities) or the Futures Tutorial (futures).

data.current can also be used to find the last minute in which an asset traded, by passing 'last_traded' as the field name

To get data for a window of time, use data.history and pass it one or more assets, one or more fields, '1m' or '1d' for granularity of data, and the number of bars. The data returned for equities will be adjusted for splits, mergers, and dividends as of the current simulation time.

Important things to know:

- price is forward-filled, returning last known price, if there is one. Otherwise, NaN is returned.

- volume returns 0 if the security didn't trade or didn't exist during a given minute.

- For open, high, low, and close, a NaN is returned if the security didn't trade or didn't exist at the given minute.

- Don't save the results of data.history from one day to the next. Your history call today is price-adjusted relative to today, and your history call from yesterday was adjusted relative to yesterday.
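The fill rules listed above can be sketched with plain Python. The `fill_minute_fields` helper and its input format are hypothetical, written only to show how price, close, and volume behave in minutes without trades; this is not how the platform stores or serves data.

```python
import math


def fill_minute_fields(raw_bars):
    """raw_bars: one dict with 'close' and 'volume' per minute, or None when
    the asset did not trade in that minute."""
    last_price = math.nan   # nothing to forward-fill until the first trade
    rows = []
    for bar in raw_bars:
        if bar is None:
            # price is forward-filled, close is NaN, volume is 0
            rows.append({"price": last_price, "close": math.nan, "volume": 0})
        else:
            last_price = bar["close"]
            rows.append({"price": last_price,
                         "close": bar["close"],
                         "volume": bar["volume"]})
    return rows


# No trade, then a trade at 10.0, then no trade again
rows = fill_minute_fields([None, {"close": 10.0, "volume": 300}, None])
for row in rows:
    print(row)
```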

To read about all the details, go to the API documentation.

History

Getting historical data is covered in the Getting Started Tutorial and the Futures Tutorial.

In many strategies, it is useful to compare the most recent bar data to previous bars. The Quantopian platform provides utilities to easily access and perform calculations on recent history.

When your algorithm calls data.history on equities, the returned data is adjusted for splits, mergers, and dividends as of the current simulation date. In other words, when your algorithm asks for a historical window of prices, and there is a split in the middle of that window, the first part of that window will be adjusted for the split. This adjustment is done so that your algorithm can do meaningful calculations using the values in the window.

This code queries the last 20 days of price history for a static set of securities. Specifically, this returns the closing daily price for the last 20 days, including the current price for the current day. Equity prices are split- and dividend-adjusted as of the current date in the simulation:

    def initialize(context):
        # AAPL, MSFT, and SPY
        context.assets = [sid(24), sid(5061), sid(8554)]

    def handle_data(context, data):
        price_history = data.history(context.assets, fields="price", bar_count=20, frequency="1d")

The bar_count field specifies the number of days or minutes to include in the pandas DataFrame returned by the history function. This parameter accepts only integer values.

The frequency field specifies how often the data is sampled: daily or minutely. Acceptable inputs are ‘1d’ or ‘1m’. For other frequencies, use the pandas resample function.

Examples

Below are examples of code along with explanations of the data returned.

Daily History

Use "1d" for the frequency. The dataframe returned is always in daily bars. The bars never span more than one trading day. For US equities, a daily bar captures the trade activity during market hours (usually 9:30am-4:00pm ET). For US futures, a daily bar captures the trade activity from 6pm-6pm ET (24 hours). For example, the Monday daily bar captures trade activity from 6pm the day before (Sunday) to 6pm on the Monday. Tuesday's daily bar will run from 6pm Monday to 6pm Tuesday, etc. For either asset class, the last bar, if partial, is built using the minutes of the current day.
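The 6pm-to-6pm convention for futures can be sketched as a mapping from a timestamp to the daily bar it falls into. The `futures_session_date` helper is hypothetical. It assumes naive timestamps already expressed in Eastern Time, assigns a timestamp at exactly 6pm to the next day's bar, and ignores weekends and holidays.

```python
from datetime import datetime, time, timedelta

SESSION_ROLL = time(18, 0)   # 6pm ET


def futures_session_date(ts):
    """Return the date of the daily bar that contains timestamp ts."""
    if ts.time() >= SESSION_ROLL:
        # Activity after the 6pm roll counts toward the next day's bar
        return (ts + timedelta(days=1)).date()
    return ts.date()


print(futures_session_date(datetime(2016, 1, 3, 19, 0)))   # Sunday evening
print(futures_session_date(datetime(2016, 1, 4, 10, 0)))   # Monday morning
print(futures_session_date(datetime(2016, 1, 4, 18, 30)))  # Monday evening
```

The first two timestamps both land in Monday's bar, while the third belongs to Tuesday's.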

Examples (assuming context.assets exists):

- data.history(context.assets, "price", 1, "1d") returns the current price.

- data.history(context.assets, "volume", 1, "1d") returns the volume since the current day's open, even if it is partial.

- data.history(context.assets, "price", 2, "1d") returns yesterday's close price and the current price.

- data.history(context.assets, "price", 6, "1d") returns the prices for the previous 5 days and the current price.

Partial trading days are treated as a single day unit. Scheduled half day sessions, unplanned early closures for unusual circumstances, and other truncated sessions are each treated as a single trading day.

Minute History

Use "1m" for the frequency.

Examples (assuming context.assets exists):

- data.history(context.assets, "price", 1, "1m") returns the current price.

- data.history(context.assets, "price", 2, "1m") returns the previous minute's close price and the current price.

- data.history(context.assets, "volume", 60, "1m") returns the volume for the previous 60 minutes.

Note: If you request a history of minute data that extends past the start of the day (9:30AM on the equities calendar, 6:30AM on the futures calendar), the data.history function will get the remaining minute bars from the end of the previous day. For example, if you ask for 60 minutes of pricing data at 10:00AM on the equities calendar, the first 30 prices will be from the end of the previous trading day, and the next 30 will be from the current morning.
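The 10:00AM example in the note can be reproduced with a small sketch. It assumes regular US equity sessions whose minute bars are labeled 9:31 through 16:00 (each bar named by the end of its minute); `session_minutes` and `last_n_minutes` are hypothetical helpers, and the window may only reach back into one previous session.

```python
from datetime import datetime, timedelta


def session_minutes(day, up_to=None):
    """Minute-bar labels for one equity session: 9:31 through 16:00 (or up_to)."""
    t = datetime(day.year, day.month, day.day, 9, 31)
    end = up_to or datetime(day.year, day.month, day.day, 16, 0)
    minutes = []
    while t <= end:
        minutes.append(t)
        t += timedelta(minutes=1)
    return minutes


def last_n_minutes(previous_day, now, n):
    """Last n minute bars as of `now`, borrowing from the previous session."""
    bars = session_minutes(previous_day) + session_minutes(now, up_to=now)
    return bars[-n:]


now = datetime(2016, 1, 5, 10, 0)
window = last_n_minutes(datetime(2016, 1, 4), now, 60)
from_previous_day = [t for t in window if t.date() != now.date()]
print(len(from_previous_day), window[0], window[-1])
```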

Returned Data

If a single security and a single field were passed into data.history, a pandas Series is returned, indexed by date.

If multiple securities and single field are passed in, the returned pandas DataFrame is indexed by date, and has assets as columns.

If a single security and multiple fields are passed in, the returned pandas DataFrame is indexed by date, and has fields as columns.

If multiple assets and multiple fields are passed in, the returned pandas Panel is indexed by field, has date as the major axis, and securities as the minor axis.

All pricing data is split- and dividend-adjusted as of the current date in the simulation or live trading. As a result, pricing data returned by data.history() should not be stored past the end of the day on which it was retrieved, as it may no longer be correctly adjusted.

"price" is always forward-filled. The other fields ("open", "high", "low", "close", "volume") are never forward-filled.

History and Backtest Start

Quantopian's price data starts on Jan 2, 2002. Any data.history call that extends before that date will raise an exception.

Common Usage: Current and Previous Bar

A common use case is to compare yesterday's close price with the current price.

This example compares yesterday's close (labeled prev_bar) with the current price (labeled curr_bar) and places an order for 20 shares if the current price is above yesterday's closing price.

    def initialize(context):
        # AAPL, MSFT, and SPY
        context.securities = [sid(24), sid(5061), sid(8554)]

    def handle_data(context, data):
        price_history = data.history(context.securities, fields="price", bar_count=2, frequency="1d")
        for s in context.securities:
            prev_bar = price_history[s][-2]
            curr_bar = price_history[s][-1]
            if curr_bar > prev_bar:
                order(s, 20)

Common Usage: Looking Back X Bars

It can also be useful to look further back into history for a comparison. Computing the percent change over a given historical time frame requires only the starting and ending price values, and ignores intervening prices.

The following example operates over AAPL and TSLA. It passes both securities into history, getting back a pandas DataFrame with one column per security.

    def initialize(context):
        # AAPL and TSLA
        context.securities = [sid(24), sid(39840)]

    def handle_data(context, data):
        prices = data.history(context.securities, fields="price", bar_count=10, frequency="1d")
        # Select rows by position: first and last bar of the window.
        pct_change = (prices.iloc[-1] - prices.iloc[0]) / prices.iloc[0]
        log.info(pct_change)

Alternatively, you can use the pandas DataFrame iloc indexer, which returns the first and last rows as a pair:

    price_history.iloc[[0, -1]]

The percent change example can be re-written as:

    def initialize(context):
        # AAPL, MSFT, and SPY
        context.securities = [sid(24), sid(5061), sid(8554)]

    def handle_data(context, data):
        price_history = data.history(context.securities, fields="price", bar_count=10, frequency="1d")
        pct_change = price_history.iloc[[0, -1]].pct_change()
        log.info(pct_change)

To find the difference between the values, the code example can be written as:

    def initialize(context):
        # Crude Oil and Spy E-Mini
        context.futures = [continuous_future('CL'), continuous_future('ES')]

    def handle_data(context, data):
        price_history = data.history(context.futures, fields="price", bar_count=10, frequency="1d")
        diff = price_history.iloc[[0, -1]].diff()
        log.info(diff)

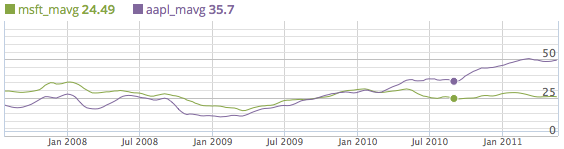

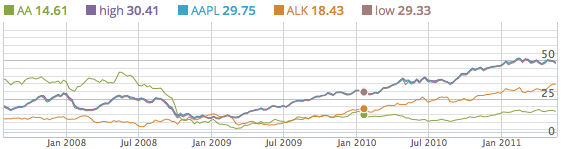

Common Usage: Rolling Transforms

Rolling transform calculations such as mavg, stddev, etc. can be calculated via methods provided by pandas.

- mavg -> DataFrame.mean

- stddev -> DataFrame.std

- vwap -> DataFrame.sum, for volume and price
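To make the arithmetic behind these three transforms concrete, here is a minimal plain-Python sketch for a single asset. It uses only the standard library, not pandas or the Quantopian API; the function names and sample values are illustrative assumptions. The standard deviation uses the sample form (divide by n - 1), which is also the pandas default for std().

```python
import statistics


def mavg(prices):
    """Moving average over the window: the arithmetic mean of the prices."""
    return statistics.mean(prices)


def stddev(prices):
    """Sample standard deviation (divides by n - 1), like the pandas default."""
    return statistics.stdev(prices)


def vwap(prices, volumes):
    """Volume-weighted average price: sum(price * volume) / sum(volume)."""
    return sum(p * v for p, v in zip(prices, volumes)) / sum(volumes)


# Illustrative values for one asset over a 5-bar window.
prices = [10.0, 11.0, 12.0, 11.0, 10.0]
volumes = [100, 200, 300, 200, 100]

print(mavg(prices))
print(stddev(prices))
print(vwap(prices, volumes))
```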

Common Usage: Moving Average

    def initialize(context):
        # AAPL, MSFT, and SPY
        context.securities = [sid(24), sid(5061), sid(8554)]

    def handle_data(context, data):
        price_history = data.history(context.securities, fields="price", bar_count=5, frequency="1d")
        log.info(price_history.mean())

Common Usage: Standard Deviation

    def initialize(context):
        # Crude Oil and Spy E-Mini
        context.futures = [continuous_future('CL'), continuous_future('ES')]

    def handle_data(context, data):
        price_history = data.history(context.futures, fields="price", bar_count=5, frequency="1d")
        log.info(price_history.std())

Common Usage: VWAP

    def initialize(context):
        # AAPL, MSFT, and SPY
        context.securities = [sid(24), sid(5061), sid(8554)]

    def vwap(prices, volumes):
        return (prices * volumes).sum() / volumes.sum()

    def handle_data(context, data):
        hist = data.history(context.securities, fields=["price", "volume"], bar_count=30, frequency="1d")
        vwap_15 = vwap(hist["price"][-15:], hist["volume"][-15:])
        vwap_30 = vwap(hist["price"], hist["volume"])
        for s in context.securities:
            if vwap_15[s] > vwap_30[s]:
                order(s, 50)

Common Usage: Using An External Library

Since history can return a pandas DataFrame, the values can then be passed to libraries that operate on numpy and pandas data structures.

An example OLS strategy:

    import statsmodels.api as sm

    def ols_transform(prices, sec1, sec2):
        """
        Computes regression coefficients (slope and intercept)
        via Ordinary Least Squares between two securities.
        """
        p0 = prices[sec1]
        p1 = sm.add_constant(prices[sec2], prepend=True)
        return sm.OLS(p0, p1).fit().params

    def initialize(context):
        # KO
        context.sec1 = sid(4283)
        # PEP
        context.sec2 = sid(5885)

    def handle_data(context, data):
        price_history = data.history([context.sec1, context.sec2], fields="price", bar_count=30, frequency="1d")
        intercept, slope = ols_transform(price_history, context.sec1, context.sec2)

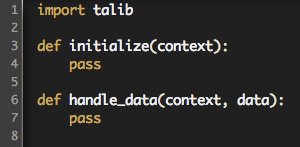

Common Usage: Using TA-Lib

Since history can return a pandas Series, that Series can be passed to TA-Lib.

An example EMA calculation:

    # Python TA-Lib wrapper
    # https://github.com/mrjbq7/ta-lib
    import talib

    def initialize(context):
        # AAPL
        context.my_stock = sid(24)

    def handle_data(context, data):
        my_stock_series = data.history(context.my_stock, fields="price", bar_count=30, frequency="1d")
        ema_result = talib.EMA(my_stock_series, timeperiod=12)
        record(ema=ema_result[-1])

Ordering

Ordering is covered here in the Getting Started Tutorial, and here in the Futures Tutorial.

There are many different ways to place orders from an algorithm. For most use-cases, we recommend using order_optimal_portfolio, which allows for an easy transition to sophisticated portfolio optimization techniques.

There are also various manual ordering methods that may be useful in certain cases. However, order_optimal_portfolio is required for algorithms to receive a capital allocation from Quantopian.

Ordering a delisted security is an error condition; so is ordering a security before an IPO or a futures contract after its auto_close_date. To check if a stock or future can be traded at a given point in your algorithm, use data.can_trade, which returns True if the asset is alive, has traded at least once, and is not restricted. For example, on the day a security IPOs, can_trade will return False until trading actually starts, often several hours after the market open. The FAQ has more detailed information about how orders are handled and filled by the backtester.

Since Quantopian forward-fills price, your algorithm might need to know if the price for a security or contract is from the most recent minute. The data.is_stale method returns True if the asset is alive but the latest price is from a previous minute.
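The relationship between forward-filling and staleness can be sketched in plain Python. This is an illustration of the idea only, not Quantopian's implementation: the function name and the use of None for a minute without trades are assumptions.

```python
def forward_fill_with_staleness(trade_prices):
    """Forward-fill missing prices and flag which bars carry a stale price.

    trade_prices holds one entry per minute; None marks a minute with no trade.
    Returns a list of (price, is_stale) pairs.
    """
    filled = []
    last_price = None
    for price in trade_prices:
        if price is not None:
            last_price = price
            filled.append((price, False))
        else:
            # No trade this minute: reuse the last known price and mark it stale.
            filled.append((last_price, last_price is not None))
    return filled


# Hypothetical minute bars: no trades in the second and third minutes.
bars = [10.0, None, None, 10.2]
print(forward_fill_with_staleness(bars))
# [(10.0, False), (10.0, True), (10.0, True), (10.2, False)]
```

An algorithm that only wants to act on fresh prices would skip the bars flagged True, which is the role data.is_stale plays on the platform.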

Quantopian supports four different order types:

- market order: order(asset, amount) will place a simple market order.

- limit order: Use order(asset, amount, style=LimitOrder(price)) to place a limit order. A limit order executes at the specified price or better, if the price is reached.

  Note: order(asset, amount, limit_price=price) is old syntax and will be deprecated in the future.

- stop order: Call order(asset, amount, style=StopOrder(price)) to place a stop order (also known as a stop-loss order). When the specified price is hit, the order converts into a market order.

  Note: order(asset, amount, stop_price=price) is old syntax and will be deprecated in the future.

- stop-limit order: Call order(asset, amount, style=StopLimitOrder(limit_price, stop_price)) to place a stop-limit order. When the specified stop price is hit, the order converts into a limit order.

  Note: order(asset, amount, limit_price=price1, stop_price=price2) is old syntax and will be deprecated in the future.
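The trigger conditions for these order types can be sketched in plain Python. The sketch assumes the conventional semantics (a buy limit fills at or below its limit, a sell stop triggers at or below its stop) and ignores the backtester's slippage and volume handling; all function names are hypothetical.

```python
def limit_fills(amount, price, limit_price):
    """A buy (amount > 0) fills at or below the limit; a sell fills at or above it."""
    if amount > 0:
        return price <= limit_price
    return price >= limit_price


def stop_reached(amount, price, stop_price):
    """A buy stop triggers at or above the stop; a sell stop at or below it."""
    if amount > 0:
        return price >= stop_price
    return price <= stop_price


def first_fill_bar(amount, prices, limit_price, stop_price):
    """Return the index of the first bar where a stop-limit order could fill, else None."""
    triggered = False
    for bar, price in enumerate(prices):
        if not triggered and stop_reached(amount, price, stop_price):
            triggered = True  # from here on the order behaves like a limit order
        if triggered and limit_fills(amount, price, limit_price):
            return bar
    return None


# Sell 100 shares with a stop at 95 and a limit at 94.
prices = [100.0, 96.0, 93.0, 94.5]
print(first_fill_bar(-100, prices, limit_price=94.0, stop_price=95.0))  # 3
```

In this run the stop triggers on the third bar (93 is below 95), but 93 is worse than the 94 limit, so the order waits until the price recovers to 94.5.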

All open orders are cancelled at the end of the day, both in backtesting and live trading.

You can see the status of a specific order by calling get_order(order). Here is a code example that places an order, stores the order_id, and uses the id on subsequent calls to log the order amount and the amount filled:

    # place a single order at market open.
    if context.ordered == True:
        context.order_id = order_value(context.aapl, 1000000)
        context.ordered = False

    # retrieve the order placed in the first bar
    aapl_order = get_order(context.order_id)
    if aapl_order:
        # log the order amount and the amount that is filled
        message = 'Order for {amount} has {filled} shares filled.'
        message = message.format(amount=aapl_order.amount, filled=aapl_order.filled)
        log.info(message)

If your algorithm is using a stop order, you can check the stop status in the stop_reached attribute of the order returned by get_order:

    # Monitor open orders and check if a stop order has triggered
    for stock in context.secs:
        # check if we have any open orders queried by order id
        ID = context.secs[stock]
        order_info = get_order(ID)
        # If we have orders, then check if stop price is reached
        if order_info:
            CheckStopPrice = order_info.stop_reached
            if CheckStopPrice:
                log.info('Stop price triggered for stock %s' % stock.symbol)

You can see a list of all open orders by calling get_open_orders(). This example logs all the open orders across all securities:

    # retrieve all the open orders and log the total open amount
    # for each order
    open_orders = get_open_orders()
    # open_orders is a dictionary keyed by sid, with values that are lists of orders.
    if open_orders:
        # iterate over the dictionary
        for security, orders in open_orders.items():
            # iterate over the orders
            for oo in orders:
                message = 'Open order for {amount} shares in {stock}'
                message = message.format(amount=oo.amount, stock=security)
                log.info(message)

If you want to see the open orders for a specific stock, you can specify the security like: get_open_orders(sid(24)). Here is an example that iterates over open orders in one stock:

    # retrieve the open orders in aapl and log the open amount
    # for each order
    open_aapl_orders = get_open_orders(context.aapl)
    # open_aapl_orders is a list of order objects.
    # iterate over the orders in aapl
    for oo in open_aapl_orders:
        message = 'Open order for {amount} shares in {stock}'
        message = message.format(amount=oo.amount, stock=context.aapl)
        log.info(message)

Orders can be cancelled by calling cancel_order(order). Orders are cancelled asynchronously.

Viewing Portfolio State

Your current portfolio state is accessible from the context object in handle_data, through context.portfolio.

To view an individual position's information, use the context.portfolio.positions dictionary.

Details on the portfolio and position properties can be found in the API documentation below.

Pipeline

Many algorithms depend on calculations that follow a specific pattern:

Every day, for some set of data sources, fetch the last N days’ worth of data for a large number of assets and apply a reduction function to produce a single value per asset.

This kind of calculation is called a cross-sectional trailing-window computation.

A simple example of a cross-sectional trailing-window computation is “close-to-close daily returns”, which has the form:

Every day, fetch the last two days of close prices for all assets. For each asset, calculate the percent change between the asset’s previous close price and its current close price.

The purpose of the Pipeline API is to make it easy to define and execute cross-sectional trailing-window computations.
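As a plain-Python illustration of the close-to-close returns example (not the Pipeline API itself), the trailing window can be written as two rows of closes with one column per asset. The function name and the sample prices are assumptions.

```python
def close_to_close_returns(window):
    """Percent change per asset from the previous close to the current close.

    window is a list of two rows: [previous closes, current closes],
    with one column per asset.
    """
    previous_closes, current_closes = window
    return [
        (current - previous) / previous
        for previous, current in zip(previous_closes, current_closes)
    ]


window = [
    [100.0, 50.0, 20.0],  # previous closes for three hypothetical assets
    [101.0, 49.0, 20.0],  # current closes
]
print(close_to_close_returns(window))  # [0.01, -0.02, 0.0]
```

The input has one row per day and the output has one value per asset, which is the shape every cross-sectional trailing-window computation shares.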

Basic Usage

It’s easiest to understand the basic concepts of the Pipeline API after walking through an example.

In the algorithm below, we use the Pipeline API to describe a computation producing 10-day and 30-day Simple Moving Averages of close price for every stock in Quantopian’s database. We then specify that we want to filter down each day to just stocks with a 10-day average price below $5.00. Finally, in our before_trading_start, we print the first five rows of our results.

    from quantopian.algorithm import attach_pipeline, pipeline_output
    from quantopian.pipeline import Pipeline
    from quantopian.pipeline.data.builtin import USEquityPricing
    from quantopian.pipeline.factors import SimpleMovingAverage

    def initialize(context):
        # Create and attach an empty Pipeline.
        pipe = Pipeline()
        pipe = attach_pipeline(pipe, name='my_pipeline')

        # Construct Factors.
        sma_10 = SimpleMovingAverage(inputs=[USEquityPricing.close], window_length=10)
        sma_30 = SimpleMovingAverage(inputs=[USEquityPricing.close], window_length=30)

        # Construct a Filter.
        prices_under_5 = (sma_10 < 5)

        # Register outputs.
        pipe.add(sma_10, 'sma_10')
        pipe.add(sma_30, 'sma_30')

        # Remove rows for which the Filter returns False.
        pipe.set_screen(prices_under_5)

    def before_trading_start(context, data):
        # Access results using the name passed to `attach_pipeline`.
        results = pipeline_output('my_pipeline')
        print(results.head(5))

        # Store pipeline results for use by the rest of the algorithm.
        context.pipeline_results = results

On each before_trading_start call, our algorithm will print a pandas.DataFrame containing data like this:

| index | sma_10 | sma_30 |
|---|---|---|
| Equity(21 [AAME]) | 2.012222 | 1.964269 |
| Equity(37 [ABCW]) | 1.226000 | 1.131233 |
| Equity(58 [SERV]) | 2.283000 | 2.309255 |
| Equity(117 [AEY]) | 3.150200 | 3.333067 |
| Equity(225 [AHPI]) | 4.286000 | 4.228846 |

We can break our example algorithm into five parts:

Initializing a Pipeline

The first step of any algorithm using the Pipeline API is to create and register an empty Pipeline object.

    from quantopian.algorithm import attach_pipeline, pipeline_output
    from quantopian.pipeline import Pipeline
    ...
    def initialize(context):
        pipe = Pipeline()
        attach_pipeline(pipe, name='my_pipeline')

A Pipeline is an object that represents computation we would like to perform every day. A freshly-constructed pipeline is empty, which means it doesn’t yet know how to compute anything, and it won’t produce any values if we ask for its outputs. We’ll see below how to provide our Pipeline with expressions to compute.

Just constructing a new pipeline doesn’t do anything by itself: we have to tell our algorithm to use the new pipeline, and we have to provide a name for the pipeline so that we can identify it later when we ask for results. The attach_pipeline() function from quantopian.algorithm accomplishes both of these tasks.

Importing Datasets

Before we can build computations for our pipeline to execute, we need a way to identify the inputs to those computations. In our example, we’ll use data from the USEquityPricing dataset, which we import from quantopian.pipeline.data.builtin.

    from quantopian.pipeline.data.builtin import USEquityPricing

USEquityPricing is an example of a DataSet. The most important thing to understand about DataSets is that they do not hold actual data. DataSets are simply collections of objects that tell the Pipeline API where and how to find the inputs to computations. Since these objects often correspond to database columns, we refer to the attributes of DataSets as columns.

USEquityPricing provides five columns:

- USEquityPricing.open

- USEquityPricing.high

- USEquityPricing.low

- USEquityPricing.close

- USEquityPricing.volume

Many datasets besides USEquityPricing are available on Quantopian. These include corporate fundamental data, news sentiment, macroeconomic indicators, and more. Morningstar fundamental data is available in the quantopian.pipeline.data.Fundamentals dataset. All other datasets are namespaced by provider under quantopian.pipeline.data.

Pipeline-compatible datasets are currently importable from the following modules:

- quantopian.pipeline.data.estimize (Estimize)

- quantopian.pipeline.data.eventvestor (EventVestor)

- quantopian.pipeline.data.psychsignal (PsychSignal)

- quantopian.pipeline.data.quandl (Quandl)

- quantopian.pipeline.data.sentdex (Sentdex)

Example algorithms and notebooks for working with each of these datasets can be found on the Quantopian Data page.

Building Computations

Once we’ve imported the datasets we intend to use in our algorithm, our next step is to build the transformations we want our Pipeline to compute each day.

    sma_10 = SimpleMovingAverage(inputs=[USEquityPricing.close], window_length=10)
    sma_30 = SimpleMovingAverage(inputs=[USEquityPricing.close], window_length=30)

The SimpleMovingAverage class used here is an example of a Factor. Factors, in the Pipeline API, are objects that represent reductions on trailing windows of data. Every Factor stores four pieces of state:

- inputs: A list of BoundColumn objects describing the inputs to the Factor.

- window_length: An integer describing how many rows of historical data the Factor needs to be provided each day.

- dtype: A numpy dtype object representing the type of values computed by the Factor. Most factors are of dtype float64, indicating that they produce numerical values represented as 64-bit floats. Factors can also be of dtype datetime64[ns].

- A compute function that operates on the data described by inputs and window_length.

When we compute a Factor for a day on which we have N assets in our database, the underlying Pipeline API engine provides that Factor’s compute function a two-dimensional array of shape (window_length x N) for each input in inputs. The job of the compute function is to produce a one-dimensional array of length N as an output.

The figure to the right shows the computation performed on a single day by another built-in Factor, VWAP.
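The shape contract of a compute function, a (window_length x N) input reduced to N outputs, can be sketched in plain Python with nested lists standing in for numpy arrays. This illustrates the reduction only and is not the SimpleMovingAverage implementation; the sample closes are made up.

```python
def simple_moving_average(window):
    """Reduce a (window_length x N) window to N averages, one per asset.

    window is a list of rows (one per day); each row has one value per asset.
    """
    window_length = len(window)
    # zip(*window) transposes the rows into one column per asset.
    return [sum(column) / window_length for column in zip(*window)]


closes = [
    [10.0, 4.0],  # oldest day, two hypothetical assets
    [11.0, 5.0],
    [12.0, 6.0],  # most recent day
]
print(simple_moving_average(closes))  # [11.0, 5.0]
```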

    prices_under_5 = (sma_10 < 5)

The next line of our example algorithm constructs a Filter. Like Factors, Filters are reductions over input data defined by datasets. The difference between Filters and Factors is that Filters produce boolean-valued outputs, whereas Factors produce numerical- or datetime-valued outputs. The expression (sma_10 < 5) uses Operator Overloading to construct a Filter instance whose compute is equivalent to “compute 10-day moving average price for each asset, then return an array containing True for all assets whose computed value was less than 5, otherwise containing False.”

Quantopian provides a library of factors (SimpleMovingAverage, RSI, VWAP and MaxDrawdown) which will continue to grow. You can also create custom factors. See the examples, and API Documentation for more information.

Adding Computations to a Pipeline

Pipelines support two broad classes of operations: adding new columns and screening out unwanted rows.

To add a new column, we call the add() method of our pipeline with a Filter or Factor and a name for the column to be created.

    pipe.add(sma_10, name='sma_10')
    pipe.add(sma_30, name='sma_30')

These calls to add tell our pipeline to compute our 10-day SMA with the name "sma_10" and our 30-day SMA with the name "sma_30".

The other important operation supported by pipelines is screening out unwanted rows from our final results.

    pipe.set_screen(prices_under_5)

The call to set_screen informs our pipeline that each day we want to throw away any rows for whose assets our prices_under_5 Filter produced a value of False.
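The combined effect of adding columns and setting a screen can be sketched with plain dictionaries. This is an illustration of the row-dropping behavior, not how the Pipeline engine is implemented; the helper name, the asset "XYZ", and all values are made up.

```python
def apply_screen(columns, screen):
    """Build one row per asset that passes the screen.

    columns maps a column name to {asset: value}; screen maps asset to a bool.
    """
    return {
        asset: {name: values[asset] for name, values in columns.items()}
        for asset, passed in screen.items()
        if passed
    }


# Hypothetical 10-day and 30-day averages for three assets.
sma_10 = {"AAME": 2.01, "ABCW": 1.23, "XYZ": 150.0}
sma_30 = {"AAME": 1.96, "ABCW": 1.13, "XYZ": 148.0}

# The Filter: True where the 10-day average is below 5.
prices_under_5 = {asset: value < 5 for asset, value in sma_10.items()}

results = apply_screen({"sma_10": sma_10, "sma_30": sma_30}, prices_under_5)
print(sorted(results))  # ['AAME', 'ABCW']
```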

Using Results

By the end of our initialize function, we’ve already defined the core logic of our algorithm. All that remains to be done is to ask for the results of the pipeline attached to our algorithm.

    def before_trading_start(context, data):
        results = pipeline_output('my_pipeline')
        print(results.head(5))

The return value of pipeline_output will be a pandas.DataFrame containing a column for each call we made to Pipeline.add() in our initialize method and containing a row for each asset that passed our pipeline’s screen. The printed values should look like this:

| index | sma_10 | sma_30 |
|---|---|---|
| Equity(21 [AAME]) | 2.012222 | 1.964269 |
| Equity(37 [ABCW]) | 1.226000 | 1.131233 |
| Equity(58 [SERV]) | 2.283000 | 2.309255 |
| Equity(117 [AEY]) | 3.150200 | 3.333067 |
| Equity(225 [AHPI]) | 4.286000 | 4.228846 |

Most algorithms save their Pipeline outputs on context for use in functions other than before_trading_start. For example, an algorithm might compute a set of target portfolio weights as a Factor, store the target weights on context, and then use the stored weights in a rebalance function scheduled to run once per day.

    context.pipeline_results = results

Next Steps

Custom Factors

Quantopian provides a large library of built-in factors in the quantopian.pipeline.factors module.

One of the most powerful features of the Pipeline API is that it allows users to define their own custom factors. The easiest way to do this is to subclass quantopian.pipeline.CustomFactor and implement a compute method whose signature is:

    def compute(self, today, assets, out, *inputs):
        ...

An instance of CustomFactor that’s been added to a pipeline will have its compute method called every day with data defined by the values supplied to its constructor as inputs and window_length.

For example, if we define and add a CustomFactor like this:

    class MyFactor(CustomFactor):
        def compute(self, today, asset_ids, out, values):
            out[:] = do_something_with_values(values)

    def initialize(context):
        p = attach_pipeline(Pipeline(), 'my_pipeline')
        my_factor = MyFactor(inputs=[USEquityPricing.close], window_length=5)
        p.add(my_factor, 'my_factor')

then every simulation day my_factor.compute() will be called with data as follows:

- values will be a 5 x N numpy array, where N is roughly the number of assets in our database on the day in question (generally between 8000-10000). Each column of values will contain the last 5 close prices of one asset. There will be 5 rows in the array because we passed window_length=5 to the MyFactor constructor.

- out will be an empty array of length N. The job of compute is to write output values into out. We write values directly into an output array rather than returning them to avoid making unnecessary copies. This is an uncommon idiom in Python, but very common in languages like C and Fortran that care about high performance numerical code. Many numpy functions take an out parameter for similar reasons.

- asset_ids will be an integer array of length N containing security ids corresponding to each column of values.

- today will be a datetime64[ns] representing the day for which compute is being called.
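The write-into-out idiom can be tried outside the platform with plain lists, which support the same slice assignment as numpy arrays. In the sketch below the function name and the statistic it computes (latest close minus the window mean) are illustrative assumptions, not a built-in factor.

```python
def compute_latest_minus_mean(today, asset_ids, out, values):
    """Write one result per asset into `out` in place, instead of returning it."""
    window_length = len(values)
    # Slice assignment overwrites the contents of the caller's list.
    out[:] = [
        column[-1] - sum(column) / window_length
        for column in zip(*values)
    ]


# A 5 x 2 window: five days of closes for two hypothetical assets.
values = [[1.0, 10.0], [2.0, 20.0], [3.0, 30.0], [4.0, 40.0], [5.0, 50.0]]
asset_ids = [24, 5061]
out = [None] * len(asset_ids)

compute_latest_minus_mean("2017-03-13", asset_ids, out, values)
print(out)  # [2.0, 20.0]
```

Because the caller allocates out once and the function fills it, no intermediate result needs to be copied back.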

Default Inputs

Some factors are naturally parametrizable on both inputs and window_length. It makes reasonable sense, for example, to take a SimpleMovingAverage of more than one length or over more than one input. For this reason, factors like SimpleMovingAverage don’t provide defaults for inputs or window_length. Every time you construct an instance of SimpleMovingAverage, you have to pass a window_length and an inputs list.

Many factors, however, are naturally defined as operating on a specific dataset or on a specific window size. VWAP should always be called with inputs=[USEquityPricing.close, USEquityPricing.volume], and RSI is canonically calculated over a 14-day window. For these factors, it’s convenient to be able to provide a default.

If values are not passed for window_length or inputs, the CustomFactor constructor will try to fall back to class-level attributes with the same names. This means that we can implement a VWAP-like CustomFactor by defining an inputs list as a class-level attribute.

    import numpy as np

    class PriceRange(CustomFactor):
        """
        Computes the difference between the highest high and the lowest
        low of each asset over an arbitrary input range.
        """
        inputs = [USEquityPricing.high, USEquityPricing.low]

        def compute(self, today, assets, out, highs, lows):
            out[:] = np.nanmax(highs, axis=0) - np.nanmin(lows, axis=0)

    ...

    # We'll automatically use high and low as inputs because we declared them as
    # defaults for the class.
    price_range_10 = PriceRange(window_length=10)
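The fallback from constructor arguments to class-level attributes can be mimicked with an ordinary Python class. SketchFactor below is a hypothetical stand-in written for this illustration; it is not CustomFactor and does not reflect how Quantopian implements the lookup.

```python
class SketchFactor(object):
    """Hypothetical stand-in for CustomFactor showing the default lookup."""

    inputs = None
    window_length = None

    def __init__(self, inputs=None, window_length=None):
        # Prefer constructor arguments; otherwise use the class-level attributes.
        cls = type(self)
        self.inputs = inputs if inputs is not None else cls.inputs
        self.window_length = (
            window_length if window_length is not None else cls.window_length
        )
        if self.inputs is None or self.window_length is None:
            raise ValueError("inputs and window_length must both be provided")


class PriceRangeSketch(SketchFactor):
    # Placeholder strings standing in for BoundColumn objects.
    inputs = ["high", "low"]


price_range_10 = PriceRangeSketch(window_length=10)
print(price_range_10.inputs, price_range_10.window_length)
```

Constructing PriceRangeSketch() with no arguments raises ValueError here, because the class declares a default for inputs but none for window_length.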

Fundamental Data

Quantopian provides a number of Pipeline-compatible fundamental data fields sourced from Morningstar. Each field exists as a BoundColumn under the quantopian.pipeline.data.Fundamentals dataset. These fields can be passed as inputs to Pipeline computations.

For example, Fundamentals.market_cap is a column representing the most recently reported market cap for each asset on each date. There are over 900 total columns available in the Fundamentals dataset. See the Quantopian Fundamentals Reference for a full description of all such attributes.

Masking Factors

When computing a CustomFactor we are sometimes only interested in computing over a certain set of stocks, especially if our CustomFactor’s compute method is computationally expensive. We can restrict the set of stocks over which we would like to compute by passing a Filter to our CustomFactor upon instantiation via the mask parameter. When passed a mask, a CustomFactor will only compute values over stocks for which the Filter returns True. All other stocks for which the Filter returned False will be filled with missing values.

Example:

Suppose we want to compute a factor over only the top 500 stocks by dollar volume. We can define a CustomFactor and Filter as follows:

    from quantopian.algorithm import attach_pipeline
    from quantopian.pipeline import CustomFactor, Pipeline
    from quantopian.pipeline.data.builtin import USEquityPricing
    from quantopian.pipeline.factors import AverageDollarVolume

    def do_something_expensive(some_input):
        # Not actually expensive. This is just an example.
        return 42

    class MyFactor(CustomFactor):
        inputs = [USEquityPricing.close]
        window_length = 252

        def compute(self, today, assets, out, close):
            out[:] = do_something_expensive(close)

    def initialize(context):
        pipeline = attach_pipeline(Pipeline(), 'my_pipeline')

        dollar_volume = AverageDollarVolume(window_length=30)
        high_dollar_volume = dollar_volume.top(500)

        # Pass our filter to our custom factor via the mask parameter.
        my_factor = MyFactor(mask=high_dollar_volume)
        pipeline.add(my_factor, 'my_factor')
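The effect of a mask on the output can be sketched in plain Python: assets where the mask is False are never passed to the expensive function and receive NaN instead. The helper name and sample data are assumptions made for this illustration.

```python
import math


def compute_with_mask(values, mask, reduce_column):
    """Reduce each asset's column where mask is True; write NaN elsewhere.

    values is a window_length x N list of rows; mask has one bool per asset.
    """
    columns = zip(*values)
    return [
        reduce_column(column) if keep else float("nan")
        for column, keep in zip(columns, mask)
    ]


values = [
    [1.0, 2.0, 3.0],
    [3.0, 4.0, 5.0],
]
mask = [True, False, True]  # e.g. membership in a dollar-volume filter

out = compute_with_mask(values, mask, max)
print(out)                 # [3.0, nan, 5.0]
print(math.isnan(out[1]))  # True
```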

Slicing Factors

Using a technique we call slicing, it is possible to extract the values of a Factor for a single asset. Slicing can be thought of as extracting a single column of a Factor, where each column corresponds to an asset. Slices are created by indexing into an ordinary factor, keyed by asset. These Slice objects can then be used as an input to a CustomFactor.

When a Slice object is used as an input to a custom factor, it always returns an N x 1 column vector of values, where N is the window length. For example, a slice of a Returns factor would output a column vector of the N previous returns values for a given security.

Example:

Create a Returns factor and extract the column for AAPL.

    from quantopian.pipeline import CustomFactor
    from quantopian.pipeline.factors import Returns

    returns = Returns(window_length=30)
    returns_aapl = returns[sid(24)]

    class MyFactor(CustomFactor):
        inputs = [returns_aapl]
        window_length = 5

        def compute(self, today, assets, out, returns_aapl):
            # `returns_aapl` is a 5 x 1 numpy array of the last 5 days of
            # returns values for AAPL. For example, it might look like:
            # [[ .01],
            #  [ .02],
            #  [-.01],
            #  [ .03],
            #  [ .01]]
            pass

Note: Only slices of certain factors can be used as inputs. These factors include Returns and any factors created from rank or zscore. The reason for this is that these factors produce normalized values, so they are safe for use as inputs to other factors.

Note: Slice objects cannot be added as a column to a pipeline. Each day, a slice only computes a value for the single asset with which it is associated, whereas ordinary factors compute a value for every asset. In the current pipeline framework there would be no reasonable way to represent the single-valued output of a Slice term.
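The shape of a slice can be illustrated with nested lists: taking one asset's column from a (window_length x N) window yields a (window_length x 1) column vector. The helper name and the sample returns are assumptions for this sketch, which does not use the Pipeline API.

```python
def slice_asset(window, column_index):
    """Extract one asset's column as a window_length x 1 column vector."""
    return [[row[column_index]] for row in window]


# Five days of returns for three hypothetical assets; column 0 is the one we keep.
returns_window = [
    [0.01, 0.00, 0.02],
    [0.02, 0.01, -0.01],
    [-0.01, 0.03, 0.00],
    [0.03, -0.02, 0.01],
    [0.01, 0.00, 0.00],
]
print(slice_asset(returns_window, 0))
# [[0.01], [0.02], [-0.01], [0.03], [0.01]]
```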

Normalizing Results

It is often desirable to normalize the output of a factor before using it in an algorithm. In this context, normalizing means re-scaling a set of values in a way that makes comparisons between values more meaningful.

Such re-scaling can be useful for comparing the results of different factors.

A technical indicator like RSI might produce an output bounded between 0 and 100, whereas a fundamental ratio might produce values that can be any real number. Normalizing two incommensurable factors via an operation like Z-Score makes it easier to incorporate both factors into a larger model, because doing so reduces both factors to dimensionless quantities.

The base Factor class provides several methods for normalizing factor outputs. The demean() method transforms the output of a factor by computing the mean of each row and subtracting it from every entry in the row. Similarly, the zscore() method subtracts the mean from each row and then divides by the standard deviation of the row.

Example:

Suppose that f is a Factor which would produce the following output:

| date | AAPL | MSFT | MCD | BK |
|---|---|---|---|---|
| 2017-03-13 | 1.0 | 2.0 | 3.0 | 4.0 |
| 2017-03-14 | 1.5 | 2.5 | 3.5 | 1.0 |
| 2017-03-15 | 2.0 | 3.0 | 4.0 | 1.5 |
| 2017-03-16 | 2.5 | 3.5 | 1.0 | 2.0 |

f.demean() constructs a new Factor that will produce its output by first computing f and then subtracting the mean from each row:

| date | AAPL | MSFT | MCD | BK |
|---|---|---|---|---|
| 2017-03-13 | -1.500 | -0.500 | 0.500 | 1.500 |
| 2017-03-14 | -0.625 | 0.375 | 1.375 | -1.125 |
| 2017-03-15 | -0.625 | 0.375 | 1.375 | -1.125 |
| 2017-03-16 | 0.250 | 1.250 | -1.250 | -0.250 |

f.zscore() works similarly, except that it also divides each row by the standard deviation of the row.
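The row-wise arithmetic can be checked by hand with a few lines of plain Python. The sketch uses the standard library rather than the Pipeline API, and it assumes the population standard deviation for the z-score; whether zscore() uses the population or sample form is not stated in this section.

```python
import statistics


def demean(row):
    """Subtract the row mean from every entry in the row."""
    mean = statistics.mean(row)
    return [value - mean for value in row]


def zscore(row):
    """Demean the row, then divide by its standard deviation.

    Assumption: population standard deviation (divide by n).
    """
    std = statistics.pstdev(row)
    return [value / std for value in demean(row)]


row = [1.0, 2.0, 3.0, 4.0]  # the 2017-03-13 row of f
print(demean(row))  # [-1.5, -0.5, 0.5, 1.5]
print(zscore(row))
```

After either transform the row sums to zero, and after zscore the row also has unit standard deviation, which is what makes differently scaled factors comparable.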

Eliminating Outliers with Masks

A common pitfall when normalizing a factor is that many normalization methods are sensitive to the magnitude of large outliers. A common technique for dealing with this issue is to ignore extreme or otherwise undesired data points when transforming the result of a Factor.

To simplify the process of programmatically excluding data points, all Factor normalization methods accept an optional keyword argument, mask, which accepts a Filter. When a filter is provided, entries where the filter produces False are ignored by the normalization process, and those values are assigned a value of NaN in the normalized output.

Example:

Suppose that f is a Factor which would produce the following output:

| date | AAPL | MSFT | MCD | BK |
|---|---|---|---|---|
| 2017-03-13 | 99.0 | 2.0 | 3.0 | 4.0 |
| 2017-03-14 | 1.5 | 99.0 | 3.5 | 1.0 |
| 2017-03-15 | 2.0 | 3.0 | 99.0 | 1.5 |
| 2017-03-16 | 2.5 | 3.5 | 1.0 | 99.0 |

Simply demeaning or z-scoring this data isn’t helpful, because the row-means are heavily skewed by the outliers on the diagonal. We can construct a Filter that identifies outliers using the percentile_between() method:

    >>> f = MyFactor(...)
    >>> non_outliers = f.percentile_between(0, 75)

In the above, non_outliers is a Filter that produces True for locations in the output of f that fall on or below the 75th percentile for their row and produces False otherwise:

| date | AAPL | MSFT | MCD | BK |
|---|---|---|---|---|
| 2017-03-13 | False | True | True | True |
| 2017-03-14 | True | False | True | True |
| 2017-03-15 | True | True | False | True |
| 2017-03-16 | True | True | True | False |

We can use our non_outliers filter to more intelligently normalize f by passing it as a mask to demean():

    >>> normalized = f.demean(mask=non_outliers)

normalized is a new Factor that will produce its output by computing f, subtracting the mean of non-diagonal values from each row, and then writing NaN into the masked locations:

| date | AAPL | MSFT | MCD | BK |
|---|---|---|---|---|
| 2017-03-13 | NaN | -1.000 | 0.000 | 1.000 |
| 2017-03-14 | -0.500 | NaN | 1.500 | -1.000 |
| 2017-03-15 | -0.166 | 0.833 | NaN | -0.666 |
| 2017-03-16 | 0.166 | 1.166 | -1.333 | NaN |
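The masked computation for a single row can be reproduced in plain Python. The helper name is an assumption and the code only illustrates the arithmetic; it is not the Pipeline implementation.

```python
import math


def masked_demean(row, mask):
    """Demean using only entries where mask is True; masked entries become NaN."""
    kept = [value for value, keep in zip(row, mask) if keep]
    mean = sum(kept) / len(kept)
    return [
        value - mean if keep else float("nan")
        for value, keep in zip(row, mask)
    ]


row = [99.0, 2.0, 3.0, 4.0]        # the 2017-03-13 row of f
mask = [False, True, True, True]   # the matching row of non_outliers

result = masked_demean(row, mask)
print(result)                 # [nan, -1.0, 0.0, 1.0]
print(math.isnan(result[0]))  # True
```

The outlier 99.0 no longer influences the mean, which is 3.0 here instead of 27.0.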

Grouped Normalization with Classifiers

Another important application of normalization is adjusting the results of a factor based on some method of grouping or classifying assets. For example, we might want to compute earnings yield across all known assets and then normalize the result by dividing each asset’s earnings yield by the mean earnings yield for that asset’s sector or industry.

In the same way that the optional mask parameter allows us to modify the behavior of demean() to ignore certain values, the groupby parameter allows us to specify that normalizations should be performed on subsets of rows, rather than on the entire row at once.

In the same way that we pass a Filter to define values to ignore, we pass a Classifier to define how to partition up the rows of the factor being normalized. Classifiers are expressions similar to Factors and Filters, except that they produce integers instead of floats or booleans. Locations for which a classifier produced the same integer value are grouped together when that classifier is passed to a normalization method.

Example:

Suppose that we again have a factor, f, which produces the following

output:

            AAPL  MSFT  MCD   BK
2017-03-13   1.0   2.0  3.0  4.0
2017-03-14   1.5   2.5  3.5  1.0
2017-03-15   2.0   3.0  4.0  1.5
2017-03-16   2.5   3.5  1.0  2.0

and suppose that we have a classifier c, which classifies companies by industry, using a label of 1 for consumer electronics and a label of 2 for food service:

            AAPL  MSFT  MCD  BK
2017-03-13     1     1    2   2
2017-03-14     1     1    2   2
2017-03-15     1     1    2   2
2017-03-16     1     1    2   2

We construct a new factor that de-means f by industry via:

>>> f.demean(groupby=c)

which produces the following output:

              AAPL   MSFT     MCD      BK
2017-03-13  -0.500  0.500  -0.500   0.500
2017-03-14  -0.500  0.500   1.250  -1.250
2017-03-15  -0.500  0.500   1.250  -1.250
2017-03-16  -0.500  0.500  -0.500   0.500
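The grouped calculation can be checked by hand with NumPy. The sketch below is not the Pipeline implementation. It simply subtracts, within each row, the mean of the assets that share a label, using the values of f and c from the example.

```python
import numpy as np

# Factor output f and classifier output c from the example above.
# Columns are AAPL, MSFT, MCD, BK; rows are the four dates.
f = np.array([
    [1.0, 2.0, 3.0, 4.0],
    [1.5, 2.5, 3.5, 1.0],
    [2.0, 3.0, 4.0, 1.5],
    [2.5, 3.5, 1.0, 2.0],
])
c = np.array([[1, 1, 2, 2]] * 4)

demeaned = np.full_like(f, np.nan)
for label in np.unique(c):
    in_group = c == label
    # Mean of each row, computed only over the assets carrying this label.
    group_mean = np.nanmean(np.where(in_group, f, np.nan), axis=1, keepdims=True)
    demeaned = np.where(in_group, f - group_mean, demeaned)

print(demeaned)
```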

There are currently three ways to construct a Classifier:

- The .latest attribute of any Fundamentals column of dtype int64 produces a Classifier. There are currently nine such columns:
  - Fundamentals.cannaics
  - Fundamentals.morningstar_economy_sphere_code
  - Fundamentals.morningstar_industry_code
  - Fundamentals.morningstar_industry_group_code
  - Fundamentals.morningstar_sector_code
  - Fundamentals.naics
  - Fundamentals.sic
  - Fundamentals.stock_type
  - Fundamentals.style_box
- There are a few Classifier applications common enough that Quantopian provides dedicated subclasses for them. These hand-written classes come with additional documentation and class-level attributes with symbolic names for their labels. There are currently two such built-in classifiers.
- Any Factor can be converted into a Classifier via its quantiles() method. The resulting Classifier produces labels by sorting the results of the original Factor each day and grouping the results into equally-sized buckets.
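To make the bucketing idea concrete, here is a minimal sketch of quantile labeling for a single day's row of factor values. The helper name quantile_labels is hypothetical. The real quantiles() method also has to deal with missing values and may place bucket boundaries differently, so treat this only as an illustration of "sort, then split into equal groups".

```python
import numpy as np

def quantile_labels(row, bins):
    """Label each value in `row` with a bucket from 0 to bins - 1.

    Values are ranked within the row, then the ranks are split into
    `bins` groups of (as close as possible to) equal size.
    This sketch assumes no NaNs and no ties.
    """
    row = np.asarray(row, dtype=float)
    ranks = np.argsort(np.argsort(row))  # 0 = smallest value
    return ranks * bins // len(row)

# One day's factor values for AAPL, MSFT, MCD, BK, split into two halves.
labels = quantile_labels([2.5, 3.5, 1.0, 2.0], bins=2)
print(labels)
```

Assets with the same label would then be grouped together if these labels were used for a grouped normalization.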

Working with Dates

Most of the data available in the Pipeline API is numerically-valued. There are, however, some pipeline datasets that contain non-numeric data.

The most common class of non-numeric data available in the Pipeline API is data representing dates and times of events. An example of such a dataset is the EventVestor EarningsCalendar, which provides two columns: next_announcement and previous_announcement.

When supplied as inputs to a CustomFactor, these

columns provide numpy arrays of dtype numpy.datetime64 representing,

for each pair of asset and simulation date, the dates of the next and previous

earnings announcements for the asset as of the simulation date.

For example, Microsoft’s earnings announcement calendar for 2014 was as follows:

| Quarter | Announcement Date |

|---|---|

| Q1 | 2014-01-23 |

| Q2 | 2014-04-24 |

| Q3 | 2014-07-22 |

| Q4 | 2014-10-23 |

If we specify EarningsCalendar.next_announcement as an input to a

CustomFactor with a window_length of 1, we’ll be passed the following data for

MSFT in our compute function:

- From 2014-01-24 to 2014-04-24, the next announcement for MSFT will be 2014-04-24.
- From 2014-04-25 to 2014-07-22, the next announcement will be 2014-07-22.
- From 2014-07-23 to 2014-10-23, the next announcement will be 2014-10-23.
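The date ranges above follow a simple rule: the value on any simulation date is the first announcement on or after that date. The sketch below illustrates that rule with the standard library only. The names MSFT_ANNOUNCEMENTS and next_announcement() are hypothetical helpers, None stands in for a missing value, and the sketch assumes the whole calendar is known up front, which the real dataset does not.

```python
from bisect import bisect_left
from datetime import date

# Microsoft's 2014 announcement dates from the table above.
MSFT_ANNOUNCEMENTS = [
    date(2014, 1, 23),
    date(2014, 4, 24),
    date(2014, 7, 22),
    date(2014, 10, 23),
]

def next_announcement(simulation_date, announcements=MSFT_ANNOUNCEMENTS):
    """Return the first announcement on or after simulation_date, or None."""
    i = bisect_left(announcements, simulation_date)
    return announcements[i] if i < len(announcements) else None

print(next_announcement(date(2014, 1, 24)))
print(next_announcement(date(2014, 4, 24)))
print(next_announcement(date(2014, 4, 25)))
print(next_announcement(date(2014, 10, 24)))
```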

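If a company had not yet announced its next earnings on a given simulation date, next_announcement will contain the NumPy value NaT (numpy.datetime64('NaT')), which stands for “Not a Time”. Note that on older NumPy versions, unlike the special floating-point value NaN (“Not a Number”), NaT compares equal to itself. Newer NumPy releases treat NaT like NaN in comparisons, so checking with numpy.isnat() is more robust than testing for equality.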
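Since the way NaT behaves in comparisons has differed between NumPy versions, an explicit check is the safer way to find missing dates. The sketch below shows the kind of array arithmetic a CustomFactor's compute function might do with these values. The input array and the variable names are made up for illustration, and numpy.isnat() requires NumPy 1.13 or later.

```python
import numpy as np

today = np.datetime64("2014-04-20")

# Hypothetical next_announcement values for three assets; the second asset
# has no announced date yet.
next_announcement = np.array(
    ["2014-04-24", "NaT", "2014-07-22"], dtype="datetime64[D]"
)

has_date = ~np.isnat(next_announcement)

# Calendar days until the next announcement, NaN where no date is known.
days_until = np.full(next_announcement.shape, np.nan)
days_until[has_date] = (next_announcement[has_date] - today) / np.timedelta64(1, "D")

print(days_until)
```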

The previous_announcement column behaves similarly,

except that it fills backwards rather than forwards, producing the date of the

most-recent historical announcement as of any given date.

Working with Strings

The Pipeline API also supports string-based expressions. There are two major uses for string data in pipeline:

- String-typed Classifiers can be used to perform grouped normalizations via the same methods (demean(), zscore(), etc.) available on integer-typed classifiers. For example, we can build a Factor that Z-Scores equity returns by country of business by doing:

      from quantopian.pipeline.data import morningstar as mstar
      from quantopian.pipeline.factors import Returns

      country_id = mstar.company_reference.country_id.latest
      daily_returns = Returns(window_length=2)
      zscored = daily_returns.zscore(groupby=country_id)

- String expressions can be converted into Filters via string matching methods like startswith(), endswith(), has_substring(), and matches(). For example, we can build a Filter that accepts only stocks whose exchange name starts with 'OTC' by doing:

      from quantopian.pipeline.data import morningstar as mstar

      exchange_id = mstar.share_class_reference.exchange_id.latest
      is_over_the_counter = exchange_id.startswith('OTC')
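Both uses of string data can be imitated on a single day's values with NumPy, which may help when reasoning about what the pipeline expressions compute. Everything in this sketch is illustrative: the returns, country labels, and exchange codes are invented, and the use of the population standard deviation (ddof=0) for the z-score is an assumption rather than a statement about the Pipeline implementation.

```python
import numpy as np

# Hypothetical one-day returns and country labels for five assets.
returns = np.array([0.01, 0.02, 0.03, -0.02, 0.02])
country = np.array(["USA", "USA", "USA", "CAN", "CAN"])

# Grouped z-score: standardize each asset against others with the same label.
zscored = np.empty_like(returns)
for label in np.unique(country):
    in_group = country == label
    group = returns[in_group]
    # Population standard deviation (ddof=0) is an assumption of this sketch.
    zscored[in_group] = (group - group.mean()) / group.std()

# String matching: True where the (made-up) exchange code starts with 'OTC'.
exchange = np.array(["NYS", "OTCPK", "NAS", "OTCBB", "NYS"])
is_over_the_counter = np.char.startswith(exchange, "OTC")

print(np.round(zscored, 4))
print(is_over_the_counter)
```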

Portfolio Optimization

The ultimate goal of any trading algorithm is to hold the “best” possible portfolio at each point in time, but algorithms vary in their definition of “best”. One algorithm might want the portfolio that maximizes expected returns based on a prediction of future prices. Another algorithm might want a portfolio that’s as close as possible to equal-weighted across a fixed set of longs and shorts.

Many algorithms also want to ensure that their “best” portfolio satisfies some set of constraints. The algorithm that wants to maximize expected returns might also want to place a cap on the gross market value of its portfolio, and the algorithm that wants to maintain an equal-weight long-short portfolio might also want to limit its daily turnover.

One powerful technique for finding a constrained “best” portfolio is to frame the task in the form of a Portfolio Optimization problem.

A portfolio optimization problem is a mathematical problem of the following form:

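Given an objective function, F, and a list of inequality constraints, C_i ≤ h_i, find a vector w of portfolio weights that maximizes F while satisfying each of the constraints.

In mathematical notation:

$$
\begin{aligned}
\underset{w}{\text{maximize}} \quad & F(w) \\
\text{subject to} \quad & C_i(w) \le h_i, \quad i = 1, \dots, n
\end{aligned}
$$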

Example

An algorithm builds a model that predicts expected returns for a list of stocks. The algorithm wants to allocate a limited amount of capital to those stocks in a way that gives it the greatest possible expected return without placing too big a bet on any single stock.

We can express the algorithm’s goal as a mathematical optimization problem as follows:

Let r be a vector of expected returns for N stocks. Let w_max be the maximum allowed weight for any single stock, and let W_max be the maximum total weight for the whole portfolio. Find the portfolio weight vector, w, that solves the problem:

$$
\begin{aligned}
\underset{w}{\text{maximize}} \quad & r \cdot w \\
\text{subject to} \quad & |w_i| \le w_{max}, \quad i = 1, \dots, N \\
& \sum_{i=1}^{N} |w_i| \le W_{max}
\end{aligned}
$$

Python libraries like CVXOPT and scipy.optimize can solve many kinds of optimization problems, but they require the user to represent their problem in a complicated standard form, which often requires deep understanding of the underlying solution method. Modeling libraries like cvxpy provide a higher-level interface, allowing users to describe problems in terms of abstract mathematical expressions, but this still requires that users translate back and forth between financial domain concepts and abstract mathematical concepts, which can be tedious and error-prone.
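To give a feel for the translation work involved, here is a sketch of the example problem written for scipy.optimize.linprog. It makes two simplifying assumptions that are not part of the text above: the portfolio is long-only (every weight is at least zero), and the expected returns and limits are invented numbers.

```python
import numpy as np
from scipy.optimize import linprog

# Hypothetical inputs: expected returns for four stocks and two limits.
r = np.array([0.05, 0.02, 0.08, -0.01])
w_max = 0.4   # largest weight allowed in any single stock
W_max = 1.0   # largest total weight for the whole portfolio

# linprog minimizes c @ w, so maximizing r @ w means passing c = -r.
# The total-weight limit becomes one row of A_ub @ w <= b_ub, and the
# per-stock limit becomes a (lower, upper) bound on each weight.
result = linprog(
    c=-r,
    A_ub=np.ones((1, len(r))),
    b_ub=[W_max],
    bounds=[(0.0, w_max)] * len(r),
)

weights = result.x
print(np.round(weights, 3))
```

Even in this small case, the financial idea "cap total exposure" has to be restated as a matrix row and a right-hand-side vector, and the maximization has to be flipped into a minimization.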

The quantopian.optimize module provides tools for defining and solving

portfolio optimization problems directly in terms of financial domain

concepts. Users interact with the Optimize API by providing an

Objective and a list of

Constraint objects to an API function that runs a

portfolio optimization. The Optimize API hides most of the complex mathematics

of portfolio optimization, allowing users to think in terms of high-level

concepts like “maximize expected returns” and “constrain sector exposure”

instead of abstract matrix products.

Using the Optimize API, we would solve the example optimization above like this:

import quantopian.optimize as opt

# expected_returns, W_max, min_weights, and max_weights are assumed to be
# defined elsewhere in the notebook or algorithm.
objective = opt.MaximizeAlpha(expected_returns)
constraints = [
    opt.MaxGrossExposure(W_max),
    opt.PositionConcentration(min_weights, max_weights),
]
optimal_weights = opt.calculate_optimal_portfolio(objective, constraints)

Running Optimizations

There are three ways to run an optimization with the Optimize API:

- quantopian.optimize.calculate_optimal_portfolio() calculates a portfolio that optimizes an objective while respecting a list of constraints.
- quantopian.algorithm.order_optimal_portfolio() runs the same optimization as calculate_optimal_portfolio() and places the orders necessary to achieve that portfolio.
- quantopian.optimize.run_optimization() performs the same optimization as calculate_optimal_portfolio() but returns an OptimizationResult with additional information.

calculate_optimal_portfolio() is the primary

interface to the Optimize API in research notebooks.

order_optimal_portfolio() is the primary interface

to the Optimize API in trading algorithms.

run_optimization() is a lower-level API. It is

primarily useful for debugging optimizations that are failing or producing

unexpected results.

Objectives

Every portfolio optimization requires an

Objective to tell the optimizer what function

should be maximized by the new portfolio.

There are currently two available objectives: MaximizeAlpha and TargetWeights.

MaximizeAlpha is used by algorithms that try to

predict expected returns. MaximizeAlpha takes a

Series mapping assets to “alpha” values for each asset, and it

finds an array of new portfolio weights that maximizes the sum of each asset’s

weight times its alpha value.

TargetWeights is used by algorithms that

explicitly construct their own target portfolios. It is useful, for example, in

algorithms that identify a list of target assets (e.g. a list of 50 longs and

50 shorts) and simply want to target an equal-weight or equal-risk portfolio of

those assets. TargetWeights takes a

Series mapping assets to target weights for those assets. It

finds an array of portfolio weights that is as close as possible (by Euclidean

Distance) to the targets.
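The two quantities described above can be written out directly for a candidate portfolio. The functions below are a hypothetical sketch of what each objective scores, not the optimizer itself, and the weights, alphas, and targets are made-up values over the same two assets.

```python
import math

def alpha_objective(weights, alphas):
    """Sum of weight * alpha over all assets (what MaximizeAlpha maximizes)."""
    return sum(weights[asset] * alphas[asset] for asset in weights)

def distance_to_targets(weights, targets):
    """Euclidean distance between weights and targets (what TargetWeights minimizes)."""
    return math.sqrt(sum((weights[asset] - targets[asset]) ** 2 for asset in weights))

# Hypothetical candidate portfolio, alphas, and targets over the same two assets.
weights = {"AAPL": 0.6, "MSFT": -0.4}
alphas = {"AAPL": 0.05, "MSFT": -0.02}
targets = {"AAPL": 0.5, "MSFT": -0.5}

print(alpha_objective(weights, alphas))
print(distance_to_targets(weights, targets))
```

An optimizer using the first objective searches for the weights that make the first number as large as the constraints allow; with the second objective it searches for the weights that make the second number as small as the constraints allow.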

Constraints

It’s often necessary to enforce constraints on the results produced by a

portfolio optimizer. When using MaximizeAlpha,

for example, we need to enforce a constraint on the total value of our long and

short positions. If we don’t provide such a constraint, the optimizer will

fail trying to put “infinite” capital into every asset with a nonzero alpha

value.

We tell the optimizer about the constraints on our portfolio by passing a list

of Constraint objects when we run an

optimization. For example, to require the optimizer to produce a portfolio with

gross exposure less than or equal to the current portfolio value, we supply a

MaxGrossExposure constraint:

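import quantopian.optimize as opt
from quantopian.algorithm import order_optimal_portfolio

# calculate_alphas() stands in for the algorithm's own alpha model.
objective = opt.MaximizeAlpha(calculate_alphas())
constraints = [opt.MaxGrossExposure(1.0)]
order_optimal_portfolio(objective, constraints)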
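As a rough illustration of what a limit of 1.0 means, gross exposure can be read as the sum of the absolute values of the position weights, where each weight is a fraction of current portfolio value. The helpers below are hypothetical and only check a candidate set of weights against such a limit. They do not call the Optimize API.

```python
def gross_exposure(weights):
    """Total of absolute position weights, long and short combined."""
    return sum(abs(w) for w in weights.values())

def satisfies_max_gross_exposure(weights, limit, tolerance=1e-9):
    """True if gross exposure is within the limit, allowing for float error."""
    return gross_exposure(weights) <= limit + tolerance

fully_invested = {"AAPL": 0.6, "MSFT": -0.4}   # gross exposure 1.0
over_levered = {"AAPL": 0.8, "MSFT": -0.4}     # gross exposure 1.2

print(satisfies_max_gross_exposure(fully_invested, 1.0))
print(satisfies_max_gross_exposure(over_levered, 1.0))
```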

The most commonly-used constraints are as follows: