Monday, 25 March 2019

Another Step to a Google-free Life

I watch a lot of YouTube videos. So many, in fact, that it has started to annoy me how much of my free time I’m wasting by watching (admittedly very interesting) clips from a broad range of content creators.

Logging out of my Google account helped a little to keep my addiction at bay, as it appears to prevent the YouTube algorithm, which normally greets me with a broad set of perfectly selected videos, from recognizing me. But then again, I use Google to log in to one service or another, so it became annoying to log in and back out again all the time. At one point I decided to delete my YouTube history, which resulted in very bad predictions of what videos I might like. This helped for a short time, but the algorithm returned to its merciless precision after a few days.

Today I decided that it’s time to leave Google behind completely. My Google Mail account was used only for online shopping anyway, so I figured why not use a more privacy-respecting service instead. Self-hosting was not an option for me, as I only have a residential IP address on my Raspberry Pi, and I have also heard that hosting a mail server is a huge pain.

A New Mail Account

So I created an account at the Berlin based service mailbox.org. They offer emails plus some cloud stuff like an office suite, storage etc., although I don’t think I’ll use any of the additional services (oh, they offer an XMPP account as well :P). The service is not free as in free beer as it costs 1 € per month, but that’s a fair price in my opinion. All in all it appears to be a good replacement for all the Google stuff.

As a next step, I went through the long list of all the websites and shops that I have accounts on, scouting for those services that are registered on my Google Mail address. All those mail settings had to be changed to the new account.

Mail Extensions

Bonus tip: Mailbox.org has support for so-called Mail Extensions (or Plus Extensions, I’m not really sure what they are called). This means that you can create a folder in your inbox, let’s say “fsfe”. Now you can change the mail address of your FSFE account to “username+fsfe@mailbox.org”. Mails from the FSFE will still go to your “username@mailbox.org” mail account, but they are automatically sorted into the fsfe folder. This is useful not only to sort mails by sender, but also to find out which of the many services you use messed up and leaked your mail address to those nasty spammers, so you can avoid that service in the future.

This trick also works for Google Mail by the way.
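To make the address scheme concrete, here is a minimal Python sketch that builds and takes apart such tagged addresses. The function names are made up for illustration, and it assumes a single "+" separates the base name from the tag, as in the example above.

```python
from typing import Optional, Tuple


def tagged_address(address: str, tag: str) -> str:
    """Insert '+tag' in front of the '@' of a plain address."""
    local, _, domain = address.rpartition("@")
    return f"{local}+{tag}@{domain}"


def split_tag(address: str) -> Tuple[str, Optional[str]]:
    """Return (base address, tag); the tag is None if there is no '+'."""
    local, _, domain = address.rpartition("@")
    base, plus, tag = local.partition("+")
    return f"{base}@{domain}", (tag if plus else None)


print(tagged_address("username@mailbox.org", "fsfe"))
print(split_tag("username+fsfe@mailbox.org"))
```

If spam ever arrives at a tagged address, the tag returned by split_tag tells you which service the address was originally given to.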

Deleting (most of) the Google Services

The last step logically would be to finally delete my Google account. However, I’m not entirely sure if I really changed all the important services over to the new account, so I’ll keep it for a short period of time (a month or so) to see if any more important mails arrive.

However, I discovered that under the section “Delete Services or Account” you can see a list of all the services which are connected with your Google account. It is possible to partially delete those services, so I went ahead and deleted most of it, except Google Mail.

Additional bonus tip: I use NewPipe on my phone, which is a free libre replacement for the YouTube app. It has a neat feature which lets you import your subscriptions from your YouTube account. That way I can still follow some of the creators, but in a more manual way (as I have to open the app on my phone, which I don’t often do). In my eyes, this is a good compromise.

I’m looking forward to going fully Google-free soon. I de-googled my phone ages ago, but for some reason I still held on to my Google account. This will be sorted out soon though!

De-Googling your Phone?

By the way, if you are looking to de-google your phone, Mike Kuketz has a great series of blog posts about that topic (in German though):

Happy Hacking!

Friday, 22 March 2019

How to create good SSH keys

A couple of years back we wrote a guide on how to create good OpenPGP/GnuPG keys, and now it is time to write a guide on SSH keys for much the same reasons: SSH key algorithms have evolved in past years, and the keys generated by the default OpenSSH settings a few years ago are no longer considered state-of-the-art. This guide is intended both for those completely new to SSH and for those who have already been using it for years and who want to make sure they are following the latest best practices.

Use OpenSSH 7 or later

Related to SSH keys, there have been some relevant changes in versions 5.7, 6.5 and 7.0. The latest version is 7.9. You should be running at least 7.0. Current Debian stable (“Stretch”) shipped version 7.4 and, for example, Ubuntu 16.04 (“Xenial”) shipped 7.2, so nobody should be running any versions older than these on their laptop.
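A quick way to check the installed version is to look at the banner printed by ssh -V. The following Python sketch parses such a banner and compares it against the 7.0 minimum; the function names and the sample banner strings are illustrative assumptions, not output captured from a particular machine.

```python
import re
from typing import Tuple


def openssh_version(banner: str) -> Tuple[int, int]:
    """Extract (major, minor) from a banner such as 'OpenSSH_7.9p1 ...'."""
    match = re.search(r"OpenSSH_(\d+)\.(\d+)", banner)
    if match is None:
        raise ValueError(f"not an OpenSSH banner: {banner!r}")
    return int(match.group(1)), int(match.group(2))


def is_recent_enough(banner: str, minimum: Tuple[int, int] = (7, 0)) -> bool:
    """True if the banner reports at least the given minimum version."""
    return openssh_version(banner) >= minimum


# Hypothetical banners in the format that `ssh -V` prints.
print(is_recent_enough("OpenSSH_7.9p1 Debian-10, OpenSSL 1.1.1b"))
print(is_recent_enough("OpenSSH_6.6.1p1 Ubuntu-2ubuntu2"))
```

On a real system the banner could be obtained with subprocess.run(["ssh", "-V"], capture_output=True, text=True).stderr, since ssh writes its version to standard error.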

Generate Ed25519 keys

With a recent version of OpenSSH, simply run ssh-keygen -t ed25519. This will create a private and public key pair at .ssh/id_ed25519 (and .pub) using the Ed25519 algorithm, which is considered state of the art. Elliptic curve algorithms in general are sleek and efficient, and unlike the other well-known elliptic curve algorithm, ECDSA, Ed25519 does not depend on any suspicious NIST-defined constants. If you encounter a server that is very old and does not support Ed25519 keys, you might need a more traditional RSA key pair. A strong 4k RSA key pair can be generated with ssh-keygen -t rsa -b 4096. Hopefully you won’t ever need to do that.

Running ssh-keygen will prompt for a passphrase. If your laptop is encrypted and well protected you can omit the passphrase and gain some speed and convenience in your SSH commands.

Store the private key securely

Hopefully your laptop is well protected with full disk encryption etc. and you can trust that nobody but yourself has access to your /home/<username>/.ssh directory. Hopefully you also have securely stored encrypted backups of your laptop so that you can recover the .ssh directory if your laptop for any reason is lost or broken.

The SSH keyfiles are stored as armored ASCII, which means that you could even print them on paper and store the printed key in a real vault just to be extra sure you never lose your private key (.ssh/id_ed25519).

The public key is indeed designed to be public

The public key .ssh/id_ed25519.pub, on the other hand, is meant to be public. Here is mine for example:

~/.ssh$ cat id_ed25519.pub

ssh-ed25519 AAAAC3NzaC1lZDI1NTE5AAAAIMdBlrVoDupARk3pd1Q9sDImaGCxEalcFt7QTqBa36kH otto@XPS-13-9370

You can find a lot of public SSH keys, for example on GitHub using the URL https://github.com/<username>.keys. You may also want to check out the excellent GitHub SSH usage docs.
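A public key line like the one above has three space-separated fields: the key type, a base64 blob, and a free-form comment. As a rough sketch (not a replacement for ssh-keygen -l), the following Python code splits such a line, checks that the key type embedded in the blob matches the first field, and derives a SHA256 fingerprint in the style OpenSSH prints. The function name is made up for illustration.

```python
import base64
import hashlib
import struct
from typing import Tuple


def parse_public_key(line: str) -> Tuple[str, str, str]:
    """Return (key type, SHA256 fingerprint, comment) for one .pub line."""
    key_type, blob_b64, *rest = line.split(None, 2)
    comment = rest[0] if rest else ""
    blob = base64.b64decode(blob_b64)
    # The blob starts with a 4-byte big-endian length and the key type name.
    (name_len,) = struct.unpack(">I", blob[:4])
    embedded_type = blob[4:4 + name_len].decode("ascii")
    if embedded_type != key_type:
        raise ValueError("key type does not match the encoded blob")
    digest = hashlib.sha256(blob).digest()
    fingerprint = "SHA256:" + base64.b64encode(digest).decode("ascii").rstrip("=")
    return key_type, fingerprint, comment


line = ("ssh-ed25519 "
        "AAAAC3NzaC1lZDI1NTE5AAAAIMdBlrVoDupARk3pd1Q9sDImaGCxEalcFt7QTqBa36kH "
        "otto@XPS-13-9370")
print(parse_public_key(line))
```

Comparing such a fingerprint with the one shown by the tool you trust is a simple way to confirm that a key copied between machines has not been mangled.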

This public key is the one you will distribute to remote servers. Place it in the .ssh/authorized_keys file on the remote server. The servers you try to access will use the public key to create a challenge, and only your laptop, which holds the matching private key, can solve that challenge and thus prove that your connection to the server is authorized.

On Linux machines, a shorthand to copy your SSH key to a remote server is to run ssh-copy-id remote.example.com. This will ask for a password the first time, but once the key is in place, the SSH key will be used instead and no password is asked for anymore.

Best practices

Once you are familiar with SSH key usage basics, please adopt these policies:

- Disable password authentication altogether on the SSH servers you control. The fewer passwords there are, the fewer there are that can leak or be forgotten. For passwords, less is indeed more. Only use keys for authentication on SSH connections.

- Use a separate key per client you SSH from. So don’t copy the private key from your laptop to another laptop for use in parallel. Each client system should have only one key, so in case a key leaks, you know which client system was compromised. If you stop using your old laptop and start using a new one it is naturally another case and then you can copy the key.

- Avoid chaining SSH connections. Any system that is used for chaining SSH connections incurs a potential man-in-the-middle situation.

- For the same reasons, try to avoid X11 forwarding and agent forwarding. Consider putting these in your .ssh/config:

Host *

    ForwardX11 no

    ForwardAgent no

Using ProxyCommand

You shouldn’t forward SSH agent (ssh -A), but it’s ok to use ProxyCommand or ProxyJump. You can permanently configure it in your .ssh/config like this:

Host backend.example.com

    Hostname 1.2.3.4

    Port 23

    ProxyCommand ssh bastion.example.com -W %h:%p

Stay vigilant

If running ssh remote.example.com yields some error messages, don’t ignore them! SSH has an opportunistic key model, which is convenient, but it also means that if you are confronted with warnings that the connection might be eavesdropped you should really take note and not proceed.

Monday, 18 March 2019

Dataspaces and Paging in L4Re

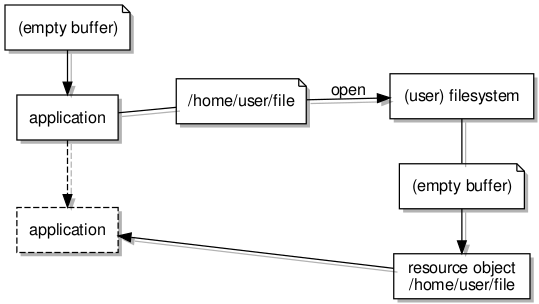

The experiments covered by my recent articles about filesystems and L4Re managed to lead me along another path in the past few weeks. I had defined a mechanism for providing access to files in a filesystem via a programming interface employing interprocess communication within the L4Re system. In doing so, I had defined calls or operations that would read from and write to a file, observing that some kind of “memory-mapped” file support might also be possible. At the time, I had no clear idea of how this would actually be made to work, however.

As can often be the case, once some kind of intellectual challenge emerges, it can become almost impossible to resist the urge to consider it and to formulate some kind of solution. Consequently, I started digging deeper into a number of things: dataspaces, pagers, page faults, and the communication that happens within L4Re via the kernel to support all of these things.

Dataspaces and Memory

Because the L4Re developers have put a lot of effort into making a system where one can compile a fairly portable program and probably expect it to work, matters like the allocation of memory within programs, the use of functions like malloc, and other things we take for granted need no special consideration in the context of describing general development for an L4Re-based system. In principle, if our program wants more memory for its own use, then the use of things like malloc will probably suffice. It is where we have other requirements that some of the L4Re abstractions become interesting.

In my previous efforts to support MIPS-based systems, these other requirements have included the need to access memory with a fixed and known location so that the hardware can be told about it, thus supporting things like framebuffers that retain stored data for presentation on a display device. But perhaps most commonly in a system like L4Re, it is the need to share memory between processes or tasks that causes us to look beyond traditional memory allocation techniques at what L4Re has to offer.

Indeed, the filesystem work so far employs what are known as dataspaces to allow filesystem servers and client applications to exchange larger quantities of information conveniently via shared buffers. First, the client requests a dataspace representing a region of memory. It then associates it with an address so that it may access the memory. Then, the client shares this with the server by sending it a reference to the dataspace (known as a capability) in a message.

The kernel, in propagating the message and the capability, makes the dataspace available to the server so that both the client and the server may access the memory associated with the dataspace and that these accesses will just work without any further effort. At this level of sophistication we can get away with thinking of dataspaces as being blocks of memory that can be plugged into tasks. Upon obtaining access to such a block, reads and writes (or loads and stores) to addresses in the block will ultimately touch real memory locations.

Even in this simple scheme, there will be some address translation going on because each task has its own way of arranging its view of memory: its virtual address space. The virtual memory addresses used by a task may very well be different from the physical memory addresses indicating the actual memory locations involved in accesses.

Such address translation is at the heart of operating systems like those supported by the L4 family of microkernels. But the system will make sure that when a task tries to access a virtual address available to it, the access will be translated to a physical address and supported by some memory location.

Mapping and Paging

With some knowledge of the underlying hardware architecture, we can say that each task will need support from the kernel and the hardware to be able to treat its virtual address space as a way of accessing real memory locations. In my experiments with simple payloads to run on MIPS-based hardware, it was sufficient to define very simple tables that recorded correspondences between virtual and physical addresses. Processes or tasks would access memory addresses, and where the need arose to look up such a virtual address, the table would be consulted and the hardware configured to map the virtual address to a physical address.

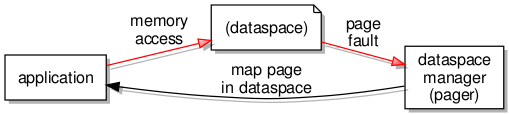

Naturally, proper operating systems go much further than this, and systems built on L4 technologies go as far as to expose the mechanisms for normal programs to interact with. Instead of all decisions about how memory is mapped for each task being taken in the kernel, with the kernel being equipped with all the necessary policy and information, such decisions are delegated to entities known as pagers.

When a task needs an address translated, the kernel pushes the translation activity over to the designated pager for a decision to be made. And the event that demands an address translation is known as a page fault since it occurs when a task accesses a memory page that is not yet supported by a mapping to physical memory. Pagers are therefore present to receive page fault notifications and to respond in a way that causes the kernel to perform the necessary privileged actions to configure the hardware, this being one of the few responsibilities of the kernel.

Treating a dataspace as an abstraction for memory accessed by a task or application, the designated pager for the dataspace acts as a dataspace manager, ensuring that memory accesses within the dataspace can be satisfied. If an access causes a page fault, the pager must act to provide a mapping for the accessed page, leaving the application mostly oblivious to the work going on to present the dataspace and its memory as a continuously present resource.
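The division of labour described above can be pictured with a deliberately small Python model. This is only a toy sketch, not L4Re code: the class name, the 4096-byte page size and the pager callback are all assumptions made for illustration.

```python
from typing import Callable, Dict

PAGE_SIZE = 4096  # assumed page size for this toy model


class ToyTask:
    """A task whose missing mappings are supplied by a pager callback."""

    def __init__(self, pager: Callable[[int], int]) -> None:
        self.mappings: Dict[int, int] = {}  # virtual page -> physical page
        self.pager = pager
        self.faults = 0

    def translate(self, vaddr: int) -> int:
        page, offset = divmod(vaddr, PAGE_SIZE)
        if page not in self.mappings:
            # "Page fault": there is no mapping yet, so the pager decides.
            self.faults += 1
            self.mappings[page] = self.pager(page)
        return self.mappings[page] * PAGE_SIZE + offset


# A hypothetical pager policy: back virtual page n with physical page 100 + n.
task = ToyTask(pager=lambda page: 100 + page)
print(hex(task.translate(0x1234)), task.faults)
print(hex(task.translate(0x1000)), task.faults)
```

The second access touches the same page as the first, so it is translated without involving the pager again, which is the sense in which the application stays mostly oblivious to the paging work.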

An Aside

It is rather interesting to consider the act of delegation in the context of processor architecture. It would seem to be fairly common that the memory management units provided by various architectures feature built-in support for consulting various forms of data structures describing the virtual memory layout of a process or task. So, when a memory access fails, the information about the actual memory address involved can be retrieved from such a predefined structure.

However, the MIPS architecture largely delegates such matters to software: a processor exception is raised when a “bad” virtual address is used, and the job of doing something about it falls immediately to a software routine. So, there seems to be some kind of parallel between processor architecture and operating system architecture, L4 taking a MIPS-like approach of eager delegation to a software component for increased flexibility and functionality.

Messages and Flexpages

So, the high-level view so far is as follows:

- Dataspaces represent regions of virtual memory

- Virtual memory is mapped to physical memory where the data actually resides

- When a virtual memory access cannot be satisfied, a page fault occurs

- Page faults are delivered to pagers (acting as dataspace managers) for resolution

- Pagers make data available and indicate the necessary mapping to satisfy the failing access

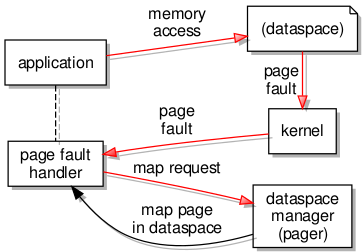

To get to the level of actual implementation, some familiarisation with other concepts is needed. Previously, my efforts have exposed me to the interprocess communications (IPC) central in L4Re as a microkernel-based system. I had even managed to gain some level of understanding around sending references or capabilities between processes or tasks. And it was apparent that this mechanism would be used to support paging.

Unfortunately, the main L4Re documentation does not seem to emphasise the actual message details or protocols involved in these fundamental activities. Instead, the library code is described in reference documentation with some additional explanation. However, some investigation of the code yielded some insights as to the kind of interfaces the existing dataspace implementations must support, and I also tracked down some message sending activities in various components.

When a page fault occurs, the first thing to know about it is the kernel because the fault occurs at the fundamental level of instruction execution, and it is the kernel’s job to deal with such low-level events in the first instance. Notification of the fault is then sent out of the kernel to the page fault handler for the affected task. The page fault handler then contacts the task’s pager to request a resolution to the problem.

In L4Re, this page fault handler is likely to be something called a region mapper (or perhaps a region manager), and so it is not completely surprising that the details of invoking the pager were located in some region mapper code. Putting together both halves of the interaction yielded the following details of the message:

- map: offset, hot spot, flags → flexpage

Here, the offset is the position of the failing memory access relative to the start of the dataspace; the flags describe the nature of the memory to be accessed. The “hot spot” and “flexpage” need slightly more explanation, the latter being an established term in L4 circles, the former being almost arbitrarily chosen and not particularly descriptive.

The term “flexpage” may have its public origins in the “Flexible-Sized Page-Objects” paper whose title describes the term. For our purposes, the significance of the term is that it allows for the consideration of memory pages in a range of sizes instead of merely considering a single system-wide page size. These sizes start at the smallest page size supported by the system (but not necessarily the absolute smallest supported by the hardware, but anyway…) and each successively larger size is double the size preceding it. For example:

- 4096 (2^12) bytes

- 8192 (2^13) bytes

- 16384 (2^14) bytes

- 32768 (2^15) bytes

- 65536 (2^16) bytes

- …

When a page fault occurs, the handler identifies a region of memory where the failing access is occurring. Although it could merely request that memory be made available for a single page (of the smallest size) in which the access is situated, there is the possibility that a larger amount of memory be made available that encompasses this access page. The flexpage involved in a map request represents such a region of memory, having a size not necessarily decided in advance, being made available to the affected task.
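The size progression and the idea of a larger region encompassing the access page can be sketched in Python as follows. The function names are made up, and the sketch assumes that a flexpage of a given size starts at an address that is a multiple of that size, which is not spelled out above.

```python
from typing import List, Tuple


def flexpage_sizes(count: int, smallest_shift: int = 12) -> List[int]:
    """Sizes of successive flexpages, each double the one before it."""
    return [1 << (smallest_shift + i) for i in range(count)]


def largest_flexpage(addr: int, region_start: int, region_end: int,
                     smallest_shift: int = 12) -> Tuple[int, int]:
    """Return (base, shift) of the largest size-aligned flexpage that
    contains addr and lies inside [region_start, region_end)."""
    shift = smallest_shift
    base = addr & ~((1 << shift) - 1)
    if base < region_start or base + (1 << shift) > region_end:
        raise ValueError("address is not inside the region")
    while True:
        bigger = shift + 1
        bigger_base = addr & ~((1 << bigger) - 1)
        if bigger_base < region_start or bigger_base + (1 << bigger) > region_end:
            return base, shift
        base, shift = bigger_base, bigger


print(flexpage_sizes(5))
print(largest_flexpage(0x3800, 0x0, 0x4000))
```

For an access at 0x3800 in a region covering 0x0000 to 0x3fff, the sketch settles on a flexpage of four 4096-byte pages starting at address 0.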

This brings us to the significance of the “hot spot” and some investigation into how the page fault handler and pager interact. I must admit that I find various educational materials to be a bit vague on this matter, at least with regard to explicitly describing the appropriate behaviour. Here, the flexpage paper was helpful in providing slightly different explanations, albeit employing the term “fraction” instead of “hot spot”.

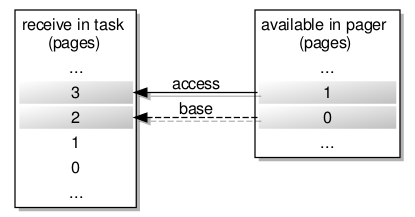

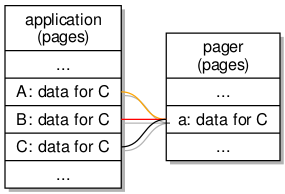

Since the map request needs to indicate the constraints applying to the region in which the failing access occurs, without demanding a particular size of region and yet still providing enough useful information to the pager for the resulting flexpage to be useful, an efficient way is needed of describing the memory landscape in the affected task. This is apparently where the “hot spot” comes in. Consider a failing access in page #3 of a memory region in a task, with the memory available in the pager to satisfy the request being limited to two pages:

Here, the “hot spot” would reference page #3, and this information would be received by the pager. The significance of the “hot spot” appears to be the location of the failing access within a flexpage, and if the pager could provide it then a flexpage of four system pages would map precisely to the largest flexpage expected by the handler for the task.

However, with only two system pages to spare, the pager can only send a flexpage consisting of those two pages, the “hot spot” being localised in page #1 of the flexpage to be sent, and the base of this flexpage being the base of page #0. Fortunately, the handler is smart enough to fit this smaller flexpage onto the “receive window” by using the original “hot spot” information, mapping page #2 in the receive window to page #0 of the received flexpage and thus mapping the access page #3 to page #1 of that flexpage.
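The fitting step in this example can be expressed as a small calculation. The Python sketch below reflects one reading of the behaviour described here, not the actual kernel or region mapper code; the function name, the addresses and the assumption of size-aligned flexpages are all illustrative.

```python
def place_in_window(window_base: int, window_shift: int,
                    hot_spot: int, sent_shift: int) -> int:
    """Return the address in the receive window where a smaller (or equal)
    flexpage of size 2**sent_shift is placed, given the hot spot offset."""
    if sent_shift > window_shift:
        raise ValueError("this sketch only covers flexpages that fit the window")
    window_size = 1 << window_shift
    sent_size = 1 << sent_shift
    # Keep only the part of the hot spot that lies within the window...
    offset = hot_spot & (window_size - 1)
    # ...then round it down to a multiple of the size actually sent.
    return window_base + (offset & ~(sent_size - 1))


PAGE = 4096
window_base = 0x10000          # hypothetical base of a four-page window
hot_spot = 3 * PAGE            # the failing access is in page #3
target = place_in_window(window_base, 14, hot_spot, 13)
print((target - window_base) // PAGE)  # page of the window that receives page #0
```

With a four-page window and a two-page flexpage, the flexpage lands on page #2 of the window, so the access in page #3 falls into page #1 of the flexpage, as in the example.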

So, the following seems to be considered and thus defined by the page fault handler:

- The largest flexpage that could be used to satisfy the failing access.

- The base of this flexpage.

- The page within this flexpage where the access occurs: the “hot spot”.

- The offset within the broader dataspace of the failing access, it indicating the data that would be expected in this page.

(Given this phrasing of the criteria, it becomes apparent that “flexpage offset” might be a better term than “hot spot”.)

With these things transferred to the pager using a map request, the pager’s considerations are as follows:

- How flexpages of different sizes may fit within the memory available to satisfy the request.

- The base of the most appropriate flexpage, where this might be the largest that fits within the available memory.

- The population of the available memory with data from the dataspace.

To respond to the request, the pager sends a special flexpage item in its response message. Consequently, this flexpage is mapped into the task’s address space, and the execution of the task may resume with the missing data now available.

Practicalities and Pitfalls

If the dataspace being provided by a pager were merely a contiguous region of memory containing the data, there would probably be little else to say on the matter, but in the above I hint at some other applications. In my example, the pager only uses a certain amount of memory with which it responds to map requests. Evidently, in providing a dataspace representing a larger region, the data would have to be brought in from elsewhere, which raises some other issues.

Firstly, if data is to be copied into the limited region of memory available for satisfying map requests, then the appropriate portion of the data needs to be selected. This is mostly a matter of identifying how the available memory pages correspond to the data, then copying the data into the pages so that the accessed location ultimately provides the expected data. It may also be the case that the amount of data available does not fill the available memory pages; this should cause the rest of those pages to be filled with zeros so that data cannot leak between map requests.

Secondly, if the available memory pages are to be used to satisfy the current map request, then what happens when we re-use them in each new map request? It turns out that the mappings made for previous requests remain active! So if a task traverses a sequence of pages, and if each successive page encountered in that traversal causes a page fault, then it will seem that new data is being made available in each of those pages. But if that task inspects the earlier pages, it will find that the newest data is exposed through those pages, too, banishing the data that we might have expected.

Of course, what is happening is that all of the mapped pages in the task’s dataspace now refer to the same collection of pages in the pager, these being dedicated to satisfying the latest map request. And so, they will all reflect the contents of those available memory pages as they currently are after this latest map request.

One solution to this problem is to try and make the task forget the mappings for pages it has visited previously. I wondered if this could be done automatically, by sending a flexpage from the pager with a flag set to tell the kernel to invalidate prior mappings to the pager’s memory. After a time looking at the code, I ended up asking on the l4-hackers mailing list and getting a very helpful response that was exactly what I had been looking for!

There is, in fact, a special way of telling the kernel to “unmap” memory used by other tasks (l4_task_unmap), and it is this operation that I ended up using to invalidate the mappings previously sent to the task. Thus the task, upon backtracking to earlier pages, finds that the mappings from virtual addresses to the physical memory holding the latest data are absent once again, and page faults are needed to restore the data in those pages. The result is a form of multiplexing access to a resource via a limited region of memory.
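The multiplexing effect can be imitated with a toy model in Python. Nothing here uses the L4Re API: the class is hypothetical, the "unmap" is just a variable being reset as a stand-in for what l4_task_unmap achieves, and the four-byte page size only keeps the example readable.

```python
class ToyPager:
    """Serves a larger backing store through a single reusable page buffer."""

    def __init__(self, backing: bytes, page_size: int = 4) -> None:
        self.backing = backing
        self.page_size = page_size
        self.buffer = bytearray(page_size)  # the only memory the pager has
        self.mapped_page = None             # page currently visible to the task
        self.faults = 0

    def _fault(self, page: int) -> None:
        self.faults += 1
        # Stand-in for unmapping: forget the mapping for the previous page.
        self.mapped_page = None
        start = page * self.page_size
        chunk = self.backing[start:start + self.page_size]
        # Zero-fill the remainder so stale data cannot leak between requests.
        self.buffer[:] = chunk.ljust(self.page_size, b"\0")
        self.mapped_page = page

    def read(self, page: int) -> bytes:
        if self.mapped_page != page:
            self._fault(page)
        return bytes(self.buffer)


pager = ToyPager(b"AAAABBBBCC")
print(pager.read(0), pager.read(1), pager.read(0), pager.faults)
```

Because the earlier mapping is forgotten whenever the buffer is re-used, going back to page 0 triggers a fresh fault and the expected data reappears instead of the contents of page 1.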

Applications of Flexible Paging

Given the context of my investigations, it goes almost without saying that the origin of data in such a dataspace could be a file in a filesystem, but it could equally be anything that exposes data in some kind of backing store. And with this backing store not necessarily being an area of random access memory (RAM), we enter the realm of a more restrictive definition of paging where processes running in a system can themselves be partially resident in RAM and partially resident in some other kind of storage, with the latter portions being converted to the former by being fetched from wherever they reside, depending on the demands made on the system at any given point in time.

One observation worth making is that a dataspace does not need to be a dedicated component in the system in that it is not a separate and special kind of entity. Anything that is able to respond to the messages understood by dataspaces – the paging “protocol” – can provide dataspaces. A filesystem object can therefore act as a dataspace, exposing itself in a region of memory and responding to map requests that involve populating that region from the filesystem storage.

It is also worth mentioning that dataspaces and flexpages exist at different levels of abstraction. Dataspaces can be considered as control mechanisms for accessing regions of virtual memory, and the Fiasco.OC kernel does not appear to employ the term at all. Meanwhile, flexpages are abstractions for memory pages existing within or even independently of dataspaces. (If you wish, think of the frame of Banksy’s work “Love is in the Bin” as a dataspace, with the shredded pieces being flexpages that are mapped in and out.)

One can envisage more exotic forms of dataspace. Consider an image whose pixels need to be computed, like a ray-traced image, for instance. If it exposed those pixels as a dataspace, then a task reading from pages associated with that dataspace might cause computations to be initiated for an area of the image, with the task being suspended until those computations are performed and then being resumed with the pixel data ready to read, with all of this happening largely transparently.

I started this exercise out of somewhat idle curiosity, but it now makes me wonder whether I might introduce memory-mapped access to filesystem objects and then re-implement operations like reading and writing using this particular mechanism. Not being familiar with how systems like GNU/Linux provide these operations, I can only speculate as to whether similar decisions have been taken elsewhere.

But certainly, this exercise has been informative, even if certain aspects of it were frustrating. I hope that this account of my investigations proves useful to anyone else wondering about microkernel-based systems and L4Re in particular, especially if they too wish there were more discussion, reflection and collaboration on the design and implementation of software for these kinds of systems.

Sunday, 10 March 2019

Generate a random root password aka Ansible Password Plugin

I was suspicious of a cron entry on a new Ubuntu server cloud VM, so I ended up looking at the logs.

Authentication token is no longer valid; new one required

After a quick internet search,

# chage -l root

Last password change : password must be changed

Password expires : password must be changed

Password inactive : password must be changed

Account expires : never

Minimum number of days between password change : 0

Maximum number of days between password change : 99999

Number of days of warning before password expires : 7

Because the password on the root account must be changed, the cron entry does not run as it should.

This ephemeral image does not need to have a persistent known password, as the notes suggest, and it doesn’t! Even so, we should change the root password when creating the VM.

Ansible

Ansible has a password plugin that we can use with lookup.

TLDR; here is the task:

- name: Generate Random Password

  user:

    name: root

    password: "{{ lookup('password','/dev/null encrypt=sha256_crypt length=32') }}"

After ansible-playbook runs:

# chage -l root

Last password change : Mar 10, 2019

Password expires : never

Password inactive : never

Account expires : never

Minimum number of days between password change : 0

Maximum number of days between password change : 99999

Number of days of warning before password expires : 7

and the cron entry now runs as it should.

Password Plugin

Let me explain how the password plugin works.

The lookup needs at least two (2) arguments: the plugin name and a file in which to store the output. Here we use /dev/null so that the password is not persisted to a file.

To begin with, a test Ansible playbook:

- hosts: localhost

  gather_facts: False

  connection: local

  tasks:

    - debug:

        msg: "{{ lookup('password', '/dev/null') }}"

      with_sequence: count=5

Output:

ok: [localhost] => (item=1) => {

"msg": "dQaVE0XwWti,7HMUgq::"

}

ok: [localhost] => (item=2) => {

"msg": "aT3zqg.KjLwW89MrAApx"

}

ok: [localhost] => (item=3) => {

"msg": "4LBNn:fVw5GhXDWh6TnJ"

}

ok: [localhost] => (item=4) => {

"msg": "v273Hbox1rkQ3gx3Xi2G"

}

ok: [localhost] => (item=5) => {

"msg": "NlwzHoLj8S.Y8oUhcMv,"

}

Length

In the password plugin we can also use the length variable:

msg: "{{ lookup('password', '/dev/null length=32') }}"

output:

ok: [localhost] => (item=1) => {

"msg": "4.PEb6ycosnyL.SN7jinPM:AC9w2iN_q"

}

ok: [localhost] => (item=2) => {

"msg": "s8L6ZU_Yzuu5yOk,ISM28npot4.KwQrE"

}

ok: [localhost] => (item=3) => {

"msg": "L9QvLyNTvpB6oQmcF8WVFy.7jE4Q1K-W"

}

ok: [localhost] => (item=4) => {

"msg": "6DMH8KqIL:kx0ngFe8:ri0lTK4hf,SWS"

}

ok: [localhost] => (item=5) => {

"msg": "ByW11i_66K_0mFJVB37Mq2,.fBflepP9"

}

Characters

We can restrict the characters to specific Python string constants:

- ascii_letters (ascii_lowercase and ascii_uppercase)

- ascii_lowercase

- ascii_uppercase

- digits

- hexdigits

- letters (lowercase and uppercase)

- lowercase

- octdigits

- punctuation

- printable (digits, letters, punctuation and whitespace)

- uppercase

- whitespace
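The names in this list are constants from Python’s string module (letters, lowercase and uppercase only exist in Python 2). As a rough analogue of what the lookup produces, and not Ansible’s own implementation, the following sketch draws random characters from such a pool. The default pool and the default length of 20 are assumptions inferred from the sample output above.

```python
import secrets
import string

# Assumed default pool: letters, digits and a few punctuation marks.
DEFAULT_CHARS = string.ascii_letters + string.digits + ".,:-_"


def random_password(length: int = 20, chars: str = DEFAULT_CHARS) -> str:
    """Pick `length` characters independently at random from `chars`."""
    return "".join(secrets.choice(chars) for _ in range(length))


print(random_password())
print(random_password(32, string.ascii_lowercase))
```

The second call corresponds to asking for length=32 with only ascii_lowercase characters.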

eg.

msg: "{{ lookup('password', '/dev/null length=32 chars=ascii_lowercase') }}"

ok: [localhost] => (item=1) => {

"msg": "vwogvnpemtdobjetgbintcizjjgdyinm"

}

ok: [localhost] => (item=2) => {

"msg": "pjniysksnqlriqekqbstjihzgetyshmp"

}

ok: [localhost] => (item=3) => {

"msg": "gmeoeqncdhllsguorownqbynbvdusvtw"

}

ok: [localhost] => (item=4) => {

"msg": "xjluqbewjempjykoswypqlnvtywckrfx"

}

ok: [localhost] => (item=5) => {

"msg": "pijnjfcpjoldfuxhmyopbmgdmgdulkai"

}

Encrypt

We can also choose the hash scheme applied to the password. Ansible uses passlib, so the active Unix crypt hash algorithms are:

- passlib.hash.bcrypt - BCrypt

- passlib.hash.sha256_crypt - SHA-256 Crypt

- passlib.hash.sha512_crypt - SHA-512 Crypt

e.g.

msg: "{{ lookup('password', '/dev/null length=32 chars=ascii_lowercase encrypt=sha512_crypt') }}"

ok: [localhost] => (item=1) => {

"msg": "$6$BR96lZqN$jy.CRVTJaubOo6QISUJ9tQdYa6P6tdmgRi1/NQKPxwX9/Plp.7qETuHEhIBTZDTxuFqcNfZKtotW5q4H0BPeN."

}

ok: [localhost] => (item=2) => {

"msg": "$6$ESf5xnWJ$cRyOuenCDovIp02W0eaBmmFpqLGGfz/K2jd1FOSVkY.Lsuo8Gz8oVGcEcDlUGWm5W/CIKzhS43xdm5pfWyCA4."

}

ok: [localhost] => (item=3) => {

"msg": "$6$pS08v7j3$M4mMPkTjSwElhpY1bkVL727BuMdhyl4IdkGM7Mq10jRxtCSrNlT4cAU3wHRVxmS7ZwZI14UwhEB6LzfOL6pM4/"

}

ok: [localhost] => (item=4) => {

"msg": "$6$m17q/zmR$JdogpVxY6MEV7nMKRot069YyYZN6g8GLqIbAE1cRLLkdDT3Qf.PImkgaZXDqm.2odmLN8R2ZMYEf0vzgt9PMP1"

}

ok: [localhost] => (item=5) => {

"msg": "$6$tafW6KuH$XOBJ6b8ORGRmRXHB.pfMkue56S/9NWvrY26s..fTaMzcxTbR6uQW1yuv2Uy1DhlOzrEyfFwvCQEhaK6MrFFye."

}
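To make the length and chars options more tangible, here is a minimal Python sketch of the same idea. It is not Ansible's implementation: the function name random_password is made up for illustration, and the defaults (20 characters drawn from ascii_letters, digits and the punctuation .,:-_) are an assumption that matches the sample output above.

```python
import secrets
import string

# Assumed default character set: letters, digits and a little punctuation.
DEFAULT_CHARS = string.ascii_letters + string.digits + ".,:-_"


def random_password(length: int = 20, chars: str = DEFAULT_CHARS) -> str:
    """Return a random password of the given length drawn from chars."""
    # secrets.choice uses the operating system's CSPRNG.
    return "".join(secrets.choice(chars) for _ in range(length))


# Roughly what lookup('password', '/dev/null') produces.
default_pw = random_password()

# Roughly what 'length=32 chars=ascii_lowercase' produces.
lower_pw = random_password(length=32, chars=string.ascii_lowercase)

print(default_pw)
print(lower_pw)
```

Nothing is written to disk here, which mirrors the /dev/null trick: every call yields a fresh password.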

A look at Matrix.org’s OLM | MEGOLM encryption protocol

Everyone who knows and uses XMPP is probably aware of a new player in the game. Matrix.org is often recommended as a young, rising alternative to the aging protocol behind the Jabber ecosystem. However, the founders do not see their product as a direct competitor to XMPP, as their approach to the problem of message exchange is quite different.

An open network for secure, decentralized communication.

matrix.org

During his talk at the FOSDEM in Brussels, matrix.org founder Matthew Hodgson roughly compared the concept of matrix to how git works. Instead of passing single messages between devices and servers, matrix is all about synchronization of a shared state. A chat room can be seen as a repository, which is shared between all servers of the participants. As a consequence communication in a chat room can go on, even when the server on which the room was created goes down, as the room simultaneously exists on all the other servers. Once the failed server comes back online, it synchronizes its state with the others and retrieves missed messages.

Olm, Megolm – What’s the deal?

Matrix introduced two different crypto protocols for end-to-end encryption. One is named Olm, which is used in one-to-one chats between two chat partners (this is not quite correct, see Updates for clarifying remarks). It can very well be compared to OMEMO, as it too is an adaptation of the Signal Protocol by OpenWhisperSystems. However, due to some differences in the implementation, Olm is not compatible with OMEMO, although it shares the same cryptographic properties.

The other protocol goes by the name of Megolm and is used in group chats. Conceptually it deviates quite a bit from Olm and OMEMO, as it contains some modifications that make it more suitable for the multi-device use-case. However, those modifications alter its cryptographic properties.

Comparing Cryptographic Building Blocks

| Protocol | Olm | OMEMO (Signal) |
| --- | --- | --- |
| IdentityKey | Curve25519 | X25519 |
| FingerprintKey⁽¹⁾ | Ed25519 | none |
| PreKeys | Curve25519 | X25519 |
| SignedPreKeys⁽²⁾ | none | X25519 |
| Key Exchange Algorithm⁽³⁾ | Triple Diffie-Hellman (3DH) | Extended Triple Diffie-Hellman (X3DH) |
| Ratcheting Algorithm | Double Ratchet | Double Ratchet |

1. Signal uses a Curve X25519 IdentityKey, which is capable of both encrypting and creating signatures using the XEdDSA signature scheme. Therefore no separate FingerprintKey is needed. Instead the fingerprint is derived from the IdentityKey. This is mostly a cosmetic difference, as one less key pair is required.

2. Olm does not distinguish between the concepts of signed and unsigned PreKeys like the Signal protocol does. Instead it only uses one type of PreKey. However, those may be signed with the FingerprintKey upon upload to the server.

3. OMEMO includes the SignedPreKey, as well as an unsigned PreKey, in the handshake, while Olm only uses one PreKey. As a consequence, if the sender’s Olm IdentityKey gets compromised at some point, the very first few messages that are sent could possibly be decrypted.

In the end Olm and OMEMO are pretty comparable, apart from some simplifications made in the Olm protocol. Those only marginally affect its security though (as far as I can tell as a layman).

Megolm

The similarities between OMEMO and Matrix’ encryption solution end when it comes to group chat encryption.

OMEMO does not treat chats with more than two parties any differently from one-to-one chats. The sender simply has to manage a lot more keys, and the number of required trust decisions grows by a factor roughly equal to the number of chat participants.

Yep, this is a mess but luckily XMPP isn’t a very popular chat protocol so there are no large encrypted group chats ;P

So how does Matrix solve the issue?

When a user joins a group chat, they generate a session for that chat. This session consists of an Ed25519 SigningKey and a single ratchet which gets initialized randomly.

The public part of the signing key and the state of the ratchet are then shared with each participant of the group chat. This is done via an encrypted channel (using Olm encryption). Note that this session is also shared between the devices of the user. Contrary to Olm, where every device has its own Olm session, there is only one Megolm session per user per group chat.

Whenever the user sends a message, the encryption key is generated by forwarding the ratchet and deriving a symmetric encryption key for the message from the ratchet’s output. Signing is done using the SigningKey.

Recipients of the message can decrypt it by forwarding their copy of the sender’s ratchet the same way the sender did, in order to retrieve the same encryption key. The signature is verified using the public SigningKey of the sender.

There are some pros and cons to this approach, which I briefly want to address.

First of all, you may find that this protocol is way less elegant than Olm/OMEMO/Signal. It poses some obvious limitations and security issues. Most importantly, if an attacker gets access to the ratchet state of a user, they can decrypt any message that is sent from that point in time on. As no new randomness is introduced, unlike in the other protocols, the attacker can simply forward the ratchet and thereby generate any decryption keys they need. The protocol defends against this by requiring the user to generate a new random session whenever a user joins or leaves the room and/or a certain number of messages has been sent, which limits the window of possibly compromised messages to a smaller number. Still, this is equivalent to having a single key that decrypts multiple messages at once.
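The ratchet behaviour described above can be sketched in a few lines of Python. This is a deliberately simplified, hypothetical hash ratchet, not the real Megolm construction (which keeps a larger multi-part state and derives keys differently); the class name HashRatchet and the labels b"message-key" and b"advance" are made up for illustration.

```python
import hashlib
import hmac
import os


class HashRatchet:
    """Toy one-way ratchet: each step derives a message key, then advances."""

    def __init__(self, state: bytes, index: int = 0):
        self.state = state
        self.index = index

    def next_key(self) -> bytes:
        # Derive the key for the current message from the current state.
        key = hmac.new(self.state, b"message-key", hashlib.sha256).digest()
        # Advance the state; no fresh randomness enters at this point.
        self.state = hmac.new(self.state, b"advance", hashlib.sha256).digest()
        self.index += 1
        return key


# The sender initializes the ratchet randomly and shares the state
# with each recipient (in Matrix this happens over Olm).
seed = os.urandom(32)
sender = HashRatchet(seed)
recipient = HashRatchet(seed)

sender_keys = [sender.next_key() for _ in range(3)]
recipient_keys = [recipient.next_key() for _ in range(3)]

# Anyone who obtains the current state can follow the ratchet forward.
leaked = HashRatchet(sender.state, sender.index)
attacker_follows = leaked.next_key() == sender.next_key()

print(sender_keys == recipient_keys, attacker_follows)
```

Because every step is deterministic, recipients stay in sync without further key exchange, and for the same reason a leaked state exposes all later keys until a new session is generated.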

The Megolm specification lists a number of other caveats.

On the pro side of things, trust management has been simplified as the user basically just has to decide whether or not to trust each group member instead of each participating device – reducing the complexity from a multiple of n down to just n. Also, since there is no new randomness being introduced during ratchet forwarding, messages can be decrypted multiple times. As an effect devices do not need to store the decrypted messages. Knowledge of the session state(s) is sufficient to retrieve the message contents over and over again.

By sharing older session states with one’s own devices it is also possible to read older messages on new devices. This is a feature that many users are missing badly from OMEMO.

On the other hand, if you really need true future secrecy on a message-by-message basis and you cannot risk that an attacker may get access to more than one message at a time, you are probably better off swallowing the bitter pill, going through the fingerprint mess and sticking to normal Olm/OMEMO (see Updates for remarks on this statement).

Note: End-to-end encryption does not really make sense in big, especially public chat rooms, since an attacker could just simply join the room in order to get access to ongoing communication. Thanks to Florian Schmaus for pointing that out.

I hope I could give a good overview of the different encryption mechanisms in XMPP and Matrix. Hopefully I did not make any errors, but if you find mistakes, please let me know, so I can correct them asap.

Happy Hacking!

Sources

- Olm – A Cryptographic Ratchet

- Megolm Group Ratchet

- Implementing End-to-End Encryption in Matrix clients

- OMEMO Encryption

- The Double Ratchet Algorithm

- Extended Triple Diffie-Hellman Key Exchange

Updates:

Thanks to Matthew Hodgson for pointing out that Olm/OMEMO is also effectively using a symmetric ratchet when multiple consecutive messages are sent without the receiving device sending an answer. This can lead to loss of future secrecy as discussed in the OMEMO protocol audit.

Also thanks to Hubert Chathi for noting that Megolm is also used in one-to-one chats, as Matrix doesn’t have the same distinction between group and single chats. He also pointed out that the security level of Megolm (the criteria for regenerating the session) can be configured on a per-chat basis.

Tuesday, 05 March 2019

Copyright Reform

The European Parliament is preparing to vote on the final text of the "Copyright" directive. This directive contains two articles which, if approved, will dramatically change the character of the Internet. For the worse. They will change not only the way people use it as a platform for expressing themselves, but will also negatively affect the very creators the directive is supposedly trying to protect.

Below is a brief summary of the disastrous consequences that will become reality if the European Parliament votes in favour of the directive.

Article 13: Content filters (Upload filters)

- Commercial sites and applications where users post content must make every effort to have purchased, in advance, the necessary licenses for anything their users might post. In practice this means the entirety of human creation that is subject to copyright restrictions. Obviously this is impossible to implement, both technically and financially. Especially for small platforms and services.

- In addition, most sites will have to do everything possible to prevent their users from posting content for which they do not have the creator's permission. They will have no choice but to implement content filters, which are expensive and ineffective.

- If a court rules that the filters are not strict enough, the sites will be legally liable for the infringements, as if they had committed them themselves. This legal threat will lead many services to over-apply the filters in order to stay safe from prosecution, which threatens freedom of speech on the Internet.

- In many cases the content we post online will no longer be immediately available, as it will first have to be approved by the above filters.

- Many of the services we use today will stop operating within the EU, for fear of prosecution for violating the directive or for not enforcing it strictly enough.

- Content filters will hurt creators as well. Covers, reviews, memes and other categories of content that build on copyrighted material will be blocked by the filters.

- You will be guilty until proven innocent. Even if the content belongs to you, you will bear the burden of proof when the filters wrongly flag it as a copyright infringement.

- The big players in the market will benefit, as they are the only ones who can afford to implement the directive.

- Ultimately, fewer new and innovative services will start within the EU, because the start-up cost will be unbearable.

Article 11: Link tax

- Reproducing news with more than a single word, or more than a very short description, will require a license. This will most likely also cover the news snippets that are so widely used. There is considerable ambiguity as to what "short description" means, but there is a risk that using snippets with links becomes legally dangerous.

- There are no exceptions. The directive covers even individual services, small or non-profit companies, and blogs.

- Many believe that the directive will generate revenue for news organizations from large services (e.g. Google News) that use article snippets. In practice, however, it will hurt the small players (e.g. blogs), and the large services will most likely suspend their operation in the EU, depriving news sites of even the traffic they get from links to their articles.

- Ultimately, applying the directive will restrict the flow of information and news.

What you can do

Inform the MEPs. Many of them will vote in favour either out of ignorance or because they are poorly informed. Visit saveyourinternet, where the relevant contact details are available. MEPs who have supported the directive in the past are marked in red.

Help them become better informed about the dangers of the directive. If it helps you in your communication, you may use as many excerpts from this text as you wish, or even the entire text.

Sunday, 03 March 2019

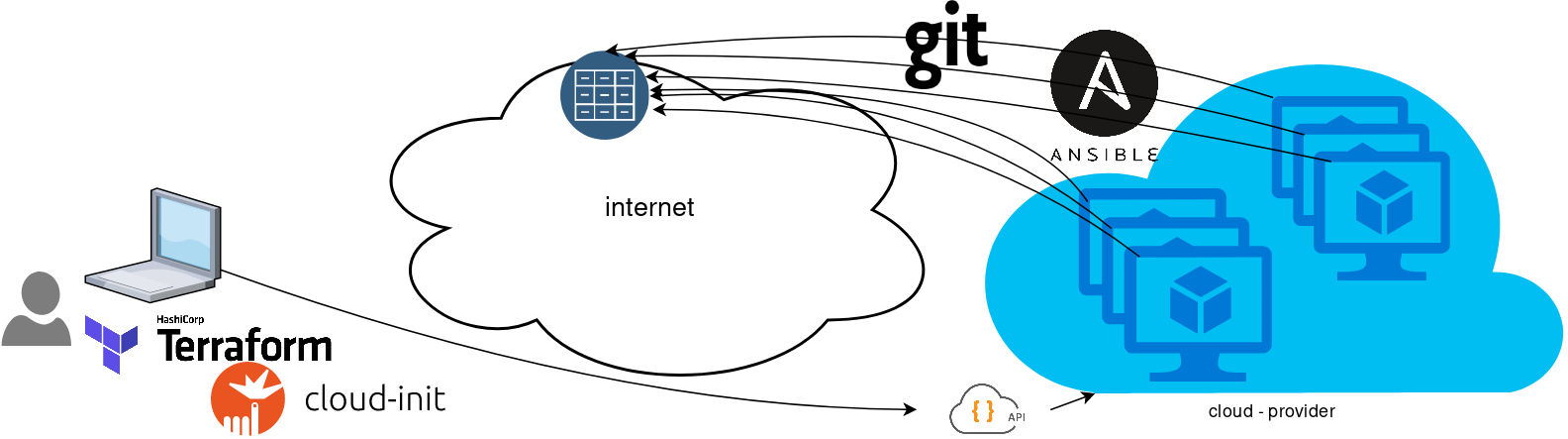

Scaling automation with ansible-pull

Ansible is a wonderful piece of software for automatically configuring your systems. The default mode of using Ansible is the Push Model.

That means that from your box, and using only ssh + python, you can configure your fleet of machines.

Ansible is imperative. You define tasks in your playbooks and roles, and they run serially on the remote machines. Each task first checks whether it needs to run and otherwise skips the action. And although we can use conditionals to skip actions, tasks will still perform all their checks. For that reason Ansible seems slow compared to other configuration tools. Ansible runs the tasks serially, but in pseudo-parallel mode against the remote servers, to increase the speed. But sometimes you need gather_facts, and that costs execution time. There are solutions to cache the Ansible facts in Redis (an in-memory key:value db), but even then you need to find a work-around to speed up your deployments.

But there is another way, the Pull Mode!

Useful Reading Materials

To learn more on the subject, you can start by reading these two articles on ansible-pull.

Pull Mode

So here is how it looks:

You will first notice that your Ansible repository is moved from your local machine to an online git repository. For me, this is GitLab. As my git repo is private, I have created a read-only, time-limited Deploy Token.

With that scenario, our (ephemeral - or not) VMs will pull their Ansible configuration from the git repo and run the tasks locally. I usually build my infrastructure with Terraform by HashiCorp and make use of cloud-init to perform the initial configuration.

Cloud-init

The tail of my user-data.yml looks pretty much like this:

...

# Install packages
packages:
  - ansible

# Run ansible-pull
runcmd:
  - ansible-pull -U https://gitlab+deploy-token-XXXXX:YYYYYYYY@gitlab.com/username/myrepo.git

Playbook

You can either create a playbook named with the hostname of the remote server, eg. node1.yml or use the local.yml as the default playbook name.

Here is an example that will also put ansible-pull into a cron entry. This is very useful because it will check for any changes in the git repo every 15 minutes and run ansible again.

- hosts: localhost
  tasks:
    - name: Ensure ansible-pull is running every 15 minutes
      cron:
        name: "ansible-pull"
        # */15 means every 15 minutes; a plain 15 would run once per hour, at minute 15
        minute: "*/15"
        # cron runs jobs with /bin/sh, so use the portable redirect rather than &>
        job: "ansible-pull -U https://gitlab+deploy-token-XXXXX:YYYYYYYY@gitlab.com/username/myrepo.git > /dev/null 2>&1"

    - name: Create a custom local vimrc file
      lineinfile:
        path: /etc/vim/vimrc.local
        line: 'set modeline'
        create: yes

    - name: Remove "cloud-init" package
      apt:
        name: "cloud-init"
        purge: yes
        state: absent

    - name: Remove useless packages from the cache
      apt:
        autoclean: yes

    - name: Remove dependencies that are no longer required
      apt:
        autoremove: yes

# vim: sts=2 sw=2 ts=2 et
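One detail of the cron entry is worth spelling out: in cron syntax a minute field of 15 matches only minute 15 of each hour, whereas */15 matches every 15th minute. A minimal Python sketch of that matching rule follows; the helper name cron_minute_matches is made up for illustration and it only handles the three simplest forms of the field.

```python
def cron_minute_matches(field: str, minute: int) -> bool:
    """Return True if a cron minute field matches the given minute (0-59).

    Only '*', a plain number and '*/step' are handled in this sketch.
    """
    if field == "*":
        return True
    if field.startswith("*/"):
        return minute % int(field[2:]) == 0
    return minute == int(field)


# Minutes of the hour at which the job would fire.
hourly = [m for m in range(60) if cron_minute_matches("15", m)]
quarter_hourly = [m for m in range(60) if cron_minute_matches("*/15", m)]

print(hourly)          # [15]
print(quarter_hourly)  # [0, 15, 30, 45]
```

So a repository that should be polled every 15 minutes needs the */15 form.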

More Languages to the F-Droid Planet?

You may know about Planet F-Droid, a feed aggregator that aims to collect the blogs of many free Android projects in one place. Currently all of the registered blogs are written in English (as is this post, so if you know someone who might be concerned by the matter below and is not able to understand English, please feel free to translate for them).

Recently someone suggested that we should maybe create additional feeds for blogs in other languages. I’m not sure if there is interest in having support for more languages, so that’s why I want to ask you.

If you feel that Planet F-Droid should offer additional feeds for non-English blogs, please vote by thumbs up/down in the planet’s repository.

Happy Hacking!

Friday, 01 March 2019

Protect freedom on radio devices: raise your voice today!

We are facing an EU regulation which may make it impossible to install a custom piece of software on most radio devices like WiFi routers, smartphones and embedded devices. You can now give feedback on the most problematic part by Monday, 4 March. Please participate – it’s not hard!

The EU Radio Equipment Directive (2014/53/EU) contains one highly dangerous article that will cause many issues if implemented: Article 3(3)(i). It requires hardware manufacturers of most devices sending and receiving radio signals to implement a barrier that disallows installing software which has not been certified by the manufacturer. That means that for installing an alternative operating system on a router, mobile phone or any other radio-capable device, the manufacturer of this device has to assess its conformity.

[R]adio equipment [shall support] certain features in order to ensure that software can only be loaded into the radio equipment where the compliance of the combination of the radio equipment and software has been demonstrated.

Article 3(3)(i) of the Radio Equipment Directive 2014/53/EU

That flips the responsibility of radio conformity by 180°. In the past, you as the one who changed the software on a device were responsible for making sure that you don’t break any applicable regulations like frequency and signal strength. Now, the manufacturers have to prevent you from doing something wrong (or right?). That further takes away freedom to control our technology. More information here by the FSFE.

The European Commission has set up an Expert Group to come up with a list of classes of devices which are supposed to be affected by the said article. Unfortunately, as it seems, the recommendation by this group is to put highly diffuse device categories like “Software Defined Radio” and “Internet of Things” under the scope of this regulation.

Get active today

But there is something you can do! The European Commission has officially opened a feedback period. Everyone, including individuals, companies and organisations, can provide statements on their proposed plans. All you need to participate is an EU Login account, and you can hide your name from the public list of received feedback. A summary, the impact assessment, already received feedback, and the actual feedback form are available here.

To help you word your feedback, here’s a list of some of the most important disadvantages for user freedom I see (there is a more detailed list by the FSFE):

- Free Software: To control technology, you have to be able to control the software. This is only possible with Free and Open Source Software. So if you want to have a transparent and trustworthy device, you need to make the software running on it Free Software. But any device affected by Article 3(3)(i) will only allow the installation of software authorised by the manufacturer. It is unlikely that a manufacturer will certify all the available software for your device which suits your needs. Having these gatekeepers with their particular interests will make using Free Software on radio devices hard.

- Security: Radio equipment like smartphones, routers, or smart home devices are highly sensitive parts of our lives. Unfortunately, many manufacturers sacrifice security for lower costs. For many devices there is better software which protects data and still offers equal or even better functionality. If such manufacturers do not even care for security, will they even allow running other (Free and Open Source) software on their products?

- Fair competition: If you don’t like a certain product, you can use another one from a different manufacturer. If you don’t find any device suiting your requirements, you can (help) establish a new competitor that e.g. enables user freedom. But Article 3(3)(i) favours huge enterprises as it forces companies to install software barriers and do certification of additional software. For example, a small or medium-sized manufacturer of wifi routers cannot certify all available Free Software operating systems. Also, companies bundling their own software with third-party hardware will have a really hard time. On the other hand, large companies which don’t want users to use any other software than their own will profit from this threshold.

- Community services: Volunteer initiatives like Freifunk depend on hardware which they can use with their own software for their charity causes. They were able to create innovative solutions with limited resources.

- Sustainability: No updates available any more for your smartphone or router? From a security perspective, there are only two options: Flash another firmware which still receives updates, or throw the whole device away. From an environmental perspective, the first solution is obviously much better. But will manufacturers still certify alternative firmware for devices they want to get rid of? I doubt it…

There will surely be more, so please make your points in your individual feedback. It will send a signal to the European Commission that there are people who care about freedom on radio devices. It’s only a few minutes’ work to avoid legal barriers that will worsen your and others’ lives for years.

Thank you!

Tuesday, 26 February 2019

TIL: Browsers ignore Expires header on reload

This may have been obvious, but I’ve just learned that browsers ignore the Expires header when the user manually reloads the page (as in by pressing F5 or choosing the Reload option).

I’ve run into this when testing how Firefox treats pages which ‘never’ expire. To my surprise, the browser made requests for files it had a fresh copy of in its cache. To see behaviour much more representative of the experience of a returning user, one should select the address bar (Alt+D does the trick) and then press Return to navigate to the current page again. Hitting Reload is more akin, though not exactly the same, to the first visit.

Of course, all of the above applies to the max-age directive of the Cache-Control header as well.
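The distinction can be illustrated with a small Python sketch. It is a simplified model of a browser cache, not how any particular browser is implemented: the function names freshness_lifetime and needs_request are made up, only max-age and Expires are considered, and a manual reload is modelled as always going to the network.

```python
import re
from datetime import datetime, timedelta, timezone
from email.utils import format_datetime, parsedate_to_datetime


def freshness_lifetime(headers: dict) -> timedelta:
    """How long a stored response stays fresh (max-age wins over Expires)."""
    match = re.search(r"max-age=(\d+)", headers.get("Cache-Control", ""))
    if match:
        return timedelta(seconds=int(match.group(1)))
    if "Expires" in headers and "Date" in headers:
        expires = parsedate_to_datetime(headers["Expires"])
        return expires - parsedate_to_datetime(headers["Date"])
    return timedelta(0)


def needs_request(headers: dict, age: timedelta, manual_reload: bool) -> bool:
    """Would the browser contact the server for this cached resource?"""
    if manual_reload:
        # Reload ignores freshness information in this model.
        return True
    return age >= freshness_lifetime(headers)


stored = datetime(2019, 2, 26, 12, 0, tzinfo=timezone.utc)
headers = {
    "Date": format_datetime(stored, usegmt=True),
    "Expires": format_datetime(stored + timedelta(days=365), usegmt=True),
}
one_hour = timedelta(hours=1)

address_bar = needs_request(headers, one_hour, manual_reload=False)
reload_key = needs_request(headers, one_hour, manual_reload=True)
print(address_bar, reload_key)
```

With a copy that is one hour old and expires in a year, navigating via the address bar is served from cache, while the reload still produces a request.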

Moral of the story? Make sure you test the actual real-life scenarios before making any decisions.

Saturday, 23 February 2019

An argument for the existence of non-binary gender identities

So there’s a thought I’ve been having lately. The idea that some people identify as neither male nor female has become more mainstream over the past few years. And I assume like a lot of people, I’ve struggled with giving those people a place within my own concept and experience of gender. So I’d taken the accepting-but-condescending view of “yeah whatever, do what makes you happy, I just don’t understand it”. That stance is sufficient for making the lives of those people nicer, but what really makes the lives of those people nicer is if they feel understood and believed.

And I think I finally grok it within my own understanding of what gender is. I want to share this, but it requires already being on board with a few things:

Gender is a social construct, in so much as it can only be understood by humans within social contexts. It is different from biological sex, but possibly informed by it. Some people understand gender to be bad because it is a social construct, but I am not one of those people.

Transgender people exist. This mostly goes without saying, but it needs saying. Some people, somehow, are inclined towards a gender that does not match with their sex.

Trans women are women. Trans men are men. There are a lot of arguments for this—just pick your poison for which one you like best. I am personally convinced by performativism, or the Intrinsic Inclination model suggested by Julia Serano.

Intersex people exist. This also goes without saying. Some people are born neither male nor female, either because their genetics are different, or because their genitals are different.

The last point is especially important. As you do, I was thinking about intersex people a few weeks ago, and I wondered what gender they might identify as. What usually happens is that they gravitate towards either binary choice, and that is fine. But I wondered then, what if they do not? What if they identify along the same lines of their sex: somewhere in between?

I could not find a single good argument that might explain why they couldn’t. Even a gender essentialist might be convinced that an intersex person has every right to identify somewhere in between in line with their physical sex. So if there is room for an “intersex” gender identity, then that must necessarily mean that gender is a spectrum.

And if gender is a spectrum, and we agree that the gender identities of trans people are valid even though they do not match their sex, then that must necessarily mean that the gender identities of non-binary people are also valid. Otherwise you end up in a strange situation where only intersex people are allowed to identify as non-binary, which is equally as restricting as saying that only cis men are allowed to identify as men.

I am half-certain that I am making some incorrect leaps in logic here or there, and I am not academically well-read on the topic, but this reasoning helped me a lot with at least giving the existence of a non-binary gender identity a place within my own framework of how gender works. And I hope it helps others too.

Open Call: carpenter for children’s playground in refugee camp Greece

One of the members of the Designers With Refugees community, which is part of innovation lab Latra, shared the following open call:

Subject: My quest for a compassionate Carpenter

Dear reader,

I wanted to grab your attention for the following. Finally, after weeks of being in contact with different people and organisations, I settled with a new project on Lesvos.

Refugee 4 Refugees together with Infinitum Limits is setting up a new project, called Mandala, right next to the Olive Grove and camp Moria. A field of +/- 4000 m² will be transformed into a child-friendly space, offering education, sports and play activities, with the aim to create a safe space.

I will go there with a small team to help out in the design and build activities from mid-March to the end of May. At the moment I am with a civil engineer and another architecture student, but I’m still looking for a fairly skilled carpenter to strengthen the team for any amount of time in the above period, on site! I’m able to offer free accommodation.

Would you all like to consider whether this could apply to you or to someone you know well who would be open-minded for this? It would mean a lot to me to find someone!

Also if you’re not a carpenter but would like to learn more, contact me!

WhatsApp: 0613332421

Email: *protected email*

The information is also available for download as PDF.

Thursday, 21 February 2019

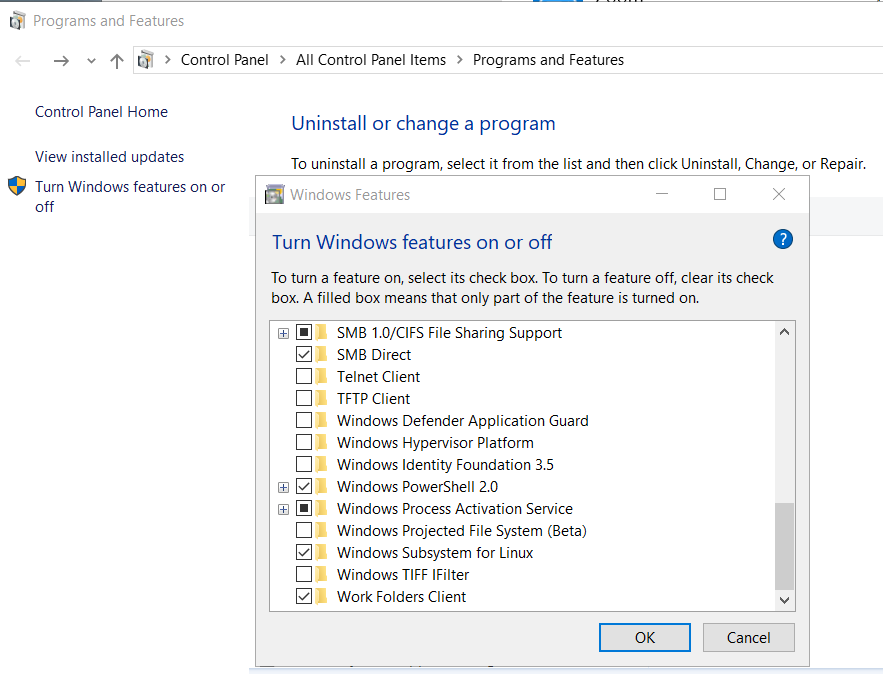

ArchLinux WSL

This article will show how to install Arch Linux in Windows 10 under Windows Subsystem for Linux.

WSL

The prerequisite is to have enabled WSL on your Windows 10 machine and to have already rebooted it.

You can enable WSL via:

- Windows Settings

- Apps

- Apps & features

- Related settings -> Programs and Features (bottom)

- Turn Windows features on or off (left)

Store

After rebooting your Win10, you can use the Microsoft Store to install a Linux distribution like Ubuntu. Arch Linux is not an officially supported Linux distribution, hence this guide!

Launcher

The easiest way to install Archlinux (or any Linux distro) is to download the wsldl from github. This project provides a generic Launcher.exe and any rootfs as source base. First thing is to rename Launcher.exe to Archlinux.exe.

ebal@myworklaptop:~$ mkdir -pv Archlinux

mkdir: created directory 'Archlinux'

ebal@myworklaptop:~$ cd Archlinux/

ebal@myworklaptop:~/Archlinux$ curl -sL -o Archlinux.exe https://github.com/yuk7/wsldl/releases/download/18122700/Launcher.exe

ebal@myworklaptop:~/Archlinux$ ls -l

total 320

-rw-rw-rw- 1 ebal ebal 143147 Feb 21 20:40 Archlinux.exe

RootFS

Next step is to download the latest archlinux root filesystem and create a new rootfs.tar.gz archive file, as wsldl uses this type.

ebal@myworklaptop:~/Archlinux$ curl -sLO http://ftp.otenet.gr/linux/archlinux/iso/latest/archlinux-bootstrap-2019.02.01-x86_64.tar.gz

ebal@myworklaptop:~/Archlinux$ ls -l

total 147392

-rw-rw-rw- 1 ebal ebal 143147 Feb 21 20:40 Archlinux.exe

-rw-rw-rw- 1 ebal ebal 149030552 Feb 21 20:42 archlinux-bootstrap-2019.02.01-x86_64.tar.gz

ebal@myworklaptop:~/Archlinux$ sudo tar xf archlinux-bootstrap-2019.02.01-x86_64.tar.gz

ebal@myworklaptop:~/Archlinux$ cd root.x86_64/

ebal@myworklaptop:~/Archlinux/root.x86_64$ ls

README bin boot dev etc home lib lib64 mnt opt proc root run sbin srv sys tmp usr var

ebal@myworklaptop:~/Archlinux/root.x86_64$ sudo tar czf rootfs.tar.gz .

tar: .: file changed as we read it

ebal@myworklaptop:~/Archlinux/root.x86_64$ ls

README bin boot dev etc home lib lib64 mnt opt proc root rootfs.tar.gz run sbin srv sys tmp usr var

ebal@myworklaptop:~/Archlinux/root.x86_64$ du -sh rootfs.tar.gz

144M rootfs.tar.gz

ebal@myworklaptop:~/Archlinux/root.x86_64$ sudo mv rootfs.tar.gz ../

ebal@myworklaptop:~/Archlinux/root.x86_64$ cd ..

ebal@myworklaptop:~/Archlinux$ ls

Archlinux.exe archlinux-bootstrap-2019.02.01-x86_64.tar.gz root.x86_64 rootfs.tar.gz

ebal@myworklaptop:~/Archlinux$

ebal@myworklaptop:~/Archlinux$ ls

Archlinux.exe rootfs.tar.gz

ebal@myworklaptop:~$ mv Archlinux/ /mnt/c/Users/EvaggelosBalaskas/Downloads/ArchlinuxWSL

ebal@myworklaptop:~$

As you can see, I do a little clean-up and move the directory onto the Windows filesystem.

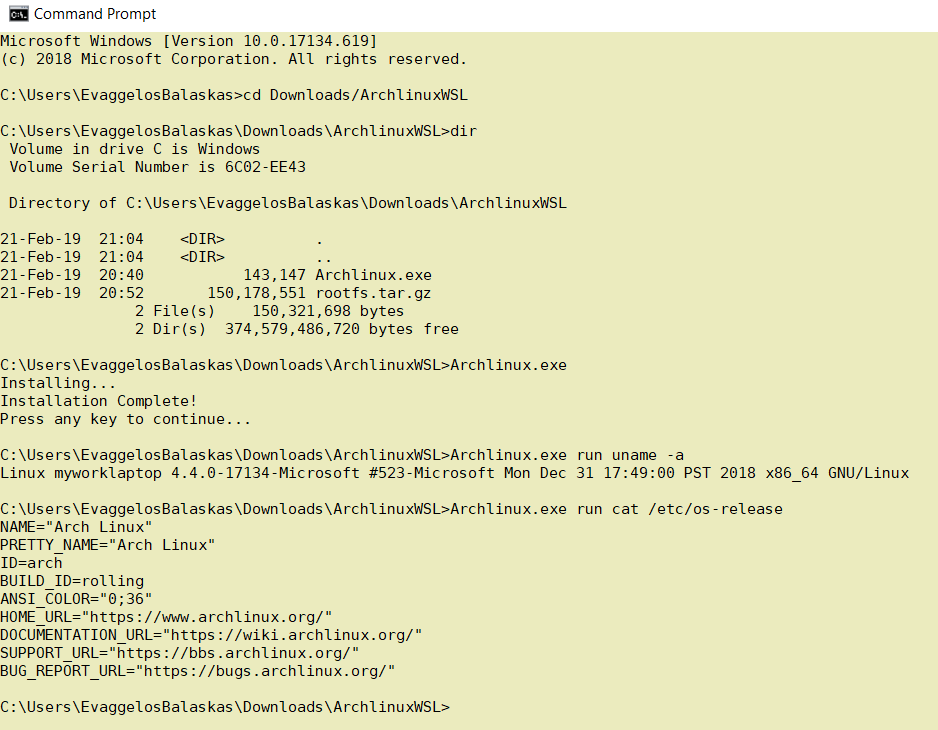

Install & Verify

Microsoft Windows [Version 10.0.17134.619]

(c) 2018 Microsoft Corporation. All rights reserved.

C:\Users\EvaggelosBalaskas>cd Downloads/ArchlinuxWSL

C:\Users\EvaggelosBalaskas\Downloads\ArchlinuxWSL>dir

Volume in drive C is Windows

Volume Serial Number is 6C02-EE43

Directory of C:\Users\EvaggelosBalaskas\Downloads\ArchlinuxWSL

21-Feb-19 21:04 <DIR> .

21-Feb-19 21:04 <DIR> ..

21-Feb-19 20:40 143,147 Archlinux.exe

21-Feb-19 20:52 150,178,551 rootfs.tar.gz

2 File(s) 150,321,698 bytes

2 Dir(s) 374,579,486,720 bytes free

C:\Users\EvaggelosBalaskas\Downloads\ArchlinuxWSL>Archlinux.exe

Installing...

Installation Complete!

Press any key to continue...

C:\Users\EvaggelosBalaskas\Downloads\ArchlinuxWSL>Archlinux.exe run uname -a

Linux myworklaptop 4.4.0-17134-Microsoft #523-Microsoft Mon Dec 31 17:49:00 PST 2018 x86_64 GNU/Linux

C:\Users\EvaggelosBalaskas\Downloads\ArchlinuxWSL>Archlinux.exe run cat /etc/os-release

NAME="Arch Linux"

PRETTY_NAME="Arch Linux"

ID=arch

BUILD_ID=rolling

ANSI_COLOR="0;36"

HOME_URL="https://www.archlinux.org/"

DOCUMENTATION_URL="https://wiki.archlinux.org/"

SUPPORT_URL="https://bbs.archlinux.org/"

BUG_REPORT_URL="https://bugs.archlinux.org/"

C:\Users\EvaggelosBalaskas\Downloads\ArchlinuxWSL>Archlinux.exe run bash

[root@myworklaptop ArchlinuxWSL]#

[root@myworklaptop ArchlinuxWSL]# exit

Archlinux

C:\Users\EvaggelosBalaskas\Downloads\ArchlinuxWSL>Archlinux.exe run bash

[root@myworklaptop ArchlinuxWSL]#

[root@myworklaptop ArchlinuxWSL]# date

Thu Feb 21 21:41:41 STD 2019

Remember, Arch Linux does not come with any configuration by default, so you need to configure this instance yourself!

Here is some basic configuration:

[root@myworklaptop ArchlinuxWSL]# echo nameserver 8.8.8.8 > /etc/resolv.conf

[root@myworklaptop ArchlinuxWSL]# cat > /etc/pacman.d/mirrorlist <<EOF

Server = http://ftp.otenet.gr/linux/archlinux/$repo/os/$arch

EOF

[root@myworklaptop ArchlinuxWSL]# pacman-key --init

[root@myworklaptop ArchlinuxWSL]# pacman-key --populate

[root@myworklaptop ArchlinuxWSL]# pacman -Syy

You are now pretty much ready to use Arch Linux inside Windows 10!

Remove

You can remove Archlinux simply by running:

Archlinux.exe clean

Default User

There is a simple way to use Archlinux within the Windows Subsystem for Linux: connecting as a default user.

But before configuring ArchWSL, we need to create this user inside the Archlinux instance:

[root@myworklaptop ArchWSL]# useradd -g 374 -u 374 ebal

[root@myworklaptop ArchWSL]# id ebal

uid=374(ebal) gid=374(ebal) groups=374(ebal)

[root@myworklaptop ArchWSL]# cp -rav /etc/skel/ /home/ebal

'/etc/skel/' -> '/home/ebal'

'/etc/skel/.bashrc' -> '/home/ebal/.bashrc'

'/etc/skel/.bash_profile' -> '/home/ebal/.bash_profile'

'/etc/skel/.bash_logout' -> '/home/ebal/.bash_logout'

chown -R ebal:ebal /home/ebal/

Then exit the Linux app and run:

> Archlinux.exe config --default-user ebal

and try to log in again:

> Archlinux.exe run bash

[ebal@myworklaptop ArchWSL]$

[ebal@myworklaptop ArchWSL]$ cd ~

ebal@myworklaptop ~$ pwd -P

/home/ebal

Wednesday, 20 February 2019

I am up to no good.

I am a user of “the darknet”. I use Tor to secure my communications from curious eyes. Since Edward Snowden’s leaks, at the latest, we know that this might be a good idea. There are many other valid, legal use cases for Tor. Circumventing censorship is one of them.

But German state secretary Günter Krings (49, CDU) believes otherwise. Certainly he “understand[s], that the darknet may have a use in autocratic systems, but in my opinion there is no legitimate use for it in a free, open democracy. Whoever uses the darknet is usually up to no good.”

Günter Krings should know that Tor can only reliably anonymize its users’ traffic if enough “noise” is being generated. That is the case if many people use Tor, so that those whose lives really depend on it can blend in with the masses. Shamed be he who thinks evil of it.

I’d like to know whether Krings ever thought about the fact that maybe, just maybe, an open, free democracy is the only political system that is even capable of tolerating “the darknet”. Forbidding its use would only bring us one step closer to becoming said autocratic system.

Instead of trying to ban our democratic people from using Tor, we should celebrate the fact that we are a democracy that can afford to have citizens who can avoid surveillance and who have access to uncensored information.

Integrating libext2fs with a Filesystem Framework

Given the content covered by my previous articles, there probably doesn’t seem to be too much that needs saying about the topic covered by this article. Previously, I described the work involved in building libext2fs for L4Re and testing the library, and I described a framework for separating filesystem providers from programs that want to use files. But, as always, there are plenty of little details, detours and learning experiences that help to make the tale longer than it otherwise might have been.

Although this file access framework sounds intimidating, it is always worth remembering that the only exotic thing about the software being written is that it needs to request system resources and to communicate with other programs. That can be tricky in itself in many programming environments, and I have certainly spent enough time trying to figure out how to use the types and functions provided by the many L4Re libraries so that these operations may actually work.

But in the end, these are programs that are run just like any other. We aren’t building things into the kernel and having to conform to a particularly restricted environment. And although it can still be tiresome to have to debug things, particularly interprocess communication (IPC) problems, many familiar techniques for debugging and inspecting program behaviour remain available to us.

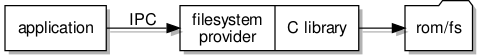

A Quick Translation

The test program I had written for libext2fs simply opened a file located in the “rom” filesystem, exposed it to libext2fs, and performed operations to extract content. In my framework, I had directed my attention towards opening and reading files, so it made sense to concentrate on providing this functionality in a filesystem server or “provider”.

The user of the framework (shielded from the details by a client library) would request the opening of a file (thus obtaining a file descriptor able to communicate with a dedicated resource object) and then read from the file (causing communication with the resource object and some transfers of data). These operations, previously done in a single program employing libext2fs directly, would now require collaboration by two separate programs.

So, I would need to insert the appropriate code in the right places in my filesystem server and its objects to open a filesystem, search for a file of the given name, and to provide the file data. For the first of these, the test program was doing something like this in the main function:

retval = ext2fs_open(devname, EXT2_FLAG_RW, 0, 0, unix_io_manager, &fs);

In the main function of the filesystem server program, something similar needs to be done. A reference to the filesystem (fs) is then passed to the server object for it to use:

Fs_server server_obj(fs, devname);

When a request is made to open a file, the filesystem server needs to locate the file just as the test program needed to. The code to achieve this is tedious, employing the ext2fs_lookup function and traversing the directory hierarchy. Ultimately, something like this needs to be done to obtain a structure for accessing the file contents:

retval = ext2fs_file_open(_fs, ino_file, ext2flags, &file);

Here, the _fs variable is our reference in the server object to the filesystem structure, the ino_file variable refers to the place in the filesystem where the file is found (the inode), some flags indicate things like whether we are reading and/or writing, and a supplied file variable is set upon the successful opening of the file. In the filesystem server, we want to create a specific object to conduct access to the file:

Fs_object *obj = new Fs_object(file, EXT2_I_SIZE(&inode_file), fsobj, irq);

Here, this resource object is initialised with the file access structure, an indication of the file size, something encapsulating the state of the communication between client and server, and the IRQ object needed for cleaning up (as described in the last article). Meanwhile, in the resource object, the read operation is supported by a pair of libext2fs functions:

ext2fs_file_lseek(_file, _obj.position, EXT2_SEEK_SET, 0);

ext2fs_file_read(_file, _obj.buffer, to_transfer, &read);

These don’t appear next to each other in the actual code, but the first call is used to seek to the indicated position in the file, this having been specified by the client. The second call appears in a loop to read into a buffer an indicated amount of data, returning the amount that was actually read.
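The seek-then-read-in-a-loop pattern described here can be sketched without libext2fs at all. In the following, toy_file_lseek and toy_file_read are hypothetical stand-ins that only imitate the general shape of the library calls (the read stand-in deliberately returns short reads), and fill_buffer is an invented name for the part of the resource object that fills its buffer. It is a sketch of the idea under those assumptions, not the actual server code.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Toy file standing in for an opened file: contents plus a current offset. */
struct toy_file {
    const char *data;
    size_t size;
    size_t offset;
};

/* Hypothetical stand-in for a seek call: move to an absolute position. */
int toy_file_lseek(struct toy_file *file, size_t position)
{
    if (position > file->size)
        return 1;
    file->offset = position;
    return 0;
}

/* Hypothetical stand-in for a read call. It hands back at most 4 bytes per
   call so that the caller's loop is actually exercised. */
int toy_file_read(struct toy_file *file, char *buf, size_t wanted, size_t *got)
{
    size_t remaining = file->size - file->offset;
    size_t n = wanted < remaining ? wanted : remaining;

    if (n > 4)
        n = 4;
    memcpy(buf, file->data + file->offset, n);
    file->offset += n;
    *got = n;
    return 0;
}

/* Seek to the position requested by the client, then read repeatedly until
   the buffer is full or the file ends. Returns the bytes actually read. */
size_t fill_buffer(struct toy_file *file, size_t position,
                   char *buffer, size_t buffer_size)
{
    size_t total = 0;

    assert(file != NULL && buffer != NULL);

    if (toy_file_lseek(file, position) != 0)
        return 0;

    while (total < buffer_size) {
        size_t got = 0;

        if (toy_file_read(file, buffer + total, buffer_size - total, &got) != 0
            || got == 0)
            break;
        total += got;
    }
    return total;
}
```

The value returned by fill_buffer corresponds to the "available" amount that is eventually reported back to the client.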

In summary, the work done by a collection of function calls appearing together in a single function is now spread out over three places in the filesystem server program:

- The initialisation is done in the main function as the server starts up

- The locating and opening of a file in the filesystem is done in the general filesystem server object

- Reading and writing is done in the file-specific resource object

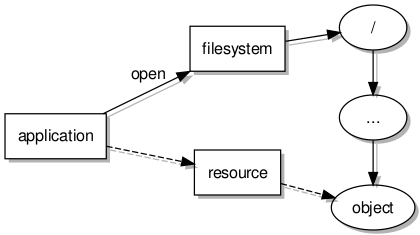

After initialisation, the performance of each part of the work only occurs upon receiving a distinct kind of message from a client program, of which more details are given below.
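To give an impression of the "tedious" file-locating step in the general filesystem server object, here is a rough sketch of resolving a path one component at a time. Everything in it is a stand-in: toy_lookup plays the role that ext2fs_lookup plays in the real server, the directory table is an invented in-memory toy, and the names ino_num_t, ROOT_INO and resolve_path are assumptions made for the example. Only the use of inode 2 for the root directory is an ext2 convention.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical stand-in for the library's inode number type. */
typedef unsigned int ino_num_t;

#define ROOT_INO 2u /* ext2 conventionally uses inode 2 for the root directory */

/* One directory entry in a toy, in-memory "filesystem". */
struct toy_entry {
    ino_num_t dir;    /* inode of the containing directory */
    const char *name; /* entry name within that directory */
    ino_num_t ino;    /* inode the entry refers to */
};

static const struct toy_entry toy_entries[] = {
    { ROOT_INO, "home", 11 },
    { 11, "user", 12 },
    { 12, "test.txt", 13 },
    { ROOT_INO, "test.txt", 14 },
};

/* Look up one name (given with an explicit length) in one directory.
   Returns 0 on success, non-zero if the name is absent. */
int toy_lookup(ino_num_t dir, const char *name, size_t namelen, ino_num_t *ino)
{
    size_t i;

    for (i = 0; i < sizeof(toy_entries) / sizeof(toy_entries[0]); i++) {
        const struct toy_entry *e = &toy_entries[i];

        if (e->dir == dir && strlen(e->name) == namelen &&
            memcmp(e->name, name, namelen) == 0) {
            *ino = e->ino;
            return 0;
        }
    }
    return 1;
}

/* Resolve a slash-separated path, starting at the root directory and
   performing one lookup per path component. */
int resolve_path(const char *path, ino_num_t *ino_file)
{
    ino_num_t current = ROOT_INO;
    const char *p = path;

    assert(path != NULL && ino_file != NULL);

    while (*p != '\0') {
        const char *end;

        /* Skip any run of separators. */
        while (*p == '/')
            p++;
        if (*p == '\0')
            break;

        /* Find the end of this component. */
        end = strchr(p, '/');
        if (end == NULL)
            end = p + strlen(p);

        if (toy_lookup(current, p, (size_t)(end - p), &current) != 0)
            return 1;
        p = end;
    }

    *ino_file = current;
    return 0;
}
```

The inode number obtained this way is what would then be handed on as ino_file when opening the file.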

The Client Library

Although we cannot yet use the familiar C library functions for accessing files (fopen, fread, fwrite, fclose, and so on), we can employ functions that try to be as friendly. Thus, the following form of program may be used:

char buffer[80];

file_descriptor_t *desc = client_open("test.txt", O_RDONLY);

available = client_read(desc, buffer, 80);

if (available)

    fwrite((void *) buffer, sizeof(char), available, stdout); /* using existing fwrite function */

client_close(desc);

As noted above, the existing fwrite function in L4Re may be used to write file data out to the console. Ultimately, we would want our modified version of the function to be doing this job.

These client library functions resemble lower-level C library functions such as open, read, write, close, and so on. By targeting this particular level of functionality, it is hoped that much of the logic in functions like fopen can be preserved, this logic having to deal with things like mode strings (“r”, “r+”, “w”, and so on) which have little to do with the actual job of transmitting file content around the system.

In order to do their work, the client library functions need to send and receive IPC messages, or at least need to get other functions to deal with this particular work. My approach has been to write a layer of functions that only deals with messaging and that hides the L4-specific details from the rest of the code.

This lower-level layer of functions allows us to treat interprocess interactions like normal function calls, and in this framework those calls would have the following signatures, with the inputs arriving at the server and the outputs arriving back at the client:

- fs_open: flags, buffer → file size, resource object

- fs_flush: (no parameters) → (no return values)

- fs_read: position → available

- fs_write: position, available → written, file size

The responsibilities of the client library functions can be summarised as follows:

- client_open: allocate memory for the buffer, obtain a server reference (“capability”) from the program’s environment

- client_close: deallocate the allocated resources

- client_flush: invoke fs_flush with any available data, resetting the buffer status

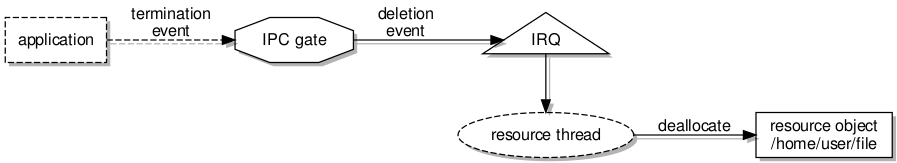

- client_read: provide data to the caller from its buffer, invoking fs_read whenever the buffer is empty

- client_write: commit data from the caller into the buffer, invoking fs_write whenever the buffer is full, also flushing the buffer when appropriate
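The division of labour between client_read and fs_read can be illustrated with a small buffering sketch. The descriptor layout, the tiny buffer size and the toy_ prefixed names below are all assumptions made for the example, and toy_fs_read merely copies from a string where the real function would send an IPC message to the resource object.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define BUFFER_SIZE 8 /* deliberately tiny so that refills are easy to observe */

/* Hypothetical descriptor: the real file_descriptor_t will differ. */
typedef struct {
    char buffer[BUFFER_SIZE]; /* data most recently fetched from the server */
    size_t available;         /* number of valid bytes in the buffer */
    size_t consumed;          /* bytes already handed to the caller */
    size_t position;          /* file position of the next fetch */
    unsigned fetches;         /* how many times toy_fs_read was invoked */
} toy_descriptor_t;

/* Stand-in for the file content held by the server. */
static const char server_file_contents[] = "Hello from the filesystem server!";

/* Stand-in for the fs_read message (position in, available out). */
size_t toy_fs_read(toy_descriptor_t *desc)
{
    size_t size = sizeof(server_file_contents) - 1;
    size_t remaining = desc->position < size ? size - desc->position : 0;
    size_t n = remaining < BUFFER_SIZE ? remaining : BUFFER_SIZE;

    memcpy(desc->buffer, server_file_contents + desc->position, n);
    desc->position += n;
    desc->fetches++;
    return n;
}

/* Provide data to the caller from the buffer, invoking toy_fs_read only
   when the buffer has been used up. Returns the number of bytes copied. */
size_t toy_client_read(toy_descriptor_t *desc, char *out, size_t count)
{
    size_t copied = 0;

    assert(desc != NULL && out != NULL);

    while (copied < count) {
        size_t chunk;

        if (desc->consumed == desc->available) {
            desc->available = toy_fs_read(desc);
            desc->consumed = 0;
            if (desc->available == 0)
                break; /* end of file */
        }

        chunk = desc->available - desc->consumed;
        if (chunk > count - copied)
            chunk = count - copied;

        memcpy(out + copied, desc->buffer + desc->consumed, chunk);
        desc->consumed += chunk;
        copied += chunk;
    }
    return copied;
}
```

Starting from a zero-initialised descriptor, a request for five bytes causes a single fetch of eight bytes, and the three left over are served from the buffer on the next call without any further messaging.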

The lack of a fs_close function might seem surprising, but as described in the previous article, the server process is designed to receive a notification when the client process discards a reference to the resource object dedicated to a particular file. So in client_close, we should be able to merely throw away the things acquired by client_open, and the system together with the server will hopefully handle the consequences.

Switching the Backend

Using a conventional file as the repository for file content is convenient, but since the aim is to replace the existing filesystem mechanisms, it would seem necessary to try and get libext2fs to use other ways of accessing the underlying storage. Previously, my considerations had led me to provide a “block” storage layer underneath the filesystem layer. So it made sense to investigate how libext2fs might communicate with a “block server” or “block device” in order to read and write raw filesystem data.

Changing the way libext2fs accesses its storage sounds like an ominous task, but fortunately some thought has evidently gone into accommodating different storage types and platforms. Indeed, the library code includes support for things like DOS and Windows, with this functionality evidently being used by various applications on those platforms (or, these days, the latter one, at least) to provide some kind of file browser support for ext2-family filesystems.

The kind of component involved in providing this variety of support is known as an “I/O manager”, and the one that we have been using is known as the “Unix” I/O manager, this employing POSIX or standard C library calls to access files and devices. Now, this may have been adequate until now, but with the requirement that we use the replacement IPC mechanisms to access a block server, we need to consider how a different kind of I/O manager might be implemented to use the client library functions instead of the C library functions.
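The general idea of an I/O manager, a table of operations that the library calls instead of touching storage directly, can be sketched as follows. This is a simplified illustration only: struct toy_io_manager is not the libext2fs definition, which has more operations and different signatures, and the in-memory device merely stands in for a block server reached through the client library.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define BLOCK_SIZE 16
#define BLOCK_COUNT 4

/* Simplified, hypothetical operations table; not the libext2fs structure. */
struct toy_io_manager {
    const char *name;
    int (*read_blk)(void *state, unsigned long block, void *buf);
    int (*write_blk)(void *state, unsigned long block, const void *buf);
};

/* A backend keeping its blocks in memory, standing in for a block server. */
struct memory_device {
    unsigned char blocks[BLOCK_COUNT][BLOCK_SIZE];
};

int memory_read_blk(void *state, unsigned long block, void *buf)
{
    struct memory_device *dev = state;

    if (block >= BLOCK_COUNT)
        return 1;
    memcpy(buf, dev->blocks[block], BLOCK_SIZE);
    return 0;
}

int memory_write_blk(void *state, unsigned long block, const void *buf)
{
    struct memory_device *dev = state;

    if (block >= BLOCK_COUNT)
        return 1;
    memcpy(dev->blocks[block], buf, BLOCK_SIZE);
    return 0;
}

/* The "manager" is just the table filled in with one backend's functions. */
const struct toy_io_manager memory_io_manager = {
    "memory", memory_read_blk, memory_write_blk
};

/* Code written against the table does not care which backend is in use. */
int copy_block(const struct toy_io_manager *io, void *state,
               unsigned long from, unsigned long to)
{
    unsigned char buf[BLOCK_SIZE];

    assert(io != NULL);

    if (io->read_blk(state, from, buf) != 0)
        return 1;
    return io->write_blk(state, to, buf);
}
```

Swapping the storage mechanism then amounts to supplying a different table whose functions send messages to the block server, leaving callers such as copy_block untouched.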