Sunday, 18 November 2018

Apple iOS Vs your Linux Mail, Contact and Calendar Server

Evaggelos Balaskas - System Engineer | 20:51, Sunday, 18 November 2018

The purpose of this blog post is to act as a visual guide/tutorial on how to set up an iOS device (iPad or iPhone) using the native apps against a custom Linux Mail, Calendar & Contact server.

Disclaimer: I wrote this blog post after 36 hours with an Apple device. I had never had any previous engagement with an Apple product. Huge culture change & learning curve. Be aware that the notes below may not apply to your setup.

Original creation date: Friday 12 Oct 2018

Last Update: Sunday 18 Nov 2018

Linux Mail Server

Notes are based on the below setup:

- CentOS 6.10

- Dovecot IMAP server with STARTTLS (TCP Port: 143) with Encrypted Password Authentication.

- Postfix SMTP with STARTTLS (TCP Port: 587) with Encrypted Password Authentication.

- Baïkal as Calendar & Contact server.

Thunderbird

Thunderbird settings for imap / smtp over STARTTLS and encrypted authentication

Baikal

Dashboard

CardDAV

contact URI for user Username

https://baikal.baikal.example.org/html/card.php/addressbooks/Username/default

CalDAV

calendar URI for user Username

https://baikal.example.org/html/cal.php/calendars/Username/default
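To check one of these URIs outside iOS, a WebDAV PROPFIND request is a quick test. The Python sketch below only builds the request with the standard library; it assumes Baïkal is configured for HTTP Basic authentication (Baïkal can also be set to Digest), and the user name and password are placeholders.

```python
import base64
import urllib.request

# Minimal PROPFIND body asking for the collection's display name and type.
PROPFIND_BODY = (
    b'<?xml version="1.0" encoding="utf-8"?>\n'
    b'<d:propfind xmlns:d="DAV:">'
    b"<d:prop><d:displayname/><d:resourcetype/></d:prop>"
    b"</d:propfind>"
)


def build_propfind(url: str, user: str, password: str) -> urllib.request.Request:
    """Build (but do not send) a Depth: 0 PROPFIND for a CardDAV/CalDAV collection.

    Assumes HTTP Basic authentication; a server set to Digest will answer 401.
    """
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url,
        data=PROPFIND_BODY,
        method="PROPFIND",
        headers={
            "Depth": "0",
            "Content-Type": "application/xml; charset=utf-8",
            "Authorization": f"Basic {token}",
        },
    )


# Usage (placeholder credentials); a 207 Multi-Status reply means the URI
# and the credentials were accepted:
# req = build_propfind(
#     "https://baikal.example.org/html/cal.php/calendars/Username/default",
#     "Username", "secret")
# with urllib.request.urlopen(req) as resp:
#     print(resp.status)
```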

iOS

There is a lot of online documentation, but none of it is in one place: random Stack Overflow articles and posts on the internet. It took me almost an entire day (and night) to figure things out. In the end, I enabled debug mode on my Dovecot/Postfix & Apache web servers. After that, through trial and error, I managed to set up both iPhone & iPad using only native apps.

Open Passwords & Accounts & tap Add Account

Choose Other

Now comes the tricky part: you have to click Next and fill in the IMAP & SMTP settings.

Now we have to go back and change the settings to enable STARTTLS and encrypted password authentication.

STARTTLS with Encrypted Passwords for Authentication
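Before fighting with the iOS dialogs, it can help to confirm from a desktop that both services really offer STARTTLS on the ports listed in the setup (143 for IMAP, 587 for SMTP). A minimal Python sketch using only the standard library; the host name is a placeholder and no login is attempted.

```python
import imaplib
import smtplib
import ssl


def imap_starttls_ok(host: str, port: int = 143, timeout: float = 10.0) -> bool:
    """Connect in plain text on port 143 and upgrade the session with STARTTLS."""
    imap = imaplib.IMAP4(host, port, timeout=timeout)  # timeout needs Python 3.9+
    try:
        if "STARTTLS" not in imap.capabilities:
            return False
        imap.starttls(ssl_context=ssl.create_default_context())
        return True
    finally:
        imap.logout()


def smtp_starttls_ok(host: str, port: int = 587, timeout: float = 10.0) -> bool:
    """Connect to the submission port and upgrade the session with STARTTLS."""
    with smtplib.SMTP(host, port, timeout=timeout) as smtp:
        smtp.ehlo()
        if not smtp.has_extn("starttls"):
            return False
        smtp.starttls(context=ssl.create_default_context())
        smtp.ehlo()  # capabilities (e.g. AUTH mechanisms) may change after TLS
        return True


# Usage (placeholder host):
# print(imap_starttls_ok("mail.example.org"), smtp_starttls_ok("mail.example.org"))
```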

On the home screen of the iPad/iPhone, we can see that the Mail notifications have already fetched some headers.

And finally, open the native Mail app:

Contact Server

Now we are ready to set up the contacts account:

https://baikal.baikal.example.org/html/card.php/addressbooks/Username/default

Opening the Contacts app:

Calendar Server

https://baikal.example.org/html/cal.php/calendars/Username/default

Cloud-init with CentOS 7

Evaggelos Balaskas - System Engineer | 14:04, Sunday, 18 November 2018

Cloud-init is the de facto multi-distribution package that handles early initialization of a cloud instance.

This article is a mini how-to on using cloud-init with CentOS 7 in your own libvirt qemu/kvm lab, instead of using a public cloud provider.

How Cloud-init works

Josh Powers @ DebConf17

How does it really work?

Cloud-init has Boot Stages

- Generator

- Local

- Network

- Config

- Final

and supports modules to extend configuration and support.

Here is a brief list of modules (sorted by name):

- bootcmd

- final-message

- growpart

- keys-to-console

- locale

- migrator

- mounts

- package-update-upgrade-install

- phone-home

- power-state-change

- puppet

- resizefs

- rsyslog

- runcmd

- scripts-per-boot

- scripts-per-instance

- scripts-per-once

- scripts-user

- set_hostname

- set-passwords

- ssh

- ssh-authkey-fingerprints

- timezone

- update_etc_hosts

- update_hostname

- users-groups

- write-files

- yum-add-repo

Gist

Cloud-init example using a Generic Cloud CentOS-7 on a libvirtd qemu/kvm lab (GitHub gist)

Generic Cloud CentOS 7

You can find a plethora of centos7 cloud images here:

Download the latest version

$ curl -LO http://cloud.centos.org/centos/7/images/CentOS-7-x86_64-GenericCloud.qcow2.xz

Uncompress file

$ xz -v --keep -d CentOS-7-x86_64-GenericCloud.qcow2.xz
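If xz is not installed, Python's standard lzma module can do the same job. A minimal sketch that, like xz -d --keep, writes the decompressed image next to the archive and leaves the .xz file in place; the function name is illustrative.

```python
import lzma
import shutil
from pathlib import Path


def xz_decompress_keep(src: str) -> Path:
    """Decompress foo.qcow2.xz to foo.qcow2 and keep the .xz archive."""
    src_path = Path(src)
    if src_path.suffix != ".xz":
        raise ValueError(f"expected a .xz file, got {src}")
    dst_path = src_path.with_suffix("")  # strip only the trailing .xz
    with lzma.open(src_path, "rb") as fin, open(dst_path, "wb") as fout:
        shutil.copyfileobj(fin, fout, 1024 * 1024)  # stream in 1 MiB chunks
    return dst_path


# Usage:
# xz_decompress_keep("CentOS-7-x86_64-GenericCloud.qcow2.xz")
```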

Check cloud image

$ qemu-img info CentOS-7-x86_64-GenericCloud.qcow2
image: CentOS-7-x86_64-GenericCloud.qcow2
file format: qcow2
virtual size: 8.0G (8589934592 bytes)
disk size: 863M
cluster_size: 65536
Format specific information:
    compat: 0.10
    refcount bits: 16

The default image is 8G.

If you need to resize it, check below in this article.
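qemu-img can also print the same information as JSON with --output=json, which is easier to consume from a script. A sketch in Python; it assumes qemu-img is on PATH and reads the virtual-size and actual-size fields, both reported in bytes.

```python
import json
import subprocess


def summarize_qemu_img_info(text: str) -> dict:
    """Turn `qemu-img info --output=json` output into human-friendly sizes."""
    info = json.loads(text)
    return {
        "format": info["format"],
        "virtual_gib": info["virtual-size"] / 2**30,  # qemu-img "G" means GiB
        "disk_mib": info.get("actual-size", 0) / 2**20,
    }


def qemu_img_info(path: str) -> dict:
    """Run qemu-img (must be installed) and summarize its JSON report."""
    result = subprocess.run(
        ["qemu-img", "info", "--output=json", path],
        check=True, capture_output=True, text=True,
    )
    return summarize_qemu_img_info(result.stdout)


# Usage:
# print(qemu_img_info("CentOS-7-x86_64-GenericCloud.qcow2"))
```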

Create metadata file

Meta-data is data that comes from the cloud provider itself. In this example, I will use a static network configuration.

cat > meta-data <<EOF
instance-id: testingcentos7
local-hostname: testingcentos7
network-interfaces: |
  iface eth0 inet static
  address 192.168.122.228
  network 192.168.122.0
  netmask 255.255.255.0
  broadcast 192.168.122.255
  gateway 192.168.122.1
# vim:syntax=yaml
EOF
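The static values in meta-data have to agree with each other. As a sketch, Python's ipaddress module can derive the network, netmask and broadcast lines from a single address/prefix, so they cannot drift apart. render_meta_data is a hypothetical helper; the eth0 name and the ifupdown-style stanza are taken from the example.

```python
import ipaddress


def render_meta_data(hostname: str, address_cidr: str, gateway: str) -> str:
    """Render a NoCloud meta-data file with a static eth0 configuration."""
    iface = ipaddress.IPv4Interface(address_cidr)
    net = iface.network
    if ipaddress.IPv4Address(gateway) not in net:
        raise ValueError(f"gateway {gateway} is outside {net}")
    return (
        f"instance-id: {hostname}\n"
        f"local-hostname: {hostname}\n"
        "network-interfaces: |\n"
        "  iface eth0 inet static\n"
        f"  address {iface.ip}\n"
        f"  network {net.network_address}\n"
        f"  netmask {net.netmask}\n"
        f"  broadcast {net.broadcast_address}\n"
        f"  gateway {gateway}\n"
    )


# Usage: writes the same content as the heredoc.
# with open("meta-data", "w") as fh:
#     fh.write(render_meta_data("testingcentos7", "192.168.122.228/24", "192.168.122.1"))
```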

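Create cloud-init (user-data) file

User-data is data that comes from you, the user. Because the heredoc delimiter EOF is unquoted, your shell expands the backticks in the ssh-authorized-keys entry when the file is created, so your public keys from GitHub are written into user-data.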

cat > user-data <<EOF
#cloud-config

# Set default user and their public ssh key
# eg. https://github.com/ebal.keys
users:
  - name: ebal
    ssh-authorized-keys:
      - `curl -s -L https://github.com/ebal.keys`
    sudo: ALL=(ALL) NOPASSWD:ALL

# Enable cloud-init modules
cloud_config_modules:
  - resolv_conf
  - runcmd
  - timezone
  - package-update-upgrade-install

# Set TimeZone
timezone: Europe/Athens

# Set DNS
manage_resolv_conf: true
resolv_conf:
  nameservers: ['9.9.9.9']

# Install packages
packages:
  - mlocate
  - vim
  - epel-release

# Update/Upgrade & Reboot if necessary
package_update: true
package_upgrade: true
package_reboot_if_required: true

# Remove cloud-init
runcmd:
  - yum -y remove cloud-init
  - updatedb

# Configure where output will go
output:
  all: ">> /var/log/cloud-init.log"

# vim:syntax=yaml
EOF

Create the cloud-init ISO

When using libvirt with qemu/kvm, the most common way to pass the meta-data/user-data to cloud-init is through an ISO image attached as a CD-ROM.

$ genisoimage -output cloud-init.iso -volid cidata -joliet -rock user-data meta-data

or

$ mkisofs -o cloud-init.iso -V cidata -J -r user-data meta-data
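cloud-init's NoCloud datasource finds the seed by its volume label, so -volid cidata matters, and user-data is only treated as cloud-config when its first line is #cloud-config. A small Python sketch that checks both files before building the genisoimage command; cidata_iso_command is a hypothetical helper, and genisoimage must be installed to run the result.

```python
from pathlib import Path


def cidata_iso_command(seed_dir: str, iso: str = "cloud-init.iso") -> list:
    """Validate a NoCloud seed directory and return the genisoimage argv.

    The argv uses bare file names, so run it with cwd=seed_dir to place
    user-data and meta-data at the root of the ISO.
    """
    seed = Path(seed_dir)
    for name in ("user-data", "meta-data"):
        if not (seed / name).is_file():
            raise FileNotFoundError(seed / name)
    if not (seed / "user-data").read_text().startswith("#cloud-config"):
        raise ValueError("user-data must start with '#cloud-config'")
    return ["genisoimage", "-output", iso, "-volid", "cidata",
            "-joliet", "-rock", "user-data", "meta-data"]


# Usage:
# import shlex, subprocess
# cmd = cidata_iso_command(".")
# print(shlex.join(cmd))
# subprocess.run(cmd, cwd=".", check=True)
```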

Provision new virtual machine

Finally run this as root:

# virt-install \
    --name centos7_test \
    --memory 2048 \
    --vcpus 1 \
    --metadata description="My centos7 cloud-init test" \
    --import \
    --disk CentOS-7-x86_64-GenericCloud.qcow2,format=qcow2,bus=virtio \
    --disk cloud-init.iso,device=cdrom \
    --network bridge=virbr0,model=virtio \
    --os-type=linux \
    --os-variant=centos7.0 \
    --noautoconsole

The List of Os Variants

There is a useful command to list all the OS variants that libvirt supports in your lab, e.g. for CentOS:

$ osinfo-query os | grep CentOS
centos6.0 | CentOS 6.0 | 6.0 | http://centos.org/centos/6.0
centos6.1 | CentOS 6.1 | 6.1 | http://centos.org/centos/6.1
centos6.2 | CentOS 6.2 | 6.2 | http://centos.org/centos/6.2
centos6.3 | CentOS 6.3 | 6.3 | http://centos.org/centos/6.3
centos6.4 | CentOS 6.4 | 6.4 | http://centos.org/centos/6.4
centos6.5 | CentOS 6.5 | 6.5 | http://centos.org/centos/6.5
centos6.6 | CentOS 6.6 | 6.6 | http://centos.org/centos/6.6
centos6.7 | CentOS 6.7 | 6.7 | http://centos.org/centos/6.7
centos6.8 | CentOS 6.8 | 6.8 | http://centos.org/centos/6.8
centos6.9 | CentOS 6.9 | 6.9 | http://centos.org/centos/6.9
centos7.0 | CentOS 7.0 | 7.0 | http://centos.org/centos/7.0

DHCP

If you are not using a static network configuration scheme, then to identify the IP of your cloud instance, type:

$ virsh net-dhcp-leases default
 Expiry Time          MAC address        Protocol  IP address           Hostname  Client ID or DUID
-----------------------------------------------------------------------------------------------------
 2018-11-17 15:40:31  52:54:00:57:79:3e  ipv4      192.168.122.144/24   -         -
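To pick the right lease when several guests share the default network, you can match on the guest's MAC address (virsh domiflist centos7_test shows it). A Python sketch that parses the lease table; the column positions are assumed from this output and may differ between libvirt versions.

```python
def parse_dhcp_leases(text: str) -> dict:
    """Map MAC address -> IPv4 address from `virsh net-dhcp-leases` output."""
    leases = {}
    for line in text.splitlines():
        fields = line.split()
        # Data rows look like: date, time, MAC, protocol, IP/prefix, hostname, client id.
        # The header and the dashed separator never have "ipv4" in the 4th field.
        if len(fields) >= 5 and fields[3] == "ipv4":
            mac, ip_with_prefix = fields[2], fields[4]
            leases[mac.lower()] = ip_with_prefix.split("/")[0]
    return leases


# Usage:
# import subprocess
# out = subprocess.run(["virsh", "net-dhcp-leases", "default"],
#                      check=True, capture_output=True, text=True).stdout
# print(parse_dhcp_leases(out).get("52:54:00:57:79:3e"))
```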

Resize

The easiest way to grow/resize your virtual machine's disk is with the qemu-img command, while the virtual machine is not running:

$ qemu-img resize CentOS-7-x86_64-GenericCloud.qcow2 20G
Image resized.

$ qemu-img info CentOS-7-x86_64-GenericCloud.qcow2
image: CentOS-7-x86_64-GenericCloud.qcow2
file format: qcow2
virtual size: 20G (21474836480 bytes)
disk size: 870M
cluster_size: 65536
Format specific information:
    compat: 0.10
    refcount bits: 16

You can also add the lines below to your user-data file, so that cloud-init grows the root partition to use the enlarged disk on boot:

growpart:
  mode: auto
  devices: ['/']
  ignore_growroot_disabled: false

The result:

[root@testingcentos7 ebal]# df -h /
Filesystem      Size  Used Avail Use% Mounted on
/dev/vda1        20G  870M   20G   5% /
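The qemu-img info output shows that size suffixes are binary units: 8G is 8589934592 bytes and 20G is 21474836480 bytes, i.e. multiples of 1024. A tiny Python helper (hypothetical, for planning resizes; whole numbers only) that does the same conversion:

```python
# Binary multipliers, matching the byte counts qemu-img info prints.
UNITS = {"K": 2**10, "M": 2**20, "G": 2**30, "T": 2**40}


def size_to_bytes(size: str) -> int:
    """Convert sizes such as '8G' or '512M' to bytes using powers of 1024."""
    size = size.strip().upper()
    if size and size[-1] in UNITS:
        return int(size[:-1]) * UNITS[size[-1]]
    return int(size)  # plain byte count


# Usage: how much the resize added to the virtual disk (12 GiB).
# grown = size_to_bytes("20G") - size_to_bytes("8G")
```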

Default cloud.cfg

For reference, this is the default centos7 cloud-init configuration file.

# /etc/cloud/cloud.cfg
users:
 - default

disable_root: 1
ssh_pwauth: 0

mount_default_fields: [~, ~, 'auto', 'defaults,nofail', '0', '2']
resize_rootfs_tmp: /dev
ssh_deletekeys: 0
ssh_genkeytypes: ~
syslog_fix_perms: ~

cloud_init_modules:
 - migrator
 - bootcmd
 - write-files
 - growpart
 - resizefs
 - set_hostname
 - update_hostname
 - update_etc_hosts
 - rsyslog
 - users-groups
 - ssh

cloud_config_modules:
 - mounts
 - locale
 - set-passwords
 - rh_subscription
 - yum-add-repo
 - package-update-upgrade-install
 - timezone
 - puppet
 - chef
 - salt-minion
 - mcollective
 - disable-ec2-metadata
 - runcmd

cloud_final_modules:
 - rightscale_userdata
 - scripts-per-once
 - scripts-per-boot
 - scripts-per-instance
 - scripts-user
 - ssh-authkey-fingerprints
 - keys-to-console
 - phone-home
 - final-message
 - power-state-change

system_info:
  default_user:
    name: centos
    lock_passwd: true
    gecos: Cloud User
    groups: [wheel, adm, systemd-journal]
    sudo: ["ALL=(ALL) NOPASSWD:ALL"]
    shell: /bin/bash
  distro: rhel
  paths:
    cloud_dir: /var/lib/cloud
    templates_dir: /etc/cloud/templates
  ssh_svcname: sshd

# vim:syntax=yaml

Sunday, 11 November 2018

Publishing Applications through F-Droid

David Boddie - Updates (Full Articles) | 17:16, Sunday, 11 November 2018

In 2016 I started working on a set of Python modules for reading and writing bytecode for the Dalvik virtual machine so that I could experiment with creating applications on Android without having to write them in Java. In the time since then, on and off, I have written a few small applications to learn about Android and explore the capabilities of the devices I own. Some of these were examples, demos and tests for the compiler framework, but others were intended to be useful to me. I could have just downloaded applications to perform the tasks I wanted to do, but I wanted minimal applications that I could understand and trust, so I wrote my own simple applications and was happy to install them locally on my phone.

In September I had the need to back up some data from a phone I no longer use, so I wrote a few small applications to dump data to the phone's storage area, allowing me to retrieve it using the adb tool on my desktop computer. I wondered if other people might find applications like these useful and asked on the FSFE's Android mailing list. In the discussion that followed it was suggested that I try to publish my applications via F-Droid.

Preparations

The starting point for someone who wants to publish applications via F-Droid is F-Droid's Contribute page. The documentation contains a set of links to relevant guides for various kinds of developers, including those making applications and those deploying the F-Droid server software on their own servers.

I found the Contributing to F-Droid document to be a useful shortcut for finding out the fundamental steps needed to submit an application for publication. This document is part of the F-Droid Data repository which holds information about all the applications provided in the catalogue. However, I was a bit uncertain about how to write the metadata for my applications. The problem was that the F-Droid infrastructure builds all the applications from source code and most of these are built using the standard Android SDK, but my applications are not.

Getting Help

I joined the F-Droid forum to ask for assistance with this problem under the topic of Non-standard development tools and was quickly offered help in getting my toolchain integrated into the F-Droid build process. I had expected it was going to take a lot of effort on my part but F-Droid contributor Pierre Rudloff came up with a sample build recipe for my application, showing how simple it could be. To include my application in the F-Droid catalogue, I just needed to know how to describe it properly.

The way to describe an application, its build process and available versions is contained in the Build Metadata Reference document. It can be a bit intimidating to read, which is why it was so useful to be given an example to start with. Having an example meant that I didn't really have to look too hard at this document, but I would be returning to it before too long.

To get the metadata into the build process for the applications hosted by F-Droid, you need to add it to the F-Droid Data repository. This is done using the cycle of cloning, committing, testing, pushing, and making merge requests that many of us are familiar with. The Data repository provides a useful Quickstart guide that tells you how to set up a local repository for testing. One thing I did before cloning any repositories was to fork the Data repository so that I could add my metadata to that. So, what I did was this:

git clone https://gitlab.com/fdroid/fdroidserver.git
export PATH="$PATH:$PWD/fdroidserver"

# Clone my fork of the Data repository:
git clone https://gitlab.com/dboddie/fdroiddata.git

I added a file in the metadata directory of the repository called uk.org.boddie.android.weatherforecast.txt containing a description of my application and information about its version, dependencies, build process, and so on. Then I ran some checks using the fdroid tool from the Server repository, checking that my metadata was valid and in a suitable format for the Data repository, before verifying that a package could be built:

fdroid lint uk.org.boddie.android.weatherforecast
fdroid rewritemeta uk.org.boddie.android.weatherforecast
fdroid build -v -l uk.org.boddie.android.weatherforecast

The third of these commands requires the ANDROID_HOME environment variable to be set. I have a very old Android SDK installation that I had to refer to in order for this to work, even though my application doesn't use it. For reference, the ANDROID_HOME variable refers to the directory in the SDK or equivalent that contains the build-tools directory.
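Since fdroid build depends on ANDROID_HOME pointing at the right place, a quick check can save a failed run. A Python sketch based on the description in the paragraph (the directory must contain build-tools); android_home_ok is a hypothetical helper, not part of fdroidserver.

```python
import os
from pathlib import Path
from typing import Mapping, Optional


def android_home_ok(env: Optional[Mapping[str, str]] = None) -> bool:
    """Return True if ANDROID_HOME is set and contains a build-tools directory."""
    env = os.environ if env is None else env
    home = env.get("ANDROID_HOME")
    return bool(home) and (Path(home) / "build-tools").is_dir()


# Usage, before running `fdroid build`:
# if not android_home_ok():
#     raise SystemExit("set ANDROID_HOME to the SDK directory containing build-tools")
```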

Build, Test, Repeat

At this point it was time to commit my new file to my fork of the Data repository and push it back up to GitLab. While logged into the site, verifying that my commit was there, I was offered the option to create a merge request for the official F-Droid Data repository, and this led to my first merge request for F-Droid. Things were moving forward.

Unfortunately, I had not managed to specify all the dependencies I needed to build my application using my own tools. In particular, I rely on PyCrypto to create digests of files, and this was not available by default in the build environment. This led to another change to the metadata and another merge request. Still, it was not plain sailing at this point because, despite producing an application package, the launcher icon was generated incorrectly. This required a change to the application itself to ensure that the SVG used for the icon was in a form that could be converted to PNG files by the tools available in the build environment. Yet another merge request updated the current version number so that the fixed application could be built and distributed. My application was finally available in the form I originally intended!

Still, not everything was perfect. A couple of users quickly came forward with suggestions for improvements and a bug report concerning the way I handled place names – or rather failed to handle them. The application was fixed and yet another merge request was created and handled smoothly by the F-Droid maintainers.

Finishing Touches

There are a few things I've not done when developing and releasing this application; there's at least one fundamental thing and at least one cosmetic enhancement that could be done to improve the experience around the installation process.

The basic thing is to write a proper changelog. Since it was really just going to be the proof of concept that showed applications written with DUCK could be published via F-Droid, the application evolved from its original form very informally. I should go back and at least document the bug fixes I made after its release.

The cosmetic improvement would be to enhance its F-Droid catalogue entry with a screenshot or two, so that prospective users could at least see what they were getting.

Another meta-feature that would be useful for me to use is F-Droid's auto-update facility. I'm sure I'll need to update the application again in the future so, while this document will remind me how to do that, it would be easier for everyone if F-Droid could just pick up new versions as they appear. There seem to be quite a few ways to do that, so I think that I'll have something to work with.

In summary, it was easier than I had imagined to publish an application in the F-Droid catalogue. The process was smooth and people were friendly and happy to help. If you write your own Free Software applications for Android, I encourage you to publish them via F-Droid and to submit your own metadata for them to make publication as quick and easy as possible.

Categories: Serpentine, F-Droid, Android, Python, Free Software

Friday, 09 November 2018

KDE Applications 18.12 branches created

TSDgeos' blog | 22:31, Friday, 09 November 2018

Make sure you commit anything you want to end up in the 18.12 release to them. We're already past the dependency freeze.

The Freeze and Beta are this Thursday, 15 November.

More interesting dates

November 29: KDE Applications 18.12 RC (18.11.90) Tagging and Release

December 6: KDE Applications 18.12 Tagging

December 13: KDE Applications 18.12 Release

https://community.kde.org/Schedules/Applications/18.12_Release_Schedule

Thursday, 08 November 2018

Another Look at VGA Signal Generation with a PIC32 Microcontroller

Paul Boddie's Free Software-related blog » English | 19:15, Thursday, 08 November 2018

Maybe some people like to see others attempting unusual challenges, things that wouldn’t normally be seen as productive, sensible or a good way to spend time and energy, things that shouldn’t be possible or viable but were nevertheless made to work somehow. And maybe they like the idea of indulging their own curiosity in such things, knowing that for potential future experiments of their own there is a route already mapped out to some kind of success. That might explain why my project to produce a VGA-compatible analogue video signal from a PIC32 microcontroller seems to attract more feedback than some of my other, arguably more useful or deserving, projects.

Nevertheless, I was recently contacted by different people inquiring about my earlier experiments. One was admittedly only interested in using Free Software tools to port his own software to the MIPS-based PIC32 products, and I tried to give some advice about navigating the documentation and to describe some of the issues I had encountered. Another was more concerned with the actual signal generation aspect of the earlier project and the usability of the end result. Previously, I had also had a conversation with someone looking to use such techniques for his project, and although he ended up choosing a different approach, the cumulative effect of my discussions with him and these more recent correspondents persuaded me to take another look at my earlier work and to see if I couldn’t answer some of the questions I had received more definitively.

Picking Over the Pieces

I was already rather aware of some of the demonstrated and potential limitations of my earlier work, these being concerned with generating a decent picture, and although I had attempted to improve the work on previous occasions, I think I just ran out of energy and patience to properly investigate other techniques. The following things were bothersome or a source of concern:

- The unevenly sized pixels

- The process of experimentation with the existing code

- Whether the microcontroller could really do other things while displaying the picture

Although one of my correspondents was very complimentary about the form of my assembly language code, I rather felt that it was holding me back, making me focus on details that should be abstracted away. It should be said that MIPS assembly language is fairly pleasant to write, at least in comparison to certain other architectures.

(I was brought up on 6502 assembly language, where there is an “accumulator” that is the only thing even approaching a general-purpose register in function, and where instructions need to combine this accumulator with other, more limited, registers to do things like accessing “zero page”: an area of memory that supports certain kinds of operations by providing the contents of locations as inputs. Everything needs to be meticulously planned, and despite odd claims that “zero page” is really one big register file and that 6502 is therefore “RISC-like”, the existence of virtual machines such as SWEET16 says rather a lot about how RISC-like the 6502 actually is. Later, I learned ARM assembly language and found it rather liberating with its general-purpose registers and uncomplicated, rather easier to use, memory access instructions. Certain things are even simpler in MIPS assembly language, whereas other conveniences from ARM are absent and demand a bit more effort from the programmer.)

Anyway, I had previously introduced functionality in C in my earlier work, mostly because I didn’t want the challenge of writing graphics routines in assembly language. So with the need to more easily experiment with different peripheral configurations, I decided to migrate the remaining functionality to C, leaving only the lowest-level routines concerned with booting and exception/interrupt handling in assembly language. This effort took me to some frustrating places, making me deal with things like linker scripts and the kind of memory initialisation that one’s compiler usually does for you but which is absent when targeting a “bare metal” environment. I shall spare you those details in this article.

I therefore spent a certain amount of effort in developing some C library functionality for dealing with the hardware. It could be said that I might have used existing libraries instead, but ignoring Microchip’s libraries that will either be proprietary or the subject of numerous complaints by those unwilling to leave that company’s “ecosystem”, I rather felt that the exercise in library design would be useful in getting reacquainted and providing me with something I would actually want to use. An important goal was minimalism, whereas my impression of libraries such as those provided by the Pinguino effort is that they try to bridge the different PIC hardware platforms and consequently accumulate features and details that do not really interest me.

The Wide Pixel Problem

One thing that had bothered me when demonstrating a VGA signal was that the displayed images featured “wide” pixels. These are not always noticeable: one of my correspondents told me that he couldn’t see them in one of my example pictures, but they are almost certainly present because they are a feature of the mechanism used to generate the signal. Here is a crop from the example in question:

And here is the same crop with the wide pixels highlighted:

I have left the identification of all wide pixel columns to the reader! Nevertheless, it can be stated that these pixels occur in every fourth column and are especially noticeable with things like text, where at such low resolutions, the doubling of pixel widths becomes rather obvious and annoying.

Quite why this increase in pixel width was occurring became a matter I wanted to investigate. As you may recall, the technique I used to output pixels involved getting the direct memory access (DMA) controller in the PIC32 chip to “copy” the contents of memory to a hardware register corresponding to output pins. The signals from these pins were sent along the cable to the monitor. And the DMA controller was transferring data as fast as it could and thus producing pixel colours as fast as it could.

One of my correspondents looked into the matter and confirmed that we were not imagining this problem, even employing an oscilloscope to check what was happening with the signals from the output pins. The DMA controller would, after starting each fourth pixel, somehow not be able to produce the next pixel in a timely fashion, leaving the current pixel colour unchanged as the monitor traced the picture across the screen. This would cause these pixels to “stretch” until the first pixel from the next group could be emitted.

Initially, I had thought that interrupts were occurring and the CPU, in responding to interrupt conditions and needing to read instructions, was gaining priority over the DMA controller and forcing pixel transfers to wait. Although I had specified a “cell size” of 160 bytes, corresponding to 160 pixels, I was aware that the architecture of the system would be dividing data up into four-byte “words”, and it would be natural at certain points for accesses to memory to be broken up and scheduled in terms of such units. I had therefore wanted to accommodate both the CPU and DMA using an approach where the DMA would not try and transfer everything at once, but without the energy to get this to work, I had left the matter to rest.

A Steady Rhythm

The documentation for these microcontrollers distinguishes between block and cell transfers when describing DMA. Previously, I had noted that these terms could be better described, and I think there are people who are under the impression that cells must be very limited in size and that you need to drive repeated cell transfers using various interrupt conditions to transfer larger amounts. We have seen that this is not the case: a single, large cell transfer is entirely possible, even though the characteristics of the transfer have been less than desirable. (Nevertheless, the documentation focuses on things like copying from one UART peripheral to another, arguably failing to explore the range of possible applications for DMA and to thereby elucidate the mechanisms involved.)

However, given the wide pixel effect, it becomes more interesting to introduce a steady rhythm by using smaller cell sizes and having an external event coordinate each cell’s transfer. With a single, large transfer, only one initiation event needs to occur: that produced by the timer whose period corresponds to that of a single horizontal “scanline”. The DMA channel producing pixels then runs to completion and triggers another channel to turn off the pixel output. In this scheme, the initiating condition for the cell transfer is the timer.

When using multiple cells to transfer the pixel data, however, it is no longer possible to use the timer in this way. Doing so would cause the initiation of the first cell, but then subsequent cells would only be transferred on subsequent timer events. And since these events only occur once per scanline, this would see a single line’s pixel data being transferred over many scanlines instead (or, since the DMA channel would be updated regularly, we would see only the first pixel on each line being emitted, stretched across the entire line). Since the DMA mechanism apparently does not permit one kind of interrupt condition to enable a channel and another to initiate each cell transfer, we must be slightly more creative.

Fortunately, the solution is to chain two channels, just as we already do with the pixel-producing channel and the one that resets the output. A channel is dedicated to waiting for the line timer event, and it transfers a single black pixel to the screen before handing over to the pixel-producing channel. This channel, now enabled, has its cell transfers regulated by another interrupt condition and proceeds as fast as such a condition may occur. Finally, the reset channel takes over and turns off the output as before.

The nature of the cell transfer interrupt can take various forms, but it is arguably most intuitive to use another timer for this purpose. We may set the limit of such a timer to 1, indicating that it may “wrap around” and thus produce an event almost continuously. And by configuring it to run as quickly as possible, at the frequency of the peripheral clock, it may drive cell transfers at a rate that is quick enough to hopefully produce enough pixels whilst also allowing other activities to occur between each transfer.

One thing is worth mentioning here just to be explicit about the mechanisms involved. When configuring interrupts that are used for DMA transfers, it is the actual condition that matters, not the interrupt nor the delivery of the interrupt request to the CPU. So, when using timer events for transfers, it appears to be sufficient to merely configure the timer; it will produce the interrupt condition upon its counter “wrapping around” regardless of whether the interrupt itself is enabled.

With a cell size of a single byte, and with a peripheral clock running at half the speed of the system clock, this approach is sufficient all by itself to yield pixels with consistent widths, with the only significant disadvantage being how few of them can be produced per line: I could only manage in the neighbourhood of 80 pixels! Making the peripheral clock run as fast as the system clock doesn’t help in theory: we actually want the CPU running faster than the transfer rate just to have a chance of doing other things. Nor does it help in practice: picture stability rather suffers.

Using larger cell sizes, we encounter the wide pixel problem, meaning that the end of a four-byte group is encountered and the transfer hangs on for longer than it should. However, larger cell sizes also introduce byte transfers at a different rate from cell transfers (at the system clock rate) and therefore risk making the last pixel produced by a cell longer than the others, anyway.

Uncovering DMA Transfers

I rather suspect that interruptions are not really responsible for the wide pixels at all, and that it is the DMA controller that causes them. Some discussion with another correspondent explored how the controller might be operating, with transfers perhaps looking something like this:

DMA read from memory
DMA write to display (byte #1)
DMA write to display (byte #2)
DMA write to display (byte #3)
DMA write to display (byte #4)
DMA read from memory
...

This would, by itself, cause a transfer pattern like this:

R____R____R____R____R____R ...
_WWWW_WWWW_WWWW_WWWW_WWWW_ ...

And thus pixel output as follows:

41234412344123441234412344 ...
=***==***==***==***==***== ...
(narrow pixels as * and wide pixel components as =)
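The hypothesised pattern can be reproduced with a toy model: one time slot per four-byte DMA read and one per byte written, with the output pins holding their last value during a read. A Python sketch of that model only; it does not describe what the PIC32's DMA controller actually does internally, and the function names are illustrative.

```python
def simulate_single_channel(groups: int, bytes_per_read: int = 4, initial: str = "4") -> str:
    """Return one character per time slot: the pixel visible on the output pins.

    Toy model: each group costs one read slot, during which the pins keep
    the previous pixel, followed by one write slot per byte.
    """
    slots = []
    current = initial  # last pixel of the previous group is still showing
    for _ in range(groups):
        slots.append(current)  # read slot: nothing new reaches the display
        for n in range(1, bytes_per_read + 1):
            current = str(n)
            slots.append(current)  # write slot: byte #n becomes visible
    return "".join(slots)


def pixel_widths(trace: str) -> list:
    """Collapse a slot trace into (pixel, width in slots) runs."""
    runs = []
    for pixel in trace:
        if runs and runs[-1][0] == pixel:
            runs[-1][1] += 1
        else:
            runs.append([pixel, 1])
    return [(pixel, width) for pixel, width in runs]


# Usage: every byte #4 that is followed by a read slot spans two slots.
# print(pixel_widths(simulate_single_channel(3)))
```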

Even without any extra operations or interruptions, we would get a gap between the write operations that would cause a wider pixel. This would only get worse if the DMA controller had to update the address of the pixel data after every four-byte read and write, not being able to do so concurrently with those operations. And if the CPU were really able to interrupt longer transfers, even to obtain a single instruction to execute, it might then compete with the DMA controller in accessing memory, making the read operations even later every time.

Assuming, then, that wide pixels are the fault of the way the DMA controller works, we might consider how we might want it to work instead:

                        | ...
DMA read from memory    | DMA write to display (byte #4)
                    \-> | DMA write to display (byte #1)
                        | DMA write to display (byte #2)
                        | DMA write to display (byte #3)
DMA read from memory    | DMA write to display (byte #4)
                    \-> | ...

If only pixel data could be read from memory and written to the output register (and thus the display) concurrently, we might have a continuous stream of evenly-sized pixels. Such things do not seem possible with the microcontroller I happen to be using. Either concurrent reading from memory and writing to a peripheral is not possible or the DMA controller is not able to take advantage of this concurrency. But these observations did give me another idea.

Dual Channel Transfers

If the DMA controller cannot get a single channel to read ahead and get the next four bytes, could it be persuaded to do so using two channels? The intention would be something like the following:

Channel #1:                       Channel #2:
                                  ...
DMA read from memory              DMA write to display (byte #4)
DMA write to display (byte #1)
DMA write to display (byte #2)
DMA write to display (byte #3)
DMA write to display (byte #4)    DMA read from memory
                                  DMA write to display (byte #1)
...                               ...

This is really nothing different from the above, functionally, but the required operations end up being assigned to different channels explicitly. We would then want these channels to cooperate, interleaving their data so that the result is the combined sequence of pixels for a line:

Channel #1: 1234 1234 ...
Channel #2: 5678 5678 ...
Combined: 1234567812345678 ...

It would seem that channels might even cooperate within cell transfers, meaning that we can apparently schedule two long transfer cells and have the DMA controller switch between the two channels after every four bytes. Here, I wrote a short test program employing text strings and the UART peripheral to see if the microcontroller would “zip up” the strings, with the following being used for single-byte cells:

Channel #1: "Adoc gi,hlo\r"
Channel #2: "n neaan el!\n"
Combined: "And once again, hello!\r\n"

Then, seeing that it did, I decided that this had a chance of also working with pixel data. Here, every other pixel on a line needs to be presented to each channel, with the first channel being responsible for the pixels in odd-numbered positions, and the second channel being responsible for the even-numbered pixels. Since the DMA controller is unable to step through the data at address increments other than one (which may be a feature of other DMA implementations), we have to rearrange each line of pixel data as follows:

Displayed pixels:  123456......7890
Rearranged pixels: 135...79246...80
                   *       *

Here, the asterisks mark the start of each channel’s data, with each channel only needing to transfer half the usual amount.

The documentation does, in fact, mention that where multiple channels are active with the same priority, each one is given control in turn, with the controller cycling through them repeatedly. The order in which they are chosen, which is important for us, seems to depend on various factors, only some of which I can claim to understand. For instance, I suspect that if the second channel refers to data that appears before the first channel’s data in memory, it gets scheduled first when both channels are activated. Although this is not a significant concern when just trying to produce a stable picture, it does limit more advanced operations such as horizontal scrolling.

As you can see, trying this technique out with timed transfers actually made a difference. Instead of only managing something approaching 80 pixels across the screen, more than 90 can be accommodated. Meanwhile, experiments with transfers going as fast as possible seemed to make no real difference, and the fourth pixel in each group was still wider than the others. Still, making the timed transfer mode more usable is a small victory worth having, I suppose.

Parallel Mode Revisited

At the start of my interest in this project, I had it in my mind that I would couple DMA transfers with the parallel mode (or Parallel Master Port) functionality in order to generate a VGA signal. Certain aspects of this, particularly gaps between pixels, made me less than enthusiastic about the approach. However, in considering what might be done to the output signal in other situations, I had contemplated the use of a flip-flop to hold output stable according to a regular tempo, rather like what I managed to achieve, almost inadvertently, when introducing a transfer timer. Until recently, I had failed to apply this idea to where it made most sense: in regulating the parallel mode signal.

Since parallel mode is really intended for driving memory devices and display controllers, various control signals are exposed via pins that can tell these external devices that data is available for their consumption. For our purposes, a flip-flop is just like a memory device: it retains the input values sampled by its input pins, and then exposes these values on its output pins when the inputs are “clocked” into memory using a “clock pulse” signal. The parallel mode peripheral in the microcontroller offers various different signals for such clock and selection pulse purposes.

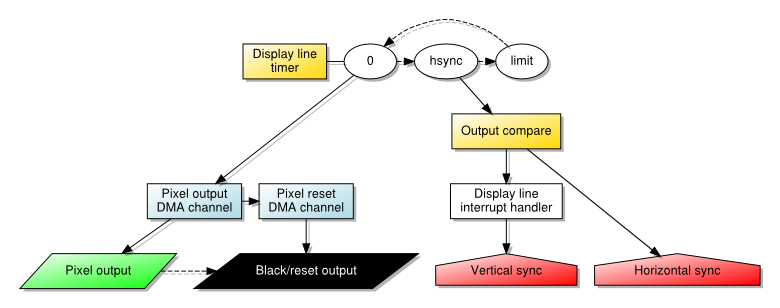

The parallel mode circuit showing connections relevant to VGA output (generic connections are not shown)

Employing the PMWR (parallel mode write) signal as the clock pulse, directing the display signals to the flip-flop’s inputs, and routing the flip-flop’s outputs to the VGA circuit solved the pixel gap problem at a stroke. Unfortunately, it merely reminded us that the wide pixel problem affects parallel mode output, too. Although the clock pulse is able to tell an external component about the availability of a new pixel value, it is up to the external component to regulate the appearance of each pixel. A memory device does not care about the timing of incoming data as long as it knows when such data has arrived, and so such regulation is beyond the capabilities of a flip-flop.

It was observed, however, that since each group of pixels is generated at a regular frequency, the PMWR signal might be scaled down in frequency by a constant factor. This might allow some pixel data to linger slightly longer in the flip-flop and so be slightly stretched. By the time the fourth pixel in a group arrives, the time allocated to that pixel would be the same as for those preceding it, thus producing consistently-sized pixels. I imagine that a factor of 8/9 might do the trick, but I haven’t considered what modification to the circuit might be needed or whether it would all be too complicated.
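The 8/9 figure can be tied to the size of the gap with a little arithmetic. Assume (this is my assumption, not a measurement) that a narrow pixel lasts u and that each group of four pixels takes (4 + g)u in total, the extra gu being the stretch on the fourth pixel. Spreading the whole group evenly over four pixels gives:

```latex
T_{\text{group}} = (4 + g)\,u,
\qquad
T'_{\text{pixel}} = \frac{T_{\text{group}}}{4} = \frac{4 + g}{4}\,u,
\qquad
f' = \frac{1}{T'_{\text{pixel}}} = \frac{4}{4 + g}\cdot\frac{1}{u}.
```

A factor of 8/9 therefore corresponds to g = 1/2, a fourth pixel one and a half times the width of the others; a full one-slot gap, as in the idealised single-channel transfer diagram (g = 1), would instead call for 4/5.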

Recognising the Obvious

When people experiment with video signals from microcontrollers, they tend to write code that runs as efficiently as is absolutely possible (using assembly language if necessary) to generate the video signal. It may only be those of us using microcontrollers with DMA peripherals who want to try and get the DMA hardware to do the heavy lifting. Indeed, those of us with exposure to display peripherals provided by system-on-a-chip solutions feel almost obliged to do things this way.

But recent discussions with one of my correspondents made me reconsider whether an adequate solution might be achieved by just getting the CPU to do the work of transferring pixel data to the display. Previously, another correspondent had indicated that this was potentially tricky, and that getting the timings right was more difficult than letting the hardware synchronise the different mechanisms (timer and DMA) all by itself. Involving the CPU means that the display line timer would need to generate an interrupt, the handling of which would cause the CPU to start running a loop that copies data from the framebuffer to the output port.

This approach puts us at the mercy of how the CPU handles and dispatches interrupts. Being somewhat conservative about the mechanisms more generally available on various MIPS-based products, I tend to choose a single interrupt vector and then test for the different conditions. Since we need as little variation as possible in the latency between a timer event occurring and the pixel data being generated, I test for that particular event before even considering anything else. Then, a loop of the following form is performed:

for (current = line_data; current < end; current++) *output_port = *current;

Here, the line data is copied byte by byte to the output port. Some adornments are necessary to persuade the compiler to generate code that writes the data efficiently and in order, but there is nothing particularly exotic required and GCC does a decent job of doing what we want. After the loop, a black/reset pixel is generated to set the appropriate output level.

One concern one might have about using the CPU for such long transfers in an interrupt handler is that it ties up the CPU, preventing it from doing other things, and that it also prevents other interrupt requests from being serviced. In a system performing a limited range of activities, this can be acceptable: there may be little else going on apart from updating the display and running programs that access the display; even when other activities are incorporated, they may accommodate being relegated to a secondary status, or they may instead take priority over the display in a way that may produce picture distortion, but only very occasionally.

Many of these considerations applied to systems of an earlier era. Think back to computers like the Acorn Electron, a 6502-based system that formed the basis of my first sustained experiences with computing. It employs a display controller that demands access to the computer’s RAM for a certain amount of the time dedicated to each video frame. The CPU is often slowed down or even paused during periods of this display controller’s activity, making programs slower than they otherwise would be, and making some kinds of input and output slightly less reliable under certain circumstances. Nevertheless, certain kinds of additional hardware are able to interrupt the CPU and override the display controller, which then produces “snow” or noise on the screen as a consequence of this high-priority interruption.

Such issues cause us to consider the role of the DMA controller in our modern experiment. We might well worry about loading the CPU with lots of work, preventing it from doing other things, but what if the DMA controller dominates the system in such a way that it effectively prevents the CPU from doing anything productive anyway? This would be rather similar to what happens with the Electron and its display controller.

So, evaluating a CPU-driven solution seems to be worthwhile just to see whether it produces an acceptable picture and whether it causes unacceptable performance degradation. My recent correspondence also brought up the assertion that the RAM and flash memory provided by PIC32 microcontrollers can be accessed concurrently. This would actually mitigate contention between DMA and any programs running from flash memory, at least until the point that accesses to RAM needed to be performed by those programs, meaning that we might expect some loss of program performance by shifting the transfer burden to the CPU.

(Again, I am reminded of the Electron whose ROM could be accessed at full speed but whose RAM could only be accessed at half speed by the CPU but at full speed by the display controller. This might have been exploited by software running from ROM, or by a special kind of RAM installed and made available at the right place in memory, but the 6502 favours those zero-page instructions mentioned earlier, forcing RAM access and thus contention with the display controller. There were upgrades to mitigate this by providing some dedicated memory for zero page, but all of this is really another story for another time.)

Ultimately, having accepted that the compiler would produce good-enough code and that I didn’t need to try more exotic things with assembly language, I managed to produce a stable picture.

Maybe I should have taken this obvious path from the very beginning. However, the presence of DMA support would have eventually caused me to investigate its viability for this application, anyway. And it should be said that the performance differences between the CPU-based approach and the DMA-based approaches might be significant enough to argue in favour of the use of DMA for some purposes.

Observations and Conclusions

What started out as a quick review of my earlier work turned out to be a more thorough study of different techniques and approaches. I managed to get timed transfers functioning, revisited parallel mode and made it work in a fairly acceptable way, and I discovered some optimisations that help to make certain combinations of techniques more usable. But what ultimately matters is which approaches can actually be used to produce a picture on a screen while programs are being run at the same time.

To give the CPU more to do, I decided to implement some graphical operations, mostly copying data to a framebuffer for its eventual transfer as pixels to the display. The idea was to engage the CPU in actual work whilst also exercising access to RAM. If significant contention between the CPU and DMA controller were to occur, the effects would presumably be visible on the screen, potentially making the chosen configuration unusable.

Although some approaches seem promising on paper, and they may even produce promising results when the CPU is doing little more than looping and decrementing a register to introduce a delay, these approaches may produce less than promising results under load. The picture may start to ripple and stretch, and under “real world” load, the picture may seem noisy and like a badly-tuned television (for those who remember the old days of analogue broadcast signals).

Two approaches seem to remain robust, however: the use of timed DMA transfers, and the use of the CPU to do all the transfer work. The former is limited in terms of resolution and introduces complexity around the construction of images in the framebuffer, at least if optimised as much as possible, but it seems to allow more work to occur alongside the update of the display, and the reduction in resolution also frees up RAM for other purposes for those applications that need it. Meanwhile, the latter provides the resolution we originally sought and offers a straightforward framebuffer arrangement, but it demands more effort from the CPU, slowing things down to the extent that animation practically demands double buffering and thus the allocation of even more RAM for display purposes.

But both of these seemingly viable approaches produce consistent pixel widths, which is something of a happy outcome given all the effort to try and fix that particular problem. One can envisage accommodating them both within a system given that various fundamental system properties (how fast the system and peripheral clocks are running, for example) are shared between the two approaches. Again, this is reminiscent of microcomputers where a range of display modes allowed developers and users to choose the trade-off appropriate for them.

Having investigated techniques like hardware scrolling and sprite plotting, it is tempting to keep developing software to demonstrate the techniques described in this article. I am even tempted to design a printed circuit board to tidy up my rather cumbersome breadboard arrangement. And perhaps the library code I have written can be used as the basis for other projects.

It is remarkable that a home-made microcontroller-based solution can be versatile enough to demonstrate aspects of simple computer systems, possibly even making it relevant for those wishing to teach or to learn about such things, particularly since all the components can be connected together relatively easily, with only some magic happening in the microcontroller itself. And with such potential, maybe this seemingly pointless project might have some meaning and value after all!

Update

Although I can’t embed video files of any size here, I have made a “standard definition” video available to demonstrate scrolling and sprites. I hope it is entertaining and also somewhat illustrative of the kind of thing these techniques can achieve.

Thursday, 01 November 2018

Keep yourself organized

English on Björn Schießle - I came for the code but stayed for the freedom | 17:00, Thursday, 01 November 2018

Over the years I have tried various tools to organize my daily work and manage my ToDo list, including Emacs org-mode, ToDo.txt, Kanban boards and simple plain text files. These are all great tools, but I was never completely happy with them: over time the list always became too unstructured and crowded, or I didn’t manage to integrate it into my daily workflow.

Another tool I used throughout this time was Zim, a wiki-like desktop app with many great features and extensions. I used it for notes and to plan and organize larger projects. At some point I had the idea of also using it for my task management and to organize my daily work. Zim’s great strength, its flexibility, can also be a weak spot, because you have to find your own way to organize and structure your stuff. After reading various articles like “Getting Things Done” with Zim and trying different approaches, I came up with a setup which works nicely for me.

Basic setup

Zim allows you to have multiple, completely separate “notebooks”. But I soon realized that this adds another level of complexity and makes it less likely that I use it regularly. Therefore I decided to have just one single notebook, with the three top-level pages “FSFE”, “Nextcloud” and “Personal”. Each of these top-level pages has four sub-pages: “Archive”, “Notes”, “Ideas” and “Projects”. Every project that is done and every note that is no longer needed is moved to the archive.

Additionally, I created a top-level category called “Journal”. I use it to organize my week and to prepare my weekly work report. Zim has quite powerful journal functionality with an integrated calendar. You can have daily, weekly, monthly or yearly journals. I decided to use weekly journals because at Nextcloud we also write weekly work reports, so this fits quite well. Whenever I click on a date in the calendar, the journal of the corresponding week opens. I use a template so that a newly created journal looks like the screenshot at the top.

Daily usage

As you can see in the picture at the top, the template directly adds recurring tasks like “check support tickets” or “write work report”. When one of the tasks is completed I tick the checkbox. During the day, tasks typically come in which I can’t handle immediately. In this case I quickly decide whether to add it to the current day or the next day, depending on how urgent the task is and whether I plan to handle it on the same day or not. Sometimes, if I already know that the task has a deadline later in the week, I add it directly to a later day, in rare situations even to the next week. But in general I try not to plan the work too far into the future.

In the past I added everything to my ToDo list, including stuff whose deadline was a few months away or stuff I wanted to do once I had some time left. My experience is that this makes the list grow so quickly that it becomes highly unproductive. That’s why I decided to add stuff like this to the “Ideas” category. I review this category regularly to see whether some of it could become a real project or task, or whether it is no longer relevant. If something already has a deadline, but only in a few months, I create a calendar entry with a reminder and add it to the task list later, once it becomes relevant again. This keeps the ToDo list manageable.

At the bottom of the screenshot you see the beginning of my weekly work report, so I can update it directly during the week and don’t have to remember at the end of the week what I have actually done. At the end of each day I review the tasks for the day, mark finished stuff as done and re-evaluate the other stuff: Some tasks might be no longer relevant so that I can remove them completely, otherwise I move them forward to the next day. At the end of the week the list looks something like this:

This is the first time that I have started to feel really comfortable with my workflow. The first thing I do in the morning is open Zim and move it to my second screen, where it stays open until the end of the day. I think the reason it works so well is that it provides a clear structure and integrates well with all the other stuff like notes, project handling, writing my work report and so on.

What I’m missing

Of course nothing is perfect. This is also true for Zim. The one thing I really miss is a good mobile app to add, update or remove tasks while I’m away from my desktop. At the moment I sync the Zim notebook with Nextcloud and use the Nextcloud Android app to browse the notebook and open the files with a normal text editor. This works, but a dedicated Zim app for Android would make it perfect.

Sunday, 28 October 2018

Linux Software RAID mismatch Warning

Evaggelos Balaskas - System Engineer | 16:18, Sunday, 28 October 2018

I have been using Linux Software RAID for years now. It is reliable and stable (as long as your hard disks are reliable), with very few problems. One recent issue, reported by the daily raid-check cron job, was this:

WARNING: mismatch_cnt is not 0 on /dev/md0

Raid Environment

A few details on this specific raid setup:

RAID 5 with 4 Drives

With 4 x 1TB hard disks, and according to the online RAID calculator, this setup is fault tolerant and cheap but not fast.

Raid Details

# /sbin/mdadm --detail /dev/md0

The RAID configuration is valid:

/dev/md0:
Version : 1.2
Creation Time : Wed Feb 26 21:00:17 2014
Raid Level : raid5
Array Size : 2929893888 (2794.16 GiB 3000.21 GB)
Used Dev Size : 976631296 (931.39 GiB 1000.07 GB)
Raid Devices : 4
Total Devices : 4
Persistence : Superblock is persistent
Update Time : Sat Oct 27 04:38:04 2018
State : clean
Active Devices : 4
Working Devices : 4
Failed Devices : 0
Spare Devices : 0
Layout : left-symmetric
Chunk Size : 512K
Name : ServerTwo:0 (local to host ServerTwo)
UUID : ef5da4df:3e53572e:c3fe1191:925b24cf
Events : 60352

Number   Major   Minor   RaidDevice   State
   4       8       16        0        active sync   /dev/sdb
   1       8       32        1        active sync   /dev/sdc
   6       8       48        2        active sync   /dev/sdd
   5       8        0        3        active sync   /dev/sda

Examine Verbose Scan

With more detailed output:

# mdadm -Evvvvs

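Three of the disks show a Bad Block Log. Note that the “512 entries available” line describes the capacity of the log, not a count of bad blocks found; in any case, a few bad blocks would be perfectly normal for two-year-old disks. smartctl is a tool you need to run from time to time.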

/dev/sdd:
Magic : a92b4efc
Version : 1.2
Feature Map : 0x0
Array UUID : ef5da4df:3e53572e:c3fe1191:925b24cf
Name : ServerTwo:0 (local to host ServerTwo)
Creation Time : Wed Feb 26 21:00:17 2014
Raid Level : raid5
Raid Devices : 4
Avail Dev Size : 1953266096 (931.39 GiB 1000.07 GB)
Array Size : 2929893888 (2794.16 GiB 3000.21 GB)
Used Dev Size : 1953262592 (931.39 GiB 1000.07 GB)
Data Offset : 259072 sectors
Super Offset : 8 sectors
Unused Space : before=258984 sectors, after=3504 sectors
State : clean
Device UUID : bdd41067:b5b243c6:a9b523c4:bc4d4a80
Update Time : Sun Oct 28 09:04:01 2018
Bad Block Log : 512 entries available at offset 72 sectors
Checksum : 6baa02c9 - correct
Events : 60355
Layout : left-symmetric
Chunk Size : 512K
Device Role : Active device 2
Array State : AAAA ('A' == active, '.' == missing, 'R' == replacing)

/dev/sde:
MBR Magic : aa55
Partition[0] : 8388608 sectors at 2048 (type 82)
Partition[1] : 226050048 sectors at 8390656 (type 83)

/dev/sdc:
Magic : a92b4efc
Version : 1.2
Feature Map : 0x0
Array UUID : ef5da4df:3e53572e:c3fe1191:925b24cf
Name : ServerTwo:0 (local to host ServerTwo)
Creation Time : Wed Feb 26 21:00:17 2014
Raid Level : raid5
Raid Devices : 4
Avail Dev Size : 1953263024 (931.39 GiB 1000.07 GB)
Array Size : 2929893888 (2794.16 GiB 3000.21 GB)
Used Dev Size : 1953262592 (931.39 GiB 1000.07 GB)
Data Offset : 259072 sectors
Super Offset : 8 sectors
Unused Space : before=258992 sectors, after=3504 sectors
State : clean
Device UUID : a90e317e:43848f30:0de1ee77:f8912610
Update Time : Sun Oct 28 09:04:01 2018
Checksum : 30b57195 - correct
Events : 60355
Layout : left-symmetric
Chunk Size : 512K
Device Role : Active device 1
Array State : AAAA ('A' == active, '.' == missing, 'R' == replacing)

/dev/sdb:
Magic : a92b4efc
Version : 1.2
Feature Map : 0x0
Array UUID : ef5da4df:3e53572e:c3fe1191:925b24cf
Name : ServerTwo:0 (local to host ServerTwo)
Creation Time : Wed Feb 26 21:00:17 2014
Raid Level : raid5
Raid Devices : 4
Avail Dev Size : 1953263024 (931.39 GiB 1000.07 GB)
Array Size : 2929893888 (2794.16 GiB 3000.21 GB)
Used Dev Size : 1953262592 (931.39 GiB 1000.07 GB)
Data Offset : 259072 sectors
Super Offset : 8 sectors
Unused Space : before=258984 sectors, after=3504 sectors
State : clean
Device UUID : ad7315e5:56cebd8c:75c50a72:893a63db
Update Time : Sun Oct 28 09:04:01 2018
Bad Block Log : 512 entries available at offset 72 sectors
Checksum : b928adf1 - correct
Events : 60355
Layout : left-symmetric
Chunk Size : 512K
Device Role : Active device 0
Array State : AAAA ('A' == active, '.' == missing, 'R' == replacing)

/dev/sda:
Magic : a92b4efc
Version : 1.2
Feature Map : 0x0
Array UUID : ef5da4df:3e53572e:c3fe1191:925b24cf
Name : ServerTwo:0 (local to host ServerTwo)
Creation Time : Wed Feb 26 21:00:17 2014
Raid Level : raid5
Raid Devices : 4
Avail Dev Size : 1953263024 (931.39 GiB 1000.07 GB)
Array Size : 2929893888 (2794.16 GiB 3000.21 GB)
Used Dev Size : 1953262592 (931.39 GiB 1000.07 GB)
Data Offset : 259072 sectors
Super Offset : 8 sectors
Unused Space : before=258984 sectors, after=3504 sectors
State : clean
Device UUID : f4e1da17:e4ff74f0:b1cf6ec8:6eca3df1
Update Time : Sun Oct 28 09:04:01 2018
Bad Block Log : 512 entries available at offset 72 sectors
Checksum : bbe3e7e8 - correct
Events : 60355
Layout : left-symmetric
Chunk Size : 512K
Device Role : Active device 3
Array State : AAAA ('A' == active, '.' == missing, 'R' == replacing)

MisMatch Warning

WARNING: mismatch_cnt is not 0 on /dev/md0

This is not a critical error; rather, it tells us that there are a few blocks that are “Not Synced Yet” across all disks.

Status

Checking the Multiple Device (md) driver status:

# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]
md0 : active raid5 sdc[1] sda[5] sdd[6] sdb[4]
2929893888 blocks super 1.2 level 5, 512k chunk, algorithm 2 [4/4] [UUUU]

This verifies that no job is currently running on the array.

Repair

We can run a manual repair job:

# echo repair >/sys/block/md0/md/sync_action

Now the status looks like this:

# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]
md0 : active raid5 sdc[1] sda[5] sdd[6] sdb[4]
2929893888 blocks super 1.2 level 5, 512k chunk, algorithm 2 [4/4] [UUUU]
[=========>...........] resync = 45.6% (445779112/976631296) finish=54.0min speed=163543K/sec
unused devices: <none>

Progress

Personalities : [raid6] [raid5] [raid4]
md0 : active raid5 sdc[1] sda[5] sdd[6] sdb[4]
2929893888 blocks super 1.2 level 5, 512k chunk, algorithm 2 [4/4] [UUUU]
[============>........] resync = 63.4% (619673060/976631296) finish=38.2min speed=155300K/sec
unused devices: <none>

Personalities : [raid6] [raid5] [raid4]
md0 : active raid5 sdc[1] sda[5] sdd[6] sdb[4]
2929893888 blocks super 1.2 level 5, 512k chunk, algorithm 2 [4/4] [UUUU]
[================>....] resync = 81.9% (800492148/976631296) finish=21.6min speed=135627K/sec
unused devices: <none>

Finally

Personalities : [raid6] [raid5] [raid4]
md0 : active raid5 sdc[1] sda[5] sdd[6] sdb[4]
2929893888 blocks super 1.2 level 5, 512k chunk, algorithm 2 [4/4] [UUUU]
unused devices: <none>

Check

After the repair, it is useful to check the status of our software RAID again:

# echo check >/sys/block/md0/md/sync_action

# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]
md0 : active raid5 sdc[1] sda[5] sdd[6] sdb[4]
2929893888 blocks super 1.2 level 5, 512k chunk, algorithm 2 [4/4] [UUUU]
[=>...................] check = 9.5% (92965776/976631296) finish=91.0min speed=161680K/sec
unused devices: <none>

And finally:

# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]
md0 : active raid5 sdc[1] sda[5] sdd[6] sdb[4]
2929893888 blocks super 1.2 level 5, 512k chunk, algorithm 2 [4/4] [UUUU]
unused devices: <none>

Tuesday, 23 October 2018

KDE Applications 18.12 Schedule finalized

TSDgeos' blog | 22:59, Tuesday, 23 October 2018

It is available at the usual place: https://community.kde.org/Schedules/Applications/18.12_Release_Schedule

Dependency freeze is in 2 weeks and Feature Freeze in 3 weeks, so hurry up!

Monday, 22 October 2018

Reinventing Startups

Blog – Think. Innovation. | 13:08, Monday, 22 October 2018

Once we have come to realize that the Silicon Valley model of startup-based innovation is not for the betterment of people and planet, but is a hyper-accelerated version of the existing growth-based capitalist Operating System increasing inequality, destroying nature and benefiting the 1%, the question arises: is there an alternative?

One group of people who have come to this realization is searching for an alternative model. They call their companies Zebras, as opposed to the Silicon Valley $1 billion+ valued startups called Unicorns. Their movement is called Zebras Unite and is definitely worth checking out. I recently wrote a ‘Fairy Tale’ story to give Zebras Unite a warning, as I believe they run the risk of leaving the root cause of the current faulty Operating System untouched. This warning is based on the brilliant work of Douglas Rushkoff.

This article proposes an alternative entrepreneurial-based innovation model that I believe has the potential to be good for people and planet by design. This model goes beyond the lip service of the hyped terms ‘collaboration’, ‘openness’, ‘sharing’ and ‘transparency’ and puts these terms in practice in a structural and practical way. The model is based on the following premises, while at the same time leaving opportunity for profitable business practices:

- Freely sharing all created knowledge and technology under open licenses: if we are going to solve the tremendous ‘wicked’ problems of humanity, we need to realize that no single company can solve these on its own. We need to realize that getting to know and building upon each other’s innovations via coincidental meetings, liking and contract agreements is too slow and leaves too much to chance. Instead, we need to realize that there is no time to waste and that all heads, hands and inventiveness need to be shared immediately and openly to achieve what I call Permissionless Innovation.

- Being growth agnostic: if we are creating organizations and companies, these should not depend on growth for their survival. Instead they should thrive no matter their size, because they provide a sustained source of value to humanity.

- Having interests aligned with people and planet: for many corporations, especially VC-backed and publicly traded companies, doing good for owners/shareholders and doing good for people and the planet is a trade-off. It should not be that way. Providing value for people and planet should not conflict with providing value for owners/shareholders.

Starting from these premises, I propose the following structural changes for customer-focused organizations and companies:

Intellectual Property

We should stop pretending that knowledge is scarce, whereas it is in fact abundant. Putting open collaboration in practice means that sharing is the default: publish technology/designs/knowledge under Open Licenses. This results in what I call ‘Permissionless Innovation’ and favors inclusiveness, as innovation is no longer only for the privileged. Also, no single company can claim to have the knowledge to solve ‘wicked problems’; we need a free flow of information for that. And IP protection also creates huge amounts of ‘competitive waste’.

Sustainable Product Development

Based on abundant knowledge we can co-design products that are made to be used, studied, repaired, customized and innovated upon. We get rid of the linear ‘producer => product => consumer’ and get to a ‘prosumer’ or ‘peer to peer’ dynamic model. This goes beyond the practice of Design Thinking / Service Design and crowdsourcing and puts the power of design and production outside of the company as well as inside it (open boundaries).

Company Ownership

Worker- and/or customer-owned cooperatives should be the standard, instead of rent-seeking, hands-off investor shareholder-owners. With cooperative ownership the interests of the company are automatically aligned with those of the owners. I do not have sufficient knowledge about how this could work with start-up founders who are also owners, but at some point the founders should transition their ownership.

Funding

Companies that need outside funding should be aware of the ‘growth trap’ as described above. Investment should be limited in time (capped ROI) and loans paid back. To be sustainable and ‘circular’, the company should not depend on loans or investments in the long run. Ideally, in the future even the use of fiat money should be abandoned in favor of an alternative currency that does not require continuous, endless growth.

Scaling

Getting a valuable solution into the hands of those who need it should not depend on a centralized organization having control (the supply-chain model). Putting ‘distributive’ into practice means an open, dynamic ecosystem model supporting local initiative and entrepreneurship, while the company has its unique place in the ecosystem. This is similar to the franchise model, but mainly permissionless except for brands/trademarks. This dynamic model is much more resilient and can scale faster, which is especially relevant for non-digital products.

– Diderik

The text in this article is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.

Photo from Stocksnap.io.

This article first appeared on reinvent.cc.

Saturday, 20 October 2018

sharing keyboard and mouse with synergy

Evaggelos Balaskas - System Engineer | 21:34, Saturday, 20 October 2018

Synergy

Mouse and Keyboard Sharing

aka Virtual-KVM

Open source core of Synergy, the keyboard and mouse sharing tool

You can find the code here:

https://github.com/symless/synergy-core

or you can use the alternative, barrier:

https://github.com/debauchee/barrier

Setup

My setup looks like this:

I bought a docking station for the company laptop. I want to use a single monitor, keyboard and mouse with both my desktop PC and the laptop when I am at home.

My desktop PC runs Arch Linux and the company laptop runs Windows 10.

Keyboard and mouse are connected to linux.

Both machines are connected on the same LAN (cables on a switch).

Host

/etc/hosts

192.168.0.11 myhomepc.localdomain myhomepc

192.168.0.12 worklaptop.localdomain worklaptop

Archlinux

The desktop PC will be my virtual KVM (software) server, so I need to run synergy as a server.

Configuration

If no configuration file pathname is provided then the first of the following to load successfully sets the configuration:

${HOME}/.synergy.conf

/etc/synergy.conf

vim ${HOME}/.synergy.conf

section: screens

# two hosts named: myhomepc and worklaptop

myhomepc:

worklaptop:

end

section: links

myhomepc:

left = worklaptop

end
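As far as I understand the synergy configuration format, links are one-way: `left = worklaptop` under `myhomepc` only describes leaving myhomepc through its left edge, and the way back needs its own entry under `worklaptop`. Below is a minimal Python sketch (not part of synergy, and an assumption-laden one: it only handles the plain `direction = screen` lines used above, not range modifiers) that lists links without a matching reverse link. `parse_links` and `one_way_links` are hypothetical helper names.

```python
# Opposite edge for each direction keyword used in synergy's links section.
OPPOSITE = {"left": "right", "right": "left", "up": "down", "down": "up"}


def parse_links(conf_text):
    """Return (screen, direction, target) triples from every `section: links` block.

    Assumes the simple syntax used above: a `name:` line followed by
    `direction = other` lines. Range modifiers such as left(0,50) are not handled.
    """
    links = []
    in_links = False
    screen = None
    for raw in conf_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and indentation
        if not line:
            continue
        if line.startswith("section:"):
            in_links = line.split(":", 1)[1].strip() == "links"
            screen = None
        elif line == "end":
            in_links = False
        elif in_links and line.endswith(":"):
            screen = line[:-1].strip()
        elif in_links and screen and "=" in line:
            direction, target = (part.strip() for part in line.split("=", 1))
            links.append((screen, direction, target))
    return links


def one_way_links(conf_text):
    """Return the links that have no matching link back in the opposite direction."""
    links = parse_links(conf_text)
    present = set(links)
    return [
        (src, direction, dst)
        for src, direction, dst in links
        if (dst, OPPOSITE.get(direction), src) not in present
    ]


if __name__ == "__main__":
    # The links section from the configuration above.
    conf = """
section: links
    myhomepc:
        left = worklaptop
end
"""
    print(one_way_links(conf))  # [('myhomepc', 'left', 'worklaptop')]
```

If it reports a one-way link, adding a `worklaptop:` entry with `right = myhomepc` under `section: links` should let the pointer cross back over the shared edge instead of only returning when the client disconnects.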

Testing

run in the foreground

$ synergys --no-daemon

example output:

[2018-10-20T20:34:44] NOTE: started server, waiting for clients
[2018-10-20T20:34:44] NOTE: accepted client connection
[2018-10-20T20:34:44] NOTE: client "worklaptop" has connected
[2018-10-20T20:35:03] INFO: switch from "myhomepc" to "worklaptop" at 1919,423
[2018-10-20T20:35:03] INFO: leaving screen
[2018-10-20T20:35:03] INFO: screen "myhomepc" updated clipboard 0
[2018-10-20T20:35:04] INFO: screen "myhomepc" updated clipboard 1
[2018-10-20T20:35:10] NOTE: client "worklaptop" has disconnected
[2018-10-20T20:35:10] INFO: jump from "worklaptop" to "myhomepc" at 960,540
[2018-10-20T20:35:10] INFO: entering screen
[2018-10-20T20:35:14] NOTE: accepted client connection
[2018-10-20T20:35:14] NOTE: client "worklaptop" has connected
[2018-10-20T20:35:16] INFO: switch from "myhomepc" to "worklaptop" at 1919,207
[2018-10-20T20:35:16] INFO: leaving screen
[2018-10-20T20:43:13] NOTE: client "worklaptop" has disconnected
[2018-10-20T20:43:13] INFO: jump from "worklaptop" to "myhomepc" at 960,540
[2018-10-20T20:43:13] INFO: entering screen
[2018-10-20T20:43:16] NOTE: accepted client connection
[2018-10-20T20:43:16] NOTE: client "worklaptop" has connected
[2018-10-20T20:43:40] NOTE: client "worklaptop" has disconnected

Systemd

To run synergy as a systemd service, you need to copy your configuration file into the /etc directory:

sudo cp ${HOME}/.synergy.conf /etc/synergy.conf

Beware: your user should have read access to the above configuration file.

and then:

$ systemctl start --user synergys

$ systemctl enable --user synergys

Verify

$ ss -lntp '( sport = :24800 )'

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 3 0.0.0.0:24800 0.0.0.0:* users:(("synergys",pid=10723,fd=6))
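To check the same thing from the Windows 10 side before configuring the client, a small Python sketch can attempt a plain TCP connection to port 24800, assuming Python happens to be installed on the laptop. It only shows that the port is reachable (no firewall in the way); it does not speak the synergy protocol. The default address 192.168.0.11 is taken from the /etc/hosts example above, and `port_open` is a hypothetical helper name.

```python
import socket


def port_open(host, port=24800, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds.

    24800 is the port synergys was listening on in the `ss` output above.
    """
    try:
        # create_connection resolves the name and tries each address in turn.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unreachable or unresolvable
        return False


if __name__ == "__main__":
    import sys

    # Default to the server's address from the /etc/hosts example; pass a
    # hostname such as "myhomepc" on the command line to test name resolution too.
    target = sys.argv[1] if len(sys.argv) > 1 else "192.168.0.11"
    print(f"{target}:24800 reachable: {port_open(target)}")
```

Passing `myhomepc` instead of the IP also exercises the hosts entry on the laptop, so a `False` there but `True` for the IP points at name resolution rather than the firewall.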

Win10

On Windows 10 (the synergy client) you just need to connect to the synergy server!

And, of course, create a startup shortcut.

And that’s it!

Thursday, 18 October 2018

No activity

Thomas Løcke Being Incoherent | 11:32, Thursday, 18 October 2018

This blog is currently dead… Catch me on Twitter.

Friday, 28 September 2018

Technoshamanism in Barcelona on October 4

agger's Free Software blog | 13:00, Friday, 28 September 2018

TECHNOSHAMANS, THE HACKERS OF THE AMAZON.

TALK AND DIY RITUAL BY THE INTERNATIONAL TECHNOSHAMANISM NETWORK AT CSOA LA FUSTERIA.

On Thursday 4 October we will hold a talk at CSOA La Fusteria with members of the Tecnoxamanisme (Technoshamanism) network, an international collective producing imaginaries, made up of artists, biohackers, thinkers, activists, indigenous people and indigenists who try to recover ideas of the future lost in the ancestral past. The talk will be led by the writer Francisco Jota-Pérez, and afterwards there will be a DIY ritual performance in which everyone can take part.

What does the hacker movement have in common with the struggles of indigenous peoples threatened by what is called “progress”?

Technoshamanism emerged in 2014 from the confluence of several networks born around the free software and free culture movement, to promote exchanges of technologies, rituals, synergies and sensibilities with indigenous communities. They organize gatherings and events that extend into DIY rituals, electronic music, permaculture and immersive processes, mixing cosmovisions and promoting the decolonization of thought.

According to the members of the technoshamans network: “We still enjoy temporary autonomous zones, the invention of forms of life, of art/life; we try to think about and collaborate with the reforestation of the Earth through an ancestrofuturist imaginary. Our main exercise is to create networks of unconscious minds, strengthening the desire for community, as well as proposing alternatives to the ‘productive’ thinking of science and technology.”

After two international festivals held in the south of Bahia, Brazil (produced together with the indigenous association of the Pataxó people of Aldeia Velha and Aldeia Pará, near Porto Seguro, where the first Portuguese ships arrived during colonization), they are organizing the III Technoshamanism Festival on 5, 6 and 7 October in France. And we are lucky to have them visit Barcelona to share their projects and philosophy with us. They will talk about spiral (non-linear) time, collective fictions, how monotheism and later capitalism hijacked technology, and what we can learn from indigenous communities in terms of both survival and resistance.

We look forward to seeing you all at 20.30 at La Fusteria.

C/J. Benlluire, 212 (El Cabanyal)

In collaboration with Láudano Magazine.

www.laudanomag.com

Tuesday, 25 September 2018

Libre Application Summit 2018

TSDgeos' blog | 22:28, Tuesday, 25 September 2018

Earlier this month I attended Libre Application Summit 2018 in Denver.

Check out the great group photo at #LASGNOME! Thank you for all the participant and speakers for posing for us :-) pic.twitter.com/v7pPmcCBV1

— LAS GNOME (@LASGNOME) 8 September 2018

Libre Application Summit aims to be a place where all people involved in making Free Software applications can meet and share ideas, though, being organized almost entirely by GNOME, it had some skew towards GNOME/flatpak. There was a good KDE presence, but personally I felt we would have needed more people at least from LibreOffice, Firefox and the Ubuntu/Canonical/Snap field (don't be annoyed if I failed to mention your group).

The Summit was kicked off by a motivational talk on how to make sure we ride the wave of "Open Source has won but people don't know it". I felt the content of the talk was nice, but the speaker was hit by some issues (she could not have her laptop in front of her because of the venue's somewhat odd layout) that sadly made her delivery a bit halting.

Then we had a bunch of flatpak-related talks, ranging from the new freedesktop-sdk runtime to very technical details about how ostree works, including a talk by our own Aleix Pol on how KDE is planning to approach the release of flatpaks. Some of us ended the day with a BBQ at the house where the Codethink people were staying. Thanks for the good time!

Soon, @tsdgeos tells us all about KDE Applications releases in @LASGNOME. @kdecommunity pic.twitter.com/ODnxEZ77xv

— Aleix Pol (@AleixPol) 7 September 2018

I kicked off the next day talking about how we (lately mostly Christoph) do the KDE Applications releases. People appreciated the good work we're doing and asked some interesting follow-up questions, so I think the audience was engaged.

The morning continued with talks about how to engage "non-typical" free software people: designers, students, professors, etc.

The @kdecommunity and @Codethink people having interesting discussions over sushi at #LAS2018 pic.twitter.com/O5lH1hPTlk

— Albert Astals Cid (@tsdgeos) 7 September 2018

After lunch we had a few talks by the Elementary people and another talk by Aleix focused on which apps will run on your Plasma devices (hint: all of them).

The day finished with a quiz sponsored by System76; it was fun!

The KDE spies team wasn't very successful at #LASGNOME trivial, but had lots of fun :) @tsdgeos @AleixPol @maru161803399 @albertvaka pic.twitter.com/k0172A3Vk1

— Albert Astals Cid (@tsdgeos) 8 September 2018

The last day of talks started again with me speaking, this time about how amazing Qt is and why you should use it to build your apps. I got some questions from people worried that QtWidgets was going to die; I told them not to worry, but it's clear The Qt Company needs to improve its messaging in that regard.

Learning about Qt in the @LASGNOME from @tsdgeos @qtproject pic.twitter.com/Vr5Raj10Su

— Aleix Pol (@AleixPol) 8 September 2018

After that we had a talk about a Fedora project to create a distro based exclusively on flatpaks, which sounds really interesting. The last talks of LAS 2018 were about how to fight vandalism in crowdsourced data, the status of the Librem 5 (it's still a bit far away) and a very interesting one about the status of Free Software in research.

All in all I think the conference was interesting, though still a bit small and too GNOME-controlled to appeal to a general crowd; but this was only the second time it has been organized, so it will improve.

I want to thank the KDE e.V. for sponsoring my flight and accommodation so I could attend this conference. Please Donate! so we can continue attending conferences like this and spreading the good work of KDE :)

Tuesday, 18 September 2018

No Netflix on my Smart TV

tobias_platen's blog | 19:50, Tuesday, 18 September 2018