I was mostly unplugged on vacation last week, but when Facebook released a disappointing earnings report and its stock subsequently suffered the largest one-day loss by any company in U.S. stock market history, I couldn’t resist chiming in on Twitter:

42% revenue growth an “utter disaster”

I might need to extend my vacation 🙄 https://t.co/7Tyv2Y5jVU

— Ben Thompson (@benthompson) July 26, 2018

I do regret the tweet a tad, and not only because “chiming in on Twitter” is always risky. Back when Stratechery started, I wrote in the very first post that one of the topics I looked forward to exploring was “Why Wall Street is not completely insane”; I was thinking about Apple, a company that, especially at that time, was regularly posting eye-popping revenue and profit numbers that did not necessarily lead to corresponding increases in the stock price, much to the consternation of Apple shareholders. The underlying point should be an obvious one: a stock price is about future earnings, not already realized ones. That the iPhone maker had just had a great quarter was an important signal about the future, but not the determining factor, and those pointing to the past to complain about a price predicated on the future were missing the point.

Of course that is exactly what I did in that tweet.

It’s worth noting, though, that while the explicit reasoning of those Apple stockholders may have been suspect, their sentiment has proven correct: in April 2013 Apple reported quarterly revenue of $43.6 billion and profit of $9.5 billion, and the day I started Stratechery the stock price was $63.25; five years later Apple reported quarterly revenue of $61.1 billion and profit of $13.8 billion, and on Friday the stock price was $190.98.
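Put in percentage terms, the gap between Apple’s results and its stock price is stark. The quick sketch below uses only the figures in the paragraph above; the $63.25 starting price is presumably adjusted for Apple’s 2014 seven-for-one stock split, which is what makes it comparable with the 2018 price.

```python
# Figures as quoted in the paragraph above: quarterly revenue and profit
# in billions of dollars, stock price per share (2013 price assumed split-adjusted).
apple_2013 = {"revenue": 43.6, "profit": 9.5, "stock": 63.25}
apple_2018 = {"revenue": 61.1, "profit": 13.8, "stock": 190.98}


def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new."""
    return (new / old - 1) * 100


# Round each change to the nearest whole percent for readability.
changes = {key: round(pct_change(apple_2013[key], apple_2018[key])) for key in apple_2013}
print(changes)
```

Over those five years revenue rose about 40% and profit about 45%, while the stock roughly tripled (up about 202%): the market was pricing in durability, not any one quarter.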

To be clear, I agreed with the Apple-investor sentiment all along: several of my early articles — Apple the Black Swan, Two Bears, and especially What Clayton Christensen Got Wrong — were about making the case that Apple’s business was far more sustainable with much deeper moats than most people realized, and it was that sustainability and defensibility that mattered more than any one quarter’s results.

The question is whether a similar case can be made for Facebook: taken literally, my tweet was naive for the exact reasons those Apple investor complaints missed the point five years ago; what about the sentiment, though? Just how good a business is Facebook?

As with many such things, it all depends on what lens you use to examine the question.

Lens 1: Facebook’s Finances

As is often the case with earnings, the move in Facebook’s stock was only a bit about the results and a whole lot about future expectations. On Wednesday, Facebook’s stock closed at $217; its earnings then showed revenue of $13.2 billion, slightly below Wall Street’s expectations, and unsurprisingly the stock slid about 8% in after-hours trading to around $200. The real drop was spurred by two comments on the earnings call from Facebook CFO Dave Wehner about the company’s expectations going forward.

First, with regard to revenue:

Turning now to the revenue outlook; our total revenue growth rate decelerated approximately 7 percentage points in Q2 compared to Q1. Our total revenue growth rates will continue to decelerate in the second half of 2018, and we expect our revenue growth rates to decline by high-single digit percentages from prior quarters sequentially in both Q3 and Q4.

Second, with regard to operating margin:

Turning now to expenses; we continue to expect that full-year 2018 total expenses will grow in the range of 50% to 60% compared to last year…Looking beyond 2018, we anticipate that total expense growth will exceed revenue growth in 2019. Over the next several years, we would anticipate that our operating margins will trend towards the mid-30s on a percentage basis.

From a purely financial perspective, both pieces of news are less than ideal but at least understandable. In terms of revenue, Facebook’s growth is from a very large base, which means that this quarter’s 42% year-over-year growth (to $13.2 billion from $9.3 billion) added 36% more revenue in absolute terms than the year-ago quarter’s 45% growth (to $9.3 billion from $6.4 billion). To put it in simpler terms, massive growth rates inevitably decline even as massive absolute growth remains; as a point of comparison, Google in the same relative timeframe (14 years after incorporation) grew 35 percent to $12.21 billion (i.e. Facebook is better on both metrics).
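The growth-rate versus absolute-growth distinction can be checked with a few lines of Python. This sketch uses the rounded Q2 figures quoted in the paragraph; with those rounded inputs the absolute gain comes out about 34% larger rather than 36%, so the 36% figure presumably reflects Facebook’s unrounded reported revenue.

```python
# Q2 revenue in billions of dollars, rounded as quoted in the post.
q2_revenue = {2016: 6.4, 2017: 9.3, 2018: 13.2}


def yoy_growth(year: int) -> float:
    """Year-over-year growth rate for the given year's Q2, as a percentage."""
    return (q2_revenue[year] / q2_revenue[year - 1] - 1) * 100


def abs_growth(year: int) -> float:
    """Absolute year-over-year revenue added in the given year's Q2, in billions."""
    return q2_revenue[year] - q2_revenue[year - 1]


for year in (2017, 2018):
    print(f"{year}: {yoy_growth(year):.0f}% growth, +${abs_growth(year):.1f}B")

# A lower growth rate on a bigger base still adds more dollars.
ratio = abs_growth(2018) / abs_growth(2017)
print(f"2018 added {ratio - 1:.0%} more revenue than 2017")
```

The growth rate falls from 45% to 42%, yet the dollars added rise from $2.9 billion to $3.9 billion, which is the whole point: decelerating percentages and accelerating absolute growth can coexist.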

As for the decline in operating margin: in a normal company, i.e. one with meaningful marginal costs, slower revenue growth would not necessarily lead to a meaningful decline in margin, since selling fewer products would also mean lower costs of goods sold. Facebook, of course, is not a normal company: the only marginal costs for the ads it sells are credit card fees. Like most tech companies, the vast majority of its costs are “below the line” (mostly in Research & Development, but also Sales & Marketing and General & Administrative); it follows, then, that a decrease in revenue growth would, absent an explicit effort to slow expense growth that is unrelated to revenue, lead to lower operating margins.
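The margin mechanics can be illustrated with a toy model. Every number in it is a hypothetical assumption chosen for round arithmetic, not a Facebook figure: a company starts with revenue of 100 and expenses of 55 (a 45% operating margin), and its expenses grow at their own planned rate regardless of revenue, reflecting the point that almost none of them are marginal costs.

```python
# Hypothetical toy model: all inputs are illustrative assumptions,
# not Facebook's actual revenue, expenses, or growth rates.


def operating_margins(revenue, expenses, revenue_growth, expense_growth):
    """Return the operating margin for each projected year.

    Expenses compound at their own rate, independent of revenue,
    because (per the post) they are almost entirely fixed, not marginal.
    """
    margins = []
    for r_g, e_g in zip(revenue_growth, expense_growth):
        revenue *= 1 + r_g
        expenses *= 1 + e_g
        margins.append((revenue - expenses) / revenue)
    return margins


# Scenario A: revenue and expenses both grow 40% a year.
steady = operating_margins(100.0, 55.0, [0.40, 0.40, 0.40], [0.40, 0.40, 0.40])
# Scenario B: revenue growth decelerates while expense growth does not.
slowing = operating_margins(100.0, 55.0, [0.40, 0.30, 0.20], [0.40, 0.40, 0.40])

print("steady: ", [f"{m:.0%}" for m in steady])
print("slowing:", [f"{m:.0%}" for m in slowing])
```

In the steady scenario the margin holds at 45%; in the slowing scenario it falls to roughly 41% and then 31%, even though revenue still grows every year and no expense line changed its plan.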

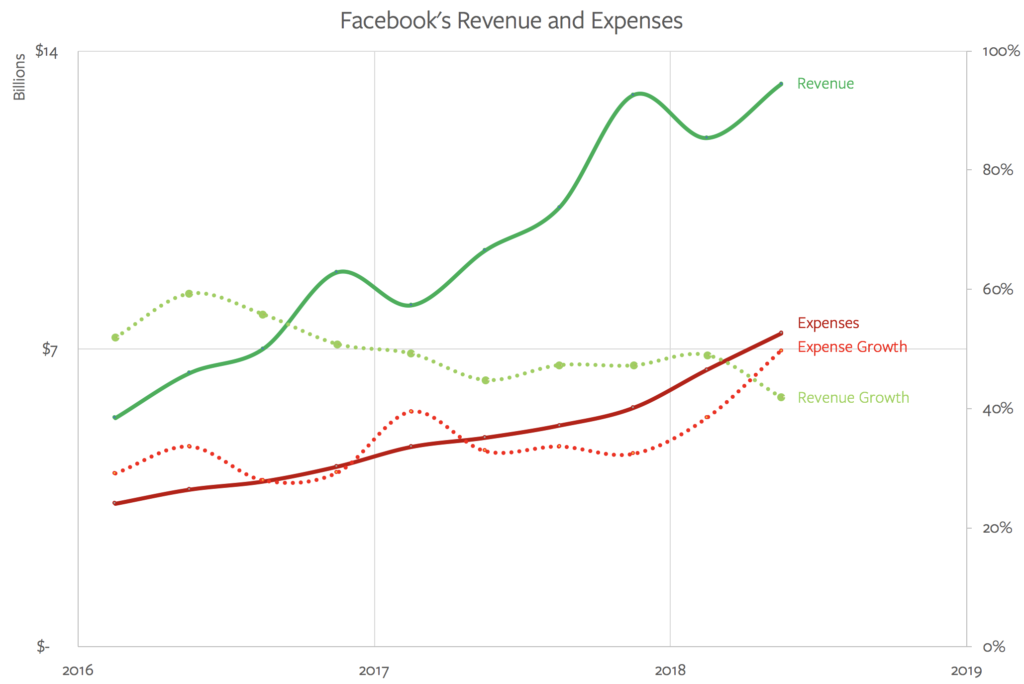

In fact, Facebook is not only not decreasing expenses, they are going in the opposite direction; expenses are growing faster than ever, even as revenue growth clearly fell off:

I suspect it is this chart, more than anything else, that explains the drop in Facebook’s stock price: it’s not one thing or the other; it is revenue growth slowing and expenses accelerating at the same time, and all indications from management are that both trends will continue.

Again, relatively speaking Facebook is in great shape financially (I already noted the company had better revenue and growth numbers than Google at a similar point, and its operating margins are substantially better as well), but there’s no question this is a pretty substantial shift in the company’s long-term outlook. The financial lens still provides a pretty positive view, but it is indeed less positive than before.

Lens 2: Facebook’s Products

It is always a bit confusing to write about Facebook, because there is both Facebook the company and Facebook the product, and there is no question the greatest amount of negativity has, for several years now, been centered around the latter. To that end, it is tempting to conflate the two; for example, the New York Times wrote in an article headlined Facebook Starts Paying a Price for Scandals:

For nearly two years, Facebook has appeared bulletproof despite a series of scandals about the misuse of its giant social network. But the Silicon Valley company’s streak ended on Wednesday when it said that the accumulation of issues was starting to hurt its multibillion-dollar business — and that the costs are set to continue playing out for months.

This is true as far as it goes, particularly when it comes to expenses: Facebook is on pace to increase its security and content review teams to 20,000 people, a three-fold increase in 18 months; that is the investment CEO Mark Zuckerberg was warning about on an earnings call last year:

I’ve directed our teams to invest so much in security on top of the other investments we’re making that it will significantly impact our profitability going forward, and I wanted our investors to hear that directly from me. I believe this will make our society stronger, and in doing so will be good for all of us over the long term. But I want to be clear about what our priority is. Protecting our community is more important than maximizing our profits.

What is much less clear is what effect, if any, Facebook’s controversies have had on the top line. There were three factors that for many years made Facebook a monster when it came to revenue growth:

- The number of users was increasing

- Ad load (the number of ads shown in the News Feed) was increasing

- The price-per-ad was increasing

A year ago, though, Facebook stopped increasing ad load; as I have documented, this did result in an even sharper increase in the price paid per ad, but it was still a drag on growth.

Then, over the last year, Facebook’s user growth started to slow, and in the most-profitable North American region, has effectively plateaued. That, though, isn’t because of Facebook’s controversies: it is because the app has run out of people! The company has 241 million monthly active users in the US & Canada, 65% of the total population of 372 million (including children who aren’t supposed to have accounts before the age of 13).
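The penetration figure is simple division, sketched here with the two numbers from the paragraph above; the remainder is the population that is not a monthly user, a group that includes children under 13.

```python
# Figures from the post, in millions.
us_canada_mau = 241          # Facebook monthly active users in the US & Canada
us_canada_population = 372   # total US & Canada population, as stated in the post

penetration = us_canada_mau / us_canada_population
non_users = us_canada_population - us_canada_mau  # includes children under 13

print(f"Penetration: {penetration:.1%}")
print(f"Not monthly users: {non_users} million")
```

That works out to roughly 64.8% of everyone in the region, leaving 131 million people, many of them too young to have accounts, as the only remaining headroom.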

Given that degree of nearly total penetration, what is more important when it comes to evaluating Facebook’s health is that there is no indication the company is losing users. Sure, the numbers in North America decreased by a million in Q4 2017, but now that million is back; I expect something similar when it comes to the million users the company lost in Europe when it required affirmative consent from users to continue using the app because of GDPR.

The fact of the matter is that nothing has happened to diminish Facebook’s moat when it comes to attracting and retaining users: the number one feature of a social network is how many people are on it, and for all intents and purposes everyone is on Facebook — whether they like it or not.

Interestingly, Facebook is working to deepen that moat even further with its focus on Groups. Zuckerberg said on the earnings call:

There are more than 200 million people that are members of meaningful groups on Facebook, and these are communities that, upon joining, they become the most important part of your Facebook experience and a big part of your real world social infrastructure. These are groups for new parents, for people with rare diseases, for volunteering, for military families deployed to a new base and more.

We believe there is a community for everyone on Facebook. And these meaningful communities often span online and offline and bring people together in person. We found that every great community has an engaged leader. But running a group can take a lot of time. So we have a road map to make this easier. That will enable more meaningful groups to get formed, which will help us to find relevant ones to recommend to you, and eventually achieve our five-year goal of helping 1 billion people be a part of meaningful communities.

Zuckerberg is referring to his 2017 manifesto Building a Global Community; it is a particularly attractive goal from Facebook’s perspective because it makes the product stickier than ever.

All that noted, the most important reason to view Facebook through the lens of the company’s products is that the sheer scale of Facebook the app makes it easy to lose sight of the still substantial growth potential of the company’s other products. Instagram in particular recently passed 1 billion users, an incredible number that is still less than half of Facebook the app’s total users; by that measure Instagram has reached less than half of its addressable market.

Moreover, Instagram has not only been untouched by Facebook’s controversies, it is such a compelling product that, anecdotally speaking, most “Facebook-nevers” or “Facebook-quitters” readily admit to using the service daily. The app also hasn’t come close to reaching its monetization potential: while the feed carries the same ad load as Facebook, the SnapChat-inspired Stories format that has exploded in usage has barely been monetized; in fact, Facebook’s executives attributed some of the company’s slowing revenue growth to increased Stories usage (instead of the feed). From a purely financial perspective this is certainly a cause for concern, but from a strategic perspective it means that Instagram is in an even stronger position than it was previously. Remember, revenue and profit are lagging indicators, and the explosion in Instagram Stories is an extreme example of why that is such an important fact to keep in mind.

WhatsApp is increasingly compelling as well: not only does the app remain the dominant communications medium in much of the world, but the addition of WhatsApp Status updates and Stories dramatically increases the monetization potential of the service, a potential that Facebook hasn’t even started to realize.[1]

There is some degree of long-term risk when it comes to products: Facebook acquired both Instagram and WhatsApp, but the company should not be allowed to acquire another social network of similar size and velocity to those two, and I doubt they would be. That concern, though, is very far in the future: for now the product lens suggests that Facebook is as strong as ever.

Lens 3: Facebook’s Advertising Infrastructure

This lens takes the exact opposite perspective of Lens 2; looking at the company from a product perspective shows four different apps, but looking at the company from an advertising perspective shows a single integrated machine.

This was a point Facebook executives touched on repeatedly in last week’s earnings call. Here is Wehner (emphasis mine):

In terms of Facebook versus Instagram, they’re obviously both contributing to revenue growth. Instagram is growing more quickly and making an increasing contribution to growth. And we’ve been pleased with how Instagram is growing. Facebook and Instagram are really one ads ecosystem.

Zuckerberg:

We’re also making progress developing Stories into a great format for ads. We’ve made the most progress here on Instagram, but this quarter, we started testing Stories ads on Facebook too…

COO Sheryl Sandberg added:

Since we have so many different places where you have Stories formats in Instagram and WhatsApp and Facebook, as volume increases of the opportunity, advertisers get more interested.

Zuckerberg and Sandberg were obviously talking about the potential for advertising in Stories, but that potential is simply a repeat of what has already happened with Feed ads: Facebook spent years building out News Feed advertising — not simply the display and targeting technology but also the entire back-end apparatus for advertisers, connections with non-Facebook data sources and points-of-sale, relationships with ad buyers, etc. — and then simply plugged Instagram into that infrastructure.

The payoff of this integrated approach cannot be overstated. Instagram got to scale in terms of monetization years faster than it would have on its own, even as the initial product team had the freedom to stay focused on the user experience. Facebook the app benefited as well, because Instagram both increased the surface area for Facebook ad campaigns and improved Facebook’s targeting capabilities.

The biggest impact, though, is on potential competition. It is tempting to focus on the “R” in “ROI” (the return on investment), and as I just noted, Instagram + Facebook makes that even more attractive. Just as important, though, is the “I”; there is tremendous benefit to being a one-stop shop for advertisers, who can save time and money by focusing their spend on Facebook. The tools are familiar, the buys are made across platforms, and as Zuckerberg and Sandberg alluded to with regard to Stories, the ads themselves only need to be made once to be used across multiple platforms. Why even go to the trouble of advertising anywhere else?

This is why the advertising lens is perhaps the most useful when it comes to understanding just how strong Facebook’s business remains, and why the Instagram acquisition in particular was such a big deal. For all the discussion of Facebook the app’s lock-in, it is very reasonable to wonder if engagement is decreasing over time, particularly amongst young people, or if controversies may drive down usage — or worse. Were Instagram a separate company, advertisers might find themselves with no choice but to spread out their advertising to multiple companies, and once their advertising was diversified, it would be a much smaller step to target users on other networks like SnapChat or Twitter. As it stands there is no reason to leave Facebook the advertising platform, no matter what happens with Facebook the app.

Lens 4: Facebook’s Multiplying Moats

Facebook’s advertising moat may be its most important, and its network moat its strongest, but the company has actually added moats, particularly in the last year.

The first is GDPR; this may seem counter-intuitive, given that Facebook said last week the regulation cost it a million users, and that one of the factors that would hurt revenue growth was the increased control the company was giving users over their personal information. Keep in mind, though, that GDPR applies to everyone, not just Facebook, and as Sandberg noted on the call (emphasis mine):

Advertisers are still adapting to the changes, so it’s early to know the longer-term impact. And things like GDPR and other privacy changes that may happen from us or may happen with regulation could make ads more relevant. One thing that we know that’s not going to change is that advertisers are always looking for the highest ROI opportunity. And what’s most important in winning budget is our relative performance in the industry, and we believe we’ll continue to do very well on that.

I made this exact point previously:

While GDPR advocates have pointed to the lobbying Google and Facebook have done against the law as evidence that it will be effective, that is to completely miss the point: of course neither company wants to incur the costs entailed in such significant regulation, which will absolutely restrict the amount of information they can collect. What is missed is that the increase in digital advertising is a secular trend driven first-and-foremost by eyeballs: more-and-more time is spent on phones, and the ad dollars will inevitably follow. The calculation that matters, then, is not how much Google or Facebook are hurt in isolation, but how much they are hurt relative to their competitors, and the obvious answer is “a lot less”, which, in the context of that secular increase, means growth.

Secondly, all of those costs that Facebook is incurring for security and content review that are reducing operating margin? Perhaps the stock market would feel better if they were characterized as moat expansion, because that’s exactly what they are: any would-be Facebook competitor is going to have to make a similar investment, and do it from a dramatically lower revenue base.

Moreover, just as Facebook benefits from scaling its ad infrastructure to all of its products, it can do the same with its security efforts. Zuckerberg stated:

More broadly, our strategy is to use Facebook’s computing infrastructure, business platforms and security systems to serve people across all of our apps…We’re using AI systems in our global community operations team to fight spam, harassment, hate speech, and terrorism across all of our apps to keep people safe. And this is incredibly useful for apps like WhatsApp and Instagram as it helps us manage the challenges of hyper-growth there more effectively.

This is why the lens through which you view Facebook matters so much: the exact same set of facts viewed from a financial perspective is a clear negative; from a moat perspective it is a clear positive.

Lens 5: Facebook’s Raison D’être

Needless to say, once you view Facebook through anything but a financial lens the health of the business is hard to argue with (and frankly, the finances went from phenomenal to fantastic, but it’s all relative). That’s why I can’t help but wonder if there is something more fundamental about both the collapse in Facebook’s stock and the general celebration that followed.

To return to the early years of Stratechery, it was striking how widespread Facebook skepticism was; I first tried to argue otherwise five years ago today, and in 2015 felt compelled to write The Facebook Epoch, which begins like this:

I’m fond of saying that few companies are as underrated as Facebook is, especially in Silicon Valley. Admittedly, it seems strange to say such a thing about a $245 billion company with a trailing 12-month P/E ratio of 88, but that is Wall Street sentiment; in the tech bubble many seem to simply assume the company is ever on the brink of teetering “just like MySpace”, never mind the fact that the social network pioneer barely broke 100 million registered users, less than 10% of the number of active users Facebook attracted in a single day late last month. Or, as more sober minds may argue, sure, Facebook looks unstoppable today, but then again, Google looked unstoppable ten years ago when social seemingly came out of nowhere: surely the Facebook killer is imminent!

That sentiment sure seems to be back in full force!

At the risk of veering into broad-based psychoanalysis, I think a lot of the Facebook skepticism is because so much of the content seems so shallow and petty, or in the case of the last few years, actively malicious. How can such a product survive?

In fact, it survives for the very reason it exists: Facebook began in Zuckerberg’s Harvard dorm room by quite literally digitizing offline relationships that already existed, both in real life and in actual physical “facebooks”. Facebook is so powerful because of this direct connection to the real world: it is shallow and petty and sometimes malicious — and yes, often good — because we humans are shallow and petty and sometimes malicious — and yes, often good.

By extension, to insist that Facebook will die any day now is in some respects to suggest that humanity will cease to exist any day now; granted, it is a company and companies fail, but even if Facebook failed it would only be a matter of time before another Facebook rose to replace it.

That seems unlikely: for all of the company’s travails and controversies over the past few years, its moats are deeper than ever, its money-making potential not only huge but growing both internally and secularly; to that end, what is perhaps most distressing of all to would-be competitors is in fact this quarter’s results: at the end of the day Facebook took a massive hit by choice; the company is not maximizing the short-term, it is spending the money and suppressing its revenue potential in favor of becoming more impenetrable than ever.

“Utter disaster” indeed.

[1] There is Messenger as well; I am more dubious of its long-term monetization potential because its natural advertising space (status updates and stories) is basically what Facebook is.