Big data

Big data is a broad term for data sets so large or complex that traditional data processing applications are inadequate. Challenges include analysis, capture, data curation, search, sharing, storage, transfer, visualization, querying and information privacy. The term often refers simply to the use of predictive analytics or certain other advanced methods to extract value from data, and seldom to a particular size of data set. Accuracy in big data may lead to more confident decision making, and better decisions can result in greater operational efficiency, cost reduction and reduced risk.

Analysis of data sets can find new correlations to "spot business trends, prevent diseases, combat crime and so on." Scientists, business executives, practitioners of medicine, advertising and governments alike regularly meet difficulties with large data sets in areas including Internet search, finance and business informatics. Scientists encounter limitations in e-Science work, including meteorology, genomics, connectomics, complex physics simulations, biology and environmental research.

This article is licensed under the Creative Commons Attribution-ShareAlike 3.0 Unported License, which means that you can copy and modify it as long as the entire work (including additions) remains under this license.

Apache Hadoop

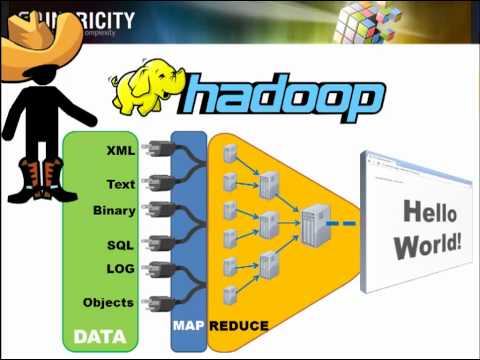

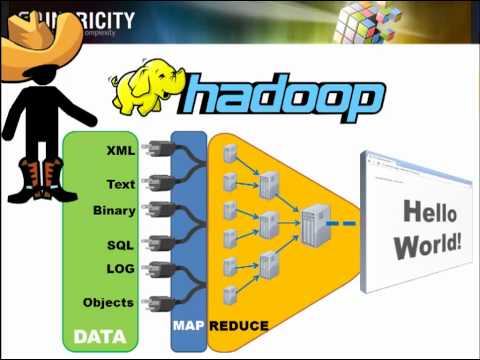

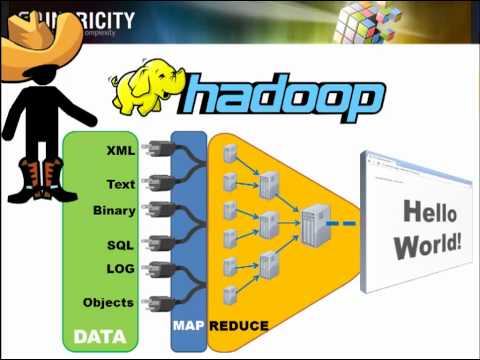

Apache Hadoop is an open-source software framework written in Java for distributed storage and distributed processing of very large data sets on computer clusters built from commodity hardware. All the modules in Hadoop are designed with a fundamental assumption that hardware failures are common and should be automatically handled by the framework.

The core of Apache Hadoop consists of a storage part, known as Hadoop Distributed File System (HDFS), and a processing part called MapReduce. Hadoop splits files into large blocks and distributes them across nodes in a cluster. To process data, Hadoop ships packaged code to the nodes, which then process in parallel the data they hold. This approach takes advantage of data locality (nodes manipulating the data they have access to) to allow the dataset to be processed faster and more efficiently than it would be in a more conventional supercomputer architecture that relies on a parallel file system where computation and data are distributed via high-speed networking.
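The map, shuffle and reduce steps can be illustrated with a small single-process sketch. This is not Hadoop's Java API; the function names (`split_into_blocks`, `map_block`, `shuffle`, `reduce_counts`, `word_count`) and the line-based "blocks" are assumptions made purely for illustration.

```python
from collections import defaultdict


def split_into_blocks(text, block_size):
    """Cut the input into chunks of `block_size` lines (a stand-in for HDFS blocks)."""
    lines = text.splitlines()
    return [lines[i:i + block_size] for i in range(0, len(lines), block_size)]


def map_block(block):
    """Map phase: emit a (word, 1) pair for every word in one block."""
    return [(word.lower(), 1) for line in block for word in line.split()]


def shuffle(mapped_blocks):
    """Shuffle phase: group all emitted values by key."""
    grouped = defaultdict(list)
    for pairs in mapped_blocks:
        for key, value in pairs:
            grouped[key].append(value)
    return grouped


def reduce_counts(grouped):
    """Reduce phase: sum the values collected for each key."""
    return {key: sum(values) for key, values in grouped.items()}


def word_count(text, block_size=2):
    blocks = split_into_blocks(text, block_size)
    # On a real cluster each block would be mapped on a node that stores it.
    mapped = [map_block(block) for block in blocks]
    return reduce_counts(shuffle(mapped))


if __name__ == "__main__":
    print(word_count("a b\nb c\nc c"))
```

Here every block is mapped in the same process; the point of data locality is that on a cluster the equivalent of `map_block` runs where the block already lives, so only the much smaller intermediate pairs cross the network.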

Data

Data (/ˈdeɪtə/ DAY-tə, /ˈdætə/ DA-tə, or /ˈdɑːtə/ DAH-tə) is a set of values of qualitative or quantitative variables; restated, pieces of data are individual pieces of information. Data is measured, collected and reported, and analyzed, whereupon it can be visualized using graphs or images. Data as a general concept refers to the fact that some existing information or knowledge is represented or coded in some form suitable for better usage or processing.

Raw data, i.e. unprocessed data, is a collection of numbers or characters; data processing commonly occurs by stages, and the "processed data" from one stage may be considered the "raw data" of the next. Field data is raw data that is collected in an uncontrolled in situ environment. Experimental data is data that is generated within the context of a scientific investigation by observation and recording.

The Latin word "data" is the plural of "datum", and still may be used as a plural noun in this sense. Nowadays, though, "data" is most commonly used in the singular, as a mass noun (like "information", "sand" or "rain").

Big

Big means large or of great size.

File system

In computing, a file system (or filesystem) is used to control how data is stored and retrieved. Without a file system, information placed in a storage area would be one large body of data with no way to tell where one piece of information stops and the next begins. By separating the data into individual pieces, and giving each piece a name, the information is easily separated and identified. Taking its name from the way paper-based information systems are named, each group of data is called a "file". The structure and logic rules used to manage the groups of information and their names are called a "file system".
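The idea of one undifferentiated storage area plus a table of names can be sketched in a few lines. This is a toy model only, assuming a flat namespace and append-only writes; `ToyFileSystem` and its methods are hypothetical names, not any real file system's interface.

```python
class ToyFileSystem:
    """A flat byte area plus a table recording where each named file lives."""

    def __init__(self):
        self.storage = bytearray()   # one large body of data
        self.table = {}              # name -> (offset, length)

    def write(self, name, data):
        """Append the bytes to storage and remember their position under `name`."""
        if name in self.table:
            raise FileExistsError(name)
        offset = len(self.storage)
        self.storage.extend(data)
        self.table[name] = (offset, len(data))

    def read(self, name):
        """Use the table to find where the file starts and stops."""
        offset, length = self.table[name]
        return bytes(self.storage[offset:offset + length])

    def list_files(self):
        return sorted(self.table)


if __name__ == "__main__":
    fs = ToyFileSystem()
    fs.write("a.txt", b"hello")
    fs.write("b.txt", b"world!")
    print(fs.list_files(), fs.read("b.txt"))
```

Without `table`, the storage area would hold `helloworld!` with no way to tell where one file ends and the next begins, which is the problem the paragraph above describes.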

There are many different kinds of file systems. Each one has different structure and logic, properties of speed, flexibility, security, size and more. Some file systems have been designed to be used for specific applications. For example, the ISO 9660 file system is designed specifically for optical discs.

File systems can be used on many different kinds of storage devices. Each storage device uses a different kind of media. The most common storage device in use today is a hard drive whose media is a disc that has been coated with a magnetic film. The film has ones and zeros 'written' on it by sending electrical pulses to a magnetic "read-write" head. Other media that are used are magnetic tape, optical disc, and flash memory. In some cases, such as with tmpfs, the computer's main memory (RAM) is used to create a temporary file system for short-term use.

What is Hadoop? (3:07)

- For a deeper dive, check out our video comparing Hadoop to SQL: http://www.youtube.com/watch?v=3Wmdy80QOvw&feature=c4-overview&list=UUrR22MmDd5-cKP2jTVKpBcQ
- Or see our video outlining critical Hadoop scalability fundamentals: https://www.youtube.com/watch?v=h5vAj9FPl0I

To talk with a specialist, go to: http://www.intricity.com/intricity101/

What is Big Data and Hadoop? (8:05)

The availability of large data sets presents new opportunities and challenges to organizations of all sizes. So what is Big Data? How can Hadoop help me solve problems in processing large, complex data sets? Please go to http://www.LearningTree.com/WhatIsBigData to learn more about Big Data and our Big Data training offerings. In this video, expert instructor Bill Appelbe explains what Hadoop is, gives actual examples of how it works, shows how it compares to traditional databases such as Oracle and SQL Server, and finally covers what is included in the Hadoop ecosystem.

Hadoop Tutorial 1 - What is Hadoop? (15:42)

http://zerotoprotraining.com This video explains what Apache Hadoop is. You will get a brief overview of Hadoop. Subsequent videos explain the details.

Introduction to Hadoop (28:37)

DURGASOFT is INDIA's No.1 Software Training Center, offering online training on various technologies such as Java, .NET, Android, Hadoop, testing tools, ADF, Informatica and SAP, with courses from Hyderabad and Bangalore, India, taught by Real Time Experts. Mail your requirements to durgasoftonlinetraining@gmail.com so that our support team can arrange demo sessions. Phone: +91-8885252627, +91-7207212428, +91-7207212427, +91-8096969696. http://durgasoft.com http://durgasoftonlinetraining.com https://www.facebook.com/durgasoftware http://durgajobs.com https://www.facebook.com/durgajobsinfo......

What is Hadoop?: SQL Comparison (6:14)

This video points out three things that make Hadoop different from SQL. While a great many differences exist, this hopefully provides a little more context to bring mere mortals up to speed. There are some details about Hadoop that I purposely left out to simplify this video. http://www.intricity.com To talk with a specialist, go to: http://www.intricity.com/intricity101/

Apache Hadoop Tutorial | Hadoop Tutorial For Beginners | Big Data Hadoop | Hadoop Training | Edureka (1:41:38)

This Edureka "Hadoop Tutorial For Beginners" (Hadoop blog series: https://goo.gl/LFesy8) will help you understand the problems traditional systems face when processing Big Data and how Hadoop solves them. The tutorial gives a comprehensive overview of HDFS and YARN and their architecture, explained simply through examples and a practical demonstration. At the end, you will see how to analyze an Olympic data set using Hadoop and gain useful insights.

Topics covered in this tutorial:

1. Big Data Growth Drivers
2. What is Big Data?
3. Hadoop Introduction
4. Hadoop Master/Slave Architecture
5. Hadoop Core Components
6. HDFS Data Blocks
7. HDFS Read/Write Mechanism
8. What is MapReduce
9. MapReduce Program
10. MapReduce Job Workflow
11. Hadoop Ecosystem
12. Hadoop Use Case: Analyzing Olympic Dataset

Subscribe to our channel to get video updates. Check our complete Hadoop playlist here: https://goo.gl/ExJdZs

#Hadoop #Hadooptutorial #HadoopTutorialForBeginners #HadoopArchitecture #LearnHadoop #HadoopTraining #HadoopCertification

How it works

1. This is a 5-week instructor-led online course, with 40 hours of assignments and 30 hours of project work.
2. We have 24x7 one-on-one live technical support to help you with any problems you might face or any clarifications you may require during the course.
3. At the end of the training you will have to take a 2-hour live practical exam, based on which we will provide you a grade and a verifiable certificate.

About the course

Edureka's Big Data and Hadoop online training is designed to help you become a top Hadoop developer. During this course, our expert Hadoop instructors will help you:

1. Master the concepts of HDFS and the MapReduce framework
2. Understand Hadoop 2.x architecture
3. Set up a Hadoop cluster and write complex MapReduce programs
4. Learn data loading techniques using Sqoop and Flume
5. Perform data analytics using Pig, Hive and YARN
6. Implement HBase and MapReduce integration
7. Implement advanced usage and indexing
8. Schedule jobs using Oozie
9. Implement best practices for Hadoop development
10. Work on a real-life project on Big Data analytics
11. Understand Spark and its ecosystem
12. Learn how to work with RDDs in Spark

Who should go for this course?

If you belong to any of the following groups, knowledge of Big Data and Hadoop is crucial if you want to progress in your career:

1. Analytics professionals
2. BI/ETL/DW professionals
3. Project managers
4. Testing professionals
5. Mainframe professionals
6. Software developers and architects
7. Recent graduates passionate about building a successful career in Big Data

Why learn Hadoop?

Big Data! A worldwide problem? According to Wikipedia, "Big data is collection of data sets so large and complex that it becomes difficult to process using on-hand database management tools or traditional data processing applications." In simpler terms, Big Data is a term given to large volumes of data that organizations store and process. However, it is becoming very difficult for companies to store, retrieve and process this ever-increasing data. If a company manages its data well, nothing can stop it from becoming the next BIG success! The problem lies in the use of traditional systems to store enormous amounts of data. Though these systems were a success a few years ago, with the increasing amount and complexity of data they are fast becoming obsolete. The good news is that Hadoop has become integral to storing, handling, evaluating and retrieving hundreds of terabytes, and even petabytes, of data.

Opportunities for Hadoopers

Opportunities for Hadoopers are infinite, from Hadoop Developer to Hadoop Tester or Hadoop Architect, and so on. If cracking and managing BIG Data is your passion in life, then think no more: join Edureka's Hadoop online course and carve a niche for yourself! Please write to us at sales@edureka.co or call us at +91 88808 62004 for more information.

- Website: http://www.edureka.co/big-data-and-hadoop
- Facebook: https://www.facebook.com/edurekaIN/
- Twitter: https://twitter.com/edurekain
- LinkedIn: https://www.linkedin.com/company/edureka

Customer review: Michael Harkins, System Architect, Hortonworks says: “The courses are top rate. The best part is live instruction, with playback. But my favorite feature is viewing a previous class. Also, they are always there to answer questions, and prompt when you open an issue if you are having any trouble. Added bonus ~ you get lifetime access to the course you took!!! ~ This is the killer education app... I've take two courses, and I'm taking two more.”

HDFS - Intro to Hadoop and MapReduce (1:51)

Apache Hadoop & Big Data 101: The Basics (16:56)

This video will walk beginners through the basics of Hadoop, from the early stages of the client-server model through to the current Hadoop ecosystem.

What Is Hadoop? (6:36)

Hadoop MapReduce Example - How good are a city's farmer's markets? (8:03)

A walkthrough of a Hadoop Map/Reduce program which collects information about farmer's markets in American cities, and outputs the number of farmer's markets and a rating of how good that city's markets are.
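The kind of job described here can be sketched in plain Python. The video's actual program and input format are not given on this page, so everything below is an assumption for illustration: the `(city, rating)` record shape, the sample data, and the choice of an average as the "rating" of a city's markets.

```python
from collections import defaultdict

# Hypothetical input records: (city, rating of one market on a 0-5 scale).
MARKETS = [("Austin", 4.0), ("Austin", 5.0), ("Boise", 3.0)]


def map_market(record):
    """Map: key each market by its city and emit (count, rating)."""
    city, rating = record
    return city, (1, rating)


def reduce_city(values):
    """Reduce: total the markets for a city and average their ratings."""
    count = sum(c for c, _ in values)
    total = sum(r for _, r in values)
    return count, total / count


def run(records):
    grouped = defaultdict(list)
    for city, value in map(map_market, records):
        grouped[city].append(value)   # the shuffle step groups values by city
    return {city: reduce_city(values) for city, values in grouped.items()}


if __name__ == "__main__":
    for city, (count, rating) in sorted(run(MARKETS).items()):
        print(city, count, rating)
```

Emitting `(1, rating)` rather than just the rating lets a single reduce step produce both outputs mentioned in the description: the number of markets and a per-city score.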

Basic Introduction to Apache Hadoop (14:00)

Find more resources at: http://hortonworks.com/what-is-apache-hadoop/ Hadoop lets you manage big data. In this Basic Introduction to Hadoop video (http://youtu.be/OoEpfb6yga8), Owen O'Malley provides an introduction to Apache Hadoop, including the roles of key and related technologies in the Hadoop ecosystem, such as MapReduce, Hadoop Security, HDFS, Ambari, Hadoop Cluster, Datanode, Apache Pig, Hive, HBase, HCatalog, Zookeeper, Mahout, Sqoop, Oozie and Flume, and their associated benefits.

Hadoop Architecture (14:48)

Big Data and Hadoop 1 | Hadoop Tutorial 1 | Big Data Tutorial 1 | Hadoop Tutorial for Beginners - 1 (3:34:38)

- Watch our new and updated Hadoop Tutorial For Beginners: https://goo.gl/xeEV6m
- Check our Hadoop Tutorial blog series: https://goo.gl/LFesy8
- Big Data & Hadoop Training: http://goo.gl/QA2KaQ
- Updated version of this video: http://www.youtube.com/watch?v=d0coIjRJ2qQ

This is Part 1 of an 8-week Big Data and Hadoop course. The 3-hour interactive live class covers what Big Data is, what Hadoop is, and why Hadoop is needed. It also covers the details of the Hadoop Distributed File System (HDFS), including the NameNode, DataNodes and Secondary NameNode, and goes into concepts such as Rack Awareness, Data Replication, and reading and writing on HDFS. We will also show how to set up the Cloudera VM on your machine. More details below. Welcome, let's get going on our Hadoop journey...

How it works

1. This is an 8-week instructor-led online course.
2. We have a 3-hour live and interactive session every Sunday.
3. We have 3 hours of practical work every week involving lab assignments, case studies and projects, which can be done at your own pace. We can also provide remote access to our Hadoop cluster for doing practicals.
4. We have 24x7 one-on-one live technical support to help you with any problems you might face or any clarifications you may require during the course.
5. At the end of the training you will have to take a 2-hour live practical exam, based on which we will provide you a grade and a verifiable certificate.

About the course

The Big Data and Hadoop training course is designed to provide the knowledge and skills to become a successful Hadoop developer. The course covers in depth concepts such as the Hadoop Distributed File System, setting up a Hadoop cluster, MapReduce, Pig, Hive, HBase, ZooKeeper and Sqoop.

Course objectives

After completing the Hadoop course at Edureka, you should be able to:

- Master the concepts of the Hadoop Distributed File System.
- Understand cluster setup and installation.
- Understand MapReduce and functional programming.
- Understand how Pig is tightly coupled with MapReduce.
- Use Hive, load data into Hive and query data from Hive.
- Implement HBase, MapReduce integration, advanced usage and advanced indexing.
- Have a good understanding of the ZooKeeper service and Sqoop.
- Develop a working Hadoop architecture.

Who should go for this course?

This course is designed for developers with some programming experience (preferably Java) who want to acquire a solid foundation in Hadoop architecture. Existing knowledge of Hadoop is not required.

Why learn Hadoop?

Big Data! A worldwide problem? According to Wikipedia, "Big data is collection of data sets so large and complex that it becomes difficult to process using on-hand database management tools or traditional data processing applications." In simpler terms, Big Data is a term given to large volumes of data that organizations store and process. However, it is becoming very difficult for companies to store, retrieve and process this ever-increasing data. If a company manages its data well, nothing can stop it from becoming the next BIG success! The problem lies in the use of traditional systems to store enormous amounts of data. Though these systems were a success a few years ago, with the increasing amount and complexity of data they are fast becoming obsolete. The good news is Hadoop, which is nothing less than a panacea for companies working with Big Data in a variety of applications and has become integral to storing, handling, evaluating and retrieving hundreds of terabytes, and even petabytes, of data.

Some of the top companies using Hadoop

The importance of Hadoop is evident from the fact that many global MNCs, such as Yahoo and Facebook, use Hadoop and consider it an integral part of their functioning. On February 19, 2008, Yahoo! Inc. established the world's largest Hadoop production application. The Yahoo! Search Webmap is a Hadoop application that runs on a Linux cluster with more than 10,000 cores and generates data that is now used in every Yahoo! Web search query.

Opportunities for Hadoopers

Opportunities for Hadoopers are infinite, from Hadoop Developer to Hadoop Tester or Hadoop Architect, and so on. If cracking and managing BIG Data is your passion in life, then think no more: join Edureka's Hadoop online course and carve a niche for yourself! Happy Hadooping! Please write to us at sales@edureka.co or call us at +91 88808 62004 for more information. http://www.edureka.co/big-data-and-hadoop

Aula 1 - Big data e Hadoop - Módulo I (25:16)

This course presents Big Data concepts and the fundamentals of Apache Hadoop. This first module begins with the theory needed to understand the use of a corporate Big Data platform and then looks at what each tool does within the Hadoop ecosystem. We will show the Hadoop architecture, with its HDFS and MapReduce foundation, and the practical lessons will explain how to install it, import data into Hive/HBase, write MapReduce programs and control jobs using Oozie. http://cursos.escolalinux.com.br/curso/apache-hadoop-20-horas

Hadoop. Введение в Big Data и MapReduce (2:01:20)

Technosphere Mail.ru Group, Lomonosov Moscow State University. Course: "Methods of distributed processing of large volumes of data in Hadoop". Lecture 1: "Introduction to Big Data and MapReduce". Lecturer: Alexey Romanenko.

What "big data" is. The history of this phenomenon. The knowledge and skills needed to work with big data. What Hadoop is and where it is used. What "cloud computing" is, and the history and development of the technology. Web 2.0. Utility computing. Virtualization. Infrastructure as a service (IaaS). Parallelism issues. Managing many workers. Data centers and scalability. Typical Big Data tasks. MapReduce: what it is, with examples. Distributed file systems. Google File System. HDFS as a clone of GFS, and its architecture.

- Lecture slides: http://www.slideshare.net/Technopark/lecture-01-48215730
- Other lectures in the course: https://www.youtube.com/playlist?list=PLrCZzMib1e9rPxMIgPri9YnOpvyDAL9HD
- Our video channel: http://www.youtube.com/user/TPMGTU?sub_confirmation=1
- Official Technopark site: https://tech-mail.ru/
- Official Technosphere site: https://sfera-mail.ru/
- Technopark on VKontakte: http://vk.com/tpmailru
- Technosphere on VKontakte: https://vk.com/tsmailru
- Blog on Habr: http://habrahabr.ru/company/mailru/

#ТЕХНОПАРК #ТЕХНОСФЕРА

Hadoop - Just the Basics for Big Data Rookies (1:25:32)

Recorded at SpringOne2GX 2013 in Santa Clara, CA. Speaker: Adam Shook.

This session assumes absolutely no knowledge of Apache Hadoop and will provide a complete introduction to all the major aspects of the Hadoop ecosystem of projects and tools. If you are looking to get up to speed on Hadoop, trying to work out what all the Big Data fuss is about, or just interested in brushing up your understanding of MapReduce, then this is the session for you. We will cover all the basics with detailed discussion about HDFS, MapReduce, YARN (MRv2), and a broad overview of the Hadoop ecosystem including Hive, Pig, HBase, ZooKeeper and more.

Big Data Hadoop Ecosystem (10:33)

A session for understanding the friends of Hadoop that form the Big Data Hadoop ecosystem. Register for the free Big Data Boot Camp: http://www.bigdatatrunk.com/course/fr... Please feel free to contact us with any questions at info@bigdatatrunk.com or call us at +01 415-484-6702 for more information. Happy learning with Big Data Trunk http://www.bigdatatrunk.com/

Hadoop Tutorial: Intro to HDFS (33:36)

In this presentation, Sameer Farooqui introduces the Hadoop Distributed File System, an Apache open source distributed file system designed to run on commodity hardware. He'll cover:

- Origins of HDFS and Google File System / GFS
- How a file breaks up into blocks before being distributed to a cluster
- NameNode and DataNode basics
- Technical architecture of HDFS
- Sample HDFS commands
- Rack Awareness
- Synchronous write pipeline
- How a client reads a file

Interested in taking a class with Sameer? Check out https://newcircle.com/category/big-data
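How a file breaks into blocks and how those blocks might be spread over a cluster can be sketched as follows. This is a simplified illustration, not HDFS's placement policy: real HDFS placement is rack-aware, while the round-robin rule, the node names and the function names here are assumptions. The block size is tiny so the example stays readable; HDFS in Hadoop 2.x defaults to 128 MB blocks and a replication factor of 3.

```python
def split_into_blocks(data, block_size):
    """Cut a byte string into fixed-size blocks; the last block may be shorter."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def place_replicas(num_blocks, nodes, replication=3):
    """Assign each block to `replication` distinct nodes, round-robin."""
    if replication > len(nodes):
        raise ValueError("not enough nodes for the requested replication")
    placement = {}
    for block_id in range(num_blocks):
        placement[block_id] = [
            nodes[(block_id + r) % len(nodes)] for r in range(replication)
        ]
    return placement


if __name__ == "__main__":
    blocks = split_into_blocks(b"abcdefghij", 4)
    nodes = ["node1", "node2", "node3", "node4"]   # hypothetical DataNodes
    for block_id, holders in place_replicas(len(blocks), nodes, 2).items():
        print(block_id, blocks[block_id], holders)
```

The mapping returned by `place_replicas` plays the role of the metadata a NameNode keeps, while the blocks themselves would live on the DataNodes.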

Introducing Apache Hadoop: The Modern Data Operating System (1:16:44)

(November 16, 2011) Amr Awadallah introduces Apache Hadoop and asserts that it is the data operating system of the future. He explains many of the data problems faced by modern data systems while highlighting the benefits and features of Hadoop.

- Stanford University: http://www.stanford.edu/
- Stanford School of Engineering: http://engineering.stanford.edu/
- Stanford Electrical Engineering Department: http://ee.stanford.edu
- Stanford EE380 Computer Systems Colloquium: http://www.stanford.edu/class/ee380/
- Stanford University Channel on YouTube: http://www.youtube.com/stanford

Basics of Hadoop Distributed File System (HDFS) (9:39)

Describes how to view and create folders in HDFS, copy files from Linux to HDFS, and copy files back from HDFS to Linux.
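The operations listed map onto the `hdfs dfs` file system shell (`-ls`, `-mkdir`, `-put`, `-get`). The sketch below only builds the command lines in Python; the helper names and the paths `/user/demo/input` and `notes.txt` are hypothetical, and actually executing a command through `run` assumes a configured Hadoop client on the PATH.

```python
import subprocess


def hdfs_cmd(*args):
    """Build the argument list for one `hdfs dfs` invocation."""
    return ["hdfs", "dfs", *args]


def list_dir(path):
    return hdfs_cmd("-ls", path)


def make_dir(path):
    # -p also creates missing parent directories
    return hdfs_cmd("-mkdir", "-p", path)


def upload(local_path, hdfs_path):
    return hdfs_cmd("-put", local_path, hdfs_path)


def download(hdfs_path, local_path):
    return hdfs_cmd("-get", hdfs_path, local_path)


def run(cmd):
    """Execute a command and return its output; needs a working Hadoop client."""
    result = subprocess.run(cmd, check=True, capture_output=True, text=True)
    return result.stdout


if __name__ == "__main__":
    # Print the commands instead of running them, so no cluster is needed.
    for cmd in (
        make_dir("/user/demo/input"),
        upload("notes.txt", "/user/demo/input/"),
        list_dir("/user/demo/input"),
        download("/user/demo/input/notes.txt", "notes_copy.txt"),
    ):
        print(" ".join(cmd))
```

Keeping command construction separate from `run` makes it possible to inspect or log exactly what would be sent to the cluster before anything is executed.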

What is Hadoop?

published: 14 Jul 2012

What is Big Data and Hadoop?

published: 09 Sep 2014

Hadoop Tutorial 1 - What is Hadoop?

published: 05 Apr 2013

Introduction to Hadoop

published: 29 Mar 2014

What is Hadoop?: SQL Comparison

published: 12 Sep 2014

Apache Hadoop Tutorial | Hadoop Tutorial For Beginners | Big Data Hadoop | Hadoop Training | Edureka

published: 09 May 2017

Apache Hadoop & Big Data 101: The Basics

published: 27 May 2015

Hadoop MapReduce Example - How good are a city's farmer's markets?

published: 18 Apr 2012

Basic Introduction to Apache Hadoop

published: 29 Jan 2013

Hadoop Architecture

published: 24 Mar 2013

Big Data and Hadoop 1 | Hadoop Tutorial 1 | Big Data Tutorial 1 | Hadoop Tutorial for Beginners - 1

published: 09 May 2013

Aula 1 - Big data e Hadoop - Módulo I

published: 21 Mar 2015

Hadoop. Введение в Big Data и MapReduce

Техносфера Mail.ru Group, МГУ им. М.В. Ломоносова. Курс "Методы распределенной обработки больших объемов данных в Hadoop" Лекция №1 "Введение в Big Data и MapReduce" Лектор - Алексей Романенко. Что такое «большие данные». История возникновения этого явления. Необходимые знания и навыки для работы с большими данными. Что такое Hadoop, и где он применяется. Что такое «облачные вычисления», история возникновения и развития технологии. Web 2.0. Вычисление как услуга (utility computing). Виртуализация. Инфраструктура как сервис (IaaS). Вопросы параллелизма. Управление множеством воркеров. Дата-центры и масштабируемость. Типичные задачи Big Data. MapReduce: что это такое, примеры. Распределённая файловая система. Google File System. HDFS как клон GFS, его архитектура. Слайды лекции http://...

published: 21 Oct 2014

Hadoop - Just the Basics for Big Data Rookies

Recorded at SpringOne2GX 2013 in Santa Clara, CA. Speaker: Adam Shook. This session assumes absolutely no knowledge of Apache Hadoop and will provide a complete introduction to all the major aspects of the Hadoop ecosystem of projects and tools. If you are looking to get up to speed on Hadoop, trying to work out what all the Big Data fuss is about, or just interested in brushing up your understanding of MapReduce, then this is the session for you. We will cover all the basics with detailed discussion about HDFS, MapReduce, YARN (MRv2), and a broad overview of the Hadoop ecosystem including Hive, Pig, HBase, ZooKeeper and more.

published: 02 Apr 2014
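
For readers brushing up on MapReduce, as the session above suggests, the model can be sketched in a few lines of plain Python. This is only an illustration of the map, shuffle, and reduce steps using a word count; it does not use Hadoop, and the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are made up for this sketch.

```python
from collections import defaultdict


def map_phase(line):
    """Emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Group values by key, mimicking the step between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Sum the counts collected for one word."""
    return key, sum(values)


def word_count(lines):
    """Run the three steps in sequence on an in-memory list of lines."""
    pairs = [pair for line in lines for pair in map_phase(line)]
    return dict(reduce_phase(k, v) for k, v in shuffle(pairs).items())
```

In a real cluster the map and reduce calls would run on different machines and the shuffle would move data over the network; here everything runs in one process to keep the idea visible.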

Big Data Hadoop Ecosystem

A session to understand the friends of Hadoop that form the Big Data Hadoop ecosystem. Register for the free Big Data Boot Camp: http://www.bigdatatrunk.com/course/fr... Please feel free to contact us with any questions at info@bigdatatrunk.com or call us at +01 415-484-6702 for more information. Happy Learning with Big Data Trunk http://www.bigdatatrunk.com/

published: 17 Jun 2016

Hadoop Tutorial: Intro to HDFS

In this presentation, Sameer Farooqui introduces the Hadoop Distributed File System, an Apache open source distributed file system designed to run on commodity hardware. He'll cover:

- Origins of HDFS and Google File System / GFS

- How a file breaks up into blocks before being distributed to a cluster

- NameNode and DataNode basics

- Technical architecture of HDFS

- Sample HDFS commands

- Rack Awareness

- Synchronous write pipeline

- How a client reads a file

** Interested in taking a class with Sameer? Check out https://newcircle.com/category/big-data

published: 31 Oct 2012
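
One topic from the presentation above, how a file breaks up into blocks, can be illustrated with a short Python sketch. It only computes block boundaries and is not HDFS code. The 128 MiB block size is an assumption (a common default in recent Hadoop versions, and configurable per cluster), and `split_into_blocks` is a hypothetical helper name.

```python
# Assumed block size: 128 MiB. Real clusters may be configured differently.
BLOCK_SIZE = 128 * 1024 * 1024


def split_into_blocks(file_size, block_size=BLOCK_SIZE):
    """Return (offset, length) pairs covering a file of file_size bytes.

    Every block is block_size bytes long except possibly the last one,
    which holds whatever remains.
    """
    blocks = []
    offset = 0
    while offset < file_size:
        length = min(block_size, file_size - offset)
        blocks.append((offset, length))
        offset += length
    return blocks
```

Under these assumptions a 300 MiB file yields three blocks: two full 128 MiB blocks and a final 44 MiB block. Each block would then be replicated to several DataNodes, which this sketch does not model.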

Introducing Apache Hadoop: The Modern Data Operating System

(November 16, 2011) Amr Awadallah introduces Apache Hadoop and asserts that it is the data operating system of the future. He explains many of the data problems faced by modern data systems while highlighting the benefits and features of Hadoop. Stanford University: http://www.stanford.edu/ Stanford School of Engineering: http://engineering.stanford.edu/ Stanford Electrical Engineering Department: http://ee.stanford.edu Stanford EE380 Computer Systems Colloquium http://www.stanford.edu/class/ee380/ Stanford University Channel on YouTube: http://www.youtube.com/stanford

published: 05 Sep 2012

Basics of Hadoop Distributed File System (HDFS)

Describes how to view and create folders in HDFS, copy files from Linux to HDFS, and copy files back from HDFS to Linux.

published: 29 Aug 2014
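
The operations this video describes map onto the `hdfs dfs` file system shell. The sketch below builds the command lines in Python rather than running them, so it can be read without a cluster. The paths and the helper names `hdfs_cmd`, `plan_round_trip`, and `run` are hypothetical, and `run` assumes the `hdfs` client is installed and on the `PATH`.

```python
import subprocess


def hdfs_cmd(*args):
    """Build an argument list for the `hdfs dfs` file system shell."""
    return ["hdfs", "dfs", *args]


def plan_round_trip(local_file, hdfs_dir, local_copy):
    """Commands to create a folder, upload a file, list it, and fetch it back."""
    name = local_file.rsplit("/", 1)[-1]
    return [
        hdfs_cmd("-mkdir", "-p", hdfs_dir),                  # create the HDFS folder
        hdfs_cmd("-put", local_file, hdfs_dir),              # copy Linux -> HDFS
        hdfs_cmd("-ls", hdfs_dir),                           # view folder contents
        hdfs_cmd("-get", f"{hdfs_dir}/{name}", local_copy),  # copy HDFS -> Linux
    ]


def run(commands):
    """Execute each command in order; needs a working Hadoop client."""
    for cmd in commands:
        subprocess.run(cmd, check=True)
```

Calling `plan_round_trip("/tmp/data.txt", "/user/demo/input", "/tmp/data_copy.txt")` returns the four command lists, which can be inspected first and passed to `run` only on a machine with Hadoop configured.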

What is Hadoop?

- Duration: 3:07

- published: 14 Jul 2012

- views: 342964

What is Big Data and Hadoop?

- Duration: 8:05

- published: 09 Sep 2014

- views: 835156

Hadoop Tutorial 1 - What is Hadoop?

- Duration: 15:42

- published: 05 Apr 2013

- views: 539019

Introduction to Hadoop

- Duration: 28:37

- published: 29 Mar 2014

- views: 751677

What is Hadoop?: SQL Comparison

- Duration: 6:14

- published: 12 Sep 2014

- views: 139540

Apache Hadoop Tutorial | Hadoop Tutorial For Beginners | Big Data Hadoop | Hadoop Training | Edureka

- Duration: 1:41:38

- published: 09 May 2017

- views: 23315

HDFS - Intro to Hadoop and MapReduce

- Duration: 1:51

- Updated: 23 Feb 2015

- views: 39270

Apache Hadoop & Big Data 101: The Basics

- Duration: 16:56

- published: 27 May 2015

- views: 63240

What Is Hadoop?

- Duration: 6:36

- Updated: 07 Jun 2013

- views: 175279

Hadoop MapReduce Example - How good are a city's farmer's markets?

- Duration: 8:03

- published: 18 Apr 2012

- views: 106919

Basic Introduction to Apache Hadoop

- Duration: 14:00

- published: 29 Jan 2013

- views: 131776

Hadoop Architecture

- Duration: 14:48

- published: 24 Mar 2013

- views: 81257

Big Data and Hadoop 1 | Hadoop Tutorial 1 | Big Data Tutorial 1 | Hadoop Tutorial for Beginners - 1

- Duration: 3:34:38

- published: 09 May 2013

- views: 997500

Aula 1 - Big data e Hadoop - Módulo I

- Duration: 25:16

- published: 21 Mar 2015

- views: 13971

Hadoop. Введение в Big Data и MapReduce

- Duration: 2:01:20

- published: 21 Oct 2014

- views: 36130

Hadoop - Just the Basics for Big Data Rookies

- Duration: 1:25:32

- published: 02 Apr 2014

- views: 269161

Big Data Hadoop Ecosystem

- Duration: 10:33

- published: 17 Jun 2016

- views: 4913

Hadoop Tutorial: Intro to HDFS

- Duration: 33:36

- published: 31 Oct 2012

- views: 323411

Introducing Apache Hadoop: The Modern Data Operating System

- Duration: 1:16:44

- published: 05 Sep 2012

- views: 266257

Basics of Hadoop Distributed File System (HDFS)

- Duration: 9:39

- published: 29 Aug 2014

- views: 13180
