Riffing off a nice post by John at Ktismatics on whether we have direct access to our own minds…

Whenever there’s a discussion about the neuronal vs. the mental, issues of causation and reduction often come up. Can conscious activity be reduced to an explanation of neuronal activity? Does the neuronal level of organization *cause* the level at which qualia are experienced? What form does that causation take?

My stance is that causality is really a much, much looser concept than physical science would make it seem. Over time, physical science has corralled causality into a smaller and smaller area, but that area is occupied by some pretty inscrutable things: things like “forces”, which end up being mostly tautological at a paradigmatic level (“it’s a force because it makes things move; it makes things move because it’s a force”), and metaphorically hinky at the level of theory (gauge bosons as “virtual particles”).

So when we think about the neuronal “causing” the mental, we usually have in mind some sort of physical-science-like efficient causality, because that’s what we see as operating at the molecular level of description that neural networks inhabit.

But the question is — why are there multiple levels of organization at all? Is reality really separated into strata of magnification, with causality operating horizontally within a layer and vertically between layers? If so, are the vertical and horizontal causalities the same *kind* of causality?

This is where reduction comes in. It seems that a lot of people think that if we can describe something at, say, a molecular level, we have reduced it, and we no longer need the description at the higher level, because we’ve explained everything that needs to be explained. Let’s say that we have a particular arrangement of a certain sort of molecules, and we know exactly why the regularity of that arrangement and the nature of the forces between the molecules allow photons to pass through without being absorbed. Have we “reduced” the emergent property of transparency? A scientist would probably say that we have — that the perceptual level of “seeing through” something doesn’t add anything to the explanation.

But that’s just one idea of reduction. Here’s another. Let’s say that we have a game that’s defined by the manipulation of yellow and blue marbles on a grid according to a set of rules. We’re given an initial row, from left to right, of, say, a thousand marbles on the grid, some yellow, some blue, and we’re given eight simple rules about how to place marbles on the next row of the grid. The rules tell us to look at each marble in the row and place a marble below it with a color that’s based on the color of that marble and the colors of the marbles directly to its left and right. For example, a rule might say, “if you’re on a yellow marble with a blue to the right and a yellow to the left, place a blue marble below it”, or “when a yellow marble’s neighbors are both blue, place a yellow marble below it”. The marbles on the ends of a row each lack a neighbor, so they don’t get marbles placed below them, and every new row is two marbles shorter than the one above it. It will take five hundred steps, but eventually we will run out of marbles.
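To make the game concrete, here is a minimal Python sketch of it. It assumes an encoding of our own choosing: yellow is `'Y'`, blue is `'B'`, and a rule set is a dictionary from the (left, center, right) colors to the color placed below. The first two entries are the two example rules above; the other six are arbitrary, hypothetical choices that just complete the table, and `step` and `run_until_empty` are illustrative names.

```python
import random
from typing import Dict, List, Tuple

Row = List[str]
Rules = Dict[Tuple[str, str, str], str]

# Hypothetical rule set: keys are (left, center, right), values are the color
# placed below the center marble. The first two entries are the examples from
# the text; the remaining six are arbitrary choices that complete the table.
RULES: Rules = {
    ("Y", "Y", "B"): "B",  # yellow, with yellow on the left and blue on the right
    ("B", "Y", "B"): "Y",  # yellow, with blue on both sides
    ("Y", "Y", "Y"): "B",
    ("B", "Y", "Y"): "Y",
    ("Y", "B", "Y"): "Y",
    ("Y", "B", "B"): "B",
    ("B", "B", "Y"): "Y",
    ("B", "B", "B"): "B",
}


def step(row: Row, rules: Rules) -> Row:
    """Build the next row. The end marbles lack a neighbor, so nothing is
    placed below them and each new row is two marbles shorter."""
    return [rules[(row[i - 1], row[i], row[i + 1])] for i in range(1, len(row) - 1)]


def run_until_empty(row: Row, rules: Rules) -> int:
    """Apply the rules until no marbles are left; return the number of steps."""
    steps = 0
    while row:
        row = step(row, rules)
        steps += 1
    return steps


random.seed(0)
start = [random.choice("YB") for _ in range(1000)]
print(run_until_empty(start, RULES))  # 500
```

Whatever the rules are, a row of 1000 marbles runs out after 500 steps, because each step drops the two end marbles.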

So what we have is an extremely simple system with only two kinds of entities, eight rules, and 1000 objects. Reductively, we would say that we have fully explained the system, right? We know all of the things that there are (yellow and blue marbles), all of the possible ways that they can be manipulated (exactly eight ways), and the exact configuration of the entire universe at its inception (a line of 1000 marbles). We know everything there is to know about the system.
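The “exactly eight” is just counting: each rule covers one combination of left, center, and right colors. A small Python check of that arithmetic, using a yellow/blue encoding that is our own assumption:

```python
from itertools import product

COLORS = ("Y", "B")  # assumed encoding: yellow and blue

# Every situation a rule has to cover: the colors of (left, center, right).
neighborhoods = list(product(COLORS, repeat=3))
print(len(neighborhoods))  # 8, hence exactly eight rules

# A complete rule set chooses one output color for each neighborhood.
num_rule_sets = len(COLORS) ** len(neighborhoods)
print(num_rule_sets)  # 256

# Every possible starting line of 1000 marbles.
num_initial_rows = len(COLORS) ** 1000
print(len(str(num_initial_rows)))  # 302 (a 302-digit number)
```

So the game’s entire “universe” is fixed by one choice out of 256 rule sets plus one out of roughly 10^301 starting rows: about 1008 yes/no decisions in all.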

Okay. So what if I now asked you to tell me, given a particular row of a thousand marbles and a particular set of eight rules, what the sequence of yellow and blue marbles will be after 250 steps of applying the rules? But wait, there’s a catch: the *only* thing you’re *not* allowed to do in figuring it out is *actually carry out the 250 steps*.

Why this prohibition? Well, the set of rules and the initial lineup of marbles are what constitute the *reduction* of the system. If you actually carry out the rules to find out the configuration after 250 steps, you haven’t *reduced* anything — you are actually *running* the real, unreduced system.

So — is it possible? Can you do it?

The answer is that in some cases, it’s impossible.

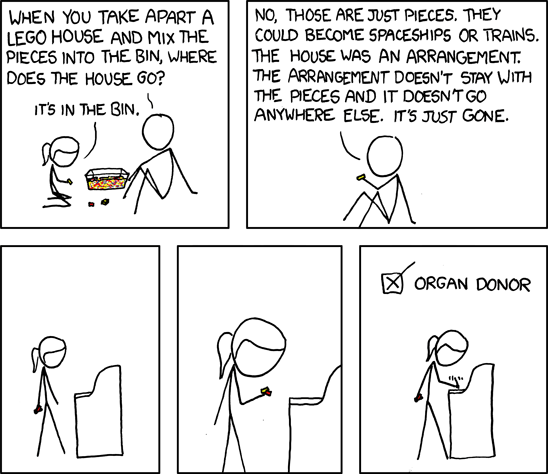
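Whether such a shortcut exists depends on the rules. As an illustration (our choice of example, not the post’s), write yellow as 1 and blue as 0 and number rule sets by Stephen Wolfram’s convention, where bit 4·left + 2·center + right of an 8-bit number gives the color placed below. Under rule 90 the new marble is just left XOR right, and that rule has a genuine reduction: by Lucas’s theorem, row t can be computed directly from the starting row without building rows 1 through t − 1. The Python sketch below checks that shortcut against actually running the system. For rule 110, which Matthew Cook proved capable of universal computation, no comparable shortcut is known; as far as anyone has found, the way to learn row 250 is to run all 250 steps, which is what Wolfram calls computational irreducibility. The function names are hypothetical.

```python
import random


def step(row, rule):
    """One step of an elementary rule in Wolfram numbering. The end cells have
    no full neighborhood, so each new row is two cells shorter."""
    return [
        (rule >> (4 * row[i - 1] + 2 * row[i] + row[i + 1])) & 1
        for i in range(1, len(row) - 1)
    ]


def run(row, rule, t):
    """The unreduced system: actually carry out t steps."""
    for _ in range(t):
        row = step(row, rule)
    return row


def rule90_shortcut(start, t):
    """Jump straight to row t of rule 90 (new cell = left XOR right).
    Cell j of row t is the parity of start[j + 2k] summed over every k with
    C(t, k) odd; by Lucas's theorem that is every k with k & t == k."""
    ks = [k for k in range(t + 1) if k & t == k]
    return [sum(start[j + 2 * k] for k in ks) % 2 for j in range(len(start) - 2 * t)]


random.seed(1)
start = [random.randint(0, 1) for _ in range(1000)]

# For t = 250 the shortcut sums 64 terms per cell instead of running 250 steps.
assert rule90_shortcut(start, 250) == run(start, 90, 250)

# For rule 110 we have no such formula to check; we can only run it.
row_250 = run(start, 110, 250)
print(len(row_250))  # 500
```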

Now, many people would say that the example I just gave confuses reduction with predictability. But what if, instead of asking you to predict the sequence of marbles after 250 steps, I asked you to tell me, in a general way, whether the rows of marbles produced by following the rules would make a pattern, and, if so, what sorts of features that pattern would exhibit? Could you do that? The answer, once again, is that in some cases, you couldn’t. Some configurations of marbles and rules produce weird repeating patterns that look like spaceships drifting across the grid. The spaceships are not in the rules or the marbles; they emerge from them, but are not explained by them.
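Spotting such structures is something you do by looking at the run, not by reading the rules. Here is a Python sketch that prints successive rows as text, one row per line, indented so each marble sits under the one it was placed below. It assumes Wolfram’s rule numbering with yellow as 1 and blue as 0, uses rule 110 as an example, and draws yellow as `#` and blue as `.`, all arbitrary choices. Rule 110 is the elementary rule best known for moving localized structures, usually called gliders; whether any are visible depends on the starting row and on how many rows you print.

```python
import random


def step(row, rule):
    """One step in Wolfram numbering; each new row is two cells shorter."""
    return [
        (rule >> (4 * row[i - 1] + 2 * row[i] + row[i + 1])) & 1
        for i in range(1, len(row) - 1)
    ]


def render(start, rule, steps):
    """Return rows 0..steps as text. Row r is indented r spaces so each cell
    lines up under the cell it was placed below."""
    lines = []
    row = start
    for r in range(steps + 1):
        if not row:
            break
        lines.append(" " * r + "".join("#" if cell else "." for cell in row))
        row = step(row, rule)
    return "\n".join(lines)


random.seed(2)
start = [random.randint(0, 1) for _ in range(120)]
print(render(start, 110, 40))
```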

What I’m getting at is that although predictability and reduction are not the same thing, they are intimately related and not really separable. Predictability is the only real test we have to tell us if we have explained something fully. Reduction is a way of formulating a prediction about how something will behave.