Planet Python

Last update: July 06, 2017 09:48 PM

July 06, 2017

Semaphore Community

Writing, Testing, and Deploying a Django API to Heroku with Semaphore

This article is brought with ❤ to you by Semaphore.

Introduction

In this tutorial, you will learn how to write and deploy a Django API to Heroku using Semaphore. You'll also learn how to run Django tests on Semaphore, and how to use Heroku pipelines and Heroku review apps with Semaphore. If you'd like to use Semaphore to deploy to other platforms, you can find guides to setting up automatic and manual deployment in Semaphore documentation.

The API we will build is a very simple movie API, which will have CRUD routes for operations on movies.

A movie object will have this very simple representation:

{
    "name": "A movie",
    "year_of_release": 2012
}

The routes we will be implementing are:

/movies - GET & POST

/movies/<pk> - GET, PUT & DELETE

To keep it simple, we won't implement any authentication in our API.

Prerequisites

To follow this tutorial, you need to have the following installed on your machine:

- Python 3.6.1 (This is what I will be using. Any 3.x or 2.7.x version should also be fine).

- Git.

You'll also need to have Github, Semaphore, and Heroku accounts.

Note: we won't cover how to use Git or Github in this tutorial. For more information on that, this is a good place to start.

Setting Up the Environment

Create a Github repo. We'll name it movies-api. Make sure to add a Python .gitignore before clicking Create Repository.

After that, clone it to your local machine and cd into it.

Once inside the movies-api directory, we are going to create a few branches.

- A staging branch for testing deployments.

git branch staging && git push --set-upstream origin staging

- A develop branch for code review.

git branch develop && git push --set-upstream origin develop

- A feature branch which we will be working on.

git checkout -b ft-api && git push --set-upstream origin ft-api

The last command that created the ft-api branch also moved us to it. We should now be on the ft-api branch, ready to start.

Now let's create a Python virtual environment and install the dependencies we need.

python3 -m venv venv

That command creates an environment called venv which is already ignored in our .gitignore.

Next, start the environment.

source venv/bin/activate

After that, we'll install the libraries we will be using: Django and Django Rest Framework for the API, and Gunicorn to serve the application on Heroku.

pip install django djangorestframework gunicorn

Create the requirements.txt file.

pip freeze > requirements.txt

Next, let's create our Django project, and simply name it movies.

django-admin startproject movies

cd into movies and create an application called api.

./manage.py startapp api

That's it for the setup. For your reference, we'll be using Django v1.11 and Django Rest Framework v3.6.3.

Writing tests

If you inspect the directory structure of movies-api, you should see something resembling this:

movies-api/
├── movies/
│   ├── api/
│   │   ├── migrations/
│   │   ├── __init__.py
│   │   ├── admin.py
│   │   ├── apps.py
│   │   ├── models.py
│   │   ├── tests.py
│   │   └── views.py
│   ├── movies/
│   │   ├── __init__.py
│   │   ├── settings.py
│   │   ├── urls.py
│   │   └── wsgi.py
│   └── manage.py
├── venv/
├── .gitignore
└── LICENSE

We shall be working mostly in the first movies inner folder, where manage.py is located. If you are not in it, cd into it now.

Firstly, we'll register all the applications we introduced under INSTALLED_APPS in settings.py.

# movies-api/movies/movies/settings.py

INSTALLED_APPS = [
    ...
    'rest_framework',  # add this
    'api'  # add this
]

In the api application folder, create the files urls.py and serializers.py.

Also, delete the tests.py file and create a tests folder. Inside the tests folder, create test_models.py and test_views.py. Make sure to add an __init__.py file as well.

Once done, your api folder should have the following structure:

api/
├── migrations/
├── tests/
│   ├── __init__.py
│   ├── test_models.py
│   └── test_views.py
├── __init__.py
├── admin.py
├── apps.py
├── models.py
├── serializers.py
├── urls.py
└── views.py

Let's add the tests for the movie model we'll write inside test_models.py.

# movies-api/movies/api/tests/test_models.py

from django.test import TestCase

from api.models import Movie


class TestMovieModel(TestCase):
    def setUp(self):
        self.movie = Movie(name="Split", year_of_release=2016)
        self.movie.save()

    def test_movie_creation(self):
        self.assertEqual(Movie.objects.count(), 1)

    def test_movie_representation(self):
        self.assertEqual(self.movie.name, str(self.movie))

The model tests simply create a Movie record in the setUp method. We then test that the movie was saved successfully to the database.

We also test that the string representation of the movie is its name.

We shall add the tests for the views which will be handling our API requests inside of test_views.py.

# movies-api/movies/api/tests/test_views.py

from django.urls import reverse
from rest_framework.test import APITestCase

from api.models import Movie


class TestMovieApi(APITestCase):
    def setUp(self):
        # create movie
        self.movie = Movie(name="The Space Between Us", year_of_release=2017)
        self.movie.save()

    def test_movie_creation(self):
        response = self.client.post(reverse('movies'), {
            'name': 'Bee Movie',
            'year_of_release': 2007
        })
        # assert new movie was added
        self.assertEqual(Movie.objects.count(), 2)
        # assert a created status code was returned
        self.assertEqual(201, response.status_code)

    def test_getting_movies(self):
        response = self.client.get(reverse('movies'), format="json")
        self.assertEqual(len(response.data), 1)

    def test_updating_movie(self):
        # use the saved movie's own primary key rather than assuming it is 1
        response = self.client.put(reverse('detail', kwargs={'pk': self.movie.pk}), {
            'name': 'The Space Between Us updated',
            'year_of_release': 2017
        }, format="json")
        # check info returned has the update
        self.assertEqual('The Space Between Us updated', response.data['name'])

    def test_deleting_movie(self):
        response = self.client.delete(reverse('detail', kwargs={'pk': self.movie.pk}))
        self.assertEqual(204, response.status_code)

For the views, we have four main test cases. We test that a POST to movies/ creates a movie record successfully. We also test that a GET to movies/ returns the correct result. Lastly, we test that PUT and DELETE to movies/<pk> return correct data and status codes.
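As a quick reference, the status codes involved can be spelled out with the standard library's http.HTTPStatus. This is only a sketch: 201 and 204 are the values asserted in the tests, while 200 for GET and PUT is an assumption based on Django Rest Framework's default behaviour, and EXPECTED_STATUS is a name made up for this illustration.

```python
from http import HTTPStatus

# 201 and 204 are asserted in test_views.py; the 200 entries are assumed
# from Django Rest Framework's defaults for list and update responses.
EXPECTED_STATUS = {
    ('POST', 'movies/'): HTTPStatus.CREATED,
    ('GET', 'movies/'): HTTPStatus.OK,
    ('PUT', 'movies/<pk>'): HTTPStatus.OK,
    ('DELETE', 'movies/<pk>'): HTTPStatus.NO_CONTENT,
}

# Print each request with its numeric code and human-readable phrase.
for (method, route), status in EXPECTED_STATUS.items():
    print(method, route, int(status), status.phrase)
```

HTTPStatus members compare equal to plain integers, so they could be used directly in assertEqual calls if you prefer names over numbers.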

You can run the tests using manage.py:

python manage.py test

You should see a lot of errors, 6 to be exact. Don't worry, we'll be fixing them in the following sections, in a TDD manner.

Defining the Routes

Let's define the URLs for the API.

We are going to start by editing movies-api/movies/movies/urls.py to look as follows:

# movies-api/movies/movies/urls.py

...
from django.conf.urls import url, include  # add include as an import here
from django.contrib import admin

urlpatterns = [
    url(r'^admin/', admin.site.urls),
    url(r'^api/v1/', include('api.urls'))  # add this line
]

The modifications are to tell Django that any request starting with api/v1 should be routed to the api application and they will be handled there.

Now let's go to the urls.py you created inside the api application folder and add this to it:

# movies-api/movies/api/urls.py

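from django.conf.urls import url

from api.views import MovieCreateView, MovieDetailView

urlpatterns = [
    url(r'^movies/$', MovieCreateView.as_view(), name='movies'),
    # the group is named pk to match reverse('detail', kwargs={'pk': ...})
    # in the tests and the generic views' default lookup field
    url(r'^movies/(?P<pk>[0-9]+)$', MovieDetailView.as_view(), name='detail'),
]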

Simply put, we have defined two forms of URLs; api/v1/movies/ which will use the MovieCreateView view, and api/v1/movies/<pk> which will use the MovieDetailView view.
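To see what the two patterns accept, they can be tried outside Django with Python's re module. This is only a sketch: match_route is a hypothetical helper, not part of Django, and the capture group is assumed to be named pk so that it lines up with the kwargs={'pk': ...} used in the tests and with Rest Framework's default lookup field.

```python
import re

# The same regular expressions as in api/urls.py. Django strips the
# "api/v1/" prefix before these patterns are tried.
LIST_PATTERN = re.compile(r'^movies/$')
DETAIL_PATTERN = re.compile(r'^movies/(?P<pk>[0-9]+)$')


def match_route(path):
    """Return (route_name, kwargs) for a path relative to api/v1/, or None."""
    if LIST_PATTERN.match(path):
        return 'movies', {}
    found = DETAIL_PATTERN.match(path)
    if found:
        # Named groups become the keyword arguments passed to the view.
        return 'detail', found.groupdict()
    return None


print(match_route('movies/'))
print(match_route('movies/42'))
print(match_route('movies/abc'))
```

Note that the captured value is a string, and that the detail pattern has no trailing slash, so movies/42/ would not match it.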

The next section will focus on building the movie models & views.

Building the Views

Let's start with the model definition in models.py.

We are going to be storing only the movie's name and year_of_release. Our very simple model should look something like this:

# movies-api/movies/api/models.py

from django.db import models


class Movie(models.Model):
    name = models.CharField(max_length=100)
    year_of_release = models.PositiveSmallIntegerField()

    def __str__(self):
        return self.name

Once you have created the model, go to your terminal and make new migrations:

./manage.py makemigrations

Then, run the migrations:

./manage.py migrate

Running the tests at this point using ./manage.py test should result in only 4 errors since the 2 tests we wrote for the model are now satisfied.

Let's now move to the views. We will first need to create the serializer for the model in serializers.py. Django Rest Framework will use that serializer when serializing Django querysets to JSON.

# movies-api/movies/api/serializers.py

from rest_framework.serializers import ModelSerializer

from api.models import Movie


class MovieSerializer(ModelSerializer):
    class Meta:
        model = Movie
        fields = ('id', 'name', 'year_of_release')
        extra_kwargs = {
            'id': {'read_only': True}
        }

We are using Rest Framework's ModelSerializer. We pass our Movie model to it and specify the fields we would like to be serialized.

We also specify that id will be read only because it is system generated, and not required when creating new records.

Let's finish by defining the views inside views.py. We will be using Rest Framework's generic views.

# movies-api/movies/api/views.py

from rest_framework.generics import ListCreateAPIView, RetrieveUpdateDestroyAPIView

from api.models import Movie
from api.serializers import MovieSerializer


class MovieCreateView(ListCreateAPIView):
    queryset = Movie.objects.all()
    serializer_class = MovieSerializer


class MovieDetailView(RetrieveUpdateDestroyAPIView):
    queryset = Movie.objects.all()
    serializer_class = MovieSerializer

In short, we are using ListCreateAPIView to allow GET and POST and RetrieveUpdateDestroyAPIView to allow GET, PUT and DELETE.

The queryset defines how the view should access objects from the database. The serializer_class attribute defines which serializer the view should use.

At this point, our API is complete. If you run the test cases, you should see 6 successful test cases.

You can also run ./manage.py runserver and point your browser to http://localhost:8000/api/v1/movies/ to play with Django Rest Framework's web browsable API.
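If you would rather exercise the API from a script than from the browser, a minimal client can be sketched with the standard library alone. The helper names build_create_request and send are made up for this illustration, and BASE_URL assumes the development server is running locally on its default port 8000.

```python
import json
from urllib.request import Request, urlopen

# Assumption: ./manage.py runserver is running on its default port.
BASE_URL = 'http://localhost:8000/api/v1/movies/'


def build_create_request(name, year_of_release, base_url=BASE_URL):
    """Build (but do not send) a POST request that creates a movie."""
    payload = json.dumps({'name': name, 'year_of_release': year_of_release})
    return Request(
        base_url,
        data=payload.encode('utf-8'),
        headers={'Content-Type': 'application/json'},
        method='POST',
    )


def send(request):
    """Send a request and decode the JSON body of the response."""
    with urlopen(request) as response:
        return json.loads(response.read().decode('utf-8'))


request = build_create_request('Bee Movie', 2007)
print(request.get_method(), request.full_url)
# With the server running, send(request) would return the created movie.
```

Building the request separately from sending it keeps the example easy to inspect without a running server.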

Lastly, we need to make sure that our code is deployable to Heroku.

Create a file called Procfile in the root of your application, i.e., in the movies-api folder. Inside it, add this:

web: gunicorn movies.wsgi --pythonpath=movies --log-file -

Make sure all your code is committed and pushed to Github on the ft-api branch.

Running Tests on Semaphore

First, sign up for a free Semaphore account if you don’t have one already.

Log in to your Semaphore account then click Add new project.

We'll add the project from GitHub, but Semaphore supports Bitbucket as well.

After that, select the repository from the list presented, and then select the branch ft-api.

Once the analysis is complete, you will see an outline of the build plan. We'll customize it to look like this:

Note that we're using Python v3.6 here, and our Job commands are cd movies && python manage.py test.

After that, scroll down and click Build with these settings.

Your tests should run and pass successfully.

After that, go to Github and merge ft-api into the develop branch. Delete the ft-api branch. Then merge develop into staging, and then staging into master.

At this point, you should have the develop, staging and master branches with similar up to date code and no ft-api branch.

Go to your movies-api project page on Semaphore and click the + button to see a list of your available branches.

Then, select each and run builds for them.

You should now have 3 successful builds for those branches.

Deploying to Heroku

Semaphore makes deploying to Heroku very simple. You can read a shorter guide on deploying to Heroku from Semaphore here.

First of all, create two applications in your Heroku account, one for staging and one for production (i.e., movie-api-staging and movie-api-prod in our case).

Make sure to disable collectstatic by setting DISABLE_COLLECTSTATIC=1 in the config for both applications.

Things to note:

- You will have to choose different application names from the ones above.

- You will have to add the URLs for your two applications into ALLOWED_HOSTS in settings.py, so that Django will allow requests to those URLs.

Edit movies-api/movies/movies/settings.py:

# movies-api/movies/movies/settings.py

...

ALLOWED_HOSTS = ['your-staging-app.herokuapp.com', 'your-production-app.herokuapp.com']
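An alternative to hard-coding the hostnames is to read them from an environment variable, which on Heroku could be set as a config variable in the same way as DISABLE_COLLECTSTATIC. This is a sketch of that idea rather than what the tutorial's repository does: the variable name ALLOWED_HOSTS and the helper allowed_hosts_from_env are assumptions made for this example.

```python
import os


def allowed_hosts_from_env(environ=os.environ, default=''):
    """Split a comma-separated ALLOWED_HOSTS variable into a clean list."""
    raw = environ.get('ALLOWED_HOSTS', default)
    # Drop surrounding whitespace and ignore empty entries.
    return [host.strip() for host in raw.split(',') if host.strip()]


# In settings.py this would replace the hard-coded list:
# ALLOWED_HOSTS = allowed_hosts_from_env()
example = {'ALLOWED_HOSTS': 'your-staging-app.herokuapp.com, your-production-app.herokuapp.com'}
print(allowed_hosts_from_env(example))
```

With this approach the same code can be deployed to staging and production, and each application only lists its own hostname.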

Then, push your changes to Github and update your branches accordingly.

From the movies-api page on your Semaphore account, click Set Up Deployment.

Select Heroku in the next screen.

We will be going with the Automatic deployment option.

Next, let's deploy the staging branch.

The next page needs your Heroku API key. You can find it under the account page in your Heroku account.

Once you have entered the API key, you will see a list of your available Heroku applications. We are deploying the staging version so select the application you created for staging.

After that, give your server a name and create it.

On the next page, click the Edit Server button. Make sure to edit the server deploy commands to look like the following before deploying:

Your staging application should now be deployed to your-staging-app-name.herokuapp.com.

On your movies-api project page, you can see the staging server was deployed.

Click on the + to add a new server for production, and then follow the same procedure to deploy the master branch to your production application.

Working with Heroku Pipeline and Review App

Go to your Heroku account and create a new Pipeline. Then, attach the Github repo for movies-api to it.

Once you've attached the correct Github repo, click Create Pipeline.

In the next page, add the staging application you created to the staging section and the existing production application to the production section.

Next, enable Review Apps by clicking the Enable Review Apps... button.

We are going to use the staging application as the parent, i.e config variables from the staging application will be used for Review Apps.

The next page contains the configuration options for defining the app.json file that will specify how the review application is to be created.

You can leave it as is and click Commit to Repo to have it committed to Github.

Finally, you can enable review apps to create new apps automatically for every PR or destroy them automatically when they become stale.

From now on, every PR to staging will spin up a review application automatically. The pipeline will easily enable promoting applications from review to staging, and to production.

Conclusion

In this tutorial, we covered how to write a Django and Django Rest Framework API, and how to test it.

We also covered how to use Semaphore to run Django tests and continuously deploy an application to Heroku.

You can find the code we wrote in this tutorial in this GitHub repository. Feel free to leave any comments or questions you may have in the comment section below.

Catalin George Festila

Python Qt4 - part 003.

Today I've taken a simple example with PyQt4 compared to the other tutorials we have done so far.

The main reason was to understand and use PyQt4 to display an important message.

To make this example I have set the following steps for my python program.

- importing python modules

- creating the application in PyQt4 as a tray icon class

- establishing an exit from the application

- setting up a message to display

- display the message over a period of time

- closing the application

- running the python application

#! /usr/bin/env python
import sys
from PyQt4 import QtGui, QtCore


class SystemTrayIcon(QtGui.QSystemTrayIcon):
    def __init__(self, parent=None):
        QtGui.QSystemTrayIcon.__init__(self, parent)
        self.setIcon(QtGui.QIcon("mess.svg"))
        # context menu with a single Exit entry
        menu = QtGui.QMenu(parent)
        exitAction = menu.addAction("Exit")
        self.setContextMenu(menu)
        # keep a reference so click_trap can open the same menu
        self.left_menu = menu
        QtCore.QObject.connect(exitAction, QtCore.SIGNAL('triggered()'), self.exit)

    def click_trap(self, value):
        # handler for a tray click (not connected to a signal in this example)
        if value == self.Trigger:  # left click!
            self.left_menu.exec_(QtGui.QCursor.pos())

    def welcome(self):
        self.showMessage("Hello user!", "This is a message from PyQT4")

    def show(self):
        QtGui.QSystemTrayIcon.show(self)
        # show the welcome message 600 ms after the icon appears
        QtCore.QTimer.singleShot(600, self.welcome)

    def exit(self):
        QtCore.QCoreApplication.exit()


def main():
    app = QtGui.QApplication([])
    tray = SystemTrayIcon()
    tray.show()
    app.exec_()


if __name__ == '__main__':
    main()

About running the application: the main function runs the application.

The SystemTrayIcon class only works inside a running QApplication, like any other Qt application.

This is the reason I used the app variable.

The tray variable holds the tray icon object and shows it.

In the SystemTrayIcon class I put some functions to help me with my task.

Under __init__ I put all the settings for my tray icon application: the icon, the exit menu, and the signal for exit.

The other functions are:

- click_trap - handles the user's click;

- welcome - sets the message to display;

- show - shows the tray icon, then displays the welcome message after a short delay;

- exit - exits the application.

About showMessage, this excerpt from the documentation will help you:

QSystemTrayIcon.showMessage (self, QString title, QString msg, MessageIcon icon = QSystemTrayIcon.Information, int msecs = 10000)

Shows a balloon message for the entry with the given title, message and icon for the time specified in millisecondsTimeoutHint. title and message must be plain text strings.

Message can be clicked by the user; the messageClicked() signal will be emitted when this occurs.

Note that display of messages are dependent on the system configuration and user preferences, and that messages may not appear at all. Hence, it should not be relied upon as the sole means for providing critical information.

On Windows, the millisecondsTimeoutHint is usually ignored by the system when the application has focus.

On Mac OS X, the Growl notification system must be installed for this function to display messages.

This function was introduced in Qt 4.3.

Mike Driscoll

PyDev of the Week on Hiatus

I don’t know if anyone noticed something amiss this week, but the PyDev of the Week series is currently on hiatus. I have been having trouble getting interviewees to get the interviews done in a timely manner the last month or so and actually ended up running out.

While I have a bunch of new interviewees lined up, none of them have actually finished the interview. So I am suspending the series for the month of July 2017. Hopefully I can get several lined up for August and get the series kicked back into gear. If not, then it will be suspended until I have a decent number of interviews done.

If you happen to have any suggestions for Pythonistas that you would like to see featured here in the PyDev of the Week series, feel free to leave a comment or contact me.

July 05, 2017

NumFOCUS

Belinda Weaver joins Carpentries as Community Development Lead

Belinda Weaver was recently hired by the Carpentries as their new Community Development Lead. We are delighted to welcome her to the NumFOCUS family! Here, Belinda introduces herself to the community and invites your participation and feedback on her work. I am very pleased to take up the role of Community Development Lead for Software […]

Reuven Lerner

Raw strings to the rescue!

Whenever I teach Python courses, most of my students are using Windows. And thus, when it comes time to do an exercise, I inevitably end up with someone who does the following:

for one_line in open('c:\abc\def\ghi'):
    print(one_line)

The above code looks like it should work. But it almost certainly doesn’t. Why? Because backslashes (\) in Python strings are used to insert special characters. For example, \n inserts a newline, and \t inserts a tab. So when we create the above string, we think that we’re entering a simple path — but we’re actually entering a string containing ASCII 7, the alarm bell.

Experienced programmers are used to looking for \n and \t in their code. But \a (alarm bell) and \v (vertical tab), for example, tend to surprise many of them. And if you aren’t an experienced programmer? Then you’re totally baffled why the pathname you’ve entered, and copied so precisely from Windows, results in a “file not found” error.
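A short check makes the problem visible. This sketch uses a shortened path, 'c:\abc', chosen only because it contains the one escape sequence of interest; it is illustrative rather than taken from a real system.

```python
# "\a" is read as a single character: ASCII 7, the alarm bell.
path = 'c:\abc'

print(len(path))     # 5 characters, not the 6 that were typed
print(repr(path))    # 'c:\x07bc'
print(ord(path[2]))  # 7
```

Printing the repr() of a suspicious string is a quick way to spot characters that were swallowed by an escape sequence.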

One way to solve this problem is by escaping the backslashes before the problematic characters. If you want a literal “\n” in your text, then put “\\n” in your string. By the same token, you can say “\\a” or “\\v”. But let’s be honest; remembering which characters require a doubled backslash is a matter of time, experience, and discipline.

(And yes, you can use regular, Unix-style forward slashes on Windows. But that is generally met by even more baffled looks than the notion of a “vertical tab.”)

You might as well double all of the backslashes — but doing that is really annoying. Which is where “raw strings” come into play in Python.

A “raw string” is basically a “don’t do anything special with the contents” string — a what-you-see-is-what-you-get string. It’s actually not a different type of data, but rather a way to enter strings in which you want to escape all backslashes. Just preface the opening quote (or double quotes) with the “r” character, and your string will be defined with all backslashes escaped. For example, if you say:

print("abc\ndef\nghi")

then you’ll see

abc
def
ghi

But if you say:

print(r"abc\ndef\nghi")

then you’ll see

abc\ndef\nghi

I suggest using raw strings whenever working with pathnames on Windows; it allows you to avoid guessing which characters require escaping. I also use them whenever I’m writing regular expressions in Python, especially if I’m using \b (for word boundaries) or using backreferences to groups.
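The following sketch pulls these points together. It assumes nothing beyond the standard library; PureWindowsPath is used here only as a convenient way to compare Windows-style paths on any operating system, and the sample text is made up.

```python
import re
from pathlib import PureWindowsPath

# A raw string and a string with doubled backslashes are the same value.
raw = r'c:\abc\def\ghi'
doubled = 'c:\\abc\\def\\ghi'
print(raw == doubled)

# Forward slashes name the same Windows path.
same_path = PureWindowsPath('c:/abc/def/ghi') == PureWindowsPath(raw)
print(same_path)

# In a regular expression, r'\b' is a word boundary,
# while '\b' in a normal string is a backspace character.
text = 'cat concat cat'
with_raw = re.findall(r'\bcat\b', text)
without_raw = re.findall('\bcat\b', text)
print(with_raw)     # ['cat', 'cat']
print(without_raw)  # []
```

The last two lines show why forgetting the r prefix in a pattern tends to fail silently: the pattern is still valid, it just looks for something else.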

Raw strings are one of those super-simple ideas that can have big implications on the readability of your code, as well as your ability to avoid problems. Avoid such problems; use raw strings for all of your Windows pathnames, and you’ll be able to devote your attention to fixing actual bugs in your code.

The post Raw strings to the rescue! appeared first on Lerner Consulting Blog.

Dataquest

Understanding SettingWithCopyWarning in pandas

SettingWithCopyWarning is one of the most common hurdles people run into when learning pandas. A quick web search will reveal scores of Stack Overflow questions, GitHub issues and forum posts from programmers trying to wrap their heads around what this warning means in their particular situation. It’s no surprise that many struggle with this; there are so many ways to index pandas data structures, each with its own particular nuance, and even pandas itself does not guarantee one single outcome for two lines of code that may look identical.

This guide explains why the warning is generated and shows you how to solve it. It also includes under-the-hood details to give you a better understanding of what’s happening and provides some history on the topic, giving you perspective on why it all works this way.

In order to explore SettingWithCopyWarning, we’re going to use a data set of the prices of Xboxes sold in 3-day auctions on eBay from the book Modelling Online Auctions. Let’s take a look:

Caktus Consulting Group

Python Tool Review: Using PyCharm for Python Development - and More

Back in 2011, I wrote a blog post on using Eclipse for Python Development.

I've never updated that post, and it's probably terribly outdated by now. But there's a good reason for that - I haven't used Eclipse in years. Not long after that post, I came across PyCharm, and I haven't really looked back.

Performance

Eclipse always felt sluggish to me. PyCharm feels an order of magnitude more responsive. Sometimes it takes a minute to update its indices after I've checked out a new branch of a very large project but usually, even that is barely noticeable. Once the indices are updated, everything is very fast.

Responding quickly is very important. If I'm deep in a problem and suddenly have to stop and wait for my editor to finish something, it can break my concentration and end up slowing me down much more than you might expect simply because an operation took a few seconds longer than it should.

It's not just editing that's fast. I can search for things across every file in my current project faster than I can type in the search string. It's amazing how useful that simple ability becomes.

Python

PyCharm knows Python. My favorite command is Control-B, which jumps to the definition of whatever is under the cursor. That's not so hard when the variable was just assigned a constant a few lines before. But most of the time, knowing the type of a variable at a particular time requires understanding the code that got you there. And PyCharm gets this right an astonishing amount of the time.

I can have multiple projects open in PyCharm at one time, each using its own virtual environment, and everything just works. This is another absolute requirement for my workflow.

The latest release even understands Python type annotations from the very latest Python, Python 3.6.

Django

PyCharm has built-in support for Django. This includes things like knowing the syntax of Django templates, and being able to run and debug your Django app right in PyCharm.

Git

PyCharm recognizes that your project is stored in a git repo and has lots of useful features related to that, like adding new files to the repo for you and making clear which files are not actually in the repo, showing all changes since the last commit, comparing a file to any other version of itself, pulling, committing, pushing, checking out another branch, creating a branch, etc.

I use some of these features in PyCharm, and go back to the command line for some other operations just because I'm so used to doing things that way. PyCharm is fine with that; when I go back to PyCharm, it just notices that things have changed and carries on.

Because the git support is so handy, I sometimes use PyCharm to edit files in projects that have no Python code at all, like my personal dotfiles and ansible scripts.

Code checking

PyCharm provides numerous options for checking your code for syntax and style issues as you write it, for Python, HTML, JavaScript, and probably whatever else you need to work on. But every check can be disabled if you want to, so your work is not cluttered with warnings you are ignoring, just the ones you want to see.

Cross-platform

When I started using PyCharm, I was switching between Linux at work and a Mac at home. PyCharm works the same on both, so I didn't have to keep switching tools.

(If you're wondering, I'm always using Linux now, except for a few hours a year when I do my taxes.)

Documentation

Admittedly, the documentation is sparse compared to, say, Django. There seems to be a lot of it on their support web site, but when you start to use it, you realize that most pages only have a paragraph or two that only touch on the surface of things. It's especially frustrating to look for details of how something works in PyCharm, and find a page about it, but all it says is which key invokes it.

Luckily, most of the time you can manage without detailed documentation. But I often wonder how many features could be more useful for me but I don't know it because what they do isn't documented.

Commercial product

PyCharm has a free and a paid version, and I use the paid version, which adds support for web development and Django, among other things. I suspect I'm like a lot of my peers in usually looking for free tools and passing over the paid ones. I might not ever have tried PyCharm if I hadn't received a free or reduced-cost trial at a conference.

But I'm here to say, PyCharm is worth it if you write a lot of Python. And I'm glad they have revenue to pay programmers to keep PyCharm working, and to update it as Python evolves.

Conclusion

I'm not saying PyCharm is better than everything else. I haven't tried everything else, and don't plan to. Trying a new development environment seriously is a significant investment in time.

What I can say is that I'm very happy and productive using PyCharm both at work and at home, and if you're dissatisfied with whatever you're using now, it might be worth checking it out.

(Editor’s Note: Neither the author nor Caktus have any connection with JetBrains, the makers of PyCharm, other than as customers. No payment or other compensation was received for this review. This post reflects the personal opinion and experience of the author and should not be considered an endorsement by Caktus Group.)

PyCharm

PyCharm 2017.2 EAP 6

We’re approaching the release of PyCharm 2017.2, and we’ve been busy putting the finishing touches on it. This week we have the sixth early access program (EAP) version ready for you. Go to our website to get it now!

New in this version:

- Many bugs have been fixed

- React Native applications are now debugged using headless Chrome rather than Node.js

- For more details, see the release notes.

Please let us know how you like it! Users who actively report about their experiences with the EAP can win prizes in our EAP competition. To participate: just report your findings on YouTrack, and help us improve PyCharm.

To get all EAP builds as soon as we publish them, set your update channel to EAP (go to Help | Check for Updates, click the ‘Updates’ link, and then select ‘Early Access Program’ in the dropdown). If you’d like to keep all your JetBrains tools updated, try JetBrains Toolbox!

-PyCharm Team

The Drive to Develop

Codementor

Using Django with Elasticsearch, Logstash, and Kibana (ELK Stack)

Using Django with Elasticsearch, Logstash and Kibana

EuroPython

EuroPython 2017: Beginners’ Day workshop revived

Our Beginners’ Day host Harry Percival cannot attend EuroPython due to personal reasons, but thanks to our brilliant community, we have managed to find trainers who are willing to help out and run the workshop:

- Ilian Iliev

- Juan Manuel Santos

- Petr Viktorin

- Lasse Schuirmann

- Michał Bultrowicz

- Maximilian Scholz

A big thanks for the quick offers of help. So once more, we’re pleased to present the…

Beginners’ Day Workshop

We will have a Beginners’ Day workshop, on Sunday, July 9th, from 10:00 until 17:00, at the Palacongressi di Rimini (Via della Fiera 23, Rimini), the same location as the main conference.

The session will be presented in English (although a few of the coaches do speak other languages as well).

Please bring your laptop, as a large part of the day will be devoted to learning Python on your own PC.

For more information and the session list, please see the Beginners’ Day workshop page on our website.

Enjoy,

–

EuroPython 2017 Team

EuroPython Society

EuroPython 2017 Conference

July 04, 2017

Python Sweetness

py-lmdb Needs a Maintainer

[copied verbatim from README.md]

I simply don’t have time for this project right now, and still the issues keep piling in. Are you a heavy py-lmdb user and understand most bits of the API? Got some spare time to give a binding you use a little love? Dab hand at C and CFFI? Access to a Visual Studio build machine? Please drop me an e-mail: dw at botanicus dot net. TLC and hand-holding will be provided as necessary, I just have no bandwidth left to write new code.

EuroPython

EuroPython 2017: Free Intel Distribution for Python

We are very pleased to have Intel as Diamond Sponsor for EuroPython 2017. You can visit them at the most central booth in our exhibit area, the Sala della Piazza, and take the opportunity to chat with their staff.

Please find below a hosted blog post from Intel, that offers us an exciting glimpse at the recently released free, Intel® Distribution for Python.

Enjoy,

–

EuroPython 2017 Team

EuroPython Society

EuroPython 2017 Conference

Intel® Distribution for Python: speeding up Python performance

The Python language has transformed computing in the age of big data and massive compute power, bringing high productivity through its easy to learn syntax and large collection of packages and libraries. Its popularity and adoption extends to domains such as data analytics, machine learning, web development, high performance computing and general purpose scripting. High productivity normally comes at the expense of performance, often relegating Python to a great prototyping environment that eventually needs to be rewritten in native languages for production level robustness.

At Intel Software, our clear objective in working with Python software tools is to bring Python performance closer to native code speeds, possibly eliminating the need to rewrite production code in C or C++. We introduce the recently released, free, Intel® Distribution for Python - a performance accelerated Python Distribution that is highly optimized for Intel architecture, by leveraging advanced threading and vectorization instruction sets. And while we’re at it, we also added features that make it easily installable (without you having to deal with dependency nightmares), and broadly available to the entire Python community.

Join us at EuroPython and learn of the many ways Intel contributes to this versatile language and vibrant developer community. Our workshop will demonstrate the inner workings of the Intel Distribution for Python, how we accelerate Python’s core packages NumPy, SciPy, scikit-learn & others through linking with performance libraries such as the powerful Intel® Math Kernel Library & Intel® Data Analytics Acceleration Library, techniques for composable parallelism, and profiling Python mixed with native code with Intel® VTune™ Amplifier. The talks will highlight Python Profiling with Intel® VTune™ Amplifier, Easy methods of Profiling & Tuning of Python applications for performance, and Infrastructure design considerations using Python with Buildbot & Linux containers.

Come, check out the Intel booth #18, and chat with our knowledgeable staff to learn more about Intel tools, technologies & applications in the Python space.

PyCharm

Remote Development on Raspberry Pi: Checking Internet Quality (Part 1)

We all know that ISPs have a habit of overselling their connections, and this sometimes leads our connections to not be as good as we’d like them to be. Also, many of us have Raspberry Pis lying around waiting for cool projects. So let’s put that Pi to use to check on our internet connection!

One of the key metrics of an internet connection is its ping time to other hosts on the internet. So let’s write a program that regularly pings another host and records the results. Some of you may have heard of smokeping, which does exactly that, but that’s written in Perl and a pain to configure. So let’s go and reinvent the wheel, because we can.

PS: For those of you wanting to execute code remotely on other remote computers, like an AWS instance or a DigitalOcean droplet, the process is exactly the same.

Raspberry Ping

The app we will build consists of two parts: one part does the measurements, and the other visualizes previous measurements. To measure the results we can just call the ping command-line tool that every Linux machine (including the Raspberry Pi) ships with. We will then store the results in a PostgreSQL database.

In part 2 of this blog post (coming next week) we’ll have a look at our results: we will view them using a Flask app, which uses Matplotlib to draw a graph of recent results.

Preparing the Pi

As we will want to be able to view the webpage with the results later, it’s important to give our Pi a fixed IP within our network. To do so, edit /etc/network/interfaces. See this tutorial for additional details. NOTE: don’t do this if you’re on a company network, your network administrator will cut your hands off with a rusty knife, don’t ask me how I know or how I’m typing this.

After you’ve set the Pi to use a static IP, use raspi-config on the command line. Go to Advanced Options, choose SSH, and choose Yes. When you’ve done this, you’re ready to get started with PyCharm.

Let’s connect PyCharm to the Raspberry Pi. Go to File | Create New Project, and choose Pure Python (we’ll add Flask later, so you could choose Flask here as well if you’d prefer). Then use the gear icon to add an SSH remote interpreter. Use the credentials that you’ve set up for your Raspberry Pi. I’m going to use the system interpreter. If you’d like to use a virtualenv instead, you can browse to the python executable within your virtualenv as well.

After you’ve created the project, there are a couple things we need to take care of before we can start coding. So let’s open an SSH terminal to do these things. Within PyCharm press Ctrl+Shift+A, then type and choose ‘Start SSH session’, then pick your Raspberry Pi from the list, and you should be connected.

We now need to install several items:

- PostgreSQL

- Libpq-dev, needed for Psycopg2

- Python-dev, needed to compile Psycopg2

Run sudo apt-get update && sudo apt-get install -y postgresql libpq-dev python-dev to install everything at once.

After installing the prerequisites, we now need to set up the permissions in PostgreSQL. The easiest way to do this is to go back to our SSH terminal, and run sudo -u postgres psql to get an SQL prompt as the postgres user. Now we’ll create a user (called a ‘role’ in Postgres terminology) with the same name as the user that we run the process with:

CREATE ROLE pi WITH LOGIN PASSWORD 'hunter2';

Make sure that the role in PostgreSQL has the same name as your linux username. You might also want to substitute a better password. It is important to end your SQL statements with a semicolon (;) in psql, because it will assume you’re writing a multi-line statement until you terminate with a semicolon. We’re granting the pi user login rights, which just means that the user can log in. Roles without login rights are used to create groups.

We also need to create a database. Let’s create a database named after the user (this makes running psql as pi very easy):

CREATE DATABASE pi WITH OWNER pi;

Now exit psql with \q.

Capturing the Pings

To get information on the quality of the internet connection, let’s ping a server using the system’s ping utility, and then read the result with a regex. So let’s take a look at the output of ping:

PING jetbrains.com (54.217.236.18) 56(84) bytes of data.
64 bytes from ec2-54-217-236-18.eu-west-1.compute.amazonaws.com (54.217.236.18): icmp_seq=1 ttl=47 time=32.9 ms
64 bytes from ec2-54-217-236-18.eu-west-1.compute.amazonaws.com (54.217.236.18): icmp_seq=2 ttl=47 time=32.9 ms
64 bytes from ec2-54-217-236-18.eu-west-1.compute.amazonaws.com (54.217.236.18): icmp_seq=3 ttl=47 time=32.9 ms
64 bytes from ec2-54-217-236-18.eu-west-1.compute.amazonaws.com (54.217.236.18): icmp_seq=4 ttl=47 time=32.9 ms

--- jetbrains.com ping statistics ---
4 packets transmitted, 4 received, 0% packet loss, time 3003ms
rtt min/avg/max/mdev = 32.909/32.951/32.992/0.131 ms

All lines with individual round trip times begin with ‘64 bytes from’. Let’s create a file ‘ping.py’, and start coding: we can first get the output of ping, and then iterate over the lines, picking the ones that start with a number and ‘bytes from’.

host = 'jetbrains.com'

ping_output = subprocess32.check_output(["ping", host, "-c 5"])

for line in ping_output.split('\n'):

if re.match("\d+ bytes from", line):

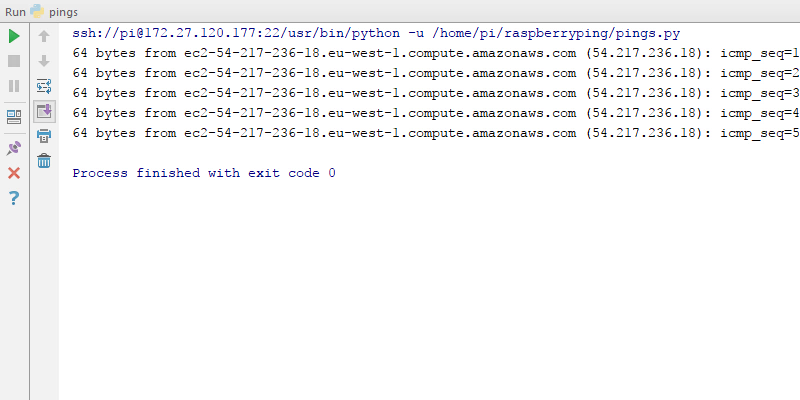

print(line)At this point if you run the code (Ctrl+Shift+F10), you should see this code running, remotely on the Raspberry Pi:

If you get a file not found problem, you may want to check if the deployment settings are set up correctly: Tools | Deployment | Automatic Upload should be checked.
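To record the pings later on, the round trip time has to be pulled out of each matching line. The following is a minimal sketch of one way to do that, not the script from the GitHub repository: the function name parse_ping_times and the exact regex are assumptions, and the sample text is taken from the ping output shown above so that the example runs without a network connection.

```python
import re

# Matches the per-packet lines printed by the Linux ping utility, e.g.
# "64 bytes from host (1.2.3.4): icmp_seq=1 ttl=47 time=32.9 ms"
PING_LINE = re.compile(r"^\d+ bytes from .*icmp_seq=(\d+) .*time=([\d.]+) ms")


def parse_ping_times(ping_output):
    """Return a list of (sequence number, round trip time in ms) tuples.

    Hypothetical helper: the name and return shape are illustrative only.
    """
    results = []
    for line in ping_output.splitlines():
        match = PING_LINE.match(line)
        if match:
            results.append((int(match.group(1)), float(match.group(2))))
    return results


# Sample text copied from the ping output shown earlier
sample = """PING jetbrains.com (54.217.236.18) 56(84) bytes of data.
64 bytes from ec2-54-217-236-18.eu-west-1.compute.amazonaws.com (54.217.236.18): icmp_seq=1 ttl=47 time=32.9 ms
64 bytes from ec2-54-217-236-18.eu-west-1.compute.amazonaws.com (54.217.236.18): icmp_seq=2 ttl=47 time=32.9 ms
--- jetbrains.com ping statistics ---
"""

print(parse_ping_times(sample))
```

Keeping the parsing in a function that takes plain text makes it easy to try out on saved ping output before wiring it to the subprocess call.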

Storing the Pings

We wanted to store our pings in PostgreSQL, so let’s create a table for them. First, we need to connect to the database. As our database is only exposed to localhost, we will need to use an SSH tunnel:

After you’ve connected, create the table by executing the setup_db.sql script. To do this, copy paste from GitHub into the SQL console that opened up right after connecting, and then use the green play button.

Now that we’ve got this working, let’s expand our script to record the pings into the database. To connect to the database from Python we’ll need to install psycopg2. You can do this by going to File | Settings | Project Interpreter, and using the green ‘+’ icon to install the package. If you’d like to see the full script, you can have a look on GitHub.

Cron

To make sure that we actually regularly record the pings, we need to schedule this script to be run. For this we will use cron. As we’re using peer authentication to the database, we need to make sure that the script is run as the pi user. So let’s open an SSH session (making sure we’re logged in as pi), and then run crontab -e to edit our user crontab. Then at the bottom of the file add:

*/5 * * * * /home/pi/raspberryping/ping.py jetbrains.com >> /var/log/raspberryping.log 2>&1

Make sure you have a newline at the end of the file.

The first */5 means that the script will be run every 5 minutes, if you’d like a different frequency you can learn more about crontabs on Wikipedia. Now we also need to create the log file and make sure that the script can write to it:

sudo touch /var/log/raspberryping.log
sudo chown pi:pi /var/log/raspberryping.log

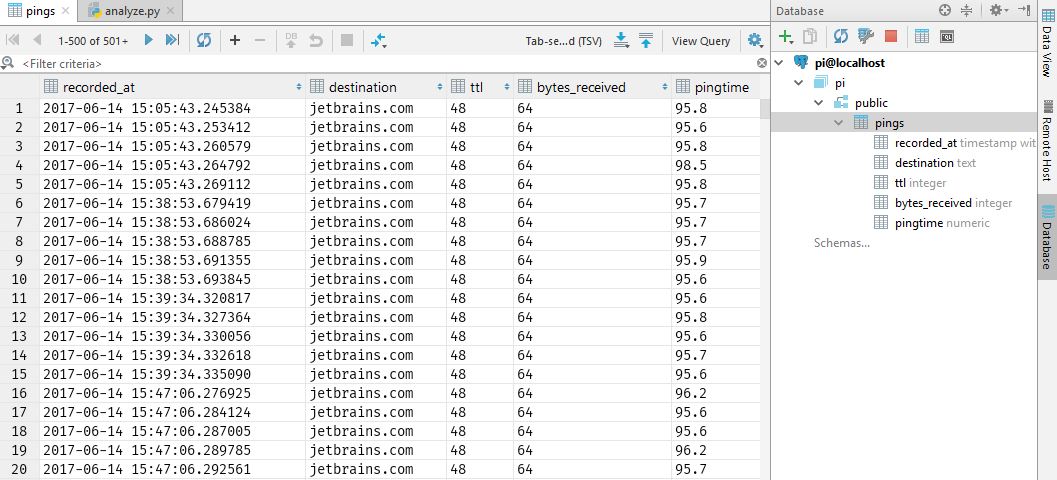

At this point you may want to grab a cup of coffee, and when you come back a while later some ping times should have been logged. To verify, let’s check with PyCharm’s database tools. Open the database tool window (on the right of the screen) and double click the table. You should see that it contains values:

That’s all for this week. Check back next week, we’ll write an analysis script. For the analysis we’ll create a small webapp (with Flask) where we show graphs of recent ping times. We’ll compare and contrast using advanced SQL versus Pandas, and then use Matplotlib to graph the results. In the meantime, let us know in the comments what projects you’d like to make for your Raspberry Pi!

Kushal Das

Looking back at the history of dgplug and my journey

During a session of the summer training this year, someone asked about the history of DGPLUG and how I started contributing to it. The story of dgplug has an even longer back-story about my history with Linux. I’ll start there, and then continue with the story of dgplug.

Seeing Linux for the first time

During my class 11-12, I used to spend a lot of time in the hostels of the Regional Engineering College, Durgapur (or as we call it, REC, Durgapur). This institute is now known as NIT, Durgapur. I got to lay my hands on and use a computer (mostly to play games) in my uncle’s hostel room. All the machines I saw there were Windows only.

My Joint Entrance Examination (JEE) center was the REC (year: 2001). That was a known & familiar environment for me. During breaks on day 1, I came back to hall 2 (my uncle’s room) for lunch. The room was unusually full; I think more than 7 people were looking at the computer with very anxious faces. One guy was doing something on the computer. My food was kept in the corner, and someone told me to eat quietly. I could not resist, and asked what was going on.

We’re installing Linux in the computer, and this is a very critical phase.

If the mouse works, then it will just work, or else we will not be able to use it in the system at all, someone replied

I had to ask again, “What is this Linux?” “Another Operating System” came the reply. I knew almost everyone in the room, and I knew that they used computers daily. I had also seen a few of them writing programs to solve their chemical lab problems (my uncle was in the Chemical Engineering department). There were people from the computer science department too. The thing stuck in my head, going back to the next examination, was that one guy, who knew something which others did not. It stuck deeper than the actual exam in hand, and I kept thinking about it all day. Later, after the exam, I actually got some time to sit in front of the Linux computer, and I tried to play with it, trying things and clicking around on the screen. Everything was so different from what I used to see on my computer screen. That was it, my mind was set; I was going to learn Linux, since not many knew about it. I would also be able to help others as required, just like my uncle’s friend.

Getting my own computer

After the results of the JEE were announced, I decided to join the Dr. B. C. Roy Engineering College, Durgapur. It was a private college, opened a year back. On 15th August, I also got my first computer, a Pentium III, with 128MB of RAM, and a 40GB hard drive. I also managed to convince my parents to get a mechanical keyboard somehow, (which was costly and unusual at the time) with this setup. I also got Linux (RHL 7.x IIRC) installed on that computer along with Windows 98. Once it got home, I kept clicking on everything in Linux, ran the box ragged and tried to find all that I could do with Linux.

After a few days, I had some trouble with Windows and had to reinstall it, and when I rebooted, I could not boot to Linux any more. The option had disappeared. I freaked out at first, but guessed that it had something to do with my Windows reinstall. As I had my Linux CDs with me, I went ahead and tried to install it again. Installing and reinstalling the operating systems over and over gave me the idea that I would have to install Windows first, and only then should I install Linux. Otherwise, I could not boot into Linux.

Introduction to the Command Line

I knew a few Windows commands by that time. Someone in REC pointed me to a book written by Sumitabha Das (I still have a copy at home, in my village). I started reading from there, and learning commands one by one.

Becoming the Linux Expert in college

This is around the same time when people started recognizing me as a Linux Expert; at least in my college.

Of course I knew how to install Linux, but the two major things that helped get that tag, were

- the mount command, I knew how to mount Windows partitions in Linux

- xmms-mp3 rpm package. I had a copy, and I could install it on anyone’s computer.

The same song, on the same hardware, but playing in XMMS always used to give much better audio quality than Windows ever did. Just knowing those two commands gave me a lot of advantage over my peers in that remote college (we never had Internet connection in the college, IIRC).

The Unix Lab & Introduction to computer class

We were introduced to computers in our first semester in some special class. Though many of my classmates saw a computer for the first time in their life, we were tasked to practice many (DOS) commands in the same day. I spent most of my time, helping others learn about the hardware and how to use it.

In our college hostel, we had a few really young professors who also stayed with us. Somehow I started talking a lot with them, and tried to learn as many things as I could. One of them mentioned something about a Unix lab in the College which we were supposed to use in the coming days. I went back to the college the very next day and managed to find the lab; the in-charge (same person who told me about it) allowed me to get in, and use the setup (there were 20 computers).

Our batch started using the lab, only for 2-3 days at the most. During the first day in the lab, I found a command to send out emails to the other users. I came back during some off hours, and wrote a long mail to one of my classmates (not going to talk about the details of the mail) and sent it out.

As we stopped using that lab, I was sure no one had read that mail. Except that one day, the lab in-charge asked me how my email writing was going. I was stunned. How did he find out about the email? I was spluttering, tongue-tied! Later at night, he explained to me the idea of sysadmins, and all that a superuser can do in a Linux/Unix environment. I started thinking about privacy in the electronic world from that night itself :)

Learning from friends

The only other people who were excited about Linux, were two people from same batch in REC. Bipendra Shrestha, and Jitu. I used to spend a lot of time in their hostel and learned so many things from them.

Internet access and the start of dgplug

In 2004, I managed to get more regular access to the Internet (by saving up a bit more money to visit Internet cafes regularly). My weekly allowance was Rs.100, and regular one hour Internet access was around Rs.30-50.

While reading about Linux, I found the term LUG, or Linux User groups. As I was the only regular Linux user in college, I knew that I never had much chance to learn more on my own there, and that somehow I will have to find more people like me and learn together. Also around the same time, I started learning about words like upstream, contribution, Free Software, & FSF. I managed to contact Sankarshan Mukhopadhyay, who sent me a copy of Ankur Bangla, a Linux running in my mother tongue, Bengali. I also came to know about all the ilug chapters in India. That inspired me. Having our own LUG in Durgapur was my next goal. Soumya Kanti Chakrabarty was the first person, I convinced, to join with me to form this group.

The first website came up on Geocities (fun times), and we also had our yahoo group. Later in 2005, we managed to register our domain name; the money came in as a donation from my uncle (who by this time was doing his Ph.D. in IIT Kanpur).

I moved to Bangalore in July 2005, and Soumya was running the local meetings. After I started using IRC regularly, we managed to have our own IRC channel, and we slowly moved most of our discussion over to IRC only. I attended FOSS.IN in December 2005. I think I should write a complete post about that event, and how it changed my life altogether.

Physical meetings in 2006-2007

A day with Fedora on 4th April 2006 was the first big event for us. Sayamindu Dasgupta, Indranil Dasgupta, and Somyadip Modak came down for this event to Durgapur. This is the same time when we started the Bijra project, where we helped the school to have a Linux (LTSP) based setup, completely in Bengali. This was the first big project we took on as a group. This also gave us some media coverage back then. This led to the bigger meetup during 2007, when NRCFOSS members including lawgon, and Rahul Sundaram came down to Durgapur.

dgplug summer training 2008

In 2008, I pitched the idea of having a summer training over IRC, following the same rules of meetings as Fedora marketing on IRC. Shakthi Kannan also glommed onto the idea, and that started a new chapter in the history of dgplug.

Becoming the active contributor community

I knew many people who are better than me when it comes to brain power, but generally, there was no one to push the idea of always learning new things to them. I guess the motto of dgplug, “Learn and teach others” helped us go above this obstacle, and build a community of friends who are always willing to help.

শেখ এবং শেখাও (Learn & Teach others).

Our people are from different backgrounds, from various countries, but the idea of Freedom and Sharing binds us together in the group known as dgplug. Back in 2015 at PyCon India, we had a meeting of all the Python groups in India. After listening to the problems of all the other groups, I suddenly realized that we had none of those problems. We have no travel issues, no problems getting speakers, and no problem getting new people to join in. Just being on the Internet, helps a lot. Also, people in the group have strong opinions, this means healthy but long discussions sometimes :)

Now, you may have noticed that I did not call the group a GNU/Linux users group. Unfortunately, by the time I learned about the Free Software movement, and its history, it was too late to change the name. This year in the summer training, I will take a more in-depth session about the history of hacker ethics and the Free Software movement, and I know a few other people will join in.

The future

I wish that DGPLUG continues to grow along with the members. The group does not limit itself only to be about software, or technology. Most of the regular members met each other in conferences, and we keep meeting every year in PyCon India, and PyCon Pune. We should be able to help others to learn and use the same freedom (be it in technology or in other walks of life) we have. The IRC channel should continue to be the happy place it always has been; where we all meet every day, and have fun together.

Jaime Buelta

$7.11 in four prices and the Decimal type, revisited

I happened to take a look at this old post in this blog. The post is 7 years old, but still presents an interesting problem. “A mathematician purchased four items in a grocery store. He noticed that when he added the prices of the four items, the sum came to $7.11, and when he multiplied the … Continue reading $7.11 in four prices and the Decimal type, revisited

Catalin George Festila

The pdb python interactive debugging - part 001.

This is a short intro tutorial on python debugger to summarize this topic related to Python.

According to the development team, this python module called pdb has the following objectives:

The module pdb defines an interactive source code debugger for Python programs. It supports setting (conditional) breakpoints and single stepping at the source line level, inspection of stack frames, source code listing, and evaluation of arbitrary Python code in the context of any stack frame. It also supports post-mortem debugging and can be called under program control.

Let's start with an example:

C:\Python27>python

Python 2.7.13 (v2.7.13:a06454b1afa1, Dec 17 2016, 20:42:59) [MSC v.1500 32 bit (Intel)] on win32

Type "help", "copyright", "credits" or "license" for more information.

>>> import pdb

>>> pdb.pm()

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "C:\Python27\lib\pdb.py", line 1270, in pm

post_mortem(sys.last_traceback)

AttributeError: 'module' object has no attribute 'last_traceback'

>>> import os

>>> pdb.pm()

> c:\python27\lib\pdb.py(1270)pm()

-> post_mortem(sys.last_traceback)

(Pdb) ?

Documented commands (type help <topic>):

========================================

EOF bt cont enable jump pp run unt

a c continue exit l q s until

alias cl d h list quit step up

args clear debug help n r tbreak w

b commands disable ignore next restart u whatis

break condition down j p return unalias where

Miscellaneous help topics:

==========================

exec pdb

Undocumented commands:

======================

retval rv

With the ? command we can see the commands that we can execute.

Let's see the list command ( l ):

(Pdb) l

1265 p = Pdb()

1266 p.reset()

1267 p.interaction(None, t)

1268

1269 def pm():

1270 -> post_mortem(sys.last_traceback)

1271

1272

1273 # Main program for testing

1274

1275 TESTCMD = 'import x; x.main()'

Post-mortem debugging is a method that requires an environment that provides dynamic execution of code.

One of the greatest benefits of post-mortem debugging is that you can use it directly after something has gone wrong.
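Outside the interactive interpreter, sys.last_traceback is normally not set, so pdb.pm() has nothing to work with. A common alternative is to catch the exception yourself and hand its traceback to pdb.post_mortem(). The sketch below illustrates that idea under some assumptions: the names risky and run_with_post_mortem and the debug flag are hypothetical and only serve the example.

```python
import pdb
import sys


def risky():
    # Deliberately fails so there is something to inspect
    return 1 / 0


def run_with_post_mortem(func, debug=False):
    """Call func(); on failure return the traceback, optionally opening pdb on it.

    Hypothetical helper for illustration only.
    """
    try:
        return func()
    except Exception:
        tb = sys.exc_info()[2]
        if debug:
            # Opens an interactive (Pdb) prompt at the frame that raised
            pdb.post_mortem(tb)
        return tb


tb = run_with_post_mortem(risky)
# The innermost frame of the traceback is the function that raised
print(tb.tb_next.tb_frame.f_code.co_name)
```

Calling run_with_post_mortem(risky, debug=True) from a terminal drops you into the debugger at the failing line, where commands such as where, up and down work as described here.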

You can move between frames within the current call stack using up and down.

The up command moves towards older frames on the stack, and down moves towards newer ones.

The debugger prints the current location with where, see example:

(Pdb) where

<stdin>(1)<module>()

> c:\python27\lib\pdb.py(1270)pm()

-> post_mortem(sys.last_traceback)

The until command can be used to step past the end of a loop.

Use the break command to set breakpoints, for example: (Pdb) break 4.

Turning off a breakpoint with disable tells the debugger not to stop when that line is reached, example: (Pdb) disable 1.

There are also other kinds of breakpoints: conditional breakpoints and temporary breakpoints.

Use clear to delete a breakpoint entirely.

Changing execution flow with the jump command lets you alter the flow of your program.

The jump can be ahead and back and moves the point of execution past the location without evaluating any of the statements in between.

The debugger prevents illegal jumps into and out of certain flow control statements.

When the debugger reaches the end of your program, it automatically starts it over.

With the run command the program can be restarted.

We can avoid typing complex commands repeatedly by using alias and unalias to define the shortcuts.

The pdb python module lets you save configuration using text files read and interpreted on startup.

Brad Lucas

Tokenwatch (Part 2)

Once part 1 of TokenWatch was done, the next step appeared when you saw all the details on each entry's interior page. There I was most interested in the links to the whitepapers, so I could collect them and read through them more easily as a group.

For this part of the project I created another script tokenwatch_details.py which extends the previous tokenwatch.py script.

Code

To start I'll be getting the dataframe from the tokenwatch.py script sorted by NAME.

df = t.process().sort_values(['NAME'])

Each row in the dataframe has a link to the details page. The gist here will be to get this page and parse out the details I need. When inspecting the page it is noted that all the tables are classed with table-asset-data. The last one on the page is the most interesting. To grab that table see the following.

html = requests.get(url, headers={'User-agent': 'Mozilla/5.0'}).text

soup = BeautifulSoup(html, "lxml")

tables = soup.findAll("table", {"class": "table-asset-data"})

# Last table

table = tables[-1]

For convenience I grab the table's data into a dictionary.

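    # Body of the helper that builds the details dictionary
    # (its def line is not shown in this excerpt)
    details = {}
    for td in table.find_all('td'):
        # The first word of the cell text, lowercased, becomes the key
        key = td.text.strip().split(' ')[0].lower()
        # Use the first link in the cell as the value, or '-' if there is none
        vals = td.find_all('a')
        if vals:
            value = vals[0]['href']
        else:
            value = '-'
        details[key] = value
    return details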

If available there will be a link to a whitepaper. Most are links to pdf files so I defend against errors by checking the type and the url before downloading.

def get_whitepaper(name, details):
    try:
        whitepaper_link = details['whitepaper']
        if whitepaper_link != '-':
            # only download if the link has a pdf in it
            print(whitepaper_link)
            head = requests.head(whitepaper_link, headers={'User-agent': 'Mozilla/5.0'})
            # Some servers don't return the application/pdf type properly
            # As a double check look at the url
            if head.headers['Content-Type'] == 'application/pdf' or head.url.find(".pdf") > 0:
                whitepaper_filename = get_dir(name) + "/" + name + "-whitepaper.pdf"
                download_file(whitepaper_filename, whitepaper_link)
                print(whitepaper_filename)
            else:
                print("Unknown whitepaper type: " + whitepaper_link)
        else:
            print("Unavailable whitepaper for " + name)
    except:
        print("No whitepaper link in dictionary")
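The PDF check inside get_whitepaper can also be pulled out into a small pure function, which makes it easy to test without touching the network. This is only a sketch of that idea and not code from the repository: the name looks_like_pdf is hypothetical, and it is deliberately a little stricter than the original test, since it ignores Content-Type parameters and only accepts URLs whose path ends in .pdf.

```python
def looks_like_pdf(content_type, url):
    """Guess whether a response is a PDF from its Content-Type header and URL.

    Hypothetical helper for illustration only.
    """
    # A header may carry parameters, e.g. "application/pdf; charset=binary"
    media_type = (content_type or "").split(";")[0].strip().lower()
    # Drop any query string or fragment before looking at the extension
    path = url.split("?")[0].split("#")[0].lower()
    return media_type == "application/pdf" or path.endswith(".pdf")


print(looks_like_pdf("application/pdf; charset=binary", "http://example.com/download"))
print(looks_like_pdf("text/html", "http://example.com/paper.pdf?dl=1"))
print(looks_like_pdf(None, "http://example.com/index.html"))
```

Passing the header via head.headers.get('Content-Type') rather than head.headers['Content-Type'] would also avoid a KeyError when a server omits the header.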

See the complete project in the GitHub repo listed below.

Links

- [https://github.com/bradlucas/tokenwatch/tree/part2](https://github.com/bradlucas/tokenwatch/tree/part2)

Curtis Miller

Get Started Learning Python for Data Science with “Unpacking NumPy and Pandas”

I'm announcing my new video course Unpacking NumPy and Pandas, available from Packt Publishing, introducing Anaconda, NumPy and Pandas for Python data analytics beginners.

Daniel Bader

Array Data Structures in Python


How to implement arrays in Python using only built-in data types and classes from the standard library. Includes code examples and recommendations.

An array is a fundamental data structure available in most programming languages and it has a wide range of uses across different algorithms.

In this article we’ll take a look at array implementations in Python that only use core language features or functionality included in the Python standard library.

You’ll see the strengths and weaknesses of each approach so you can decide which implementation is right for your use case.

But before we jump in—let’s cover some of the basics first.

So, how do arrays work in Python and what are they used for?

Arrays consist of fixed-size data records that allow each element to be efficiently located based on its index.

Because arrays store information in adjoining blocks of memory they’re considered contiguous data structures (as opposed to a linked data structure like a linked list, for example.)

A real world analogy for an array data structure is a parking lot:

You can look at the parking lot as a whole and treat it as a single object. But inside the lot there are parking spots indexed by a unique number. Parking spots are containers for vehicles—each parking spot can either be empty or have a car, a motorbike, or some other vehicle parked on it.

But not all parking lots are the same:

Some parking lots may be restricted to only one type of vehicle. For example, a motorhome parking lot wouldn’t allow bikes to be parked on it. A “restricted” parking lot corresponds to a “typed array” data structure that only allows elements that have the same data type stored in them.

Performance-wise it’s very fast to look up an element contained in an array given the element’s index. A proper array implementation guarantees a constant O(1) access time for this case.

Python includes several array-like data structures in its standard library that each have slightly different characteristics. If you’re wondering how to declare an array in Python, this list will help pick the right data structure.

Let’s take a look at the available options:

✅ list – Mutable Dynamic Arrays

Lists are a part of the core Python language. Despite their name, Python’s lists are implemented as dynamic arrays behind the scenes. This means lists allow elements to be added or removed and they will automatically adjust the backing store that holds these elements by allocating or releasing memory.

Python lists can hold arbitrary elements—“everything” is an object in Python, including functions. Therefore you can mix and match different kinds of data types and store them all in a single list.

This can be a powerful feature, but the downside is that supporting multiple data types at the same time means that data is generally less tightly packed and the whole structure takes up more space as a result.

>>> arr = ['one', 'two', 'three']
>>> arr[0]
'one'

# Lists have a nice repr:
>>> arr
['one', 'two', 'three']

# Lists are mutable:
>>> arr[1] = 'hello'
>>> arr
['one', 'hello', 'three']

>>> del arr[1]
>>> arr
['one', 'three']

# Lists can hold arbitrary data types:
>>> arr.append(23)
>>> arr
['one', 'three', 23]
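The dynamic array behaviour can be observed from Python itself. The sketch below records the size of a list object after every append; the helper name growth_profile is made up for this example, and the exact numbers are a CPython implementation detail that varies between versions and platforms.

```python
import sys


def growth_profile(n):
    """Append n items to a list and record sys.getsizeof() after each append."""
    items = []
    sizes = []
    for i in range(n):
        items.append(i)
        sizes.append(sys.getsizeof(items))
    return sizes


sizes = growth_profile(64)

# Element counts at which the backing store was reallocated
resize_points = [i + 1 for i in range(1, len(sizes)) if sizes[i] != sizes[i - 1]]
print(resize_points)
```

The reported size only changes at some of the appends, because the list reserves spare capacity each time it grows. This is what keeps append cheap on average.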

✅ tuple – Immutable Containers

Tuples are a part of the Python core language. Unlike lists, Python’s tuple objects are immutable. This means elements can’t be added or removed dynamically: all elements in a tuple must be defined at creation time.

Just like lists, tuples can hold elements of arbitrary data types. Having this flexibility is powerful, but again it also means that data is less tightly packed than it would be in a typed array.

>>> arr = 'one', 'two', 'three'
>>> arr[0]
'one'

# Tuples have a nice repr:
>>> arr
('one', 'two', 'three')

# Tuples are immutable:
>>> arr[1] = 'hello'
TypeError: "'tuple' object does not support item assignment"

>>> del arr[1]
TypeError: "'tuple' object doesn't support item deletion"

# Tuples can hold arbitrary data types:
# (Adding elements creates a copy of the tuple)
>>> arr + (23,)
('one', 'two', 'three', 23)

✅ array.array – Basic Typed Arrays

Python’s array module provides space-efficient storage of basic C-style data types like bytes, 32-bit integers, floating point numbers, and so on.

Arrays created with the array.array class are mutable and behave similarly to lists—except they are “typed arrays” constrained to a single data type.

Because of this constraint, array.array objects with many elements are more space-efficient than lists and tuples. The elements stored in them are tightly packed, which can be useful if you need to store many elements of the same type.

Also, arrays support many of the same methods as regular lists. For example, to append to an array in Python you can just use the familiar array.append() method.

As a result of this similarity between Python lists and array objects, you might be able to use an array as a “drop-in replacement” for a list without requiring major changes to your application.

>>> import array
>>> arr = array.array('f', (1.0, 1.5, 2.0, 2.5))
>>> arr[1]
1.5

# Arrays have a nice repr:
>>> arr
array('f', [1.0, 1.5, 2.0, 2.5])

# Arrays are mutable:
>>> arr[1] = 23.0
>>> arr
array('f', [1.0, 23.0, 2.0, 2.5])

>>> del arr[1]
>>> arr
array('f', [1.0, 2.0, 2.5])

>>> arr.append(42.0)
>>> arr
array('f', [1.0, 2.0, 2.5, 42.0])

# Arrays are "typed":
>>> arr[1] = 'hello'
TypeError: "must be real number, not str"
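To make the space-efficiency claim more concrete, here is a rough sketch that compares the memory footprint of a list of floats with an array.array holding the same values. The names n, as_list, and as_array are only illustrative. The measurement assumes CPython, where sys.getsizeof reports the container alone, so the list total adds the size of every float object by hand. Exact byte counts will differ between Python versions and platforms.

```python
import array
import sys

n = 10000  # illustrative element count, not taken from the article

# The same values stored twice: once as a list of float objects,
# once as a typed array of C doubles (typecode 'd').
as_list = [float(i) for i in range(n)]
as_array = array.array('d', as_list)

# A list stores references; the float objects live elsewhere,
# so their sizes have to be added to the list's own size.
list_bytes = sys.getsizeof(as_list) + sum(sys.getsizeof(v) for v in as_list)

# An array stores the raw values inline in one buffer.
array_bytes = sys.getsizeof(as_array)

print("list :", list_bytes, "bytes")
print("array:", array_bytes, "bytes")
```

On a typical CPython build the array should come out several times smaller, but it is worth measuring with your own data before switching.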

✅ str – Immutable Arrays of Unicode Characters

Python 3.x uses str objects to store textual data as immutable sequences of Unicode characters. Practically speaking that means a str is an immutable array of characters. Oddly enough it’s also a recursive data structure—each character in a string is a str object of length 1 itself.

String objects are space-efficient because they’re tightly packed and specialize in a single data type. If you’re storing Unicode text, you should use them. Because strings are immutable in Python, modifying a string requires creating a modified copy. The closest equivalent to a “mutable string” is storing individual characters inside a list.

>>> arr = 'abcd'
>>> arr[1]
'b'

>>> arr
'abcd'

# Strings are immutable:
>>> arr[1] = 'e'
TypeError: "'str' object does not support item assignment"

>>> del arr[1]
TypeError: "'str' object doesn't support item deletion"

# Strings can be unpacked into a list to
# get a mutable representation:
>>> list('abcd')
['a', 'b', 'c', 'd']
>>> ''.join(list('abcd'))
'abcd'

# Strings are recursive data structures:
>>> type('abc')
"<class 'str'>"
>>> type('abc'[0])
"<class 'str'>"
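The “list of characters” idea can be wrapped in a small helper. This is only a sketch: replace_char is a hypothetical name, not something from the standard library. It unpacks the string into a list, changes one element, and joins the result into a new string, leaving the original untouched.

```python
def replace_char(text, index, new_char):
    """Return a copy of text with the character at index replaced."""
    chars = list(text)       # mutable list of 1-character strings
    chars[index] = new_char  # item assignment works on the list
    return ''.join(chars)    # build a new immutable str


original = 'abcd'
modified = replace_char(original, 1, 'e')
print(original)  # the original string is unchanged
print(modified)
```

If you need many edits, it is usually cheaper to keep the list around and join it once at the end rather than after every change.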

✅ bytes – Immutable Arrays of Single Bytes

Bytes objects are immutable sequences of single bytes (integers in the range of 0 <= x <= 255). Conceptually they’re similar to str objects and you can also think of them as immutable arrays of bytes.

Like strings, bytes have their own literal syntax for creating objects and they’re space-efficient. Bytes objects are immutable, but unlike strings there’s a dedicated “mutable byte array” data type called bytearray that they can be unpacked into. You’ll hear more about that in the next section.

>>> arr = bytes((0, 1, 2, 3))
>>> arr[1]
1

# Bytes literals have their own syntax:
>>> arr
b'\x00\x01\x02\x03'
>>> arr = b'\x00\x01\x02\x03'

# Only valid "bytes" are allowed:
>>> bytes((0, 300))
ValueError: "bytes must be in range(0, 256)"

# Bytes are immutable:
>>> arr[1] = 23
TypeError: "'bytes' object does not support item assignment"

>>> del arr[1]
TypeError: "'bytes' object doesn't support item deletion"

✅ bytearray – Mutable Arrays of Single Bytes

The bytearray type is a mutable sequence of integers in the range 0 <= x <= 255. Bytearrays are closely related to bytes objects, with the main difference being that bytearrays can be modified freely: you can overwrite elements, remove existing elements, or add new ones. The bytearray object will grow and shrink appropriately.

Bytearrays can be converted back into immutable bytes objects, but this involves copying the stored data in full, an operation taking O(n) time.

>>> arr = bytearray((0, 1, 2, 3))
>>> arr[1]
1

# The bytearray repr:
>>> arr
bytearray(b'\x00\x01\x02\x03')

# Bytearrays are mutable:
>>> arr[1] = 23
>>> arr
bytearray(b'\x00\x17\x02\x03')

>>> arr[1]
23

# Bytearrays can grow and shrink in size:
>>> del arr[1]
>>> arr
bytearray(b'\x00\x02\x03')

>>> arr.append(42)
>>> arr
bytearray(b'\x00\x02\x03*')

# Bytearrays can only hold "bytes"
# (integers in the range 0 <= x <= 255)
>>> arr[1] = 'hello'
TypeError: "an integer is required"

>>> arr[1] = 300
ValueError: "byte must be in range(0, 256)"

# Bytearrays can be converted back into bytes objects:
# (This will copy the data)
>>> bytes(arr)
b'\x00\x02\x03*'
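Because the conversion copies the data, the resulting bytes object is an independent snapshot. The short sketch below (variable names are illustrative) mutates the bytearray after converting it and checks that the earlier bytes value does not change.

```python
buf = bytearray(b'\x00\x01\x02')

# bytes(buf) copies the current contents into a new immutable object.
snapshot = bytes(buf)

# Later changes to the bytearray do not affect the snapshot.
buf[0] = 255
buf.append(3)

print(snapshot)
print(buf)
```

This is handy when you build up data in a bytearray and then want to hand out a frozen copy, at the cost of the O(n) copy mentioned above.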

Which array implementation should I use in Python?

There are a number of built-in data structures you can choose from when it comes to implementing arrays in Python. In this article we’ve concentrated on core language features and data structures included in the standard library only.

If you’re willing to go beyond the Python standard library, third-party packages like NumPy offer a wide range of fast array implementations for scientific computing.

But focusing on the array data structures included with Python, here’s what your choice comes down to:

- You need to store arbitrary objects, potentially with mixed data types? Use a list or a tuple, depending on whether you want an immutable data structure or not.

- You have numeric (integer / floating point) data and tight packing and performance are important? Try out array.array and see if it does everything you need. Consider going beyond the standard library and try out packages like NumPy.

- You have textual data represented as Unicode characters? Use Python’s built-in str. If you need a “mutable string” use a list of characters.

- You want to store a contiguous block of bytes? Use bytes (immutable) or bytearray (mutable).

Personally, I like to start out with a simple list in most cases and only specialize later on if performance or storage space becomes an issue.

This is especially important when you need to make a choice between using a Python list vs an array. The key difference here is that Python arrays are more space-efficient than lists, but that doesn’t automatically make them the right choice in your specific use case.

Most of the time using a general purpose array data structure like list in Python gives you the fastest development speed and the most programming convenience.

I found that this is usually much more important in the beginning than squeezing out every last drop of performance from the start.


July 03, 2017

GoDjango

Django 1.11+ django.contrib.auth Class Based Views - Part 1 - Login & Logout

This is the first in a long series on creating a CryptoCurrency management site, but first we need to lock things down so people can't see all of our stuff. So we will start by setting up our app and logging in and out. We will do this with the new Class Based Views in the django.contrib.auth system.


Simple is Better Than Complex

Django Tips #20 Working With Multiple Settings Modules

Usually, it’s a good idea to avoid multiple configuration files and keep your project setup simple. But that’s not always possible: as a Django project grows, the settings.py module can get fairly complex. In those cases, you also want to avoid using if statements like if not DEBUG: # do something.... For clarity and a strict separation between development and production configuration, you can break the settings.py module down into multiple files.

Basic Structure

A brand new Django project looks like this:

mysite/
 |-- mysite/
 |    |-- __init__.py
 |    |-- settings.py
 |    |-- urls.py
 |    +-- wsgi.py
 +-- manage.py

The first thing we want to do is create a folder named settings, rename the settings.py file to base.py, and move it inside the newly created settings folder. Make sure you also add an __init__.py in case you are working with Python 2.x.

mysite/
 |-- mysite/
 |    |-- __init__.py
 |    |-- settings/         <--
 |    |    |-- __init__.py  <--
 |    |    +-- base.py      <--
 |    |-- urls.py
 |    +-- wsgi.py
 +-- manage.py

As the name suggests, base.py will provide the settings common to all environments (development, production, staging, etc.).

The next step is to create a settings module for each environment. Common use cases are:

- ci.py

- development.py

- production.py

- staging.py

The file structure would look like this:

mysite/
 |-- mysite/
 |    |-- __init__.py
 |    |-- settings/
 |    |    |-- __init__.py
 |    |    |-- base.py
 |    |    |-- ci.py
 |    |    |-- development.py
 |    |    |-- production.py
 |    |    +-- staging.py
 |    |-- urls.py
 |    +-- wsgi.py
 +-- manage.py

Configuring a New Settings.py

First, take the following base.py module as an example:

settings/base.py

from decouple import config

SECRET_KEY = config('SECRET_KEY')

INSTALLED_APPS = [
    'django.contrib.auth',
    'django.contrib.contenttypes',
    'django.contrib.sessions',
    'django.contrib.messages',
    'django.contrib.staticfiles',

    'mysite.core',
    'mysite.blog',
]

MIDDLEWARE = [
    'django.middleware.security.SecurityMiddleware',
    'django.contrib.sessions.middleware.SessionMiddleware',
    'django.middleware.common.CommonMiddleware',
    'django.middleware.csrf.CsrfViewMiddleware',
    'django.contrib.auth.middleware.AuthenticationMiddleware',
    'django.contrib.messages.middleware.MessageMiddleware',
    'django.middleware.clickjacking.XFrameOptionsMiddleware',
]

ROOT_URLCONF = 'mysite.urls'

WSGI_APPLICATION = 'mysite.wsgi.application'

There are a few default settings missing, which I removed so the example doesn’t get too big.

Now we can create a development.py module that “extends” our base.py settings module, like this:

settings/development.py

from .base import *

DEBUG = True

INSTALLED_APPS += [
    'debug_toolbar',
]

MIDDLEWARE += ['debug_toolbar.middleware.DebugToolbarMiddleware', ]

EMAIL_BACKEND = 'django.core.mail.backends.console.EmailBackend'

DEBUG_TOOLBAR_CONFIG = {
    'JQUERY_URL': '',
}

And a production.py module could be defined like this:

settings/production.py

from .base import *

DEBUG = False

ALLOWED_HOSTS = ['mysite.com', ]

CACHES = {
    'default': {
        'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',
        'LOCATION': '127.0.0.1:11211',
    }
}

EMAIL_BACKEND = 'django.core.mail.backends.smtp.EmailBackend'
EMAIL_HOST = 'smtp.mailgun.org'
EMAIL_PORT = 587
EMAIL_HOST_USER = config('EMAIL_HOST_USER')
EMAIL_HOST_PASSWORD = config('EMAIL_HOST_PASSWORD')
EMAIL_USE_TLS = True

Two important things to note. First, as a general rule you should avoid star imports (import *); this is one of the few exceptions. Star imports may put lots of unnecessary stuff in the namespace, which in some cases can cause issues. Second, even though we are using different files for development and production, you still have to protect sensitive data! Make sure you keep passwords and secret keys in environment variables, or use a library like Python-Decouple, which I highly recommend!
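To see how the star import makes one settings module “extend” another, here is a small self-contained sketch that does not need Django at all. It writes a throwaway package named demo_settings (a hypothetical name, as are the trimmed-down file contents) into a temporary directory, imports its development module, and inspects the resulting names.

```python
import importlib
import sys
import tempfile
from pathlib import Path

with tempfile.TemporaryDirectory() as tmp:
    # Build a tiny package that mirrors the settings/ layout.
    pkg = Path(tmp) / "demo_settings"
    pkg.mkdir()
    (pkg / "__init__.py").write_text("")
    (pkg / "base.py").write_text(
        "INSTALLED_APPS = ['django.contrib.auth']\n"
        "ROOT_URLCONF = 'mysite.urls'\n"
    )
    (pkg / "development.py").write_text(
        "from .base import *\n"
        "DEBUG = True\n"
        "INSTALLED_APPS += ['debug_toolbar']\n"
    )

    # Make the temporary directory importable just long enough
    # to load the module.
    sys.path.insert(0, tmp)
    try:
        development = importlib.import_module("demo_settings.development")
    finally:
        sys.path.remove(tmp)

# Names defined in base.py are visible through development.py,
# together with the overrides and additions made there.
print(development.ROOT_URLCONF)
print(development.INSTALLED_APPS)
print(development.DEBUG)
```

The same mechanism is why production.py can call config() without importing it: the star import also pulls in the names that base.py itself imported.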

How to Use It

Since we no longer have a single settings.py module, running commands like python manage.py runserver will no longer work. Instead, you have to specify which settings module you want to use on the command line:

python manage.py runserver --settings=mysite.settings.development

Or

python manage.py migrate --settings=mysite.settings.production

The next step is optional, but since we use manage.py often during the development process, you can edit it to set the default settings module to your development.py module.

To do that, simply edit the manage.py file, like this:

manage.py

#!/usr/bin/env python
import os
import sys

if __name__ == "__main__":
    os.environ.setdefault("DJANGO_SETTINGS_MODULE", "mysite.settings.development")  # <-- Change here!
    try:
        from django.core.management import execute_from_command_line
    except ImportError:
        # The above import may fail for some other reason. Ensure that the