The (failing) New York Times has many apps and services. Some live on physical servers, others reside in The Cloud™. I manage an app that runs on multiple containers orchestrated by Kubernetes in (A)mazon (W)eb (S)ervices. Many of our apps are migrating to (G)oogle (C)loud (P)latform. Containers are great, and Kubernetes is (mostly) great. “Scaling” usually happens horizontally: you add more replicas of your app to match your traffic/workload. Kubernetes does the scaling and rolling updates for you.

Most apps at the Times are adopting the 12 Factor App methodology – if you haven’t been exposed, give this a read. tl;dr containers, resources, AWS/GCP, run identical versions of your app everywhere. For the app I manage, the network of internationalized sites including Español, I use a container for nginx and another for php-fpm – you can grab it here. I use RDS for the database, and ElastiCache for the Memcached service. All seems good. We’re set for world domination.

Enter: your application’s codebase.

If your app doesn’t talk to the internet or a database, it will probably run lightning fast. This is not a guarantee, of course, but network latency will not be an issue. Your problem would be mathematical. Once you start asking the internet for data, or connect to a resource to query data, you need a game plan.

As you may have guessed, the network of sites I alluded to above is powered by WordPress™. WordPress, on its best day, is a large amount of PHP 5.2 code that hints at caching and queries a database whose schema I would generously call Less Than Ideal. WP does not promise you fast queries, but it does cache data in a non-persistent fashion out of the box. What does this mean? It sets a global variable called $wp_object_cache and stores your cache lookups for the length of the request. For the most part, WP will attempt to read from the cache before making expensive database queries. Because it would be nice to make this cache persistent (work across multiple requests/servers), WP will look for an object cache drop-in: wp-content/object-cache.php, and if it is there, will use your implementation instead of its own.
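As a rough illustration of what “non-persistent” means here, the sketch below mimics the idea with a plain array that lives only as long as the request. The class name RequestCache and the helper cached_lookup() are made up for this example; this is not WordPress’s actual WP_Object_Cache implementation.

```php
<?php
// Hypothetical, simplified stand-in for a per-request object cache.
// Entries live in a PHP array, so they vanish when the request ends.
class RequestCache {
    private $data = [];
    public $hits = 0;
    public $misses = 0;

    public function get( $key, string $group = 'default' ) {
        if ( isset( $this->data[ $group ] ) && array_key_exists( $key, $this->data[ $group ] ) ) {
            $this->hits++;
            return $this->data[ $group ][ $key ];
        }
        $this->misses++;
        return false;
    }

    public function set( $key, $value, string $group = 'default' ): bool {
        $this->data[ $group ][ $key ] = $value;
        return true;
    }
}

// Read-through helper: try the cache first, fall back to the slow lookup.
function cached_lookup( RequestCache $cache, $id, callable $slowLookup ) {
    $value = $cache->get( $id, 'posts' );
    if ( false === $value ) {
        $value = $slowLookup( $id );
        $cache->set( $id, $value, 'posts' );
    }
    return $value;
}

$cache   = new RequestCache();
$queries = 0;
$lookup  = function ( $id ) use ( &$queries ) {
    $queries++; // pretend this is a database query
    return [ 'ID' => $id, 'post_title' => "Post $id" ];
};

cached_lookup( $cache, 42, $lookup ); // miss: runs the "query"
cached_lookup( $cache, 42, $lookup ); // hit: served from the array
```

A persistent drop-in keeps the same get/set shape but swaps the array for something like Memcached, so the second request (or second server) gets the hit too.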

Before we go any further, let me say this: it is basically mandatory that you use persistent caching.

Keep in mind: you haven’t written any code yet – you have only installed WP. Many of us do this and think we are magically done. We are not. If you are a simple blog that gets very little traffic: you are probably ok to move on and let your hosting company do all of the work.

If you are a professional web developer/software engineer/devops ninja, you need to be aware of things – probably before you start designing your app.

Network requests are slow

When it comes to the internals of WordPress and database queries, there is not a lot you can do to speed things up. The queries are already written in a lowest-common-denominator way against a schema that is unforgiving. WP laces in caching where it can, and simple object retrieval works as expected. WordPress does not cache queries; what it does is limit complex queries to return only IDs (primary keys), so that single-item lookups can be triggered and populate the cache. Here’s a crude example:

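// expensive query that only returns a column of IDs
$ids = $wpdb->get_col( '...INSERT SLOW QUERY...' );
// load any uncached posts (plus their term and meta caches) in bulk
_prime_post_caches( $ids, true, true );
// get each item individually, now from the primed cache
$posts = array_map( 'get_post', $ids );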

get_post() will get the item from the cache and hydrate it, or go to the database, populate the cache, and hydrate the result. This is the default behavior of WP_Query internals: expensive query, followed by individual lookups from the cache after it has been primed. Showing 20 posts on the homepage? Uncached, that’s at least 2 database queries. Each post has a featured image? More queries. A different author? More queries. You can see where this is going. An uncached request is capable of querying everything in sight. Like I said, it is basically mandatory that you use an object cache. You will still have to make the query for items, but each individual lookup could then be read from the cache.

You are not completely out of the woods when you read from the cache. How many individual calls to the cache are you making? Before an item can be populated in $wp_object_cache non-persistently, it must be requested from Memcached. Memcached has a mechanism for requesting items in batch, but WordPress does not use it. Cache drop-ins could implement it, but the WP internals do not have a batch mechanism implemented to collect data and make one request in batch for all items.
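To make the cost of one-at-a-time cache reads concrete, here is a small sketch that counts round trips. FakeBackend and prime_local_cache() are invented for this example and only simulate a cache server; with the real Memcached extension, Memcached::getMulti() would play the role of the batch call.

```php
<?php
// Hypothetical in-memory backend that counts round trips to the "server".
class FakeBackend {
    public $roundTrips = 0;
    private $store;

    public function __construct( array $store ) {
        $this->store = $store;
    }

    // One key, one round trip.
    public function get( string $key ) {
        $this->roundTrips++;
        return $this->store[ $key ] ?? false;
    }

    // Many keys, still one round trip.
    public function getMulti( array $keys ): array {
        $this->roundTrips++;
        return array_intersect_key( $this->store, array_flip( $keys ) );
    }
}

// Fill the local (per-request) cache for many keys with one round trip.
function prime_local_cache( array &$local, FakeBackend $backend, array $keys ): void {
    $missing = array_values( array_diff( $keys, array_keys( $local ) ) );
    if ( $missing ) {
        $local += $backend->getMulti( $missing );
    }
}

$store = [ 'post:1' => 'one', 'post:2' => 'two', 'post:3' => 'three' ];
$keys  = array_keys( $store );

// One request per item, which is what individual lookups amount to.
$serial = new FakeBackend( $store );
foreach ( $keys as $key ) {
    $serial->get( $key );
}

// One request for all items.
$batched = new FakeBackend( $store );
$local   = [];
prime_local_cache( $local, $batched, $keys );
```

With 3 keys the difference is 3 round trips versus 1; with 20 posts plus their authors and featured images, the gap grows accordingly.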

We know that we could speed this all up by also caching the query, but then invalidation becomes a problem. YOU would have to implement a query cache, YOU would have to write the unit tests, YOU would have to write the invalidation logic. Chances are that YOUR code will have hard-to-track-down bugs, and introduce more complexity than is reasonable.
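For a sense of what that would involve, here is one common approach sketched in miniature: cache the IDs returned by a query under a key that includes a per-group version number, and bump the version on every write. QueryCache and its methods are hypothetical names for this sketch, and an array stands in for the persistent cache.

```php
<?php
// Hypothetical query cache. Keys include a per-group "version" so that a
// single bump makes every previously cached query unreachable.
class QueryCache {
    private $entries  = [];
    private $versions = [];

    private function key( string $group, string $sql ): string {
        $version = $this->versions[ $group ] ?? 0;
        return $group . ':' . $version . ':' . md5( $sql );
    }

    // Return the cached result for $sql, or run $runQuery and cache it.
    public function remember( string $group, string $sql, callable $runQuery ): array {
        $key = $this->key( $group, $sql );
        if ( ! isset( $this->entries[ $key ] ) ) {
            $this->entries[ $key ] = $runQuery( $sql );
        }
        return $this->entries[ $key ];
    }

    // Must be called from every code path that writes to the table.
    public function invalidate( string $group ): void {
        $this->versions[ $group ] = ( $this->versions[ $group ] ?? 0 ) + 1;
    }
}

$cache = new QueryCache();
$runs  = 0;
$rows  = [ 3, 5, 8 ];
$query = function ( $sql ) use ( &$runs, &$rows ) {
    $runs++; // stands in for the slow database query
    return $rows;
};
$sql = 'SELECT ID FROM wp_posts WHERE ...';

$first  = $cache->remember( 'posts', $sql, $query ); // runs the query
$second = $cache->remember( 'posts', $sql, $query ); // served from cache

$rows[] = 13;                 // a write happens somewhere...
$cache->invalidate( 'posts' ); // ...and must remember to bump the version

$third = $cache->remember( 'posts', $sql, $query ); // runs the query again
```

Even in this toy version the weak point is visible: forget one invalidate() call on one write path and readers get stale results, which is exactly the class of bug that is hard to track down.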

WP_Query might be doing the best it can, for what it is and what codebase it sits in. It does do one thing that is essential: it primes the cache. Whether the cache is persistent or not, get_post() uses the Cache API internally (via WP_Post::get_instance()) to reduce the work of future lookups.

Prime the Cache

Assuming you are writing your own code to query the database, there are 3 rules of thumb:

1) make as few queries as is possible

2) tune your queries so that they are as fast as possible

3) cache the results

Once again, cache and invalidation can be hard, so you will need unit tests for your custom code (more on this in a bit).

This is all well and good for the database, but what about getting data from a web service, another website, or a “microservice,” as it were? We already know that network latency can pile up by making too many requests to the database throughout the lifecycle of a request, but querying the database is usually “pretty fast” – at least not as slow as making requests over HTTP.

HTTP requests are only as fast as the network latency to reach a server plus the time that server takes to respond with something useful. HTTP requests are somewhat of a black box and can time out. HTTP requests should not be made serially – meaning, you shouldn’t make a request, wait, make a request, wait, make a request…

HTTP requests must be batched

If you need data from 3 different web services that don’t rely on each other’s responses, you need to request them asynchronously and simultaneously. WordPress doesn’t officially offer this functionality unless you reach down into Requests and write your own userland wrapper and cache. I highly suggest installing Guzzle via Composer and including it in your project where needed.

Here is some example Guzzle code that deals with Concurrency:

use GuzzleHttp\Client;
use GuzzleHttp\Promise;

$client = new Client(['base_uri' => 'http://httpbin.org/']);

// Initiate each request but do not block
$promises = [
    'image' => $client->getAsync('/image'),
    'png'   => $client->getAsync('/image/png'),
    'jpeg'  => $client->getAsync('/image/jpeg'),
    'webp'  => $client->getAsync('/image/webp')
];

// Wait on all of the requests to complete. Throws a ConnectException
// if any of the requests fail
$results = Promise\unwrap($promises);

// Wait for the requests to complete, even if some of them fail
$results = Promise\settle($promises)->wait();

// You can access each settled result using the key provided in $promises.
// Each entry holds its response under 'value'.
echo $results['image']['value']->getHeader('Content-Length')[0];
echo $results['png']['value']->getHeader('Content-Length')[0];

Before you start doing anything with HTTP, I would suggest taking a hard look at how your app intends to interact with data, and how that data fetching can be completely separated from your render logic. WordPress has a bad habit of mixing user functions that create network requests with PHP output to do what some would call “templating.” Ideally, all of your network requests will have completed by the time you start using data in your templates.

In addition to batching, almost every HTTP response is worthy of a cache entry – preferably one that is long-lived. Most responses are for data that never changes, or does so rarely. Data that might need to be refreshed can be given a short expiration. I would think in minutes and hours where possible. Our default cache policy at eMusic for catalog data was 6 hours. The data only refreshed every 24. If your cache size grows beyond its limit, entries will be passively invalidated. You can play with this to perhaps size your cache in a way that even entries with no expiration will routinely get rotated.

A simple cache for an HTTP request might look like:

$response = wp_cache_get( 'meximelt', 'taco_bell' );
if ( false === $response ) {
    $endpoint = 'http://tacobell.com/api/meximelt?fire-sauce';
    $response = http_function_that_is_not_wp_remote_get( $endpoint );
    wp_cache_set( 'meximelt', $response, 'taco_bell' );
}
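The snippet above caches with no expiration. As a sketch of the “minutes and hours” policy mentioned earlier, the example below adds a time-to-live. ExpiringCache and fetch_catalog() are hypothetical names, the cache is an in-memory stand-in for a persistent one, and the clock is passed in so that expiry can be simulated.

```php
<?php
// Hypothetical in-memory cache with per-entry expiration, standing in for
// a persistent object cache. $now is injectable so expiry can be simulated.
class ExpiringCache {
    private $entries = [];

    public function get( string $key, int $now ) {
        if ( ! isset( $this->entries[ $key ] ) ) {
            return false;
        }
        [ $value, $expiresAt ] = $this->entries[ $key ];
        if ( 0 !== $expiresAt && $now >= $expiresAt ) {
            unset( $this->entries[ $key ] );
            return false;
        }
        return $value;
    }

    public function set( string $key, $value, int $ttl, int $now ): void {
        // A TTL of 0 means "no expiration".
        $this->entries[ $key ] = [ $value, $ttl > 0 ? $now + $ttl : 0 ];
    }
}

const SIX_HOURS = 6 * 60 * 60;

// Read-through fetch: only call the remote service on a miss or expiry.
function fetch_catalog( ExpiringCache $cache, callable $httpGet, int $now ) {
    $data = $cache->get( 'catalog', $now );
    if ( false === $data ) {
        $data = $httpGet();
        $cache->set( 'catalog', $data, SIX_HOURS, $now );
    }
    return $data;
}

$requests = 0;
$httpGet  = function () use ( &$requests ) {
    $requests++; // stands in for a slow HTTP call
    return '{"items":[]}';
};

$cache = new ExpiringCache();
$start = 1000000;
fetch_catalog( $cache, $httpGet, $start );                 // miss, fetches
fetch_catalog( $cache, $httpGet, $start + 3600 );          // hit, 1 hour later
fetch_catalog( $cache, $httpGet, $start + SIX_HOURS + 1 ); // expired, fetches again
```

In WordPress itself, the equivalent knob is the fourth argument to wp_cache_set(), which takes an expiration in seconds.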

How your app generates its cache entries is probably its bottleneck

The key take-away from the post up until now is: how your app generates its cache entries is probably its bottleneck. If all of your network requests are cached, and especially if you are using an edge cache like Varnish, you are doing pretty well. It is in those moments that your app needs to regenerate a page, or make a bunch of requests over the network, that you will experience the most pain. WordPress is not fully prepared to help you in these moments.

I would like to walk through a few common scenarios for those who build enterprise apps and are not beholden to WordPress to provide all of the development tools.

WordPress is not prepared to scale as a microservice

WordPress is supposed to be used as a one-size-fits-all approach to creating a common app with common endpoints. WordPress assumes it is the router, the database abstraction, the thin cache layer, the HTTP client, the rendering engine, and hey why not, the API engine. WordPress does all of these things while also taking responsibility for kicking off “cron jobs” (let’s use these words loosely) and phoning home to check for updates. Because WordPress tries to do everything, its code tries to be everywhere all at once. This is why there are no “modules” in WordPress. There is one module: all of it.

A side effect of this monolith is that none of the tools accomplish everything you might expect. A drawback: because WordPress knows it might have to do many tasks in the lifecycle of one request, it eagerly loads most of its codebase before doing any worthwhile work. Just “loading WordPress” can take more than 1 second on a server without opcache enabled properly. The cost of loading WordPress at all is high. Another reason it has to load so much code is that the plugin ecosystem has been trained to expect every possible API to be in scope, regardless of what is ever used. This is the opposite of service discovery and modularity.

The reason I bring this up: microservices are just abstractions to hide the implementation details of fetching data from resources. The WordPress REST API, at its base, is just grabbing data from the database for you, at the cost of loading all of WordPress and adding network latency. This is why it is not a good solution for providing data to yourself over HTTP, but a necessary solution for providing your data to another site over HTTP. Read-only data is highly-cacheable, and eventual consistency is probably close enough for jazz in the cache layer.

Wait, what cache layer? Ah, right. There is no HTTP cache in WordPress. And there certainly is no baked-in cache for served responses. wp-content/advanced-cache.php + WP_CACHE allows you another opportunity to bypass WordPress and serve full responses from the cache, but I haven’t tried this with Batcache. If you are even considering being a provider of data to another site and want to remain highly-available, I would look at something like Varnish or Fastly (which is basically Varnish).

A primer for introducing Doctrine

There are several reasons that I need to interact with custom tables on the network of international sites I manage at the Times. Media is a global concept – one set of tables is accessed by every site in the network. Each site has its own set of metadata, to store translations. I want to be able to read the data and have it cached automatically. I want to use an ORM-like API that invalidates my cache for me. I want an API to generate SELECT statements, and I want queries to automatically prime the cache, so that when I do:

$db->query('EXPENSIVE QUERY that will cache itself (also contains item #1)!');

// already in the cache

$db->find(1);

All of this exists in Doctrine. Doctrine is an ORM. Doctrine is powerful. I started out writing my own custom code to generate queries and cache them. I caused bugs. I wrote some unit tests. I found more bugs. After enough headaches, I decided to use an open source tool, widely accepted in the PHP universe as a standard. Rather than having to write unit tests for the APIs themselves, I could focus on using the APIs and write tests for our actual implementation.

-

WordPress is missing an API for SELECT queries

Your first thought might be: “phooey! we got WP_Query!” Indeed we do. But what about tables that are not named wp_posts… that’s what I thought. There are a myriad of valid reasons that a developer needs to create and interact with a custom database table, tables, or an entirely separate schema. We need to give ourselves permission to break out of the mental prison that might be preventing us from using better tools to accomplish tasks that are not supported by WP out of the box. We are going to talk about Doctrine, but first we are going to talk about Symfony.

-

Symfony

Symfony is the platonic ideal for a modular PHP framework. This is, of course, debatable, but it is probably the best thing we have in the PHP universe. Symfony, through its module ecosystem, attempts to solve specific problems in discrete ways. You do not have to use all of Symfony, because its parts are isolated modules. You can even opt to just use a lighter framework, called Silex, that gives you a bundle of powerful tools with Rails/Express-like app routing. It isn’t a CMS, but it does make prototyping apps built in PHP easy, and it makes you realize that WP doesn’t attempt to solve technical challenges in the same way.

-

Composer

You can use Composer whenever you want, with any framework or codebase, without Composer being aware of all parts of your codebase, and without Composer knowing which framework you are using.

composer.json is package.json for PHP. Most importantly, Composer will autoload all of your classes for you. require_once 'WP_The_New_Class_I_added.php4' is an eyesore and unnecessary. If you have noticed, WP loads all of its library code in wp-settings.php, whether or not you are ever going to use it. This is bad, so bad. Remember: without a tuned opcache, just loading all of these files can take upwards of 1 second. Bad, so so bad. Loading classes on-demand is the way to go, especially when much of your code only initializes to *maybe* run on a special admin screen or the like.

Here is how complicated autoloading with Composer is – in wp-config.php, please add:

require_once 'vendor/autoload.php';

I need a nap.

-

Pimple

Pimple is the Dependency Injection Container (sometimes labeled without irony as a DIC), also written by Fabien. A DI container allows us to lazy-load resources at runtime, while also keeping their implementation details opaque. The benefit is that we can more simply mock these resources, or swap out the implementation of them, without changing the details of how they are referenced. It also allows us to group functionality in a sane way, and more cleanly expose a suite of services to our overall app container. Finally, it makes it possible to have a central location for importing libraries and declaring services based on them.
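To show the lazy-loading idea without pulling in any dependency, here is a deliberately tiny container. To be clear, this is not Pimple and not our code: TinyContainer is a made-up class that only imitates the “register a closure, build the service on first access” behavior.

```php
<?php
// A deliberately tiny container, NOT Pimple itself: it only shows the idea
// of registering closures and resolving each service once, on first use.
class TinyContainer implements ArrayAccess {
    private $factories = [];
    private $resolved  = [];

    public function offsetSet( $id, $factory ): void {
        $this->factories[ $id ] = $factory;
        unset( $this->resolved[ $id ] );
    }

    public function offsetExists( $id ): bool {
        return isset( $this->factories[ $id ] );
    }

    public function offsetUnset( $id ): void {
        unset( $this->factories[ $id ], $this->resolved[ $id ] );
    }

    #[\ReturnTypeWillChange]
    public function offsetGet( $id ) {
        if ( ! array_key_exists( $id, $this->resolved ) ) {
            if ( ! isset( $this->factories[ $id ] ) ) {
                throw new InvalidArgumentException( "Unknown service: $id" );
            }
            // The factory receives the container so it can pull in
            // its own dependencies, which are resolved lazily as well.
            $this->resolved[ $id ] = $this->factories[ $id ]( $this );
        }
        return $this->resolved[ $id ];
    }
}

$app   = new TinyContainer();
$built = 0;

$app['db.host'] = function () {
    return 'localhost';
};
$app['db'] = function ( $app ) use ( &$built ) {
    $built++; // an expensive connection would be opened here
    return (object) [ 'host' => $app['db.host'] ];
};

$before = $built;     // nothing has been built yet
$db1    = $app['db']; // built now, on first access
$db2    = $app['db']; // same instance, factory is not called again
```

Swapping the 'db' closure for one that returns a mock is all it takes to change the implementation in a test, and no caller has to know.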

A container is an instance of Pimple, populated by service providers:

namespace NYT;

use Pimple\Container;

class App extends Container {}

// and then later...
$app = new App();
$app->register( new AppProvider() );
$app->register( new Cache\Provider() );
$app->register( new Database\Provider() );
$app->register( new Symfony\Provider() );
$app->register( new AWS\Provider() );

Not to scare you, and this is perhaps beyond the scope of this post, but here is what our Database provider looks like:

namespace NYT\Database;

use Pimple\Container;
use Pimple\ServiceProviderInterface;
use Doctrine\ORM\EntityManager;
use Doctrine\ORM\Configuration;
use Doctrine\Common\Persistence\Mapping\Driver\StaticPHPDriver;
use Doctrine\DBAL\Connection;
use NYT\Database\{Driver,SQLLogger};

class Provider implements ServiceProviderInterface {
    public function register( Container $app ) {
        $app['doctrine.metadata.driver'] = function () {
            return new StaticPHPDriver( [
                __SRC__ . '/lib/php/Entity/',
                __SRC__ . '/wp-content/plugins/nyt-wp-bylines/php/Entity/',
                __SRC__ . '/wp-content/plugins/nyt-wp-media/php/Entity/',
            ] );
        };

        $app['doctrine.config'] = function ( $app ) {
            $config = new Configuration();
            $config->setProxyDir( __SRC__ . '/lib/php/Proxy' );
            $config->setProxyNamespace( 'NYT\Proxy' );
            $config->setAutoGenerateProxyClasses( false );
            $config->setMetadataDriverImpl( $app['doctrine.metadata.driver'] );
            $config->setMetadataCacheImpl( $app['cache.array'] );

            $cacheDriver = $app['cache.chain'];
            $config->setQueryCacheImpl( $cacheDriver );
            $config->setResultCacheImpl( $cacheDriver );
            $config->setHydrationCacheImpl( $cacheDriver );

            // this has to be on, only thing that caches Entities properly
            $config->setSecondLevelCacheEnabled( true );
            $config->getSecondLevelCacheConfiguration()->setCacheFactory( $app['cache.factory'] );

            if ( 'dev' === $app['env'] ) {
                $config->setSQLLogger( new SQLLogger() );
            }

            return $config;
        };

        $app['doctrine.nyt.driver'] = function () {
            // Driver calls DriverConnection, which sets the internal _conn prop
            // to the mysqli instance from wpdb
            return new Driver();
        };

        $app['db.host'] = function () {
            return getenv( 'DB_HOST' );
        };

        $app['db.user'] = function () {
            return getenv( 'DB_USER' );
        };

        $app['db.password'] = function () {
            return getenv( 'DB_PASSWORD' );
        };

        $app['db.name'] = function () {
            return getenv( 'DB_NAME' );
        };

        $app['doctrine.connection'] = function ( $app ) {
            return new Connection(
                // these credentials don't actually do anything
                [
                    'host' => $app['db.host'],
                    'user' => $app['db.user'],
                    'password' => $app['db.password'],
                ],
                $app['doctrine.nyt.driver'],
                $app['doctrine.config']
            );
        };

        $app['db'] = function ( $app ) {
            $conn = $app['doctrine.connection'];
            return EntityManager::create( $conn, $app['doctrine.config'], $conn->getEventManager() );
        };
    }
}

I am showing you this because it will be relevant in the next 2 sections.

-

wp-content/db.php

WordPress allows you to override the default database implementation with your own. Rather than overriding wpdb (this is a class, yet eschews all naming conventions), we are going to instantiate it like normal, but leak the actual database resource in our override file so that we can share the connection with our Doctrine code. This gives us the benefit of using the same connection on both sides. In true WP fashion, $dbh is a protected member, and should not be publicly readable, but a loophole in the visibility scheme for the class allows us to anyway – for backwards compatibility, members that were previously initialized with var (whadup PHP4) are readable through the magic methods that decorate the class.

Here is what we do in the DB drop-in:

$app = NYT\getApp();
$wpdb = new wpdb( $app['db.user'], $app['db.password'], $app['db.name'], $app['db.host'] );
$app['dbh'] = $wpdb->dbh;
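If the visibility loophole sounds odd, the pattern behind it can be reproduced in a few lines. LegacyDb below is a hypothetical class written for this illustration, not the real wpdb source; it only shows how a magic __get() makes a protected member readable from outside.

```php
<?php
// Hypothetical class showing the pattern: a protected member that is still
// readable from outside because __get() forwards the access.
class LegacyDb {
    protected $dbh;

    public function __construct( $handle ) {
        $this->dbh = $handle;
    }

    // Kept "for backwards compatibility": any inaccessible property that
    // exists on the object can be read through this magic method.
    public function __get( $name ) {
        return property_exists( $this, $name ) ? $this->$name : null;
    }
}

$handle = new stdClass(); // stands in for the mysqli connection
$db     = new LegacyDb( $handle );
$shared = $db->dbh;       // allowed, despite "protected"
```

The value handed back is the same object the class holds internally, which is what makes sharing one connection between two libraries possible.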

The container allows us to keep our credentials opaque, and allows us to switch out *where* our credentials originate without having to change the code here. The Provider class exposes “services” for our app to use that don’t get initialized until we actually use them. Wouldn’t this be nice throughout 100% of WordPress? The answer is yes, but as long as PHP 5.2 support is a thing, Pimple cannot be used, as it requires closures (the = function () {} bit).

-

WP_Object_Cache

Doctrine uses a cache as well, so it would be great if we could not only share the database connection, but also share the Memcached instance that is powering the WP_Object_Cache and the internal Doctrine cache.

Here’s our cache Provider:

namespace NYT\Cache;

use Pimple\Container;
use Pimple\ServiceProviderInterface;
use Doctrine\ORM\Cache\DefaultCacheFactory;
use Doctrine\ORM\Cache\RegionsConfiguration;
use Doctrine\Common\Cache\{ArrayCache,ChainCache,MemcachedCache};
use \Memcached;

class Provider implements ServiceProviderInterface {
    public function register( Container $app ) {
        $app['cache.array'] = function () {
            return new ArrayCache();
        };

        $app['memcached.servers'] = function () {
            return [
                [ getenv( 'MEMCACHED_HOST' ), '11211' ],
            ];
        };

        $app['memcached'] = function ( $app ) {
            $memcached = new Memcached();
            foreach ( $app['memcached.servers'] as $server ) {
                list( $node, $port ) = $server;
                $memcached->addServer(
                    // host
                    $node,
                    // port
                    $port,
                    // bucket weight
                    1
                );
            }
            return $memcached;
        };

        $app['cache.memcached'] = function ( $app ) {
            $cache = new MemcachedCache();
            $cache->setMemcached( $app['memcached'] );
            return $cache;
        };

        $app['cache.chain'] = function ( $app ) {
            return new ChainCache( [ $app['cache.array'], $app['cache.memcached'] ] );
        };

        $app['cache.regions.config'] = function () {
            return new RegionsConfiguration();
        };

        $app['cache.factory'] = function ( $app ) {
            return new DefaultCacheFactory( $app['cache.regions.config'], $app['cache.chain'] );
        };
    }
}

WP_Object_Cache is an idea more than its actual implementation details, and with so many engines for it in the wild, the easiest way to ensure we don’t blow up the universe is to have our cache class implement an interface. This will ensure that our method signatures match the reference implementation, and that our class contains all of the expected methods.

namespace NYT\Cache;

interface WPCacheInterface {
    public function key( $key, string $group = 'default' );
    public function get( $id, string $group = 'default' );
    public function set( $id, $data, string $group = 'default', int $expire = 0 );
    public function add( $id, $data, string $group = 'default', int $expire = 0 );
    public function replace( $id, $data, string $group = 'default', int $expire = 0 );
    public function delete( $id, string $group = 'default' );
    public function incr( $id, int $n = 1, string $group = 'default' );
    public function decr( $id, int $n = 1, string $group = 'default' );
    public function flush();
    public function close();
    public function switch_to_blog( int $blog_id );
    public function add_global_groups( $groups );
    public function add_non_persistent_groups( $groups );
}

Here’s our custom object cache that uses the Doctrine cache internals instead of always hitting Memcached directly:

namespace NYT\Cache;

use NYT\App;

class ObjectCache implements WPCacheInterface {
    protected $memcached;
    protected $cache;
    protected $arrayCache;
    protected $globalPrefix;
    protected $sitePrefix;
    protected $tablePrefix;
    protected $global_groups = [];
    protected $no_mc_groups = [];

    public function __construct( App $app ) {
        $this->memcached = $app['memcached'];
        $this->cache = $app['cache.chain'];
        $this->arrayCache = $app['cache.array'];
        $this->tablePrefix = $app['table_prefix'];
        $this->globalPrefix = is_multisite() ? '' : $this->tablePrefix;
        $this->sitePrefix = ( is_multisite() ? $app['blog_id'] : $this->tablePrefix ) . ':';
    }

    public function key( $key, string $group = 'default' ): string {
        if ( false !== array_search( $group, $this->global_groups ) ) {
            $prefix = $this->globalPrefix;
        } else {
            $prefix = $this->sitePrefix;
        }
        return preg_replace( '/\s+/', '', WP_CACHE_KEY_SALT . "$prefix$group:$key" );
    }

    /**
     * @param int|string $id
     * @param string $group
     * @return mixed
     */
    public function get( $id, string $group = 'default' ) {
        $key = $this->key( $id, $group );
        $value = false;
        if ( $this->arrayCache->contains( $key ) && in_array( $group, $this->no_mc_groups ) ) {
            $value = $this->arrayCache->fetch( $key );
        } elseif ( in_array( $group, $this->no_mc_groups ) ) {
            $this->arrayCache->save( $key, $value );
        } else {
            $value = $this->cache->fetch( $key );
        }
        return $value;
    }

    public function set( $id, $data, string $group = 'default', int $expire = 0 ): bool {
        $key = $this->key( $id, $group );
        return $this->cache->save( $key, $data, $expire );
    }

    public function add( $id, $data, string $group = 'default', int $expire = 0 ): bool {
        $key = $this->key( $id, $group );
        if ( in_array( $group, $this->no_mc_groups ) ) {
            $this->arrayCache->save( $key, $data );
            return true;
        } elseif ( $this->arrayCache->contains( $key ) && false !== $this->arrayCache->fetch( $key ) ) {
            return false;
        }
        return $this->cache->save( $key, $data, $expire );
    }

    public function replace( $id, $data, string $group = 'default', int $expire = 0 ): bool {
        $key = $this->key( $id, $group );
        $result = $this->memcached->replace( $key, $data, $expire );
        if ( false !== $result ) {
            $this->arrayCache->save( $key, $data );
        }
        return $result;
    }

    public function delete( $id, string $group = 'default' ): bool {
        $key = $this->key( $id, $group );
        return $this->cache->delete( $key );
    }

    public function incr( $id, int $n = 1, string $group = 'default' ): bool {
        $key = $this->key( $id, $group );
        $incr = $this->memcached->increment( $key, $n );
        return $this->cache->save( $key, $incr );
    }

    public function decr( $id, int $n = 1, string $group = 'default' ): bool {
        $key = $this->key( $id, $group );
        $decr = $this->memcached->decrement( $key, $n );
        return $this->cache->save( $key, $decr );
    }

    public function flush(): bool {
        if ( is_multisite() ) {
            return true;
        }
        return $this->cache->flush();
    }

    public function close() {
        $this->memcached->quit();
    }

    public function switch_to_blog( int $blog_id ) {
        $this->sitePrefix = ( is_multisite() ? $blog_id : $this->tablePrefix ) . ':';
    }

    public function add_global_groups( $groups ) {
        if ( ! is_array( $groups ) ) {
            $groups = [ $groups ];
        }
        $this->global_groups = array_merge( $this->global_groups, $groups );
        $this->global_groups = array_unique( $this->global_groups );
    }

    public function add_non_persistent_groups( $groups ) {
        if ( ! is_array( $groups ) ) {
            $groups = [ $groups ];
        }
        $this->no_mc_groups = array_merge( $this->no_mc_groups, $groups );
        $this->no_mc_groups = array_unique( $this->no_mc_groups );
    }
}

object-cache.php is the PHP4-style function set that we all know and love:

use NYT\Cache\ObjectCache;
use function NYT\getApp;

function _wp_object_cache() {
    static $cache = null;
    if ( ! $cache ) {
        $app = getApp();
        $cache = new ObjectCache( $app );
    }
    return $cache;
}

function wp_cache_add( $key, $data, $group = '', $expire = 0 ) {
    return _wp_object_cache()->add( $key, $data, $group, $expire );
}

function wp_cache_incr( $key, $n = 1, $group = '' ) {
    return _wp_object_cache()->incr( $key, $n, $group );
}

function wp_cache_decr( $key, $n = 1, $group = '' ) {
    return _wp_object_cache()->decr( $key, $n, $group );
}

function wp_cache_close() {
    return _wp_object_cache()->close();
}

function wp_cache_delete( $key, $group = '' ) {
    return _wp_object_cache()->delete( $key, $group );
}

function wp_cache_flush() {
    return _wp_object_cache()->flush();
}

function wp_cache_get( $key, $group = '' ) {
    return _wp_object_cache()->get( $key, $group );
}

function wp_cache_init() {
    global $wp_object_cache;
    $wp_object_cache = _wp_object_cache();
}

function wp_cache_replace( $key, $data, $group = '', $expire = 0 ) {
    return _wp_object_cache()->replace( $key, $data, $group, $expire );
}

function wp_cache_set( $key, $data, $group = '', $expire = 0 ) {
    if ( defined( 'WP_INSTALLING' ) ) {
        return _wp_object_cache()->delete( $key, $group );
    }
    return _wp_object_cache()->set( $key, $data, $group, $expire );
}

function wp_cache_switch_to_blog( $blog_id ) {
    return _wp_object_cache()->switch_to_blog( $blog_id );
}

function wp_cache_add_global_groups( $groups ) {
    _wp_object_cache()->add_global_groups( $groups );
}

function wp_cache_add_non_persistent_groups( $groups ) {
    _wp_object_cache()->add_non_persistent_groups( $groups );
}

-

All of the above is boilerplate. Yes, it sucks, but once it’s there, you don’t really need to touch it again. I have included it in the post in case anyone wants to try some of this stuff out on their own stack. So, finally:

Show Me Doctrine!

This is an Entity – we will verbosely describe an Asset, but as you can probably guess, we get a kickass API for free by doing this:

namespace NYT\Media\Entity;

use Doctrine\ORM\Mapping\ClassMetadata as DoctrineMetadata;
use Symfony\Component\Validator\Mapping\ClassMetadata as ValidatorMetadata;
use Symfony\Component\Validator\Constraints as Assert;

class Asset {
    /**
     * @var int
     */
    protected $id;
    /**
     * @var int
     */
    protected $assetId;
    /**
     * @var string
     */
    protected $type;
    /**
     * @var string
     */
    protected $slug;
    /**
     * @var string
     */
    protected $modified;

    public function getId() {
        return $this->id;
    }

    public function getAssetId() {
        return $this->assetId;
    }

    public function setAssetId( $assetId ) {
        $this->assetId = $assetId;
    }

    public function getType() {
        return $this->type;
    }

    public function setType( $type ) {
        $this->type = $type;
    }

    public function getSlug() {
        return $this->slug;
    }

    public function setSlug( $slug ) {
        $this->slug = $slug;
    }

    public function getModified() {
        return $this->modified;
    }

    public function setModified( $modified ) {
        $this->modified = $modified;
    }

    public static function loadMetadata( DoctrineMetadata $metadata ) {
        $metadata->enableCache( [
            'usage' => $metadata::CACHE_USAGE_NONSTRICT_READ_WRITE,
            'region' => static::class
        ] );
        $metadata->setIdGeneratorType( $metadata::GENERATOR_TYPE_IDENTITY );
        $metadata->setPrimaryTable( [ 'name' => 'nyt_media' ] );
        $metadata->mapField( [
            'id' => true,
            'fieldName' => 'id',
            'type' => 'integer',
        ] );
        $metadata->mapField( [
            'fieldName' => 'assetId',
            'type' => 'integer',
            'columnName' => 'asset_id',
        ] );
        $metadata->mapField( [
            'fieldName' => 'type',
            'type' => 'string',
        ] );
        $metadata->mapField( [
            'fieldName' => 'slug',
            'type' => 'string',
        ] );
        $metadata->mapField( [
            'fieldName' => 'modified',
            'type' => 'string',
        ] );
    }

    public static function loadValidatorMetadata( ValidatorMetadata $metadata ) {
        $metadata->addGetterConstraints( 'assetId', [
            new Assert\NotBlank(),
        ] );
    }
}

Now that we have been exposed to some new nomenclature, and a bunch of frightening code, let’s look at what we now get. First we need a Media Provider:

namespace NYT\Media;

use NYT\{App,LogFactory};
use Pimple\Container;
use Pimple\ServiceProviderInterface;

class Provider implements ServiceProviderInterface {
    public function register( Container $app ) {
        .....
        $app['media.repo.asset'] = function ( $app ) {
            return $app['db']->getRepository( Entity\Asset::class );
        };
        $app['media.repo.post_media'] = function ( $app ) {
            return $app['db']->getRepository( Entity\PostMedia::class );
        };
    }
}

Now we want to use this API to do some powerful stuff:

// lazy-load repository class for media assets
$repo = $app['media.repo.asset'];
// find one item by primary key, will prime cache
$asset = $repo->find( $id );

We can prime the cache whenever we want by requesting assets filtered by params we pass to ->findBy( $params ). I hope you’ve already figured it out, but we didn’t have to write any of this magic code, it is exposed automatically. Any call to ->findBy() will return from the cache or generate a SQL query, cache the resulting IDs by a hash, and normalize the cache by creating an entry for each found item. Subsequent queries have access to the same normalized cache, so queries can be optimized, prime each other, and invalidate entries when mutations occur.

    // pull a bunch of ids from somewhere and get a bunch of assets at once
    // will prime cache
    $repo->findBy( [ 'id' => $ids ] );

    // After priming the cache, sanity check items
    $assets = array_reduce( $ids, function ( $carry, $id ) use ( $repo ) {
        // all items will be read from the cache
        $asset = $repo->find( $id );
        if ( $asset ) {
            $carry[] = $asset;
        }
        return $carry;
    }, [] );

    $repo->findBy( [ 'slug' => $oneSlug ] );
    $repo->findBy( [ 'slug' => $manySlugs ] );
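To make the "normalized cache" idea concrete, here is a toy, in-memory sketch of the behavior described above. This is not Doctrine's implementation: `ToyRepository`, the `$rows` array standing in for the database table, and the `$queries` counter are all hypothetical names invented for illustration, and invalidation on mutation is left out entirely.

```php
<?php
// Toy illustration only: NOT Doctrine's code. All names are hypothetical.
final class ToyRepository {
    /** @var array rows keyed by primary key; stands in for the table */
    private $rows;

    /** @var array normalized cache: one entry per item, keyed by ID */
    private $entityCache = [];

    /** @var array query cache: hash of the criteria => list of matching IDs */
    private $queryCache = [];

    /** @var int number of simulated database queries */
    public $queries = 0;

    public function __construct( array $rows ) {
        $this->rows = $rows;
    }

    // Primary key lookup: served from the per-item cache when possible.
    public function find( int $id ) {
        if ( isset( $this->entityCache[ $id ] ) ) {
            return $this->entityCache[ $id ];
        }
        $this->queries++;
        if ( ! isset( $this->rows[ $id ] ) ) {
            return null;
        }
        return $this->entityCache[ $id ] = $this->rows[ $id ];
    }

    // Criteria lookup: caches the ID list by hash and primes one entry per item.
    public function findBy( array $criteria ): array {
        $hash = md5( serialize( $criteria ) );
        if ( ! isset( $this->queryCache[ $hash ] ) ) {
            $this->queries++;
            $ids = [];
            foreach ( $this->rows as $id => $row ) {
                if ( $this->matches( $row, $criteria ) ) {
                    $ids[]                    = $id;
                    $this->entityCache[ $id ] = $row;
                }
            }
            $this->queryCache[ $hash ] = $ids;
        }
        return array_map( function ( $id ) {
            return $this->entityCache[ $id ];
        }, $this->queryCache[ $hash ] );
    }

    // A scalar criterion means equality, an array means "IN (...)".
    private function matches( array $row, array $criteria ): bool {
        foreach ( $criteria as $field => $expected ) {
            if ( ! in_array( $row[ $field ] ?? null, (array) $expected, true ) ) {
                return false;
            }
        }
        return true;
    }
}
```

With three rows loaded, one `findBy( [ 'id' => [ 1, 2 ] ] )` bumps `$queries` once, and the following `find( 1 )` and `find( 2 )` leave the counter alone. Only `find( 3 )`, which was never primed, costs another simulated query.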

Here’s an example of a WP_Query-type request with 10 times more horsepower underneath:

    // get a page of assets filtered by params
    $assets = $repo->findBy(
        $params,
        [ 'id' => $opts['order'] ],
        $opts['perPage'],
        ( $opts['page'] - 1 ) * $opts['perPage']
    );
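The last argument in that call is the offset, and it is easy to be off by one. A minimal sketch, assuming page numbers start at 1 as the `$opts['page'] - 1` expression implies; `page_offset` is a hypothetical helper, not part of Doctrine:

```php
<?php
/**
 * Convert a 1-based page number into a row offset.
 * Pages below 1 are clamped to the first page (an assumption for safety).
 */
function page_offset( int $page, int $perPage ): int {
    return ( max( 1, $page ) - 1 ) * $perPage;
}
```

Page 1 with 20 items per page starts at row 0, and page 3 starts at row 40.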

With Doctrine, you never need to write SQL, and you certainly don’t need to write a Cache layer.

Lesson Learned

Let me explain a complex scenario where the cache priming in Doctrine turned a mess of a problem into an easy solution. The media assets used on our network of internationalized sites do not live in WordPress. WordPress stores references to the foreign IDs in a custom database table: nyt_media. We also store a few other pieces of identifying data, but only to support the List Table experience in the admin (we write custom List Tables, another weird nightmare of an API). The media items are referenced in posts via a [media] shortcode. Shortcodes have their detractors, but the Shortcode API, IMO, is a great way to store contextually-placed object references in freeform content.

The handler for the media shortcode does A LOT. For each shortcode, we need to request an asset from a web service over HTTP or read it from the cache. We need to read a row in the database to get the id to pass to the web service. We need to read site-specific metadata to get the translations for certain fields that will override the fields returned from the web service.
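The override step at the end of that paragraph can be sketched in a few lines. This is an illustration under assumptions, not our actual handler: `merge_asset_fields` and the field names in the usage note are hypothetical, and skipping empty translations is my own guess at sensible behavior.

```php
<?php
/**
 * Overlay site-specific translations on top of the fields returned by the
 * web service. Empty translations are ignored so they never blank out a
 * value that the service did provide (an assumption, see above).
 */
function merge_asset_fields( array $serviceFields, array $siteMeta ): array {
    $overrides = array_filter( $siteMeta, function ( $value ) {
        return $value !== null && $value !== '';
    } );

    // array_replace keeps the key order of the first array and lets
    // later arrays win on matching keys.
    return array_replace( $serviceFields, $overrides );
}
```

Given service fields `[ 'headline' => 'Hello', 'credit' => 'NYT' ]` and site metadata `[ 'headline' => 'Hola', 'credit' => '' ]`, the headline is translated and the credit is left untouched.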

To review, for each item [media id="8675309"]:

1. Go to the database, get the nyt_media row by primary key (8675309)

2. Request the asset from a web service, using the asset_id returned by the row

3. Get the metadata from nyt_2_mediameta by nyt_media_id (8675309)

4. Don’t actually make network requests in the shortcode handler itself

5. Read all of these values from primed caches

Shortcodes get parsed when the 'the_content' filter runs. To get ahead of that, we hook into 'template_redirect', which happens before rendering. We match all of the shortcodes in the content for every post in the request – this is more than just the main loop, this could also be Related Content modules and the like. We build a list of all of the unique IDs that will need data.
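Here is a rough sketch of the ID-collecting step. It assumes a deliberately simplified pattern that only matches a numeric `id` attribute in double quotes (WordPress's own shortcode parsing is more permissive), and `collect_media_ids` is a hypothetical name. In a real theme or plugin you would call something like it from a 'template_redirect' callback with the content of every post in the request.

```php
<?php
/**
 * Collect the unique media IDs referenced by [media id="..."] shortcodes.
 *
 * @param string[] $contents post content for every post in the request
 * @return int[] unique IDs in first-seen order
 */
function collect_media_ids( array $contents ): array {
    $ids = [];
    foreach ( $contents as $content ) {
        // Simplified pattern (assumption): numeric id in double quotes.
        if ( preg_match_all( '/\[media\b[^\]]*\bid="(\d+)"[^\]]*\]/', $content, $matches ) ) {
            foreach ( $matches[1] as $id ) {
                $ids[ (int) $id ] = true; // array keys de-duplicate for us
            }
        }
    }
    return array_keys( $ids );
}
```

The same ID appearing in the main loop and in a Related Content module only shows up once in the result, which is exactly what the batch request wants.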

Once we have all of the IDs, we look in the cache for existing entries. If we parsed 85 IDs, and 50 are already in the cache, we will only request the missing 35 items. It is absolutely crucial for the HTTP portion that we generate a batch query and that no single requests leak out. We are not going to make requests serially (one at a time), our server would explode and time out. Because we are doing such amazing work so far, and we are so smart about HTTP, we should be fine, right?
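The "only request what is missing, and request it in one batch" rule looks roughly like this. It is a sketch under assumptions: the cache is a plain array passed by reference instead of Memcached, and `$fetchBatch` is a hypothetical callable standing in for the single batch HTTP call to the web service.

```php
<?php
/**
 * Return the assets for $ids, issuing at most one batch request for the
 * IDs that the cache does not already hold.
 *
 * @param int[]    $ids        every ID parsed from the request
 * @param array    $cache      id => asset, stand-in for a real cache
 * @param callable $fetchBatch hypothetical: takes int[], returns id => asset
 */
function load_assets( array $ids, array &$cache, callable $fetchBatch ): array {
    $ids     = array_values( array_unique( $ids ) );
    $missing = array_values( array_diff( $ids, array_keys( $cache ) ) );

    if ( $missing ) {
        // One batch call for everything missing, never one call per ID.
        foreach ( $fetchBatch( $missing ) as $id => $asset ) {
            $cache[ $id ] = $asset;
        }
    }

    return array_intersect_key( $cache, array_flip( $ids ) );
}
```

With 85 parsed IDs and 50 already cached, `$fetchBatch` is called exactly once with the other 35. If everything is cached, it is not called at all.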

Let us open our hymnals to my tweet-storm from Friday:

1) spent a full day optimizing retrieval of data from the database over the lifecycle of a request – priming the cache to avoid row lookups

— Scott Taylor ⛵️ (@wonderboymusic) March 24, 2017

I assumed all primary key lookups would be fast and free. Not so.

Let’s look at these lines:

    // ids might be a huge array
    $assets = array_reduce( $ids, function ( $carry, $id ) use ( $repo ) {
        // all items will not be read from the cache
        // we did not prime the cache yet
        $asset = $repo->find( $id );
        if ( $asset ) {
            $carry[] = $asset;
        }
        return $carry;
    }, [] );

    // later on in the request
    // we request the bylines (authors) for all of the above posts

There were a ton of posts, and each post has several media assets. We also store bylines in a separate table. Each post was individually querying for its bylines. The cache was not primed.

When the cache is primed, this request has a chance of being fast-ish, but we are still hitting Memcached a million times (I haven’t gotten around to batch-requesting the cache lookups yet).

The Solution

Thank you, Doctrine. Once I realized all of the cache misses stampeding my way, I added calls to prime both caches.

    // media assets (shortcodes)
    // many many single lookups reduced to one
    $repo->findBy( [ 'id' => $ids ] );

Remember, WP_Query always makes an expensive query. Doctrine has a query cache, so this call might result in zero database queries. When it does make a query, it will prime the cache in a normalized fashion that all queries can share. Winning.

For all of those bylines, generating queries in Doctrine remains simple. Once I had a list of master IDs:

    // eager load bylines for all related posts (there were many of these)
    $app['bylines.repo.post_byline']->findBy(
        [
            'postId' => array_unique( $ids ),
            'blogId' => $app['blog_id'],
        ]
    );

Conclusion

Performance is hard. Along the way last week, I realized that the default Docker image for PHP-FPM does not enable the opcache for PHP 7. Without it, wp-settings.php can take more than 1 second to load. I turned on the opcache. I will prime my caches from now on. I will not take primary key lookups for granted. I will consistently profile my app. I will use power tools when necessary. I will continue to look outside of the box and at the PHP universe as a whole.
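If you want to avoid being surprised by a missing opcache the way I was, a quick runtime check is cheap. A minimal sketch: `opcache_is_enabled` is a hypothetical helper, and it only reads the standard `opcache.enable` and `opcache.enable_cli` ini settings.

```php
<?php
/**
 * Report whether the opcache extension is loaded and switched on
 * for the current SAPI (the CLI needs opcache.enable_cli as well).
 */
function opcache_is_enabled(): bool {
    if ( ! extension_loaded( 'Zend OPcache' ) ) {
        return false;
    }

    $enabled = filter_var( ini_get( 'opcache.enable' ), FILTER_VALIDATE_BOOLEAN );
    if ( PHP_SAPI === 'cli' ) {
        $enabled = $enabled && filter_var( ini_get( 'opcache.enable_cli' ), FILTER_VALIDATE_BOOLEAN );
    }

    return $enabled;
}
```

Logging the result once at boot, or exposing it on a health check, would have saved me a day.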

Final note: I don’t even work on PHP that much these days. I have been full throttle Node / React / Relay since the end of last year. All of these same concepts apply to the Node universe. Your network will strangle you. Your code cannot overcome serial network requests. Your cache must be smart. We are humans, and this is harder than it looks.

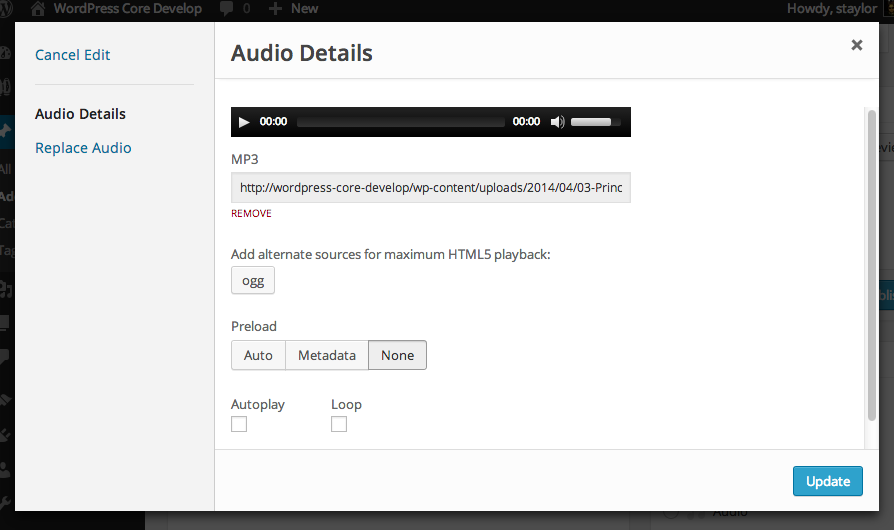

TinyMCE is the visual editor in WordPress. Behind the scenes, the visual editor is an iframe that contains markup. In 3.9, gcorne and azaozz did the mind-bending work of making it easier to render “MCE views” – or content that had connection to the outside world of the visual iframe via a TinyMCE plugin and mce-view.js. A lot of the work I did in building previews for audio and video inside of the editor was implementing the features and APIs they created. gcorne showed us the possibilities by making galleries appear in the visual editor. Everything else followed his lead.
