AWS Glue is a fully managed ETL service that makes it easy to move data between your data stores. It simplifies and automates the difficult, time-consuming tasks of data discovery, conversion, mapping, and job scheduling. An easy-to-use console guides you through the process of moving your data: it helps you understand your data sources, prepare the data for analytics, and load it reliably from data sources to destinations.

AWS Glue is integrated with Amazon S3, Amazon RDS, and Amazon Redshift, and can connect to any JDBC-compliant data store. AWS Glue automatically crawls your data sources, identifies data formats, and then suggests schemas and transformations, so you don’t have to spend time hand-coding data flows. You can then edit these transformations, if necessary, using the tools and technologies you already know, such as Python, Spark, Git, and your favorite integrated development environment (IDE), and share them with other AWS Glue users. AWS Glue schedules your ETL jobs, then provisions and scales all the infrastructure required so that they run quickly and efficiently at any scale. There are no servers to manage, and you pay only for the resources consumed by your ETL jobs.

For the latest information about service availability, sign up here and we will keep you updated via email.

Step 1. Build Your Data Catalog

First, you use the AWS Management Console to register your data sources with AWS Glue. AWS Glue crawls your data sources and constructs a data catalog using pre-built classifiers for many popular source formats and data types, including JSON, CSV, Parquet, and more. You can also add your own classifiers or choose classifiers from the AWS Glue community to add to your crawls.
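To make the idea of classifier-driven crawling concrete, here is a minimal sketch in plain Python. It is not the AWS Glue API: the names `classify`, `crawl`, and the catalog layout are hypothetical, and the type inference only looks at the first record of each sample. It shows how a crawler might try a JSON classifier, then a CSV classifier, and record the resulting format and column types in a catalog entry.

```python
import csv
import io
import json

# Hypothetical mapping from Python types to catalog type names.
_JSON_TYPES = {int: "int", float: "double", str: "string", bool: "boolean"}


def _csv_rows(sample):
    """Parse a text sample as CSV and drop empty rows."""
    return [row for row in csv.reader(io.StringIO(sample)) if row]


def _column_type(value):
    """Infer a simple type name from a single CSV cell."""
    for caster, name in ((int, "int"), (float, "double")):
        try:
            caster(value)
            return name
        except ValueError:
            continue
    return "string"


def classify(sample):
    """Guess the format of a text sample: 'json', 'csv', or 'unknown'."""
    try:
        if isinstance(json.loads(sample), dict):
            return "json"
    except ValueError:
        pass
    rows = _csv_rows(sample)
    # Treat the sample as CSV only if it has a header plus data rows
    # and every row has the same number of columns (more than one).
    if len(rows) >= 2 and len({len(row) for row in rows}) == 1 and len(rows[0]) > 1:
        return "csv"
    return "unknown"


def crawl(sources):
    """Build a catalog: table name -> detected format and column types."""
    catalog = {}
    for name, sample in sources.items():
        fmt = classify(sample)
        columns = {}
        if fmt == "json":
            record = json.loads(sample)
            columns = {k: _JSON_TYPES.get(type(v), "string") for k, v in record.items()}
        elif fmt == "csv":
            header, first = _csv_rows(sample)[:2]
            columns = {col: _column_type(val) for col, val in zip(header, first)}
        catalog[name] = {"format": fmt, "columns": columns}
    return catalog
```

A custom classifier, in this sketch, would simply be another branch in `classify` that recognizes an additional format before the function falls through to `'unknown'`.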

Step 2. Generate and Edit Transformations

Next, select a data source and target, and AWS Glue will generate Python code to extract data from the source, transform the data to match the target schema, and load it into the target. The auto-generated code handles common error cases, such as bad data or hardware failures. You can edit this code using your favorite IDE and test it with your own sample data. You can also browse code shared by other AWS Glue users and pull it into your jobs.
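The shape of such an extract, transform, and load script can be sketched in plain Python. This is an illustration only, not the code AWS Glue generates: `apply_mapping`, `run_job`, and the `(source column, target column, cast)` mapping format are assumptions made for this example. The sketch renames and casts each field to match a target schema, and sets aside records with bad data instead of failing the whole job.

```python
def apply_mapping(records, mappings):
    """Reshape records to the target schema.

    mappings is a list of (source_column, target_column, cast) tuples.
    Records that are missing a column or fail a cast are collected
    separately so one bad row does not stop the job.
    """
    good, bad = [], []
    for record in records:
        try:
            good.append({target: cast(record[source]) for source, target, cast in mappings})
        except (KeyError, TypeError, ValueError) as exc:
            bad.append({"record": record, "error": repr(exc)})
    return good, bad


def run_job(extract, mappings, load):
    """Extract from the source, transform to the target schema, then load."""
    good, bad = apply_mapping(extract(), mappings)
    load(good)
    return {"loaded": len(good), "rejected": len(bad)}


# Example with in-memory sample data standing in for a real source and target.
sample_source = [
    {"id": "1", "amt": "9.50"},
    {"id": "x", "amt": "1"},   # bad data: id is not an integer
    {"id": "3"},               # bad data: amt is missing
]
sample_target = []
stats = run_job(
    extract=lambda: sample_source,
    mappings=[("id", "order_id", int), ("amt", "amount", float)],
    load=sample_target.extend,
)
```

Passing the extract and load steps in as functions is what makes it practical to test the transformation against your own sample data before pointing the job at production stores.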

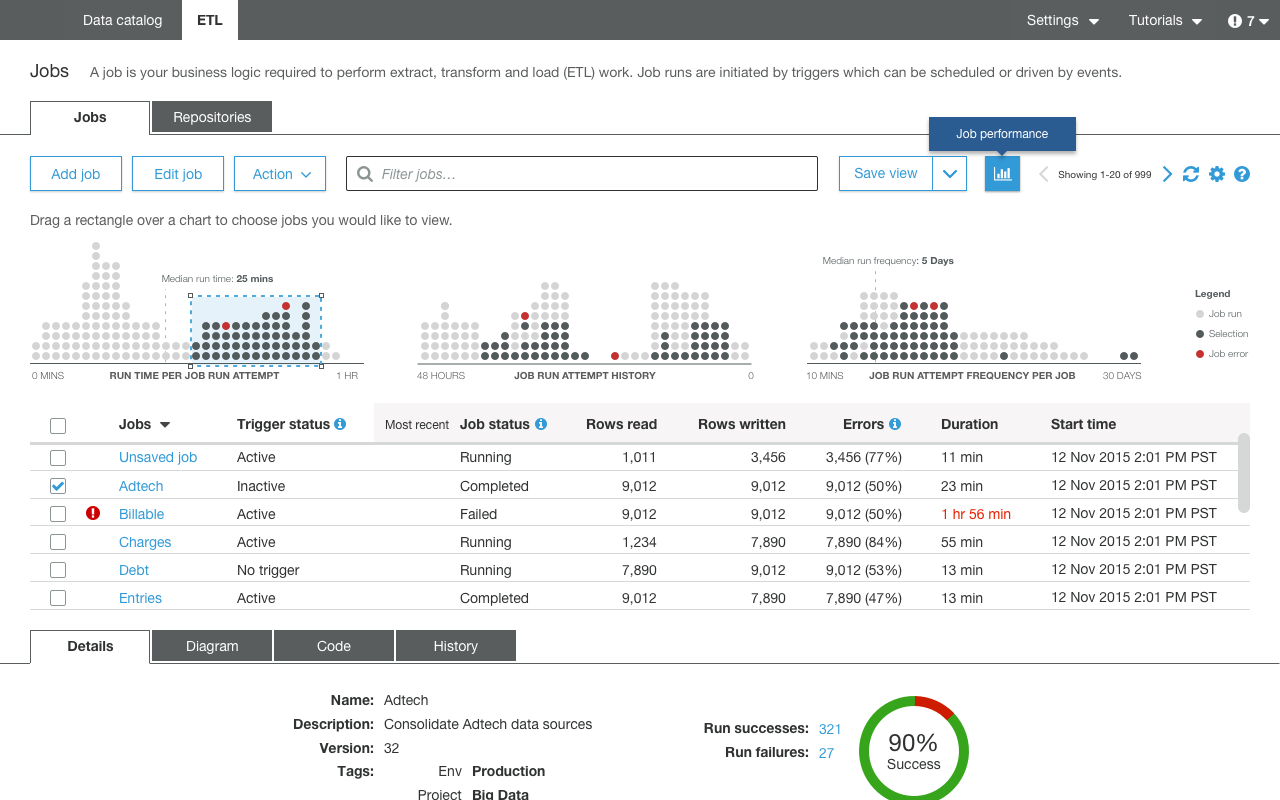

Step 3. Schedule and Run Your Jobs

Lastly, you can use AWS Glue’s flexible scheduler to run your flows on a recurring basis, in response to triggers, or even in response to AWS Lambda events. AWS Glue automatically distributes your ETL jobs across Apache Spark nodes, so that your ETL run times remain consistent as data volume grows. AWS Glue coordinates the execution of your jobs in the right sequence and automatically retries failed jobs. AWS Glue elastically scales the infrastructure required to complete your jobs on time and minimize costs.
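Running jobs in the right sequence and retrying failures can be illustrated with a small sketch. It is a simplification under stated assumptions, not how the AWS Glue scheduler is implemented: `run_flow`, its `depends_on` argument, and the `max_attempts` default are hypothetical, jobs run one at a time in a single process, and every name in `depends_on` is assumed to be a key in `jobs`. The sketch uses the standard library's `graphlib.TopologicalSorter` (Python 3.9 or later) to order jobs by their dependencies.

```python
from graphlib import TopologicalSorter


def run_flow(jobs, depends_on, max_attempts=3):
    """Run jobs in dependency order, retrying each failed job.

    jobs maps a job name to a zero-argument callable.
    depends_on maps a job name to the set of jobs that must finish first.
    Returns the number of attempts each job needed.
    """
    graph = {name: depends_on.get(name, set()) for name in jobs}
    attempts = {}
    for name in TopologicalSorter(graph).static_order():
        for attempt in range(1, max_attempts + 1):
            attempts[name] = attempt
            try:
                jobs[name]()
                break  # job succeeded, move on to the next one
            except Exception:
                if attempt == max_attempts:
                    raise  # out of retries: surface the failure
    return attempts
```

If a job still fails after its last attempt, the exception propagates and no downstream job runs, which keeps a broken upstream step from feeding incomplete data to the jobs that depend on it.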

Done.

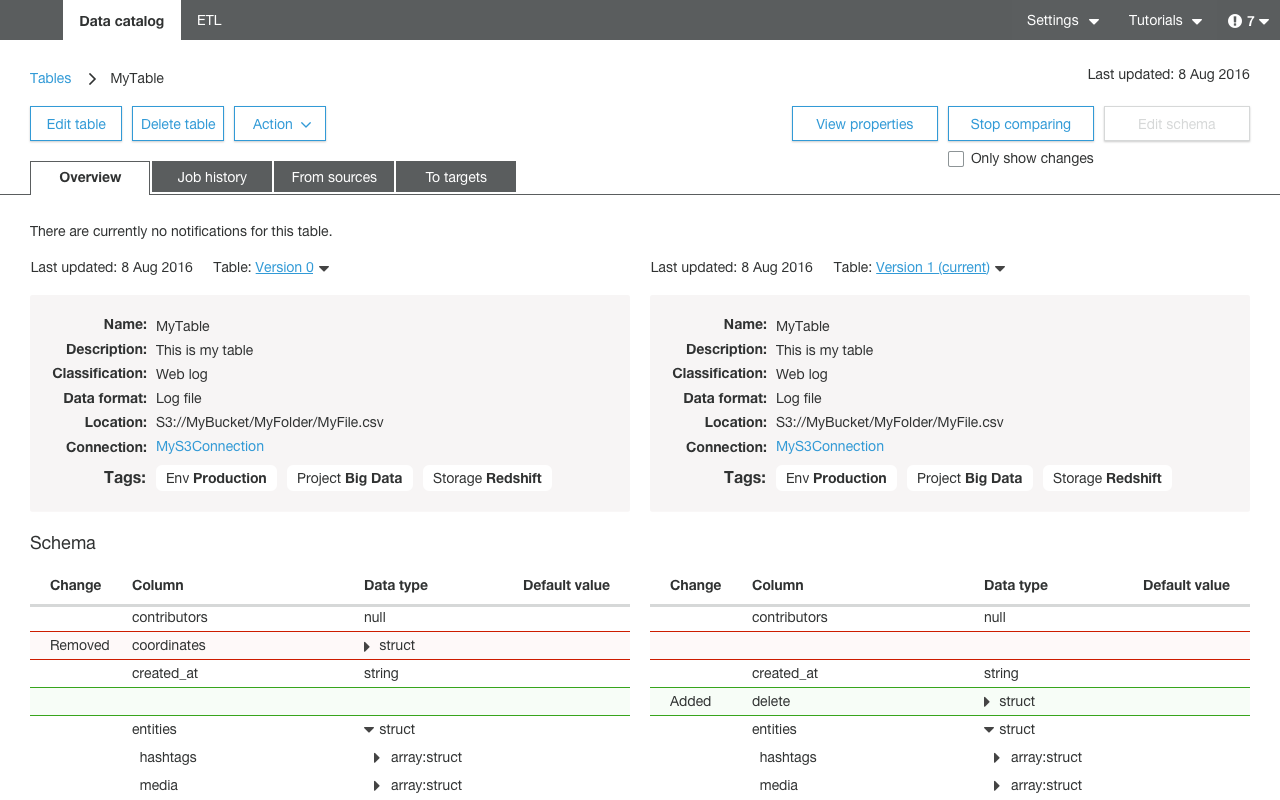

That’s it! Once the ETL jobs are in production, AWS Glue helps you track changes to metadata such as schema definitions and data formats, so you can keep your ETL jobs up to date.

AWS re:Invent is the largest gathering of the global AWS community. The conference allows you to gain a deeper knowledge of AWS services and learn best practices. We announced AWS Glue at re:Invent 2016. Watch the sessions below to learn more about AWS Glue and related analytics services, or check out the entire playlist of big data breakout sessions.

Sign up for the AWS Glue preview program here. Once approved, you can try the service for free.