I was awake soon after 5:30 yesterday morning. As I got to my computer, the EU referendum results weren’t confirmed, but it was looking certain that the country had voted (narrowly, but decisively) to leave the European Union. My thoughts during the day are nicely summed up by my tweets and retweets.

My initial reaction was anger.

Oh, you fuckers! "EU referendum: BBC forecasts UK votes to leave" – https://t.co/nc207O5PFB

— Dave Cross (@davorg) June 24, 2016

(Hmm… the downside of rolling news coverage – that story has changed dramatically since I first linked to it.)

A few minutes later I was slightly more coherent (and almost philosophical).

Waking up to find myself a stranger in my own country.

— Dave Cross (@davorg) June 24, 2016

Then the reality of the situation started to sink in.

Who's looking forward to months of Johnson, Gove and Farage looking smug? :/

— Dave Cross (@davorg) June 24, 2016

I tried to be positive.

Looking on the bright side, at least the NHS will get an extra £350m a week. When does that start?

— Dave Cross (@davorg) June 24, 2016

I was being sarcastic, of course. We’ll return to this subject later on.

I started to see life imitating art in a quite frightening way.

Can I suggest an appropriate hashtag – #EnglandPrevails – https://t.co/4a5OtIlCG0

— Dave Cross (@davorg) June 24, 2016

(And, yes, I know I should replace that picture with one of Boris Johnson.)

Nigel Farage is (and, apparently, always has been) a despicable man. So it should have come as no surprise that his victory speech was insulting and divisive.

Farage says it's a victory for "real people", "ordinary people" and "decent people". I'm clearly none of those – https://t.co/qiHszxiR4D

— Dave Cross (@davorg) June 24, 2016

I don’t mind not being considered ordinary, but I’m certain I’m real and I like to think I’m decent. Tom Coates inverted Farage’s phrase nicely.

I'm clearly one of the unreal, extraordinary and indecent people in whose name Farage did NOT win this referendum.

— Tom Coates (@tomcoates) June 24, 2016

When Cameron resigned, I immediately became worried about the fallout.

I'm no fan of Cameron, of course. But all the obvious replacements seem far worse :-/

— Dave Cross (@davorg) June 24, 2016

Really, if your best option is a man who stuck his penis into a pig’s mouth, then it must be clear that you’re in trouble.

Then I checked the stock market and realised that many of the Brexit supporters may have shot themselves in the foot.

FTSE in freefall. Which is a shame, because most Brexit supports will need their pensions long before the rest of us.

— Dave Cross (@davorg) June 24, 2016

A story in the FT illustrated the fall nicely (“nicely” isn’t really the right word!)

Is this what you voted for? "FTSE 250 drops 11.4%, worst drop ever" https://t.co/Ohb69YxB9u

— Dave Cross (@davorg) June 24, 2016

The markets bounced back a bit later in the day – but it was one of the most volatile days of trading in history.

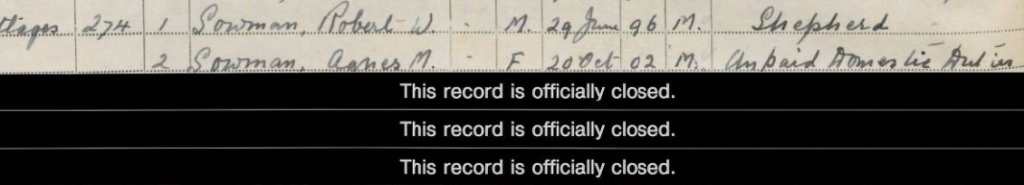

Fox News can, of course, always be relied on to get important facts wrong.

FOX News – a little confused! pic.twitter.com/K0n1QdOiBV

— Sam Kiley (@kileysky) June 24, 2016

Then I started to see data on the demographics of the vote, and it became obvious that it was mainly the older generations who had voted to leave the EU.

Depressingly, it's the baby-boomers (my age and older) voting for a future that is clearly not wanted by the people who have to live in it

— Dave Cross (@davorg) June 24, 2016

Can I just point out that it’s #NotAllBabyBoomers :-/

Remember the £350m a week that was going to be diverted to the NHS? Turns out that was a lie.

See! You just can't trust these people. https://t.co/PljtmCNmsh

— Dave Cross (@davorg) June 24, 2016

WATCH: @Nigel_Farage tells @susannareid100 it was a 'mistake' for Leave to claim there'd be £350M a week for NHS https://t.co/JNkl5k8IlK

— Good Morning Britain (@GMB) June 24, 2016

It was a lie on many fronts.

- It was a lie because the UK doesn’t send £350m a week to the EU

- It was a lie because it ignored the money that we get back from the EU

- It was a lie because any money saved was never going to be spent on the NHS

It was a lie that the Leave campaign were called out on many times, but they refused to retract it.

To be fair to Farage (and that’s not a phrase I ever expected to write) he wasn’t part of the official Leave campaign, so he wasn’t the right person to ask about this. But someone should certainly take Johnson or Gove to task over it.

Going back to the baby-boomers, I retweeted a friend’s innocent question.

How much money could we add back into the economy in the next 15 years if we declined to pay the boomers their pensions? Asking for a friend

— Simon Wistow (@deflatermouse) June 24, 2016

Then it started to look like Cameron might not be the only party leader to go in the fallout from the referendum.

That was quicker than I expected… https://t.co/VHFJmxKyYv

— Dave Cross (@davorg) June 24, 2016

Challenge to Corbyn- Margaret Hodge and Ann Coffey submit no confidence motion in Corbyn – could be voted on on Tuesday night

— Laura Kuenssberg (@bbclaurak) June 24, 2016

Incidentally, has anyone seen any evidence of the Lib Dems in this campaign? A couple of days ago I saw footage of Tim Farron in a crowd somewhere. It took me a few seconds to remember who he was, and then another minute or so to remember that he was the leader of the Lib Dems.

Euro-myths have always really annoyed me.

Billions off the stock market. The pound plunges. But at least we can have whatever shape bananas we want. Sounds like a bargain :-/

— Dave Cross (@davorg) June 24, 2016

More bad news from the City.

o/` This town…. / Is coming like a ghost town…. o/` https://t.co/LlV0FCDtfE

— Dave Cross (@davorg) June 24, 2016

BREAKING: Morgan Stanley has begun moving 2,000 investment banking staff from London to Dublin or Frankfurt: report

— Reuters Business (@ReutersBiz) June 24, 2016

I should point out that Morgan Stanley have denied the story. I guess time will tell who is telling the truth here.

By mid-afternoon, I was working on alternative plans.

Idly browsing property in Christchurch, NZ.

— Dave Cross (@davorg) June 24, 2016

A final thought struck me.

Haven't seen any news about @UKIP shutting up shop yet. Won't they all be going back to the Tories now?

— Dave Cross (@davorg) June 24, 2016

I mean, they were a single-issue party. And they’ve won that battle. Surely there’s no need for the party to exist any longer. They can’t seriously expect people to vote for them now (although UK voters are a very strange bunch). If they closed down, they could all go back to the Tories, and Farage and Carswell could get places in the new Johnson/Gove cabinet.

Oh, now I’m really depressed.