Abstract

Wikipedia guidelines for authors stress the importance of references, since only knowledge already published elsewhere should be inserted in the collaborative encyclopedia. However, most bibliographic references are not normalized: various citation styles are allowed, and few references point to books or peer-reviewed scientific papers marked with a unique identification number. This makes it difficult to analyze Wikipedia citations automatically, an analysis that would help address a number of scientific and practical issues. The purpose of this paper is twofold. First, we empirically verify the potential of automatic identification of incomplete references in Wikipedia with our machine-learning-based software. Second, we propose a normalization method applied to the identified result to group identical references. We evaluate the reference parsing performance of our system on the author and title fields, then provide Wikipedia citation statistics on the identified and normalized result.

1. Introduction

Wikipedia is widely used as a supplementary knowledge resource in many advanced Information Retrieval and Natural Language Processing applications [4], as well as by the most popular web search engines. Its unexpected reliability relies mainly on its guidelines for anonymous authors, which stress the importance of references, since only knowledge already published elsewhere should be inserted. There are two types of references in Wikipedia. On the one hand, there are numerous footnote-type references for fact checking, most of which are URLs pointing to external web pages. On the other hand, there is a restricted list of bibliographic references for further reading. Our assumption is that this short list characterizes the real knowledge background of each article, and our purpose is to extract the resulting citation index over the whole encyclopedia.

Since the late 1990s, automatic reference parsing has received much attention, both in citation indexing, its direct application, and in information retrieval and extraction, where cross-linking capabilities are used to improve performance. A practical example is CiteSeer [2], a public search engine and digital library for scientific and academic papers that was the first to implement automatic citation indexing. Citation-based information retrieval and extraction concentrate on taking advantage of recognized reference fields for other tasks such as scientific paper summarization [6], text retrieval [7] and document clustering [1].

The purpose of this paper is twofold. First, we empirically verify the potential of automatic identification of incomplete references in Wikipedia with our machine learning tool. Second, we propose a simple normalization method applied to the identified results to group identical references. We empirically show that our system is able to identify references successfully, then provide Wikipedia citation statistics on the identified and normalized result.

2. Reference parsing tool

In this section we briefly present our bibliographic reference parsing system. It was developed for an electronic article publishing platform in the social sciences and humanities (SSH) domain, and is expected to recognize the general bibliographic patterns of SSH in the platform's articles. However, as the citation styles in the journals are already quite diverse, we focused on constructing a general system that can deal with various citation types. The system currently handles two levels of reference data, classical bibliography and notes, and is based on a machine learning technique, Conditional Random Fields (CRFs), like other state-of-the-art tools [3, 5]. In brief, a reference is considered as a sequence of words, so that reference parsing is treated as a sequence labeling task.

2.1 Conditional Random Fields

A Conditional Random Field is a discriminative probabilistic model developed to complement the Hidden Markov Model (HMM). Unlike in an HMM, factors that depend only on the input data are not modeled; they are treated as constants with respect to the output [8]. This is a key characteristic of CRFs: the ability to include many user-defined input features in the model. The conditional distribution of a linear-chain CRF for a label sequence y given an input x is written as follows:

$$p(\mathbf{y} \mid \mathbf{x}) = \frac{1}{Z(\mathbf{x})} \exp\left( \sum_{t} \sum_{k=1}^{K} w_k f_k(y_t, y_{t-1}, \mathbf{x}_t) \right)$$

where $w = \{w_k\} \in \mathbb{R}^K$ is a parameter vector of weights for the feature functions, $\{f_k(y_t, y_{t-1}, \mathbf{x}_t)\}_{k=1}^{K}$ is a set of real-valued feature functions, and $Z(\mathbf{x})$ is a normalization function. Instead of the word identity $x_t$, a vector $\mathbf{x}_t$, which contains all components of $\mathbf{x}$ needed to compute features at time $t$, is substituted. A feature function can measure a particular characteristic of the input token $x_t$, such as whether the word is capitalized. It can also measure characteristics related to a state transition $y_{t-1} \rightarrow y_t$. Parameters are estimated by maximizing the conditional log-likelihood, $\ell(w) = \sum_{i=1}^{N} \log p(\mathbf{y}^{(i)} \mid \mathbf{x}^{(i)})$.
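As an illustration only, the following minimal sketch evaluates a linear-chain CRF on a toy reference: each labeling is scored by a weighted sum of feature functions and normalized by Z(x), computed here by brute-force enumeration. The label set, the three feature functions and the weight values are hypothetical and are not those of the system described in this paper; a real implementation would use dynamic programming rather than enumeration.

```python
import itertools
import math

LABELS = ["author", "title"]  # hypothetical two-label set for illustration

def features(y_t, y_prev, x_t):
    """Hypothetical feature functions f_k(y_t, y_{t-1}, x_t)."""
    return [
        1.0 if x_t[:1].isupper() and y_t == "author" else 0.0,  # capitalized token labeled author
        1.0 if y_prev == "author" and y_t == "title" else 0.0,  # transition author -> title
        1.0 if y_prev == y_t else 0.0,                          # label unchanged from previous token
    ]

WEIGHTS = [1.5, 0.8, 0.5]  # illustrative values for w, not learned from data

def score(y, x):
    """Unnormalized log-score: sum over positions t and features k of w_k * f_k."""
    total, prev = 0.0, None  # no previous label at the first position
    for y_t, x_t in zip(y, x):
        total += sum(w * f for w, f in zip(WEIGHTS, features(y_t, prev, x_t)))
        prev = y_t
    return total

def probability(y, x):
    """p(y | x): exponentiated score divided by Z(x), summed over all labelings."""
    z = sum(math.exp(score(list(c), x))
            for c in itertools.product(LABELS, repeat=len(x)))
    return math.exp(score(y, x)) / z

def log_likelihood(data):
    """Conditional log-likelihood over (x, y) training pairs."""
    return sum(math.log(probability(y, x)) for x, y in data)

tokens = ["Zolberg", "nation", "design"]
print(probability(["author", "title", "title"], tokens))
```

Training would adjust `WEIGHTS` to maximize `log_likelihood` on annotated references; that step is omitted here.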

2.2 System specification

Because the training data is extracted from our target journal platform, whose dominant language is French (90%), reference parsing works better on French data than on other languages. However, since the system is designed to capture language-independent properties of bibliographic data, it also gives comparable results on English. This is not surprising, since scientific papers in French often include several references in English.

The system is able to handle two different levels of bibliographic data, as mentioned above: traditional references under a heading, and footnotes that may include bibliographic information. Because of the diversity of citation styles in the training data, our system can identify reference fields in more detail than other tools, using more than 30 different labels. However, this full label set is optimized for the original target platform, so a simpler set of around 20 labels is selected for external references. In the simple label set, the 'author' field is detailed into 'surname' and 'forename', and the title field is divided into 'title' and 'booktitle'. When the system is used to identify unique references rather than to produce full annotations, only two fields are essential: author name and title.

Once the surname of the first author and the first several words of the title are correctly identified, we can extract the digital object identifier (DOI) of a specific reference via the Crossref site.
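A minimal sketch of such a lookup, assuming the public Crossref REST API and its `query.author` and `query.bibliographic` parameters, is given below. The paper does not say which Crossref interface was used, so the endpoint choice and the helper names `crossref_query_url` and `first_doi` are assumptions made for illustration. The sketch only builds the request URL and reads a DOI from an already parsed JSON response; it performs no network call.

```python
from urllib.parse import urlencode

CROSSREF_WORKS = "https://api.crossref.org/works"

def crossref_query_url(surname, title_start_words, rows=1):
    """Build a Crossref search URL from a surname and the first title words."""
    params = {
        "query.author": surname,
        "query.bibliographic": " ".join(title_start_words),
        "rows": rows,  # keep only the best-ranked candidates
    }
    return CROSSREF_WORKS + "?" + urlencode(params)

def first_doi(response):
    """Return the DOI of the first hit in a parsed Crossref response, if any."""
    items = response.get("message", {}).get("items", [])
    return items[0].get("DOI") if items else None

print(crossref_query_url("Zolberg", ["A", "Nation", "by", "Design"]))
```

The best-ranked hit is not guaranteed to be the intended work, so in practice the returned author and title would still need to be checked against the parsed reference.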

3. Wikipedia reference parsing

By applying our system to Wikipedia references, we expect not only to evaluate the applicability of the system to external references, but also to verify whether the parsed result really helps to identify unique references scattered throughout the encyclopedia. However, both objectives are challenging: first, the references concerned are not manually annotated, and second, identical references often appear in various formats. We try to overcome the first obstacle by evaluating only references to books that are written with an ISBN. These references can be identified using the Google Books API, which exports detailed reference information in XML when given an ISBN. We then conduct a normalization process on the result to identify identical references.

3.1 Evaluation procedure

In general, a unique reference can be identified by the name of the first author and the title of the reference, so we take just these two fields from the detailed information provided by the Google API. An exact evaluation of all reference fields is very difficult, because the API result has its own form, which often differs from the original reference. This could be treated as a normalization problem, but since the API result is reliable in this case, we match our parsing result to it rather than normalizing both.
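For readers who want to reproduce this step today, here is a hedged sketch of an ISBN lookup. It assumes the current Google Books API (v1 `volumes` endpoint), which returns JSON rather than the XML export described here, so it is not the interface used in the experiments. The helper names are hypothetical, and the code only builds the URL and reads the two fields from an already parsed response.

```python
from urllib.parse import urlencode

GOOGLE_BOOKS_VOLUMES = "https://www.googleapis.com/books/v1/volumes"

def google_books_isbn_url(isbn):
    """Build a volume search URL for an ISBN, ignoring hyphens and spaces."""
    digits = isbn.replace("-", "").replace(" ", "")
    return GOOGLE_BOOKS_VOLUMES + "?" + urlencode({"q": "isbn:" + digits})

def first_author_and_title(response):
    """Extract (first author, title) from a parsed volumes response, if present."""
    items = response.get("items") or []
    if not items:
        return None
    info = items[0].get("volumeInfo", {})
    authors = info.get("authors") or []
    return (authors[0] if authors else None, info.get("title"))

print(google_books_isbn_url("0-674-02218-1"))
```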

Algorithm 1: Name matching algorithm for tth author


Author name

In the literature, precision and recall are computed by counting the number of correctly recognized words per field. We conduct the same evaluation but use only precision for the author name, because the API frequently provides all author names, including ones that are not written in the original string. It is not always easy to judge whether a name token has been added, because the name field is one of the least normalized fields. Let Gt be the reference name set of the tth author, taken from the API result, and Bt be that of our result; the matching process is presented in Algorithm 1. First we check the surname, then compare the remaining tokens of Gt and Bt. For each unmatched token in Bt, we check whether it appears as an initial in one of the two sets. We also check whether it is shortened or written differently, for example with accents. If the algorithm does not find any correct token in Bt, we compare Bt with Gt′ for all t′ ≠ t until we find at least one token. The procedure is repeated for all authors and references, summing up the per-author counts ft and nct, which the precision formula uses as the numbers of correct and incorrect tokens respectively. Finally, we compute the micro-averaged precision as ∑ref ∑t ft / (∑ref ∑t ft + ∑ref ∑t nct).
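The matching idea can be sketched as follows. This is a simplified approximation and not Algorithm 1 itself: accents and case are folded, an initial or shortened form is accepted when one token is a prefix of the other, and the fallback comparison against the other authors is omitted. The function names and the prefix heuristic are assumptions made for illustration.

```python
import unicodedata

def fold(token):
    """Lowercase a name token and drop accents and punctuation."""
    decomposed = unicodedata.normalize("NFKD", token)
    return "".join(c for c in decomposed if c.isalnum()).lower()

def tokens_match(ours, gold):
    """True if two tokens are equal after folding, or one abbreviates the other."""
    b, g = fold(ours), fold(gold)
    if not b or not g:
        return False
    return b == g or g.startswith(b) or b.startswith(g)

def match_author(ours, gold):
    """Return (f_t, nc_t): matched and unmatched tokens of our author field."""
    ours = [tok for tok in ours if fold(tok)]  # ignore pure punctuation tokens
    remaining = list(gold)
    found = 0
    for tok in ours:
        hit = next((g for g in remaining if tokens_match(tok, g)), None)
        if hit is not None:
            remaining.remove(hit)  # each reference token can be used only once
            found += 1
    return found, len(ours) - found

def micro_precision(pairs):
    """Micro-averaged precision over (our tokens, reference tokens) pairs."""
    found = wrong = 0
    for ours, gold in pairs:
        f_t, nc_t = match_author(ours, gold)
        found += f_t
        wrong += nc_t
    return found / (found + wrong) if found + wrong else 0.0

print(match_author(["Aristide", "Zolberg"], ["Aristide", "R.", "ZOLBERG"]))
```

Because the score is a precision over our own tokens, the middle initial "R." that only the API supplies does not count against the parsed result.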

Title

For the title evaluation, the most frequent problem is that the Google API often ignores some parts of the title. In that case precision cannot be computed, because the ignored parts probably include tokens correctly recognized by our system. Therefore we use recall to evaluate the title field instead. Of course the same title can be written differently in different references, but the variation is much smaller than for the author field. That is why we first preprocess both results and then compare their title fields. Let TG be the title token sequence found by the Google API and TB be ours. The comparison procedure is:

- Eliminate punctuation, capitalize words for both sets.

- If the first token of TG is in TB, find its position p.

- Compare TG and TB one by one from the position p.

- If the order of parts of sequence is reversed, compare again with different starting point.

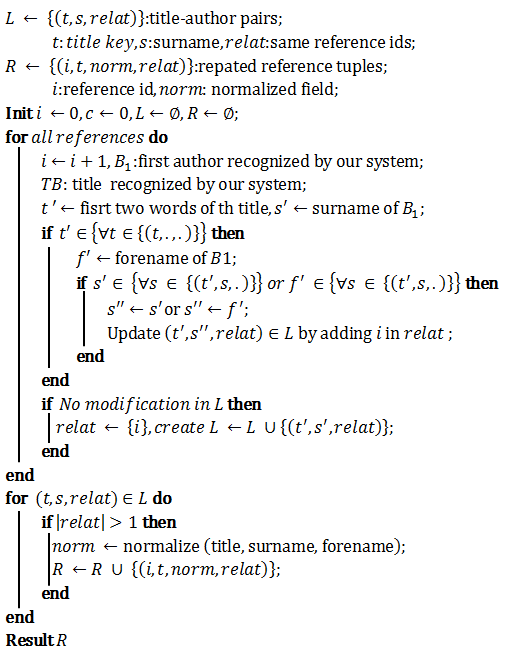

Algorithm 2: Author name and title normalization


During the comparison, we count the number of correctly recognized title tokens. This value is summed over all references, and the sum is divided by the total number of title tokens in the Google API result. As with the author name field, the API sometimes adds tokens that do not exist in the original reference string. We check for this case and exclude the added tokens from the calculation.
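The title comparison can be approximated with the short sketch below. It assumes whitespace tokenization, treats punctuation as a separator, and restarts the alignment at the first unmatched API token to handle reordered parts. The exclusion of tokens added by the API is not implemented, and all function names are hypothetical.

```python
import string

# Map every ASCII punctuation character to a space before tokenizing.
_PUNCT_TO_SPACE = str.maketrans(string.punctuation, " " * len(string.punctuation))

def title_tokens(title):
    """Remove punctuation and capitalize all words."""
    return title.translate(_PUNCT_TO_SPACE).upper().split()

def aligned_run(gold, ours, start):
    """Count API tokens from `start` that match ours one by one from position p."""
    if gold[start] not in ours:
        return 0
    p = ours.index(gold[start])  # first occurrence of the token in our title
    run = 0
    for g, b in zip(gold[start:], ours[p:]):
        if g != b:
            break
        run += 1
    return run

def matched_title_tokens(gold_title, our_title):
    """Return (matched tokens, total API tokens) for one reference."""
    gold, ours = title_tokens(gold_title), title_tokens(our_title)
    matched, pos = 0, 0
    while pos < len(gold):
        run = aligned_run(gold, ours, pos)
        matched += run
        pos += max(run, 1)  # skip a token that cannot be aligned
    return matched, len(gold)

def title_recall(pairs):
    """Recall over (API title, our title) pairs, summed over all references."""
    matched = total = 0
    for gold_title, our_title in pairs:
        m, n = matched_title_tokens(gold_title, our_title)
        matched += m
        total += n
    return matched / total if total else 0.0
```

Since `ours.index` returns the first occurrence, titles with repeated words may be misaligned; a fuller implementation would try every candidate position.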

3.2 Normalization

Another objective of this paper is to show that we are able to find identical references using the author and title fields automatically recognized by our system. This inevitably involves normalizing the recognized fields, because a reference string can be written in different formats. Recall that in the above evaluation we compared two sets of results without normalization. The principal reason is that exact normalization is difficult and time-consuming: normalizing a recognized field means considering all possible writing formats for that field. Under these circumstances, we try to normalize author and title with a compact representation. In short, we construct a set of normalized strings for both author and title that will be used to find identical references. The detailed process is presented in Algorithm 2. We first fill a tuple set L whose key is formed from the title (its first two words) and the author surname. For each key, we store the ids of the references sharing that key as well as all recognized field information. Once L is filled, we fill R by normalizing the surname, forename and title of each reference that appears at least twice among all references. Finally, we obtain the set of repeated references in the dataset with normalized author name and title.
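A minimal sketch of the grouping step is shown below, assuming each parsed reference is a dictionary with `id`, `surname` and `title` entries. This record layout and the `normalize` rules (accent stripping, lowercasing, punctuation removal) are assumptions made for illustration; the sketch stores only reference ids, not the full field information kept in L.

```python
from collections import defaultdict
import unicodedata

def normalize(text):
    """Lowercase, strip accents and punctuation, and collapse whitespace."""
    decomposed = unicodedata.normalize("NFKD", text)
    kept = "".join(
        c if c.isalnum() or c.isspace() else " "
        for c in decomposed
        if not unicodedata.combining(c)  # drop accent marks
    )
    return " ".join(kept.lower().split())

def reference_key(ref):
    """Key made of the first two title words and the author surname."""
    title_start = " ".join(normalize(ref["title"]).split()[:2])
    return (title_start, normalize(ref["surname"]))

def group_identical(refs):
    """Group reference ids by key and keep keys shared by at least two references."""
    groups = defaultdict(list)  # plays the role of the tuple set L
    for ref in refs:
        groups[reference_key(ref)].append(ref["id"])
    return {key: ids for key, ids in groups.items() if len(ids) >= 2}

refs = [
    {"id": 1, "surname": "Zolberg", "title": "A Nation by Design: Immigration Policy"},
    {"id": 2, "surname": "ZOLBERG", "title": "A nation by design"},
    {"id": 3, "surname": "Lafferty", "title": "Conditional random fields"},
]
print(group_identical(refs))
```

A two-word title key is deliberately coarse, so distinct works by the same author that share their first two title words would be merged under this sketch.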

3.3 Experiments

Data

Our target is incomplete references, which do not have identified field information such as author and title but are instead written as a plain text string. The 'References' section of each page has complete references, whereas most references in the 'Further reading' section do not have complete field information. We extract the references in the 'Further reading' section using the MediaWiki API. Some erroneous ones are discarded, and finally a good part of the full references in the 'Further reading' sections is extracted for both English and French Wikipedia. Table 1 shows simple statistics of the data sets. For English Wikipedia, we have about 95k references, of which 28% are books with an ISBN. The Google Books API successfully identified 92% of these books. For French, about 39k references are tested and 9% of them are books with an ISBN, of which 89% are identified by the Google API.

Reference parsing evaluation


The following text box shows a reference parsing result of our system and the corresponding Google API result, wrapped in the 'google' tag. As previously stated, there is a difference between the original text and the API result, which justifies our choice of precision only for author and recall only for title.

<wikititle>Immigration policy</wikititle> <bibl> <author> <surname>Aristide</surname> <forename>Zolberg</forename> </author> , " <title> A Nation by Design: Immigration Policy in the Fashioning of America </title> ", <publisher>Harvard University Press</publisher> <date>2006</date> , <isbn>ISBN</isbn> <isbnno>0-674-02218-1</isbnno> <google> <titlemain>A Nation by Design</titlemain> <subtitle>immigration policy in the fashioning of America</subtitle> <authors>Aristide R. ZOLBERG</authors> <authors>Aristide R Zolberg</authors> <publisherinfo>Harvard University Press</publisherinfo> <publishedDate>2009-06-30</publishedDate> </google> </bibl>

Table 2 presents the performance of our system on Wikipedia reference data. We obtain 0.85 for author precision and 0.90 for title recall on English data, and 0.83 and 0.91 respectively on French data. Even though the target book references are comparatively simple, it is encouraging to obtain such results on out-of-domain references. Moreover, even though 90% of the training data is in French, we obtain similar results for both languages. This indicates that the system can extract language-independent properties of reference data, which allows multilingual reference parsing, at least for English and French. The result may also be affected by the fact that references in French Wikipedia are, in general, more freely written.

Wikipedia citation statistics via normalization

In Section 3.2, we presented how to find identical references using the automatically recognized author and title. Unique references are verified with a gradual normalization process on these two fields. While the result cannot be evaluated directly, it should contribute to the performance of related tasks such as information retrieval. We do not deal with that extension in this paper, but first try to produce useful statistics, which might give ideas for an effective use of the identification result. Figure 1 presents statistics on the number of references obtained during the normalization process. The upper figure shows the number of references for five criteria, in order: total tested references, unique titles obtained by selecting the first two title words, unique references identified by title key and first author, citations referred to at least once in other pages, and finally unique references among the repeated citations. In English Wikipedia, about 66.8k unique references are found among 97k citations, and 6228 unique references are cited at least twice, accounting for 18.7k citations. This means that we can construct an author co-citation map using about 20% (18.7k/97k) of the total citations in English Wikipedia. In French Wikipedia, about 14% of the total citations fall under this case. If we take only references with an ISBN, these percentages decrease dramatically, to 8% and 2% for English and French respectively. This phenomenon confirms that an automatic reference parsing process is essential to find the real distribution of identical references.

Figure 1: Wikipedia citation statistics

The lower figure shows how many unique references occur a given number of times in the total dataset; the number of occurrences corresponds to the horizontal axis. We extract this information for all unique references, then count their frequency for each occurrence number in the dataset. For example, consider the leftmost point of the English dataset. About 4.6k unique references occur twice in the dataset, which means that about 50% (2 × 4.6k / 18.7k) of the repeated citations belong to references that occur only twice in total. The mean occurrence of these repeated unique references is 2.91; therefore, if a unique reference is cited more than once, it is cited about 3 times in total on average.
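The occurrence counts behind such a plot can be derived as in the following sketch, which assumes that each citation has already been reduced to a normalized key (any hashable value). The function name and the toy keys are hypothetical and do not come from the dataset.

```python
from collections import Counter

def occurrence_histogram(reference_keys):
    """Return (histogram, mean) for unique references cited at least twice.

    histogram[n] is the number of unique references that occur exactly n times;
    mean is the average number of occurrences of those repeated references.
    """
    per_reference = Counter(reference_keys)
    repeated = [n for n in per_reference.values() if n >= 2]
    histogram = Counter(repeated)
    mean = sum(repeated) / len(repeated) if repeated else 0.0
    return histogram, mean

keys = ["a", "a", "b", "b", "b", "c", "d", "d"]  # toy citation keys
histogram, mean = occurrence_histogram(keys)

# Share of repeated citations that belong to references occurring exactly twice.
repeated_citations = sum(n * count for n, count in histogram.items())
share_twice = 2 * histogram[2] / repeated_citations
print(dict(histogram), mean, share_twice)
```

The `share_twice` expression mirrors the 2 × 4.6k / 18.7k computation, which evaluates to roughly 0.49 with the reported figures.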

4. Conclusions

Reference analysis on Wikipedia articles necessarily involves automatic parsing of incomplete references to identify their bibliographic fields. A normalization procedure is then applied to the recognized reference fields to find identical references that might be written in different ways. This identification-normalization process can contribute directly to completing references, then to more analytical tasks such as co-citation map construction and citation pattern finding, and further to other related tasks such as enhancing information retrieval or constructing recommender systems.

The experimental results confirm that our machine-learning-based software successfully recognizes Wikipedia references, although it was built on a completely different training set from the SSH domain. This is an interesting aspect, because many machine-learning-based applications suffer from the domain adaptation problem. Our system even seems to overcome language dependency, given that similar performance was obtained for both languages. This is verified via our own ground-truth-based evaluation algorithm. We also propose a normalization technique based on the identified references, which makes it possible to find a set of identical references. However, since the evaluation was done on relatively simple references for convenience, we need to check the real performance on more complicated ones. We have found that parsing errors often occur in several special cases, so the performance could be improved by applying some post-processing strategies.

As future work, we plan to use this result to visualize a co-citation map with external software, CiteSpace. We expect that this will allow both quantitative and qualitative evaluations. In the long run, the result could be applied to other tasks.

5. References

[1] B. Aljaber, N. Stokes, J. Bailey, and J. Pei. Document clustering of scientific texts using citation contexts. Inf. Retr., 13:101-131, April 2010.

[2] C. L. Giles, K. D. Bollacker, and S. Lawrence. CiteSeer: an automatic citation indexing system. In International Conference on Digital Libraries, pages 89-98. ACM Press, 1998.

[3] J. D. Lafferty, A. McCallum, and F. C. N. Pereira. Conditional random fields: Probabilistic models for segmenting and labeling sequence data. In Proceedings of the Eighteenth International Conference on Machine Learning, ICML ’01, pages 282-289, 2001.

[4] O. Medelyan, D. N. Milne, C. Legg, and I. H. Witten. Mining meaning from Wikipedia. Int. J. Hum.-Comput. Stud., 67(9):716-754, 2009.

[5] F. Peng and A. McCallum. Information extraction from research papers using conditional random fields. Inf. Process. Manage., 42:963-979, July 2006.

[6] V. Qazvinian and D. R. Radev. Scientific paper summarization using citation summary networks. In Proceedings of the 22nd International Conference on Computational Linguistics – Volume 1, pages 689-696. Association for Computational Linguistics, 2008.

[7] A. Ritchie, S. Robertson, and S. Teufel. Comparing citation contexts for information retrieval. In Proceedings of the 17th ACM Conference on Information and Knowledge Management, CIKM ’08, pages 213-222. ACM, 2008.

[8] C. Sutton and A. McCallum. An introduction to conditional random fields. Foundations and Trends in Machine Learning, 4:267-373, 2012.