In this post, we examine how part-of-speech and stemming labels influence the automatic annotation of bibliographical references. Our reference annotator, Bilbo, combines the following global and local features:

| Feature name | Description |
|---|---|
| ALLCAPS | All characters are capital letters |
| FIRSTCAP | First character is a capital letter |
| ALLSMALL | All characters are lowercase |
| NONIMPCAP | Mixed capital and lowercase letters |
| ALLNUMBERS | All characters are digits |
| NUMBERS | One or more characters are digits |
| DASH | Numbers contain one or more dashes |
| BIBL_START | Position is in the first third of the reference |
| BIBL_IN | Position is between the first third and the second third of the reference |
| BIBL_END | Position is in the last third of the reference |
| INITIAL | Initial (abbreviated expression) |
| WEBLINK | Matches a regular expression for web pages and emails |
| ITALIC | Italic characters |
| POSSEDITOR | Possible abbreviation of editor |
| POSSMONTH | Possible abbreviation of a month or day |
| POSSBIBLSCOP | Possible abbreviation of an editing feature (biblScope) |
| POSSROLE | Possible abbreviation of a noun feature (role) |
| SURNAMELIST | External list of surnames |
| FORENAMELIST | External list of forenames |
| PLACELIST | External list of cities and countries |
| JOURNALLIST | External list of journals |

Descriptive features table
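To make the table more concrete, here is a minimal sketch of how some of the orthographic and positional features could be computed for a tokenized reference. The exact rules are our reading of the descriptions above, not Bilbo's code: the `INITIAL_RE` and `WEBLINK_RE` patterns and all function names are hypothetical, and list-based and typographic features (ITALIC, SURNAMELIST, etc.) are left out.

```python
import re

# Hypothetical patterns: Bilbo's actual regular expressions are not given in the post.
INITIAL_RE = re.compile(r"^[A-Z]\.$")
WEBLINK_RE = re.compile(r"^(https?://\S+|www\.\S+|[\w.+-]+@[\w-]+\.[\w.]+)$")


def orthographic_features(token: str) -> set[str]:
    """Local, token-level features loosely following the table above."""
    feats = set()
    if token.isalpha() and token.isupper():
        feats.add("ALLCAPS")
    if token[:1].isupper():
        feats.add("FIRSTCAP")
    if token.isalpha() and token.islower():
        feats.add("ALLSMALL")
    # Mixed case: an uppercase letter after the first character plus some lowercase letter.
    if any(c.isupper() for c in token[1:]) and any(c.islower() for c in token):
        feats.add("NONIMPCAP")
    if token.isdigit():
        feats.add("ALLNUMBERS")
    if any(c.isdigit() for c in token):
        feats.add("NUMBERS")
        if "-" in token:
            feats.add("DASH")  # e.g. a page range such as 123-145
    if INITIAL_RE.match(token):
        feats.add("INITIAL")
    if WEBLINK_RE.match(token):
        feats.add("WEBLINK")
    return feats


def position_feature(index: int, length: int) -> str:
    """Global feature: which third of the reference the token falls in.
    Integer arithmetic avoids floating-point ties at the boundaries."""
    if 3 * index < length:
        return "BIBL_START"
    if 3 * index < 2 * length:
        return "BIBL_IN"
    return "BIBL_END"


def reference_features(tokens: list[str]) -> list[set[str]]:
    """Combine local and positional features for every token of one reference."""
    return [orthographic_features(tok) | {position_feature(i, len(tokens))}
            for i, tok in enumerate(tokens)]
```

For example, `reference_features(["Dupont", "J.", "2010", "pp.", "123-145", "McDonald"])` marks `J.` as INITIAL and `123-145` as NUMBERS, DASH and BIBL_END.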

In our evaluation, the baseline uses all of the above features, with a sliding window of five words. The evaluations were performed on Bilbo's Corpus 1, which consists of 715 references prepared for learning and testing (10-fold cross-validation). The external tools used are TreeTagger for part-of-speech tagging and Pystemming for stemming.
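The post does not spell out how the five-word window is built. A common reading, assumed here, is the current token plus two tokens on each side, with each neighbour's features copied into the current token's feature set under an offset prefix. The helper name `windowed_features` and the `-1:` / `+0:` prefix format are hypothetical.

```python
def windowed_features(token_feats: list[set[str]], radius: int = 2) -> list[set[str]]:
    """Give each token the features of its neighbours within +/- radius,
    prefixed by their relative offset (radius=2 gives a five-word window)."""
    n = len(token_feats)
    windowed = []
    for i in range(n):
        feats = set()
        for offset in range(-radius, radius + 1):
            j = i + offset
            if 0 <= j < n:  # the window is truncated at the reference boundaries
                feats.update(f"{offset:+d}:{name}" for name in token_feats[j])
        windowed.append(feats)
    return windowed
```

With `radius=2`, the third token of a six-token reference sees the features of tokens one to five, while the first token only sees itself and the two tokens after it.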

Evaluations

We report the micro-averaged F-measure (1) over all labels (2), and the macro-averaged F-measure (1) over the three main labels for annotating bibliographical references, namely titles, forenames and surnames (the target labels).
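The difference between the two averages described in note (1) can be sketched as follows. The per-label counts below are made-up toy numbers, not Bilbo results. For the macro average we take the mean of the per-label F-measures, which is one common convention; the post does not say which variant was used.

```python
from dataclasses import dataclass


@dataclass
class Counts:
    tp: int  # true positives
    fp: int  # false positives
    fn: int  # false negatives


def prf(c: Counts) -> tuple[float, float, float]:
    """Precision, recall and F-measure (F1) from one contingency table."""
    p = c.tp / (c.tp + c.fp) if c.tp + c.fp else 0.0
    r = c.tp / (c.tp + c.fn) if c.tp + c.fn else 0.0
    f = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f


def micro_f(counts: dict[str, Counts]) -> float:
    # Pool the contingency counts of every label, then compute P/R/F once.
    total = Counts(
        tp=sum(c.tp for c in counts.values()),
        fp=sum(c.fp for c in counts.values()),
        fn=sum(c.fn for c in counts.values()),
    )
    return prf(total)[2]


def macro_f(counts: dict[str, Counts], labels: list[str]) -> float:
    # Compute F per label first, then average with equal weight per label.
    return sum(prf(counts[label])[2] for label in labels) / len(labels)


# Toy counts for illustration only.
counts = {
    "title": Counts(tp=80, fp=20, fn=20),
    "forename": Counts(tp=45, fp=5, fn=5),
    "surname": Counts(tp=90, fp=10, fn=10),
    "date": Counts(tp=10, fp=10, fn=10),
}
```

Here `micro_f(counts)` is dominated by the frequent labels (about 0.83), whereas `macro_f(counts, ["title", "forename", "surname"])` weights each target label equally (about 0.87).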

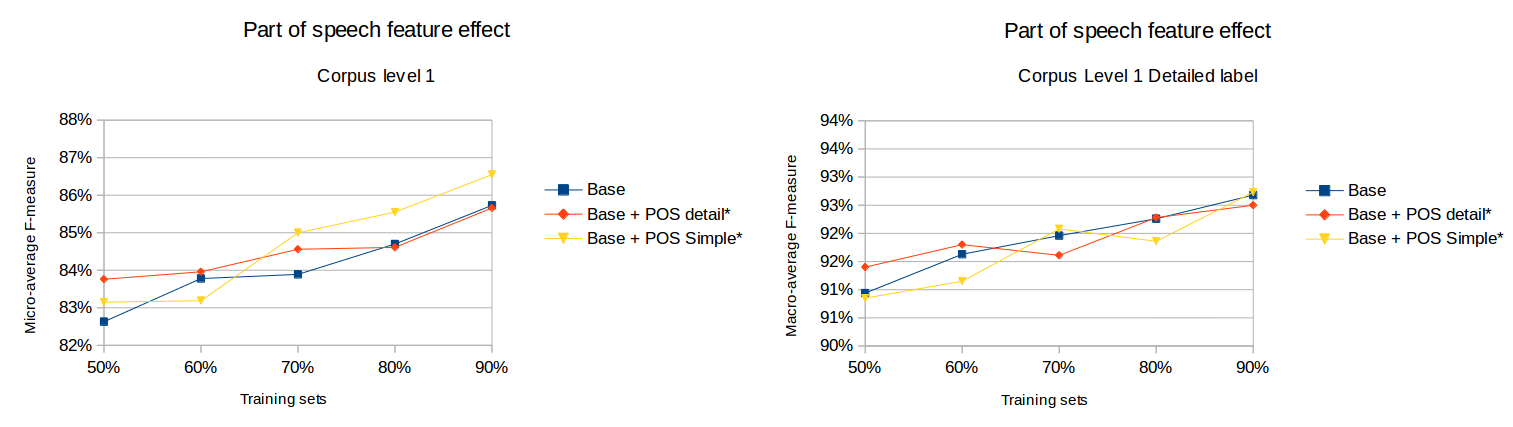

Part of speech feature effect

This first evaluation shows that the best results are obtained with harmonized part-of-speech detection (see Note*). Target label detection also improves slightly. Interestingly, part-of-speech detection helps general labelling more than it helps the target labels. Adding POS tags has the largest impact on dates (baseline: 89%-94% F-measure; POS simple: 90%-96% F-measure) and on the TEI biblScope tag (baseline: 76%-91% F-measure; POS simple: 80%-94% F-measure). This can be explained by the fairly repetitive syntactic and morphological behaviour of these labels.
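Following Note*, harmonization keeps only the coarse category of a detailed tag (Ver:INF becomes Ver). A minimal sketch, assuming tags use the colon-separated style shown in the note; TreeTagger's actual tag names depend on the parameter file and may differ. The function names are hypothetical.

```python
from collections import Counter


def harmonize(tag: str) -> str:
    """POS simple: keep the coarse category before the first ':' (Ver:INF -> Ver)."""
    return tag.split(":", 1)[0]


def pos_feature(tag: str, simple: bool = True) -> str:
    """Feature string for a token, using either the harmonized or the detailed tag."""
    return "POS=" + (harmonize(tag) if simple else tag)


# Hypothetical detailed tags in the style of Note*.
tags = ["Ver:INF", "Ver:PPER", "Ver:PRES", "Nom", "Ver:INF"]
detailed = Counter(tags)                      # 4 distinct, sparser values
simple = Counter(harmonize(t) for t in tags)  # 2 distinct values: Ver, Nom
```

Fewer distinct values means each POS feature is observed more often in training, which is consistent with the harmonized version outperforming the sparse detailed tags.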

Part of speech feature with lemma tokens effect

This evaluation adds lemma tokens to part-of-speech detection. The results are globally similar to the baseline but slightly worse for the target labels. The use of lemma (3) tokens therefore has little impact on the performance of general labelling and a negative influence on the target labels.
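As a sketch of how lemma and POS features could be read from tagger output, the code below assumes TreeTagger-style lines with tab-separated token, tag and lemma columns, and assumes unknown lemmas are written as `<unknown>`; check both against your TreeTagger configuration. Falling back to the lowercased token and the function name `lemma_pos_features` are our own choices.

```python
def lemma_pos_features(tagger_output: str) -> list[dict[str, str]]:
    """Turn token<TAB>tag<TAB>lemma lines into per-token feature dicts."""
    rows = []
    for line in tagger_output.strip().splitlines():
        token, tag, lemma = line.split("\t")
        if lemma == "<unknown>":
            lemma = token.lower()  # assumption: fall back to the surface form
        rows.append({
            "token": token,
            "POS": tag.split(":", 1)[0],  # harmonized tag, as in "POS simple"
            "LEMMA": lemma,
        })
    return rows


sample = "Revues\tNom\trevue\npubliées\tVer:PPER\tpublier\nXyzzy\tNam\t<unknown>"
```

`lemma_pos_features(sample)` yields `LEMMA=publier` and `POS=Ver` for the second token, and falls back to `xyzzy` for the unknown word.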

Stemming feature effect

This third evaluation shows that stems have very little impact on performance, both for the complete annotation and for the target labels. However, general labelling improves when the training set makes up less than 80% of the corpus.
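To illustrate what a stem feature looks like, here is a deliberately naive suffix-stripping stemmer. It is a stand-in for illustration only: it is not Pystemming's algorithm or API, and the suffix list and function names are hypothetical.

```python
# Hypothetical suffix list for illustration; NOT Pystemming's rules.
SUFFIXES = ("ations", "ements", "ation", "ement", "euses", "euse", "ies", "es", "s")


def naive_stem(word: str, min_stem: int = 3) -> str:
    """Strip the longest matching suffix, keeping at least min_stem characters."""
    w = word.lower()
    for suffix in sorted(SUFFIXES, key=len, reverse=True):
        if w.endswith(suffix) and len(w) - len(suffix) >= min_stem:
            return w[: -len(suffix)]
    return w


def stem_features(tokens: list[str]) -> list[dict[str, str]]:
    """Attach a STEM feature to each token."""
    return [{"token": tok, "STEM": naive_stem(tok)} for tok in tokens]
```

Both `publication` and `publications` map to `public`, so they share one STEM value. Unlike a lemma, a stem need not be a real word.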

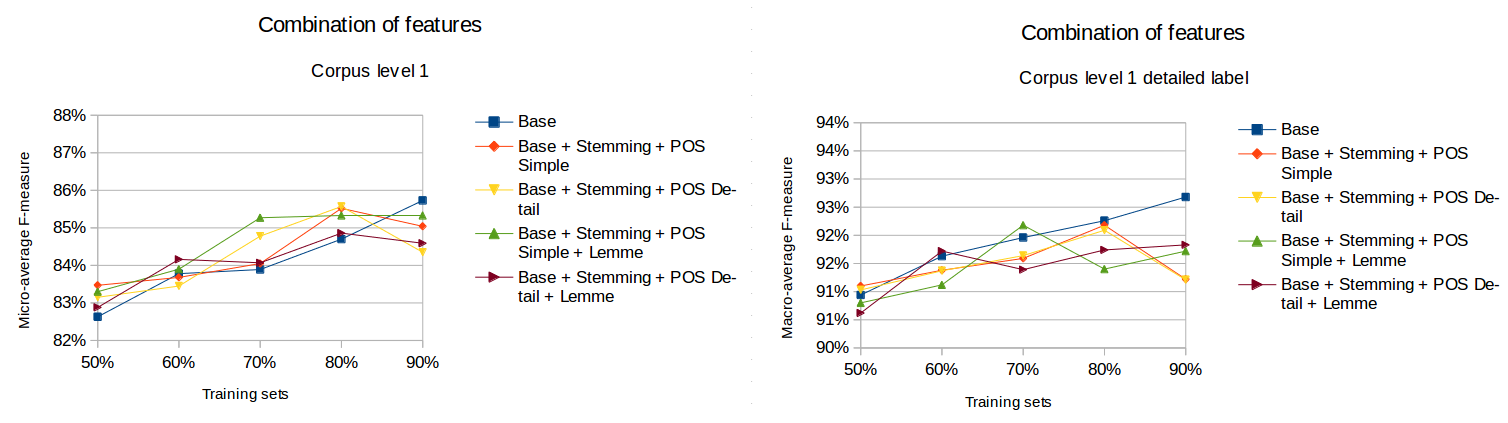

Combination feature effect

In this evaluation, we combined all the linguistic features. For the target labels (right), performance does not improve over the baseline. For the whole set of labels (left), combining all the features (green curve) improves the overall annotation. The result at a training/testing ratio of 0.9 is probably not significant, because of overfitting and too few test examples.
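One simple way to combine several feature extractors is to merge their per-token outputs into a single feature dict, namespacing each key by its source so that features from different extractors cannot collide. This is a minimal sketch under that assumption; `combine` and the toy extractors are hypothetical and not part of Bilbo.

```python
from typing import Callable

# An extractor maps a token list to one feature dict per token.
Extractor = Callable[[list[str]], list[dict[str, str]]]


def combine(tokens: list[str], extractors: dict[str, Extractor]) -> list[dict[str, str]]:
    """Merge the outputs of several extractors, prefixing keys with the extractor name."""
    combined: list[dict[str, str]] = [{} for _ in tokens]
    for name, extract in extractors.items():
        for i, feats in enumerate(extract(tokens)):
            for key, value in feats.items():
                combined[i][f"{name}.{key}"] = value
    return combined


# Toy extractors for illustration only.
extractors: dict[str, Extractor] = {
    "shape": lambda ts: [{"cap": str(t[:1].isupper())} for t in ts],
    "len": lambda ts: [{"n": str(len(t))} for t in ts],
}
```

In practice the POS, lemma and stem extractors would be plugged in alongside the baseline features in the same way.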

Conclusion

In conclusion, harmonized part-of-speech tags give a slight improvement over our previous baselines. In all cases, the harmonized version gave better results than the detailed version, whose tags are too sparse and too specific. Adding stems and lemmas as features has very little impact. The results are fairly stable across the different variations, although the linguistic features seem more sensitive to the amount of training data (they increase the risk of overfitting). Even so, adding linguistic features improves the overall quality of reference annotation when the training corpus is less than 80% of the whole set of references.

In future work, we will continue adding contextual and multi-scale features.

Notes

(Note*) POS detail: detailed label (e.g. Ver:INF); POS simple: harmonized label (e.g. Ver:INF → Ver)

(1) Micro-averaged values are calculated by building a global contingency table and then computing precision and recall from these sums. In contrast, macro-averaged scores are calculated by first computing precision and recall for each category and then averaging them.

(2) Comparison of Cora data tags and Revues.org tags

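(3) Difference between lemmas and stems: a lemma is the dictionary form of a word (e.g. "were" → "be"), whereas a stem is obtained by stripping affixes and need not be a real word.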