
In statistics, dependence refers to any statistical relationship between two random variables or two sets of data. Correlation refers to any of a broad class of statistical relationships involving dependence.

Familiar examples of dependent phenomena include the correlation between the physical statures of parents and their offspring, and the correlation between the demand for a product and its price. Correlations are useful because they can indicate a predictive relationship that can be exploited in practice. For example, an electrical utility may produce less power on a mild day based on the correlation between electricity demand and weather. In this example there is a causal relationship, because extreme weather causes people to use more electricity for heating or cooling; however, statistical dependence is not sufficient to demonstrate the presence of such a causal relationship.

Formally, dependence refers to any situation in which random variables do not satisfy a mathematical condition of probabilistic independence. In loose usage, correlation can refer to any departure of two or more random variables from independence, but technically it refers to any of several more specialized types of relationship between mean values. There are several correlation coefficients, often denoted ρ or r, measuring the degree of correlation. The most common of these is the Pearson correlation coefficient, which is sensitive only to a linear relationship between two variables (which may exist even if one is a nonlinear function of the other). Other correlation coefficients, such as Spearman's rank correlation, have been developed to be more robust than the Pearson correlation, that is, more sensitive to nonlinear relationships.
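
To make the difference between dependence and (Pearson) correlation concrete, here is a minimal Python sketch using only the standard library. The helper name `pearson_r` and the data are illustrative assumptions, not taken from the source. Because y = x² is completely determined by x, the two variables are clearly dependent, yet on x values symmetric about zero their Pearson correlation is exactly zero; an exact linear function of x gives r = 1.

```python
import math


def pearson_r(xs, ys):
    """Pearson's r: sum of co-deviations over the root of the product of squared deviations."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of the same length, at least 2 items")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("r is undefined when one variable is constant")
    return sxy / math.sqrt(sxx * syy)


xs = [-3, -2, -1, 0, 1, 2, 3]
quadratic = [x ** 2 for x in xs]   # fully dependent on x, but not linearly
linear = [2 * x + 1 for x in xs]   # exact linear relationship

print(pearson_r(xs, quadratic))    # 0.0
print(pearson_r(xs, linear))       # 1.0
```

A coefficient designed for monotonic or general dependence would be needed to detect the quadratic relationship here; Pearson's r only measures how well a straight line fits.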

This article is licensed under the Creative Commons Attribution-ShareAlike 3.0 Unported License, which means that you can copy and modify it as long as the entire work (including additions) remains under this license.

Karl Pearson FRS (27 March 1857 – 27 April 1936) was an influential English mathematician who is credited with establishing the discipline of mathematical statistics.

In 1911 he founded the world's first university statistics department at University College London. He was a proponent of eugenics, and a protégé and biographer of Sir Francis Galton.

A sesquicentenary conference was held in London on 23 March 2007, to celebrate the 150th anniversary of his birth.

Carl Pearson, later known as Karl Pearson (1857–1936), was born to William Pearson and Fanny Smith, who had three children: Arthur, Carl (Karl) and Amy. William Pearson also sired an illegitimate son, Frederick Mockett.

Pearson's mother, Fanny Pearson née Smith, came from a family of master mariners who sailed their own ships from Hull; his father read law at Edinburgh and was a successful barrister and Queen's Counsel (QC). William Pearson's father's family came from the North Riding of Yorkshire.

"Carl Pearson" inadvertently became "Karl Pearson" when he enrolled at the University of Heidelberg in 1879, where the spelling of his name was changed. He used both variants of his name until 1884, when he finally adopted Karl, supposedly also after Karl Marx, though some argue otherwise. Eventually he became universally known as "KP".


Statistics 101: Understanding Correlation (27:06)

In this video we discuss the basic concepts of another bivariate relationship: correlation. Previous videos examined covariance, and in this lesson we tie the two concepts together. Correlation comes with certain caveats, and we talk about those as well. Finally, we walk through a simple example involving correlation and its interpretation. Enjoy! For my complete video library organized by playlist, please go to my video page here: http://www.youtube.com/user/BCFoltz/videos?flow=list&view=1&live_view=500&sort=dd
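
The video ties covariance and correlation together; the standard link is that correlation is covariance rescaled by the two standard deviations, r = cov(x, y) / (s_x · s_y). The minimal Python sketch below uses hypothetical height and weight data and helper names chosen for illustration (neither comes from the video). It shows one practical consequence of the rescaling: converting heights from metres to centimetres multiplies the covariance by 100 but leaves the correlation unchanged.

```python
import math


def covariance(xs, ys):
    """Sample covariance (divides by n - 1)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    return sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / (n - 1)


def correlation(xs, ys):
    """Correlation: covariance divided by the product of the sample standard deviations."""
    sx = math.sqrt(covariance(xs, xs))
    sy = math.sqrt(covariance(ys, ys))
    return covariance(xs, ys) / (sx * sy)


heights_m = [1.60, 1.70, 1.75, 1.80, 1.90]   # hypothetical data
weights_kg = [55, 65, 70, 72, 85]
heights_cm = [100 * h for h in heights_m]    # same heights, different unit

print(covariance(heights_m, weights_kg), covariance(heights_cm, weights_kg))
print(correlation(heights_m, weights_kg), correlation(heights_cm, weights_kg))
```

Because covariance carries the units of both variables, its size is hard to interpret on its own; dividing by the standard deviations produces a unit-free number between -1 and 1.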

What Is Correlation? (6:56)

Basic overview of correlation.

The Correlation Coefficient - Explained in Three Steps (6:54)

The correlation coefficient is a really popular way of summarizing a scatter plot in a single number between -1 and 1. In this video, I show how the correlation coefficient does this in three steps and explain them without any formulas. This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License (http://creativecommons.org/licenses/by-nc/4.0/).

Correlation & Regression Video 1 (27:36)

Full course at www.ngclasses.com.

How Ice Cream Kills! Correlation vs. Causation (5:27)

To make better decisions and improve your problem-solving skills, it is important to understand the difference between correlation and causation.
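
One standard way to see why a correlation alone cannot establish causation is a simulated confounder. The Python sketch below is a toy built on stated assumptions, not the video's example: a hypothetical temperature variable drives both ice cream sales and drownings (arbitrary units), neither affects the other, and yet the two are strongly correlated. The partial correlation, which removes the linear effect of temperature from both, drops to roughly zero.

```python
import math
import random


def pearson_r(xs, ys):
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def partial_r(xs, ys, zs):
    """Correlation of xs and ys after removing the linear effect of zs from both."""
    rxy, rxz, ryz = pearson_r(xs, ys), pearson_r(xs, zs), pearson_r(ys, zs)
    return (rxy - rxz * ryz) / math.sqrt((1 - rxz ** 2) * (1 - ryz ** 2))


rng = random.Random(42)  # fixed seed so the run is repeatable
temperature = [rng.uniform(10, 35) for _ in range(10_000)]
# Hypothetical model: both outcomes depend on temperature only, plus independent noise.
ice_cream_sales = [2.0 * t + rng.gauss(0, 5) for t in temperature]
drownings = [0.5 * t + rng.gauss(0, 2) for t in temperature]

r_raw = pearson_r(ice_cream_sales, drownings)
r_partial = partial_r(ice_cream_sales, drownings, temperature)
print(f"raw r = {r_raw:.2f}, partial r given temperature = {r_partial:.2f}")
```

In real data the confounder is often unmeasured, so this kind of adjustment is not always possible; that is why randomized experiments are the usual route to causal claims.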

Correlation Coefficient (9:15)

A step-by-step problem showing how to use the correlation coefficient formula.

Correlation and causality | Statistical studies | Probability and Statistics | Khan Academy (10:45)

Understanding why correlation does not imply causality (even though many in the press and some researchers often imply otherwise).

Practice this lesson yourself on KhanAcademy.org right now: https://www.khanacademy.org/math/probability/statistical-studies/types-of-studies/e/types-of-statistical-studies?utm_source=YT&utm_medium=Desc&utm_campaign=ProbabilityandStatistics

Watch the next lesson: https://www.khanacademy.org/math/probability/statistical-studies/types-of-studies/v/analyzing-statistical-study?utm_source=YT&utm_medium=Desc&utm_campaign=ProbabilityandStatistics

Missed the previous lesson? https://www.khanacademy.org/math/probability/statistical-studies/types-of-studies/v/types-statistical-studies?utm_source=YT&utm_medium=Desc&utm_campaign=ProbabilityandStatistics

Probability and statistics on Khan Academy: We dare you to go through a day in which you never consider or use probability. Did you check the weather forecast? Busted! Did you decide to go through the drive-through lane vs. walk in? Busted again! We are constantly creating hypotheses, making predictions, testing, and analyzing. Our lives are full of probabilities! Statistics is related to probability because much of the data we use when determining probable outcomes comes from our understanding of statistics. In these tutorials, we will cover a range of topics, some of which include: independent events, dependent probability, combinatorics, hypothesis testing, descriptive statistics, random variables, probability distributions, regression, and inferential statistics. So buckle up and hop on for a wild ride. We bet you're going to be challenged AND love it!

About Khan Academy: Khan Academy offers practice exercises, instructional videos, and a personalized learning dashboard that empower learners to study at their own pace in and outside of the classroom. We tackle math, science, computer programming, history, art history, economics, and more. Our math missions guide learners from kindergarten to calculus using state-of-the-art, adaptive technology that identifies strengths and learning gaps. We've also partnered with institutions like NASA, The Museum of Modern Art, The California Academy of Sciences, and MIT to offer specialized content. For free. For everyone. Forever. #YouCanLearnAnything

Subscribe to KhanAcademy’s Probability and Statistics channel: https://www.youtube.com/channel/UCRXuOXLW3LcQLWvxbZiIZ0w?sub_confirmation=1

Subscribe to KhanAcademy: https://www.youtube.com/subscription_center?add_user=khanacademy

Linear Regression and Correlation - Introduction (14:48)

How to Calculate Pearson's Correlation Coefficient (8:47)

How to calculate Pearson's correlation coefficient by hand. Visit http://www.StatisticsHowTo.com for more videos and hundreds of how-to articles on elementary statistics.
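
Hand calculations of Pearson's r usually tabulate a few running sums and plug them into the raw-score form r = (nΣxy - ΣxΣy) / √[(nΣx² - (Σx)²)(nΣy² - (Σy)²)]. The Python sketch below prints those sums so each step can be checked against a hand calculation. The five data points are made up for illustration, and it is an assumption that the video uses this particular form of the formula.

```python
import math


def pearson_by_hand(xs, ys):
    """Pearson's r from the raw sums typically tabulated in hand calculations."""
    n = len(xs)
    sums = {
        "n": n,
        "sum_x": sum(xs),
        "sum_y": sum(ys),
        "sum_xy": sum(x * y for x, y in zip(xs, ys)),
        "sum_x2": sum(x * x for x in xs),
        "sum_y2": sum(y * y for y in ys),
    }
    numerator = n * sums["sum_xy"] - sums["sum_x"] * sums["sum_y"]
    denominator = math.sqrt(
        (n * sums["sum_x2"] - sums["sum_x"] ** 2)
        * (n * sums["sum_y2"] - sums["sum_y"] ** 2)
    )
    return numerator / denominator, sums


xs = [1, 2, 3, 4, 5]
ys = [2, 4, 5, 4, 5]
r, sums = pearson_by_hand(xs, ys)
print(sums)         # {'n': 5, 'sum_x': 15, 'sum_y': 20, 'sum_xy': 66, 'sum_x2': 55, 'sum_y2': 86}
print(round(r, 4))  # 0.7746
```

Here the numerator is 5·66 - 15·20 = 30 and the denominator is √(50·30) = √1500, so r = √0.6 ≈ 0.7746. With large values the raw-sum form can lose precision to cancellation; the mean-centred form (summing products of deviations from the means) is numerically safer in code.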

The danger of mixing up causality and correlation: Ionica Smeets at TEDxDelft (5:58)

Ionica Smeets (@ionicasmeets) is joining TEDxDelft Never Grow Up: a mathematician and science journalist with plenty of media experience. Using her vast knowledge and enthusiasm, she can explain everything about her favorite topics in science and statistics. She does it well on paper and face-to-face: she writes blogs, columns and books, and is also asked to appear as a speaker, live, on television and on radio shows. Since 2006, Ionica has taken on the Internet with interesting and fun mathematics together with her PhD partner in crime Jeanine Daems on the website wiskundemeisjes.nl. She and Jeanine now write a biweekly column about mathematics in the Volkskrant, and the website also resulted in a book titled 'I Was Never Good At Math' (Ik was altijd heel slecht in wiskunde) in 2011. Ionica appears on De Wereld Draait Door to talk about mathematics, trying to explain the most complicated things and developments in the field to the host Matthijs van Nieuwkerk and the audience. Even for the most mathematically challenged, it's educational and entertaining to listen to. In 2012 she became a reporter for KRO in a series called 'De Rekenkamer', investigating the financial aspects of giving blood and of illegal minor immigrants. Together with Bas Haring she wrote 'Vallende Kwartjes', essays in which scientific processes and concepts are explained with straightforward, easy-to-get examples and stories. The science journalist has an eye for this sort of thing, defying and destroying the idea that science is boring and/or cannot be explained well. In collaboration with Govert Schilling (amongst others) she also makes YouTube videos for the channel Science 101 (Wetenschap 101), where scientific and mathematical concepts are explained clearly in under 101 seconds! She's charming and adds good-natured comments and jokes to her writing and her live appearances (when Matthijs van Nieuwkerk asked her how she celebrated Pi Day, she answered 'by drinking piña coladas'). What more could be asked for in a TEDxDelft performer?

In the spirit of ideas worth spreading, TEDx is a program of local, self-organized events that bring people together to share a TED-like experience. At a TEDx event, TEDTalks video and live speakers combine to spark deep discussion and connection in a small group. These local, self-organized events are branded TEDx, where x = independently organized TED event. The TED Conference provides general guidance for the TEDx program, but individual TEDx events are self-organized.* (*Subject to certain rules and regulations)

10:12

10:12Correlation Explanation with Demo

Correlation Explanation with DemoCorrelation Explanation with Demo

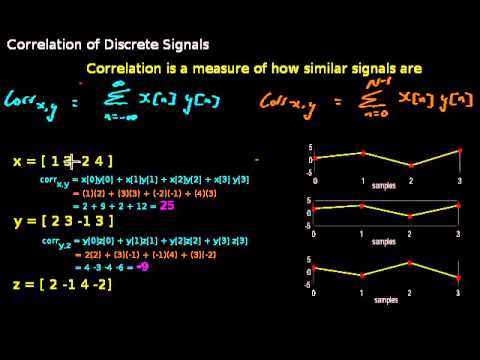

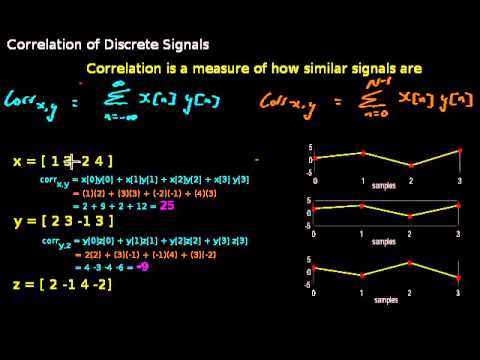

Correlation provides a measure of similarity between two signals. This video explains process of correlating discrete signals and highlights when normalised correlation is required. -
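
For discrete signals, the correlation at lag k is commonly computed as the sum of products x[n]·y[n+k], and the normalised version divides by √(Σx² · Σy²) so the result always lies between -1 and 1, whatever the signal amplitudes. The Python sketch below follows that common textbook convention; the video may use a different one, and the signals are invented for illustration. A louder copy of the same delayed pulse gives a raw peak ten times larger, while the normalised peak is 1.0 in both cases, which is the amplitude dependence that makes normalisation necessary when comparing similarity across signals.

```python
import math


def cross_correlation(x, y, lag):
    """Raw correlation at one lag: sum of x[n] * y[n + lag] over overlapping samples."""
    total = 0.0
    for n, xn in enumerate(x):
        m = n + lag
        if 0 <= m < len(y):
            total += xn * y[m]
    return total


def normalised_cross_correlation(x, y, lag):
    """Raw correlation divided by sqrt(energy(x) * energy(y)); always within [-1, 1]."""
    energy = math.sqrt(sum(v * v for v in x) * sum(v * v for v in y))
    return cross_correlation(x, y, lag) / energy


x = [0, 1, 2, 1, 0, 0, 0, 0]
y = [0, 0, 0, 0, 1, 2, 1, 0]       # the same pulse, delayed by 3 samples
y_loud = [10 * v for v in y]       # same shape, 10 times the amplitude

lags = range(-(len(x) - 1), len(y))
best = max(lags, key=lambda k: cross_correlation(x, y, k))
print("best lag:", best)                                                  # best lag: 3
print(cross_correlation(x, y, best), cross_correlation(x, y_loud, best))  # 6.0 60.0
print(normalised_cross_correlation(x, y, best),
      normalised_cross_correlation(x, y_loud, best))                      # 1.0 1.0
```

The normalised function divides by zero if either signal is all zeros; a production version would need to guard against that case.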

Correlation analysis using Excel (11:43)

How to run a correlation analysis using Excel and write up the findings for a report.

Phase Correlation Meter - Creating Tracks (18:38)

This quick tutorial shows how to use a correlation meter, what to do with the results it gives you, and how to troubleshoot and resolve phasing issues in your music. Phasing issues can haunt us as modern producers. From boxiness to completely missing audio, phase alignment problems are something you always want to avoid. Luckily, most DAWs have a correlation meter for you to use. These small utility plug-ins can be a real lifesaver, as unassuming as they may appear at first glance. They are very simple in their design, providing you with a meter showing a range of -1 to 1, but most do not offer any kind of help or explanation on the surface. So we are here to explain how it all works.

A correlation meter registers a +1 value when the audio signals being measured are in perfect phase alignment and a -1 value when the signals are offset to the point that they can cause serious issues. These helpful meters cannot always alert you to phase issues in your projects, but they can be a big help in identifying and tracking them down. For instance, suppose two duplicate sounds become slightly offset in your arrangement, resulting in a very boxy and warped quality of sound. Obviously this is unwanted, and it happens because the two audio signals are not in perfect phase alignment. The correlation meter may not show anything to make you aware of this particular problem. Therefore we still need to be very aware of any small audible changes as we work, to avoid surprises like this one.

If a correlation meter does show that a problem exists in your mix, you may try (slightly) shifting one or more of the audio regions in your arrangement to alleviate the problem. If this does not work, you can use a simple gain plug-in to invert part of the signal, or even convert the signal to mono right there on the spot. Certain types of plug-ins may cause stereo imaging and phase correlation issues. If you are having a tough time tracking down where the phasing issue is originating from, you may want to bypass any stereo imaging/spread plug-ins to see if they are the cause. There are many ways a phasing issue may creep into one of your music projects. But knowing how they happen, being as proactive as possible to avoid them, and being systematic in your approach to isolating them when they occur will help you greatly in your battle against them. Always use a correlation meter! Cheers, OhmLab
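
Roughly speaking, a phase correlation meter reports a zero-lag normalised correlation between the left and right channels. The Python sketch below is a simplified model under that assumption (real meters typically average over a short sliding window, and implementations differ); the 1 kHz test tone, 48 kHz sample rate and 100 ms window are arbitrary choices. It compares a tone with delayed copies of itself: no delay reads +1, a half-cycle delay (24 samples) reads -1, a quarter-cycle delay (12 samples) reads about 0, and an eighth-cycle delay (6 samples) reads cos 45° ≈ 0.71.

```python
import math

SAMPLE_RATE = 48_000    # samples per second (assumed)
FREQ = 1_000            # test tone in Hz (assumed)
N = SAMPLE_RATE // 10   # 100 ms window = exactly 100 cycles of the tone


def tone(delay_samples=0):
    """A sine tone delayed by a whole number of samples."""
    return [math.sin(2 * math.pi * FREQ * (i - delay_samples) / SAMPLE_RATE)
            for i in range(N)]


def phase_correlation(left, right):
    """Zero-lag normalised correlation: +1 in phase, -1 polarity-inverted, 0 at 90 degrees."""
    num = sum(a * b for a, b in zip(left, right))
    den = math.sqrt(sum(a * a for a in left) * sum(b * b for b in right))
    return num / den if den else 0.0


left = tone()
for delay in (0, 6, 12, 24):
    print(delay, round(phase_correlation(left, tone(delay)), 3))
```

Because the reading depends on the delay relative to the period, the same small offset that barely moves the meter for a low bass note can cancel a higher frequency, which is one reason a single meter reading can miss frequency-specific phasing problems.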

Spearman's Rank Correlation Coefficient : ExamSolutions Maths Revision (16:33)

Go to http://www.examsolutions.net/ for the index, playlists and more maths videos on Spearman's rank correlation coefficient and other maths topics.
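
Spearman's rank correlation coefficient is Pearson's r computed on ranks, with tied values given the average of the ranks they share; when there are no ties it reduces to the familiar shortcut ρ = 1 - 6Σd² / (n(n² - 1)), where d is the difference between paired ranks. The minimal Python sketch below implements both forms; helper names and data are illustrative assumptions, not taken from the video. It also shows that a monotonic but nonlinear relationship such as y = x³ gets ρ = 1 while Pearson's r stays below 1.

```python
import math


def average_ranks(values):
    """Ranks 1..n; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1   # sorted positions start..end hold ranks start+1..end+1
        for k in range(start, end + 1):
            ranks[order[k]] = shared
        start = end + 1
    return ranks


def pearson_r(xs, ys):
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def spearman_rho(xs, ys):
    """General form: Pearson's r on (average) ranks; handles ties."""
    return pearson_r(average_ranks(xs), average_ranks(ys))


def spearman_shortcut(xs, ys):
    """1 - 6 * sum(d^2) / (n(n^2 - 1)); exact only when there are no ties."""
    n = len(xs)
    d2 = sum((a - b) ** 2 for a, b in zip(average_ranks(xs), average_ranks(ys)))
    return 1 - 6 * d2 / (n * (n * n - 1))


xs = [35, 23, 47, 17, 10, 43, 9, 6, 28]
ys = [30, 33, 45, 23, 8, 49, 12, 4, 31]
print(spearman_rho(xs, ys), spearman_shortcut(xs, ys))

values = list(range(1, 7))
cubes = [v ** 3 for v in values]
print(spearman_rho(values, cubes), pearson_r(values, cubes))
```

For the first data set the paired ranks differ by d = 2, -2, 1, 0, 1, -1, -1, 0, 0, so Σd² = 12 and the shortcut gives 1 - 72/720 = 0.9, matching the general form.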

- Actuarial science

- Analysis of variance

- Annals of Statistics

- Anscombe's quartet

- Arithmetic mean

- Autocorrelation

- Bar chart

- Bayes estimator

- Bayes factor

- Bayesian inference

- Bayesian probability

- Bias of an estimator

- Binomial regression

- Bioinformatics

- Biometrics

- Biostatistics

- Biplot

- Box plot

- Box–Jenkins

- Brownian covariance

- Cartography

- Categorical data

- Causality

- Census

- Chemometrics

- Chi-squared test

- Clinical trial

- Cluster analysis

- Cohen's kappa

- Confidence interval

- Confounding

- Consistent estimator

- Contingency table

- Control chart

- Copula (statistics)

- Correlation function

- Correlation ratio

- Correlogram

- Covariance

- Covariance matrix

- Credible interval

- Crime statistics

- Cross-correlation

- Data

- Data collection

- Demand curve

- Density estimation

- Distance correlation

- Econometrics

- Effect size

- Epidemiology

- Estimator

- Expected value

- Experiment

- Exponential family

- F-test

- Factor analysis

- Factorial experiment

- Failure rate

- Forest plot

- Francis Anscombe

- Francis Galton

- Frequency domain

- General linear model

- Genetic correlation

- Geometric mean

- Geostatistics

- Graphical model

- Grouped data

- Harmonic mean

- Histogram

- Human height

- Illusory correlation

- Index of dispersion

- Information entropy

- Interquartile range

- Isotonic regression

- JSTOR

- Karl Pearson

- Kendall's tau

- Kriging

- Kurtosis

- L-moment

- Linear dependence

- Linear regression

- Location parameter

- Logistic regression

- Logrank test

- Mann–Whitney U

- Maximum likelihood

- McNemar's test

- Mean

- Median

- Medical statistics

- Meta-analysis

- Methods engineering

- Mixed model

- Mode (statistics)

- Moment (mathematics)

- Monotone function

- Multiple correlation

- Mutual information

- National accounts

- Natural experiment

- Nonlinear regression

- Normal distribution

- Observational study

- Official statistics

- Opinion poll

- Optimal design

- Outlier

- Partial correlation

- Percentile

- Poisson regression

- Prior probability

- Probabilistic design

- Psychometrics

- Q-Q plot

- Quality control

- Quantile

- Quasi-experiment

- Questionnaire

- Radar chart

- Random assignment

- Random variable

- Range (statistics)

- Rank correlation

- Regression analysis

- Robust regression

- Robust statistics

- Run chart

- Scaled correlation

- Scatter plot

- Seasonal adjustment

- Shapiro–Wilk test

- Skewness

- Social statistics

- Spatial analysis

- Standard deviation

- Standard error

- Stationary process

- Statistical graphics

- Statistical power

- Statistical theory

- Statistics

- Stemplot

- Stratified sampling

- Student's t-test

- Subindependence

- Sufficient statistic

- Survey methodology

- Survival analysis

- Survival function

- Tautology (logic)

- Time domain

- Time series

- Total correlation

- Trend estimation

- Uncorrelated

- Variance

- Wald test

- Z-test

-

Statistics 101: Understanding Correlation

Statistics 101: Understanding Correlation In this video we discuss the basic concepts of another bivariate relationship; correlation. Previous videos examined covariance and in this lesson we tie the two concepts together. Correlation comes with certain caveats and we talk about those as well. Finally we walk through a simple example involving correlation and its interpretation. Enjoy! For my complete video library organized by playlist, please go to my video page here: http://www.youtube.com/user/BCFoltz/videos?flow=list&view;=1&live;_view=500&sort;=dd -

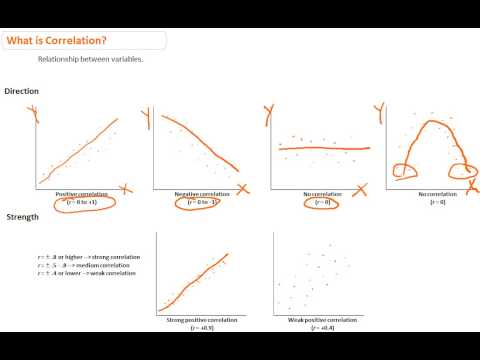

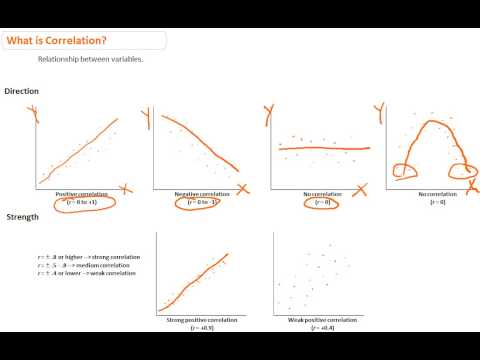

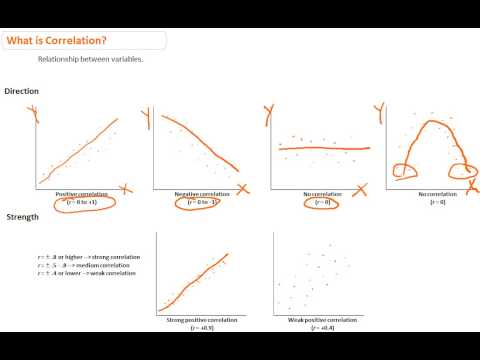

What Is Correlation?

Basic overview of correlation -

The Correlation Coefficient - Explained in Three Steps

The correlation coefficient is a really popular way of summarizing a scatter plot into a single number between -1 and 1. In this video, I'm showing how the correlation coefficient does this in three steps, and explain them without any formulas. This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.(http://creativecommons.org/licenses/by-nc/4.0/) -

Correlation & Regression Video 1

full course on : www.ngclasses.com. -

How Ice Cream Kills! Correlation vs. Causation

To make better decisions and improve your problem solving skills it is important to understand the difference between correlation and causation. -

Correlation Coefficient

A step by step problem on how to use the Correlation Coefficient formula. -

Correlation and causality | Statistical studies | Probability and Statistics | Khan Academy

Understanding why correlation does not imply causality (even though many in the press and some researchers often imply otherwise) Practice this lesson yourself on KhanAcademy.org right now: https://www.khanacademy.org/math/probability/statistical-studies/types-of-studies/e/types-of-statistical-studies?utm_source=YT&utm;_medium=Desc&utm;_campaign=ProbabilityandStatistics Watch the next lesson: https://www.khanacademy.org/math/probability/statistical-studies/types-of-studies/v/analyzing-statistical-study?utm_source=YT&utm;_medium=Desc&utm;_campaign=ProbabilityandStatistics Missed the previous lesson? https://www.khanacademy.org/math/probability/statistical-studies/types-of-studies/v/types-statistical-studies?utm_source=YT&utm;_medium=Desc&utm;_campaign=ProbabilityandStatistics Probability an... -

-

How to Calculate Pearson's Correlation Coefficient

How to Calculate Pearson's Correlation Coefficient by hand. Visit http://www.StatisticsHowTo.com for more videos and hundreds of how to articles for elementary statistics. -

The danger of mixing up causality and correlation: Ionica Smeets at TEDxDelft

Ionica Smeets (@ionicasmeets) is joining TEDxDelft Never Grow Up: A mathematician and science journalist with plenty of media experience. Using her vast knowledge and enthusiasm, she can explain everything about her favorite topics in science and statistics. She does it well on paper and face-to-face: She writes blogs, columns and books and is also asked to appear as a speaker, live, on television and on radio shows. Since 2006, Ionica has taken on the Internet with interesting and fun mathematics together with PhD Partner in Crime Jeanine Daems on the website wiskundemeisjes.nl. She and Jeanine now write a bi-weekly column in the Volkskrant about mathematics and the website also resulted in a book titled 'I Was Never Good At Math' (Ik was altijd heel slecht in wiskunde) in 2011. Ionica a... -

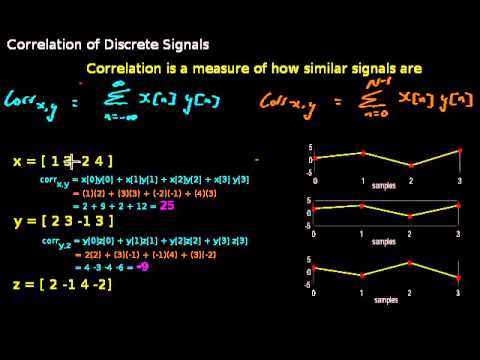

Correlation Explanation with Demo

Correlation provides a measure of similarity between two signals. This video explains process of correlating discrete signals and highlights when normalised correlation is required. -

Correlation analysis using Excel

How to run a correlation analysis using Excel and write up the findings for a report -

Phase Correlation Meter - Creating Tracks

This quick tutorial shares how to use a correlation meter, what to do with the results it gives you and how to troubleshoot and resolve phasing issues in your music. Phasing issues can haunt us as modern producers. From boxiness to completely missing audio, phase alignment problems are something you always want to avoid. Luckily, most DAWs have a correlation meter for you to use. These small utility plug-ins can be a real lifesaver, as unassuming as they may appear at first glance. They are very simple in their design, providing you with meter showing a range of -1 to 1 but most do not offer any kind of help or explanation on the surface. So we are here to explain how it all works. A correlation meter registers a +1 value when audio signals being measured are in perfect phase alignment ... -

Spearman's Rank Correlation Coefficient : ExamSolutions Maths Revision

Go to http://www.examsolutions.net/ for the index, playlists and more maths videos on Spearman's rank correlation coefficient and other maths topics. -

-

Pearson r Correlation in SPSS - How to Calculate and Interpret (Part 1)

Check out our next text, 'SPSS Cheat Sheet,' here: http://goo.gl/b8sRHa. Prime and 'Unlimited' members, get our text for free! (Only $4.99 otherwise, but will likely increase soon.)

For additional SPSS/Statistics videos:

SPSS Descriptive Statistics Videos: http://tinyurl.com/lyxnk72

SPSS Inferential Statistics Videos: http://tinyurl.com/lm9hpwc

Our four-part YouTube video series on regression: http://youtu.be/ubZT2Fl2UkQ

How to calculate the correlation coefficient in SPSS is covered in this video. The correlation is also tested for significance, and a scatterplot is constructed.

YouTube Channel: https://www.youtube.com/user/statisticsinstructor Channel Description: For step by step help with stati...
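
Testing a Pearson correlation for significance usually means testing H0: ρ = 0 with t = r√(n - 2) / √(1 - r²) on n - 2 degrees of freedom, assuming roughly bivariate-normal data. The Python sketch below uses hypothetical data (n = 10) and, to stay within the standard library, compares t with the two-tailed 5% critical value for 8 degrees of freedom (2.306, from a standard t table) instead of computing an exact p-value. It is not a reproduction of the SPSS output shown in the video.

```python
import math


def pearson_r(xs, ys):
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def t_statistic(r, n):
    """t for H0: rho = 0, with n - 2 degrees of freedom (undefined when |r| = 1)."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r * r)


xs = list(range(1, 11))
ys = [2, 1, 4, 3, 6, 5, 8, 7, 10, 9]   # hypothetical data
T_CRIT_DF8_TWO_TAILED_05 = 2.306       # from a t table; valid only for n = 10

r = pearson_r(xs, ys)
t = t_statistic(r, len(xs))
print(f"r = {r:.3f}, t = {t:.2f}, significant at 5%: {abs(t) > T_CRIT_DF8_TWO_TAILED_05}")
```

For other sample sizes the critical value changes, so a real analysis would look it up for n - 2 degrees of freedom or use a statistics package to get the p-value directly.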

How to calculate Karl Pearson Coefficient of Correlation Part 1 (in Hindi)

This video shows how to find the correlation between two variables using Karl Pearson's coefficient of correlation.

SPSS for questionnaire analysis: Correlation analysis

Basic introduction to correlation: how to interpret the correlation coefficient, and how to choose the right type of correlation measure for your situation. I could make this video really quickly and just show you Pearson's correlation coefficient, which is commonly taught in an introductory stats course. However, Pearson's correlation IS NOT always applicable, as it depends on whether your data satisfy certain conditions. So for correlation analysis, it's better to bring together all the types of correlation measures given in SPSS in one presentation. To support this site, I am giving the first 100 people to sign up access to the SPSS Newbies online course for $10: https://www.udemy.com/spss-for-newbies-part-1/?couponCode=10dollar

The Correlation Coefficient - Part 1

Just a quick little introduction and general overview of the correlation coefficient. I give the formula and then briefly discuss its properties.

Covariance and Correlation Coefficient Video

Video for finding the covariance and correlation coefficient by hand.

Correlations

Descriptions and examples of positive correlation, negative correlation, and no correlation.

Correlation and Regression

This clip describes what correlation represents and how to use a graphing calculator to determine the correlation of a set of data. It also provides steps for graphing scatterplots and the linear regression line, or best-fit line, for your data. Residuals are also explained. Most of the examples are done on the calculator. If the correlation r does not show up on the screen where it should, the following steps must be done:

1) Push the 2nd key, followed by the number 0 (which should take you to the catalog).

2) Press the x⁻¹ key (located under the math key). This will take you to the "d"s of the catalog.

3) Arrow down to the DiagnosticOn option and press ENTER.

4) Once that takes you back to the home screen, press ENTER again. The screen should say "done". Now when you br...
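
The quantities a graphing calculator reports for a linear regression (slope, intercept, r and r²), together with the residuals, can be reproduced with ordinary least squares in a few lines. The Python sketch below uses made-up data and does not reproduce any calculator's output format. For simple linear regression, r² computed as 1 - SSE/SST equals the square of Pearson's r, and r takes the sign of the slope.

```python
import math


def least_squares_line(xs, ys):
    """Ordinary least squares fit y = intercept + slope * x."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


xs = [1, 2, 3, 4, 5]
ys = [2, 4, 5, 4, 5]                                 # made-up data

intercept, slope = least_squares_line(xs, ys)
predicted = [intercept + slope * x for x in xs]
residuals = [y - p for y, p in zip(ys, predicted)]   # observed minus predicted

mean_y = sum(ys) / len(ys)
sse = sum(e * e for e in residuals)                  # unexplained variation
sst = sum((y - mean_y) ** 2 for y in ys)             # total variation
r_squared = 1 - sse / sst
r = math.copysign(math.sqrt(r_squared), slope)

print(f"y-hat = {intercept:.2f} + {slope:.2f}x")
print("residuals:", [round(e, 2) for e in residuals])
print(f"r = {r:.4f}, r^2 = {r_squared:.2f}")
```

The residuals of a least-squares line always sum to zero; plotting them against x is a quick check for curvature that a straight line misses.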

Statistics 101: Understanding Correlation

- Duration: 27:06

- Updated: 26 Jan 2013

- views: 183741

What Is Correlation?

- Duration: 6:56

- Updated: 05 Jun 2009

- views: 154715

The Correlation Coefficient - Explained in Three Steps

- Duration: 6:54

- Updated: 01 May 2014

- views: 95322

Correlation & Regression Video 1

- Duration: 27:36

- Updated: 11 Dec 2013

- views: 28282

How Ice Cream Kills! Correlation vs. Causation

- Duration: 5:27

- Updated: 13 Jul 2015

- views: 6694

Correlation Coefficient

- Duration: 9:15

- Updated: 14 Nov 2011

- views: 120127

Correlation and causality | Statistical studies | Probability and Statistics | Khan Academy

- Duration: 10:45

- Updated: 18 Aug 2011

- views: 337429

Linear Regression and Correlation - Introduction

- Duration: 14:48

- Updated: 12 Feb 2014

- views: 37940

How to Calculate Pearson's Correlation Coefficient

- Duration: 8:47

- Updated: 30 Aug 2013

- views: 170261

The danger of mixing up causality and correlation: Ionica Smeets at TEDxDelft

- Duration: 5:58

- Updated: 05 Nov 2012

- views: 81320

Correlation Explanation with Demo

- Duration: 10:12

- Updated: 24 Feb 2014

- views: 22251

Correlation analysis using Excel

- Duration: 11:43

- Updated: 19 Jan 2013

- views: 92487

Phase Correlation Meter - Creating Tracks

- Duration: 18:38

- Updated: 19 Sep 2015

- views: 2068

Spearman's Rank Correlation Coefficient : ExamSolutions Maths Revision

- Duration: 16:33

- Updated: 28 Feb 2015

- views: 8924

Linear Regression and Correlation - Example

- Duration: 24:59

- Updated: 13 Feb 2014

- views: 79077

Pearson r Correlation in SPSS - How to Calculate and Interpret (Part 1)

- Duration: 5:43

- Updated: 16 Sep 2013

- views: 55184

How to calculate Karl Pearson Coefficient of Correlation Part 1 (in Hindi)

- Duration: 24:40

- Updated: 03 Dec 2013

- views: 11522

SPSS for questionnaire analysis: Correlation analysis

- Duration: 20:01

- Updated: 24 Dec 2013

- views: 155570

The Correlation Coefficient - Part 1

- Duration: 8:42

- Updated: 22 Feb 2011

- views: 44311

Pearson's Product Moment Correlation Coefficient : ExamSolutions

- Duration: 14:10

- Updated: 05 May 2011

- views: 93151

Covariance and Correlation Coefficient Video

- Duration: 7:01

- Updated: 03 Jun 2014

- views: 21574

Correlations

- Duration: 5:22

- Updated: 25 Feb 2009

- views: 31547

Correlation and Regression

- Duration: 9:35

- Updated: 29 Jan 2010

- views: 115100

correlation weight loss and anemia

50781

correlation between walking and weight loss

82779

correlation between weight loss and anemia

13024

Spurious Correlations

Crazy correlations! Students can analyze different correlations and learn the difference between correlation and causation.

cPacket Networks' Threat Vector Correlation - RSA San Francisco, 2016

Julia Reilly discusses how cPacket can work in conjunction with your security tools to provide critical analytics to your InfoSec team. Recorded at RSA San Francisco, 2016.

14-5 Simple Linear Regression in SPSS

Table of Contents: 03:59 - SPSS Output: ANOVA for r²; 05:35 - SPSS Output: Model Parameters (the a and b values); 06:37 - Prediction; 08:06 - Correlation Regression with SPSS; 09:19 - Correlation Regression with SPSS; 09:46 - Correlation Regression with SPSS; 10:32 - Scatterplot for Regression; 11:39 - Correlation Regression with SPSS

13-6 Nonparametric Correlation

Table of Contents: 00:18 - Nonparametric Correlation; 02:33 - Doing a Correlation; 03:07 - Correlation Output

13-5 Correlation in SPSS

Table of Contents: 02:29 - Correlation Output; 03:10 - Reporting the Results

13-4 Correlation by Hand

Table of Contents: 00:16 - Computing Correlation Coefficient; 00:46 - Computing Correlation Coefficient; 01:37 - Computing Correlation Coefficient

Image Correlation for Shape, Motion and Deformation Measurements Basic Concepts,Theory and Applicati

13-2 The Correlation Coefficient

Table of Contents: 01:18 Type of Relationships; 02:01 Type of Relationships; 02:44 Strength of a Relationship; 04:52 Interpreting Correlation; 05:48 Interpreting Correlation

13-1 Relationships Between Variables

Table of Contents: 00:11 Storks and Babies; 01:17 What is a Correlation?; 03:12 What is a Correlation?; 04:52 Correlation and Causality; 06:11 Correlation and Causality; 07:33 Correlational v. Experimental; 10:02 Correlational Research

Bio-Membrane Image Correlation Spectroscopy (ICS) Demo Video

This video demonstrates the functionality of our CMPUT 401 project, Bio-Membrane Image Correlation Spectroscopy. The software runs either as a local Windows or Linux application or as a web server; it computes correlations on bio-membrane images from microscopes and outputs a series of graphs and values. More information on this project is available in the GitHub repository at https://github.com/nklose/ICS

Correlation 2

Correlation Between Depression & Stress - Dr. Piskuric

Spearman rank correlation coefficient

Summary: 1) Calculation of the Spearman coefficient (3:44); 2) Statistical test (6:13); 3) Interpretation (9:27); 4) Assumptions and limitations (10:06)
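When no values are tied, the Spearman coefficient can be computed from the squared differences between paired ranks, ρ = 1 − 6Σd² / (n(n² − 1)), and one common form of the statistical test converts ρ into a t statistic with n − 2 degrees of freedom. The sketch below assumes distinct values and uses that t approximation; the video may instead rely on exact critical-value tables, which are usually preferred for very small samples. The function names and data are hypothetical.

```python
import math


def ranks_no_ties(values):
    """Rank 1..n with the smallest value ranked 1; assumes all values are distinct."""
    if len(set(values)) != len(values):
        raise ValueError("the shortcut formula assumes no tied values")
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0] * len(values)
    for rank, i in enumerate(order, start=1):
        ranks[i] = rank
    return ranks


def spearman_rho(xs, ys):
    """Spearman's rho via 1 - 6 * sum(d^2) / (n (n^2 - 1)); exact only without ties."""
    n = len(xs)
    rx, ry = ranks_no_ties(xs), ranks_no_ties(ys)
    d2 = sum((a - b) ** 2 for a, b in zip(rx, ry))
    return 1 - 6 * d2 / (n * (n * n - 1))


def spearman_t(rho, n):
    """t statistic with n - 2 degrees of freedom for testing rho = 0 (large-sample approximation)."""
    return rho * math.sqrt((n - 2) / (1 - rho ** 2))


# Hypothetical paired scores: ranks are (1,2,3,4,5) and (1,3,2,5,4), so sum(d^2) = 4
x = [10, 20, 30, 40, 50]
y = [12, 25, 21, 48, 39]
rho = spearman_rho(x, y)
print(round(rho, 4), round(spearman_t(rho, len(x)), 3))  # 0.8 2.309
```

The resulting t value would then be compared with a two-tailed critical value from a t table with n − 2 degrees of freedom.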

rank correlation

Correlation Between Receiving a College Degree and Employment Rates

After Effects Animation Research Project - SYG 2000

MATH 533 / MATH533 / MATH/533 Week 7 Project Part C Regression and Correlation Analysis

MATH 533 Week 7 Project Part C Regression and Correlation Analysis http://class-tutor.com/doc/math-533/math-533-week-7-project-part-c-regression-and-correlation-analysis/ Using MINITAB, perform the regression and correlation analysis for the data on CREDIT BALANCE (Y) and SIZE (X) by answering the following. Generate a scatterplot for CREDIT BALANCE vs. SIZE, including the graph of the “best fit” line. Interpret. Determine the equation of the “best fit” line, which describes the relationship between CREDIT BALANCE and SIZE. Determine the coefficient of correlation. Interpret. Determine the coefficient of determination. Interpret. Test the utility of this regression model (use a two-tailed test with α = .05). Interpret your re...

MATH 221 / MATH221 / MATH/221 WEEK 2 ILAB CORRELATION AND REGRESSION

MATH 221 Week 2 iLab Correlation and Regression. Complete course guide available here: http://class-tutor.com/doc/math-221/math-221-week-2-ilab-correlation-and-regression/ Homework description: MATH 221 Week 2 iLab Correlation and Regression gives the solution to: 1. Create a pie chart for the variable Car

Correlation and Regression Applications for Industrial Organizational Psychology and Management Orga

The Hamptons correlation: What beach house prices say about the market

Nick Colas of ConvergEx Group has taken a closer look at real estate out in the Hamptons for the past several years and has drawn some interesting conclusions about the Wall Streeters who head there for the summer and the markets they largely leave behind.

PSY 325 Week 4 Discussions Correlation

BUY HERE⬊ http://www.homeworkmade.com/products-28/psy-325-new-ashford/psy-325-week-4-discussions-correlation/ PSY 325 Week 4 Discussions Correlation

correlation weight loss and anemia

- Duration: 2:22

- Updated: 30 Mar 2016

- views: 0

correlation between walking and weight loss

- Duration: 2:22

- Updated: 30 Mar 2016

- views: 0

correlation between weight loss and anemia

- Duration: 2:22

- Updated: 30 Mar 2016

- views: 0

Spurious Correlations

- Duration: 3:03

- Updated: 30 Mar 2016

- views: 0

cPacket Networks' Threat Vector Correlation - RSA San Francisco, 2016

- Duration: 3:34

- Updated: 30 Mar 2016

- views: 0

14-5 Simple Linear Regression in SPSS

- Duration: 11:55

- Updated: 29 Mar 2016

- views: 0

13-6 Nonparametric Correlation

- Duration: 4:34

- Updated: 29 Mar 2016

- views: 0

13-5 Correlation in SPSS

- Duration: 5:06

- Updated: 29 Mar 2016

- views: 0

13-4 Correlation by Hand

- Duration: 3:06

- Updated: 29 Mar 2016

- views: 0

Image Correlation for Shape, Motion and Deformation Measurements Basic Concepts,Theory and Applicati

- Duration: 0:32

- Updated: 29 Mar 2016

- views: 0

13-2 The Correlation Coefficient

- Duration: 7:02

- Updated: 29 Mar 2016

- views: 0

13-1 Relationships Between Variables

- Duration: 10:55

- Updated: 29 Mar 2016

- views: 0

Bio-Membrane Image Correlation Spectroscopy (ICS) Demo Video

- Duration: 9:27

- Updated: 29 Mar 2016

- views: 5

Correlation 2

- Duration: 1:05

- Updated: 29 Mar 2016

- views: 1

Correlation Between Depression & Stress - Dr. Piskuric

- Duration: 1:18

- Updated: 29 Mar 2016

- views: 0

Spearman rank correlation coefficient

- Duration: 13:36

- Updated: 29 Mar 2016

- views: 16

rank correlation

- Duration: 6:42

- Updated: 29 Mar 2016

- views: 2

Correlation Between Receiving a College Degree and Employment Rates

- Duration: 8:00

- Updated: 29 Mar 2016

- views: 2

MATH 533 / MATH533 / MATH/533 Week 7 Project Part C Regression and Correlation Analysis

- Duration: 0:21

- Updated: 29 Mar 2016

- views: 2

MATH 221 / MATH221 / MATH/221 WEEK 2 ILAB CORRELATION AND REGRESSION

- Duration: 0:21

- Updated: 29 Mar 2016

- views: 0

Correlation and Regression Applications for Industrial Organizational Psychology and Management Orga

- Duration: 0:17

- Updated: 29 Mar 2016

- views: 0

The Hamptons correlation: What beach house prices say about the market

- Duration: 3:08

- Updated: 29 Mar 2016

- views: 0

PSY 325 Week 4 Discussions Correlation

- Duration: 0:09

- Updated: 28 Mar 2016

- views: 0

Statistics 20: Multiple Regression and Correlation

In today's class we discussed multiple regression and correlation. We look at the partial and semi-partial correlation coefficients and the partial regression coefficients, and we show how they can be calculated using the simple correlation coefficients and appropriate standard deviations. Sorry about the breaks in the video; I had some battery problems and also forgot to restart the camera. The formula that was provided for the coefficient of determination needs to be squared. This change has been reflected in the updated lecture slides, available at http://wknapp.com/classes
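The first-order formulas below express the partial correlation, the semi-partial (part) correlation, the partial regression coefficient, and R² for two predictors entirely in terms of simple correlations and standard deviations, in the spirit of the description. This is a minimal sketch of the standard textbook formulas, not a transcription of the lecture slides; the function names and input correlations are hypothetical. `r_squared_two_predictors` returns the squared multiple correlation directly.

```python
import math


def partial_r(r_xy, r_xz, r_yz):
    """Partial correlation of x and y with z removed from both."""
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


def semipartial_r(r_y1, r_y2, r_12):
    """Semi-partial correlation of y with x1 after removing x2 from x1 only."""
    return (r_y1 - r_y2 * r_12) / math.sqrt(1 - r_12 ** 2)


def partial_slope(r_y1, r_y2, r_12, s_y, s_1):
    """Unstandardized coefficient b1 in the least-squares regression of y on x1 and x2."""
    beta_1 = (r_y1 - r_y2 * r_12) / (1 - r_12 ** 2)  # standardized coefficient
    return beta_1 * s_y / s_1


def r_squared_two_predictors(r_y1, r_y2, r_12):
    """Squared multiple correlation R^2 of y on x1 and x2."""
    return (r_y1 ** 2 + r_y2 ** 2 - 2 * r_y1 * r_y2 * r_12) / (1 - r_12 ** 2)


# Hypothetical simple correlations among y, x1 and x2
r_y1, r_y2, r_12 = 0.50, 0.40, 0.30
print(round(partial_r(r_y1, r_12, r_y2), 4))   # y with x1, controlling for x2
print(round(semipartial_r(r_y1, r_y2, r_12), 4))
print(round(r_squared_two_predictors(r_y1, r_y2, r_12), 4))
```

A useful consistency check: R² equals r_y2² plus the squared semi-partial correlation of y with x1, i.e. the extra variance x1 explains once x2 is already in the model.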

Forex Correlation Trading Idea

In this video, I discuss a simple concept that banks have been using for years.

LoadRunner Training - Manual correlation - LoadRunner videos

LoadRunner Online Training - Manual correlation - LoadRunner Training videos. You can also subscribe to 27 HD-quality videos on HP LoadRunner here: http://www.qatestingtraining.com/course/hp-loadrunner-training-online/ Packages start from $75 for 45 days.

Simple Linear Regression, Coefficient of Determination, and Correlation Coefficient Explained

This tutorial discusses the basic concepts of simple linear regression and how to calculate the slope and y-intercept to get the regression line. The coefficient of determination and the correlation coefficient are also calculated and discussed, as are the concepts of Total Variation (SST), Explained Variation (SSR), and Unexplained Variation (SSE). Finally, a hypothesis test on the slope is conducted using both the critical value approach and the p-value approach.
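The quantities named in this description fit together in a few lines: the least-squares slope b = Sxy / Sxx and intercept a = ȳ − b·x̄, the decomposition SST = SSR + SSE, the coefficient of determination r² = SSR / SST, and the t statistic for testing a zero slope. The sketch below is a minimal standard-library version under those textbook definitions, with a hypothetical function name and data; it is not the tutorial's own worked example.

```python
import math


def simple_linear_regression(xs, ys):
    """Least-squares line y_hat = a + b*x with SST, SSR, SSE, r^2, r and the slope t statistic."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    b = sxy / sxx                      # slope
    a = my - b * mx                    # y-intercept
    fitted = [a + b * x for x in xs]
    sst = sum((y - my) ** 2 for y in ys)                 # total variation
    ssr = sum((f - my) ** 2 for f in fitted)             # explained variation
    sse = sum((y - f) ** 2 for y, f in zip(ys, fitted))  # unexplained variation
    r2 = ssr / sst                                       # coefficient of determination
    r = math.copysign(math.sqrt(r2), b)                  # r takes the sign of the slope
    # Standard error of the slope and t for H0: slope = 0, with n - 2 degrees of freedom
    se_b = math.sqrt(sse / (n - 2) / sxx)
    t = b / se_b if se_b > 0 else math.copysign(math.inf, b)
    return {"a": a, "b": b, "SST": sst, "SSR": ssr, "SSE": sse, "r2": r2, "r": r, "t": t}


# Hypothetical data
res = simple_linear_regression([1, 2, 3, 4, 5], [2, 4, 5, 4, 5])
print(round(res["a"], 3), round(res["b"], 3), round(res["r2"], 3), round(res["t"], 3))  # 2.2 0.6 0.6 2.121
```

For the critical value approach, |t| is compared with the two-tailed t critical value for n − 2 degrees of freedom; the p-value approach converts t into a tail probability, which needs a t distribution function and is left out here to stay within the standard library.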

Statistics - How to find Rank Correlation by Spearman's Method (in Hindi)

This video explains what rank correlation is and how to find the rank correlation by Spearman's method.

Correlation Formula Derivation, Karl Pearson's Coefficient

Correlation Formula Derivation, Karl Pearson's Coefficient video by Edupedia World (www.edupediaworld.com). All Rights Reserved.
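A derivation of this kind usually connects the covariance form of Karl Pearson's coefficient with the raw-sum form used for hand calculation. The divisor (n or n − 1) cancels as long as the same one is used in the covariance and both standard deviations; expanding Σ(xᵢ − x̄)(yᵢ − ȳ) = Σxᵢyᵢ − (Σxᵢ)(Σyᵢ)/n, doing the same for the squared deviations, and multiplying numerator and denominator by n gives the chain below. The numerical check at the end uses a hypothetical five-point data set and is included only to confirm the arithmetic, not as material from the video.

```latex
r = \frac{\operatorname{cov}(x,y)}{s_x\,s_y}
  = \frac{\sum_{i=1}^{n}(x_i-\bar{x})(y_i-\bar{y})}
         {\sqrt{\sum_{i=1}^{n}(x_i-\bar{x})^2}\,\sqrt{\sum_{i=1}^{n}(y_i-\bar{y})^2}}
  = \frac{n\sum x_i y_i-\sum x_i\sum y_i}
         {\sqrt{n\sum x_i^2-\left(\sum x_i\right)^2}\,\sqrt{n\sum y_i^2-\left(\sum y_i\right)^2}}

% Check with x = (1,2,3,4,5), y = (2,4,5,4,5):
% \sum x = 15,\ \sum y = 20,\ \sum xy = 66,\ \sum x^2 = 55,\ \sum y^2 = 86
r = \frac{5(66) - 15(20)}{\sqrt{5(55) - 15^2}\,\sqrt{5(86) - 20^2}}
  = \frac{30}{\sqrt{50}\,\sqrt{30}} \approx 0.7746
```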

LoadRunner Training Tutorial #7 - Vugen Correlation

LoadRunner LIVE Online Training @ http://www.softwaretestinghelp.org LoadRunner VuGen Correlation: LoadRunner training with detailed LoadRunner tutorials. Correlation is done for dynamic values, that is, values returned by the server in response to a request. Want to learn software testing from the experts? Visit: http://softwaretestinghelp.org
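In load testing, "correlation" has a different meaning from the statistical one: a value the server generates dynamically (a session ID or token, say) is captured from one response and substituted into later requests, so that a replayed script does not send the stale value captured at recording time. VuGen provides its own functions for this; the plain-Python sketch below only illustrates the idea of capturing text between a left and a right boundary string, and the response text, field name, and helper `capture_between` are all hypothetical.

```python
import re


def capture_between(response_text, left, right):
    """Return the first substring found between the left and right boundary strings."""
    pattern = re.escape(left) + "(.*?)" + re.escape(right)
    match = re.search(pattern, response_text, flags=re.DOTALL)
    if match is None:
        raise LookupError(f"no value found between {left!r} and {right!r}")
    return match.group(1)


# Hypothetical login response containing a server-generated session token
login_response = '<input type="hidden" name="sessionToken" value="a1b2c3d4">'
token = capture_between(login_response, 'name="sessionToken" value="', '"')

# Build the next request with the captured value instead of the recorded one
recorded_body = "action=view&sessionToken={sessionToken}"
next_body = recorded_body.format(sessionToken=token)
print(next_body)  # action=view&sessionToken=a1b2c3d4
```

Failing loudly when the boundaries are not found mirrors what a load test generally needs: a missing dynamic value usually means the previous request failed, and silently replaying the recorded value would hide that.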

Spearman's Rank Correlation: Case of Tied Ranks

Spearman's Rank Correlation: Case of Tied Ranks video by Edupedia World (www.edupediaworld.com). All Rights Reserved.
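With tied observations, the usual treatment is to give each tied value the average of the ranks it would otherwise occupy. The shortcut 1 − 6Σd² / (n(n² − 1)) is then only approximate, so a safe route is to compute the Pearson correlation of the two rank lists directly. A minimal sketch under that approach; whether the video applies a tie-correction term to the shortcut instead is not stated, and the function names and data are hypothetical.

```python
import math


def average_ranks(values):
    """Ranks 1..n, where each group of tied values gets the mean of the positions it occupies."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across the run of values equal to values[order[i]]
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(a, b):
    """Pearson correlation of two equal-length lists."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    sab = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    saa = sum((x - ma) ** 2 for x in a)
    sbb = sum((y - mb) ** 2 for y in b)
    return sab / math.sqrt(saa * sbb)


def spearman_with_ties(xs, ys):
    """Spearman's rho as the Pearson correlation of the average ranks."""
    return pearson(average_ranks(xs), average_ranks(ys))


# Hypothetical data with one tie in the second variable
print(average_ranks([2, 3, 3, 5, 6]))                                # [1.0, 2.5, 2.5, 4.0, 5.0]
print(round(spearman_with_ties([1, 2, 3, 4, 5], [2, 3, 3, 5, 6]), 4))  # 0.9747
```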

Correlation and Multiple Regression in Excel

This video walks you through how to run correlation and multiple regression in Excel.

Percent Change and Correlation Tables - p.8 Data Analysis with Python and Pandas Tutorial

Welcome to Part 8 of our Data Analysis with Python and Pandas tutorial series. In this part, we're going to do some of our first manipulations on the data. Tutorial sample code and text: http://pythonprogramming.net/percent-change-correlation-data-analysis-python-pandas-tutorial/ http://pythonprogramming.net https://twitter.com/sentdex
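A percent-change series divides each period-to-period difference by the earlier value, and a correlation table lists the pairwise Pearson coefficients between columns. In pandas this pairing is commonly written as `DataFrame.pct_change()` followed by `DataFrame.corr()`, though the description does not say exactly which calls the tutorial uses. To keep the example dependency-free, the sketch below reimplements both steps in plain Python; the series names and prices are hypothetical.

```python
import math


def pct_change(series):
    """Fractional change from each value to the next (one element shorter than the input)."""
    return [(cur - prev) / prev for prev, cur in zip(series, series[1:])]


def pearson(a, b):
    """Pearson correlation of two equal-length lists."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    sab = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    saa = sum((x - ma) ** 2 for x in a)
    sbb = sum((y - mb) ** 2 for y in b)
    return sab / math.sqrt(saa * sbb)


def correlation_table(columns):
    """Pairwise Pearson correlations keyed by (row name, column name)."""
    return {(p, q): pearson(columns[p], columns[q]) for p in columns for q in columns}


# Hypothetical prices for three series over five periods
prices = {
    "A": [100, 102, 101, 105, 107],
    "B": [50, 51, 50.5, 52, 53.5],
    "C": [20, 19, 19.5, 18, 17],
}
changes = {name: pct_change(values) for name, values in prices.items()}
table = correlation_table(changes)
print(" " * 5 + "  ".join(f"{q:>6}" for q in changes))
for p in changes:
    print(f"{p:>3}  " + "  ".join(f"{table[(p, q)]:+6.3f}" for q in changes))
```

Correlating percent changes rather than raw prices is the usual choice for price series: two series that merely trend upward together can show a high raw-price correlation even when their period-to-period moves are unrelated.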

Stats 21 Covariance and Correlation of Joint Random Variables

Definitions, examples, and how to calculate covariances and correlations for two random variables. If you find these videos useful, I hope that you will consider signing up for my online statistics workshop on Udemy, which contains additional videos and lots of problems to help you apply and reinforce the important concepts: https://www.udemy.com/statshelp/?couponCode=coefficient
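For two discrete random variables, the definitions reduce to expectations over the joint distribution: Cov(X, Y) = E[XY] − E[X]E[Y] and Corr(X, Y) = Cov(X, Y) / √(Var(X)·Var(Y)). The sketch below evaluates them from a joint probability table; the table is hypothetical, and `fractions.Fraction` is used only so the intermediate moments stay exact.

```python
import math
from fractions import Fraction


def moments(joint):
    """E[X], E[Y], Var(X), Var(Y), Cov(X, Y) for a joint pmf given as {(x, y): p}."""
    # Exact check, intended for Fraction probabilities
    if sum(joint.values()) != 1:
        raise ValueError("probabilities must sum to 1")
    ex = sum(p * x for (x, _), p in joint.items())
    ey = sum(p * y for (_, y), p in joint.items())
    ex2 = sum(p * x * x for (x, _), p in joint.items())
    ey2 = sum(p * y * y for (_, y), p in joint.items())
    exy = sum(p * x * y for (x, y), p in joint.items())
    return ex, ey, ex2 - ex ** 2, ey2 - ey ** 2, exy - ex * ey


def correlation(joint):
    """Cov(X, Y) / sqrt(Var(X) * Var(Y))."""
    _, _, var_x, var_y, cov = moments(joint)
    return float(cov) / math.sqrt(float(var_x) * float(var_y))


# Hypothetical joint pmf: P(0,0) = 1/4, P(0,1) = 1/4, P(1,1) = 1/2
joint = {(0, 0): Fraction(1, 4), (0, 1): Fraction(1, 4), (1, 1): Fraction(1, 2)}
print(moments(joint)[4])             # 1/8
print(round(correlation(joint), 4))  # 0.5774
```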

Bivariate, Zero Order Correlation Tutorial

http://thedoctoraljourney.com/ This tutorial defines a bivariate, zero order correlation, provides examples for when this analysis might be used by a researcher, walks through the process of conducting this analysis, and discusses how to set up an SPSS file and write an APA results section for this analysis. For more statistics, research and SPSS tools, visit http://thedoctoraljourney.com/.

9. Biostatistics lecture - Correlation coefficient

This biostatistics lecture explains the correlation coefficient and its use in statistical analysis. For more information, log on to http://shomusbiology.weebly.com/ Download the study materials here: http://shomusbiology.weebly.com/bio-materials.html

Canonical Correlation in SPSS

Lecture 21: Covariance and Correlation | Statistics 110

We introduce covariance and correlation, and show how to obtain the variance of a sum, including the variance of a Hypergeometric random variable.
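The variance of a sum picks up a covariance term for every pair of summands: Var(X + Y) = Var(X) + Var(Y) + 2 Cov(X, Y), and more generally Var(ΣIⱼ) = ΣVar(Iⱼ) + Σ over i ≠ j of Cov(Iᵢ, Iⱼ). Writing a Hypergeometric count (successes in n draws without replacement from N items, K of them successes) as a sum of n indicator variables yields its variance. The sketch below checks that indicator argument against a direct computation from the probability mass function and against the closed form n·p·(1 − p)·(N − n)/(N − 1) with p = K/N; the function names and parameter values are illustrative assumptions.

```python
import math
from fractions import Fraction


def hypergeom_var_from_pmf(N, K, n):
    """Variance computed directly from P(X = k) = C(K, k) C(N - K, n - k) / C(N, n)."""
    total = math.comb(N, n)
    support = range(max(0, n - (N - K)), min(K, n) + 1)
    pmf = {k: Fraction(math.comb(K, k) * math.comb(N - K, n - k), total) for k in support}
    mean = sum(k * p for k, p in pmf.items())
    return sum((k - mean) ** 2 * p for k, p in pmf.items())


def hypergeom_var_from_indicators(N, K, n):
    """Variance of I_1 + ... + I_n, where I_j marks a success on draw j (assumes N >= 2)."""
    p = Fraction(K, N)
    var_one = p * (1 - p)                                  # each I_j is Bernoulli(K/N)
    cov_pair = Fraction(K * (K - 1), N * (N - 1)) - p * p  # Cov(I_i, I_j) for i != j
    return n * var_one + n * (n - 1) * cov_pair            # n variances + n(n-1) ordered pairs


for N, K, n in [(10, 4, 3), (52, 13, 5)]:
    direct = hypergeom_var_from_pmf(N, K, n)
    via_sum = hypergeom_var_from_indicators(N, K, n)
    closed = n * Fraction(K, N) * (1 - Fraction(K, N)) * Fraction(N - n, N - 1)
    print(N, K, n, direct, direct == via_sum == closed)
```

The covariance between two draws is negative, because drawing a success lowers the chance of another; that is where the finite-population factor (N − n)/(N − 1) comes from.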

Regression And Correlation

LoadRunner Correlation

Statistics 20: Multiple Regression and Correlation

- Duration: 61:17

- Updated: 13 Dec 2012

- views: 7003

Forex Correlation Trading Idea

- Duration: 22:25

- Updated: 05 Jan 2015

- views: 3883

LoadRunner Training - Manual correlation - LoadRunner videos

- Duration: 30:15

- Updated: 09 Aug 2013

- views: 16562

Simple Linear Regression, Coefficient of Determination, and Correlation Coefficient Explained

- Duration: 45:33

- Updated: 05 May 2015

- views: 10840

Statistics - How to find Rank Correlation by Spearman's Method (in Hindi)

- Duration: 20:03

- Updated: 29 Nov 2013

- views: 4994

Correlation Formula Derivation, Karl Pearson's Coefficient

- Duration: 35:55

- Updated: 13 Dec 2014

- views: 4525

LoadRunner Training Tutorial #7 - Vugen Correlation

- Duration: 22:39

- Updated: 11 Dec 2013

- views: 26585

Spearman's Rank Correlation: Case of Tied Ranks

- Duration: 21:47

- Updated: 19 Dec 2014

- views: 4694

Correlation and Multiple Regression in Excel

- Duration: 33:47

- Updated: 30 Apr 2014

- views: 29071

Percent Change and Correlation Tables - p.8 Data Analysis with Python and Pandas Tutorial

- Duration: 20:20

- Updated: 05 Oct 2015

- views: 2457

Stats 21 Covariance and Correlation of Joint Random Variables

- Duration: 24:56

- Updated: 04 Jan 2014

- views: 7800

Bivariate, Zero Order Correlation Tutorial

- Duration: 66:09

- Updated: 26 Aug 2013

- views: 6003

9. Biostatistics lecture - Correlation coefficient

- Duration: 29:57

- Updated: 12 Mar 2014

- views: 4282

Canonical Correlation in SPSS

- Duration: 39:29

- Updated: 28 Apr 2015

- views: 4599

Lecture 21: Covariance and Correlation | Statistics 110

- Duration: 49:26

- Updated: 29 Apr 2013

- views: 22975

CFA Level II 2014 - Reading 11 - Correlation and Regression

- Duration: 155:30

- Updated: 05 Mar 2012

- views: 30616

Regression And Correlation

- Duration: 20:55

- Updated: 22 Feb 2012

- views: 36869

LoadRunner Correlation

- Duration: 69:11

- Updated: 18 Dec 2015

- views: 315

Statistics 101: Understanding Correlation

- published: 26 Jan 2013

- views: 183741

What Is Correlation?

- published: 05 Jun 2009

- views: 154715

The Correlation Coefficient - Explained in Three Steps

- published: 01 May 2014

- views: 95322

Correlation & Regression Video 1

- published: 11 Dec 2013

- views: 28282

How Ice Cream Kills! Correlation vs. Causation

- published: 13 Jul 2015

- views: 6694

Correlation Coefficient

- published: 14 Nov 2011

- views: 120127

Correlation and causality | Statistical studies | Probability and Statistics | Khan Academy

- published: 18 Aug 2011

- views: 337429

Linear Regression and Correlation - Introduction

- published: 12 Feb 2014

- views: 37940

How to Calculate Pearson's Correlation Coefficient

- published: 30 Aug 2013

- views: 170261

The danger of mixing up causality and correlation: Ionica Smeets at TEDxDelft

- published: 05 Nov 2012

- views: 81320

Correlation Explanation with Demo

- published: 24 Feb 2014

- views: 22251

Correlation analysis using Excel

- published: 19 Jan 2013

- views: 92487

Phase Correlation Meter - Creating Tracks

- published: 19 Sep 2015

- views: 2068

Spearman's Rank Correlation Coefficient : ExamSolutions Maths Revision

- published: 28 Feb 2015

- views: 8924
