OMG! Ubuntu ran a postmortem yesterday: Why Did Geary’s Fundraiser Fail? It’s a question we’ve been asking ourselves at Yorba, obviously. Quite a number of people have certainly stepped forward to offer their opinions, in forums and comment boards and social media and via email. OMG!’s article is the first attempt I’ve encountered at a complete list of theories.

Although I didn’t singlehandedly craft and execute the entire Geary crowdfunding campaign, I organized it and held its hand over those thirty days. Ultimately I take responsibility for the end result. That’s why I’d like to respond to OMG!’s theories as well as offer a few of my own.

Some preliminary throat-clearing

Let me state a few things before I tackle the various theories.

First, it’s important to understand that the Geary campaign was a kind of experiment. We wanted to know if crowdfunding was a potential route for sustaining open-source development. We weren’t campaigning to create a new application; Geary exists today and has been under development for two years now. Unlike OpenShot and VLC, we weren’t porting Geary to Windows or the Mac; we wanted to improve the Linux experience. And we had no plans to use the raised money as capital to later sell a product or service, which is the usual route for most crowdfunded projects. Our pitch was simply this: donate money so we can make Geary on Linux even better than it is today.

Also, we didn’t go into this campaign thinking Yorba “deserved” the money. We weren’t asking for the community to reward us for what we’ve done. We were asking the community to help fund the things we wanted to develop and share.

Nor did we think that everyone in the FOSS world needed to come to our aid. Certainly we would’ve appreciated that, but our goal was to entice current and prospective Geary users to open their wallets and donate.

I hope you keep that in mind as I go through OMG!’s list of theories.

OMG!’s possible reasons

“$100,000 was too much”

This was by far the most voiced complaint about the campaign. It was also the most frustrating because of its inexact nature: too much compared to what? When I asked commenters to elucidate, I either never heard back or got the hand-wavy response “It’s just too much for an email client.”

It’s important to point out (and we tried!) that Yorba was not asking you for $100,000; we were asking the community for a lot of small donations. Your email account is your padlock on the Internet. Just about every online account you hold is keyed to it. It’s also the primary way people and companies will communicate with you on the Internet. Is a great email experience (a program you will use every day, often for hours every day) worth $10, $25, $50?

Another point I tried to make: How much did it cost to produce Thunderbird? Ten thousand, fifteen thousand dollars? According to Ohloh, Thunderbird cost $18 million to develop. Even if that’s off by a factor of ten (and I’m not sure it is) we were asking for far less. (Incidentally, Ohloh puts Geary’s current development cost at $380,000. I can attest that’s not far off the mark.) Writing a kickin’ video game, a Facebook clone, or an email client takes developer time, and that’s what costs money, whether the software is dazzling, breathtaking, revolutionary, disruptive, or merely quietly useful in your daily routine.

The last Humble Indie Bundle earned $2.65 million in two weeks. Linux users regularly contribute 25% of the Humble Haul. Is that too much for a few games? Not at all.

“The Proposition Wasn’t Unique”

I agree, there are a lot of email client options for Linux. What I don’t see are any that look, feel, or interact quite like Geary, and that’s one reason Yorba started this project.

We were in a bind on this matter. We wanted the Geary campaign to be upbeat, positive, and hopeful. We saw no need to tear down other projects by name. We preferred to talk about what Geary offers and what it could grow into rather than point-by-point deficiencies in other email clients. (Just my generic mention in the video of Geary organizing by conversations rather than “who-replied-to-whom” threading was criticized.)

That there are so many email clients for Linux does not necessarily mean the email problem is “solved”. It may be that none of them have hit on the right combination of features and usability. That jibes with the positive reaction we’ve received from many Geary users.

“People Consider it an elementary App”

I honestly don’t recall anyone saying this. If this notion stopped anyone from contributing, it’s news to me.

To clarify a couple of points: OMG! states there are a few elementary-specific features in Geary. That’s not true. The cog wheel is not elementary’s. We planned that feature before writing the first line of code. Geary once had a smidgen of conditionally-compiled code specific to elementary, but it was ripped out before 0.3 shipped.

elementary has been a fantastic partner to work with and they supported Yorba throughout the campaign. Still, Yorba’s mission is to make applications available to as many free desktop users as possible, and that’s true for Geary as well.

“They Chose the Wrong Platform”

Why did we go with Indiegogo over Kickstarter? We had a few reasons.

A number of non-U.S. users told us that PayPal was the most widely available means of making international payments. Kickstarter uses Amazon Payments in the United States and a third-party system in the United Kingdom. We have a lot of users in continental Europe and elsewhere, and we didn’t want to make donating inconvenient for them.

Unlike Indiegogo, Kickstarter vets all projects, rejecting 25% of their applications. And one criterion for Kickstarter is that a project must be creating something new. We’re not doing that. One key point we tried to stress was that Geary was built and available today. (We even released a major update in the middle of the campaign.) There is no guarantee they would’ve accepted our campaign.

Another point about Kickstarter: a common complaint was that we should’ve done a flexible funding campaign (that is, we take whatever money is donated) rather than the fixed funding model we elected to run, where we must meet a goal in a time period to receive anything. Kickstarter only allows fixed funding. A few people said we should’ve done a flexible funding campaign and then said we should’ve used Kickstarter. It doesn’t work that way.

“Not Enough Press”

I agree with OMG!: we received ample press, but more would not have hurt. The TechCrunch article was a blessing, but what we really needed was more coverage from the Ubuntu-specific press. Even if that had happened, I don’t feel it would’ve bridged the gap we needed to cross to reach the $100,000 mark.

A couple OMG! missed

There are two more categories that go unmentioned in the OMG! article:

“You Should Improve Thunderbird / Evolution / (my favorite email app)”

We considered this before launching Geary. What steered us away from this approach were our criteria: conversations over threading, fast search, and a lightweight UI and backend.

Thunderbird is 1.1 million lines of code. It was still under development when we started Geary. We attended a UDS meeting where the Thunderbird developers were asked point-blank about making its interface more like Gmail’s (that is, organizing by conversations rather than threads). The suggestion was flatly rebuffed: use an extension. For us, that’s an unsatisfying answer.

Evolution is 900,000 lines of code, and includes many features we did not want to take on. Its fifteen years of development also bring with them what Federico Mena Quintero succinctly calls “technical debt”.

(Even if you quibble with Ohloh’s line-counting methodology, I think everyone can agree Evolution and Thunderbird are Big, Big Projects.)

In both cases, we would want to make serious changes to them. We would also want to rip features out in order to simplify the interface and the implementation. Most projects will flatly deny those kinds of patches.

In comparison, Geary stands at 30,000 lines of code today.

“I Use Web Mail. No One Uses a Desktop Client Anymore”

Web mail is convenient and serves a real need. Web mail is also, with all but the rarest exceptions, closed source.

Think of it this way: you probably don’t like the idea of installing Internet Explorer on your Linux box. If you do, you probably would at least like to have an open-source alternative. (Heck, even Windows users want a choice.) Web mail locks you out of alternatives. People are screaming about Gmail’s new compose window. What can they do about it? Today they can temporarily disable it. Some time soon, even that won’t be available.

Consider the astonishingly casual way Google has end-of-lifed Google Reader. Come July 31st, Google Reader is dust. I don’t predict Gmail is going away any time soon — it’s too profitable — but every Gmail user should at least have a fallback plan. And if Gmail did go away, Google would take with it all that code. (This is why Digg, Feedly, and others are rushing to create Google Reader lookalikes rather than forking what exists today.) Not so with open-source email clients. That’s why asking Yorba to improve Thunderbird or Evolution is even askable. Yorba improving Gmail? Impossible.

That’s the pragmatic advantage of open-source over closed: code never disappears. Even if you change your email provider, you’re not stuck relying on your new provider’s software solution, if they even have one.

The principled advantage of free software is that you’re supporting open development for applications that don’t carry riders, waivers, or provisos restricting your use of them.

My theory

That’s the bulk of the criticism we received over the course of the campaign. However, I don’t think any or all of these theories get to the heart of what went wrong. Jorge Castro echoes my thinking:

“Lesson learned here? People don’t like their current email clients but not so much that they’re willing to pay for a new one.”

All I’d add is that over one thousand people were willing to donate a collective sum of $50,000 for a new email client. Let’s say Jorge is half-right.

I don’t intend this post to be argumentative, merely a chance to air my perspective.

Next time I’ll talk about the lessons we learned and offer advice for anyone interested in crowdfunding their open-source project.

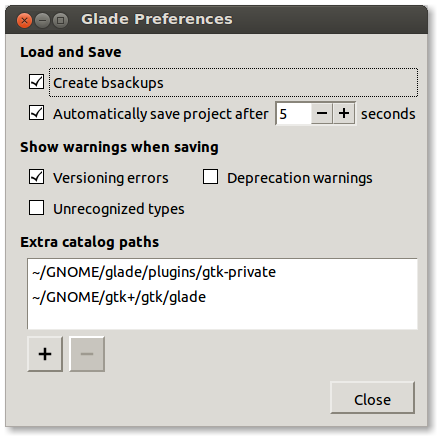

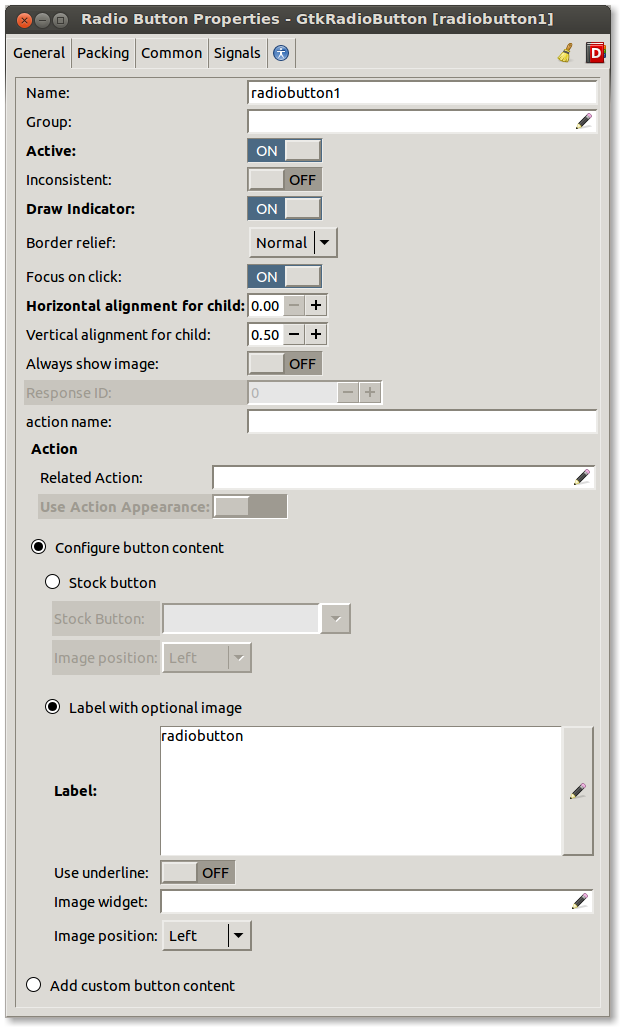

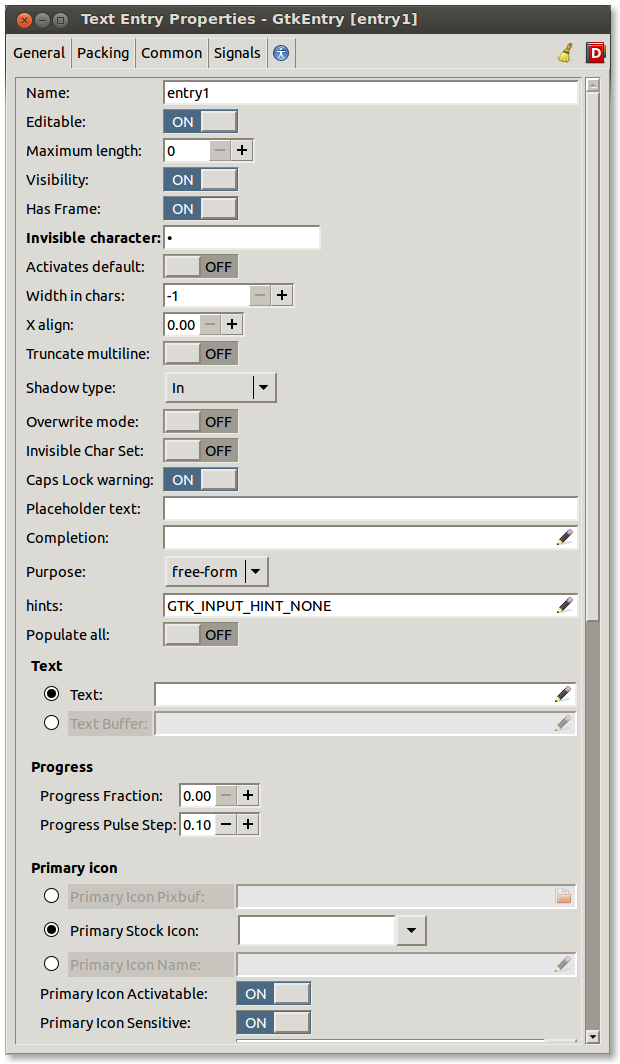

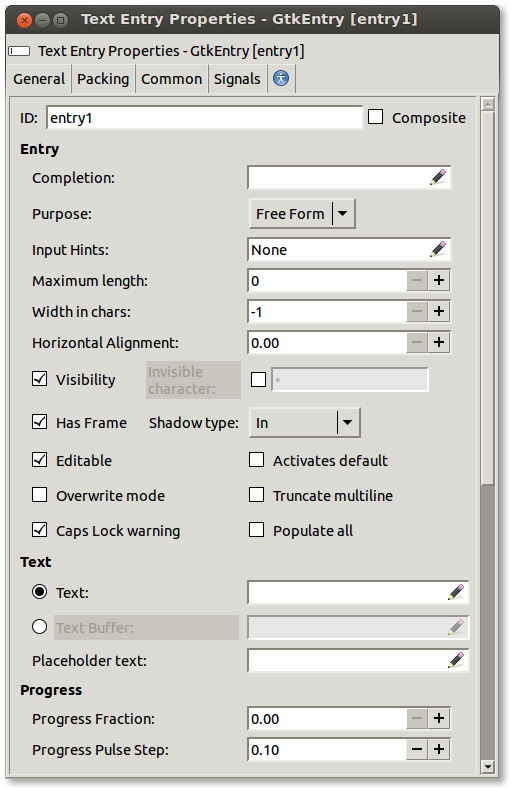

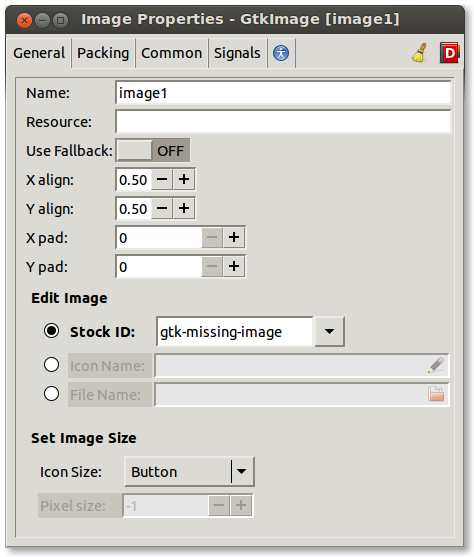

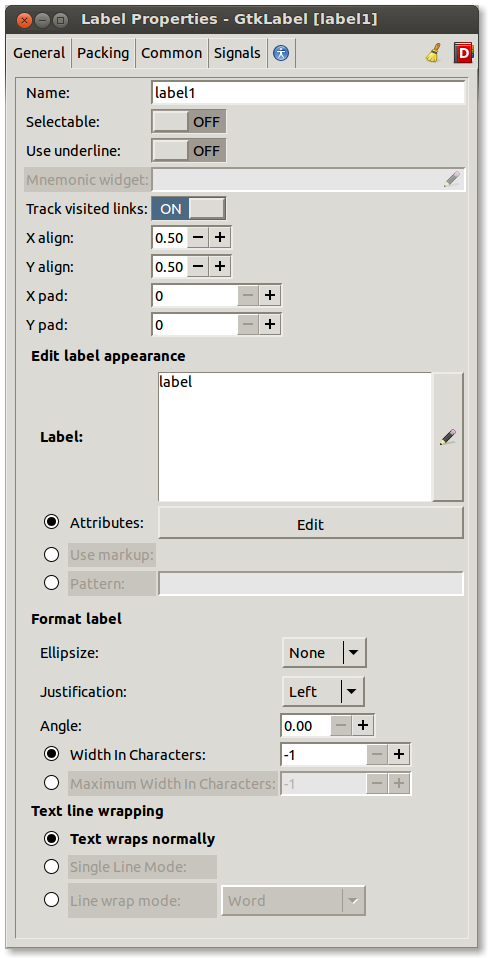

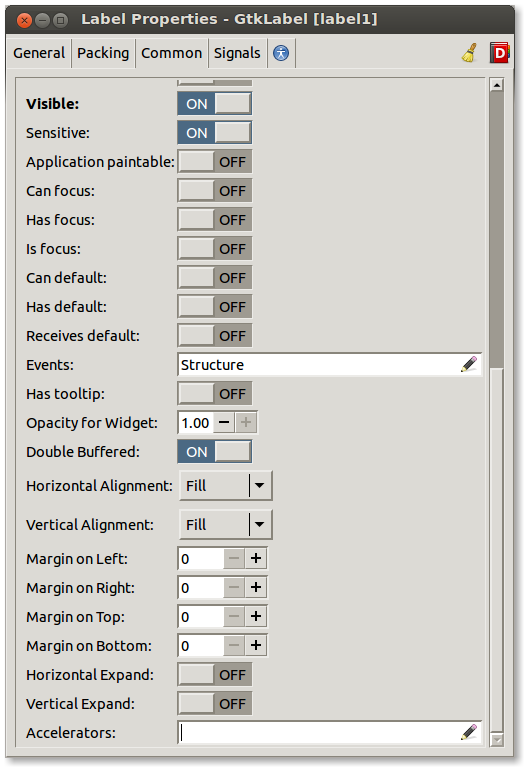
