Mon Nov 21 17:33:26 EST 2011

unsubscribed

(Attention conservation notice: You'll note that this post, unlike the last six, isn't tagged "important." That's because it ain't.)

Things I've unsubscribed from recently:

- Ribbonfarm. Prime example that the Gell-Mann amnesia effect isn't just for newspapers. I wrote a refutation of another Ribbonfarm post eight months ago, where I concluded that he had no idea what he was talking about... but didn't unsubscribe from his blog. It took reading this post for me to realize, "Hey, wait, this guy's an idiot!" Also, he won't shut up about his book.

- Megatokyo. I realized that I had been reading Megatokyo since middle school, yet I couldn't tell you what the last year of plot was about, nor did I particularly care about any of the characters.

- Twenty sided.

This one was kinda hard. About a month ago, Shamus started writing a series of autobiographical posts. I unsubscribed in disgust, (I didn't really want to read what was pretty much "bbot's childhood, yet worse") intending to pick it up again once he stopped. I checked back, saw that the autobiography series was over... and then noticed that almost all of the front page were daily posts about Shamus' video LP series.

I don't really want to watch other people play video games I've already completed. What's even worse is that all of Shamus' high-level video games criticism work goes into his LP, now, which means no more traditional game reviews. Obviously some people enjoy them, since they get thousands of views. I just have better ways to spend half an hour a day.

This is hard because a big chunk of my readership comes directly from Twenty sided, and this post will probably result in some unsubscriptions. But man, I just give no shits about his LP. None at all. If it was possible to just subscribe to his code projects, I would, but I can't, so I won't.

- Gunnerkrigg Court. Got tired of the stupid shit it kept saying about the philosophy of science, and AI. That, and the moronic error it made about the underwater dorms, (10 metres is not terribly deep, but if you spend 8 hours at depth, decompression is required before returning to the surface, or else you're in for the full spectrum of amusing neurological effects resulting from nitrogen fizzing out of your blood and shredding brain tissue) finally pushed me over the edge.

I briefly flirted with the idea of doing a long-form post about the fundamental errors of thought underlying Gunnerkrigg Court, and I got a couple hundred words into it before I realized what a colossal waste of time this was. You would have to pay me money to get me to write about that crap. Haha, wait, hold on.

(EDIT: Donation button removed, because I forgot that I don't have access to that paypal account right now, for various reasons.)

Okay, here, you can pay me money to write about that crap. Donations will go towards a hamburger, and some of Burger King's awful, terrible coffee.

Thu Nov 17 19:02:58 EST 2011

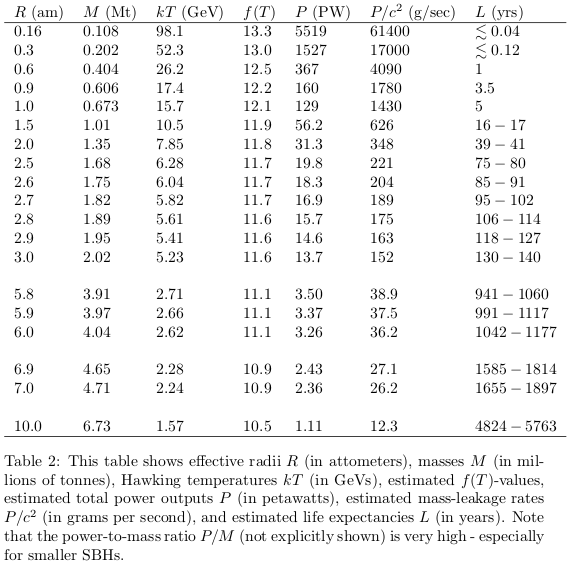

table of the day

I've seen a lot of neat tables in my day, but this one is really something else.

(From "Are Black Hole Starships Possible?", 2009)

It's not every day you learn that a one-attometre black hole would mass 673,000 tonnes, and radiate 129 petawatts of Hawking radiation. Some of that's in fairly harmless neutrinos, but the 15.7 gigaelectronvolt (GeV) gamma rays most decidedly ain't. (The gamma radiation coming off of a mass of Cobalt-60, (which is excitingly radioactive) by comparison, is a mere 1.33 MeV, 11,804 times less energetic. Attometre-gauge black holes pack a punch.)
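The mass figure and the Cobalt-60 comparison are easy enough to sanity check. This is only a rough sketch: it assumes a plain non-rotating (Schwarzschild) hole, uses rounded constants, and the function name is mine, not the paper's. It doesn't attempt the 129 petawatt figure, which depends on the paper's own particle accounting.

```python
# Physical constants in SI units (rounded)
G = 6.674e-11   # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8     # speed of light, m/s


def schwarzschild_mass_kg(radius_m: float) -> float:
    """Mass of a non-rotating black hole with the given Schwarzschild radius.

    Rearranged from r = 2GM / c^2.
    """
    return radius_m * C**2 / (2 * G)


# One attometre is 1e-18 metres; a tonne is 1000 kg.
mass_tonnes = schwarzschild_mass_kg(1e-18) / 1000
print(f"{mass_tonnes:,.0f} tonnes")  # lands near the table's 673,000

# 15.7 GeV gamma rays versus Cobalt-60's 1.33 MeV, both expressed in eV.
ratio = 15.7e9 / 1.33e6
print(f"{ratio:,.1f} times more energetic")
```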

The paper makes a pretty good case that using a microscopic black hole as a starship drive is at least physically possible, though there's a whole host of amusing practical problems that should be of interest to the aspiring megaproject engineer with a couple state vector backups safely stored behind a kilometre of lead shielding.

First is the problem of making them. Apparently Messrs. Crane and Westmoreland are the first people to seriously consider how to generate an artificial black hole, (!) and they conclude that the most practical method (!!) is "by firing a huge number of gamma rays from a spherically converging laser." (!!!)

One can easily imagine just how huge this would have to be, of course, since you're aiming to get the energy density of a couple cubic attometres high enough to spontaneously generate an object that masses 673,000 tonnes.

Once you've built your absolutely gigantic gamma ray laser array, and accompanying solar panel satellite well within the orbit of Mercury, you get to the fun part of calibrating the thing.

The best case scenario is that you generate a fairly large black hole, radiating at a sedate 130 petawatts or so. But if you don't get the power density high enough, then you might end up with a smaller black hole. The smaller the black hole, the more energy it radiates, and the faster it evaporates. At .9 attometres, it radiates 160 petawatts. At .6, 367 petawatts. At .3, 1527. At .16, 5519 petawatts and a lifetime measured in days! It's a short and steep slope to kaboomville. You can see here that a misaligned laser array is mostly a big machine for producing gigantic explosions.

The authors helpfully point out that if you're worried about the radiation flux affecting the Earth, you can just move evaporating black holes to the other side of the Sun. Which reminds me of a famous maxim: if you're using a star as radiation shielding, then you're having a fun time.

Then there's the problems of how to use something that mostly shines in the gamma ray spectrum as a propulsion device, and how to postpone the inevitable kaboom-date. You wouldn't think this would be a problem, since the popular conception of a black hole starts and ends with "it eats things", but take another look at that table. The attometre hole loses 1.43 kilograms a second to Hawking radiation. How are you going to cram 1.43 kilograms of mass into a point 2 attometres across?
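The feeding problem above is just E = mc^2 run backwards: divide the radiated power by c squared and you get the mass the hole sheds every second. A quick sketch, assuming the 129 petawatt figure quoted earlier and a rounded speed of light (the function name is made up for illustration):

```python
C = 2.998e8  # speed of light, m/s (rounded)


def mass_loss_kg_per_s(power_watts: float) -> float:
    """Mass converted to radiation each second at the given power, via E = m c^2."""
    return power_watts / C**2


# 129 petawatts = 129e15 watts
rate = mass_loss_kg_per_s(129e15)
print(f"{rate:.2f} kg/s")  # should agree with the table's 1.43 kg/s
```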

The authors, who I absolutely cannot fault in the "imagination" or "audacity" departments, conclude that "this point must remain as a challenge for the future."

Fri Nov 11 08:10:36 EST 2011

guerilla archiving I

Remember when I downloaded everything2.com and a dozen people sent me screamingly angry emails, and the whole thing was generally stressful and unrewarding?

Well shit, let's do that again, but with a different site. This time, though, I sent them an email first:

Hi, I'm Sam Bierwagen, a volunteer with the Archiveteam project. (http://archiveteam.org/) We make independent backups of sites of historical or cultural interest that, for whatever reason, (are being shut down by yahoo, like Geocities; or are being crippled by the host company, like Delicious) are at risk of disappearing. AO3 is dedicated to hosting copyright-infringing content, and depends on donations to keep operating; a combination that, in my experience, does not result in spectacular longevity.

Typically, we operate under extreme time pressure, which requires tactics that tend to generate some friction with operators that don't appreciate a dozen pageloads per second from our web spiders. Even extremely conservative spidering jobs can impact a site negatively, if done via an unusual API. (I downloaded all two million pages of everything2.com at the pace of one per second, which averaged out to three kilobytes per second, and took a full month; yet apparently was enough to crush their antiquated database backend.)

I've had enough legal threats to last me a lifetime, so I'm trying a softer approach this time. We're looking for database dumps, like the ones wikipedia publicly offers. (http://dumps.wikimedia.org/)

Have any?

I'll give them a week.

Thu Nov 10 05:51:33 EST 2011

thank you, .xxx

Something amusing one of my eagle-eyed readers spotted: the about page for the new .xxx TLD has a banner image with a couple example domains on it.

Wait a minute. What's that domain right there?

Oh boy. Glad to see my name has joined the hallowed company of "milf", "nude" and "gays" as "extremely stereotypical porn keywords."

Now, I didn't bother registering any other domains besides bbot.org. Why? Firstly, sour grapes.

I started using "bbot" way back in 2003, but I didn't get around to registering the domain until 2005, which was real late in the game for four (ha) letter domain names. (My advice to 16-year-olds: register that domain name you're thinking of. Don't wait. Do it now. If you don't have your own bank account, then go to a grocery store and pick up a prepaid debit card. If you're 12, do the same thing, but try not to use a regrettable name. If you're six months old, then do the same thing. Most people get by just using Facebook as their canonical internet presence, because most people are stupid. Facebook is a for-profit company, and you really don't want a for-profit company owning your name.)

This meant that I didn't even have the option of registering the other permutations of bbot.org. They're all taken.

Secondly, trying to register every single variation on a name is an exercise in futility. There's 280 top level domains. Are you going to register all of them?

Then there's typosquatting, where attackers register misspelled versions of your domain. How many possible misspellings are there? Then there's registering variations of your domain, or X-sucks.com, etc etc etc.
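To put a rough number on "how many possible misspellings", here's a sketch that counts every string one keystroke away from a name: one character dropped, swapped with its neighbour, replaced, or added. It assumes lowercase a-z only (real domains also allow digits and hyphens), and the function name is my own invention.

```python
import string


def one_edit_typos(name: str, alphabet: str = string.ascii_lowercase) -> set[str]:
    """All strings one deletion, adjacent swap, substitution or insertion away."""
    splits = [(name[:i], name[i:]) for i in range(len(name) + 1)]
    deletes = {a + b[1:] for a, b in splits if b}
    swaps = {a + b[1] + b[0] + b[2:] for a, b in splits if len(b) > 1}
    subs = {a + ch + b[1:] for a, b in splits if b for ch in alphabet}
    inserts = {a + ch + b for a, b in splits for ch in alphabet}
    # The original spelling can fall out of a swap or substitution; drop it.
    return (deletes | swaps | subs | inserts) - {name}


typos = one_edit_typos("bbot")
print(len(typos), "one-keystroke misspellings of bbot")
```

Multiply that by every top level domain, then do it again for two-keystroke errors, and the futility becomes obvious.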

This is all a waste of time. Nobody types in domain names anymore, they just use google, or a bookmark, or the browser history. It's just a cynical money grab, siphoning money from large corporations that are still under the delusion that they can manage their brand on the global internet.

But I might have to register bbot.xxx.

Sat Nov 5 13:28:54 EDT 2011

shooting yourself in the foot with great verve and accuracy

So I was doing my usual morning routine, which is looking at the tumblr themes of homestuck fans while sighing heavily, when I noticed something even more egregiously stupid than the usual fare.

That page loads 322 files. (Ugh) One of them is... different.

It would save 118kb? But wait, judging from the filename, that's a 40x40 avatar image!

Turns out, in total, it's one hundred and twenty one fucking kilobytes. Running it through PNGsquash takes it down to 3.54 kilobytes. The old file is thirty four times bigger. Just for shits and giggles, I popped it into gimp and saved it as an entirely uncompressed 32-bit BMP file. Here it is:

Double the size! Awful, terrible! It's now 6.3 kilobytes.

Now, not everyone can be as awesome as me, and use a 296 byte avatar image, but still, a 121 kilobyte 40x40 image file is a bit bloody much. Let's run it through pngchunks:

    Chunk: Data Length 13 (max 2147483647), Type 1380206665 [IHDR]
      Critical, public, PNG 1.2 compliant, unsafe to copy
      IHDR Width: 40
      IHDR Height: 40
      IHDR Bitdepth: 8
      IHDR Colortype: 6
      IHDR Compression: 0
      IHDR Filter: 0
      IHDR Interlace: 0
      IHDR Compression algorithm is Deflate
      IHDR Filter method is type zero (None, Sub, Up, Average, Paeth)
      IHDR Interlacing is disabled
      Chunk CRC: -1929463699

    Chunk: Data Length 106022 (max 2147483647), Type 1346585449 [iCCP]
      Ancillary, public, PNG 1.2 compliant, unsafe to copy
      ... Unknown chunk type
      Chunk CRC: -1377520713

    Chunk: Data Length 6 (max 2147483647), Type 1145523042 [bKGD]
      Ancillary, public, PNG 1.2 compliant, unsafe to copy
      ... Unknown chunk type
      Chunk CRC: -113001601

    Chunk: Data Length 9 (max 2147483647), Type 1935231088 [pHYs]
      Ancillary, public, PNG 1.2 compliant, safe to copy
      ... Unknown chunk type
      Chunk CRC: 1976496277

    Chunk: Data Length 6991 (max 2147483647), Type 1951945850 [zTXt]
      Ancillary, public, PNG 1.2 compliant, safe to copy
      ... Unknown chunk type
      Chunk CRC: 1156069395

    Chunk: Data Length 6313 (max 2147483647), Type 1951945850 [zTXt]
      Ancillary, public, PNG 1.2 compliant, safe to copy
      ... Unknown chunk type
      Chunk CRC: -331828581

    Chunk: Data Length 52 (max 2147483647), Type 1951942004 [tEXt]
      Ancillary, public, PNG 1.2 compliant, safe to copy
      ... Unknown chunk type
      Chunk CRC: -1807344212

    Chunk: Data Length 1491 (max 2147483647), Type 1951945850 [zTXt]
      Ancillary, public, PNG 1.2 compliant, safe to copy
      ... Unknown chunk type
      Chunk CRC: 1166967249

    Chunk: Data Length 3325 (max 2147483647), Type 1413563465 [IDAT]
      Critical, public, PNG 1.2 compliant, unsafe to copy
      IDAT contains image data
      Chunk CRC: -384872633

    Chunk: Data Length 37 (max 2147483647), Type 1951942004 [tEXt]
      Ancillary, public, PNG 1.2 compliant, safe to copy
      ... Unknown chunk type
      Chunk CRC: 437683276

    Chunk: Data Length 37 (max 2147483647), Type 1951942004 [tEXt]
      Ancillary, public, PNG 1.2 compliant, safe to copy
      ... Unknown chunk type
      Chunk CRC: 1800092912

    Chunk: Data Length 17 (max 2147483647), Type 1951942004 [tEXt]
      Ancillary, public, PNG 1.2 compliant, safe to copy
      ... Unknown chunk type
      Chunk CRC: 745887135

    Chunk: Data Length 32 (max 2147483647), Type 1951942004 [tEXt]
      Ancillary, public, PNG 1.2 compliant, safe to copy
      ... Unknown chunk type
      Chunk CRC: -376046480

    Chunk: Data Length 0 (max 2147483647), Type 1145980233 [IEND]
      Critical, public, PNG 1.2 compliant, unsafe to copy
      IEND contains no data
      Chunk CRC: -1371381630

As you would know if you had read the wikipedia article on PNG, (Neil also has a good overview of the format) it's one of the modern "container" types, with various types of chunks, several of them (IDAT, zTXt, iCCP) compressed with DEFLATE. (The same algorithm gzip uses.) This is why compressing a PNG file does little to nothing: it's already compressed. PNGchunks just lists the chunks inside the container format.
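Listing chunks is not hard to do yourself. After an 8-byte signature, a PNG is just a run of chunks, each laid out as a 4-byte big-endian length, a 4-byte type, the data, and a 4-byte CRC. The sketch below is not how pngchunks itself is written, just a minimal walk over that layout; it builds a throwaway 1x1 image in memory so it runs without any file on disk, and the helper names are mine.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def list_chunks(data: bytes) -> list[tuple[str, int]]:
    """Return (type, data length) for every chunk in a PNG byte string."""
    if data[:8] != PNG_SIGNATURE:
        raise ValueError("not a PNG file")
    chunks = []
    pos = 8
    while pos < len(data):
        length, ctype = struct.unpack(">I4s", data[pos:pos + 8])
        chunks.append((ctype.decode("ascii"), length))
        pos += 12 + length  # 4 length + 4 type + data + 4 CRC
    return chunks


def make_chunk(ctype: bytes, payload: bytes) -> bytes:
    """Build one chunk; the CRC covers the type and the payload."""
    crc = zlib.crc32(ctype + payload) & 0xFFFFFFFF
    return struct.pack(">I", len(payload)) + ctype + payload + struct.pack(">I", crc)


# A tiny 1x1 RGBA test image: width, height, bit depth 8, colour type 6.
ihdr = struct.pack(">IIBBBBB", 1, 1, 8, 6, 0, 0, 0)
idat = zlib.compress(b"\x00" + b"\xff\x00\x00\xff")  # filter byte + one pixel
png = (PNG_SIGNATURE + make_chunk(b"IHDR", ihdr)
       + make_chunk(b"IDAT", idat) + make_chunk(b"IEND", b""))

for ctype, length in list_chunks(png):
    print(ctype, length)
```

For a real file you would pass in the bytes read from disk instead, something like `list_chunks(open("avatar.png", "rb").read())`, with whatever filename you actually have.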

As wikipedia will tell you, there's four critical chunks, IHDR, (header) PLTE, (palette) IDAT, (the actual image) and IEND. (image end) This image doesn't have a palette, since it's in full 32-bit RGBA color. (Colortype 6 in the IHDR above: 8 bits each of red, green, blue and alpha.) Here's IDAT, again:

Chunk: Data Length 3325 (max 2147483647), Type 1413563465 [IDAT] Critical, public, PNG 1.2 compliant, unsafe to copy IDAT contains image data Chunk CRC: -384872633

3,325 bytes. That makes sense.

Then there's 8 tEXt and zTXt fields. One contains EXIF metadata, one contains separate (?) IPTC XMP metadata. Then there's what is probably another copy of the image, in Adobe 8BIM format. These, combined, use up 14,970 bytes, four and a half times bigger than the image itself.

That's dumb. But it gets dumber. So far we've only accounted for 18,308 bytes of the file. But if we look at the chunk list again...

Chunk: Data Length 106022 (max 2147483647), Type 1346585449 [iCCP] Ancillary, public, PNG 1.2 compliant, unsafe to copy ... Unknown chunk type Chunk CRC: -1377520713

That, my friends, is a 106 kilobyte Kodak sRGB color profile for a 3 kilobyte image file. Gaze in awe.
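If you want to check my arithmetic, the whole file can be reconstructed from the chunk listing. The lengths below are copied from the pngchunks output; the only things added are the format's fixed overheads, an 8-byte signature plus 12 bytes of length, type and CRC per chunk.

```python
# (type, data length) pairs copied from the pngchunks listing in this post
CHUNKS = [
    ("IHDR", 13), ("iCCP", 106022), ("bKGD", 6), ("pHYs", 9),
    ("zTXt", 6991), ("zTXt", 6313), ("tEXt", 52), ("zTXt", 1491),
    ("IDAT", 3325), ("tEXt", 37), ("tEXt", 37), ("tEXt", 17),
    ("tEXt", 32), ("IEND", 0),
]

SIGNATURE_BYTES = 8      # fixed PNG file signature
OVERHEAD_PER_CHUNK = 12  # 4-byte length + 4-byte type + 4-byte CRC

text_bytes = sum(n for t, n in CHUNKS if t in ("tEXt", "zTXt"))
image_bytes = dict(CHUNKS)["IDAT"]
profile_bytes = dict(CHUNKS)["iCCP"]
file_bytes = SIGNATURE_BYTES + sum(n + OVERHEAD_PER_CHUNK for _, n in CHUNKS)

print(text_bytes)                           # 14970
print(file_bytes)                           # 124521
print(f"{file_bytes / 1024:.1f} KiB")       # 121.6 KiB
print(f"{profile_bytes / file_bytes:.0%}")  # 85%
```

So the color profile alone is about 85% of the file, and the actual pixels are under 3%.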

This is not exactly an unknown problem. Google constantly harps on optimizing images, but Tumblr blindly reuses images that its users hand it. This makes me sad.

Sun Oct 23 06:35:44 EDT 2011

server log fun

I was obsessively poring over my web logs, as usual, when I noticed something unusual.

207.46.199.30 - - [20/Oct/2011:06:15:08 -0400] "GET /aboutlogo.html HTTP/1.1" 200 415 "-" "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SLCC1; .NET CLR 1.1.4322)"

207.46.199.30 - - [20/Oct/2011:06:15:11 -0400] "GET /style.css HTTP/1.1" 200 265 "http://bbot.org/aboutlogo.html" "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SLCC1; .NET CLR 1.1.4322)"

This is odd, since aboutlogo.html hasn't actually been linked anywhere on bbot.org for at least a year. It's a relic of an old version of the front page, which I forgot to delete. Unless 207.46.199.30 maintains the world's most boring bookmark collection, this means that it's a web spider, refreshing a stored link.
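Those two entries are in the usual combined log format: host, two identity fields, timestamp, request line, status, size, referer, user agent. If you'd rather not pore over logs by eye, a regex will pull the fields apart. This is a sketch that handles the lines shown here; the pattern and field names are my own, and it makes no attempt to cope with escaped quotes or other oddities.

```python
import re

# host ident user [time] "method path protocol" status size "referer" "agent"
LOG_PATTERN = re.compile(
    r'(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<size>\d+|-) '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)

line = (
    '207.46.199.30 - - [20/Oct/2011:06:15:08 -0400] '
    '"GET /aboutlogo.html HTTP/1.1" 200 415 "-" '
    '"Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SLCC1; .NET CLR 1.1.4322)"'
)

match = LOG_PATTERN.match(line)
entry = match.groupdict()

# A referer of "-" on a page nothing links to is the giveaway here.
print(entry["host"], entry["path"], entry["referer"])
```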

Now, I see a lot of stealth-spiders, but it's rare to see one that's clever enough to request the CSS as well, like a real human. Google and Yahoo will occasionally do it, so they can generate accurate screenshots, but generally nobody else bothers, or they don't give themselves away like this. (It didn't ask for favicon, which is understandable, since it Expires in 2037, but it didn't ask for the image on that page, which was a bit of a blunder.) Let's run a WHOIS on the IP, and see who owns it.

NetRange: 207.46.0.0 - 207.46.255.255

CIDR: 207.46.0.0/16

NetName: MICROSOFT-GLOBAL-NET

NetType: Direct Assignment

RegDate: 1997-03-31

And nslookup says:

msnbot-207-46-199-30.search.msn.com.

Now that's interesting.
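The two checks I just did by hand, "is this IP inside the WHOIS range" and "does the reverse name end in search.msn.com", can be written down in a few lines. This is only a sketch: it takes the reverse DNS name as an argument instead of looking it up, so it runs offline, and the function name is hypothetical. A proper check would also resolve the name forwards again and confirm it points back at the same IP.

```python
import ipaddress

# CIDR block copied from the WHOIS output above
MICROSOFT_NET = ipaddress.ip_network("207.46.0.0/16")


def looks_like_msnbot(ip: str, reverse_name: str) -> bool:
    """True if the IP is in the WHOIS range and the PTR name has the crawler suffix."""
    in_range = ipaddress.ip_address(ip) in MICROSOFT_NET
    right_suffix = reverse_name.rstrip(".").endswith(".search.msn.com")
    return in_range and right_suffix


print(looks_like_msnbot("207.46.199.30", "msnbot-207-46-199-30.search.msn.com."))
```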

What's (very mildly) alarming is that they didn't ask for a robots.txt. That /16 is apparently used by bingbot, so it's entirely possible that they requested my robots.txt officially, got a 404, and concluded that I don't give a shit.

Which is true, of course, I give no shits about web spiders; but there's a lot of hysterical pansies on the Internet who hate it when people actually look at the stuff they've published publicly. And, of course, it contradicts Microsoft's stated policy.

So, who knows.

Thu Sep 29 14:50:05 EDT 2011

do not buy REAMDE

Neal Stephenson's last few books have not been good. But his latest cinderblock, REAMDE, is bad in an altogether different manner from the Baroque Cycle, or Anathem.

In the 1990s, Neal wrote Snow Crash and Cryptonomicon. These two books are straight up, no kidding, masterpieces. I so very rarely have occasion to speak of creative works that aren't complete piles of shit on this blog that I could talk at great and tedious length about the overwhelming genius of both books, but I won't. These novels pretty much created the category of "nerd philosopher"[1], and catapulted him to the top, next to Eliezer Yudkowsky, Maciej Cegłowski, Paul Graham and Eric Raymond.

After writing these savagely brilliant, enormously relevant novels, Stephenson essentially said "Thank god that's over, now it's time to write what I really want." And what he really wanted to write was The Baroque Cycle and Anathem. These are also genius, insofar as Stephenson totally gave up on making anything readable, or interesting; and instead pounded out a couple million words that only he, personally, wanted to read. But despite their numerous failures, they are very obviously pet projects. Stephenson wanted to write about some very big ideas, so he did, completely ignoring the matter of convincing people to buy them.

And then we have REAMDE. It's 1056 pages of generic techno-thriller, with a whole lot of action but not much actually happening. The vast majority of the book is taken up by viewpoint character A being thrust into desperate situation B, taking inventory of their possessions, and executing plucky plan C. If you want to read hundreds of pages of people searching rooms for inventory items, or putting guns in various states of readiness[2], then REAMDE is your book. The last hundred pages or so have the floaty, unpolished feel of a first draft, written by someone in a very great hurry to finish this goddamn thing so he can finally get paid and stop having to think about it.

REAMDE, very clearly, was written to make cash. Stephenson didn't have any ideas to tell the reader, or anything interesting to say about the human condition, but he did have mortgage payments; and so we get REAMDE.

Which makes it awkward when Stephenson introduces the fantasy novel authors. Their explicit, in-story purpose is to write very large books about a video game, very quickly, in return for lots of money. These books are shown to be terrible, (there's an extended quote that's one of the better jokes in REAMDE) and the people themselves are talentless hacks.

Character 1 manipulates the writers into keeping a region in a MMO in a state of chaos, so he can interrogate character 2 as to the whereabouts of character 3. The killer part is that not only is this a huge waste of time, (Character 2 doesn't know anything) but the reader knows all this ahead of time. Dead ends in an investigation are great from a verisimilitude standpoint, but storywise, this entire subplot is a hundred page cul-de-sac which leads nowhere, and serves only to pad out the page count.

The book ends (SPOILER) with a shootout between far-right survivalist nut-bags and literal jihadi terrorists, a scenario straight out of the masturbatory fantasies of a Stormfront user.[4] After the terrorists are thoroughly perforated by bits of hot lead by Our Heroes, the male and female characters instantly pair off, and live happily ever after.[5]

It's bad. The ending is bad, the beginning is bad, the middle is bad; the whole thing, bad. I'd like to say that I don't understand why Stephenson wrote this, but that would be a lie. His intent is plainly clear; so all that remains is disappointment.

1: The real term for them is "Hacker philosopher", but Eric was fighting a desperate rearguard action to preserve the correct definition of the word way back in 2001, and using "hacker" in 2011 requires a lot of waving your arms and narrowly qualifying your words and generally having to jump through a lot of hoops in order to use a word defined one way by a tiny technical subculture, and defined another way by the other billion internet users.

2: I have the epub version of the book, so I could do a search for how many times the sentence "he disengaged the safety" occurs in the book, but the answer would probably just depress me.

3: There's a very surprising reference to the sea burial of Osama Bin Laden. Judging from when the book came out, this must have been the result of a smart editor adding it to the galley proofs at the very last second.

4: Which drags on and on, of course, and really, really could have used a map or two, Stephenson not being the greatest at conveying a layout at the best of times, and his headlong rush to finish the book does not make his writing any more lucid.

5: (MORE SPOILERS) One of the main characters is actually the Chinese hacker responsible for the Reamde virus, which kicks off the events of the book. At best he's guilty of grand larceny, having stolen millions of dollars and destroyed the files of millions of people, and at worst he caused the deaths of a couple dozen bystanders and plot-relevant characters. Does he go to jail? No, of course not.

Fri Aug 26 19:02:57 EDT 2011

good job, penny arcade

So Penny Arcade recently redesigned their site, to add more javascript, and to make the navigation buttons smaller. That's cool, I'm all for that. Except in the process, they changed the RSS URL from "http://www.penny-arcade.com/rss.xml" to "http://penny-arcade.com/feed". Hilariously, requests to the old URL return HTTP 200, and the front page. Apparently whoever pioneered this exciting new redesign has never heard of a 301 redirect.
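For the record, a 301 is about four lines of work. Here's a sketch using Python's built-in http.server; I have no idea what Penny Arcade actually runs, so treat the mapping table, function names, and port as purely illustrative. The two paths are the ones from the URLs above.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Optional

# Old path -> new path. Illustrative table; the paths come from the URLs in the post.
MOVED = {"/rss.xml": "/feed"}


def redirect_target(path: str) -> Optional[str]:
    """Return the new location for a moved path, or None if it never moved."""
    return MOVED.get(path)


class RedirectHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        target = redirect_target(self.path)
        if target is not None:
            # 301 tells feed readers to update their stored URL permanently.
            self.send_response(301)
            self.send_header("Location", target)
            self.end_headers()
        else:
            self.send_error(404)


def make_server(port: int = 8000) -> HTTPServer:
    """Build (but do not start) a local server using the handler above."""
    return HTTPServer(("127.0.0.1", port), RedirectHandler)
```

Calling `make_server().serve_forever()` and requesting `/rss.xml` should get you a 301 pointing at `/feed`, instead of a 200 and the front page. Since the hostname changed too, a real deployment would probably send an absolute URL in the Location header.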

Now, changing things around and breaking the RSS feed is not terribly uncommon. It happens fairly often, on small sites, usually by people who don't know what they're doing. But Penny Arcade have their own video games, their own books, a television show, and a giant downtown Seattle convention, which I can't afford to attend this year, due to being poor. They're kind of a big deal. They've got a lot of readers. How many?

Well, I don't know how many total but the one hundred and sixty nine thousand who were subscribed to their RSS feed was probably a pretty big chunk.

Sat Aug 6 14:53:24 EDT 2011

deus ex, and the problem of player choice

A recurring thought I had while powering through Deus Ex last month, so that I could play the leaked version of Deus Ex: Human Revolution on an informed basis, was that Deus Ex would have been a really awesome game to play... eleven years ago, when it came out.

(Massive, unmarked plot spoilers ahead. Also, no screenshots, since I wasn't expecting to review either game.)

In the lonely, neckbearded association of freaks and other, bigger, freaks known as "PC gamers", Deus Ex is regarded with reverence shading into outright worship. Its very name is a shibboleth, separating real gamers from the casuals, distinguishing the Holy Elect from the debased fratboys who teabag each other in MW2, play video games based on real world sports, drink Coors light, and maintain an ever-changing collection of exciting venereal diseases.

Deus Ex is a big deal.

Personally, I didn't think it was that great.

The graphics aren't great. Well, of course they aren't, the game's more than a decade old, so no rational person could possibly knock it on that. I'll give it a similar age-related pass on the indifferent level design, though it's odd that Deus Ex is so bland when Unreal Tournament, using the exact same engine and coming out at the same time, had real architecture. I have to give the vague attempt at facial animation a pass as well.

Some things aren't quite so forgivable. The voice acting is just really horrifically bad, even by the standards of late 90s video games. The gunplay is terrible, so the game punts halfway through and gives you a lightsaber that will let you instantly kill any human in the game, which makes combat short and unexciting. The stealth gameplay is lousy, lacking any meaningful feedback, and the enemy AI is absolutely brain dead.

The standard response of every enemy in the game upon seeing you, heavily armed super-soldier JC Denton, is to stand in the open and start shooting. If you damage them enough, they'll eventually put away their weapon and try to run away... except the game never flags them as non-hostile, so if you actually spare their life, they'll re-arm and try to kill you again. Whoops.

These are all quibbles. The real problem is: I read about the game before playing it. In fact, I've been reading about the game for eleven years before playing it, and in the course of doing so, acquired some preconceptions, something that didn't work out real well for me with Phoenix Wright either.

Every single person who has reviewed, played, or glanced at the box art of Deus Ex has raved about player choice. At great length they have spoken regarding the paramount importance Deus Ex places on player choice, in tedious monologues they have rhapsodized about the unlimited possibilities open to the player.

And so, the preconception that I acquired, was that player choice was critical to the plot of Deus Ex.

It's not. Deus Ex is as linear as Ronald Reagan sitting on a horse, holding a straightedge.

To its mild credit, there are three different endings. You can choose to side with the Illuminati, destroy technological civilization, or merge with the Helios AI.

To additional credit, all three endings are quite morally ambiguous. The Illuminati, while not quite as outright evil as their offshoot, Majestic 12; are about as amoral as a starving rat, and display a contempt for individual liberty somewhere between Stalin and Reileen Kawahara.

You can destroy the worldwide communications infrastructure, killing billions and ensuring "perfect liberty". This is known as the "blithering idiot" option.

You can also merge with Helios, the most attractive option for a soft-hearted old transhumanist like me. Except Helios is about as stable as a pencil balanced on its tip, its reasons for wanting to merge don't make a whole lot of sense, and it's not at all clear how much influence Denton would have over the resulting gestalt. I certainly wouldn't have jumped at the chance to plug my brain into it.

Crucially, you don't really see the outcome of any of the endings, besides the Illuminati path. This is because Ion Storm were planning a sequel, and didn't want to ruin its story. So there's zero closure, and worse yet, when they actually made the sequel, they made all the endings a little bit true, (Denton sided with the Illuminati and merged with Helios and killed the internet) and the resulting game was terrible.

The problem with this three way "choice" is that you make it at the very end. There's something like ten minutes of gameplay after various crucial choices, but there's no branching. You can't rule out one ending by siding with a faction early on. No matter who you kill, and you can kill a lot of the NPCs, you're presented with the exact same choice. If you save your brother in Brooklyn, he'll show up later to advise you on the One Choice, but it doesn't affect the fucking plot. Player choice is meaningless if the choices have no effect!

As I alluded to earlier, Deus Ex lets you kill a lot of plot-critical NPCs. This is unusual in video games, since you have to do a lot more work behind the scenes to wire up the plot so that it doesn't completely fall apart when big chunks are missing.

This is a noble aspiration, and actually quite interesting in-game, but of course there are hard limits at play. You can't let the player kill everyone he meets, because then there wouldn't be any plot at all. So Deus Ex does have invincible NPCs, but the way it treats them is bizarrely schizophrenic.[1]

For example, at one point, you are ordered by UNATCO agent Anna Navarre, your superior, to kill NSF terrorist Juan Lebedev. You can do that, or you can instead kill her. This is hard, because she's way better equipped than the player is at this point, but it actually is possible, which is cool.

If you kill her, her partner, Gunther Hermann, is very upset. You're ambushed by him and a small army of UNATCO troops and combat robots at a subway platform.

This is a very tough fight, but it actually is possible to kill all the soldiers. Except for Hermann, who is invincible, because this was a fight you're supposed to lose! Even if you're gibbed by a rocket in the fight, you'll "wake up" in a Majestic 12 secret jail. Later on you'll meet him in Paris, and you have to kill him to proceed.

This is Deus Ex's vaunted "player choice". Enemies you can kill, except when you can't, except when you're required to.

It goes further. Doors in Deus Ex are actually destructible: if a door is locked and you don't want to lockpick it, you can just blow it open with a rocket launcher. Yay! Except, of course, there are some doors they don't want you to open, which can't be lockpicked and are infinitely durable. Come on, guys, seriously?

I had seen hardassed-designer types talk about Deus Ex's freedom of choice, and so I had made the foolish mistake of assuming that player choice would be implemented in a hardassed-designer way. Like how hardasses have dictated that Gordon Freeman will never talk and that there will never be third-person cutscenes in the Half Life series; or how Nethack is a game centered around, obsessed, utterly focused on the permanence of player death, with no take-backs or compromise; I assumed that Deus Ex would stick to its guns, that it would follow its own rules, be internally consistent.

It's not, of course. Like Fallout 3, Deus Ex follows a set of rules until they become inconvenient, then it breaks them. If I had come into it without preconceptions, I might have enjoyed the game. But I did, so I didn't.

And so we come to Deus Ex: Human Revolution.

Human Revolution is not a game without flaws. The leaked build has performance issues, low resolution game assets, a very console-like 60 degree field of vision. Jensen has about three different idle animations, and you're going to get real familiar with them during conversation. The pre-rendered cinematics probably knock em dead on the Xbox 360, but are startlingly low resolution when viewed on a PC monitor.

But it's so much more polished than Deus Ex 1.

Consider the XP system of both games. DX1 had skills, and augmentations. There were a lot of skills in DX1, several of which were completely useless. (There was no reason, ever, to put points into Swimming. And every computer in the game could be hacked with a single level in Computers.) It also was badly unbalanced: depending on how you created your character, the game was either a breeze or an utter grind.[2] Augmentations required canisters to install, and upgrade canisters to upgrade. Install cans require a medi-bot to install, upgrade cans could be used at any time. Both of them were irreversible, one-time use items! And the augmentation system was also badly unbalanced. There's the EMP shield augmentation, which is useless, (There is one single enemy in the game that uses EMP attacks, and there's EMP grenades, which are used against you maybe... once.) and the health regeneration augmentation, which is absolutely essential and which the player will always want, no matter how else they've set up their character. You have to activate each augmentation manually, and they consume bioelectricity (BE) points, which you refill using BE cells, much like how you refill HP points with medikits.

Before fighting greasels I had to press a chord of activation keys. Power recirculator, regeneration, environmental resistance, ballistic protection, combat strength. Press five keys before fighting, hit enemy once, wait for HP to regenerate, then don't forget to press all five keys again, or else they'll drain all the power!

Absolute madness. A dozen keypresses, but no actual meaningful choices being asked of the player, no skill required.

In contrast, there's DX3. One single XP system, which unlocks new augmentations. Most augs are passive, requiring no busywork to use. If you're pressing a button, you're actually deciding something. Hit points regenerate, as does the final cell of bioelectric energy.

Now, there's certainly an argument to be made that things have been dumbed down for the consoles.

It is known far and wide that I am no friend of the Halo kiddies, and if I thought that DX3 had been oversimplified, I would certainly be saying it.

But there is a difference between Farmville and Go. One is casual, the other is elegant.

I spent the last couple hours of DX1 walking up to enemies and slapping them in the face with a sword. With several upgrades sunk into the ballistic protection augmentation, you're a walking tank. With the ADS augment, rockets and plasma rifles can't even touch you. You consume bioelectric energy at a fearsome rate, but there's plenty of BE cells on the bodies of your enemies, and your sword never runs out of ammo.

This never happens in Human Revolution. At least, not in the first three hours, and on normal difficulty. Ammo and energy are thin on the ground, and Adam Jensen is rather squishy. Point blank, you're carbon fiber death on wheels, but charging straight at an alerted enemy is a fast death. You can do Gears of War style third person cover based shooting, but there just is not a lot of ammo, and the AI is quite good at using the big, nonlinear levels to flank the player and flush them out with grenades.

I haven't finished the game, and the endings of games offer me limitless opportunity for disappointment, but based on the first few hours, I like Human Revolution more than Deus Ex 1.

1: Personally, the way I'd like to see it work is that the game would let you kill any NPC, but if you broke the plot, it would invite you to reload an earlier save, but still let you keep playing, like in Morrowind.

2: Several in-game characters encourage you to use nonlethal weapons, like the tranquilizer darts for the mini-crossbow. Surprise! All the end-game enemies are immune to tranquilizer darts! In the beginning, your brother offers you the GEP gun, and if you accept it, he gets pissy. Surprise! The GEP gun is the single most useful weapon in the game. You can one-shot every boss with it, and the ammo is common! I digress.

Mon Jul 25 21:31:53 EDT 2011

computer security is hard, let's give up

Attention conservation notice: 1140 words about a blog post that's more than a year old, and was written by a guy who doesn't know very much about computer security.

"Digital Security, the Red Queen, and Sexual Computing"

There is a technology trend which even the determinedly non-technical should care about. The bad guys are winning. And even though I am only talking about the bad guys in computing — writers of viruses, malware and the like — they are actually the bad guys of all technology, since computing is now central to every aspect of technology. They might even be the bad guys of civilization in general, since computing-driven technology is central to our attacks on all sorts of other global problems ranging from global poverty to AIDS, cancer, renewable energy and Al Qaeda.

[...]

What we want is an architectural paradigm that can churn the gene pool of computing design at a controllable rate, independently of advances in functionality. In other words, if you have a Windows PC, and I have one, we should be able to have our computers date, mix things up, and replace themselves with two new progeny, every so many weeks, while leaving the functional interface of the systems essentially unchanged. Malware threat levels go up? Reproduce faster.

EDIT: The plot thickens! The book Venkat refers to was written in 1993, by noted dirtbag Matt Ridley, as in, the guy who ran Northern Rock into the ground in 2007, burning twenty six billion pounds of taxpayer money in the process. So not only is the idea bad, but it was based on a book written by a literal criminal. Neat.

Non sequitur. Venkat has extended a metaphor too far.

I've got a whole cloud of objections to this idea. There's just so much wrong with this post that I'm staring at a blank page, wondering where to even begin.

Hell, starting with the most shallow objection first: I can't imagine the solution to "software is poorly designed" is "randomly designed software."

Just how the hell is this supposed to work, anyway? What's the implementation path? Do you randomly permute API names? This would certainly prevent remote exploits, but how do you communicate API names to benign programs without allowing malicious programs access to the trusted computing path?

You can use cryptographic signing of executables, which has worked great at preventing exploits, but has also been great at inciting widespread anger, since step one to get a certificate from the OS vendor tends to be "give us a hundred bucks". When the Feds are explicitly allowing hackers to break your security schemes, you've got a problem.

Even if this scheme was technically possible without vast pointless expenditure of money, the big problem with evolution is that it only works by death. It advances via the death of the unfit organisms, which means that, in practice, the users will see a lot of broken computers. How is an evolutionary computer that constantly crashes any better than a conventional computer that constantly crashes?

Additionally, while I could at least hypothetically visualize a computer that randomized API calls, there's a very important interface that you can't touch, and that's the user interface. Randomizing the locations and captions of buttons would make it completely impossible to use, or document, so that part of the program would have to remain static.

And so the attacker would just programmatically simulate mouse clicks, and completely circumvent the randomized APIs.

Also, the hypothesis is that sexual reproduction prevents parasite infection, yet as anyone who has spent a hypochondriacal hour browsing the "parasites that infect human beings" category on Wikipedia knows, parasites still exist. Now, strictly speaking, this is unfair, since the Red Queen hypothesis is that sexual reproduction only discourages parasitism, but in his essay Venkat goes on to pitch this as some kind of revolution in computer security, which will entirely prevent infection by computer viruses, for everyone, everywhere. That isn't true in the original system, and I doubt it would be true in a computer system.

It's also unnecessary.

One of the (many, many) differences between computer viruses and chemical viruses is that, in the real world, physics doesn't privilege the defender. Chemical reactions run at the same rate in the bacteria as they do in the bacteriophage. The defender can discourage infection by spamming the local environment with oxygen, or by poking holes in their cell membranes, or changing the pH, but they cannot deny them the passage of time.

The same cannot be said of a computer.

On a computer, the processor has to be ordered to execute code. You can download a virus, and it'll sit on your hard drive until the end of time, and will never do any harm unless you execute it.

Unlike organic life, computers are secure by default. This is an absolutely fundamental difference, and it makes a lot of comparisons with organic life invalid. Calling malicious programs "viruses" was a comparison more clever than informative, since the popular conception of a virus is "invisible thing that makes you sick", when in point of fact parvovirus has more in common with a catchy song than it does with an infectious organism, such as E. coli.

Indeed this false parallel has resulted in real harm, since Rao has now wasted some of his precious life writing a fundamentally incorrect essay, rather than writing amusing newsletters on how to be a jerk.

A Linux machine with Nginx configured to serve static, unencrypted HTML, like the machine that runs bbot.org, cannot be hacked. There's nothing to hack. There hasn't been a remote exploit in Nginx in years. It's a goddamn rock, and if someone discovered a remote exploit in static Nginx today I'd eat my hat. You can hammer a web server with traffic until it falls over, but that isn't a hack.[1]

So how is randomly designed software supposed to improve on that?

Software sucks, yeah. But it's not doomed to suck. It can be improved, and it can be perfected, using automated theorem proving to prove a program to be formally correct. As in, a hardcore, mathematical proof, without doubt or flaw.

Automated theorem proving is in its infancy, however. It's pretty easy to prove trivial programs to be correct, but for any program that does something interesting, you tend to run into various amusing NP-hard problems. It's entirely possible that we'll never see a formal proof for Firefox.

But that's no reason to not try.

1: This sounds like a specious cop-out, but there is a valid distinction. A single hacker can spend an hour to take advantage of an exploit, and sit back and watch the internet explode, but it would probably take a hundred computers with consumer-grade internet connections to take bbot.org offline, or ten times that number if you didn't want the owners of those computers to start wondering why their computer had abruptly become so slow; and it would only stay offline as long as those computers were dedicated to keeping it down.

Or exactly one computer. Whoops.

I still stand by my original statement, though. Denial of service is not remote code execution.

Sat Jul 2 16:41:16 PDT 2011

baying apocalyptic death cult

I'm reading Thailand's Moment Of Truth: A Secret History Of 21st Century Siam after it was linked on /r/DepthHub, and it's pretty good.

Apparently Marshall had to quit his (twelve-year) job to publish it, which displays some extraordinary testicular fortitude. It also allows him a certain degree of editorial freedom he perhaps did not enjoy while working at Reuters.

The Yellow Shirts were initially a broad-based and relatively good-humoured alliance from across the ideological and political spectrum that drew together royalists and liberals, radical students and middle-class aunties, progressive activists and patrician establishment patriarchs, united in opposition to the increasingly baleful influence of Thaksin Shinawatra; over the years they morphed into a proto-fascist mob of hateful extremists addicted to the bloodcurdling rhetoric of rabble-rousing demagogues. The Yellow Shirts proclaim their undying love for the king, but it is the flipside of that love that has transformed them into a baying apocalyptic death cult: they are utterly petrified about what will happen once Rama IX is gone.

PROTO-FASCIST MOB OF HATEFUL EXTREMISTS

BAYING APOCALYPTIC DEATH CULT!

Fri Jul 1 00:33:30 PDT 2011

how to make reddit suck less

Reddit's a pretty cool site. Well, parts of it are. Unfortunately, those parts are pretty obscure, and the Reddit that the vast majority of its users see is more or less pure shit. Here's what the front page of Reddit looks like to someone who isn't logged in.

"Funny" youtube videos, "funny" comics, shriekingly partisan political news, crap, crap, crap. Reddit is not, in fact, a terrible site where the SNR approaches zero, but you wouldn't know it from this screenshot. We can do quite a lot better than this.

First, create an account. It's pretty damn easy, and you don't even need to provide an email address.

Now click on the "EDIT" link in the top right corner of the screen. This will take you to the subreddit editing page.

Subreddits are the lego blocks of Reddit. Each one is dedicated to a certain topic, and if a link in a subreddit gets enough upvotes, it bubbles up to the front page, where millions of people immediately crush whatever webserver is being linked to. Unfortunately, the default subreddits all have hundreds of thousands of subscribers, and are all absolutely terrible. So let's unsubscribe from them all.

There won't be a lot left. In fact, at the time of writing, doing this resulted in nothing on the front page. While this has a certain "we had to destroy the village in order to save it" appeal, you probably actually want to see stuff when you go to Reddit. So I suggest subscribing to:

- /r/cerebral

- /r/DepthHub

- /r/Foodforthought

- /r/hardscience

- /r/indepthstories

- /r/MethodHub

- /r/science2

- /r/spaceflight

- /r/tldr

- /r/TrueReddit

And a screenshot of what it looks like afterwards:

Beauty. But we can do better!

In my experience, links to imgur, youtube, or ted.com are always crap. Reddit doesn't let you block links to certain domains, but Reddit Enhancement Suite does. Go there, and install it. Now click on [RES] in the upper right corner, go to "Configure Modules" and scroll to "filteReddit" in the drop down menu. Add the (un)desired domains in the "domains" section.

While we're changing settings, go to the standard Reddit preferences page, and set "don't show me comments with a score less than" to "10".

Congrats! You are now the owner of a much-improved Reddit.

(some subreddit recommendations credit this nerd.)

Thu Jun 16 14:40:02 PDT 2011

guerrilla web design

So I started up Firefox today, and was greeted by the NoScript upgrade page.

Typically I just close the tab, but I was waiting for some other programs to start up, so I actually read the changelog. Some interesting new features... Then I looked at the page itself. Man is it ugly:

I count two donation buttons, and six different ads... three of them in the same font as the rest of the page! I kinda regarded NoScript to be in the same company as AdBlock+, a pro-consumer extension, but this is a little too evil.

I don't often look at the source of advertising-encrusted pages, so this was a bit of a jaunt into the unknown. NoScript embeds the ads inside several layers of divs, possibly to foil adblockers.

Personally, I think asking for donations on a page filled with ads is kinda lame. So let's strip them out. First we save the HTML file to disk, which weighs in at a whopping 97 kilobytes. Doing this breaks the gradient on the left side, for some reason. Never mind that, we must soldier on. Removing the ads is easy enough, since they come prepackaged in comments like: <!-- START QSVQ7 -->. Amusingly, they contain Data URIs, which must account for some of the massive size of this page. Near the Flattr button, (Flattr is a micropayments scheme crossed with a pyramid scam) there's an empty element named "easylist_sux", a not so subtle zing on AdBlock+.

Turns out, those data URIs are big, because now we're down to 9.75kb. Now I move the changenotes into the left column, and delete some divs. Looking into the CSS, I find the missing background image, which isn't in the files folder. Apparently when you tell Firefox to save a page, it doesn't bother parsing the CSS file for links, which is just absolutely hilarious. Pretty funny, Firefox! You a funny guy! Then I tore out all the javascript, (except the paypal code) and hard-coded the main content column to 600 pixels.

You can find the final result here, a mere 7.10kb.
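The ad-stripping step could also be scripted rather than done by hand. Here's a minimal Python sketch, assuming each ad block is closed by a matching `<!-- END ... -->` comment. Only the START form is quoted above, so the END marker, the `strip_ad_blocks` name, and the sample markup are all guesses for illustration.

```python
import re

# Assumption: every ad block opens with "<!-- START <id> -->" and closes with
# "<!-- END <id> -->" using the same id. Only the START form is in the post.
AD_BLOCK = re.compile(
    r"<!--\s*START\s+(\w+)\s*-->.*?<!--\s*END\s+\1\s*-->",
    re.DOTALL,
)


def strip_ad_blocks(html: str) -> str:
    """Remove every START/END-delimited block, markers included."""
    return AD_BLOCK.sub("", html)


# Made-up sample page with one ad block carrying a data URI.
sample = (
    "<p>changelog</p>\n"
    '<!-- START QSVQ7 --><div><img src="data:image/png;base64,AAAA"></div>'
    "<!-- END QSVQ7 -->\n"
    "<p>more changelog</p>\n"
)

cleaned = strip_ad_blocks(sample)
print(cleaned)
```

A regex is good enough here only because the markers are plain comments; anything that needed to understand nesting would want a real HTML parser.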

Fri Jun 10 09:41:29 PDT 2011

adventures in HTML optimization

[1]

Last week, someone linked me to Google Page Speed. This sucked, since it directly resulted in me spending rather a lot more time than strictly necessary dicking around with Apache configuration files.

My server doesn't get a whole lot of traffic,[2] so I hadn't bothered setting Expires: headers, under the "who cares" school of thought. When Apache CPU utilization doesn't get above .01% when you're getting 5 hits a second from HN, there's not a whole lot of incentive to aggressively cache files. But when I ran bbot.org through Page Speed, I received the humiliating news that it only scored 68/100. 68! That's a low number!

Resolving most of the issues was easy, (Turning on Expires, gzip compression, changing the black and white logo image from full 24-bit color to grayscale, etc.) but going from 98/100 to 100/100 was kinda painful.

Page Speed is a vast improvement over YSlow, but it shares some of the inherent problems of an automated performance tool.

For one, it doesn't seem to care much about actual page load times, as long as you follow its rules. It gives cracked.com, ("Auschwitz for javascript engines") a phat 90/100, even though the front page takes 8.2 seconds to load, makes 188 HTTP requests from about a billion domains, loads 36 javascript files, throws 166 warnings in Chrome's audit tab, has 466 unused CSS rules, and is, in fact, pure evil. For a period of time while I was testing things, the links div loaded its own font-face, the smallcaps version of Linux Libertine. Now, anyone with half a brain can tell you that loading a 300 kilobyte font just to style 20 words of text, something you can just do in CSS with font-variant:small-caps anyway, is pants-on-head retarded; but Page Speed was totally fine with it.

For two, while it doesn't actually come out and say that you should "Use a CMS" like YSlow does, (A monumentally useless piece of "advice") it sure does wink a lot and nod suggestively towards it.

The sticking point, that robbed me of two points and kept me from that tantalizing perfect score, was "Inline small CSS". I, of course, kept the stylesheet in an external file, and linked to it from every page, because it's easier to maintain that way. Except, of course, it's a small stylesheet, and page rendering would be faster if you just stuck it in the HTML file. This would be a pain... unless you used a CMS, which could just seamlessly inline a stylesheet when publishing a document. Funny.

Inlining the CSS granted me the two points, but then Page Speed turned right around and docked me one, since the stylesheet had a lot of whitespace, and pushed the file over the tipping point where Google thought it would be worthwhile to minify the source code. Now this is a pain, since I hand-edit my code, and minifying makes code hideously ugly. Would have been trivially easy to do if I used a CMS, of course. Minifying was tedious and fiddly, since the tool I used liked to munge the inlined CSS, and scribble all over my link formatting. It was worth it, though! After twenty minutes of swearing, I finally trimmed off that last 120 bytes, and scored a perfect 100/100! Yeah!

Now, if you'll excuse me, I have to go put everything back to the way it was.
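For the record, the inline-and-minify step doesn't strictly need a CMS; a short script run before uploading could do it while the hand-edited files stay readable. A rough Python sketch, with made-up function names and a made-up sample page. The minifier only strips comments and whitespace, so it would mangle a stylesheet that has significant whitespace inside strings.

```python
import re


def minify_css(css: str) -> str:
    """Crude whitespace-only minifier; not safe for every stylesheet."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # drop comments
    css = re.sub(r"\s+", " ", css)                         # collapse whitespace
    css = re.sub(r"\s*([{};:,])\s*", r"\1", css)           # trim around punctuation
    return css.strip()


# Matches a <link> tag that declares rel="stylesheet" (double quotes only).
LINK_TAG = re.compile(r'<link\b[^>]*\brel="stylesheet"[^>]*>', re.IGNORECASE)


def inline_stylesheet(html: str, css: str) -> str:
    """Swap the first stylesheet <link> for an inline <style> element."""
    style = "<style>" + minify_css(css) + "</style>"
    # A function replacement keeps re.sub from interpreting backslashes in the CSS.
    return LINK_TAG.sub(lambda _match: style, html, count=1)


# Hypothetical inputs standing in for the real page and stylesheet.
page = '<head><link rel="stylesheet" href="style.css"></head>'
stylesheet = "body {\n  color: black;\n  margin: 0 auto;\n}\n"

published = inline_stylesheet(page, stylesheet)
print(published)
```

The point of the design is that the external stylesheet remains the file you edit, and the ugly inlined version only ever exists in the published copy.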

1: Google showing off their mad UI skillz there on the "refresh results" button.

2: How much traffic does it get? Last month, my host called in a panic. Apparently, my box had consumed 1000% more bandwidth than it did from the month before-- it had used up 10 megabytes! My site doesn't get a lotta traffic, I tell ya, every page load takes 30 seconds, because the disks end up spinning down between hits! My traffic is so low, my Alexa site rank is measured in scientific notation! It's low, I tell ya!

Thu Jun 2 20:43:31 PDT 2011

slower than light

SUNDAY SUNDAY SUNDAY

Sunday, June 5th! 6 PM, 18:00 o'clock PDT! 9PM EDT! Be there or be square!

Now for your regularly scheduled blog post:

I keep thinking about this in the shower, so here's hoping that doing a post on it will turn my mind to something more productive.

First off, naming. Calling it "a Starcraft clone with less clicking" will get old, and since I'm a sucker for theme naming, let's call it Slower Than Light. (viz.) The humans will be the Border Administration, the squishy aliens will be the Red Plague, and the glowy aliens will be the No. (Opposite of Yes. Insert your own Starship Troopers joke here.)

I wrote about the BA in the previous post, so I'll skip over them here. No, wait! I just thought of something.

The only humans on the battlefield are the ones inside the command buildings, controlling the units, right? So they're essentially pro gamers. It would be amusing if you hired real life pro gamers to voice them, like Idra, Flash, et al.

The Red Plague, as suggested by the name, is a microscopic bacterium that subverts larger species, rather than being a macroscopic animal itself. This would be bad news for the opposing factions if the BA didn't fight with robots, or if the No didn't have a... unique biology.

The No are a race of four-dimensional aliens from the core of the Milky Way, where things are considerably hotter and more exciting, and they are as far above the human race as we are above a basket of newborn kittens. If it was a real war; if the No considered humanity to be any kind of threat at all, then the extermination would take hours. Indeed, the No have ended several unaesthetic wars by exploding the local star.

But you don't prune a bonsai with a flamethrower, and most of the time when the No feel intervention is needed to guide the war to a more elegant state, they deploy small combat teams. Even when they're pulling their punches, they're awesomely powerful. The Red Plague fields a swarm, the Border Administration deploys armies, and the No sends individuals. Each No unit is a tiny core of exotic matter that reacts explosively on contact with normal matter, surrounded by vast bulwarks of force fields, which recharge quickly when not in combat, and encourage hit and run tactics, rather than the steady plodding of the BA tanks, or the overwhelming rushes of the Plague swarm.

The No "bases" are closer to sculpture parks than the litter strewn factories of the BA (Terran bases accumulate trash, and smelter fumes kill off nearby vegetation) or the "whale dropped from a great height" aesthetic of Red Plague hives.

The No buildings are attractive, (literal works of art) expensive, (most of the player's income will go into constructing them) temporary, (they slowly decay and fall apart, which is part of the aesthetic) and useless; since all units come through the gateway back to the homeworld, rather than being constructed by specialized buildings. Much like how web designers refuse to work in cities without at least two Apple stores, high-tier No units won't set foot on a planet without a twenty metre tall gold statue of Desiderius Erasmus.

Sun May 22 19:10:51 PDT 2011

the failure of second life

In July of 2005, when I created my account, Second Life was the cool new thing. Moving between regions was kinda wonky, loading textures and objects was slow, and it was pretty ugly when compared to other games of the time; but it was generally assumed that these were teething issues, which would be quickly sorted out as new versions of the software were released.

At the time, I used an Athlon XP 2500+ processor, a not enormously distinguished graphics card, 768 megabytes of ram, and phat 2 megabit/512 kilobit DSL.

Today I have an Intel Core i7 940, an eight-threaded processor that can access 6 gigabytes of ram, and a Radeon HD4860 video card with a further 512 megabytes of ram, and I have symmetrical 25 megabit fiber internet. The processor's an easy six times faster, there's eight times as much memory, the internet pipe is 12 times wider, and the video card is, well, it's real quick.

Moving between regions is still kinda wonky, loading textures and objects is slow, and it's still pretty ugly... when compared to games made in 2005. Second Life has gained features, many of them, but it has improved not at all. Its problems are profound and architectural, and won't be solved by any minor patch, but rather, a complete redesign. Something that won't happen, as even in its broken, half-assed state, it still makes rather a lot of money from furries, (like Zarla) and it takes quite the brave company indeed to break a profitable product to instead create something that may make no money at all.

And so, let us speak of architecture.

(Above: downloading a file from my ISP's datacenter at 25mbit/s. Below: Flying through Second Life with the draw distance set to 512 metres, and consuming so much bandwidth that the game disconnected me. My mark I eyeball figures that graph peaks at 4mbit/s.)

Go and grab a software engineer. There's plenty of them around, so it should be easy to find one. Tell her that she'll have to design a social MMO for a brand new console. This should make her eyes light up, because, as a rule, engineers prefer new technology over stuff that actually works, since new technology is cool and sexy, and old technology makes money.

Tell her that the new console is pretty much a stacked modern PC, with a dozen processor cores and heaps of memory. This will be well received. Then tell her, due to budget constraints, there's only four megabits of bandwidth between the processor and the disk drive, where all the game assets are.

Brace yourself. There will be swearing, threats to go back to Google, various and sundry recriminations. Placate her with twenty million dollars and two years later, go pick up your social MMO.

That game, a game designed with a major, major bandwidth limitation in mind, will be very careful to control player location. Maps will be linear, and areas of high detail will be strictly gated. Player movement will be slow. All this is done so that the game has time to load level geometry and textures into memory before the player gets there.

Second Life allows the player to move at arbitrary speed (And height! Players can fly!) in an open world environment. There are no loading screens except during teleports. Players design the levels, and they can use all the huge textures and as many polys as they want. The majority of player-owned land is continental, where the tragedy of the commons is in full effect. Geometry pop-up is endemic. Textures can take a full minute to load. FPS on my stacked gaming computer is in the teens with any draw distance above "myopic". Performance is completely, absolutely unacceptable for an eight year old game!

Second Life, as a game concept, sounds good, but it was made with no thought for the fact that all the game assets are on the wrong side of a narrow, high-latency bus.

Second Life attempted to emulate Real Life in design. This was laudable, but misguided. Perhaps that's not the right word. Incorrect? Wrong? Colossally wrong?

It's been eight years, now, since Second Life launched. It hasn't come close to living up to its promise. My wild ass guess is that their asset servers will have to sustain two hundred megabits a second to the customer in order to make Second Life even vaguely playable.[1] And that's with SL's current, not great, graphics, and a not terribly inspiring draw distance, maybe 300 metres.

Not only would this require better internet service than pretty much anybody, outside of South Korea, has; it would take an absolutely massive investment in data centers all around the world. It only takes 40 megabits to stream high quality 1080p video. Sustaining five times that bandwidth, and maintaining consistency across all the caches would be a real trick.

I doubt Second Life will ever do it.

1: The PS3's drive can read a DVD at 8x speed, or 86mbit/s. (Blu-ray discs are actually slower, for some zany reason.) A top of the line solid state hard drive will do 4000 mbit/s.
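To put the link speeds mentioned in this post side by side, here's a back-of-the-envelope Python sketch. The link speeds are the ones quoted above; the 50 megabyte payload is a purely hypothetical stand-in for one busy region's worth of textures and geometry, and latency and protocol overhead are ignored entirely.

```python
def seconds_to_transfer(megabytes: float, megabits_per_second: float) -> float:
    """Time to move a payload over a link, ignoring latency and overhead."""
    return megabytes * 8 / megabits_per_second


# Link speeds quoted in the post, in megabits per second.
LINKS = {
    "Second Life, as graphed": 4,
    "25 megabit fiber": 25,
    "PS3 DVD drive at 8x": 86,
    "guessed requirement": 200,
    "fast solid state drive": 4000,
}

# Hypothetical payload: not a measured figure, just a round number.
REGION_MEGABYTES = 50

for name, mbit in LINKS.items():
    seconds = seconds_to_transfer(REGION_MEGABYTES, mbit)
    print(f"{name:>24}: {seconds:6.1f} s")
```

Whatever payload you pick, the ratios stay the same: the observed 4 megabit stream is fifty times slower than the guessed requirement, and a thousand times slower than a local SSD.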

Thu May 19 15:53:30 PDT 2011

bitcoin, five months later

Five months ago I wrote about upstart cryptocurrency Bitcoin. In the intervening time, they've gotten a lot of press, a meandering UR essay, and neat-looking physical tokens. They've also increased in value. A lot.

In December, one bitcoin was worth .21 dollars. Today, they're trading at seven dollars each, roughly 33 times as much. If you had bought $10,000 worth of bitcoins five months ago, it would be worth about $333,000 today, phowar, dude. Like, whoa.
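As a sanity check on the arithmetic, here's a small Python sketch that works only from the two rounded prices quoted above. The exact results shift with whatever unrounded prices you plug in, so treat the output as approximate; the `growth` helper is just an illustrative name.

```python
def growth(old_price: float, new_price: float) -> tuple[float, float]:
    """Return (multiple, percent_increase) for a price change."""
    multiple = new_price / old_price
    return multiple, (multiple - 1) * 100


# Rounded prices from the post: 21 cents in December, seven dollars now.
multiple, percent = growth(0.21, 7.00)
stake = 10_000

print(f"{multiple:.1f}x, a {percent:.0f}% increase")
print(f"${stake:,} becomes about ${stake * multiple:,.0f}")
```

Note the difference between the multiple and the percent increase: something that is worth N times as much has gone up by (N - 1) times 100 percent, not N times 100.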

But past performance is no guarantee of future results. When a mainstream media news outlet puts "criminals" right in the title of a piece about a currency exchange, it's not a good sign that it will continue unmolested by very serious people in very conservative suits.

Even without official intervention, bitcoin still isn't a sure bet. Right now, in its nearly completely unmonetized state, bitcoin looks a lot more like a commodities market than a currency market. And commodities markets, as any foole kno, are twitchy, excitable beasts, prone to dramatic price shifts as bubbles inflate, then pop. Buying into bitcoin now would be pretty dumb. Or maybe not, you might not want to take my advice, seeing as how I didn't buy all the bitcoins I could find back in December.

Thu May 19 02:36:28 PDT 2011

self-promotion

Recently, Valve added replay functionality to TF2. The above video is 47 seconds of me failing to do anything of value at all, then dying. If you, for some reason, want to download a 243 megabyte file of that, rather than just watching it on Youtube, you can.

The Replay update seems to just be a better UI frontend to the existing demo recording and Source Filmmaker program. (Responsible for the pretty good, Valve-created, Meet The Team series; and the pretty stupid, fan-created, nope.avi.) "Better", but not "great"; the replay feature has to, by definition, be able to edit video, which can charitably be described as a fiendishly hard unsolved problem, with a bunch of different competing UI metaphors in use. Valve doesn't quite nail it, so editing is a bit fiddly and non-intuitive, which might account for the... unpolished feel of the above video. Yes, that's right. Entirely the fault of the tools, uh huh.

Heck if I know why they made this update, besides "because the old tools were kinda crappy", and "for the heck of it." Well, except that the proximate result of letting your users edit together movies of your game and upload them directly to youtube is piles and piles of free advertising. Other than that, nuthin'.

Sun May 8 06:59:05 PDT 2011

valve isn't going to make a console

A persistent rumor on 4chan[1] recently has been that Valve is going to release a games console, which would presumably take the form of a locked down commodity PC, which would use their Steam content delivery platform. (For both of my readers who aren't familiar with modern gaming, think of, in order, Apple, the iPhone, and the iTunes software store.) Following the established naming scheme, I'll refer to Valve's hypothetical console as the Pipe. Or maybe Concorde it up, call it Pipe.

Even I, with my legendary propensity to confidently assert the inevitability of events on the basis of how much I'd like them to take place, rather than anything resembling their actual probability, have a hard time believing in this rumor.

Here's the problem: If the Pipe is going to use Steam, then it has to run Steam games natively. And Steam only runs on Windows and OS X, which means Valve would have to buy million-unit licenses for one of those operating systems.

Except that Microsoft already has a console. Why would they enable a competitor?

The alternative is OS X. Except that Apple has a long history of never ever licensing their OS, and suing the crap out of anyone who tries to install it on unauthorized hardware. Even if they were friendly to licensees, the fact that I used the iPhone as an example of just this kind of scheme indicates that this is a core competency of the company, something they would never farm out to someone else. Steve would want all of the money, not a pittance to be made from a fiercely bargained contract to just sell one component.

No matter how hard I want it, the Pipe just ain't gonna happen.

1: Incidentally, this:

The backstory: For the last week there's been about a million threads a day about Brink, a not-terribly-interesting-looking cover-based-shooter by international ultra-conglomerate, ZeniMax Media. Typically you only see threads for games that have actually been released, or display even one single new gameplay element. The anomaly is obvious, and other people have commented on it.

It is not as if guerrilla advertising is unknown in the gaming world. Time and time again large companies decide their advertising dollars are best spent lying directly to individual gamers in online forums, rather than lying on big impersonal billboards. My accusation is not a shocking revelation. This has happened, you know, on previous occasions.

And it is not as if this is a thing of the past. The Terraria morons are advertising on (spamming) 4chan as we speak.

Guerrilla advertising is hard enough to combat when posts are associated with names, and the administrators actually have a will to fight. An anonymous forum lacks the first, and getting banned for complaining about advertising would seem to indicate a lack of the second.

The conspiracy theory starts here: Just where does 4chan get its money? It obviously consumes massive amounts of bandwidth, but has minimal advertising, and donations are (no kidding) refused. Other imageboards hold hysterical fundraisers and frequently die, but 4chan soldiers on, immortal, an apparent perpetual motion machine. Moot does not appear to be Bill Gates, who can fund an outrageously unprofitable venture for pure kicks. The money has to be coming from somewhere.

In fact, 4chan would make an ideal platform for guerrilla advertising. The users are anonymous, and so are the administration staff. 4chan is neither a public company, nor a nonprofit, so their financial records aren't published. They're about as transparent as a lead brick. Selling discussion threads would be both profitable and invisible. Banning malcontents such as myself could be a premium feature.

But hey. Maybe I'm just paranoid.

Thu May 5 00:03:06 PDT 2011

web standards deathmatch

Shit's fucked! God damn it, WHATWG!

Let me start at the beginning.

A not terribly well known feature of OpenID is delegated authentication. In brief, it's two <link> elements in your own page that point to an OpenID identity provider, in my case, myopenid.com. When I poke "bbot.org" into the login form on a site that accepts OpenID (in specificationese, an "OpenID consumer"), it's supposed to follow the links to myopenid.com (an "identity provider"), which does all the heavy lifting, and in the end, I show up as "bbot.org". I can change the identity provider to whatever (Google, AOL, LiveJournal) and still retain the same identity, which is actually pretty neat. There's also the benefit of hiding myopenid's ugly URL. (bbot.myopenid.com, ugh)
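
To make the delegation mechanism concrete, here is a minimal sketch in Python of what a consumer might do with those two <link> elements. The rel values are the OpenID 1.1 ones (OpenID 2.0 uses openid2.provider and openid2.local_id). The page markup, the server URL, and the names DelegationFinder and find_delegation are assumptions made up for illustration, not the real bbot.org source or any real consumer's code.

```python
from html.parser import HTMLParser

# Hypothetical delegating page. The rel values follow OpenID 1.1;
# the server URL is an assumption, not the real bbot.org markup.
PAGE = """
<html><head>
<title>bbot.org</title>
<link rel="openid.server" href="http://www.myopenid.com/server">
<link rel="openid.delegate" href="http://bbot.myopenid.com/">
</head><body>hello</body></html>
"""


class DelegationFinder(HTMLParser):
    """Collect every <link> whose rel contains an openid* token."""

    def __init__(self):
        super().__init__()
        self.links = {}

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attrs = dict(attrs)
        # rel is a space-separated list of tokens
        for rel in (attrs.get("rel") or "").split():
            if rel.startswith("openid"):
                self.links[rel] = attrs.get("href")


def find_delegation(html):
    """Return a dict mapping openid rel tokens to their href values."""
    finder = DelegationFinder()
    finder.feed(html)
    finder.close()
    return finder.links


if __name__ == "__main__":
    for rel, href in find_delegation(PAGE).items():
        print(rel, "->", href)
```

The consumer would then talk to the openid.server URL and ask it to vouch for the openid.delegate identity, while the site the user logs into only ever sees the URL they typed.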

So far, so easy.

However, in HTML 4.01, <link> elements are supposed to be contained in a pair of matched <head></head> tags. But people are fallible, and so they tend to forget to close tags, or they misspell them, or they leave them out entirely; and so when a browser sees a <link> tag all by itself, it tries to do the right thing, and just parses it, rather than erroring out.

This kind of thing just drives the variety of person who writes specifications for programming languages up a wall. Zere vill be order in mein markup language! So the W3C wasted no time setting up a working group for XHTML, which specifies that tags must always be closed, everywhere, and empty elements like <br> have to be "self closing", viz. <br />. This didn't make a whole lot of sense, but the standards wonks waved their hands a lot and repeated "XML" a few dozen times, and since this was around 2000, back when XML was shit-hot and everybody loved it, this settled the argument.

However, programmers (like those at wordpress.com) tended to use the special tags that declared their documents to be XHTML in their templates, because they deeply cared about web standards, and wanted to promote their use. Then they would pass them off to customers, who would write outrageously malformed code, then complain when it failed validation.

Obviously the problem with this situation was ideological impurity. Since users didn't care about validation, the W3C announced, they would make them care. XHTML 2.0 would require browsers to stop rendering and display an error message at the very first parsing error. That'll show them!
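
The difference between the two attitudes is easy to see with Python's standard library. This is only a sketch: the expat-based xml.etree parser stands in for a strict XML-mode browser and html.parser stands in for a forgiving one, which is an assumption about behavior, not a model of any real browser. The sample markup and the names parse_strict, parse_lenient, and TagLogger are made up.

```python
import xml.etree.ElementTree as ET
from html.parser import HTMLParser

WELL_FORMED = "<p>line one<br />line two</p>"
SLOPPY = "<p>line one<br>line two</p>"  # <br> is never closed


def parse_strict(markup):
    """Parse as XML. Return None on success, or the error text on failure."""
    try:
        ET.fromstring(markup)
        return None
    except ET.ParseError as err:
        # The parser gives up at the first well-formedness error.
        return str(err)


class TagLogger(HTMLParser):
    """Lenient parser: records the start tags it sees and never complains."""

    def __init__(self):
        super().__init__()
        self.tags = []

    def handle_starttag(self, tag, attrs):
        self.tags.append(tag)


def parse_lenient(markup):
    logger = TagLogger()
    logger.feed(markup)
    logger.close()
    return logger.tags


if __name__ == "__main__":
    print("strict, well-formed:", parse_strict(WELL_FORMED))
    print("strict, sloppy:     ", parse_strict(SLOPPY))
    print("lenient, sloppy:    ", parse_lenient(SLOPPY))
```

The strict parser accepts the first fragment and refuses the second outright, while the lenient one shrugs and reports both tags.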

Unfortunately, the W3C doesn't make browsers. They just write standards. Browsers are actually written by software vendors such as Apple, Google, Microsoft and the Mozilla Foundation. And none of these groups were terribly enamoured with a markup language that didn't really resemble HTML at all, was enormously fragile, and used by exactly nobody on the internet.[1]

So the browser vendors took their toys and went home, forming the WHATWG, and started work on HTML 5, completely bypassing XHTML 2. It rapidly became apparent that nobody was actually going to implement XHTML 2, so the W3C killed it off.

My more boring readers will recall that I recently rewrote the front page of bbot.org to validate as HTML 5. One of the more amusing tricks of HTML 5 is that the <html>, <head>, and <body> tags aren't actually required, since the browser has to render a page correctly even if they're missing. I obligingly removed them, then chortled to myself as the page validated perfectly. (Standards wonks find their kicks in odd places.)

Except! No! As the even more boring among you noticed immediately, the OpenID spec says the delegated authentication links have to be inside a <head> element! Damn you, OpenID!
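
Here is a sketch of how that failure could play out. It assumes a consumer that only trusts <link> elements seen between literal <head> and </head> tags. I don't know how any particular consumer actually implements discovery, and a parser that follows the HTML 5 algorithm would infer the missing <head> on its own. The markup, the server URL, and the names HeadOnlyFinder and discover are hypothetical.

```python
from html.parser import HTMLParser

# Hypothetical delegation links (OpenID 1.1 rel values, assumed server URL).
LINKS = (
    '<link rel="openid.server" href="http://www.myopenid.com/server">\n'
    '<link rel="openid.delegate" href="http://bbot.myopenid.com/">\n'
)

WITH_HEAD = (
    "<!DOCTYPE html>\n<html><head>\n<title>bbot.org</title>\n"
    + LINKS
    + "</head><body><p>hi</p></body></html>"
)

# Valid HTML 5: the <html>, <head> and <body> tags are simply omitted.
WITHOUT_HEAD = "<!DOCTYPE html>\n<title>bbot.org</title>\n" + LINKS + "<p>hi</p>"


class HeadOnlyFinder(HTMLParser):
    """Hypothetical strict consumer: ignores links outside a literal <head>."""

    def __init__(self):
        super().__init__()
        self.in_head = False
        self.links = {}

    def handle_starttag(self, tag, attrs):
        if tag == "head":
            self.in_head = True
        elif tag == "link" and self.in_head:
            attrs = dict(attrs)
            for rel in (attrs.get("rel") or "").split():
                if rel.startswith("openid"):
                    self.links[rel] = attrs.get("href")

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


def discover(html):
    """Return the delegation links this strict consumer is willing to see."""
    finder = HeadOnlyFinder()
    finder.feed(html)
    finder.close()
    return finder.links


if __name__ == "__main__":
    print("with <head>:   ", discover(WITH_HEAD))
    print("without <head>:", discover(WITHOUT_HEAD))
```

Both pages render identically in a browser, but this consumer finds the delegation links only in the first one.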

This is a bug that has taken me seven months to discover, mostly because nobody uses OpenID anymore. I'd complain about compromising my perfect garden of pure ideology to make delegated authentication actually work, but that would be too ironic for words.

1: There's a whole bucket of implementation issues, too. Pop quiz, hotshot. What do you do when some user innocently forgets to close a tag in a comment on a blog post? Should the invalid markup in the comment wang the entire page? XML parsing is seriously expensive, computationally. Are you going to write a parser that checks the validity of every comment by Disqus' 35 million users, then reads the user's mind to figure out how they actually wanted to mark up the text?
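
One way a site could dodge the pop quiz, sketched in Python: check each comment for well-formedness on its own, and escape the ones that fail so a single bad comment can't break the page. This is an illustration under assumptions, not what Disqus or anyone else actually does. The names safe_comment and render_page are made up, and it is not a security sanitizer.

```python
import html
import xml.etree.ElementTree as ET


def safe_comment(comment):
    """Return the comment unchanged if it is well-formed, else escape it."""
    try:
        # Wrap in a dummy root so plain text and sibling tags are allowed.
        ET.fromstring("<div>" + comment + "</div>")
        return comment
    except ET.ParseError:
        # No mind reading: a broken comment is shown as literal text.
        return html.escape(comment)


def render_page(comments):
    """Build a tiny page that stays well-formed whatever the comments hold."""
    body = "".join(
        '<div class="comment">' + safe_comment(c) + "</div>" for c in comments
    )
    return "<html><body>" + body + "</body></html>"


if __name__ == "__main__":
    page = render_page(["nice <b>post</b>", "nice <b>post"])
    ET.fromstring(page)  # raises ParseError if the page were malformed
    print(page)
```

Note that this costs one extra parse per comment, which is exactly the expense being complained about, and the user with the unclosed tag still doesn't get the bold text they wanted.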