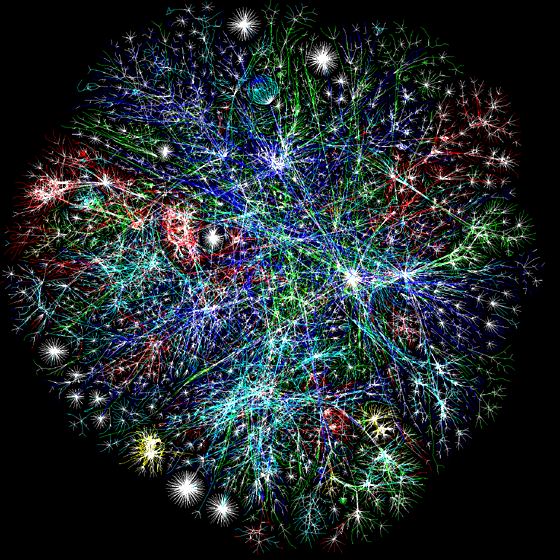

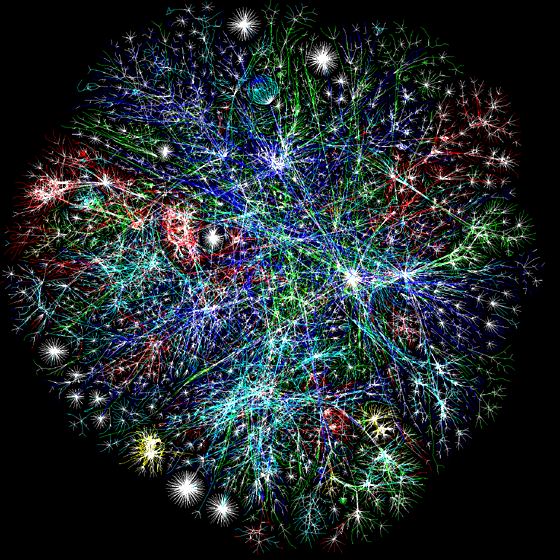

Semantic. {Credit: Lifeboat Foundation}

It seems like only yesterday that cyberspace was abuzz with excited predictions of the coming Nirvana of the Internet – the Semantic Web (SemWeb). But commentators were split into two distinct camps. The enthusiasts talked of nothing less than a revolution bringing greatly enriched search and navigation experiences, while the snarks muttered ‘jam tomorrow’ and slunk away to their slithy dens. Well, as might have been expected, neither was quite right. The SemWeb will come, but it will creep up upon us incrementally, often unnoticeably. And guess what? It’s happening already.

Taming the Mashup

I’m not going to be trapped into appearing to describe a precise chronology, but the first evidence I came across that the SemWeb was putting down real roots was Google Squared. I posted briefly in May 2009 about this and also Google’s Smarter Search, suggesting that these enhancements to the regular Google experience might prove attractive to the users of ‘popular search’, but reserving judgement on their usefulness for enterprise users.

I now think that the ability of Google Squared to pull in a variety of ‘related’ information is useful, but that ‘useful’ here falls far short of ‘meaningful’. That’s because Google is fixated upon ad hoc keyword frequency correlations, with little or no consideration of semantics. Semantics are key to useful discovery, and the success of search enhancements like Google Smarter Search and Google Squared will depend upon how far Google is willing to trust the SemWeb as it develops, and consequently how far it is willing to shift towards a semantics-based model, as it has begun to do with these products.

Maintaining GoodRelations with your SearchMonkey

Hot on the heels of Google Squared comes Yahoo! SearchMonkey. Much like Google’s SemWeb-aware offerings, Yahoo! SearchMonkey provides a means to enhance search results with relevant links, images and structured data derived not only from microformats and RDF embedded in the page, but also from various remote web service APIs. However, SearchMonkey appears to differ from the Google product in one vital respect.

While I can find no reference to Google Squared offering any kind of developer interface, Yahoo! SearchMonkey leaves no doubt that it is designed to allow any host website, or third-party developers, to develop and configure a custom SearchMonkey application tailored to specific requirements. The application can then be distributed virally via site badges and buttons allowing others to add it to their search profiles.

For those interested, you can try out the Yahoo! SearchMonkey developer interface via a quickstart tutorial for developers, which should answer any nagging questions you may have. Suffice it to say here that the application allows the developer to specify a URL pattern which will trigger the fetching and display of the enhanced information.

You then have a choice of presenting this information either as an ‘Enhanced Result’ (first figure) that reconfigures the search result itself, or as an ‘Infobar’ pane (second figure) displayed below the result that contains expandable lists of additional information. The example here shows three infobars.
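To make the trigger idea concrete, here is a minimal sketch in Python of how a URL pattern might select which applications enhance a given search result, and in which of the two presentation styles. It is not the SearchMonkey API: the `SearchApp` class, the glob-style pattern syntax, the application names and the example URLs are all assumptions made for illustration.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase


@dataclass
class SearchApp:
    """Hypothetical stand-in for a configured search-enhancement application."""
    name: str
    url_pattern: str    # glob-style pattern (an assumption of this sketch)
    presentation: str   # "enhanced_result" or "infobar"


def apps_for_result(url, apps):
    """Return the applications whose URL pattern matches a search-result URL."""
    return [app for app in apps if fnmatchcase(url, app.url_pattern)]


# Illustrative configuration; the domains are placeholders.
apps = [
    SearchApp("Product details", "*.example-shop.com/products/*", "enhanced_result"),
    SearchApp("Library holdings", "*.example-library.org/*", "infobar"),
]

matches = apps_for_result("www.example-shop.com/products/123", apps)
for app in matches:
    print(f"{app.name}: shown as {app.presentation}")
```

Only the first application fires for the shop URL, so the result would be redrawn as an Enhanced Result and no Infobar would be attached.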

Yahoo! have even gone so far as to explain the psychology of the expected user interaction with each of these devices, and their comments are worth repeating here:

Because Infobars and Enhanced Results behave so differently, users deal with them in fundamentally different ways.

When a user views an Enhanced Result, there is a critical fraction of a second where the user is subconsciously trying to determine whether the result has any relevance. Hence, Enhanced Results use a standard template designed to make it clear that the Enhanced Result is in fact a search result, not an advertisement.

By contrast, when a user views an Infobar, this is always a conscious act. The user has already decided that the result might be relevant, and they are looking for more information. For this reason, Infobars lift some of the restrictions described for Enhanced Result applications.

It seems to me that Yahoo! SearchMonkey is streets ahead of the comparable Google offering. While Google Squared is essentially self-serving (enhanced search results = more users = greater advertising exposure), Yahoo! SearchMonkey opens its technology to the developer community with no strings attached – yet. Of course, it’s entirely possible that once SearchMonkey has caught on, a revenue model will emerge and become a condition of use. We’ll have to wait and see.

That issue aside, we now come to the really interesting bit (for me, anyway): Yahoo! SearchMonkey seems to have gone one step further than Google Squared in another sense too. The Google product, to its credit, acknowledges the usefulness of the RDF triple as a source of (inferred) related information. But because its semantic reasoning is still governed largely by ad hoc associations between keywords, it has not yet taken that next step of embracing the SemWeb concept of a defining ontology.

In contrast, Yahoo! SearchMonkey has taken that bold step. In particular, it has adopted an ontology dubbed GoodRelations, which describes the often subtle relationships which can occur between the web resources online vendors maintain, their product or service domain, the precise products or services they are offering online, and the associated prices and terms & conditions.

GoodRelations, Monkey or no Monkey

GoodRelations is a lightweight ontology designed to be used for enriching the information associated with goods and services on the Web. The GoodRelations ontology complements products and services ontologies like eClassOWL, by providing a vocabulary for expressing things such as:

- Web site X is offering cellphones of a certain make and model at a certain price

- Company Y offers maintenance for pianos that weigh less than 150 kg

- Company Z, a car rental company, leases out cars of a certain make and model from a particular set of branches across (this or that) country
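The first of these statements can be sketched as a handful of subject-predicate-object triples. The Python below is only an illustration under stated assumptions: the `http://example.org/shop#` namespace, the resource names and the price of 99 EUR are invented, and the GoodRelations term names (`offers`, `includes`, `hasPriceSpecification`, `hasCurrency`, `hasCurrencyValue`) are given as I understand the vocabulary and should be checked against the published specification.

```python
GR = "http://purl.org/goodrelations/v1#"
EX = "http://example.org/shop#"   # hypothetical namespace for this sketch
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"

# "Web site X is offering cellphones of a certain make and model at a certain price"
triples = [
    (EX + "siteX", RDF_TYPE, GR + "BusinessEntity"),
    (EX + "siteX", GR + "offers", EX + "offer1"),
    (EX + "offer1", RDF_TYPE, GR + "Offering"),
    (EX + "offer1", GR + "includes", EX + "phoneModelA"),
    (EX + "offer1", GR + "hasPriceSpecification", EX + "price1"),
    (EX + "price1", GR + "hasCurrency", "EUR"),        # illustrative value
    (EX + "price1", GR + "hasCurrencyValue", 99.0),    # illustrative value
]


def objects(subject, predicate):
    """All objects of triples with the given subject and predicate."""
    return [o for s, p, o in triples if s == subject and p == predicate]


def offers_of(entity):
    """List (offer, price, currency) for everything the entity offers."""
    found = []
    for offer in objects(entity, GR + "offers"):
        for price in objects(offer, GR + "hasPriceSpecification"):
            value = objects(price, GR + "hasCurrencyValue")[0]
            currency = objects(price, GR + "hasCurrency")[0]
            found.append((offer, value, currency))
    return found


print(offers_of(EX + "siteX"))
```

Because vendor, offer, product and price are separate nodes linked by named relationships, a search engine can follow the links rather than guess from co-occurring keywords.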

GoodRelations has been under development since 2003, by a team led by Prof. Dr. Martin Hepp at the Bundeswehr University München, Germany, supported by various other institutions such as the Austrian BMVIT/FFG, a Young Researcher’s Grant (Nachwuchsförderung 2005-2006) from the Leopold-Franzens-Universität Innsbruck, and by the European Commission under the project SUPER (FP6-026850). It offers comprehensive support for every aspect of e-commerce, details of which are available on the GoodRelations web site.

Officially released on July 28, 2008, the GoodRelations ontology is available under the Creative Commons Attribution 3.0 licence. Under this licence, you are free to copy, distribute and transmit the work; to remix/adapt the work (e.g. to import the ontology and create specializations of its elements), as long as you attribute the work, e.g. by stating “This work is based on the GoodRelations ontology, developed by Martin Hepp” with a link back to http://purl.org/goodrelations/. The GoodRelations ontology has a full range of features, including support for all ISO 4217 currencies, for international standards such as ISO 3166, UN/CEFACT, eCl@ss, and UNSPSC, and for even the oddest product bundles. For instance, it can easily handle an offer of 2 kg butter + 2 cellphones for €99.
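As a rough illustration of what such a bundle has to capture, the butter-and-cellphones offer can be modelled as a list of (product, amount, unit) items under a single price. This is a plain Python sketch, not GoodRelations itself: the `BundleItem` and `BundleOffer` classes are hypothetical, and it assumes the UN/CEFACT common codes `KGM` (kilogram) and `C62` (one piece) for units and the ISO 4217 code `EUR` for the currency.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class BundleItem:
    """One component of a bundle: how much of which product."""
    product: str
    amount: Decimal
    unit_code: str      # UN/CEFACT common code, e.g. KGM or C62


@dataclass(frozen=True)
class BundleOffer:
    """Several items sold together for one price."""
    items: tuple
    price: Decimal
    currency: str       # ISO 4217 code

    def describe(self) -> str:
        parts = [f"{i.amount} {i.unit_code} {i.product}" for i in self.items]
        return f"{' + '.join(parts)} for {self.price} {self.currency}"


offer = BundleOffer(
    items=(
        BundleItem("butter", Decimal("2"), "KGM"),
        BundleItem("cellphone", Decimal("2"), "C62"),
    ),
    price=Decimal("99"),
    currency="EUR",
)
print(offer.describe())
```

Keeping the unit on each item, rather than on the offer, is what lets a single price cover goods measured in quite different ways.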

Yahoo!’s adoption of the GoodRelations ontology indicates to me a somewhat ‘purer’ commitment to SemWeb standards than the apparently revenue-besotted Google developments, and also rather validates what Herr Hepp and his team have taken pains to develop. And the release by GoodRelations in April 2009 of the GoodRelations Annotator is the icing on the cake. This is an online service where anyone can create a machine-readable description of their business and their range of products using the GoodRelations vocabulary for e-commerce. When the SemWeb finally goes global, such metadata will be worth its weight – or maybe bit-count – in gold.

Juice up Your Web Site

So, that’s the USA and Germany spoken for. What about the UK? Well, as it happens, I can report that UK-based company Talis, which specializes in extending the range of the public library OPAC – Online Public Access Catalogue – has unleashed a technology evangelist into the wild, and he’s come up with something rather neat.

Juice in action

Richard Wallis thinks that Internet-savvy library users increasingly want enriched results from the OPAC – links to Amazon, to Google Books, WorldCat, Open Library, LibraryThing, whatever. Consequently, he has developed a couple of JavaScript libraries which can easily be configured to fetch and display related information from selected sources to enhance any search. It goes by the name of Juice – Javascript User Interface Componentised Extension framework. What’s more, Juice is not confined to OPACs; it can be embedded in any web page with just a few lines of code.

Wallis’ innovation doesn’t pretend to conform to SemWeb standards, but instead utilizes common web technologies (JavaScript, Ajax) to aggregate data from a variety of sources into a ‘hole in the page’ which you make for that purpose. With just a few tweaks to the standard, downloadable code (available as standard extensions), you can include links to information from such diverse sources as those library-oriented sites mentioned above, and also Copac, Waterstones, del.icio.us, Google Maps and Twitter. Anyone with the necessary skills can develop further extensions, as they wish.
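The ‘hole in the page’ idea can be sketched in a few lines. Juice itself is JavaScript running in the browser; the Python below is not Juice’s API, only an illustration of the same pattern: take an identifier found on the page, build links to external sources from templates, and drop them into a placeholder. The function names, the `<!-- extras -->` marker, the sample ISBN and the two URL templates are assumptions and should be verified before use.

```python
from html import escape

# Hypothetical link templates keyed by display label; verify before relying on them.
LINK_TEMPLATES = {
    "Open Library": "https://openlibrary.org/isbn/{isbn}",
    "WorldCat": "https://www.worldcat.org/isbn/{isbn}",
}


def build_link_panel(isbn, templates=LINK_TEMPLATES):
    """Build an HTML list of links to external sources for one ISBN."""
    items = []
    for label, template in templates.items():
        url = template.format(isbn=isbn)
        items.append(f'<li><a href="{escape(url)}">{escape(label)}</a></li>')
    return '<ul class="extras">' + "".join(items) + "</ul>"


def fill_hole(page_html, isbn, marker="<!-- extras -->"):
    """Replace the placeholder marker in the page with the link panel."""
    return page_html.replace(marker, build_link_panel(isbn))


page = "<div id='record'>Some catalogue record<!-- extras --></div>"
print(fill_hole(page, "9780000000002"))
```

Adding another source is then just a matter of adding one more template, which is roughly the appeal of the extension approach described above.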

Juice is available under the GNU General Public License v2, and full details and a download of Juice version 0.5 (146KB) are available at the Juice project site on Google Code. There is a useful review of Juice by David Tebbutt in Information World Review, which is where I first heard about it. If you want to know even more, then also catch the very entertaining Juice introductory video, which captures a talk given by Richard Wallis at the Code{4}Lib conference in February 2009.

ISKO UK: Linked Data: A Crystallizing Vision

ISKO UK are proposing to run an all-day event on Linked Data in November 2009. We hope to have speakers who will tell us more about Linked Data as defined by Tim Berners-Lee and the SemWeb community, some who will describe Linked Data initiatives currently under way (such as at the BBC), and also some who will describe similar and related developments which don’t necessarily fit within the SemWeb definition – like Juice and GoodRelations. If you’d support us by attending such an event, then drop a comment on this post saying ‘Yes Please’ or something equally encouraging.

‘Data’ is not synonymous with ‘meaning’. Although in all the recent fuss about Sir Tim Berners-Lee’s attempt to overturn the UK Civil Service’s ingrained culture of secrecy, this might easily be overlooked.

Posted by bbater

I am increasingly impressed by what open source (OS) software communities are offering. Not just in terms of the sheer range of applications, but by their quality too. That’s an observation vindicated by the recent

One of the enduring attractions of our profession (that’s information management, knowledge management, records management, information science, knowledge organization – whatever you want to call it) for me, is that it impacts upon everything. Yes, literally, everything. When we build a taxonomy, relate descriptors in a thesaurus or assign keywords, we are mediators among a multiplicity of points-of-view, creeds and catechisms. But while that heterogeneity, that multicultural dimension, is often the root of our sense of fulfilment, contention can lie just below the surface.

With not even a soupçon of the quagmire I was entering, I recently looked up the definition of ‘document’. In case you didn’t know, the glib dictionary definitions hide a debate that has, well, not exactly raged, but rather limped on for nearly twenty years now. I don’t know, but I guess that it was the arrival of the digital ‘document’ with the first word processors in the early 1980s which sparked it in the first place.

XTM Topic Maps (ISO 13250) is a Semantic Web-related technology using XML to describe knowledge structures. A number of start-up companies in Europe and the US in the early 2000s initiated programmes to develop applications supporting the creation and navigation of Topic Maps. Of them, only Ontopia in Norway seems to have survived in any commercial sense, with its

Most of us, I am sure, are familiar with Tag Clouds. But do they offer any real value?